吴恩达机器学习笔记第七周 SVM支持向量机

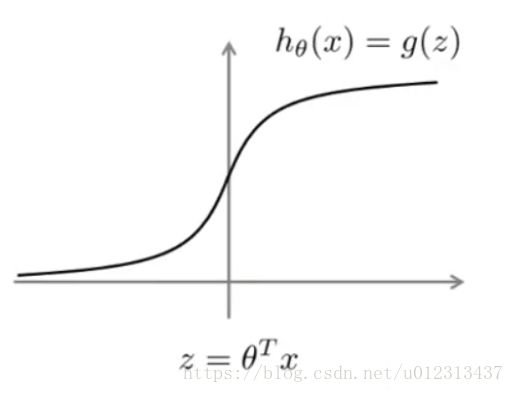

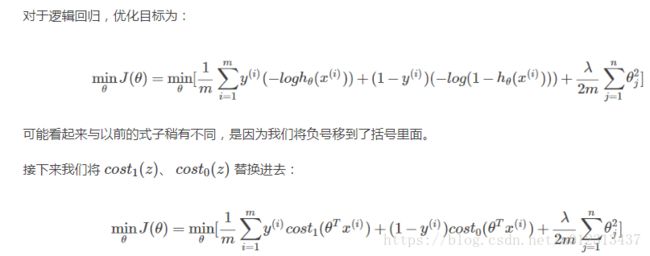

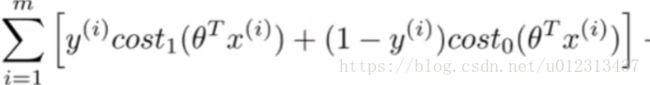

由逻辑回归引入SVM:

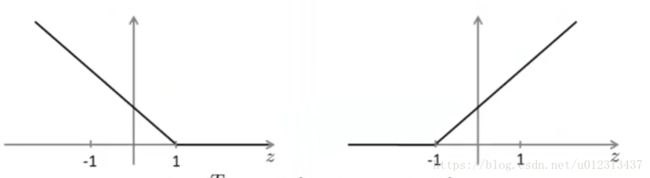

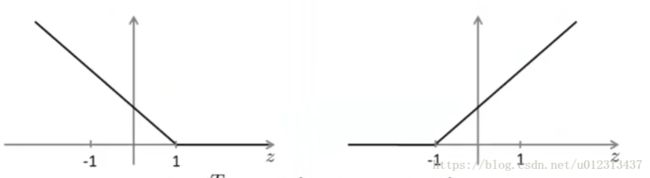

当y=1时,我们希望假设函数能趋向于1,即z>=0,当预测y=0时,我们希望假设函数能趋向于0,即z<0。cost1 cost2 如下图:

大间距分类器:

由图中的cost1 cost2 我们在使代价函数最小的时候会有如下的期望:

对于正样本y=1,我们希望cost1(z)=0,即z>=1.

对于负样本y=0,我们希望cost2(z)=0,即z<-1.

当C非常大时,我们希望这部分为0,那么SVM的优化目标就变成了![]()

SVM的数学原理:

这是我们之前提到的SVM的优化目标: ![]()

![]()

![]()

![]()

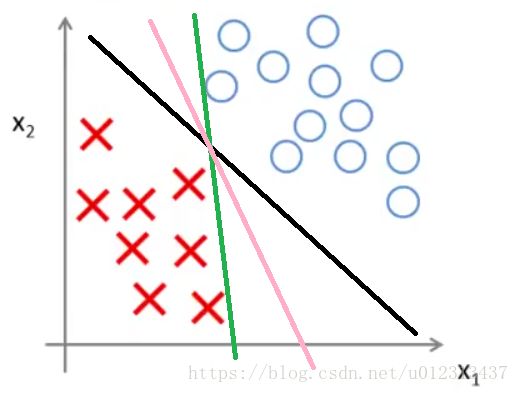

我们来看对于下面的数据集,SVM会选择哪种决策边界(假设 θ0=0)。

![]()

![]()

![]()

反过来看,通过在优化目标里让 ∥θ∥ 不断变小,SVM就可以选择出上图所示的大间距决策边界。这也是SVM可以产生大间距分类器的原因。

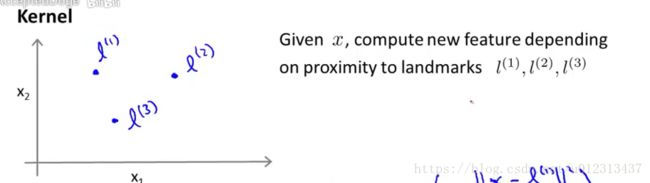

Kernels(核函数):

当我们进行复杂的非线性分类的的时候,我们的决策边界通常会使用多次多项式。但是我们并不知道这些高次项是否有用。

假设有二维向量x1、x2,l1,l2,l3表示三个特征变量.

定义f1=similarty(x,l1)=exp(-||x-l1||^2/2*sigma^2)

||x-l1||表示x与l1的欧氏距离。 如果欧式距离接近于0的时候,f1接近于1.当欧式距离为大数的时候f1接近于0.

这种相似度,用数学术语来说,就是核函数(Kernels)。核函数有不同的种类,其中常用的就是我们上述这种高斯核函数。

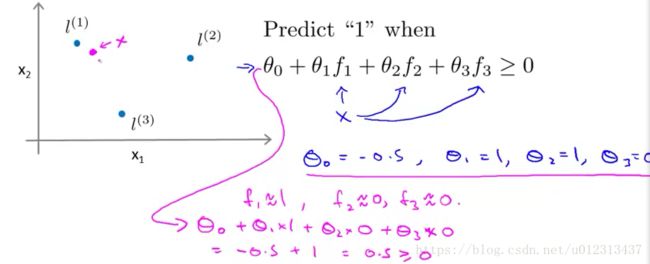

我们得到特征变量之后,就要来得到预测函数了。假设通过x得到f1,f2,f3,那么当我们的预测值为1的时候:

theta0+theta1*f1+theta2*f2+theta3*f3>=0

在应用中我们可能需要标记点l1,l2,l3或者更多,我们如何来选择这些标记点呢。

我们可以直接选择样本中的点作为标记点:choose l(1)=x(1),l(2)=x(2),.....,l(m)=x(m)

f1=similarity(x,l(1))

f2=similarity(x,l(2))

......

还可以得到一个,额外的特疼f0=1。

现在有m+1行特征f,y=1时 为theta'f>=0.我们可以得到代价函数如上图。

对于参数C的选择C与逻辑回归中的1/lambda功能相似:当C过大时,会产生高方差,低偏差,过拟合,当C过小时会产生高偏差,低方差,欠拟合。

参数sigma^2的过大过小也会对方差偏差产生影响:

sigma^2过大的时候,会导致近似范围过大,特征f(i)变化缓慢,产生高偏差,低方差,欠拟合。

sigma^2过小时,近似范围太小,特征f(i)变化不平滑,产生高方差,低偏差,过拟合。

Using An SVM(使用SVM):

使用SVM软件包来解决参数theta的问题。我们需要指定的是参数C的选择和核函数的选择

在SVM中对于多分类问题,我们可以使用多分类的SVM软件包,也可以使用一对多分类法训练K个分类器,把y=i 的类与其他类分开选择theta(i)'*x最大的作为识别的结果。

对于SVM与逻辑回归的选择上主要观察特征数n与样本数m的大小。

当特征数很大且远大于m的时候,使用逻辑回归或者不用核函数的SVM效果会更好,有足够的数据拟合出非线性分类器。

当特征数n较小,训练样本m中等大小时使用SVM核函数。

当特征数n很小但是样本数m很大时可以使用无核函数的SVM或者逻辑回归。

编程作业:

gaussianKernel:写出高斯核函数的表达式

function sim = gaussianKernel(x1, x2, sigma)

%RBFKERNEL returns a radial basis function kernel between x1 and x2

% sim = gaussianKernel(x1, x2) returns a gaussian kernel between x1 and x2

% and returns the value in sim

% Ensure that x1 and x2 are column vectors

x1 = x1(:); x2 = x2(:);

% You need to return the following variables correctly.

sim = 0;

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return the similarity between x1

% and x2 computed using a Gaussian kernel with bandwidth

% sigma

%

%

sim=exp(-(x1-x2)'*(x1-x2)/(2*sigma^2));

% =============================================================

enddataset3Params:选择合适的常数C与sigma

function [C, sigma] = dataset3Params(X, y, Xval, yval)

%DATASET3PARAMS returns your choice of C and sigma for Part 3 of the exercise

%where you select the optimal (C, sigma) learning parameters to use for SVM

%with RBF kernel

% [C, sigma] = DATASET3PARAMS(X, y, Xval, yval) returns your choice of C and

% sigma. You should complete this function to return the optimal C and

% sigma based on a cross-validation set.

%

% You need to return the following variables correctly.

C = 1;

sigma = 0.3;

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return the optimal C and sigma

% learning parameters found using the cross validation set.

% You can use svmPredict to predict the labels on the cross

% validation set. For example,

% predictions = svmPredict(model, Xval);

% will return the predictions on the cross validation set.

%

% Note: You can compute the prediction error using

% mean(double(predictions ~= yval))

%

columns=[0.01,0.03,0.1,0.3,1,3,10,30];

maxError = inf;

for i = 1:length(columns)

for j=1 : length(columns)

model = svmTrain(X, y, columns(i), @(x1, x2) gaussianKernel(x1, x2, columns(j)));

predictions = svmPredict(model,Xval);

nowError = mean(double(predictions ~=yval));

if(maxError>nowError)

maxError=nowError;

C=columns(i);

sigma=columns(j);

end

end

end

% =========================================================================

end

processEmail:邮件处理

function word_indices = processEmail(email_contents)

%PROCESSEMAIL preprocesses a the body of an email and

%returns a list of word_indices

% word_indices = PROCESSEMAIL(email_contents) preprocesses

% the body of an email and returns a list of indices of the

% words contained in the email.

%

% Load Vocabulary

vocabList = getVocabList();

% Init return value

word_indices = [];

% ========================== Preprocess Email ===========================

% Find the Headers ( \n\n and remove )

% Uncomment the following lines if you are working with raw emails with the

% full headers

% hdrstart = strfind(email_contents, ([char(10) char(10)]));

% email_contents = email_contents(hdrstart(1):end);

% Lower case

email_contents = lower(email_contents);

% Strip all HTML

% Looks for any expression that starts with < and ends with > and replace

% and does not have any < or > in the tag it with a space

email_contents = regexprep(email_contents, '<[^<>]+>', ' ');

% Handle Numbers

% Look for one or more characters between 0-9

email_contents = regexprep(email_contents, '[0-9]+', 'number');

% Handle URLS

% Look for strings starting with http:// or https://

email_contents = regexprep(email_contents, ...

'(http|https)://[^\s]*', 'httpaddr');

% Handle Email Addresses

% Look for strings with @ in the middle

email_contents = regexprep(email_contents, '[^\s]+@[^\s]+', 'emailaddr');

% Handle $ sign

email_contents = regexprep(email_contents, '[$]+', 'dollar');

% ========================== Tokenize Email ===========================

% Output the email to screen as well

fprintf('\n==== Processed Email ====\n\n');

% Process file

l = 0;

while ~isempty(email_contents)

% Tokenize and also get rid of any punctuation

[str, email_contents] = ...

strtok(email_contents, ...

[' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)]);

% Remove any non alphanumeric characters

str = regexprep(str, '[^a-zA-Z0-9]', '');

% Stem the word

% (the porterStemmer sometimes has issues, so we use a try catch block)

try str = porterStemmer(strtrim(str));

catch str = ''; continue;

end;

% Skip the word if it is too short

if length(str) < 1

continue;

end

% Look up the word in the dictionary and add to word_indices if

% found

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to add the index of str to

% word_indices if it is in the vocabulary. At this point

% of the code, you have a stemmed word from the email in

% the variable str. You should look up str in the

% vocabulary list (vocabList). If a match exists, you

% should add the index of the word to the word_indices

% vector. Concretely, if str = 'action', then you should

% look up the vocabulary list to find where in vocabList

% 'action' appears. For example, if vocabList{18} =

% 'action', then, you should add 18 to the word_indices

% vector (e.g., word_indices = [word_indices ; 18]; ).

%

% Note: vocabList{idx} returns a the word with index idx in the

% vocabulary list.

%

% Note: You can use strcmp(str1, str2) to compare two strings (str1 and

% str2). It will return 1 only if the two strings are equivalent.

%

for i = 1:length(vocabList)

if(strcmp(str,vocabList(i))==1)

word_indices=[word_indices,i];

end

end

% =============================================================

% Print to screen, ensuring that the output lines are not too long

if (l + length(str) + 1) > 78

fprintf('\n');

l = 0;

end

fprintf('%s ', str);

l = l + length(str) + 1;

end

% Print footer

fprintf('\n\n=========================\n');

end

emailFeatures:

function x = emailFeatures(word_indices)

%EMAILFEATURES takes in a word_indices vector and produces a feature vector

%from the word indices

% x = EMAILFEATURES(word_indices) takes in a word_indices vector and

% produces a feature vector from the word indices.

% Total number of words in the dictionary

n = 1899;

% You need to return the following variables correctly.

x = zeros(n, 1);

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return a feature vector for the

% given email (word_indices). To help make it easier to

% process the emails, we have have already pre-processed each

% email and converted each word in the email into an index in

% a fixed dictionary (of 1899 words). The variable

% word_indices contains the list of indices of the words

% which occur in one email.

%

% Concretely, if an email has the text:

%

% The quick brown fox jumped over the lazy dog.

%

% Then, the word_indices vector for this text might look

% like:

%

% 60 100 33 44 10 53 60 58 5

%

% where, we have mapped each word onto a number, for example:

%

% the -- 60

% quick -- 100

% ...

%

% (note: the above numbers are just an example and are not the

% actual mappings).

%

% Your task is take one such word_indices vector and construct

% a binary feature vector that indicates whether a particular

% word occurs in the email. That is, x(i) = 1 when word i

% is present in the email. Concretely, if the word 'the' (say,

% index 60) appears in the email, then x(60) = 1. The feature

% vector should look like:

%

% x = [ 0 0 0 0 1 0 0 0 ... 0 0 0 0 1 ... 0 0 0 1 0 ..];

%

%

for i=1:length(word_indices)

x(word_indices(i))=1;

end

% =========================================================================

end