Pitfalls encountered when compiling FFmpeg with the Android NDK on Mac OS

Step 1: Download the NDK

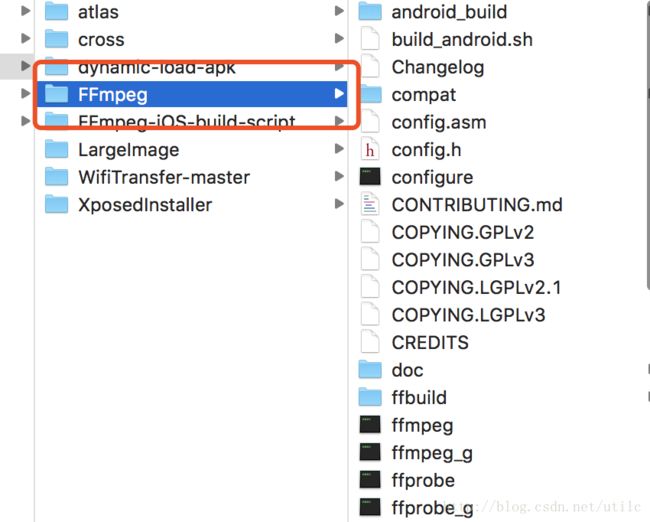

Step 2: Download the FFmpeg source code: git clone https://github.com/FFmpeg/FFmpeg.git

Step 3: Write a shell script (build_android.sh) that compiles FFmpeg into .so libraries:

#!/bin/bash

echo "$ANDROID_NDK"

NDK=${ANDROID_NDK}

#NDK=/Users/ianchang/Public/Android/android-ndk-r14b

NDK_VERSION=android-19

echo "NDK=$NDK NDK_VERSION=$NDK_VERSION"

# darwin linux

function build_one {

./configure \
--target-os=linux \
--arch=$ARCH \
--prefix=$PREFIX \
--enable-shared \
--disable-static \
--disable-doc \
--disable-ffmpeg \
--disable-ffplay \
--disable-ffprobe \
--disable-ffserver \
--disable-symver \
--enable-cross-compile \
--cross-prefix=$CROSS_COMPILE \
--sysroot=$SYSROOT \
--extra-cflags="-fpic" \
$ADDITIONAL_CONFIGURE_FLAG

make clean

make -j8

make install

}

ARCH=arm

CPU=arm

PREFIX=$(pwd)/android/$CPU

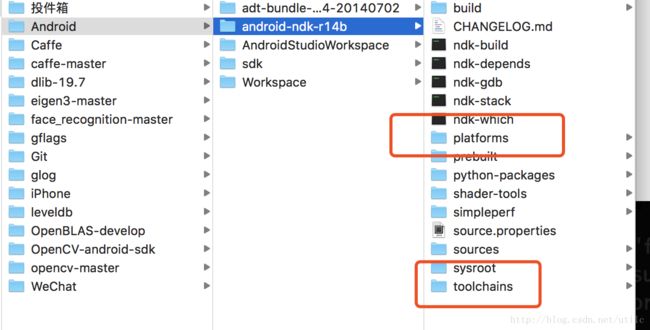

TOOLCHAIN=$NDK/toolchains/arm-linux-androideabi-4.9/prebuilt/darwin-x86_64

CROSS_COMPILE=$TOOLCHAIN/bin/arm-linux-androideabi-

SYSROOT=$NDK/platforms/$NDK_VERSION/arch-$ARCH

echo "PREFIX=${PREFIX}"

echo "TOOLCHAIN=${TOOLCHAIN}"

echo "CROSS_COMPILE=${CROSS_COMPILE}"

echo "SYSROOT=${SYSROOT}"

echo "******************************"

build_one
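Before running configure, it can help to confirm that the paths derived from $NDK actually exist, because a wrong NDK location otherwise only shows up as a confusing configure failure. The following is a minimal sketch, not part of the original script: the function name check_build_paths is hypothetical, and it assumes the compiler is named ${CROSS_COMPILE}gcc and that the sysroot keeps its headers under usr/include, which matches the NDK layout used in the script above.

```shell
#!/bin/bash
# Hypothetical helper (not in the original script): verify that the
# cross compiler and the sysroot headers exist before calling ./configure.
check_build_paths() {
    local cross_compile="$1"   # e.g. $TOOLCHAIN/bin/arm-linux-androideabi-
    local sysroot="$2"         # e.g. $NDK/platforms/$NDK_VERSION/arch-arm

    # Assumption: the C compiler is the cross prefix followed by "gcc".
    if [ ! -x "${cross_compile}gcc" ]; then
        echo "missing compiler: ${cross_compile}gcc" >&2
        return 1
    fi
    # Assumption: the sysroot stores its headers under usr/include.
    if [ ! -d "${sysroot}/usr/include" ]; then
        echo "missing sysroot headers: ${sysroot}/usr/include" >&2
        return 1
    fi
    return 0
}
```

In the build script this could be called as check_build_paths "$CROSS_COMPILE" "$SYSROOT" || exit 1, placed just before the build function is invoked.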

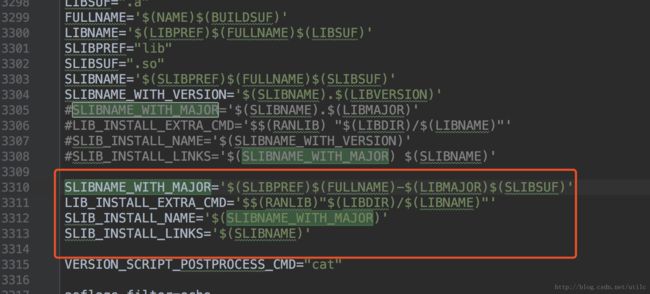

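1. Edit the configure file and replace the values of the following fields (the commented-out lines are the original values, the uncommented lines are the replacements):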

#SLIBNAME_WITH_MAJOR='$(SLIBNAME).$(LIBMAJOR)'

#LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'

#SLIB_INSTALL_NAME='$(SLIBNAME_WITH_VERSION)'

#SLIB_INSTALL_LINKS='$(SLIBNAME_WITH_MAJOR) $(SLIBNAME)'

SLIBNAME_WITH_MAJOR='$(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF)'

LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'

SLIB_INSTALL_NAME='$(SLIBNAME_WITH_MAJOR)'

SLIB_INSTALL_LINKS='$(SLIBNAME)'
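The effect of these replacements is easiest to see by expanding both naming templates for one library. The sketch below is illustrative only: it mimics the make variables with shell variables, and the values for avcodec with major version 57 are taken from the library names used later in this post. The likely motivation, stated here as an assumption, is that Android packaging expects library file names to end in .so, which the default name with a trailing version number does not.

```shell
#!/bin/bash
# Illustrative only: shell stand-ins for the make variables in configure.
SLIBPREF=lib
SLIBSUF=.so
FULLNAME=avcodec
LIBMAJOR=57
SLIBNAME="${SLIBPREF}${FULLNAME}${SLIBSUF}"

# Original template: $(SLIBNAME).$(LIBMAJOR)
default_name="${SLIBNAME}.${LIBMAJOR}"
# Replacement template: $(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF)
android_name="${SLIBPREF}${FULLNAME}-${LIBMAJOR}${SLIBSUF}"

echo "$default_name"   # libavcodec.so.57
echo "$android_name"   # libavcodec-57.so
```

The second form is the one that the CMakeLists.txt in Step 4 refers to (libavcodec-57.so and so on).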

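2. Run the shell script to start the build. It is best not to download the latest FFmpeg version, because cross-compiling the latest version with the NDK fails with various file-not-found errors.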

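Note: change into the FFmpeg directory (cd ffmpeg-3.3.6/) and run ./build_android.sh. If the script cannot be executed, it probably lacks execute permission, which the following command grants: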

chmod 755 ./build_android.sh

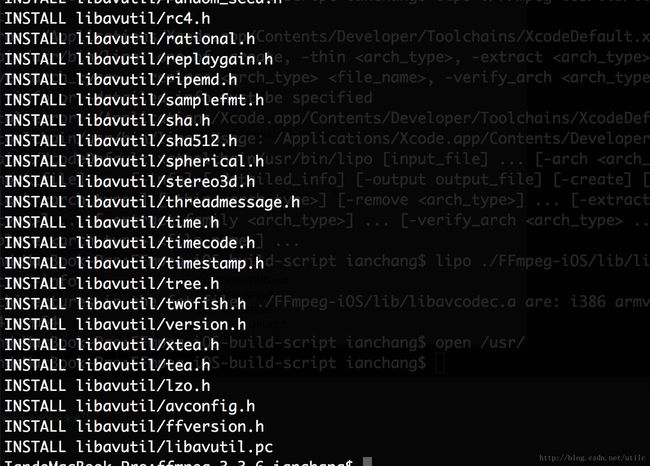

3. The result after the run completes is shown below:

4. Once compilation finishes, the generated .so libraries are shown below:

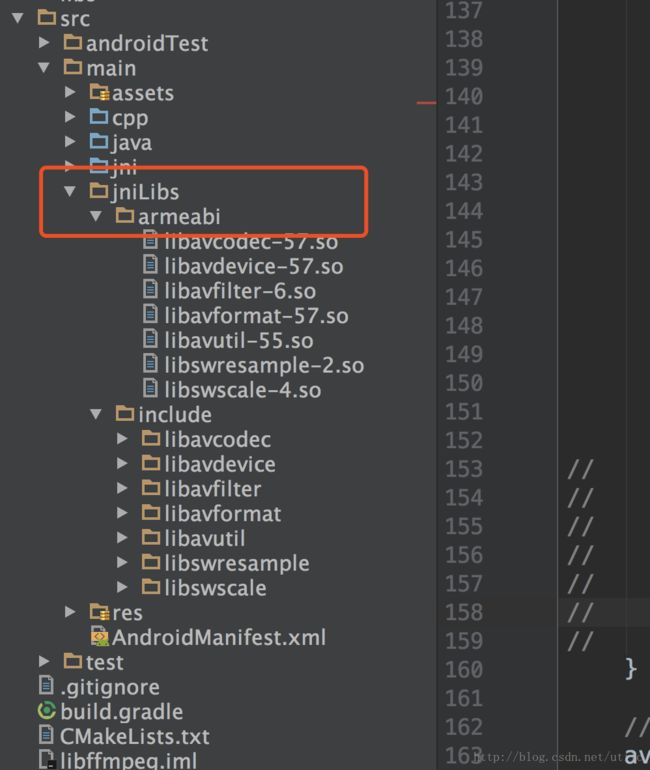

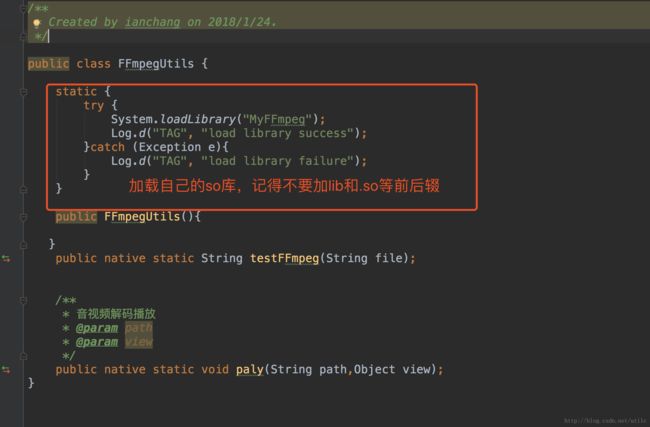

Step 4: Import the libraries into an Android Studio project and run it

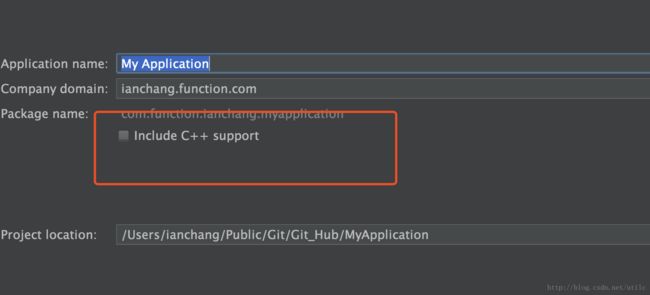

1. Create a project in Android Studio, and remember to tick the option during creation

2. Configure the gradle file as follows:

externalNativeBuild {

cmake {

cppFlags "-std=c++11 -frtti -fexceptions"

arguments "-DANDROID_STL=c++_shared"

}

}

ndk {

// We only built armeabi, so configuring just this ABI is enough

// abiFilters 'x86', 'armeabi', 'armeabi-v7a', 'x86_64', 'arm64-v8a'

abiFilters 'armeabi'

}

sourceSets.main {

jniLibs.srcDirs = ['src/main/jniLibs']

}

3. Edit the CMakeLists.txt file:

# CMake version information

cmake_minimum_required(VERSION 3.4.1)

# Support -std=gnu++11

set(CMAKE_VERBOSE_MAKEFILE on)

message("--I print it:----------->>>>>>>'${CMAKE_CXX_FLAGS}'")

project( libFFmpeg )

message("Checking CMAKE_SYSTEM_NAME = '${CMAKE_SYSTEM_NAME}'")

if (${CMAKE_SYSTEM_NAME} MATCHES "Darwin")

add_definitions(-DOS_OSX)

elseif (${CMAKE_SYSTEM_NAME} MATCHES "Linux")

add_definitions(-DOS_LINUX)

elseif (${CMAKE_SYSTEM_NAME} MATCHES "Windows")

add_definitions(-DOS_WIN)

elseif (${CMAKE_SYSTEM_NAME} MATCHES "Android")

add_definitions(-DOS_ANDROID)

message("Checking CMAKE_ABI_NAME = '${CMAKE_ANDROID_ARCH_ABI}'")

else()

message("OS not detected.")

endif()

### Configure the source and library directories

add_library( MyFFmpeg

SHARED

src/main/cpp/native-lib.cpp )

find_library( log-lib

log )

find_library( android-lib

android )

set(distribution_DIR ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI})

add_library( avutil-55

SHARED

IMPORTED )

set_target_properties( avutil-55

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libavutil-55.so)

add_library( swresample-2

SHARED

IMPORTED )

set_target_properties( swresample-2

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libswresample-2.so)

add_library( avfilter-6

SHARED

IMPORTED )

set_target_properties( avfilter-6

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libavfilter-6.so)

add_library( avformat-57

SHARED

IMPORTED )

set_target_properties( avformat-57

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libavformat-57.so)

add_library( swscale-4

SHARED

IMPORTED )

set_target_properties( swscale-4

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libswscale-4.so)

add_library( avcodec-57

SHARED

IMPORTED )

set_target_properties( avcodec-57

PROPERTIES IMPORTED_LOCATION

${distribution_DIR}/libavcodec-57.so)

set(CMAKE_VERBOSE_MAKEFILE on)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11")

include_directories(src/main/cpp)

include_directories(src/main/jniLibs/include)

target_link_libraries(MyFFmpeg

avcodec-57

avfilter-6

avformat-57

avutil-55

swresample-2

swscale-4

${log-lib}

${android-lib})
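Each IMPORTED_LOCATION above points at a file under src/main/jniLibs/${ANDROID_ABI}, so a missing or misnamed .so is a likely cause of link failures. As a minimal sketch (the function name missing_ffmpeg_libs is hypothetical, and the library list is simply copied from the CMakeLists.txt above), the following prints every expected library that is absent from a given ABI directory:

```shell
#!/bin/bash
# Hypothetical helper: list the FFmpeg libraries referenced by the
# CMakeLists.txt above that are missing from one jniLibs ABI directory.
missing_ffmpeg_libs() {
    local abi_dir="$1"   # e.g. src/main/jniLibs/armeabi
    local lib
    for lib in avutil-55 swresample-2 avfilter-6 avformat-57 swscale-4 avcodec-57; do
        # Print the file name only when the file is not there.
        [ -f "${abi_dir}/lib${lib}.so" ] || echo "lib${lib}.so"
    done
}
```

Run from the module directory, missing_ffmpeg_libs src/main/jniLibs/armeabi prints nothing when all six libraries from the build output have been copied into place.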

6. Write the cpp file:

#include "FFmpegHeader.h"

using namespace std;

/*

* Class: com_function_ianchang_libffmpeg_FFmpegUtils

* Method: testFFmpeg

* Signature: (Ljava/lang/String;)Ljava/lang/String;

*/

extern "C"

JNIEXPORT jstring JNICALL

Java_com_function_ianchang_libffmpeg_FFmpegUtils_testFFmpeg

(JNIEnv *env, jclass, jstring){

std::string hello = "Hello from C++";

return env->NewStringUTF(hello.c_str());

}

extern "C"

JNIEXPORT void JNICALL

METHOD_NAME(paly)(JNIEnv *env, jclass, jstring path, jobject view){

const char* file = env->GetStringUTFChars(path, 0);

if(file == NULL){

LOGD("The file is a null object.");

return;

}

LOGD("file=%s", file);

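// Register all formats and codecs

av_register_all();

// avcodec_register_all();

int ret;

// Container format context

AVFormatContext *fmt_ctx = avformat_alloc_context();

// Open the input stream and read the header. The codecs are not opened at this point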

if(avformat_open_input(&fmt_ctx, file, NULL, NULL) < 0){

return;

}

// Retrieve the stream information

if(avformat_find_stream_info(fmt_ctx, NULL) < 0){

return;

}

// Find the index of the video stream

int video_stream_index = -1;

for (int i = 0; i < fmt_ctx->nb_streams; i++) {

if(fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO){

video_stream_index = i;

LOGE("Found video stream index video_stream_index=%d",video_stream_index);

break;

}

}

if (video_stream_index == -1){

LOGE("Video stream index not found");

// Without a video stream there is nothing to decode, so stop here

return;

}

ANativeWindow* nativeWindow = ANativeWindow_fromSurface(env,view);

if (nativeWindow == NULL) {

LOGE("ANativeWindow_fromSurface error");

return;

}

// Buffer used when drawing

ANativeWindow_Buffer outBuffer;

// Get the decoder for the video stream

AVCodecContext *codec_ctx = avcodec_alloc_context3(NULL);

avcodec_parameters_to_context(codec_ctx, fmt_ctx->streams[video_stream_index]->codecpar);

AVCodec *avCodec = avcodec_find_decoder(codec_ctx->codec_id);

// Open the decoder

if((ret = avcodec_open2(codec_ctx,avCodec,NULL)) < 0){

ret = -3;

return;

}

// Read compressed data from the file in a loop, one frame at a time

// Start reading the video

int y_size = codec_ctx->width * codec_ctx->height;

AVPacket *pkt = (AVPacket *)malloc(sizeof(AVPacket));// Allocate a packet

av_new_packet(pkt,y_size);// Allocate the packet's data

AVFrame *yuvFrame = av_frame_alloc();

AVFrame *rgbFrame = av_frame_alloc();

// Color converter

SwsContext *m_swsCtx = sws_getContext(codec_ctx->width, codec_ctx->height, codec_ctx->pix_fmt, codec_ctx->width,

codec_ctx->height, AV_PIX_FMT_RGBA, SWS_BICUBIC, NULL, NULL, NULL);

//int numBytes = av_image_get_buffer_size(AV_PIX_FMT_RGBA, codec_ctx->width, codec_ctx->height, 1);

//uint8_t *out_buffer = (uint8_t *) av_malloc(numBytes * sizeof(uint8_t));

LOGE("Start decoding");

int index = 0;

while (1){

if(av_read_frame(fmt_ctx,pkt) < 0){

// Treat this as the end of the video

break;

}

if(pkt->stream_index == video_stream_index) {

// Decode the video

ret = avcodec_send_packet(codec_ctx, pkt);

if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF) {

LOGE("avcodec_send_packet ret=%d", ret);

av_packet_unref(pkt);

continue;

}

// Receive the decoded output data from the decoder

ret = avcodec_receive_frame(codec_ctx, yuvFrame);

if (ret < 0 && ret != AVERROR_EOF) {

LOGE("avcodec_receive_frame ret=%d", ret);

av_packet_unref(pkt);

continue;

}

//avcodec_decode_video2(codec_ctx,yuvFrame,&got_pictue,&pkt);

// Set the buffer properties

ANativeWindow_setBuffersGeometry(nativeWindow, codec_ctx->width, codec_ctx->height,

WINDOW_FORMAT_RGBA_8888);

ret = ANativeWindow_lock(nativeWindow, &outBuffer, NULL);

if (ret != 0) {

LOGE("ANativeWindow_lock error");

return;

}

// Point rgbFrame at the locked window buffer first, so that sws_scale has a valid destination

av_image_fill_arrays(rgbFrame->data, rgbFrame->linesize,

(const uint8_t *) outBuffer.bits, AV_PIX_FMT_RGBA,

codec_ctx->width, codec_ctx->height, 1);

// Convert the decoded frame to RGBA directly into the window buffer

sws_scale(m_swsCtx, (const uint8_t *const *) yuvFrame->data, yuvFrame->linesize, 0,

codec_ctx->height, rgbFrame->data, rgbFrame->linesize);

//fill_ANativeWindow(&outBuffer,outBuffer.bits,rgbFrame);

// Post the buffer contents to the SurfaceView

ret = ANativeWindow_unlockAndPost(nativeWindow);

if (ret != 0) {

LOGE("ANativeWindow_unlockAndPost error");

return;

}

LOGE("Posted to the buffer successfully %d times",++index);

}

av_packet_unref(pkt);

usleep(1000*16);

// /*

// //UYVY

// fwrite(pFrameYUV->data[0],(pCodecCtx->width)*(pCodecCtx->height),2,output);

// //YUV420P

// fwrite(pFrameYUV->data[0],(pCodecCtx->width)*(pCodecCtx->height),1,output);

// fwrite(pFrameYUV->data[1],(pCodecCtx->width)*(pCodecCtx->height)/4,1,output);

// fwrite(pFrameYUV->data[2],(pCodecCtx->width)*(pCodecCtx->height)/4,1,output);

}

//av_free(out_buffer);

av_frame_free(&yuvFrame);

av_frame_free(&rgbFrame);

avcodec_close(codec_ctx);

sws_freeContext(m_swsCtx);

avformat_close_input(&fmt_ctx);

ANativeWindow_release(nativeWindow);

env->ReleaseStringUTFChars(path, file);

LOGI("Decoding finished");

}

7. Call it as follows:

public void playVideo(View view){

File resFile = new File(resVideo);

Log.d("TAG", "playVideo->resFile = "+resFile.getPath());

Log.d("TAG", "playVideo->resFile = "+resFile.exists());

ffmpeg.paly(resVideo, surfaceView.getHolder().getSurface());

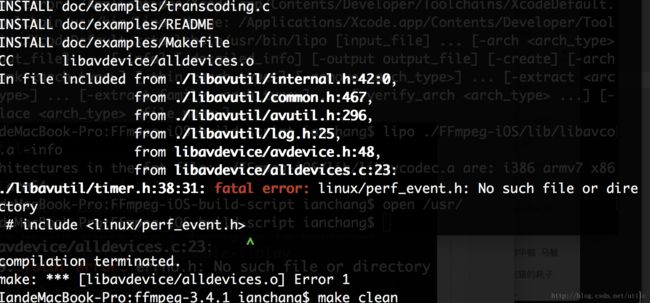

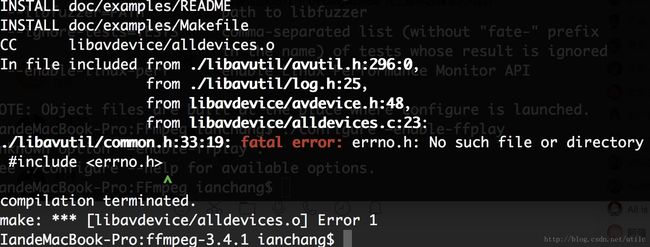

}Error编译异常总结和编译血泪史:折腾了很久,还是版本太高,导致的问题

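Error: problems encountered during compilation

After a lot of trial and error, the cause turned out to be an FFmpeg version that was too new. I recommend downloading version 3.3.6 or earlier; otherwise the build fails with all kinds of compile errors.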

./libavutil/timer.h:38:31: fatal error: linux/perf_event.h: No such file or directory

INSTALL doc/examples/transcoding.c

INSTALL doc/examples/README

INSTALL doc/examples/Makefile

CC libavdevice/alldevices.o

In file included from ./libavutil/internal.h:42:0,

from ./libavutil/common.h:467,

from ./libavutil/avutil.h:296,

from ./libavutil/log.h:25,

from libavdevice/avdevice.h:48,

from libavdevice/alldevices.c:23:

./libavutil/timer.h:38:31: fatal error: linux/perf_event.h: No such file or directory

# include <linux/perf_event.h>

^

compilation terminated.

make: *** [libavdevice/alldevices.o] Error 1
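The log above says the compiler could not find linux/perf_event.h among the headers of the sysroot passed to configure. A quick way to confirm this before a long build is to look for the file directly. The following is a minimal sketch under stated assumptions: the function name has_perf_event_header is hypothetical, and it assumes the sysroot keeps kernel headers under usr/include/linux, as in the NDK layout used by the build script.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): succeed only if the
# given sysroot contains the header that the failing #include asks for.
has_perf_event_header() {
    local sysroot="$1"   # e.g. $NDK/platforms/$NDK_VERSION/arch-arm
    [ -f "${sysroot}/usr/include/linux/perf_event.h" ]
}

# Example call, commented out so the snippet has no side effects:
# has_perf_event_header "$SYSROOT" || echo "linux/perf_event.h is not in $SYSROOT"
```

If the check fails for the chosen sysroot, this FFmpeg version expects a header that the selected platform does not provide, which is consistent with the advice above to use an older FFmpeg release.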