Building a Deep Feedforward Neural Network in Matlab and Applying Various Optimization Algorithms (SGD, mSGD, AdaGrad, RMSProp, Adam)

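- This article shows how to build a deep feedforward neural network from scratch in Matlab, use it to recognize the handwritten digits of the MNIST dataset, and compare the training behavior of several optimization algorithms: SGD, mSGD (SGD with momentum), AdaGrad, RMSProp and Adam. The final network reaches a recognition accuracy of about 98%. Readers can adjust the network structure and parameters as they see fit.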

- The Matlab code and the MNIST dataset used in this article can be downloaded here:

Link: https://pan.baidu.com/s/1Me0T2xZwpn3xnN7b7XPrbg Password: t23u

First, the activation functions. Two are used, Sigmoid and ReLU: the hidden layers use ReLU and the output layer uses Sigmoid.

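```matlab
function [y] = sigmoid(x)
%SIGMOID Element-wise sigmoid activation: y = 1 ./ (1 + exp(-x))
y = 1 ./ (1 + exp(-x));
end

function [y] = relu(x)
%RELU Element-wise ReLU activation: y = x where x > 0, else 0
p = (x > 0);   % mask of the positive entries
y = x .* p;
end
```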
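The backpropagation function later in this article differentiates the activations through the layer outputs: for Sigmoid it multiplies by `Y{i}.*(1 - Y{i})`, and for ReLU by the mask `Y{i} > 0`. As a minimal sketch (assuming only base MATLAB; `sigmoid_fn` and `relu_fn` are hypothetical anonymous-function stand-ins so the snippet runs as a standalone script), these derivatives can be compared with central finite differences:

```matlab
% Finite-difference check of the activation derivatives used in backprop
sigmoid_fn = @(x) 1 ./ (1 + exp(-x));   % stand-in for sigmoid.m
relu_fn    = @(x) x .* (x > 0);         % stand-in for relu.m

z = [-2.0, -0.5, 0.3, 1.7];   % sample pre-activations, kept away from 0
h = 1e-6;                     % finite-difference step

% Analytic derivatives written in terms of the layer output, as in backprop
y_s = sigmoid_fn(z);
d_sigmoid = y_s .* (1 - y_s);          % sigma'(z) = y .* (1 - y)
d_relu    = double(relu_fn(z) > 0);    % relu'(z) = 1 where the output is positive

% Central differences
num_sigmoid = (sigmoid_fn(z + h) - sigmoid_fn(z - h)) / (2 * h);
num_relu    = (relu_fn(z + h) - relu_fn(z - h)) / (2 * h);

fprintf('max sigmoid error: %g\n', max(abs(d_sigmoid - num_sigmoid)));
fprintf('max relu error:    %g\n', max(abs(d_relu - num_relu)));
```

The sample points avoid z = 0, where ReLU is not differentiable; at that point the mask `Y{i} > 0` is false, so the code effectively uses 0 as the derivative.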

Creating the structure of the deep network

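```matlab
function [dnn, parameter] = creatnn(K)
%CREATNN Create a fully connected network and its default training parameters.
%   K.a: vector of layer sizes, e.g. [784, 400, 300, 500, 10]
%   K.f: cell array of activation names ("relu" or "sigmoid"), one per weight layer
%
%   parameter is a struct with the fields:
%     learning_rate:    learning rate
%     momentum:         momentum coefficient, typically 0.5, 0.9 or 0.99
%     attenuation_rate: decay rate (used by RMSProp)
%     delta:            small constant for numerical stability
%     step:             step size, typically 0.001 (not used by backprop)
%     method:           one of {'SGD','mSGD','nSGD','AdaGrad','RMSProp','nRMSProp','Adam'}
%     beta1, beta2:     exponential decay rates for Adam

L = size(K.a, 2);   % number of layers, including the input layer

for i = 1:L-1
    % Glorot (Xavier) uniform initialization: W ~ U(-r, r), r = sqrt(6/(n_in + n_out)).
    % Equivalent to unifrnd(-r, r, ...) but needs no Statistics Toolbox.
    r = sqrt(6 / (K.a(i) + K.a(i+1)));
    dnn{i}.W = (2 * rand(K.a(i+1), K.a(i)) - 1) * r;
    % dnn{i}.W = normrnd(0, 0.1, K.a(i+1), K.a(i));  % alternative: Gaussian initialization
    dnn{i}.function = K.f{i};
    dnn{i}.b = 0.01 * ones(K.a(i+1), 1);
end

parameter.learning_rate = 0.01;
parameter.momentum = 0.9;
parameter.attenuation_rate = 0.9;
parameter.delta = 1e-6;
parameter.step = 0.001;
parameter.method = "SGD";
parameter.beta1 = 0.9;
parameter.beta2 = 0.999;
end
```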
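`creatnn` draws each weight matrix uniformly from [-r, r] with r = sqrt(6/(n_in + n_out)), which is the Glorot (Xavier) uniform initialization; the variance of that distribution, r^2/3, equals 2/(n_in + n_out). The sketch below, assuming the layer sizes of the training script later in this article, checks this empirically using plain `rand` rather than `unifrnd` (which belongs to the Statistics and Machine Learning Toolbox):

```matlab
% Glorot (Xavier) uniform initialization as used in creatnn
a = [784, 400, 300, 500, 10];        % layer sizes from the training script

for i = 1:numel(a) - 1
    r = sqrt(6 / (a(i) + a(i+1)));         % half-width of the uniform interval
    W = (2 * rand(a(i+1), a(i)) - 1) * r;  % U(-r, r), size n_out x n_in
    target_var = 2 / (a(i) + a(i+1));      % Var(U(-r, r)) = r^2 / 3
    fprintf('layer %d: r = %.4f, var(W) = %.6f (target %.6f)\n', ...
            i, r, var(W(:)), target_var);
end
```

Keeping the weight variance near 2/(n_in + n_out) is meant to stop activations and gradients from shrinking or exploding as they pass through many layers.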

Building the forward propagation function

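```matlab
function [y, Y] = forwordprop(dnn, x)
%FORWORDPROP Forward propagation through the network.
%   x: input batch, one sample per column
%   y: output of the last layer
%   Y: cell array of layer outputs, Y{1} = x and Y{i+1} = output of layer i

L = size(dnn, 2);   % number of weight layers
m = size(x, 2);     % batch size
Y{1} = x;

for i = 1:L
    % Affine transform; repmat copies the bias column to every sample
    z = dnn{i}.W * x + repmat(dnn{i}.b, 1, m);
    if dnn{i}.function == "relu"
        y = relu(z);
    elseif dnn{i}.function == "sigmoid"
        y = sigmoid(z);
    end
    Y{i+1} = y;
    x = y;
end
end
```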
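In `forwordprop` every column of `x` is one sample, so a layer whose `W` is n_out x n_in maps an n_in x m batch to an n_out x m batch. A standalone sketch of the same data flow, assuming a hypothetical 4-3-2 network with random weights and anonymous activation functions in place of `relu.m` and `sigmoid.m`:

```matlab
% Minimal forward pass with the same data layout as forwordprop
sizes = [4, 3, 2];                                   % hypothetical network: 4 -> 3 -> 2
acts  = {@(z) max(z, 0), @(z) 1 ./ (1 + exp(-z))};   % ReLU hidden, sigmoid output
m = 5;                                               % batch size

x = randn(sizes(1), m);        % random input batch, one sample per column
Y = cell(1, numel(sizes));
Y{1} = x;
for i = 1:numel(sizes) - 1
    W = 0.1 * randn(sizes(i+1), sizes(i));   % n_out x n_in
    b = 0.01 * ones(sizes(i+1), 1);          % n_out x 1
    z = W * Y{i} + repmat(b, 1, m);          % bias copied to every column
    Y{i+1} = acts{i}(z);
end

disp(size(Y{end}));   % one column of class scores per sample
```

On MATLAB R2016b and later, implicit expansion makes `W * Y{i} + b` equivalent to the `repmat` form.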

Building the error backpropagation function

```matlab
function [dnn] = backprop(x, label, dnn, parameter)
%BACKPROP One forward/backward pass on a mini-batch, followed by a parameter update.
%   parameter is a struct with the fields:
%     learning_rate:    learning rate
%     momentum:         momentum coefficient, typically 0.5, 0.9 or 0.99
%     attenuation_rate: decay rate (used by RMSProp)
%     delta:            small constant for numerical stability
%     step:             step size, typically 0.001 (not used here)
%     beta1, beta2:     exponential decay rates for Adam
%     method:           one of "SGD", "mSGD", "nSGD", "AdaGrad", "RMSProp", "nRMSProp", "Adam"
%                       ("nSGD" is not implemented and behaves like "mSGD";
%                        "nRMSProp" is not implemented and raises an error)

L = size(dnn, 2) + 1;   % number of layers, including the input layer
m = size(x, 2);         % mini-batch size

[y, Y] = forwordprop(dnn, x);

% Gradient of the cross-entropy loss w.r.t. the output (up to the 1/m factor);
% delta keeps the denominators away from zero when the sigmoid saturates
g = -label ./ (y + parameter.delta) + (1 - label) ./ (1 - y + parameter.delta);

% Persistent state survives between calls: run "clear backprop" (or "clear all")
% before starting a new training run or switching method.
persistent global_step;
if isempty(global_step)
    global_step = 0;
end
global_step = global_step + 1;   % also used for Adam's bias correction

% Training loss of every mini-batch, stored in the global array E
global E;
E(global_step) = sum(sum(-label .* log(y + parameter.delta) ...
    - (1 - label) .* log(1 - y + parameter.delta))) / m;

% Optimizer state: V holds the velocity (mSGD) or the squared-gradient
% accumulator (AdaGrad, RMSProp, Adam); s holds Adam's first moment
persistent V s;
if isempty(V)
    for i = 1:L-1
        V{i}.vw = zeros(size(dnn{i}.W));
        V{i}.vb = zeros(size(dnn{i}.b));
        s{i}.vw = zeros(size(dnn{i}.W));
        s{i}.vb = zeros(size(dnn{i}.b));
    end
end

for i = L:-1:2
    % Back-propagate through the activation of layer i-1
    if dnn{i-1}.function == "relu"
        g = g .* (Y{i} > 0);
    elseif dnn{i-1}.function == "sigmoid"
        g = g .* Y{i} .* (1 - Y{i});
    end

    % Mini-batch averaged gradients of W and b
    dw = g * Y{i-1}.' / m;
    db = sum(g, 2) / m;

    % Propagate the error to the previous layer before W is updated
    g = dnn{i-1}.W' * g;

    switch parameter.method
        case "SGD"
            dnn{i-1}.W = dnn{i-1}.W - parameter.learning_rate * dw;
            dnn{i-1}.b = dnn{i-1}.b - parameter.learning_rate * db;
        case {"mSGD", "nSGD"}   % Nesterov momentum not implemented: plain momentum
            V{i-1}.vw = parameter.momentum * V{i-1}.vw - parameter.learning_rate * dw;
            V{i-1}.vb = parameter.momentum * V{i-1}.vb - parameter.learning_rate * db;
            dnn{i-1}.W = dnn{i-1}.W + V{i-1}.vw;
            dnn{i-1}.b = dnn{i-1}.b + V{i-1}.vb;
        case "AdaGrad"
            V{i-1}.vw = V{i-1}.vw + dw .* dw;
            V{i-1}.vb = V{i-1}.vb + db .* db;
            dnn{i-1}.W = dnn{i-1}.W - parameter.learning_rate ./ (parameter.delta + sqrt(V{i-1}.vw)) .* dw;
            dnn{i-1}.b = dnn{i-1}.b - parameter.learning_rate ./ (parameter.delta + sqrt(V{i-1}.vb)) .* db;
        case "RMSProp"
            rho = parameter.attenuation_rate;
            V{i-1}.vw = rho * V{i-1}.vw + (1 - rho) * dw .* dw;
            V{i-1}.vb = rho * V{i-1}.vb + (1 - rho) * db .* db;
            dnn{i-1}.W = dnn{i-1}.W - parameter.learning_rate ./ sqrt(parameter.delta + V{i-1}.vw) .* dw;
            dnn{i-1}.b = dnn{i-1}.b - parameter.learning_rate ./ sqrt(parameter.delta + V{i-1}.vb) .* db;
        case "Adam"
            b1 = parameter.beta1;
            b2 = parameter.beta2;
            % First moment decays with beta1, second moment with beta2
            s{i-1}.vw = b1 * s{i-1}.vw + (1 - b1) * dw;
            s{i-1}.vb = b1 * s{i-1}.vb + (1 - b1) * db;
            V{i-1}.vw = b2 * V{i-1}.vw + (1 - b2) * dw .* dw;
            V{i-1}.vb = b2 * V{i-1}.vb + (1 - b2) * db .* db;
            % Bias-corrected moment estimates
            mw = s{i-1}.vw / (1 - b1^global_step);
            mb = s{i-1}.vb / (1 - b1^global_step);
            vw = V{i-1}.vw / (1 - b2^global_step);
            vb = V{i-1}.vb / (1 - b2^global_step);
            dnn{i-1}.W = dnn{i-1}.W - parameter.learning_rate * mw ./ (parameter.delta + sqrt(vw));
            dnn{i-1}.b = dnn{i-1}.b - parameter.learning_rate * mb ./ (parameter.delta + sqrt(vb));
        otherwise
            error('Method "%s" is not implemented in backprop.', parameter.method);
    end
end
end
```
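To sanity-check the backpropagation formulas (the chain rule through the sigmoid and `dw = g*Y{i-1}.'/m`), this standalone sketch compares them with central finite differences on a single hypothetical sigmoid layer with the same mean cross-entropy loss. It assumes random data and covers only the output layer, not the whole `backprop` function:

```matlab
% Numerical check of the output-layer gradient computed as in backprop
sigmoid_fn = @(z) 1 ./ (1 + exp(-z));
n_in = 3; n_out = 2; m = 4;              % hypothetical tiny layer and batch

x = randn(n_in, m);
t = double(rand(n_out, m) > 0.5);        % random 0/1 targets
W = 0.5 * randn(n_out, n_in);
b = zeros(n_out, 1);

% Mean cross-entropy loss, matching the E(global_step) formula
loss = @(W, b) sum(sum(-t .* log(sigmoid_fn(W*x + repmat(b, 1, m))) ...
    - (1 - t) .* log(1 - sigmoid_fn(W*x + repmat(b, 1, m))))) / m;

% Analytic gradient, following the steps in backprop
y  = sigmoid_fn(W*x + repmat(b, 1, m));
g  = -t ./ y + (1 - t) ./ (1 - y);       % dLoss/dy, up to the 1/m factor
g  = g .* y .* (1 - y);                  % through the sigmoid: simplifies to y - t
dw = g * x.' / m;
db = sum(g, 2) / m;

% Central-difference gradient for every entry of W and b
h = 1e-6;
num_dw = zeros(size(W));
for k = 1:numel(W)
    E_k = zeros(size(W)); E_k(k) = h;
    num_dw(k) = (loss(W + E_k, b) - loss(W - E_k, b)) / (2 * h);
end
num_db = zeros(size(b));
for k = 1:numel(b)
    e_k = zeros(size(b)); e_k(k) = h;
    num_db(k) = (loss(W, b + e_k) - loss(W, b - e_k)) / (2 * h);
end

fprintf('max |dw - num_dw| = %g\n', max(abs(dw(:) - num_dw(:))));
fprintf('max |db - num_db| = %g\n', max(abs(db - num_db)));
```

The simplification `g = y - t` at the output is why a sigmoid output layer pairs well with the cross-entropy loss: the large factors `1./y` and `1./(1 - y)` cancel against the sigmoid derivative.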

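- Note: if the accuracy during training is very low and stays constant, suspect numerical instability: NaN (not-a-number) values have most likely appeared. Make sure the denominators of `g` at the start of `backprop` contain a small stabilizing constant (such as `parameter.delta`).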
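The NaN comes from the saturating sigmoid: in double precision `1 ./ (1 + exp(-z))` rounds to exactly 1 once z exceeds roughly 37, so for a sample whose label is 1 the term `(1 - label)./(1 - y)` becomes 0/0. A small sketch, assuming a stabilizing constant `delta = 1e-6` playing the role of `parameter.delta`:

```matlab
% How a saturated sigmoid output turns the loss gradient into NaN
delta = 1e-6;              % same role as parameter.delta
y = 1 ./ (1 + exp(-40));   % sigmoid(40) rounds to exactly 1 in double precision
label = 1;

g_plain  = -label ./ y + (1 - label) ./ (1 - y);                   % 0/0 gives NaN
g_stable = -label ./ (y + delta) + (1 - label) ./ (1 - y + delta); % stays finite

fprintf('y == 1: %d, plain g: %g, stabilized g: %g\n', y == 1, g_plain, g_stable);
```

Once a single NaN enters `g`, it propagates into every weight it touches, which is why the accuracy then freezes.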

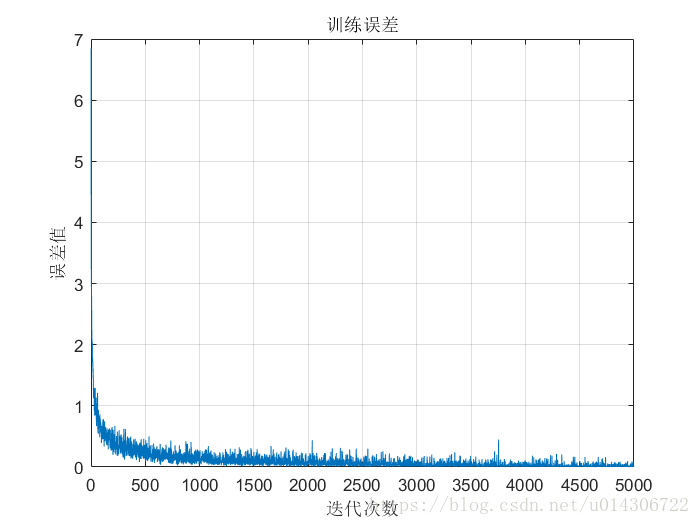

That completes all the functions the network needs. Next, we build a feedforward neural network with three hidden layers to recognize the MNIST dataset.

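```matlab
clear all   % also resets the persistent optimizer state inside backprop

load('mnist_uint8.mat');

% Scale pixels to [0, 1] and store one sample per column
test_x = (double(test_x) / 255)';
train_x = (double(train_x) / 255)';
test_y = double(test_y.');
train_y = double(train_y.');

% Three ReLU hidden layers (400, 300, 500 units), sigmoid output layer (10 classes)
K.f = {"relu", "relu", "relu", "sigmoid"};
K.a = [784, 400, 300, 500, 10];
[net, P] = creatnn(K);

P.method = "RMSProp";
P.learning_rate = 0.001;

m = size(train_x, 2);   % number of training samples
batch_size = 100;
MAX_P = 2000;           % number of training iterations (mini-batches)
global E;               % per-iteration training loss, filled in by backprop

for i = 1:MAX_P
    % Sample a random mini-batch (with replacement)
    q = randi(m, 1, batch_size);
    batch_x = train_x(:, q);
    batch_y = train_y(:, q);

    net = backprop(batch_x, batch_y, net, P);

    if mod(i, 50) == 0
        % Accuracy on the current mini-batch
        [output, ~] = forwordprop(net, batch_x);
        [~, index0] = max(output);
        [~, index1] = max(batch_y);
        rate = sum(index0 == index1) / batch_size;
        fprintf("Accuracy on mini-batch %d: %f\n", i, rate)

        % Accuracy on the full test set
        [output, ~] = forwordprop(net, test_x);
        [~, index0] = max(output);
        [~, index1] = max(test_y);
        rate = sum(index0 == index1) / size(test_x, 2);
        fprintf("Test set accuracy: %f\n", rate)
    end
end
```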
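The accuracy in the training loop is computed by taking, for each column, the row index of the largest output (the predicted class) and comparing it with the row index of the 1 in the one-hot label. A toy example with made-up scores for 3 classes and 4 samples, purely for illustration:

```matlab
% Made-up network outputs: 3 classes (rows) x 4 samples (columns)
output = [0.90 0.10 0.20 0.30;
          0.05 0.80 0.10 0.60;
          0.05 0.10 0.70 0.10];
% One-hot labels: the samples belong to classes 1, 2, 3 and 3
labels = [1 0 0 0;
          0 1 0 0;
          0 0 1 1];

[~, pred]  = max(output);   % predicted class of each column
[~, truth] = max(labels);   % true class of each column
acc = sum(pred == truth) / size(output, 2);
fprintf('accuracy: %.2f\n', acc);   % prints accuracy: 0.75
```

If several outputs tie for the maximum, `max` returns the first index.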