```python
import torch
from torchvision import transforms
from torch.utils.data import Dataset, DataLoader
from PIL import Image
```
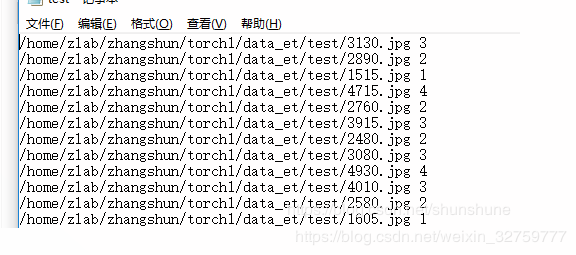

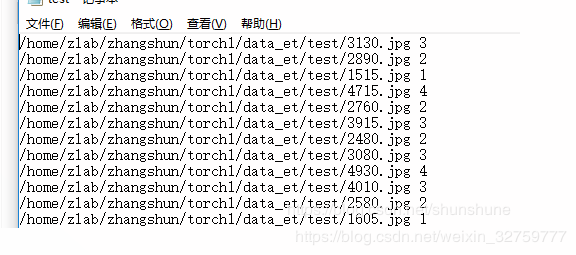

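```python
root = "/home/zlab/zhangshun/torch1/data_et/"


def default_loader(path):
    # Open the image and force 3-channel RGB (handles grayscale/RGBA files)
    return Image.open(path).convert('RGB')


class MyDataset(Dataset):
    """Reads samples from a text file with one "<image_path> <label>" pair per line."""

    def __init__(self, txt, transform=None, target_transform=None, loader=default_loader):
        imgs = []
        with open(txt, 'r') as fh:  # the file is closed automatically
            for line in fh:
                words = line.split()
                if not words:  # skip blank lines, e.g. a trailing newline
                    continue
                # Paths are used as written (absolute, or relative to the working directory)
                imgs.append((words[0], int(words[1])))
        self.imgs = imgs
        self.transform = transform
        self.target_transform = target_transform
        self.loader = loader

    def __getitem__(self, index):
        fn, label = self.imgs[index]
        img = self.loader(fn)
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            label = self.target_transform(label)
        return img, label

    def __len__(self):
        return len(self.imgs)
```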
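The list file that `MyDataset` reads is assumed to hold one `<image_path> <label>` pair per line, as the `words[0]` / `int(words[1])` parsing implies. Below is a minimal stdlib sketch of that parsing with a hypothetical `parse_list_file` helper and made-up sample lines. It deliberately deviates from the class above in one way: it uses `rsplit(maxsplit=1)` instead of `split()`, so a path containing spaces still parses.

```python
from typing import Iterable, List, Tuple


def parse_list_file(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Parse "<image_path> <label>" lines into (path, label) pairs.

    Hypothetical helper: blank lines are skipped, and the label is taken
    from the last whitespace-separated field so paths may contain spaces.
    """
    samples = []
    for lineno, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line:
            continue
        parts = line.rsplit(maxsplit=1)
        if len(parts) != 2:
            raise ValueError(f"line {lineno}: expected '<path> <label>', got {raw!r}")
        path, label = parts
        samples.append((path, int(label)))
    return samples


# Made-up example contents of a train.txt file
example = [
    "images/cat_001.jpg 0\n",
    "images/dog_001.jpg 1\n",
    "\n",
    "images/my dog 2.jpg 1\n",
]
print(parse_list_file(example))
# [('images/cat_001.jpg', 0), ('images/dog_001.jpg', 1), ('images/my dog 2.jpg', 1)]
```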

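```python
# ToTensor() alone keeps each image at its original size; the default collate
# function can only stack a batch if every image has the same height and width.
train_data = MyDataset(txt=root + 'train.txt', transform=transforms.ToTensor())
test_data = MyDataset(txt=root + 'test.txt', transform=transforms.ToTensor())

train_loader = DataLoader(dataset=train_data, batch_size=64, shuffle=True)
test_loader = DataLoader(dataset=test_data, batch_size=64)
```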
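`DataLoader` only relies on the `__len__` / `__getitem__` pair that `MyDataset` defines. The sketch below is a simplified, single-process model of what it does with `batch_size`, `shuffle` and `drop_last`, in plain Python with hypothetical `ToyDataset` and `iterate_batches` names. The real loader additionally collates each batch into tensors, can use worker processes, and reshuffles on every epoch.

```python
import random
from typing import Iterator, List, Tuple


class ToyDataset:
    """Hypothetical map-style dataset: same protocol as MyDataset."""

    def __init__(self, samples: List[Tuple[str, int]]):
        self.samples = samples

    def __getitem__(self, index: int) -> Tuple[str, int]:
        return self.samples[index]

    def __len__(self) -> int:
        return len(self.samples)


def iterate_batches(dataset, batch_size: int, shuffle: bool = False,
                    drop_last: bool = False, seed: int = 0) -> Iterator[list]:
    """Simplified model of DataLoader's index sampling and batching (no collation)."""
    indices = list(range(len(dataset)))
    if shuffle:
        # Fixed seed here for reproducibility; the real loader reshuffles every epoch
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        batch_idx = indices[start:start + batch_size]
        if drop_last and len(batch_idx) < batch_size:
            break  # discard the final incomplete batch
        yield [dataset[i] for i in batch_idx]


ds = ToyDataset([(f"img_{i}.jpg", i % 2) for i in range(10)])
print([len(b) for b in iterate_batches(ds, batch_size=4)])                  # [4, 4, 2]
print([len(b) for b in iterate_batches(ds, batch_size=4, drop_last=True)])  # [4, 4]
```

With `drop_last=False` (the default, and the setting used in the ImageFolder loaders below) the last batch may be smaller than `batch_size`.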


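If the images differ in size, resize them to a common shape before batching. Only the transform changes; `root` and `MyDataset` are the same as above:

```python
# Every image becomes 3 x 224 x 224, so batches of 64 can be stacked.
transform = transforms.Compose([
    transforms.Resize((256, 256)),  # (h, w) tuple: force 256 x 256, ignoring aspect ratio
    transforms.CenterCrop(224),     # keep the central 224 x 224 region
    transforms.ToTensor(),
])

train_data = MyDataset(txt=root + 'train.txt', transform=transform)
test_data = MyDataset(txt=root + 'test.txt', transform=transform)

train_loader = DataLoader(dataset=train_data, batch_size=64, shuffle=True)
test_loader = DataLoader(dataset=test_data, batch_size=64)
```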
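`Resize` treats its argument differently depending on its type: a `(h, w)` tuple sets both sides exactly, while a single int (as in `transform_test` below) scales the shorter side to that value and keeps the aspect ratio. A stdlib sketch of the resulting sizes, with hypothetical `resized_size` and `center_crop_box` helpers. The integer truncation of the longer side and the rounding of the crop offsets mirror how torchvision computes them to my knowledge; treat the exact rounding as an assumption.

```python
from typing import Tuple, Union


def resized_size(h: int, w: int, size: Union[int, Tuple[int, int]]) -> Tuple[int, int]:
    """Output (h, w) of Resize(size) on an h x w image."""
    if isinstance(size, tuple):
        return size  # (h, w): both sides forced, aspect ratio not preserved
    if w <= h:  # width is the shorter side
        return int(size * h / w), size
    return size, int(size * w / h)


def center_crop_box(h: int, w: int, crop: int) -> Tuple[int, int, int, int]:
    """(top, left, height, width) of a square CenterCrop(crop); assumes crop <= h, w."""
    top = int(round((h - crop) / 2.0))
    left = int(round((w - crop) / 2.0))
    return top, left, crop, crop


# A hypothetical 480 x 640 (h x w) photo
print(resized_size(480, 640, (256, 256)))  # (256, 256): aspect ratio distorted
print(resized_size(480, 640, 256))         # (256, 341): aspect ratio kept
print(center_crop_box(256, 341, 224))      # (16, 58, 224, 224)
```

Either way the final tensor is 224 x 224; the tuple form stretches non-square images, while the int form crops away part of the longer side instead.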

Using ImageFolder

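```python
import os

from torch.utils.data import DataLoader
from torchvision import datasets, transforms

TRAIN_DIR = 'train'
VALIDATION_DIR = 'valid'

# ImageNet per-channel statistics. Normalize computes (x - mean) / std per
# channel, so the second tuple holds standard deviations, not variances.
MEAN_RGB = (0.485, 0.456, 0.406)
STD_RGB = (0.229, 0.224, 0.225)

transform_train = transforms.Compose([
    # Random crop covering 20%-100% of the image area, resized to 224 x 224.
    # RandomSizedCrop is the deprecated (and in recent torchvision, removed) name.
    transforms.RandomResizedCrop(224, scale=(0.2, 1.0)),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(MEAN_RGB, STD_RGB),
])

transform_test = transforms.Compose([
    transforms.Resize(256),      # shorter side -> 256, aspect ratio kept
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(MEAN_RGB, STD_RGB),
])
```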
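The `scale=(0.2, 1.0)` argument of the random crop in `transform_train` is the fraction of the image area the crop covers before it is resized to 224 x 224; the aspect ratio (w / h) is drawn separately, and torchvision's default range for it is 3/4 to 4/3. The sketch below models that sampling in plain Python with a hypothetical `sample_resized_crop` helper. It is modeled on torchvision's `RandomResizedCrop.get_params` (up to 10 rejection-sampling attempts, then a central-crop fallback), not copied from it.

```python
import math
import random
from typing import Optional, Tuple


def sample_resized_crop(height: int, width: int,
                        scale: Tuple[float, float] = (0.2, 1.0),
                        ratio: Tuple[float, float] = (3 / 4, 4 / 3),
                        rng: Optional[random.Random] = None) -> Tuple[int, int, int, int]:
    """Return (top, left, h, w) of a random crop, modeled on RandomResizedCrop.

    The crop covers a random fraction `scale` of the image area, with an aspect
    ratio (w / h) drawn log-uniformly from `ratio`; the caller then resizes the
    crop to the output size.
    """
    rng = rng or random.Random()
    area = height * width
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(10):  # rejection sampling: retry if the crop does not fit
        target_area = area * rng.uniform(*scale)
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= width and 0 < h <= height:
            top = rng.randint(0, height - h)  # randint includes both endpoints
            left = rng.randint(0, width - w)
            return top, left, h, w
    # Fallback: central crop with the aspect ratio clamped into `ratio`
    in_ratio = width / height
    if in_ratio < ratio[0]:
        w, h = width, int(round(width / ratio[0]))
    elif in_ratio > ratio[1]:
        h, w = height, int(round(height * ratio[1]))
    else:
        h, w = height, width
    return (height - h) // 2, (width - w) // 2, h, w


rng = random.Random(0)
for _ in range(3):
    top, left, h, w = sample_resized_crop(480, 640, rng=rng)
    print(top, left, h, w, f"area fraction = {h * w / (480 * 640):.2f}")
```

A lower bound of 0.2 means some training crops show only a small part of the object, which is a strong augmentation; after rounding, the realized area fraction can fall marginally outside `scale`.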

```python
def get_imagenet_dataset(batch_size, dataset_root='./dataset/imagenet/', dataset_type='train'):
    """Build an ImageNet DataLoader.

    Expects ImageFolder layouts: <dataset_root>/train/<class>/<image> and
    <dataset_root>/valid/<class>/<image>.
    """
    if dataset_type == 'train':
        train_dataset_root = os.path.join(dataset_root, TRAIN_DIR)
        trainset = datasets.ImageFolder(root=train_dataset_root, transform=transform_train)
        trainloader = DataLoader(trainset,
                                 batch_size=batch_size,
                                 shuffle=True,
                                 num_workers=8,
                                 pin_memory=True,
                                 drop_last=False)
        print('Successfully initialized the ImageNet train DataLoader!')
        return trainloader

    elif dataset_type in ('val', 'valid'):
        val_dataset_root = os.path.join(dataset_root, VALIDATION_DIR)
        valset = datasets.ImageFolder(root=val_dataset_root, transform=transform_test)
        valloader = DataLoader(valset,
                               batch_size=batch_size,
                               shuffle=False,
                               num_workers=8,
                               pin_memory=False,
                               drop_last=False)
        print('Successfully initialized the ImageNet val DataLoader!')
        return valloader

    else:
        raise ValueError('IMAGENET DataLoader: Unknown dataset type -- %s' % dataset_type)
```
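Unlike `MyDataset`, `ImageFolder` needs no label file: it treats each subdirectory of `root` as a class, sorts the class names, and numbers them from 0 (exposed as `class_to_idx`). The stdlib sketch below mimics that labeling rule with a hypothetical `list_image_folder` helper on a throwaway directory tree, then writes the samples in the `<path> <label>` format `MyDataset` reads, which is one way to move between the two approaches. The real `ImageFolder` accepts a longer list of image extensions; the `EXTENSIONS` tuple here is an assumed subset for illustration.

```python
import os
import tempfile
from typing import Dict, List, Tuple

# Assumed subset of accepted image extensions, for illustration only
EXTENSIONS = ('.jpg', '.jpeg', '.png')


def list_image_folder(root: str) -> Tuple[Dict[str, int], List[Tuple[str, int]]]:
    """Hypothetical helper mimicking ImageFolder's labeling rule."""
    # Class names = immediate subdirectories, sorted alphabetically
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    class_to_idx = {name: i for i, name in enumerate(classes)}
    samples = []
    for name in classes:
        for dirpath, _, fnames in sorted(os.walk(os.path.join(root, name))):
            for fname in sorted(fnames):
                if fname.lower().endswith(EXTENSIONS):  # case-insensitive match
                    samples.append((os.path.join(dirpath, fname), class_to_idx[name]))
    return class_to_idx, samples


with tempfile.TemporaryDirectory() as root:
    # Throwaway tree root/<class>/<file>; the files are empty placeholders
    for rel in ['dog/b.jpg', 'cat/a.png', 'cat/notes.txt', 'bird/c.JPG']:
        path = os.path.join(root, rel)
        os.makedirs(os.path.dirname(path), exist_ok=True)
        open(path, 'w').close()

    class_to_idx, samples = list_image_folder(root)
    print(class_to_idx)  # {'bird': 0, 'cat': 1, 'dog': 2}

    # The same samples in the "<path> <label>" format that MyDataset reads
    with open(os.path.join(root, 'train.txt'), 'w') as f:
        for path, label in samples:
            f.write(f"{path} {label}\n")
```

Because labels come from sorted directory names, adding or renaming a class folder shifts the indices of the classes after it, so a model trained on one tree should be evaluated on a tree with the same set of class folders.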