Faster-RCNN_TF Training

code

Code: Github

For the details of Faster R-CNN itself, see: paper

Runtime environment

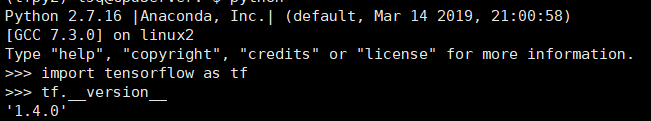

The author's environment is Linux + TensorFlow 1.4 + Python 2.7; the code itself targets Python 2.7.

Error collection

- /usr/local/lib/python2.7/dist-packages/tensorflow/include/tensorflow/core/platform/default/mutex.h:25:22: fatal error: nsync_cv.h: No such file or directory.

Solution:

In the mutex.h file at the path shown in the error, change

    #include "nsync_cv.h"
    #include "nsync_mu.h"

to

    #include "external/nsync/public/nsync_cv.h"
    #include "external/nsync/public/nsync_mu.h"
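If the header has to be patched on more than one machine, the edit can be scripted. The following is a minimal sketch, assuming the two include lines appear exactly as shown above. The function name `patch_nsync_includes` is hypothetical, and the commented usage relies on `tf.sysconfig.get_include()` to locate TensorFlow's headers.

```python
import re

# Directory prefix that the fixed include lines use.
NSYNC_PREFIX = "external/nsync/public/"


def patch_nsync_includes(text):
    """Prefix bare nsync_cv.h / nsync_mu.h includes with external/nsync/public/.

    Lines that already carry the prefix do not match the pattern,
    so running the patch twice leaves the text unchanged.
    """
    pattern = r'#include "(nsync_(?:cv|mu)\.h)"'
    return re.sub(pattern, r'#include "' + NSYNC_PREFIX + r'\1"', text)


# Usage sketch (needs write access to the TensorFlow installation):
# import os
# import tensorflow as tf
# path = os.path.join(tf.sysconfig.get_include(),
#                     "tensorflow/core/platform/default/mutex.h")
# with open(path) as f:
#     source = f.read()
# with open(path, "w") as f:
#     f.write(patch_nsync_includes(source))
```

Back up the original mutex.h before overwriting it, since the file belongs to the installed TensorFlow package.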

---------------------

- tensorflow.python.framework.errors_impl.NotFoundError: /home/lsq/detection/Faster-RCNN_TF/tools/…/lib/roi_pooling_layer/roi_pooling.so: undefined symbol: _ZTIN10tensorflow8OpKernelE

Edit Faster-RCNN_TF/lib/make.sh as follows:

    TF_INC=$(python -c 'import tensorflow as tf; print(tf.sysconfig.get_include())')
    TF_LIB=$(python -c 'import tensorflow as tf; print(tf.sysconfig.get_lib())')

    CUDA_PATH=/usr/local/cuda/
    CXXFLAGS=''

    if [[ "$OSTYPE" =~ ^darwin ]]; then
        CXXFLAGS+='-undefined dynamic_lookup'
    fi

    cd roi_pooling_layer

    if [ -d "$CUDA_PATH" ]; then
        # -arch must match the compute capability of your GPU (sm_37 was used here)
        nvcc -std=c++11 -c -o roi_pooling_op.cu.o roi_pooling_op_gpu.cu.cc \
            -I $TF_INC -D GOOGLE_CUDA=1 -x cu -Xcompiler -fPIC $CXXFLAGS --expt-relaxed-constexpr \
            -arch=sm_37

        # -ltensorflow_framework supplies the missing tensorflow::OpKernel symbol;
        # -D_GLIBCXX_USE_CXX11_ABI=0 selects the old libstdc++ ABI
        g++ -std=c++11 -shared -o roi_pooling.so roi_pooling_op.cc -D_GLIBCXX_USE_CXX11_ABI=0 \
            roi_pooling_op.cu.o -I $TF_INC -I $TF_INC/external/nsync/public -L $TF_LIB -D GOOGLE_CUDA=1 -ltensorflow_framework -fPIC $CXXFLAGS \
            -lcudart -L $CUDA_PATH/lib64
    else
        g++ -std=c++11 -shared -o roi_pooling.so roi_pooling_op.cc \
            -I $TF_INC -fPIC $CXXFLAGS
    fi

    cd ..

Related links:

link1

Github

After making the changes, run make again.

- ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape[4096]

Code location: ~/Faster-RCNN_TF/lib/fast_rcnn/train.py

Reference: Resource

Original code:

    def train_net(network, imdb, roidb, output_dir, pretrained_model=None, max_iters=40000):
        """Train a Fast R-CNN network."""
        roidb = filter_roidb(roidb)
        saver = tf.train.Saver(max_to_keep=100)
        with tf.Session(config=tf.ConfigProto(allow_soft_placement=True)) as sess:
            sw = SolverWrapper(sess, saver, network, imdb, roidb, output_dir, pretrained_model=pretrained_model)
            print 'Solving...'
            sw.train_model(sess, max_iters)
            print 'done solving'

Change it to:

    def train_net(network, imdb, roidb, output_dir, pretrained_model=None, max_iters=40000):
        """Train a Fast R-CNN network."""
        roidb = filter_roidb(roidb)
        saver = tf.train.Saver(max_to_keep=100)
        # Use the BFC allocator and cap this process at 40% of GPU memory
        config = tf.ConfigProto(allow_soft_placement=True)
        config.gpu_options.allocator_type = 'BFC'
        config.gpu_options.per_process_gpu_memory_fraction = 0.40
        #with tf.Session(config=tf.ConfigProto(allow_soft_placement=True)) as sess:
        with tf.Session(config=config) as sess:
            sw = SolverWrapper(sess, saver, network, imdb, roidb, output_dir, pretrained_model=pretrained_model)
            print 'Solving...'
            sw.train_model(sess, max_iters)
            print 'done solving'

- ValueError: Object arrays cannot be loaded when allow_pickle=False

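Cause: in the author's case this came up while training on a dataset with Faster R-CNN, and it turned out to be a NumPy version problem. The NumPy initially installed was the then-latest release (1.16.3), in which np.load defaults to allow_pickle=False, so the code as written failed to load its data. After some investigation, downgrading to NumPy 1.16.2 fixed it:

    pip uninstall numpy
    pip install numpy==1.16.2

After this change, the problem was resolved.

- File "/Faster-RCNN_TF/tools/…/lib/roi_data_layer/roidb.py", line 48, in add_bbox_regression_targets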

assert len(roidb) > 0

AssertionError

Solution:

https://github.com/smallcorgi/Faster-RCNN_TF/issues/154

Edit self._classes at line 32 of Faster-RCNN_TF/lib/datasets/pascal_voc.py:

    self._classes = ('__background__','person','bicycle','motorbike','car','bus')

Then edit append_flipped_images at line 104 of Faster-RCNN_TF/lib/datasets/imdb.py:

    def append_flipped_images(self):
        num_images = self.num_images
        widths = self._get_widths()
        for i in xrange(num_images):
            boxes = self.roidb[i]['boxes'].copy()
            oldx1 = boxes[:, 0].copy()
            oldx2 = boxes[:, 2].copy()
            boxes[:, 0] = widths[i] - oldx2 - 1
            boxes[:, 2] = widths[i] - oldx1 - 1
            # Added: clamp x1 to 0 for any box whose flipped x2 ended up left of x1,
            # so that the assertion below no longer fails
            for b in range(len(boxes)):
                if boxes[b][2] < boxes[b][0]:
                    boxes[b][0] = 0
            assert (boxes[:, 2] >= boxes[:, 0]).all()
            entry = {'boxes' : boxes,
                     'gt_overlaps' : self.roidb[i]['gt_overlaps'],
                     'gt_classes' : self.roidb[i]['gt_classes'],
                     'flipped' : True}
            self.roidb.append(entry)
        self._image_index = self._image_index * 2
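To see what the added clamp does, here is a small plain-Python illustration of the same flip rule. It is only a sketch: the function name `flip_boxes` is hypothetical, and the `& 0xFFFF` mask assumes the box coordinates are stored as unsigned 16-bit integers, so that a negative result wraps around to a large value. Check the dtype of the boxes array in your own copy of the dataset code.

```python
UINT16_MASK = 0xFFFF  # assumption: coordinates wrap like unsigned 16-bit values


def flip_boxes(boxes, width):
    """Mirror [x1, y1, x2, y2] boxes horizontally inside an image of `width` pixels.

    Applies the same rule as the patched append_flipped_images:
    if the flipped x2 lands left of the flipped x1, x1 is reset to 0.
    """
    flipped = []
    for x1, y1, x2, y2 in boxes:
        new_x1 = (width - x2 - 1) & UINT16_MASK
        new_x2 = (width - x1 - 1) & UINT16_MASK
        if new_x2 < new_x1:
            new_x1 = 0
        flipped.append([new_x1, y1, new_x2, y2])
    return flipped


# A well-formed box flips normally.
print(flip_boxes([[10, 5, 49, 20]], width=100))
# A box whose x2 lies beyond the image width would wrap around; the clamp resets x1.
print(flip_boxes([[10, 5, 120, 20]], width=100))
```

The clamp only hides the symptom. Annotations whose coordinates fall outside the image are worth fixing at the source as well.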

- Error when retraining

2019-05-08 18:40:00.237193: E tensorflow/stream_executor/cuda/cuda_dnn.cc:385] could not create cudnn handle: CUDNN_STATUS_INTERNAL_ERROR

2019-05-08 18:40:00.237278: E tensorflow/stream_executor/cuda/cuda_dnn.cc:352] could not destroy cudnn handle: CUDNN_STATUS_BAD_PARAM

2019-05-08 18:40:00.237292: F tensorflow/core/kernels/conv_ops.cc:667] Check failed: stream->parent()->GetConvolveAlgorithms( conv_parameters.ShouldIncludeWinogradNonfusedAlgo(), &algorithms)

./experiments/scripts/faster_rcnn_end2end.sh: line 57: 53112 Aborted (core dumped) python ./tools/train_net.py --device ${DEV} --device_id ${DEV_ID} --weights data/pretrain_model/VGG_imagenet.npy --imdb ${TRAIN_IMDB} --iters ${ITERS} --cfg experiments/cfgs/faster_rcnn_end2end.yml --network VGGnet_train ${EXTRA_ARGS}

After looking into it, the cause was the earlier change to ~/Faster-RCNN_TF/lib/fast_rcnn/train.py.

After modification:

    def train_net(network, imdb, roidb, output_dir, pretrained_model=None, max_iters=40000):
        """Train a Fast R-CNN network."""
        roidb = filter_roidb(roidb)
        saver = tf.train.Saver(max_to_keep=100)
        # Allocate GPU memory on demand, up to 60% of the device
        config = tf.ConfigProto()
        config.gpu_options.allow_growth = True
        #config.gpu_options.allocator_type = 'BFC'
        config.gpu_options.per_process_gpu_memory_fraction = 0.60
        #with tf.Session(config=tf.ConfigProto(allow_soft_placement=True)) as sess:
        with tf.Session(config=config) as sess:
            sw = SolverWrapper(sess, saver, network, imdb, roidb, output_dir, pretrained_model=pretrained_model)
            print 'Solving...'
            sw.train_model(sess, max_iters)
            print 'done solving'

Alternatively, clear the cache directory (it is a directory, so -r is required):

    rm -rf ~/.nv/
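The two train_net variants in this post differ only in their GPU options, and the right memory fraction depends on the card and on what else is running on it. One way to avoid editing train.py for every machine is to read the fraction from the environment. The following is a sketch under assumptions: the environment variable FRCNN_GPU_FRACTION and both function names are hypothetical, and the TensorFlow calls are the TF 1.x ones already used above.

```python
import os


def gpu_memory_fraction(default=0.6, env_var="FRCNN_GPU_FRACTION"):
    """Return the GPU memory fraction from the environment, or `default` if unset."""
    raw = os.environ.get(env_var)
    if raw is None:
        return default
    value = float(raw)
    if not 0.0 < value <= 1.0:
        raise ValueError("%s must be in (0, 1], got %r" % (env_var, raw))
    return value


def make_session_config():
    """Build a TF 1.x session config with on-demand growth and a memory cap."""
    import tensorflow as tf  # imported lazily so the helper above works without TF

    config = tf.ConfigProto(allow_soft_placement=True)
    config.gpu_options.allow_growth = True
    config.gpu_options.per_process_gpu_memory_fraction = gpu_memory_fraction()
    return config
```

Inside train_net this would be used as `with tf.Session(config=make_session_config()) as sess:`, and a run could then be started with, for example, `FRCNN_GPU_FRACTION=0.4` set in the shell.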

Test error

Demo for data/demo/000456.jpg

Detection took 0.354s for 300 object proposals

Traceback (most recent call last):

File "./tools/demo.py", line 134, in <module>

demo(sess, net, im_name)

File "./tools/demo.py", line 71, in demo

fig, ax = plt.subplots(figsize=(12, 12))

File "/data8T/lsq/anaconda3/envs/tfpy2/lib/python2.7/site-packages/matplotlib/pyplot.py", line 1184, in subplots

fig = figure(**fig_kw)

File "/data8T/lsq/anaconda3/envs/tfpy2/lib/python2.7/site-packages/matplotlib/pyplot.py", line 533, in figure

**kwargs)

File "/data8T/lsq/anaconda3/envs/tfpy2/lib/python2.7/site-packages/matplotlib/backend_bases.py", line 161, in new_figure_manager

return cls.new_figure_manager_given_figure(num, fig)

File "/data8T/lsq/anaconda3/envs/tfpy2/lib/python2.7/site-packages/matplotlib/backends/_backend_tk.py", line 1046, in new_figure_manager_given_figure

window = Tk.Tk(className="matplotlib")

File "/data8T/lsq/anaconda3/envs/tfpy2/lib/python2.7/lib-tk/Tkinter.py", line 1825, in __init__

self.tk = _tkinter.create(screenName, baseName, className, interactive, wantobjects, useTk, sync, use)

_tkinter.TclError: no display name and no $DISPLAY environment variable

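Solution: edit /tools/demo.py

    import matplotlib
    matplotlib.use('Agg')
    import matplotlib.pyplot as plt
    import numpy as np

The first two lines must sit at the very top of the script, before matplotlib.pyplot is imported. Otherwise the backend setting does not take effect.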
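With the Agg backend no window is opened, so results have to be written to a file instead of being shown on screen. The following sketch selects Agg only when no display is available and saves the figure. The helper names `is_headless` and `save_figure` and the output path are hypothetical; the matplotlib calls (`matplotlib.use`, `plt.subplots`, `fig.savefig`, `plt.close`) are standard.

```python
import os


def is_headless(environ=None):
    """Return True when no X display is configured (DISPLAY unset or empty)."""
    env = os.environ if environ is None else environ
    return not env.get("DISPLAY")


def save_figure(path, figsize=(12, 12)):
    """Create a figure and write it to `path`, using Agg when running headless."""
    import matplotlib
    if is_headless():
        matplotlib.use("Agg")  # must run before matplotlib.pyplot is imported
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=figsize)
    # ... draw the image and the detections on `ax` here ...
    fig.savefig(path)
    plt.close(fig)


# Usage sketch: save_figure("demo_000456.png")
```

In demo.py the same idea amounts to calling `savefig` on each detection figure rather than relying on `plt.show()`.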