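- How to Build and Maintain a Data Warehouse: An In-Depth Guide

database, database development

Abstract: A data warehouse is the core of enterprise data management; it supports decision-making and enables in-depth data analysis. This article explains in detail how to build and maintain an efficient, reliable data warehouse from scratch, covering the full process of design, implementation, monitoring, and optimization. Concrete code examples and best practices help readers understand how a data warehouse is built and managed. Introduction: A data warehouse is the heart of enterprise data management: it centrally stores and manages data from different sources and supports complex queries and analysis. With the explosive growth in data volume, building and maintaining a data warehouse efficiently has become something enterprises face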

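- Scraping Weibo Hot Search Terms with a Python Crawler: The Full Workflow of Data Collection, Analysis, and Visualization

Python爬虫项目

2025 crawler projects in practice, python, crawler, programming language, selenium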

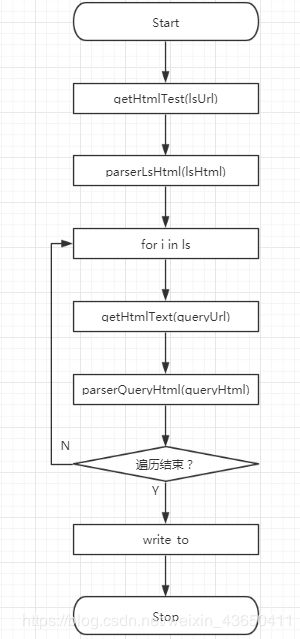

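Introduction: Weibo, one of China's most popular social platforms, is updated with massive amounts of content every moment. Its hot search terms reflect the trending topics, social events, and fashions that users care about. For fields such as data analysis, sentiment analysis, and trend forecasting, obtaining Weibo hot search data is a very valuable task. In this post we explain in detail how to use Python crawling techniques to obtain Weibo hot search terms and then analyze and visualize the data. The end-to-end walkthrough shows how to scrape and analyze hot search data with a crawler. I. Overview of crawler techniques and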

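- [Artificial Intelligence | Big Data] AI-Based Methods for Big Data Analysis

用心去追梦

artificial intelligence, big data, data analysis

AI-based big data analysis refers to using machine learning, deep learning, and other AI techniques to analyze and process large-scale datasets. These methods automatically identify patterns, extract useful information, and make predictions or decisions, helping companies and organizations better understand market trends, customer behavior, and other key factors. The main AI-based approaches include the following. Machine learning models: training algorithms so that a computer learns from historical data and makes predictions or classifications. Common machine learning techniques include supervised learning (e.g., regression analysis, support vector machines), unsupervised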

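- Hive Movie Data Analysis System: Spring Boot Collaborative Filtering (Cosine Similarity) Recommender, 20,000+ Crawled Records, Dashboard Display + [Step-by-Step Video Tutorial and Development Docs]

QQ-1305637939

graduation project, big data, thesis project, computer science graduation project, hive, springboot, crawler

Hive movie data analysis, Spring Boot collaborative filtering (cosine similarity) recommender system, 20,000+ crawled records, dashboard display + [step-by-step video tutorial and development docs] [Features] 1. A Java crawler collects movie data from the 豆瓣电影 (Douban Movies) site and saves it as data.csv, 20,000+ records 2. data.csv is uploaded to the Hadoop cluster 3. MapReduce cleans data.csv 4. Hive aggregates the data, and the results are saved to a local MySQL database 5. Springboot+Vu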
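
The "collaborative filtering with cosine similarity" named in this listing can be illustrated with a minimal sketch. This is not the project's code (the listing does not show it); the user names, film names, and the `recommend` helper are hypothetical, and the weighting scheme is one common choice among several.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two rating vectors (dicts: item -> rating)."""
    shared = set(a) & set(b)
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target, ratings, top_n=3):
    """Rank items the target has not rated by similarity-weighted ratings."""
    scores = {}
    for user, items in ratings.items():
        if user == target:
            continue
        sim = cosine_similarity(ratings[target], items)
        if sim <= 0:
            continue  # users with nothing in common contribute nothing
        for item, rating in items.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical ratings, for illustration only.
ratings = {
    "alice": {"film_a": 5, "film_b": 3},
    "bob": {"film_a": 5, "film_b": 3, "film_c": 4},
    "carol": {"film_d": 5},
}
print(recommend("alice", ratings))
```

Here `carol` shares no rated film with `alice`, so her similarity is zero and only `bob`'s extra film is suggested.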

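- Hadoop Movie Data Analysis System: Spring Boot Collaborative Filtering (Cosine Similarity) Recommender, 20,000+ Crawled Records, Dashboard Display + [Step-by-Step Video Tutorial and Development Docs]

QQ-1305637939

computer science graduation project, graduation project, big data, thesis project, hadoop, springboot, crawler

Complete video tutorial, complete development docs. Hadoop movie data analysis system, Spring Boot collaborative filtering (cosine similarity) recommender system, 20,000+ crawled records, dashboard display [Hadoop project] 1. A Java crawler collects movie data from the 豆瓣电影 (Douban Movies) site and saves it as data.csv, 20,000+ records 2. data.csv is uploaded to the Hadoop cluster 3. data.csv is cleaned 4. MapReduce aggregates the data, and the Reduce output is saved to a local MySQL database 5. Springboot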

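- Spark Movie Data Analysis System: Spring Boot Collaborative Filtering (Cosine Similarity) Recommender, 20,000+ Crawled Records, Dashboard Display + [Step-by-Step Video Tutorial and Development Docs]

QQ-1305637939

graduation project, big data, thesis project, computer science graduation project, spark, springboot, crawler, big data, movie recommendation, movie analysis

Spark movie data analysis system, Spring Boot collaborative filtering (cosine similarity) recommender system, 20,000+ crawled records, dashboard display + [step-by-step video tutorial and development docs] [Features] 1. A Java crawler collects movie data from the 豆瓣电影 (Douban Movies) site and saves it as data.csv, 20,000+ records 2. data.csv is uploaded to the Hadoop cluster 3. MapReduce cleans data.csv 4. Spark aggregates the data, and the results are saved to a local MySQL database 5. Springboo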

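- Hadoop Book Data Analysis System: Spring Boot Collaborative Filtering (Cosine Similarity) Recommender, 10,000+ Crawled Records, Dashboard Display + [Step-by-Step Video Tutorial and Development Docs]

QQ-1305637939

graduation project, big data, thesis project, book data analysis, hadoop, springboot, crawler

Hadoop book data analysis system, Spring Boot collaborative filtering (cosine similarity) recommender system, 10,000+ crawled records, dashboard display + [step-by-step video tutorial and development docs] [Highlights] 1. Springboot+Vue+Element-UI+Mysql with separated front end and back end 2. Echarts charts summarize the data and present it visually 3. After a comment is posted, users can reply to it, replies can themselves be replied to, and top-level comments can carry image attachments 4. 10,000+ crawled book records 5. Recommended book list display, recommended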

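- Big Data Components: An Introduction to Azkaban

努力的小星星

big data, linux, operations, data structures

I. Introduction to Azkaban 1.1 Background: A complete big data analysis system is necessarily made up of many task units (data collection, data cleaning, data storage, data analysis, and so on); all the task units and the dependencies among them form a complex workflow. Managing a complex workflow raises many questions: How do you schedule a task at a set time? How do you run one task only after another has finished? How do you raise an alert when a task fails? ... Workflow schedulers emerged to answer these questions, and Azkaban is one of them. 1.2 Features: Azk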
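
The "run one task only after another has finished" question raised above comes down to ordering tasks by their dependencies. A minimal sketch using Python's standard `graphlib` (Python 3.9+) follows; it is not Azkaban's API or job format, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task units; each key maps to the set of tasks it depends on.
dependencies = {
    "collect": set(),
    "clean": {"collect"},
    "store": {"clean"},
    "analyze": {"store"},
}


def run_order(deps):
    """Return one execution order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

A real scheduler adds timing, retries, and failure alerts on top of this ordering step.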

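- Analysis: What MQ Message-Queue Middleware Is Used for in IM (Instant Messaging) Systems

酱油瓶啤酒杯

middleware, distributed systems, queue, kafka

Uses of MQ message queues in IM: 1) Offline storage of users' chat messages: sending IM messages is a high-throughput scenario, and writing directly to the DB may bring it down, so before offline messages are persisted they can first be pushed to an MQ and then written to the DB at a controlled pace by separately deployed consumers; 2) Collecting user behavior data: users' chat messages, commands, and the like can feed big data analysis, and regulatory requirements also mandate retaining them for a period of time, so this kind of data can likewise be collected through an MQ and then by separately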
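
The buffering pattern in point 1 (producers push to a queue, a separate consumer writes to storage at its own pace) can be sketched in-process. This is an illustration only: `queue.Queue` stands in for a real MQ such as Kafka, the `stored` list stands in for the database, and the batch size is an arbitrary assumption.

```python
import queue
import threading

message_queue = queue.Queue()  # stands in for the MQ
stored = []                    # stands in for the database table


def consumer(batch_size=2):
    """Drain the queue and persist messages in small batches."""
    batch = []
    while True:
        msg = message_queue.get()
        if msg is None:  # sentinel: flush what is left and stop
            break
        batch.append(msg)
        if len(batch) >= batch_size:
            stored.extend(batch)  # one "bulk insert" per batch
            batch = []
    stored.extend(batch)


worker = threading.Thread(target=consumer)
worker.start()

# The sender only enqueues; it never touches the database directly.
for i in range(5):
    message_queue.put({"to": "user_42", "body": f"msg {i}"})
message_queue.put(None)
worker.join()
print(len(stored))
```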

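- Dimensionality Reduction: Principal Component Analysis

一个人在码代码的章鱼

mathematical modeling, machine learning, probability theory

Principal component analysis is a widely used data analysis technique, mainly for dimensionality reduction, with broad application in statistics, machine learning, signal processing, and many other fields. It uses an orthogonal transformation to convert a set of possibly correlated variables into a set of linearly uncorrelated variables (the principal components). The components are ordered by variance from largest to smallest; the larger the variance, the more of the original data's information a component carries. Usually the first few high-variance components are kept, reducing the dimensionality while preserving as much of the original information as possible. By converting many indicators into a few principal components,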

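- Data Analysis: Basic Definitions

阿金要当大魔王~~

data analysis, data analysis, data mining

I. Definition of big data: Data analysis is the process of purposefully collecting, organizing, processing, and analyzing data, for business or other goals, in order to extract valuable information. Big data analysis is the analysis of massive, diverse collections of data; it is a way of analyzing large-scale datasets and mining knowledge from them. As the internet, social media, mobile devices, and other sources generate enormous volumes of data, big data analysis has become an important technology across industries. This article gives a comprehensive overview covering data collection, storage, processing, analysis, visualization, and application, to help readers better understand big data analysis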

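- Big Data Analytics Graduation Projects: The Latest and Most Complete Topic Roundup (Continuously Updated) ⑤

源码空间站11

python, django, big data analysis, data visualization, hadoop, hive, big data analysis thesis project

Contents: Preface, Proposal guidance, More selected topics, Topic help, Closing. Preface: Hello everyone, this is the 源码空间站 senior's special series on graduation projects for big data analytics majors! Senior year is the busiest time of university: you are preparing for graduate entrance exams, civil service exams, teaching certification, or internships to get ready for further study or employment, while the graduation project also demands a great deal of energy. I have compiled the latest selected topics for big data analytics majors; if you have trouble choosing a topic or have any questions about one, feel free to ask (see the end of the post)! Here are some carefully compiled topics: 21. Based on Hadoop and Spa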

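- Reading 通达信 (TDX) Daily Bar Data (.day Files) with Python

逝去的紫枫

Python, python

Reading 通达信 daily bar data (.day files) with Python: 1. Where .day files are located 2. How .day file contents are structured 3. Python code to parse a .day file 4. Writing the parsed result to a CSV file 5. Final result. In financial data analysis, the data files provided by the 通达信 software (such as the daily bar file .day) are a very valuable resource. This article explains in detail how to read and parse these files with Python and write the result to CSV for further analysis and processing. 1. Where .day files are located: 通达信 daily bar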
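
The teaser is cut off before it gives the file layout, so the sketch below rests on an assumption: the commonly described layout of 32-byte little-endian records (date as a YYYYMMDD integer, four prices stored as integers in hundredths, a float amount, an integer volume, and one reserved field). Verify this against real files before relying on it; `parse_day_bytes` is a hypothetical helper, not the article's code.

```python
import struct
from datetime import date

# ASSUMED layout: 5 unsigned ints, 1 float, 2 unsigned ints = 32 bytes.
RECORD = struct.Struct("<IIIIIfII")


def parse_day_bytes(raw):
    """Decode consecutive fixed-size records from the bytes of a .day file."""
    rows = []
    for offset in range(0, len(raw) - RECORD.size + 1, RECORD.size):
        d, o, h, l, c, amount, volume, _reserved = RECORD.unpack_from(raw, offset)
        rows.append({
            "date": date(d // 10000, d // 100 % 100, d % 100),
            "open": o / 100,
            "high": h / 100,
            "low": l / 100,
            "close": c / 100,
            "amount": amount,
            "volume": volume,
        })
    return rows


# Synthetic record for illustration; real input would come from open(path, "rb").read().
sample = RECORD.pack(20240102, 1050, 1100, 1040, 1090, 123456.0, 7890, 0)
rows = parse_day_bytes(sample)
print(rows[0])
```

The resulting list of dicts can be written out with `csv.DictWriter` from the standard library.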

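- Reading 通达信 (TDX) One-Minute K-Line Data (.lc1 Files) with Python

逝去的紫枫

Python, python

Reading 通达信 one-minute K-line data (.lc1 files) with Python: 1. Where .lc1 files are located 2. How .lc1 file contents are structured 3. Python code to parse an .lc1 file 4. Writing the parsed result to a CSV file 5. Final result. In financial data analysis, the data files provided by the 通达信 software (such as the one-minute K-line file .lc1) are a very valuable resource. This article explains in detail how to read and parse these files with Python and write the result to CSV for further analysis and processing. 1. Where .lc1 files are located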

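- Python Web Crawler Tutorial for Beginners: Build Your First Crawler from Scratch

m0_74825223

interviews, learning path, Alibaba, python, crawler, programming language

A web crawler is an automated program that fetches data from websites. With its rich libraries and simple syntax, Python is an ideal language for building crawlers. This article walks you through the basics of Python crawling from scratch and implements a simple crawler project. 1. What is a web crawler? A web crawler is a program that retrieves web page content over network protocols (such as HTTP/HTTPS) and extracts the useful information from it. Common uses include: collecting product prices and reviews; fetching news or blog content; statistical data analysis.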
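
The "fetch a page, then extract the useful information" step described above can be sketched with the standard library alone. To stay runnable offline, the example parses a hard-coded HTML string; in a real crawler the string would come from an HTTP response (for example via `urllib.request.urlopen`). The `LinkCollector` class and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag encountered while parsing."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Stand-in for a downloaded page.
page = (
    '<html><body><a href="/news/1">One</a>'
    '<a href="/blog/2">Two</a><a>no href</a></body></html>'
)
links = extract_links(page)
print(links)
```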

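- Python Data Analysis and Programming, Side Story: Using Jupyter Notebook in vscode

想当糕手

python, data analysis, vscode, jupyter

Preface: In the second article of this series, we showed how to use `if __name__ == "__main__":` to mimic C's main function, combined with wrapping tests in functions, to improve code readability. That is not the best option, of course; this post introduces a more efficient and convenient tool, and I hope it helps! About Jupyter Notebook: Jupyter Notebook is an open-source web application that lets you create and share documents containing live code, equations, visualizations, and explanatory text. It is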

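- Python for Data Analysis, 3.1 Data Structures and Sequences: Tuples, Lists, Dicts, Sets (Reading Notes)

pillow_L

python, data analysis

Chapter 3: Python data structures, functions, and files. 3.1 Data structures and sequences: The common data structures in Python can collectively be called containers. Sequences (such as lists and tuples), mappings (such as dicts), and sets are the three main kinds of container. 1. Tuple: A tuple is a fixed-length, immutable Python sequence object. Like a list, a tuple is a sequence; the only difference is that a tuple cannot be modified (strings share this property). Once initialized, a tuple cannot be changed, and it has none of the list methods such as appe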
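
A short illustration of the immutability described above, as a sketch rather than an excerpt from the book. The last lines show a related, well-known caveat: a tuple cannot be rebound to new elements, but a mutable object stored inside it can still change.

```python
point = (3, 4)

# Item assignment on a tuple raises TypeError.
try:
    point[0] = 10
except TypeError as exc:
    error = str(exc)

# Tuples have no append; convert to a list when a mutable copy is needed.
coords = list(point)
coords.append(5)

# The tuple's slots are fixed, but a list held in a slot is still mutable.
nested = (1, [2, 3])
nested[1].append(4)

print(point, coords, nested)
```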

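- Numpy Basics 01 (Jupyter Basics / Creating and Operating on Ndarrays)

XYX的Blog

data analysis and visualization, numpy, jupyter

Part 1: The Jupyter development environment. IPython is an enhanced interactive Python interpreter that provides auto-completion, command history, magic commands, and more. It supports interaction with operating system commands, inline plotting, and multi-language extensions, and it integrates with Jupyter Notebook, making it well suited to data analysis and scientific computing. IPython also supports remote access, package management, and plugin extensions, making it a powerful and flexible development tool. Jupyter Notebook is a development environment that grew out of IPython. 1.1 Jupyter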

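- 飞轮科技 Wins China Telecom's Xinghai Big Data Best Partner Award!

Recently, the Data Element Cooperation Forum, hosted by the China Telecom Group Data Development Center, was held in Guangzhou. Under the theme "数聚共生·智启未来", the conference aimed to showcase achievements in applying data elements and to explore innovative practice. To thank the 2024 ecosystem partners for supporting the development of China Telecom's data business, the conference held the "Xinghai Big Data Best Partner Award" ceremony. 飞轮科技 received the award for its outstanding performance and deep expertise in data analytics. As a long-term partner of China Telecom, 飞轮科技 has remained committed to providing China Telecom with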

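- Programming Paradigms in Python

AI向前看

miscellaneous, golang, programming language, backend

Programming paradigms in Python: Python is a widely used high-level programming language, popular with programmers for its simple, readable syntax and powerful features. Since its first release in 1991 by the Dutch programmer Guido van Rossum, Python has developed rapidly, and it is now applied in web development, data analysis, artificial intelligence, scientific computing, automation, and many other areas. This article explores Python's programming paradigms in depth to help readers better understand the language's characteristics and strengths. 1. What is a programming paradigm? A programming paradigm is, with respect to programming style,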

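- Cloud Native Weekly: K8s Production Architecture Design and Cost Analysis

KubeSphere 云原生

k8s, container platform, kubesphere, cloud computing

Recommended open-source projects. KubeZoneNet: KubeZoneNet aims to help monitor and optimize cross-zone (cross-availability-zone) network traffic in Kubernetes clusters. The project offers a simple way to track and analyze communication across availability zones within a cluster, helping users optimize the cluster's network architecture, improve resource utilization, and reduce network latency. Through real-time monitoring and data analysis, KubeZoneNet can effectively identify cross-zone network bottlenecks and suggest improvements, in support of Kuber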

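- Concurrency in Practice for Multi-Query Analysis

FADxafs

python

When doing query analysis, some techniques may generate multiple queries. In that case, we need to remember to run all of the queries and merge the results. This article shows how to do so with a simple example (using mock data). Technical background: In data analysis and information retrieval, query analysis techniques help us generate and optimize queries to improve search efficiency. When several queries are generated at once, however, processing them and merging the results effectively becomes especially important. Here we use the langchain library to demonstrate how to handle the multi-query case. Core principle: by generating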
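
The "run every generated query, then merge the results" idea can be sketched without any framework. The article itself uses langchain; the version below deliberately swaps in the standard library's `ThreadPoolExecutor` and a mock `search` function over made-up documents, so none of the names here are langchain's API.

```python
from concurrent.futures import ThreadPoolExecutor

# Mock corpus, for illustration only.
DOCS = [
    "harrison worked at kensho",
    "ankush worked at facebook",
]


def search(query):
    """Mock retriever: return the documents that contain the query term."""
    return [doc for doc in DOCS if query in doc]


def run_queries(queries):
    """Run all queries concurrently and merge results, dropping duplicates."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(search, queries))  # map preserves query order
    merged, seen = [], set()
    for docs in results:
        for doc in docs:
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged


merged = run_queries(["harrison", "ankush", "worked"])
print(merged)
```

Deduplicating while preserving first-seen order keeps the merged list stable across runs, which matters when the results feed a later ranking or prompting step.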

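- Programming Paradigms in PHP

代码驿站520

miscellaneous, golang, programming language, backend

Programming paradigms in PHP. Introduction: PHP (PHP: Hypertext Preprocessor) is a widely used open-source scripting language that is especially well suited to web development. Although it was originally designed for generating dynamic web pages, PHP has gradually evolved into a powerful programming language, widely used for server-side programming, command-line scripts, and desktop application development. Today PHP is applied in website development, data analysis, content management systems, and other areas. This article explores PHP's programming paradigms in depth, including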

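- Meteorological Data Analysis with Python: Wind Speed Forecast Correction, Intelligent Correction of Typhoon Forecast Data, Machine Learning Prediction of Wind Farm Power, Shallow Water Models, ENSO Prediction, and More

小艳加油

atmospheric science, python, artificial intelligence, meteorology, machine learning

Contents: Topic 1, Python and scientific computing basics; Topic 2, Machine learning and deep learning fundamentals, theory and practice; Topic 3, Machine learning applications in meteorology; Topic 4, Deep learning applications in meteorology; More applications. Python is a powerful, free, open-source, object-oriented programming language that performs well in data processing, scientific computing, mathematical modeling, data mining, and data visualization. These strengths have led to its wide use in research and engineering projects across the earth sciences, including meteorology, oceanography, geography, climate, hydrology, and ecology. It is foreseeable that in the future Py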

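- Exploring the Titanic Survival Classification Dataset: A Perfect Starting Point for Machine Learning and Data Analysis

岑童嵘

Exploring the Titanic survival classification dataset: a perfect starting point for machine learning and data analysis. [Download] Titanic survival classification dataset. This repository provides a classic machine learning dataset, the Titanic survival classification dataset. It contains two CSV files: a training set and a test set. The dataset is mainly used to train and evaluate machine learning models that predict whether Titanic passengers survived. Project URL: https://gitcode.com/open-source-toolkit/35561 Project overview: The Titanic survival classification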

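- [Python] Tkinter Sales Data Analysis for an Electrical Appliance Sales Company (Source Code) [One of a Kind]

不争不抢不显不露

python, data analysis, programming language

I. Design requirements: This project creates a data analysis application that uses Tkinter and Matplotlib to build a graphical user interface (GUI) for reading and analyzing the sales data of 美迪电器销售有限公司. Through the interface, users can select a month to view its details, generate sales charts, and compute monthly and annual sales totals. II. Design approach 2. Module imports: First the required modules are imported, including Tkinter (for creating and managing the GUI), ttk (Tkinter's themed widgets), messagebox (for pop-up message boxes), panda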

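- Data Types in the MDX Language

BinaryBardC

miscellaneous, golang, programming language, backend

Data types in MDX explained. Introduction: MDX (Multidimensional Expressions) is a query language for querying and manipulating multidimensional datasets, widely used in data analysis and business intelligence. MDX is designed to help users efficiently extract and analyze data from multidimensional databases (such as Microsoft SQL Server Analysis Services). As data volumes keep growing and data structures become more complex, MDX offers a powerful way to process and analyze multidimensional data. In MDX, data types are the foundation for understanding and using the language,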

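- Software Engineering in R

BinaryBardC

miscellaneous, golang, programming language, backend

Software engineering in R. 1. Introduction: With the rapid development of data science, R, a programming language for statistical computing and graphics, is increasingly used in data analysis, visualization, and machine learning. Although R has distinctive strengths in data processing, applying it to large projects and commercial applications requires following software engineering principles. This article discusses software engineering practice in R, mainly covering the software development life cycle, coding standards, version control, testing, and documentation. 2. Software development life cycle: The software development life cycle (SDLC) is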

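- StarRocks Awards 2024: Contributors of the Year

open source

Over the past year, StarRocks made significant progress in key areas such as Lakehouse and AI. Its product capabilities have greatly simplified data analysis and made it more efficient, bringing the vision of "One Data, All Analytics" closer within reach. The road to that goal is long and full of challenges, but we are not alone, because a group of community partners is working alongside us. Every contributor's code commit and every act of advocacy moves the StarRocks community forward. To express our deep gratitude to these contributors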

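- StarRocks on AWS Graviton3: A Price-Performance Gain of More Than 50%

big data, database, data lake, cloud computing, cloud services

In the data era, enterprises hold more data assets than ever before, but extracting value from massive data remains a challenge. Data analytics can uncover the insights and patterns hidden in data along different dimensions and provide a basis for strategic decisions. It plays a key role in marketing, risk control, product optimization, and other areas, helping enterprises improve operational efficiency, streamline business processes, discover new opportunities, and strengthen competitiveness. Analyzing massive data at low cost and high efficiency, and releasing its value promptly and accurately, has become a powerful tool for gaining a competitive edge. StarRocks on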

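- nginx Proxy Configuration for Cross-Origin Requests from the Web Front End

刘正强

nginx, cms, Web

For the nginx proxy configuration, refer to the server section: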

server {

listen 80;

server_name localhost;

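- Spring Study Notes

caoyong

spring

I. Overview

a>. Core technologies: IoC and AOP

b>. Why development should target interfaces rather than implementations

Interfaces reduce the coupling between a component and the rest of the system; when the component no longer meets the system's needs, it can easily be swapped out without a major impact on the whole system.

c>. The difficulty of interface-oriented programming lies in how to initialize the interface (use the factory design pattern)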

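- Eclipse Reports the Workspace Is Unavailable When Opening It

0624chenhong

eclipse

When working on a project, you will inevitably use code from the whole team, or a workspace created by a former colleague.

1. After switching user accounts on the computer, Eclipse reports on startup that its directory is locked and cannot be accessed. Following the prompt, locate the directory G:\eclipse\configuration\org.eclipse.osgi\.manager, where the file .fileTableLock is reported as locked.

Solution: delete the .fileTableLock file and re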

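- Is Object-Oriented Style Really Necessary in Javascript?

一炮送你回车库

JavaScript

Writing pages in an object-oriented Javascript style is popular right now, and pure-javascript MVC frameworks have appeared, such as ember.

That is an MVC framework at the javascript layer, mind you, not a j2ee MVC framework.

My point is that javascript was never an object-oriented language to begin with, so object-oriented programs written in it are inherently somewhat awkward. When people talk about object orientation in js, the first thing many mention is reusability. So let me ask: how much of the js you write is actually reusable, using fu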

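- Iteration Methods of the js Array Object

换个号韩国红果果

array

1. forEach: this method takes a function as its argument and applies that function to every element of the array.

A return statement inside the function has no effect on the iteration.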

function square(num) {

print(num, num * num);

}

var nums = [1,2,3,4,5,6,7,8,9,10];

nums.forEach(square);

2. every: this method takes a function that returns a boolean

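- Understanding Hibernate's Caching Mechanism

归来朝歌

session, first-level cache, object, persistence

With the session-level (first-level) cache in hibernate, the following situation can arise:

Problem: I need to new an object and assign values to several of its fields, leaving some properties unassigned, and then call

session.save(). After the transaction is committed, the following happens:

1: Columns that have default values in the database end up empty

2: Since this is a persistent object, why can't the object pick up the default values at the end?

After debugging, the solution is as follows:

For problem 1, if you have set, in the database,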

- A Collection of WebService Invocation Errors

darkranger

webservice

java.lang.NoClassDefFoundError: org/apache/commons/discovery/tools/DiscoverSingleton

An error occurred when calling the interface.

A simple WebService

import org.apache.axis.client.Call;import org.apache.axis.client.Service;

First of all, what is indispensable

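- Handling Garbled Chinese Text in JSP and Servlets

aijuans

Java Web

Handling garbled Chinese text in JSP and Servlets

A few days ago I studied some garbled-Chinese problems in JSP and Servlets and wrote them up as a blog post; today I am updating it. It should now cover the garbling problems met in everyday work. The summary below is offered in the hope that it helps those who need it. I am still a beginner myself, so please bear with any shortcomings.

I. Garbled text on form submission:

Forms often carry Chinese text, so garbled Chinese on submission is hard to avoid. A form can be submitted in two ways: get and post. So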

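- Six Classic Interview Questions

atongyeye

work, interviews

Preface: I am not good at communicating, so I often hit a wall in interviews. I have read too many interview guides online, and most of them are not very reliable. So I had to summarize things myself and try to write my own answers based on my recent work, to have them ready when needed.

Here are the six most typical questions HR asks to learn about a candidate:

1. A brief self-introduction

This question mainly serves two purposes: to learn the candidate's background, and to gauge the candidate's ability to organize that background into appropriate language.

My answer: (written for a technical interview; for an HR interview, you could, based on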

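- contentResolver.query() Parameters Explained

百合不是茶

android, query() explained

Saved from a CSDN blog; the explanation is fairly detailed and worth a read for beginners. 1. Getting contact names

A simple example: this function retrieves the ID and NAME of every contact on the device.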


public void fetchAllContacts() {

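- How to Resolve ora-00054: resource busy and acquire with nowait specified

bijian1013

oracle, database, kill, nowait

When a database user inserts, updates, or deletes rows in a table, or adds a primary key or an index to a table, the error ora-00054: resource busy and acquire with nowait specified often appears. The main cause is that a transaction is still executing (or the resource is locked by a transaction), so the operation cannot succeed.

1. The following statement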

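- Garbled Text in Web Development

征客丶

spring, Web

In everything below, the front end uses the utf-8 character encoding

I. Receiving on the back end

1.1. Garbled get requests

In a get request, the request parameters are carried in the URL of the request line;

Fix for the garbling:

a. Configure the encoding in the web server: in tomcat, add URIEncoding="UTF-8" to the Connector;

1.2. Garbled post requests

In a post request, the request parameters come in two parts,

1.2.1. url?parameters,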

- [Spark 16]: Spark SQL Part 2, Data Sources and Several Ways to Register Tables

bit1129

spark

Spark SQL data sources and table schemas

case class

apply schema

parquet

json

JSON data source: preparing the source data

{"name":"Jack", "age": 12, "addr":{"city":"beijing&

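- Learning the JVM: Tuning Summary for -Xms -Xmx -Xmn -Xss

BlueSkator

-Xss, -Xmn, -Xms, -Xmx

Heap size settings: The maximum heap size in the JVM is limited by three things: the data model of the operating system (32-bit or 64-bit), the system's available virtual memory, and the system's available physical memory. On 32-bit systems the limit is generally 1.5G to 2G; 64-bit operating systems impose no such limit on memory. In my test on Windows Server 2003 with 3.5G of physical memory and JDK 5.0, the maximum that could be set was 1478m. Typical settings: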

java -Xmx355

- jqGrid Parameters Explained (Repost)

BreakingBad

jqGrid

jqGrid parameters explained. Categories: source code sharing; personal notes (not for reference); solving development problems. 2012-05-09 20:29

Tags: jquery, server, parameters, function, ajax, string

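- Reading 《研磨设计模式》: Code Notes on the Proxy Pattern

bylijinnan

java, design patterns

Note: this post exists only for my own reference and understanding; for detailed analysis and the source code, please visit the original author's blog at http://chjavach.iteye.com/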

import java.lang.reflect.InvocationHandler;

import java.lang.reflect.Method;

import java.lang.reflect.Proxy;

/*

* 下面

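- Some Problems Encountered When Upgrading an App to iOS8

chenhbc

ios8, upgrade, iOS8

1. A very strange problem: the login screen contains a check that jumps to the settings screen if a certain value does not exist. On systems before ios8 the jump works normally. On iOS8 the code has already executed into the next screen, but the UI does not actually switch; yet if that value has been set, the jump works fine. I struggled with this for more than two days. Previously I had written the check in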

-(void)viewWillAppear:(BOOL)animated

and the eventual fix was to move the check into

-(void

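- How Do Workflows Relate to Self-Organization?

comsci

design patterns, work

In current workflow systems, the nodes and the connections between them are drawn in advance according to management needs. This fixed model runs into many limitations in practice: in particular, the dependencies between nodes are fixed, and a node's processing does not take the running state of the overall process into account, so the details are disconnected from the whole. We therefore propose a new idea: could a process be generated automatically through the self-organizing behavior of its nodes? And what practical significance would such a process have?

Here is a paper whose abstract reads: "For services in a grid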

- New in Oracle 11.2: The INSERT Hint IGNORE_ROW_ON_DUPKEY_INDEX

daizj

oracle

The insert hint IGNORE_ROW_ON_DUPKEY_INDEX

Reposted from: http://space.itpub.net/18922393/viewspace-752123

In insert into tablea ...select * from tableb, a unique constraint violation causes the entire insert to fail. With the IGNORE_ROW_ON_DUPKEY_INDEX hint, the unique

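- Binary Trees: The Heap

dieslrae

binary tree

The heap discussed here is really a complete binary tree in which every node is no smaller than its children; do not confuse it with the jvm heap. Because it is a complete binary tree, it can be built on an array. The rules for building the tree on an array are simple:

Index of a node's parent: (current index - 1) / 2

Index of a node's left child: current index * 2 + 1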
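
The index rules above can be sketched in a few lines. The parent and left-child formulas come from the text; the right-child formula (`2 * i + 2`) is not in the truncated excerpt and is added here as the standard counterpart for a 0-indexed array. The division is integer division, and the helper names are hypothetical.

```python
def parent(i):
    """Index of the parent of node i (not meaningful for the root, i == 0)."""
    return (i - 1) // 2


def left(i):
    """Index of the left child of node i."""
    return 2 * i + 1


def right(i):
    """Index of the right child of node i (standard rule, not from the excerpt)."""
    return 2 * i + 2


def is_max_heap(a):
    """True if every node is no smaller than its children."""
    return all(a[parent(i)] >= a[i] for i in range(1, len(a)))


heap = [9, 7, 8, 3, 5]
print(is_max_heap(heap))
```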


- Learning C, Part 8: Structs

dcj3sjt126com

c

Why structs are needed: see the code

# include <stdio.h>

struct Student // define a student type with age, score, and sex; variables of this type can then be declared

{

int age;

float score;

char sex;

};

int main(void)

{

struct Student st = {80, 66.6,

- Installing golang on centos

dcj3sjt126com

centos

# download the binary package from a mirror in China

wget -c http://www.golangtc.com/static/go/go1.4.1.linux-amd64.tar.gz

tar -C /usr/local -xzf go1.4.1.linux-amd64.tar.gz

# add golang's bin directory to the global environment variables

cat >>/etc/profile<

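- 10. Performance Tuning: Monitoring MySQL Slow Queries

frank1234

performance tuning, MySQL, slow query

1. Configuring slow query logging

show variables where variable_name like 'slow%' ; -- show the default log path

Query result (may differ between machines):

slow_query_log_file=/var/lib/mysql/centos-slow.log

Edit the mysqld configuration file: /usr /my.cnf[usually at /etc/my.cnf; on this machine at /user/my.cn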

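- Getting the Subclass Name from a Parent Class in Java

happyqing

java, this, parent class, subclass, class name

In an inheritance hierarchy, whether in the parent class or the subclass, this always refers to the instance of the class that was ultimately created with new, so inside the parent class you can use this to obtain information about the subclass!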

package com.urthinker.module.test;

import org.junit.Test;

abstract class BaseDao<T> {

public void

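- @ControllerAdvice, a New Annotation in Spring 3.2

jinnianshilongnian

@Controller

@ControllerAdvice is a new annotation provided by spring 3.2; as the name suggests, it roughly means controller enhancement. Let's first look at how @ControllerAdvice is implemented: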

@Target(ElementType.TYPE)

@Retention(RetentionPolicy.RUNTIME)

@Documented

@Component

public @interface Co

- Configuring Multiple Data Sources in Java spring mvc

liuxihope

spring

Reposted from: http://www.itpub.net/thread-1906608-1-1.html

1. First, configure two databases

<bean id="dataSourceA" class="org.apache.commons.dbcp.BasicDataSource" destroy-method="close&quo

- Chapter 12: Ajax (Part 2)

onestopweb

Ajax

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- BW / Universe Mappings

blueoxygen

BO

BW Element

OLAP Universe Element

Cube Dimension

Class

Characteristic

A class with dimension and detail objects (Detail objects for key and description)

Hi

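- 11 Mistakes Experienced Java Developers Should Watch Out For

tomcat_oracle

java, multithreading, work, unit testing

#1. Not externalizing configuration properties in a properties file or XML file. For example, not making the number of threads used by a batch job configurable in a properties file. Your batch program may run smoothly in both the DEV and UAT (user acceptance testing) environments, but once it is deployed to PROD and processes larger datasets as a multithreaded program, it throws an IOException; the cause may be a different JDBC driver version, or the issue discussed in #2. If the thread count can be configured in a properties file, then making it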

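- The Pros and Cons of Promoting a Domestic Operating System

yananay

windows, linux, domestic operating system

A trend has recently taken hold: getting rid of "foreign goods". It started with applications, moved on to foundational systems and databases, and has now reached the operating system. The reason is the PRISM program, which finally made us aware of the dangers of foreign products and take information security seriously. The operating system is the soul of the computer. Since it is the soul, for the sake of information security we should naturally use and promote domestic products. But is blindly promoting them necessarily right?

Let's start with information security. People have in fact been discussing it for a long time. Many years ago it was rumored that the equipment produced by a certain world-class network equipment manufacturer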