Python爬虫实战之爬取51job详情(1)

爬虫之爬取51同城详情并生成Excel文件的完整代码:

爬取的数据清洗地址https://blog.csdn.net/weixin_43746433/article/details/91346274

数据分析与可视化地址:https://blog.csdn.net/weixin_43746433/article/details/91349199

import urllib

import re, codecs

import time, random

import requests

from lxml import html

from urllib import parse

import xlwt

key = '大数据'

key = parse.quote(parse.quote(key))

headers = {'Host': 'search.51job.com',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'}

def get_links(i):

url = 'http://search.51job.com/list/000000,000000,0000,00,9,99,' + key + ',2,' + str(i) + '.html'

r = requests.get(url, headers, timeout=10)

s = requests.session()

s.keep_alive = False

r.encoding = 'gbk'

reg = re.compile(r'class="t1 ">.*? ', re.S)

reg1 = re.compile(r'class="t1 ">.*? (.*?).*?(.*?).*? (.*?)',re.S) # 匹配换行符

links = re.findall(reg, r.text)

return links

# 多页处理,下载到文件

def get_content(link):

r1 = requests.get(link, headers, timeout=10)

s = requests.session()

s.keep_alive = False

r1.encoding = 'gbk'

t1 = html.fromstring(r1.text)

try:

job = t1.xpath('//div[@class="tHeader tHjob"]//h1/text()')[0].strip()

company = t1.xpath('//p[@class="cname"]/a/text()')[0].strip()

print('工作:', job)

print('公司:', company)

area = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[0].strip()

print('地区', area)

workyear = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[1].strip()

print('工作经验', workyear)

education = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[2].strip()

print('学历:', education)

people = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[3].strip()

print('人数', people)

date = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[4].strip()

print('发布日期', date)

# date = t1.xpath('//div[@class="tBorderTop_box"]/div[@class="bmsg job_msg inbox"]/text()')

# print('职位信息', date)

describes = re.findall(re.compile('(.*?)div class="mt10"', re.S), r1.text)

describe = describes[0].strip().replace(''

, '').replace('', '').replace(''

, '').replace('', '').replace('', '').replace('\t', '').replace('<', '').replace('

', '').replace('\n', '').replace(' ','')

print('职位信息', describe)

describes1 = re.findall(re.compile('(.*?)', re.S), r1.text)

company0 = describes1[0].strip().replace(''

, '').replace('', '').replace(''

, '').replace('','').replace('', '').replace('\t', '').replace(' ','').replace('

', '').replace('

', '')

print('公司信息', company0)

companytypes = t1.xpath('//div[@class="com_tag"]/p/text()')[:2]

company1 = ''

for i in companytypes:

company1 = company1 + ' ' + i

print('公司信息', company1)

salary=t1.xpath('//div[@class="cn"]/h1/strong/text()')

salary = re.findall(re.compile(r'div class="cn">.*?(.*?)',re.S),r1.text)[0]

print('薪水',salary)

labels = t1.xpath('//div[@class="jtag"]/div[@class="t1"]/span/text()')

label = ''

for i in labels:

label = label + ' ' + i

print('待遇',label)

item=[]

item.append(str(company))

item.append(str(job))

item.append(str(education))

item.append(str(area))

item.append(str(salary))

item.append(str(label))

item.append(str(workyear))

item.append(str(people))

item.append(str(date))

item.append(str(describe))

item.append(str(company0))

item.append(str(company1))

print(item)

except:

pass

return item

def run():

workbook = xlwt.Workbook(encoding='utf-8')

sheet1 = workbook.add_sheet('message', cell_overwrite_ok=True)

row0 = ["公司", "岗位", "学历", "地区","薪水", "待遇","工作经验", "需求人数", "发布日期", "职位信息", "公司介绍", "公司信息及人数"]

for j in range(0, len(row0)):

sheet1.write(0, j, row0[j])

try:

i=0

for a in range(1,4): ##控制页数

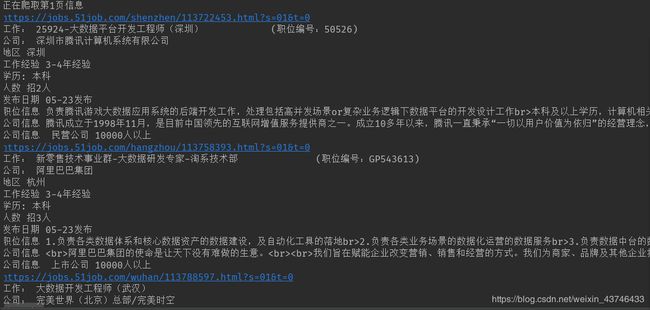

print('正在爬取第{}页信息'.format(a))

try:

# time.sleep(random.random()+random.randint(1,5))

links= get_links(a)

try:

for link in links:

time.sleep(random.random() + random.randint(0, 1))

#i=i+1

print(i)

print(link)

item=get_content(link)

sheet1.write(i + 1, 0, item[0])

sheet1.write(i + 1, 1, item[1])

sheet1.write(i + 1, 2, item[2])

sheet1.write(i + 1, 3, item[3])

sheet1.write(i + 1, 4, item[4])

sheet1.write(i + 1, 5, item[5])

sheet1.write(i + 1, 6, item[6])

sheet1.write(i + 1, 7, item[7])

sheet1.write(i + 1, 8, item[8])

sheet1.write(i + 1, 9, item[9])

sheet1.write(i + 1, 10, item[10])

sheet1.write(i + 1, 11, item[11])

i = i + 1

except:

continue

except IndexError:

pass

except IndexError:

pass

workbook.save('51job.xls')

run()

运行如下:

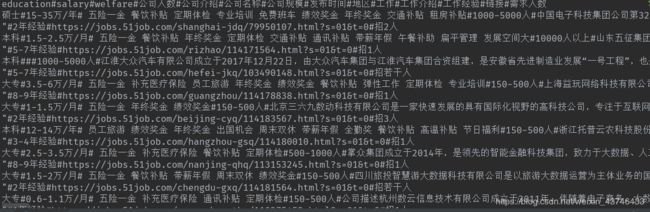

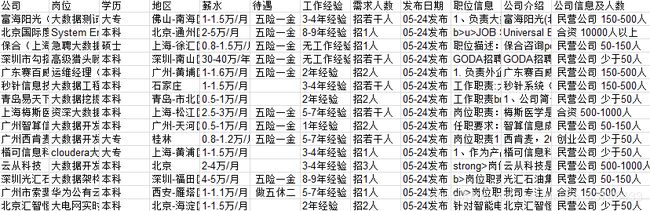

生成的Excel文件:

爬虫之爬取51同城详情生成Csv文件代码如下:

import re

import time, random

import requests

from lxml import html

from urllib import parse

import xlwt

import pandas as pd

key = '大数据'

key = parse.quote(parse.quote(key))

headers = {'Host': 'search.51job.com',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'}

def get_links(i):

url = 'http://search.51job.com/list/000000,000000,0000,00,9,99,' + key + ',2,' + str(i) + '.html'

r = requests.get(url, headers, timeout=10)

s = requests.session()

s.keep_alive = False

r.encoding = 'gbk'

reg = re.compile(r'class="t1 ">.*? ', re.S)

reg1 = re.compile(r'class="t1 ">.*? (.*?).*?(.*?).*? (.*?)',re.S) # 匹配换行符

links = re.findall(reg, r.text)

return links

# 多页处理,下载到文件

def get_content(link):

r1 = requests.get(link, headers, timeout=10)

s = requests.session()

s.keep_alive = False

r1.encoding = 'gbk'

t1 = html.fromstring(r1.text)

try:

job = t1.xpath('//div[@class="tHeader tHjob"]//h1/text()')[0].strip()

companyname = t1.xpath('//p[@class="cname"]/a/text()')[0].strip()

print('工作:', job)

print('公司:', companyname)

area = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[0].strip()

print('地区', area)

workyear = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[1].strip()

print('工作经验', workyear)

education = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[2].strip()

print('学历:', education)

require_people = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[3].strip()

print('人数', require_people)

date = t1.xpath('//div[@class="tHeader tHjob"]//p[@class="msg ltype"]/text()')[4].strip()

print('发布日期', date)

describes = re.findall(re.compile('(.*?)div class="mt10"', re.S), r1.text)

job_describe = describes[0].strip().replace(''

, '').replace('', '').replace(''

, '').replace('', '').replace('', '').replace('\t', '').replace('<', '').replace('

', '').replace('\n', '').replace(' ','')

print('职位信息', job_describe)

describes1 = re.findall(re.compile('(.*?)', re.S), r1.text)

company_describe= describes1[0].strip().replace(''

, '').replace('', '').replace(''

, '').replace('','').replace('', '').replace('\t', '').replace(' ','').replace('

', '').replace('

', '')

print('公司信息', company_describe)

companytypes = t1.xpath('//div[@class="com_tag"]/p/text()')[0]

print('公司类型', companytypes)

company_people = t1.xpath('//div[@class="com_tag"]/p/text()')[1]

print('公司人数',company_people)

salary=t1.xpath('//div[@class="cn"]/h1/strong/text()')

salary = re.findall(re.compile(r'div class="cn">.*?(.*?)',re.S),r1.text)[0]

print('薪水',salary)

labels = t1.xpath('//div[@class="jtag"]/div[@class="t1"]/span/text()')

label = ''

for i in labels:

label = label + ' ' + i

print('待遇',label)

datalist = [

str(area),

str(companyname),

str(job),

str(education),

str(salary),

str(label),

str(workyear),

str(require_people),

str(date),

str(job_describe),

str(company_describe),

str(companytypes),

str(company_people),

str(link)]

series = pd.Series(datalist, index=[

'地区',

'公司名称',

'工作',

'education',

'salary',

'welfare',

'工作经验',

'需求人数',

'发布时间',

'工作介绍',

'公司介绍',

'公司规模',

'公司人数',

'链接',

])

return (1,series)

except IndexError:

print('error,未定位到有效信息导致索引越界')

series = None

return (-1, series)

if __name__ == '__main__':

for a in range(1,2):

datasets = pd.DataFrame()

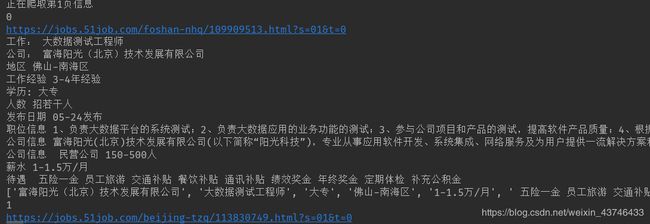

print('正在爬取第{}页信息'.format(a))

# time.sleep(random.random()+random.randint(1,5))

links= get_links(a)

print(links)

for link in links:

#time.sleep(random.random() + random.randint(0, 1))

print(link)

state,series=get_content(link)

if state==1:

datasets = datasets.append(series, ignore_index=True)

print('datasets---------',datasets)

print('第{}页信息爬取完成\n'.format(a))

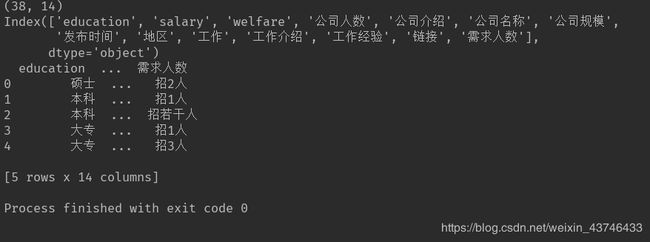

print(datasets)

datasets.to_csv('51job_test2.csv', sep='#', index=False, index_label=False,

encoding='utf-8', mode='a+')

爬取的数据清洗操作:https://blog.csdn.net/weixin_43746433/article/details/91346274