How to Overlay Subtitles and a Logo on Video: Technical Implementation, Part 1

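The subtitle and logo overlay method described in this article "paints" the overlay onto the picture while the video is being rendered: an API draws the OSD and the logo into a video memory buffer, and the buffer is then presented on screen. Several APIs are commonly used to render video: GDI, DirectDraw, D3D, OpenGL, and SDL. SDL wraps the other APIs across different platforms, so it covers the most ground. The example here targets Windows, where images are usually drawn with DirectDraw or D3D (GDI is not recommended because it is slow). The example is built on DirectDraw, whose API is fairly simple to use and performs well.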

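Drawing subtitles and a logo (a bitmap) with DirectDraw is not complicated. A DirectDraw surface can return a device context (DC) through its GetDC method, and you can draw anything onto that DC, just as you would draw on a Windows window with GDI. With DirectDraw, overlaying subtitles and overlaying a logo differ very little, because to the graphics card both are just a layer. You can output a string at some coordinate in video memory, or draw a bitmap there; only the API calls differ. Since the principle is the same for both, there is no need to describe the two separately: the subtitle overlay described below works in basically the same way as the bitmap overlay.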
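
To make the "both are just a layer" idea concrete, here is a minimal, portable C++ sketch of a color-keyed bitmap overlay. It is not the demo's code: the demo draws through GDI on the surface's DC, while this sketch works on a plain in-memory pixel array. The names Image and BlitWithColorKey are hypothetical, and treating white as the transparent color simply mirrors the RGB(255,255,255) value that the demo's RenderFrame passes to TransparentBlt.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A tiny 32-bit RGB image; pixels are stored row by row as 0x00RRGGBB.
// (Hypothetical type used only for this illustration.)
struct Image {
    int width;
    int height;
    std::vector<uint32_t> pixels;

    Image(int w, int h, uint32_t fill)
        : width(w), height(h), pixels(static_cast<size_t>(w) * h, fill) {
        assert(w > 0 && h > 0);
    }
    uint32_t& at(int x, int y) { return pixels[static_cast<size_t>(y) * width + x]; }
    uint32_t at(int x, int y) const { return pixels[static_cast<size_t>(y) * width + x]; }
};

// Copy `logo` onto `frame` with its top-left corner at (left, top).
// Pixels equal to `colorKey` are skipped, so the video shows through them.
// Parts of the logo that fall outside the frame are clipped.
void BlitWithColorKey(Image& frame, const Image& logo, int left, int top, uint32_t colorKey) {
    for (int y = 0; y < logo.height; ++y) {
        const int fy = top + y;
        if (fy < 0 || fy >= frame.height) continue;
        for (int x = 0; x < logo.width; ++x) {
            const int fx = left + x;
            if (fx < 0 || fx >= frame.width) continue;
            const uint32_t p = logo.at(x, y);
            if (p != colorKey) frame.at(fx, fy) = p;
        }
    }
}
```

Text overlay follows the same pattern: only the source of the pixels changes, from a bitmap to rasterized glyphs.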

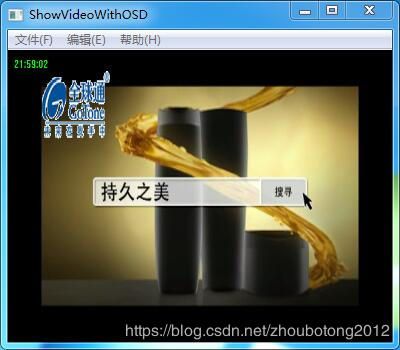

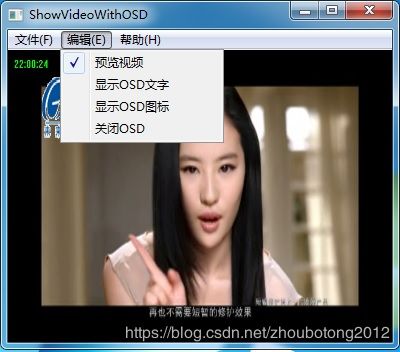

To explain the approach more clearly, I wrote a demo that overlays both text and a bitmap. The screenshots above show the demo's interface.

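The demo can play a video, show OSD text, show an OSD icon, and turn the OSD off. To open a video, choose a video file from the "File" menu and click "Start Playback"; the video is then rendered into the window in the interface. The "Edit" menu turns each OSD on or off.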

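The demo uses the FFmpeg libraries to demux and decode the video, and DirectDraw to display the video, the subtitles, and the icon (logo).

The rest of this article walks through the code.

Because FFmpeg is used, its headers and libraries have to be referenced in the precompiled header: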

#ifdef __cplusplus

extern "C" {

#endif

#ifdef HAVE_AV_CONFIG_H

#undef HAVE_AV_CONFIG_H

#endif

#include "./include/libavcodec/avcodec.h"

#include "./include/libavutil/mathematics.h"

#include "./include/libavutil/avutil.h"

#include "./include/libswscale/swscale.h"

#include "./include/libavutil/fifo.h"

#include "./include/libavformat/avformat.h"

#include "./include/libavutil/opt.h"

#include "./include/libavutil/error.h"

#include "./include/libswresample/swresample.h"

#include "./include/libavutil/audio_fifo.h"

#include "./include/libavutil/time.h"

#ifdef __cplusplus

}

#endif

#pragma comment( lib, "avcodec.lib")

#pragma comment( lib, "avutil.lib")

#pragma comment( lib, "avformat.lib")

#pragma comment(lib, "swresample.lib")

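#pragma comment(lib, "swscale.lib")

The FFmpeg libraries also have to be initialized. (Both calls belong to older FFmpeg releases; they were deprecated in FFmpeg 4.0 and are not needed in current versions.)

avcodec_register_all();

av_register_all();

The program uses several important classes: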

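FileStreamReadTask: wraps FFmpeg's demuxing of the audio and video streams in a media file and the decoding of the video, and provides a callback that passes each decoded frame to the caller.

CDXDrawPainter (base class CVideoPlayer): renders the video image and overlays subtitles and icons on it. It supports multiple OSD regions, and each OSD layer can be turned on or off at run time.

CMainFrame: the main window. It manages the other objects and handles the Windows messages of the interface; the menu and button handlers all live in this class.

CVideoDisplayWnd: the video display window. For now its OnPaint handler only fills the window background with a black brush.

CMainFrame defines the following objects:

FileStreamReadTask m_FileStreamTask; // decodes the video

CDXDrawPainter m_Painter; // draws with DirectDraw

CVideoDisplayWnd m_wndView; // video window class

After a file is chosen from the menu, clicking "Start Playback" calls the CMainFrame method OnStartStream: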

LRESULT CMainFrame:: OnStartStream(WPARAM wParam, LPARAM lParam)

{

if(strlen(m_szFilePath) == 0)

{

return 1;

}

int nRet = m_FileStreamTask.OpenMediaFile(m_szFilePath);

if(nRet != 0)

{

MessageBox(_T("打开文件失败"), _T("提示"), MB_OK|MB_ICONERROR); // shows "Failed to open the file" under the caption "Notice"

return -1;

}

long cx, cy;

cx = cy = 0;

m_FileStreamTask.GetVideoSize(cx, cy); // get the video resolution

if(cx > 0 && cy > 0)

{

m_Painter.SetVideoWindow(m_wndView.GetSafeHwnd()); // set the video preview window

m_Painter.SetRenderSurfaceType(SURFACE_TYPE_YV12);

m_Painter.SetSourceSize(CSize(cx, cy));

m_Painter.Open(); // open the renderer

}

m_FileStreamTask.SetVideoCaptureCB(VideoCaptureCallback);

m_FileStreamTask.StartReadFile();

StartTime = timeGetTime();

m_frmCount = 0;

m_nFPS = 0;

m_bCapture = TRUE;

return 0;

}

"Stop Playback" triggers another method, OnStopStream:

LRESULT CMainFrame:: OnStopStream(WPARAM wParam, LPARAM lParam)

{

m_FileStreamTask.StopReadFile();

//m_Painter.Close();

m_Painter.Stop();

m_wndView.Invalidate(); // repaint the video window

//TRACE("Playback time: %d s\n", (timeGetTime() - StartTime)/1000);

m_bCapture = FALSE;

StartTime = 0;

return 0;

}

In OnStartStream we registered the callback that receives the decoded images: m_FileStreamTask.SetVideoCaptureCB(VideoCaptureCallback);

VideoCaptureCallback is implemented as follows:

// video frame callback

LRESULT CALLBACK VideoCaptureCallback(AVStream * input_st, enum PixelFormat pix_fmt, AVFrame *pframe, INT64 lTimeStamp)

{

if(gpMainFrame->IsPreview())

{

gpMainFrame->m_Painter.PlayAVFrame(input_st, pframe);

}

return 0;

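}

m_Painter is an object of class CDXDrawPainter, which renders the video and overlays the subtitles and icons. The callback calls its PlayAVFrame method, which converts the incoming image format and then displays the video and the OSD.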
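
The callback wiring can be sketched in isolation. The following is a simplified, portable illustration under stated assumptions, not the demo's implementation: FrameSource, DecodedFrame, Renderer, and OnFrame are hypothetical stand-ins for FileStreamReadTask, AVFrame, CDXDrawPainter, and VideoCaptureCallback, and a user pointer replaces the global gpMainFrame.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a decoded frame (the demo passes FFmpeg's AVFrame).
struct DecodedFrame {
    int width;
    int height;
    int64_t timestamp;
};

// Callback signature: the reader hands each decoded frame to the caller.
typedef void (*FrameCallback)(const DecodedFrame& frame, void* user);

// Hypothetical reader that mimics the role of FileStreamReadTask.
class FrameSource {
public:
    FrameSource() : m_cb(nullptr), m_user(nullptr) {}

    void SetFrameCallback(FrameCallback cb, void* user) {
        m_cb = cb;
        m_user = user;
    }

    // Pretend to decode `count` frames and deliver each one to the callback.
    void Run(int count) {
        assert(count >= 0);
        for (int i = 0; i < count; ++i) {
            DecodedFrame f = {640, 480, static_cast<int64_t>(i) * 40};
            if (m_cb != nullptr) m_cb(f, m_user);
        }
    }

private:
    FrameCallback m_cb;
    void* m_user;
};

// Hypothetical renderer that only counts the frames it would have drawn.
struct Renderer {
    bool preview;
    int framesShown;
};

// Plays the role of VideoCaptureCallback: render only while preview is on.
void OnFrame(const DecodedFrame& /*frame*/, void* user) {
    Renderer* r = static_cast<Renderer*>(user);
    if (r->preview) ++r->framesShown;  // same kind of gate as IsPreview() in the demo
}
```

Passing a user pointer instead of reading a global is a design choice of this sketch; it lets several renderers share one callback function.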

Next, look at the declaration of CDXDrawPainter, which inherits from CVideoPlayer.

class CDXDrawPainter : public CVideoPlayer

{

private:

//VIDEOINFOHEADER * mVideoInfo;

HWND mVideoWindow;

HDC mWindowDC;

RECT mTargetRect;

RECT mSourceRect;

BOOL mNeedStretch;

struct SwsContext *img_convert_ctx;

BYTE * m_pYUVDataCache; // temporary YV12 buffer with the Y, V, and U planes stored one after another; images in other formats are converted and stored here

public:

CDXDrawPainter();

~CDXDrawPainter();

void SetVideoWindow(HWND inWindow);

//BOOL SetInputFormat(BYTE * inFormat, long inLength);

BOOL Open(void);

BOOL Stop(void);

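BOOL Play(BYTE * inData, DWORD inLength, ULONG inSampleTime, int inputFormat); // accepts YUY2, YV12, RGB24, and RGB32 images; inData points to the first byte of the image

BOOL PlayAVFrame(AVStream * st, AVFrame * picture); // displays the image in an FFmpeg AVFrame; the frame may be RGB or YUV, and every format is converted to YV12 inside the function so that all frames are handled the same way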

void SetSourceSize(CSize size);

BOOL GetSourceSize(CSize & size);

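};

The Play method takes a video image together with its timestamp, pixel format, and related information. PlayAVFrame does the same job with different parameters: instead of the address of an image in contiguous memory, it takes a pointer to an FFmpeg AVFrame, which carries the addresses of the image planes and the properties of the decoded frame. Both functions render the video and draw the OSD, but PlayAVFrame performs some extra conversion of the image data (described later). To control how the OSD looks, its display properties (text, position, color, and so on) have to be set through several methods of the base class, CVideoPlayer.

Here are the important methods of CVideoPlayer: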

void SetRenderSurfaceType(RenderSurfaceType type) { m_SurfaceType = type; }

// Initialization

int Init( HWND hWnd , BOOL bUseYUV, int width, int height);

void Uninit();

void SetPlayRect(RECT r);

// Rendering

BOOL RenderFrame(BYTE* frame,int w,int h, int inputFmt);

// OSD interface

BOOL SetOsdText(int nIndex, CString strText, COLORREF TextColor, RECT & OsdRect);

BOOL SetOsdBitmap(int nIndex, HBITMAP hBitmap, RECT & OsdRect);

void DisableOsd(int nIndex);

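These methods work as follows:

1. SetRenderSurfaceType: sets the pixel format of the DirectDraw surface to create. YUY2 and YV12 are currently supported.

2. Init: creates and initializes the DirectDraw surfaces from the video window handle and the image size, and selects whether Overlay mode is used. Passing TRUE for bUseYUV selects Overlay mode, but Overlay mode is often unavailable and has many restrictions, so FALSE is recommended.

3. Uninit: destroys the DirectDraw surfaces.

4. SetPlayRect: sets the region of the window in which the video is shown.

5. RenderFrame: displays the image and overlays the OSD. Play and PlayAVFrame of the derived class CDXDrawPainter both call this function internally. inputFmt is the format of the incoming image: 0 = YV12, 1 = YUY2, 2 = RGB24, 3 = RGB32.

6. SetOsdText: sets the properties of an OSD text item: its index, text, color, and display rectangle.

7. SetOsdBitmap: sets the properties of an OSD bitmap: its index, bitmap handle, and display rectangle.

8. DisableOsd: turns an OSD off.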
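
The format codes and bit depths mentioned above can be captured in a few lines. This is only an illustrative sketch: the enum and function names are made up for this example and do not appear in the demo, and it assumes frames are tightly packed with no row padding.

```cpp
#include <cassert>
#include <cstddef>

// Named constants for the inputFmt codes listed above (names are illustrative).
enum InputFormat {
    kFmtYV12  = 0,
    kFmtYUY2  = 1,
    kFmtRGB24 = 2,
    kFmtRGB32 = 3
};

// Average bits per pixel of each format.
int BitsPerPixel(InputFormat fmt) {
    switch (fmt) {
    case kFmtYV12:  return 12;  // full-size Y plane plus two quarter-size chroma planes
    case kFmtYUY2:  return 16;  // Y0 U Y1 V for every two pixels
    case kFmtRGB24: return 24;
    case kFmtRGB32: return 32;
    }
    return 0;  // unknown format code
}

// Bytes needed for one tightly packed frame (no row padding).
size_t FrameBytes(InputFormat fmt, int width, int height) {
    assert(width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);  // the YUV formats here need even dimensions
    return static_cast<size_t>(width) * height * BitsPerPixel(fmt) / 8;
}
```

For a 640x480 frame this gives 460800 bytes for YV12 and 614400 bytes for YUY2, which matches the 12-bit and 16-bit counts that Init stores in m_nBitCount.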

Each OSD is described by a structure, OSDPARAM, defined as follows:

typedef struct _osdparam

{

BOOL bEnable;

int nIndex;

char szText[128];

LOGFONT mLogFont;

COLORREF clrColor;

RECT rcPosition;

HBITMAP hWatermark;

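}OSDPARAM;

We define an array of OSDs: OSDPARAM m_OsdInfo[MAX_OSD_NUM];

All OSD information is stored in this array. Each OSD is one element, nIndex is its index into the array, and MAX_OSD_NUM sets the maximum number of OSDs that can be overlaid.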
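
As a rough illustration of how such a slot array can be managed, here is a portable sketch. OsdSlot, OsdTable, Rect, and kMaxOsd are hypothetical stand-ins (the article does not show the bodies of SetOsdText, SetOsdBitmap, or DisableOsd, nor the value of MAX_OSD_NUM), and a const void* takes the place of HBITMAP.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

const int kMaxOsd = 4;  // stands in for MAX_OSD_NUM; the real value is not shown in the article

struct Rect { int left, top, right, bottom; };

// Portable stand-in for OSDPARAM: one slot holds either text or a bitmap.
struct OsdSlot {
    bool        enabled;
    char        text[128];
    uint32_t    color;
    Rect        position;
    const void* bitmap;  // stands in for HBITMAP
};

class OsdTable {
public:
    OsdTable() : m_slots() {}  // value-initialization zeroes every slot

    bool SetText(int index, const char* text, uint32_t color, const Rect& rc) {
        if (!ValidIndex(index) || text == nullptr) return false;
        OsdSlot& s = m_slots[index];
        std::strncpy(s.text, text, sizeof(s.text) - 1);
        s.text[sizeof(s.text) - 1] = '\0';  // strncpy does not always terminate
        s.color = color;
        s.position = rc;
        s.bitmap = nullptr;                 // a text slot carries no bitmap
        s.enabled = true;
        return true;
    }

    bool SetBitmap(int index, const void* bitmap, const Rect& rc) {
        if (!ValidIndex(index) || bitmap == nullptr) return false;
        OsdSlot& s = m_slots[index];
        s.text[0] = '\0';                   // empty text selects the bitmap path
        s.bitmap = bitmap;
        s.position = rc;
        s.enabled = true;
        return true;
    }

    void Disable(int index) {
        if (ValidIndex(index)) m_slots[index].enabled = false;
    }

    int EnabledCount() const {
        int n = 0;
        for (int i = 0; i < kMaxOsd; ++i)
            if (m_slots[i].enabled) ++n;
        return n;
    }

    const OsdSlot& Slot(int index) const {
        assert(ValidIndex(index));
        return m_slots[index];
    }

private:
    static bool ValidIndex(int index) { return index >= 0 && index < kMaxOsd; }
    OsdSlot m_slots[kMaxOsd];
};
```

Clearing the text when a bitmap is assigned matters because RenderFrame tests the text first and only draws the bitmap when the text is empty. Disabling a slot keeps its contents, so it can be re-enabled later without being set up again.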

Now let's see how CVideoPlayer::Init creates the DirectDraw surfaces.

int CVideoPlayer::Init( HWND hWnd , BOOL bUseYUV, int width, int height )

{

HRESULT hr;

m_hWnd = hWnd;

m_bOverlay = bUseYUV;

// DDraw stuff begins here

if( FAILED( hr = DirectDrawCreateEx( NULL, (VOID**)&m_pDD,

IID_IDirectDraw7, NULL ) ) )

return FALSE;

// Set cooperative level

hr = m_pDD->SetCooperativeLevel( hWnd, DDSCL_NORMAL );

if( FAILED(hr) )

return FALSE;

// Create the primary surface

DDSURFACEDESC2 ddsd;

ZeroMemory( &ddsd, sizeof( ddsd ) );

ddsd.dwSize = sizeof( ddsd );

ddsd.dwFlags = DDSD_CAPS;

ddsd.ddsCaps.dwCaps = DDSCAPS_PRIMARYSURFACE;

// Create the primary surface.

if( FAILED( m_pDD->CreateSurface( &ddsd, &m_pddsFrontBuffer, NULL ) ) )

return FALSE;

if(m_bOverlay) // use Overlay

{

DDCAPS caps;

ZeroMemory(&caps, sizeof(DDCAPS));

caps.dwSize = sizeof(DDCAPS);

if (m_pDD->GetCaps(&caps, NULL)==DD_OK)

{

if (caps.dwCaps & DDCAPS_OVERLAY)

{

ASSERT(m_SurfaceType == SURFACE_TYPE_YUY2 || m_SurfaceType == SURFACE_TYPE_YV12);

ZeroMemory(&ddsd, sizeof(DDSURFACEDESC2));

ddsd.dwBackBufferCount = 0;

ddsd.dwSize = sizeof(DDSURFACEDESC2);

ddsd.dwFlags = DDSD_CAPS | DDSD_WIDTH | DDSD_HEIGHT | DDSD_PIXELFORMAT;

ddsd.ddsCaps.dwCaps = DDSCAPS_OVERLAY /*| DDSCAPS_VIDEOMEMORY*/;

ddsd.dwWidth = width;

ddsd.dwHeight = height;

DDPIXELFORMAT ddPixelFormat;

ZeroMemory(&ddPixelFormat, sizeof(DDPIXELFORMAT));

ddPixelFormat.dwSize = sizeof(DDPIXELFORMAT);

ddPixelFormat.dwFlags = DDPF_FOURCC;

if(m_SurfaceType == SURFACE_TYPE_YUY2)

{

ddPixelFormat.dwFourCC = MAKEFOURCC('Y','U','Y','2'); //YUY2

ddPixelFormat.dwYUVBitCount = 16;

m_nBitCount = 16;

}

else if(m_SurfaceType == SURFACE_TYPE_YV12)

{

ddPixelFormat.dwFourCC = MAKEFOURCC('Y','V','1','2'); //YV12

ddPixelFormat.dwYUVBitCount = 12;

m_nBitCount = 12;

}

else

{

return FALSE;

}

memcpy(&(ddsd.ddpfPixelFormat), &ddPixelFormat, sizeof(DDPIXELFORMAT));

// Create overlay surface

hr = m_pDD->CreateSurface(&ddsd, &m_pddsOverlay, NULL);

if(FAILED(hr))

{

TRACE("CreateSurface failed!! \n");

return FALSE;

}

hr=m_pDD->CreateClipper(0, &m_pddClipper, NULL);

hr=m_pddClipper->SetHWnd(0, m_hWnd);

hr=m_pddsFrontBuffer->SetClipper(m_pddClipper);

}

}

else

{

ASSERT(0);

return FALSE;

}

}

else //None Overlay

{

ZeroMemory( &ddsd, sizeof(ddsd) );

ddsd.dwSize = sizeof(ddsd);

ddsd.dwFlags = DDSD_CAPS | DDSD_HEIGHT | DDSD_WIDTH | DDSD_PIXELFORMAT;

ddsd.ddsCaps.dwCaps = DDSCAPS_OFFSCREENPLAIN /*| DDSCAPS_VIDEOMEMORY*/;

ddsd.dwWidth = width;

ddsd.dwHeight = height;

// DDPIXELFORMAT pft = {sizeof(DDPIXELFORMAT), DDPF_RGB, 0, 16, 0x0000001F, 0x000007E0, 0x0000F800, 0};

if(m_SurfaceType == SURFACE_TYPE_YUY2)

{

DDPIXELFORMAT pft = { sizeof(DDPIXELFORMAT), DDPF_FOURCC, MAKEFOURCC('Y', 'U', 'Y', '2'), 0, 0, 0, 0, 0};

memcpy(&ddsd.ddpfPixelFormat, &pft, sizeof(DDPIXELFORMAT));

m_nBitCount = 16;

}

else if(m_SurfaceType == SURFACE_TYPE_YV12)

{

DDPIXELFORMAT pft = { sizeof(DDPIXELFORMAT), DDPF_FOURCC, MAKEFOURCC('Y', 'V', '1', '2'), 0, 0, 0, 0, 0};

memcpy(&ddsd.ddpfPixelFormat, &pft, sizeof(DDPIXELFORMAT));

m_nBitCount = 12;

}

//else if(m_SurfaceType == SURFACE_TYPE_RGB24)

//{

// DDPIXELFORMAT pft = {sizeof(DDPIXELFORMAT), DDPF_RGB, 0, 24, 0x000000FF, 0x0000FF00, 0x00FF0000, 0};

// memcpy(&ddsd.ddpfPixelFormat, &pft, sizeof(DDPIXELFORMAT));

// m_nBitCount = 24;

//}

//else if(m_SurfaceType == SURFACE_TYPE_RGB32)

//{

// DDPIXELFORMAT pft = {sizeof(DDPIXELFORMAT), DDPF_RGB, 0, 32, 0x000000FF, 0x0000FF00, 0x00FF0000, 0};

// memcpy(&ddsd.ddpfPixelFormat, &pft, sizeof(DDPIXELFORMAT));

// m_nBitCount = 32;

//}

else

{

ASSERT(0);

return FALSE;

}

// Create surface

if(FAILED(m_pDD->CreateSurface(&ddsd, &m_pddsBack, NULL)))

{

TRACE("CreateSurface failed!!! \n");

return FALSE;

}

/*create clipper for non overlay mode*/

hr=m_pDD->CreateClipper(0, &m_pddClipper, NULL);

hr=m_pddClipper->SetHWnd(0, m_hWnd);

hr=m_pddsFrontBuffer->SetClipper(m_pddClipper);

}

////////////////////////////////////////////

// OSD surface

ZeroMemory(&ddsd, sizeof(ddsd));

ddsd.dwSize = sizeof(ddsd);

ddsd.dwFlags = DDSD_CAPS | DDSD_HEIGHT | DDSD_WIDTH ;

ddsd.ddsCaps.dwCaps = DDSCAPS_OFFSCREENPLAIN;

ddsd.dwWidth = width;

ddsd.dwHeight = height;

//HRESULT hr;

hr = m_pDD->CreateSurface(&ddsd, &m_pddsOSD, NULL);

if ( hr != DD_OK)

{

CString sError;

sError.Format("Create m_pOsdSurface failed:%d, %.8x\n", GetLastError(), hr);

OutputDebugString(sError);

return FALSE;

}

return TRUE;

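}

Init creates the primary surface, which acts as the front buffer, and an off-screen surface, which acts as the back buffer for the video; it also creates a second off-screen surface for the OSD. When RenderFrame is called, the video image is first copied into the back buffer, the back buffer is blitted to the OSD surface, where the text and bitmaps are drawn on top of it, and the result is sent to the front buffer with a Blt operation. YUY2 and YV12 images are copied straight into the back buffer (provided the image format matches the surface format); other formats are converted to YV12 first (with the surface type set to YV12).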
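
The surface formats in Init are selected by FourCC codes. As a small aside, this sketch shows how such a code is packed. MakeFourCC reproduces the arithmetic of the Windows MAKEFOURCC macro, while FourCCToString is a hypothetical helper added only for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Same packing as the Windows MAKEFOURCC macro: the first character goes
// into the lowest byte, the fourth into the highest.
constexpr uint32_t MakeFourCC(char a, char b, char c, char d) {
    return  static_cast<uint32_t>(static_cast<uint8_t>(a))
         | (static_cast<uint32_t>(static_cast<uint8_t>(b)) << 8)
         | (static_cast<uint32_t>(static_cast<uint8_t>(c)) << 16)
         | (static_cast<uint32_t>(static_cast<uint8_t>(d)) << 24);
}

static_assert(MakeFourCC('Y', 'V', '1', '2') == 0x32315659u, "YV12 packs to 0x32315659");
static_assert(MakeFourCC('Y', 'U', 'Y', '2') == 0x32595559u, "YUY2 packs to 0x32595559");

// Unpack a FourCC back into its four characters; `out` must hold 5 bytes.
void FourCCToString(uint32_t fourcc, char out[5]) {
    assert(out != nullptr);
    for (int i = 0; i < 4; ++i)
        out[i] = static_cast<char>((fourcc >> (8 * i)) & 0xFF);
    out[4] = '\0';
}
```

Printing the code back as text is handy when logging why a CreateSurface call was rejected for a particular pixel format.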

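One point deserves emphasis: CVideoPlayer expects a YUY2 or YV12 image as a single contiguous block of memory with the components packed together, whereas FFmpeg decodes into an AVFrame, typically in YUV420P format, in which Y, U, and V are stored as three separate planes. Before a frame is handed to CVideoPlayer, the three planes have to be copied into another block so that they are contiguous in memory. That is why PlayAVFrame has to convert the AVFrame data.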
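
The packing step described above can be sketched as a standalone function. This is a simplified illustration, not the article's PlayAVFrame: PackYV12 and CopyPlane are hypothetical names, the plane pointers and strides play the role of AVFrame::data and AVFrame::linesize, and even frame dimensions are assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy `rows` rows of `rowBytes` bytes each from a strided source plane
// into a tightly packed destination.
static void CopyPlane(uint8_t* dst, const uint8_t* src, int srcStride, int rowBytes, int rows) {
    for (int y = 0; y < rows; ++y) {
        std::memcpy(dst + static_cast<size_t>(y) * rowBytes,
                    src + static_cast<size_t>(y) * srcStride,
                    static_cast<size_t>(rowBytes));
    }
}

// Pack three separate YUV420P planes into one contiguous YV12 buffer.
// planes[0..2] are Y, U, V and strides[0..2] are the bytes per row of each
// plane. The output order is Y, then V, then U, which is the YV12 layout.
std::vector<uint8_t> PackYV12(const uint8_t* const planes[3], const int strides[3],
                              int width, int height) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const int cw = width / 2;   // chroma width
    const int ch = height / 2;  // chroma height
    assert(strides[0] >= width && strides[1] >= cw && strides[2] >= cw);

    const size_t ySize = static_cast<size_t>(width) * height;
    const size_t cSize = static_cast<size_t>(cw) * ch;
    std::vector<uint8_t> out(ySize + 2 * cSize);

    CopyPlane(out.data(),                 planes[0], strides[0], width, height);  // Y
    CopyPlane(out.data() + ySize,         planes[2], strides[2], cw, ch);         // V
    CopyPlane(out.data() + ySize + cSize, planes[1], strides[1], cw, ch);         // U
    return out;
}
```

Copying row by row works whether or not the decoder pads its rows, so a separate fast path for the unpadded case is an optimization rather than a requirement.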

Here is the code of PlayAVFrame:

// Displays the image in an FFmpeg AVFrame. The frame may be RGB or YUV; anything other than YUV420P is converted to YV12.

BOOL CDXDrawPainter::PlayAVFrame(AVStream * st, AVFrame * picture)

{

if(m_pYUVDataCache == NULL)

{

int nYUVSize = st->codec->width * st->codec->height*2;

m_pYUVDataCache = new BYTE[nYUVSize];

memset(m_pYUVDataCache, 0, nYUVSize);

}

if(st->codec->pix_fmt != PIX_FMT_YUV420P && st->codec->pix_fmt != PIX_FMT_YUVJ420P )

{

if(img_convert_ctx == NULL)

{

img_convert_ctx = sws_getContext(st->codec->width, st->codec->height,

st->codec->pix_fmt,

st->codec->width, st->codec->height,

PIX_FMT_YUV420P,

SWS_BICUBIC, NULL, NULL, NULL);

if (img_convert_ctx == NULL)

{

TRACE("sws_getContext() failed \n");

return FALSE;

}

}

int nWidth = st->codec->width;

int nHeight = st->codec->height;

AVPicture dstbuf;

dstbuf.data[0] = (uint8_t*)m_pYUVDataCache;

dstbuf.data[2] = (uint8_t*)m_pYUVDataCache + nWidth*nHeight;

dstbuf.data[1] = (uint8_t*)m_pYUVDataCache + nWidth*nHeight + nWidth*nHeight / 4;

dstbuf.linesize[0] = nWidth;

dstbuf.linesize[1] = nWidth / 2;

dstbuf.linesize[2] = nWidth / 2;

dstbuf.linesize[3] = 0;

// convert to YV12

sws_scale(img_convert_ctx, picture->data, picture->linesize, 0, st->codec->height, dstbuf.data, dstbuf.linesize);

RenderFrame(m_pYUVDataCache, nWidth, nHeight, 0);

}

else //YUV420P

{

int Y_PLANE_SIZE, U2_PLANE_SIZE, V2_PLANE_SIZE;

int nWidth = st->codec->width;

int nHeight = st->codec->height;

Y_PLANE_SIZE = nWidth * nHeight;

U2_PLANE_SIZE = nWidth * nHeight/4;

V2_PLANE_SIZE = nWidth * nHeight/4;

uint8_t *dest_y = picture->data[0];

uint8_t *dest_v = picture->data[1];

uint8_t *dest_u = picture->data[2];

// copy the three separate Y, U, and V planes into the contiguous YV12 buffer

if(m_pYUVDataCache != NULL)

{

if(picture->linesize[0] == st->codec->width)

{

memcpy(m_pYUVDataCache, dest_y, Y_PLANE_SIZE);

memcpy(m_pYUVDataCache + Y_PLANE_SIZE, dest_u, U2_PLANE_SIZE);

memcpy(m_pYUVDataCache + Y_PLANE_SIZE + U2_PLANE_SIZE, dest_v, V2_PLANE_SIZE);

}

else

{

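// linesize is larger than the width here, so the rows are copied one at a time
// (the destination offsets are reconstructed to match the fast path above)

for (int i=0; i<nHeight; i++)

{

memcpy(m_pYUVDataCache + i*nWidth, picture->data[0] + i*picture->linesize[0], nWidth);

}

int half_h = nHeight/2;

int half_x = nWidth/2;

for (int i=0; i<half_h; i++)

{

memcpy(m_pYUVDataCache + Y_PLANE_SIZE + i*half_x, picture->data[2] + i*picture->linesize[2], half_x);

}

for (int i=0; i<half_h; i++)

{

memcpy(m_pYUVDataCache + Y_PLANE_SIZE + U2_PLANE_SIZE + i*half_x, picture->data[1] + i*picture->linesize[1], half_x);

}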

}

}

RenderFrame(m_pYUVDataCache, nWidth, nHeight, 0);

}

return TRUE;

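}

As the code above shows, PlayAVFrame calls RenderFrame, and RenderFrame is the core function that displays the video and draws the OSD. Its code follows; it is fairly long, but skimming it is enough to follow the flow.

// Function: RenderFrame

// Purpose: renders one image

// Parameters:

// frame -- address of the image buffer

// w, h -- image width and height

// inputFmt -- pixel format of the image: 0--YV12, 1--YUY2, 2--RGB24, 3--RGB32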

//-----------------------------------------------------------------------------

BOOL CVideoPlayer::RenderFrame(BYTE* frame,int w,int h, int inputFmt)

{

HRESULT hr;

RECT prect,orect;

int ok_decode=1;

DDSURFACEDESC2 ddsd;

ZeroMemory( &ddsd, sizeof( ddsd ) );

ddsd.dwSize = sizeof( ddsd );

if(m_pddsFrontBuffer->IsLost()==DDERR_SURFACELOST)

m_pddsFrontBuffer->Restore();

if(m_bOverlay)

{

// do something

// The Overlay handling is omitted here (it is in the source files), because it is basically the same as the non-Overlay path.

}

else //None Overlay

{

if(m_pddsBack->IsLost()==DDERR_SURFACELOST)

m_pddsBack->Restore();

if(m_pddsBack->Lock( NULL, &ddsd, DDLOCK_SURFACEMEMORYPTR|DDLOCK_WAIT, NULL)!=DD_OK)

return FALSE;

if(inputFmt == 1 && m_SurfaceType == SURFACE_TYPE_YUY2)

{

ASSERT(m_nBitCount == 16);

int nBytesPerLine = ddsd.dwWidth*m_nBitCount/8;

BYTE * srcAddr = frame;

BYTE * destAddr = (BYTE*)ddsd.lpSurface;

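// NOTE: this loop body and the YV12 branch header below are reconstructed by analogy with the RGB branch further down; check them against the downloadable source.

for(int i = 0; i < h; i++)

{

memcpy(destAddr, srcAddr, nBytesPerLine);

srcAddr += nBytesPerLine;

destAddr += ddsd.lPitch;

}

}

else if(inputFmt == 0 && m_SurfaceType == SURFACE_TYPE_YV12) //YV12

{

long y_size, u_size;

long y_buf_size, u_buf_size;

BYTE *p_DestBuf, *p_SrcBuf;

int buf_height, buf_width, i;

BYTE * srcAddr = frame;

BYTE * destAddr = (BYTE*)ddsd.lpSurface;

buf_width = ddsd.lPitch;

buf_height = ddsd.dwHeight;

y_size = w * h;

u_size = y_size >> 2;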

y_buf_size = buf_width * buf_height;

u_buf_size = y_buf_size >> 2;

p_SrcBuf = srcAddr;

p_DestBuf = destAddr;

for(i = 0; i < h; i++){

memcpy(p_DestBuf, p_SrcBuf, w);

p_SrcBuf += w;

p_DestBuf += buf_width;

}

p_DestBuf = destAddr + y_buf_size;

p_SrcBuf = srcAddr + y_size;

for(i = 0; i < h/2; i++){

memcpy(p_DestBuf, p_SrcBuf, w/2);

p_SrcBuf += w/2;

p_DestBuf += buf_width/2;

}

p_DestBuf = destAddr + y_buf_size + u_buf_size;

p_SrcBuf = srcAddr + y_size + u_size;

for(i = 0; i < h/2; i++){

memcpy(p_DestBuf, p_SrcBuf, w/2);

p_SrcBuf += w/2;

p_DestBuf += buf_width/2;

}

}

else if((inputFmt == 2 || inputFmt == 3) && m_SurfaceType == SURFACE_TYPE_YV12) //RGB24/RGB32

{

// convert RGB to YV12

long y_size, u_size;

long y_buf_size, u_buf_size;

BYTE *p_DestBuf, *p_SrcBuf;

int buf_height, buf_width, i;

buf_width = ddsd.lPitch;

buf_height = ddsd.dwHeight;

y_size = w * h;

u_size = y_size >> 2;

y_buf_size = buf_width * buf_height;

u_buf_size = y_buf_size >> 2;

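if(m_picture_buf == NULL)

m_picture_buf = (BYTE*)malloc(y_size * 3/2); // allocate the YV12 image buffer

// sws_getCachedContext reuses the existing context when the parameters are unchanged, so a new context is not allocated (and leaked) on every frame

img_convert_ctx = sws_getCachedContext(img_convert_ctx, w, h, (inputFmt == 2) ? PIX_FMT_RGB24 :PIX_FMT_RGB32,

w, h, PIX_FMT_YUV420P, SWS_POINT, NULL, NULL, NULL);

if (img_convert_ctx == NULL)

{

OutputDebugString("sws_getCachedContext() failed \n");

m_pddsBack->Unlock(NULL); // release the surface lock before bailing out

return FALSE;

}

BOOL bRevertPicture = TRUE; // works around RGB images being displayed upside down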

if(bRevertPicture)

{

uint8_t *dest_y = m_picture_buf;

uint8_t *dest_u = dest_y + y_size;

uint8_t *dest_v = dest_y + y_size + u_size;

int nSrcLineSize = w * (inputFmt == 2 ? 3 : 4);

uint8_t *rgb_src[3] = { frame + (h - 1)*nSrcLineSize, NULL, NULL};

int rgb_stride[3] = { -nSrcLineSize, 0, 0};

uint8_t *dest[3]= { dest_y, dest_u, dest_v };

int dest_stride[3] = { w, w/2, w/2 };

sws_scale(img_convert_ctx, rgb_src, rgb_stride, 0, h, dest, dest_stride);

}

else

{

uint8_t *dest_y = m_picture_buf;

uint8_t *dest_u = dest_y + y_size;

uint8_t *dest_v = dest_y + y_size + u_size;

int nSrcLineSize = w * (inputFmt == 2 ? 3 : 4);

uint8_t *rgb_src[3] = { frame, NULL, NULL};

int rgb_stride[3] = { nSrcLineSize, 0, 0};

uint8_t *dest[3]= { dest_y, dest_u, dest_v };

int dest_stride[3] = { w, w/2, w/2 };

sws_scale(img_convert_ctx, rgb_src, rgb_stride, 0, h, dest, dest_stride);

}

BYTE * srcAddr = m_picture_buf;

BYTE * destAddr = (BYTE*)ddsd.lpSurface;

p_SrcBuf = srcAddr;

p_DestBuf = destAddr;

for(i = 0; i < h; i++){

memcpy(p_DestBuf, p_SrcBuf, w);

p_SrcBuf += w;

p_DestBuf += buf_width;

}

p_DestBuf = destAddr + y_buf_size;

p_SrcBuf = srcAddr + y_size;

for(i = 0; i < h/2; i++){

memcpy(p_DestBuf, p_SrcBuf, w/2);

p_SrcBuf += w/2;

p_DestBuf += buf_width/2;

}

p_DestBuf = destAddr + y_buf_size + u_buf_size;

p_SrcBuf = srcAddr + y_size + u_size;

for(i = 0; i < h/2; i++){

memcpy(p_DestBuf, p_SrcBuf, w/2);

p_SrcBuf += w/2;

p_DestBuf += buf_width/2;

}

}

if (m_pddsBack->Unlock(NULL)!=DD_OK)

return FALSE;

AdjustRectangle(&orect,&prect,w,h);

#if 1

CAutoLock lock(&m_csOSDLock);

if(m_pddsOSD != NULL)

{

hr = m_pddsOSD->Blt(&orect, m_pddsBack, &orect, DDBLT_WAIT, NULL);

if (hr != DD_OK)

{

hr = m_pddsFrontBuffer->Blt(&prect, m_pddsBack, &orect, DDBLT_WAIT, NULL);

}

else

{

HDC hDC = NULL;

hr = m_pddsOSD->GetDC(&hDC);

if ((hr == DD_OK)&&(hDC != NULL))

{

for(int nIndex = 0; nIndex < MAX_OSD_NUM; nIndex++)

{

if(!m_OsdInfo[nIndex].bEnable)

continue;

if(strlen(m_OsdInfo[nIndex].szText) > 0)

{

SetBkMode(hDC, TRANSPARENT);

SetTextColor(hDC, m_OsdInfo[nIndex].clrColor);

DrawText(hDC, m_OsdInfo[nIndex].szText, strlen(m_OsdInfo[nIndex].szText), &m_OsdInfo[nIndex].rcPosition, DT_LEFT|DT_VCENTER);

}

else if(m_OsdInfo[nIndex].hWatermark != NULL)

{

m_hMemDC = ::CreateCompatibleDC(hDC);

::SelectObject(m_hMemDC, m_OsdInfo[nIndex].hWatermark);

BITMAP bmpinfo;

::GetObject(m_OsdInfo[nIndex].hWatermark, sizeof(BITMAP), &bmpinfo);

int nLeft = m_OsdInfo[nIndex].rcPosition.left;

int nTop = m_OsdInfo[nIndex].rcPosition.top;

int nDestWidth = m_OsdInfo[nIndex].rcPosition.right - m_OsdInfo[nIndex].rcPosition.left;

int nDestHeight = m_OsdInfo[nIndex].rcPosition.bottom - m_OsdInfo[nIndex].rcPosition.top;

int nSrcWidth = bmpinfo.bmWidth;

int nSrcHeight = bmpinfo.bmHeight;

::TransparentBlt(hDC, nLeft, nTop, nDestWidth, nDestHeight, m_hMemDC, 0, 0, nSrcWidth, nSrcHeight, RGB(255,255,255));

DeleteDC(m_hMemDC);

}

}

m_pddsOSD->ReleaseDC(hDC);

m_pddsFrontBuffer->Blt(&prect, m_pddsOSD, &orect, DDBLT_WAIT, NULL);

}

}

}

#else

hr = m_pddsFrontBuffer->Blt(&prect, m_pddsBack, &orect, DDBLT_WAIT, NULL);

#endif

//m_pddsFrontBuffer->BltFast(prect.left,prect.top,m_pddsBack,&orect,0);

//hr = m_pddsFrontBuffer->Blt(&prect,m_pddsBack, &orect, DDBLT_WAIT, 0);

}

return TRUE;

}
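
The row-by-row copies in RenderFrame exist because a locked surface may have a pitch larger than the image width. The following portable sketch isolates that step on an ordinary memory buffer. CopyYV12ToPitchedBuffer is a hypothetical name, and the chroma layout (rows pitch/2 bytes apart, planes starting after pitch * surfaceHeight bytes) is the layout RenderFrame assumes; real drivers may lay out a YV12 surface differently.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy a tightly packed YV12 frame (w x h) into a destination whose luma
// rows are `pitch` bytes apart. Both chroma planes use rows that are
// pitch/2 bytes apart and start after pitch * surfaceHeight bytes.
void CopyYV12ToPitchedBuffer(uint8_t* dst, int pitch, int surfaceHeight,
                             const uint8_t* src, int w, int h) {
    assert(dst != nullptr && src != nullptr);
    assert(w > 0 && h > 0 && w % 2 == 0 && h % 2 == 0);
    assert(pitch % 2 == 0 && surfaceHeight % 2 == 0);
    assert(pitch >= w && surfaceHeight >= h);

    const size_t ySize    = static_cast<size_t>(w) * h;                 // packed luma bytes
    const size_t cSize    = ySize / 4;                                  // packed bytes per chroma plane
    const size_t yBufSize = static_cast<size_t>(pitch) * surfaceHeight; // luma bytes in the surface
    const size_t cBufSize = yBufSize / 4;                               // chroma bytes per plane in the surface

    // Luma plane: one row at a time, skipping the padding at the end of each row.
    for (int i = 0; i < h; ++i) {
        std::memcpy(dst + static_cast<size_t>(i) * pitch,
                    src + static_cast<size_t>(i) * w,
                    static_cast<size_t>(w));
    }

    // The two chroma planes, in the order they appear in the source buffer.
    for (int plane = 0; plane < 2; ++plane) {
        const uint8_t* s = src + ySize + plane * cSize;
        uint8_t* d = dst + yBufSize + plane * cBufSize;
        for (int i = 0; i < h / 2; ++i) {
            std::memcpy(d + static_cast<size_t>(i) * (pitch / 2),
                        s + static_cast<size_t>(i) * (w / 2),
                        static_cast<size_t>(w / 2));
        }
    }
}
```

When the pitch equals the width, each plane could be copied with a single memcpy, but the per-row loop is correct in both cases.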

Download the example code: https://download.csdn.net/download/zhoubotong2012/11855592