Electricity-Fee Sensitivity Data Mining, Part 1: Data Processing and Feature Engineering

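Contents:

- 1. Data filtering

- 1.1 Reading the data

- 1.2 Adding the label

- 1.3 Keeping customers with a single work order

- 1.4 Loading call records

- 2. Handling categorical values

- 2.1 Encoding categorical values

- 2.2 Ratio and code-length encoding

- 2.3 Time features

- 2.4 Electricity usage type

- 2.5 City code

- 2.6 Billing table data

- 3. Building statistical features

- Saving the features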

1. Data filtering

1.1 Reading the data

import numpy as np

import pandas as pd

import csv

data_path = r'..\电费敏感预测\rawdata'

# Work order records

file_jobinfo_train = '01_arc_s_95598_wkst_train.tsv'

file_jobinfo_test = '01_arc_s_95598_wkst_test.tsv'

# Call records

file_comm = '02_s_comm_rec.tsv'

# Receivable electricity fee records

file_flow_train = '09_arc_a_rcvbl_flow.tsv'

file_flow_test = '09_arc_a_rcvbl_flow_test.tsv'

# Training set (label file)

file_label = 'train_label.csv'

# Test set (customers to predict)

file_test = 'test_to_predict.csv'

train_info = pd.read_csv(data_path + '/processed_' + file_jobinfo_train,

                         sep = '\t', quoting = csv.QUOTE_NONE)

# quoting = csv.QUOTE_NONE keeps double quotes inside the text from breaking the parser

# Drop rows whose CUST_NO is missing

train_info = train_info.loc[~train_info.CUST_NO.isnull()]

train_info['CUST_NO'] = train_info.CUST_NO.astype(np.int64)

train_info.head(2)
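To see what `quoting = csv.QUOTE_NONE` changes, the sketch below parses a tiny made-up TSV in which one field starts with an unmatched double quote. The `ID` and `CONTENT` column names and the two rows are illustrative assumptions, not taken from the real file. With `QUOTE_NONE` the quote is kept as an ordinary character and both rows survive, whereas the default quoting rules would treat it as the start of a quoted field.

```python
import csv
import io

import pandas as pd

# Made-up TSV content: the first record's text begins with an unmatched double quote.
raw = 'ID\tCONTENT\n1\t"hello\n2\tfine\n'

# QUOTE_NONE tells the parser to treat quote characters as plain text.
toy = pd.read_csv(io.StringIO(raw), sep='\t', quoting=csv.QUOTE_NONE)

print(toy.shape)
print(toy.CONTENT.tolist())
```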

- Count the work orders per customer

train = train_info.CUST_NO.value_counts().to_frame().reset_index()

train.columns = ['CUST_NO', 'counts_of_jobinfo']

train.head()
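The same per-customer count can also be written with `groupby().size()`, which names the count column explicitly instead of renaming whatever `value_counts().to_frame().reset_index()` produces (those default names depend on the pandas version). This is only a sketch on made-up `CUST_NO` values, not the author's code.

```python
import pandas as pd

# Toy work-order table; the CUST_NO values are invented for illustration.
toy_info = pd.DataFrame({'CUST_NO': [101, 102, 101, 103, 101]})

# One row per customer, with the count column named up front.
toy_counts = (toy_info.groupby('CUST_NO').size()
              .reset_index(name='counts_of_jobinfo')
              .sort_values('counts_of_jobinfo', ascending=False)
              .reset_index(drop=True))

print(toy_counts)
```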

1.2 Adding the label

temp = pd.read_csv(data_path + '/' + file_label, header = None)

temp.columns = ['CUST_NO']

train['label'] = 0

train.loc[train.CUST_NO.isin(temp.CUST_NO), 'label'] = 1

train = train[['CUST_NO', 'label', 'counts_of_jobinfo']]

print(train.shape)

train.head(4)
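Labeling with `isin` only works when both sides hold IDs of the same type, which is why `CUST_NO` was cast to `np.int64` earlier. The toy example below (all IDs are made up) shows the labeling step and what happens when the positive list is accidentally read as strings.

```python
import pandas as pd

toy_train = pd.DataFrame({'CUST_NO': [101, 102, 103]})  # integer IDs
positives_int = pd.Series([102, 999])                   # IDs read as integers
positives_str = pd.Series(['102', '999'])               # same IDs read as strings

# Vectorized labeling: 1 if the customer is in the positive list, else 0.
toy_train['label'] = toy_train.CUST_NO.isin(positives_int).astype(int)

# Integers and strings never compare equal, so nothing matches here.
matches_with_str = int(toy_train.CUST_NO.isin(positives_str).sum())

print(toy_train)
print(matches_with_str)
```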

- Test-set rows get the label -1

test_info = pd.read_csv(data_path + '/processed_' + file_jobinfo_test, sep='\t', encoding='utf-8', quoting=csv.QUOTE_NONE)

test = test_info.CUST_NO.value_counts().to_frame().reset_index()

test.columns = ['CUST_NO', 'counts_of_jobinfo']

test['label'] = -1

test = test[['CUST_NO', 'label', 'counts_of_jobinfo']]

test.head()

# DataFrame.append was removed in pandas 2.0; pd.concat stacks the frames the same way

df = pd.concat([train, test])

del temp, train, test

1.3 Keeping customers with a single work order

df = df.loc[df.counts_of_jobinfo == 1].copy()

df.reset_index(drop = True, inplace = True)

train = df.loc[df.label != -1]

test = df.loc[df.label == -1]

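print('Low-sensitivity users, training set: ', train.shape[0])

print('Low-sensitivity users, positive samples: ', train.loc[train.label == 1].shape[0])

print('Low-sensitivity users, negative samples: ', train.loc[train.label == 0].shape[0])

print('Low-sensitivity users, test set: ', test.shape[0])

df.drop(['counts_of_jobinfo'], axis = 1, inplace = True)

Low-sensitivity users, training set: 401626

Low-sensitivity users, positive samples: 13139

Low-sensitivity users, negative samples: 388487

Low-sensitivity users, test set: 327437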

jobinfo = pd.concat([train_info, test_info])

jobinfo = jobinfo.loc[jobinfo.CUST_NO.isin(df.CUST_NO)].copy()

jobinfo.reset_index(drop = True, inplace = True)

jobinfo = jobinfo.merge(df[['CUST_NO', 'label']], on = 'CUST_NO', how = 'left')

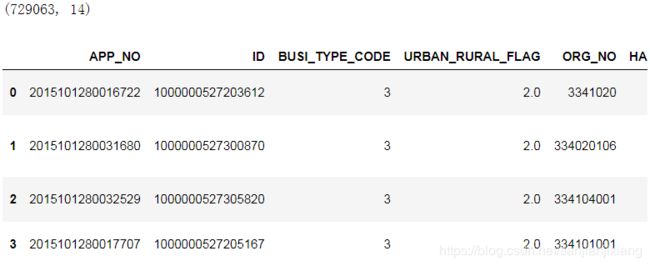

print(jobinfo.shape)

jobinfo.head()
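Every later merge on `CUST_NO` relies on each customer appearing exactly once in `df`. One way to make pandas check that assumption is the `validate` argument of `merge`; the sketch below uses invented frames (`toy_df`, `toy_jobinfo`) to show a merge that passes and one that is rejected because the key is duplicated.

```python
import pandas as pd

toy_df = pd.DataFrame({'CUST_NO': [1, 2], 'label': [0, 1]})
toy_jobinfo = pd.DataFrame({'CUST_NO': [1, 2, 2], 'ID': [10, 20, 21]})

# Passes: CUST_NO is unique on the right-hand side (toy_df).
merged = toy_jobinfo.merge(toy_df, on='CUST_NO', how='left', validate='many_to_one')

# Fails: customer 2 has two work orders, so the key is not unique in toy_jobinfo.
try:
    toy_df.merge(toy_jobinfo, on='CUST_NO', how='left', validate='one_to_one')
    duplicate_keys_detected = False
except pd.errors.MergeError:
    duplicate_keys_detected = True

print(merged.shape, duplicate_keys_detected)
```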

1.4 Loading call records

comm = pd.read_csv(data_path + '/' + file_comm, sep = '\t')

print('Total rows: ', comm.shape)

comm.drop_duplicates(inplace = True)

print('After dropping duplicates: ', comm.shape)

# Keep only call records whose work order appears in jobinfo

comm = comm.loc[comm.APP_NO.isin(jobinfo.ID)]

comm = comm.rename(columns = {'APP_NO': 'ID'})

comm = comm.merge(jobinfo[['ID', 'CUST_NO']], on = 'ID', how = 'left')

print('Usable rows: ', comm.shape)

# Drop records whose start time is later than the finish time

comm['REQ_BEGIN_DATE'] = pd.to_datetime(comm.REQ_BEGIN_DATE)

comm['REQ_FINISH_DATE'] = pd.to_datetime(comm.REQ_FINISH_DATE)

comm = comm.loc[~(comm.REQ_BEGIN_DATE > comm.REQ_FINISH_DATE)]

print('Rows after removing invalid dates: ', comm.shape)

df = df.loc[df.CUST_NO.isin(comm.CUST_NO)].copy()

Total rows: (1593088, 8)

After dropping duplicates: (1584351, 8)

Usable rows: (726248, 9)

Rows after removing invalid dates: (726242, 9)
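One detail of the date filter is worth spelling out: `~(begin > finish)` keeps rows in which either timestamp is missing, because comparisons against `NaT` are always `False`. The sketch below uses three invented records and `errors='coerce'` (an assumption, the original does not coerce) to contrast that filter with the stricter `begin <= finish`.

```python
import pandas as pd

# Made-up call records: row 1 ends before it starts, row 2 has an unparseable start.
toy_comm = pd.DataFrame({
    'REQ_BEGIN_DATE': ['2015-01-01 10:00:00', '2015-01-02 09:00:00', 'not a date'],
    'REQ_FINISH_DATE': ['2015-01-01 10:05:00', '2015-01-02 08:00:00', '2015-01-03 12:00:00'],
})

# Vectorized parsing; values that cannot be parsed become NaT instead of raising.
for col in ['REQ_BEGIN_DATE', 'REQ_FINISH_DATE']:
    toy_comm[col] = pd.to_datetime(toy_comm[col], errors='coerce')

# Same condition as in the walkthrough: rows with NaT are kept.
lenient = toy_comm.loc[~(toy_comm.REQ_BEGIN_DATE > toy_comm.REQ_FINISH_DATE)]

# Stricter variant: rows with NaT are dropped as well.
strict = toy_comm.loc[toy_comm.REQ_BEGIN_DATE <= toy_comm.REQ_FINISH_DATE]

print(lenient.index.tolist(), strict.index.tolist())
```

Which variant is appropriate depends on whether records with a missing timestamp should still count toward the customer's features.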

# Build the call-duration feature and min-max normalize it

comm['holding_time'] = comm['REQ_FINISH_DATE'] - comm['REQ_BEGIN_DATE']

# total_seconds() includes whole days; Timedelta.seconds only returns the sub-day remainder

comm['holding_time_seconds'] = comm.holding_time.dt.total_seconds()

df = df.merge(comm[['CUST_NO', 'holding_time_seconds']], how = 'left', on = 'CUST_NO')

from sklearn.preprocessing import MinMaxScaler

df['holding_time_seconds'] = MinMaxScaler().fit_transform(df['holding_time_seconds'].values.reshape(-1, 1)).ravel()

del comm

df.head()
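When turning a time difference into seconds, note that `Timedelta.seconds` is only the seconds component left over after whole days are removed, while `total_seconds()` is the full duration. The two agree for calls shorter than a day and diverge otherwise, as this small example with invented timestamps shows.

```python
import pandas as pd

begin = pd.to_datetime(pd.Series(['2015-01-01 10:00:00', '2015-01-01 23:59:00']))
finish = pd.to_datetime(pd.Series(['2015-01-01 10:05:30', '2015-01-03 00:01:00']))

holding = finish - begin

# Seconds component only (days are discarded).
component = holding.dt.seconds.tolist()

# Full duration in seconds (days included).
total = holding.dt.total_seconds().tolist()

print(component)  # [330, 120]
print(total)      # [330.0, 86520.0]
```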

jobinfo = jobinfo.loc[jobinfo.CUST_NO.isin(df.CUST_NO)].copy()

jobinfo.reset_index(drop = True, inplace = True)

# Rank customers by CUST_NO with rank()

df['rank_CUST_NO'] = df.CUST_NO.rank(method = 'max')

df.head()

# Min-max normalize the rank

df['rank_CUST_NO'] = MinMaxScaler().fit_transform(df.rank_CUST_NO.values.reshape(-1, 1))

df.head()
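As a quick illustration of what the rank feature looks like, the snippet below ranks four made-up IDs with `method='max'` (tied values all receive the highest rank in their group) and then rescales to [0, 1] by hand. The manual formula is the same mapping `MinMaxScaler` applies with its default range; it is written out here only to keep the example free of extra dependencies.

```python
import pandas as pd

toy_ids = pd.Series([30, 10, 20, 20])

# Ties (the two 20s) share the larger rank, 3.
ranks = toy_ids.rank(method='max')

# Min-max scaling: (x - min) / (max - min).
scaled = (ranks - ranks.min()) / (ranks.max() - ranks.min())

print(ranks.tolist())
print(scaled.round(3).tolist())
```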

2. Handling categorical values

2.1 Encoding categorical values

df = df.merge(jobinfo[['CUST_NO', 'BUSI_TYPE_CODE']], on = 'CUST_NO', how = 'left')

temp = pd.get_dummies(df.BUSI_TYPE_CODE, prefix = 'onehot_BUSI_TYPE_CODE', dummy_na = True)

df = pd.concat([df, temp], axis = 1)

df.drop(['BUSI_TYPE_CODE'], axis = 1, inplace = True)

del temp

df = df.merge(jobinfo[['CUST_NO', 'URBAN_RURAL_FLAG']], on='CUST_NO', how='left')

temp = pd.get_dummies(df.URBAN_RURAL_FLAG, prefix='onehot_URBAN_RURAL_FLAG', dummy_na=True)

df = pd.concat([df, temp], axis=1)

df.drop(['URBAN_RURAL_FLAG'], axis=1, inplace=True)

del temp
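The two one-hot blocks above follow the same pattern, so they could be folded into a small helper. `add_onehot` below is a hypothetical function written for this illustration (it is not part of the original notebook), applied to a toy frame with one missing value.

```python
import pandas as pd


def add_onehot(frame, column, dummy_na=False):
    """Hypothetical helper: replace `column` with its one-hot encoded columns."""
    dummies = pd.get_dummies(frame[column], prefix='onehot_' + column, dummy_na=dummy_na)
    return pd.concat([frame.drop(columns=[column]), dummies], axis=1)


# Toy data: the third customer has no URBAN_RURAL_FLAG.
toy = pd.DataFrame({'CUST_NO': [1, 2, 3], 'URBAN_RURAL_FLAG': ['A', 'B', None]})
toy = add_onehot(toy, 'URBAN_RURAL_FLAG', dummy_na=True)

print(toy.columns.tolist())
```

With `dummy_na=True` the missing value gets its own indicator column, so every row has exactly one active indicator.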

# Power supply unit code (ORG_NO): use the length of the code as a feature

df = df.merge(jobinfo[['CUST_NO', 'ORG_NO']], on='CUST_NO', how='left')

df['len_of_ORG_NO'] = df.ORG_NO.apply(lambda x:len(str(x)))

df.fillna(-1, inplace=True)

2.2 Ratio and code-length encoding

train = df[df.label != -1]

# Share of positive samples (label == 1) per ORG_NO, computed on training rows only.

# The training label is 0/1, so the group mean equals positives / total.

ratio = train.groupby('ORG_NO').label.mean()

df['ratio_ORG_NO'] = df.ORG_NO.map(ratio)

df['ratio_ORG_NO'].head()

temp = pd.get_dummies(df.len_of_ORG_NO, prefix = 'onehot_len_of_ORG_NO')

df = pd.concat([df, temp], axis = 1)

df.drop(['ORG_NO', 'len_of_ORG_NO'], axis = 1, inplace = True)
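The ratio feature is the share of positive training samples within each category. A compact way to compute it is a grouped mean of the 0/1 label, sketched here on invented data. Note that a code seen only in the test rows (label -1) has no training ratio and maps to `NaN`, so it needs a fill value afterwards.

```python
import pandas as pd

# Toy data: ORG_NO 3 appears only in a test row (label == -1).
toy = pd.DataFrame({
    'ORG_NO': [1, 1, 2, 2, 2, 3],
    'label': [1, 0, 0, 0, 1, -1],
})

toy_train = toy[toy.label != -1]

# Mean of a 0/1 label per group = positives / group size.
ratio = toy_train.groupby('ORG_NO').label.mean()

toy['ratio_ORG_NO'] = toy.ORG_NO.map(ratio)

print(toy)
```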

2.3 Time features

df = df.merge(jobinfo[['CUST_NO', 'HANDLE_TIME']], on = 'CUST_NO', how = 'left')

df['date'] = df['HANDLE_TIME'].apply(lambda x: pd.to_datetime(x.split()[0]))

df['time'] = df['HANDLE_TIME'].apply(lambda x: x.split()[1])

df['month'] = df['date'].apply(lambda x: x.month)

df['day'] = df.date.apply(lambda x: x.day)

features = ['CUST_NO','date','time','month','day']

df[features].head()

# Flag whether the day falls in the first, middle, or last ten days of the month

df['is_in_first_tendays'] = 0

df.loc[df.day.isin(range(1, 11)), 'is_in_first_tendays'] = 1

df['is_in_middle_tendays'] = 0

df.loc[df.day.isin(range(11, 21)), 'is_in_middle_tendays'] = 1

df['is_in_last_tendays'] = 0

df.loc[df.day.isin(range(21, 32)), 'is_in_last_tendays'] = 1

df['hour'] = df.time.apply(lambda x: int(x.split(':')[0]))

df.drop(['HANDLE_TIME', 'date', 'time'], axis = 1, inplace = True)
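The same month, day, hour, and ten-day flags can be derived without string splitting by parsing the timestamp once and using the `.dt` accessor. This is an alternative sketch on three made-up timestamps, assuming `HANDLE_TIME` has the form `YYYY-MM-DD HH:MM:SS`.

```python
import pandas as pd

handle_time = pd.to_datetime(pd.Series([
    '2015-03-05 08:15:00',
    '2015-03-15 13:00:00',
    '2015-03-31 23:59:59',
]))

toy = pd.DataFrame({
    'month': handle_time.dt.month,
    'day': handle_time.dt.day,
    'hour': handle_time.dt.hour,
})

# Ten-day period flags; between() is inclusive on both ends.
toy['is_in_first_tendays'] = (toy.day <= 10).astype(int)
toy['is_in_middle_tendays'] = toy.day.between(11, 20).astype(int)
toy['is_in_last_tendays'] = (toy.day >= 21).astype(int)

print(toy)
```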

2.4 Electricity usage type

# First character of the electricity usage type

df = df.merge(jobinfo[['CUST_NO', 'ELEC_TYPE']], on = 'CUST_NO', how = 'left')

df.fillna(0, inplace = True)

df['head_of_ELEC_TYPE'] = df.ELEC_TYPE.apply(lambda x: str(x)[0])

# Whether ELEC_TYPE was missing (missing values were filled with 0 above)

df['is_ELEC_TYPE_NaN'] = 0

df.loc[df.ELEC_TYPE == 0, 'is_ELEC_TYPE_NaN'] = 1

# 1.label encoder

from sklearn.preprocessing import LabelEncoder

df['label_encoder_ELEC_TYPE'] = LabelEncoder().fit_transform(df['ELEC_TYPE'])

# 2.ratio

train = df[df.label != -1]

# Share of positive samples per ELEC_TYPE, computed on training rows only

ratio = train.groupby('ELEC_TYPE').label.mean()

df['ratio_ELEC_TYPE'] = df.ELEC_TYPE.map(ratio)

df.fillna(0, inplace = True)

df[['ratio_ELEC_TYPE','head_of_ELEC_TYPE']].head()

temp = pd.get_dummies(df.head_of_ELEC_TYPE, prefix = 'onehot_head_of_ELEC_TYPE')

df = pd.concat([df, temp], axis = 1)

df.drop(['ELEC_TYPE', 'head_of_ELEC_TYPE'], axis = 1, inplace = True)

2.5 City code

df = df.merge(jobinfo[['CUST_NO', 'CITY_ORG_NO']], on = 'CUST_NO', how = 'left')

train = df[df.label != -1]

# Share of positive samples per CITY_ORG_NO, computed on training rows only.

# groupby skips missing codes, which would otherwise cause a division by zero in a manual loop.

ratio = train.groupby('CITY_ORG_NO').label.mean()

df['ratio_CITY_ORG_NO'] = df.CITY_ORG_NO.map(ratio)

temp = pd.get_dummies(df.CITY_ORG_NO, prefix = 'onehot_CITY_ORG_NO')

df = pd.concat([df, temp], axis = 1)

df.drop(['CITY_ORG_NO'], axis = 1, inplace = True)

2.6 Billing table data

train_flow = pd.read_csv(data_path + '/' + file_flow_train, sep = '\t')

test_flow = pd.read_csv(data_path + '/' + file_flow_test, sep = '\t')

flow = pd.concat([train_flow, test_flow])

flow.rename(columns = {'CONS_NO':'CUST_NO'}, inplace = True)

flow.drop_duplicates(inplace = True)

flow = flow.loc[flow.CUST_NO.isin(df.CUST_NO)].copy()

print(flow.shape)

flow.head()

# Take absolute values so that negative entries count as positive amounts

flow['T_PQ'] = flow.T_PQ.abs()

flow['RCVBL_AMT'] = flow.RCVBL_AMT.abs()

flow['RCVED_AMT'] = flow.RCVED_AMT.abs()

flow['OWE_AMT'] = flow.OWE_AMT.abs()

# Some customers have no records in table 09

df['has_biao9'] = 0

df.loc[df.CUST_NO.isin(flow.CUST_NO), 'has_biao9'] = 1

df['counts_of_09flow'] = df.CUST_NO.map(flow.groupby('CUST_NO').size())

df[['CUST_NO', 'counts_of_09flow']].head()

3. Building statistical features

from numpy import log

# Receivable amount (RCVBL_AMT)

df['sum_yingshoujine'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_AMT.sum()) + 1)

df['mean_yingshoujine']= log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_AMT.mean())+ 1)

df['max_yingshoujine'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_AMT.max()) + 1)

df['min_yingshoujine'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_AMT.min()) + 1)

df['std_yingshoujine'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_AMT.std()) + 1)

# Received amount (RCVED_AMT)

df['sum_shishoujine'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_AMT.sum()) + 1)

# Underpayment, taken as the difference of the two log-scaled sums (a log ratio, not a currency amount)

df['qianfei'] = df['sum_yingshoujine'] - df['sum_shishoujine']

# Total electricity quantity (T_PQ)

df['sum_T_PQ'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').T_PQ.sum()) + 1)

df['mean_T_PQ']= log(df.CUST_NO.map(flow.groupby('CUST_NO').T_PQ.mean())+ 1)

df['max_T_PQ'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').T_PQ.max()) + 1)

df['min_T_PQ'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').T_PQ.min()) + 1)

df['std_T_PQ'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').T_PQ.std()) + 1)

# Electricity fee amount (OWE_AMT)

df['sum_OWE_AMT'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').OWE_AMT.sum()) + 1)

df['mean_OWE_AMT']= log(df.CUST_NO.map(flow.groupby('CUST_NO').OWE_AMT.mean())+ 1)

df['max_OWE_AMT'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').OWE_AMT.max()) + 1)

df['min_OWE_AMT'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').OWE_AMT.min()) + 1)

df['std_OWE_AMT'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').OWE_AMT.std()) + 1)

# Gap between the fee amount and the receivable amount (difference of log-scaled sums)

df['dianfei_chae'] = df['sum_OWE_AMT'] - df['sum_yingshoujine']

# Receivable penalty (RCVBL_PENALTY)

df['sum_RCVBL_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_PENALTY.sum()) + 1)

df['mean_RCVBL_PENALTY']= log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_PENALTY.mean())+ 1)

df['max_RCVBL_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_PENALTY.max()) + 1)

df['min_RCVBL_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_PENALTY.min()) + 1)

df['std_RCVBL_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_PENALTY.std()) + 1)

# Received penalty (RCVED_PENALTY)

df['sum_RCVED_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_PENALTY.sum()) + 1)

df['mean_RCVED_PENALTY']= log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_PENALTY.mean())+ 1)

df['max_RCVED_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_PENALTY.max()) + 1)

df['min_RCVED_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_PENALTY.min()) + 1)

df['std_RCVED_PENALTY'] = log(df.CUST_NO.map(flow.groupby('CUST_NO').RCVED_PENALTY.std()) + 1)

# Outstanding penalty (difference of log-scaled sums)

df['chaduoshao_weiyuejin'] = df['sum_RCVBL_PENALTY'] - df['sum_RCVED_PENALTY']

# Number of distinct months with records for each customer

df['nunique_RCVBL_YM'] = df.CUST_NO.map(flow.groupby('CUST_NO').RCVBL_YM.nunique())

# Average number of records per month

df['mean_RCVBL_YM'] = df['counts_of_09flow'] / df['nunique_RCVBL_YM']

del train_flow, test_flow, flow
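Each statistic above re-runs `flow.groupby('CUST_NO')`. A more compact alternative is a single `agg` call per column followed by `np.log1p` (the same `log(x + 1)` transform) and one merge. The sketch below shows this for the receivable amount on invented data; customers with one record get a `NaN` standard deviation, and customers without any records get `NaN` everywhere.

```python
import numpy as np
import pandas as pd

# Toy billing rows: customer 1 has two records, customer 2 has one, customer 3 has none.
toy_flow = pd.DataFrame({'CUST_NO': [1, 1, 2], 'RCVBL_AMT': [10.0, 30.0, 5.0]})
toy_df = pd.DataFrame({'CUST_NO': [1, 2, 3]})

# All five statistics in one pass over the groups.
stats = toy_flow.groupby('CUST_NO').RCVBL_AMT.agg(['sum', 'mean', 'max', 'min', 'std'])

# log(x + 1), then name the columns like the features above (sum_yingshoujine, ...).
stats = np.log1p(stats).add_suffix('_yingshoujine').reset_index()

toy_df = toy_df.merge(stats, on='CUST_NO', how='left')

print(toy_df)
```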

Saving the features

import os

import pickle

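# Create the directory the pickle is written to, if it does not exist yet.

# os.path.isdir() and os.makedirs() accept relative paths as well as absolute ones.

out_dir = r'..\电费'

os.makedirs(out_dir, exist_ok = True)

with open(os.path.join(out_dir, 'statistical_features_1.pkl'), 'wb') as f:

    pickle.dump(df, f)

print('Statistical features done!')

Statistical features done!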
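To check that the saved features can be read back unchanged, a round trip like the one below can be used. It writes a throwaway frame into a temporary directory (the `features` subfolder name is an arbitrary choice for this sketch) and opens the file with `with` so the handle is always closed.

```python
import os
import pickle
import tempfile

import pandas as pd

toy_df = pd.DataFrame({'CUST_NO': [1, 2], 'label': [0, 1]})

with tempfile.TemporaryDirectory() as tmp_dir:
    out_dir = os.path.join(tmp_dir, 'features')
    os.makedirs(out_dir, exist_ok=True)  # no error if the directory already exists
    out_path = os.path.join(out_dir, 'statistical_features_1.pkl')

    with open(out_path, 'wb') as f:
        pickle.dump(toy_df, f)

    with open(out_path, 'rb') as f:
        restored = pickle.load(f)

print(restored.equals(toy_df))
```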

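- To be continued:

Electricity-Fee Sensitivity Data Mining, Part 2: Building Text Features

Electricity-Fee Sensitivity Data Mining, Part 3: Building the Low-Sensitivity User Model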