Triplet Selection

Ideal sample selection:

a minimal number of exemplars of any one identity is present in each mini-batch

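In other words, every identity that appears in a mini-batch must be represented by at least some minimum number of images, so that anchor-positive pairs can be formed from it.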

In our experiments we sample the training data such that around 40 faces are selected per identity per minibatch. Additionally, randomly sampled negative faces are added to each mini-batch.

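Each identity contributes around 40 images to a mini-batch. These images first form the A-P pairs, and randomly sampled negatives are then added to the mini-batch to complete the (A, P, N) triplets.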
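The per-identity sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions: the dataset is assumed to be a dict mapping each identity to a list of image paths, and `sample_batch` and its parameters are hypothetical names, not the FaceNet code. It keeps each identity's images contiguous, which is the layout `select_triplets` relies on. It does not model the extra randomly sampled negative faces the paper mentions; here negatives simply come from the other identities in the batch.

```python
import random


def sample_batch(dataset, people_per_batch, images_per_person, seed=None):
    """Hypothetical sketch: pick identities, then up to images_per_person images of each.

    dataset: dict mapping identity -> list of image paths (assumed layout).
    Returns (image_paths, nrof_images_per_class); each identity's images are
    stored contiguously, one block per identity.
    """
    rng = random.Random(seed)
    # Choose which identities appear in this mini-batch
    people = rng.sample(sorted(dataset), people_per_batch)
    image_paths, nrof_images_per_class = [], []
    for person in people:
        paths = dataset[person]
        # Take as many images as requested, or all of them if the identity has fewer
        k = min(images_per_person, len(paths))
        image_paths.extend(rng.sample(paths, k))
        nrof_images_per_class.append(k)
    return image_paths, nrof_images_per_class
```

With `images_per_person=40`, this mirrors the "around 40 faces per identity" setting; identities with fewer images simply contribute all they have.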

Instead of picking the hardest positive, we use all anchor-positive pairs in a mini-batch while still selecting the hard negatives. We found in practice that the all anchor-positive method was more stable and converged slightly faster at the beginning of training.

In short: use all A-P pairs, plus the A-N pairs that satisfy the selection condition.
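Using all anchor-positive pairs means that an identity with n images in the batch contributes n(n-1)/2 pairs, for example 780 pairs for 40 images. The hypothetical helper below enumerates them with the same index arithmetic (`emb_start_idx`, `a_idx`, `p_idx`) that `select_triplets` uses.

```python
def anchor_positive_pairs(nrof_images_per_class):
    """Return every (a_idx, p_idx) pair with a_idx < p_idx inside each class,
    following the loop structure of select_triplets (hypothetical helper)."""
    pairs = []
    emb_start_idx = 0
    for n in nrof_images_per_class:
        for j in range(1, n):
            # Anchor is the (j-1)-th image of this class
            a_idx = emb_start_idx + j - 1
            for p in range(j, n):
                pairs.append((a_idx, emb_start_idx + p))
        # Next class's images start right after this block
        emb_start_idx += n
    return pairs
```

For two classes with 3 and 2 images, this yields `(0, 1), (0, 2), (1, 2), (3, 4)`: three pairs from the first class and one from the second.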

Procedure:

First, sample a set of images from the training set into a mini-batch, compute their embeddings with the current state of the network, and store them in emb_array.

Each mini-batch contains several classes (identities), and each class has several images.

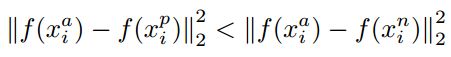
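Computing emb_array and locating each class inside it can be sketched as below. This is an illustration under stated assumptions: `embed_fn` is a hypothetical stand-in for a forward pass of the current network (the real training code runs the model instead), and `compute_emb_array` and `class_offsets` are hypothetical names. The offsets correspond to the values `emb_start_idx` takes in `select_triplets`.

```python
import numpy as np


def compute_emb_array(images, embed_fn, batch_size):
    """Embed images in chunks with the current model and stack the rows,
    keeping the input order so each identity's images stay contiguous.

    embed_fn (hypothetical) maps an (n, ...) array of images to an (n, D)
    array of embeddings.
    """
    chunks = [embed_fn(images[i:i + batch_size])
              for i in range(0, len(images), batch_size)]
    return np.concatenate(chunks, axis=0)


def class_offsets(nrof_images_per_class):
    """Row in emb_array where each identity's block of images starts."""
    counts = np.asarray(nrof_images_per_class, dtype=int)
    return np.concatenate(([0], np.cumsum(counts)[:-1]))
```

For classes with 3, 2 and 4 images, `class_offsets([3, 2, 4])` gives the start rows 0, 3 and 5.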

Condition the (A, N) pair must satisfy:

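`all_neg = np.where(neg_dists_sqr - pos_dist_sqr < alpha)[0]` (VGG Face selection; `alpha` is the triplet-loss margin). A negative is kept if its squared distance to the anchor exceeds the anchor-positive squared distance by less than `alpha`.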
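Written with squared embedding distances, where $f$ denotes the embedding network (notation introduced here for illustration), the condition keeps exactly the negatives that still produce a positive hinge triplet loss. The stricter FaceNet semi-hard rule, left commented out in the code, additionally requires the negative to be farther from the anchor than the positive:

```latex
d_{ap} = \lVert f(x^a) - f(x^p) \rVert_2^2, \qquad
d_{an} = \lVert f(x^a) - f(x^n) \rVert_2^2

\text{VGG Face rule (used in the code):}\quad
d_{an} - d_{ap} < \alpha
\;\Longleftrightarrow\;
\max(d_{ap} - d_{an} + \alpha,\ 0) > 0

\text{FaceNet semi-hard rule (commented out):}\quad
d_{ap} < d_{an} < d_{ap} + \alpha
```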

```python
import numpy as np


def select_triplets(embeddings, nrof_images_per_class, image_paths, people_per_batch, alpha):
    """ Select the triplets for training

    embeddings: emb_array, one row per image, each identity's images stored contiguously
    people_per_batch: number of identities in the mini-batch
    alpha: triplet-loss margin
    """
    trip_idx = 0
    # Index in embeddings where the current person's images start
    emb_start_idx = 0
    num_trips = 0
    triplets = []

    # VGG Face: Choosing good triplets is crucial and should strike a balance between
    # selecting informative (i.e. challenging) examples and swamping training with examples that
    # are too hard. This is achieved by extending each pair (a, p) to a triplet (a, p, n) by sampling
    # the image n at random, but only between the ones that violate the triplet loss margin. The
    # latter is a form of hard-negative mining, but it is not as aggressive (and much cheaper) than
    # choosing the maximally violating example, as often done in structured output learning.

    # Loop over every person in the batch
    for i in range(people_per_batch):
        # Number of images this person has
        nrof_images = int(nrof_images_per_class[i])
        # Loop over this person's images; image j-1 (within the class) is the anchor
        for j in range(1, nrof_images):
            # Position of the anchor's embedding in embeddings
            a_idx = emb_start_idx + j - 1
            # Squared Euclidean distance from the anchor to every image in the batch
            neg_dists_sqr = np.sum(np.square(embeddings[a_idx] - embeddings), 1)
            # Loop over every possible (anchor, positive) pair, written (a, p)
            for pair in range(j, nrof_images):  # For every possible positive pair.
                # Position of the positive's embedding in embeddings
                p_idx = emb_start_idx + pair
                # Squared Euclidean distance between a and p
                pos_dist_sqr = np.sum(np.square(embeddings[a_idx] - embeddings[p_idx]))
                # Images of the same person must not be negatives: set their distances to NaN,
                # so every comparison involving them below evaluates to False
                neg_dists_sqr[emb_start_idx:emb_start_idx + nrof_images] = np.nan
                #all_neg = np.where(np.logical_and(neg_dists_sqr-pos_dist_sqr<alpha, pos_dist_sqr<neg_dists_sqr))[0]  # FaceNet selection
                # Among other people's images, keep those whose distance to a minus the (a, p) distance is below alpha
                all_neg = np.where(neg_dists_sqr - pos_dist_sqr < alpha)[0]  # VGG Face selection
                # Number of candidate negatives
                nrof_random_negs = all_neg.shape[0]
                # If at least one negative satisfies the condition
                if nrof_random_negs > 0:
                    # Pick one of them at random as n
                    rnd_idx = np.random.randint(nrof_random_negs)
                    n_idx = all_neg[rnd_idx]
                    # Keep (a, p, n) as a triplet
                    triplets.append((image_paths[a_idx], image_paths[p_idx], image_paths[n_idx]))
                    #print('Triplet %d: (%d, %d, %d), pos_dist=%2.6f, neg_dist=%2.6f (%d, %d, %d, %d, %d)' %
                    #    (trip_idx, a_idx, p_idx, n_idx, pos_dist_sqr, neg_dists_sqr[n_idx], nrof_random_negs, rnd_idx, i, j, emb_start_idx))
                    trip_idx += 1

                # Counts every (a, p) pair, whether or not a negative was found
                num_trips += 1

        # Move to the first image of the next person
        emb_start_idx += nrof_images

    np.random.shuffle(triplets)
    return triplets, num_trips, len(triplets)
```

(More explanation: http://www.cnblogs.com/irenelin/p/7831338.html)
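To see how the NaN masking and the two selection rules behave, here is a small self-contained sketch. `candidate_negatives` is a hypothetical helper that isolates the `np.where` step of `select_triplets`; the distances in the example are made-up toy values.

```python
import numpy as np


def candidate_negatives(neg_dists_sqr, pos_dist_sqr, alpha, semi_hard=False):
    """Indices of admissible negatives for one (a, p) pair (hypothetical helper).

    Entries set to NaN (same-identity images) drop out automatically,
    because every comparison involving NaN evaluates to False.
    """
    diff = neg_dists_sqr - pos_dist_sqr
    if semi_hard:
        # FaceNet semi-hard rule: d_ap < d_an < d_ap + alpha
        mask = np.logical_and(diff < alpha, pos_dist_sqr < neg_dists_sqr)
    else:
        # VGG Face rule: d_an - d_ap < alpha
        mask = diff < alpha
    return np.where(mask)[0]
```

With `neg_dists_sqr = np.array([np.nan, np.nan, 0.5, 0.9, 1.5])`, `pos_dist_sqr = 0.8` and `alpha = 0.2`, the VGG Face rule returns indices `[2, 3]`, while the semi-hard rule returns only `[3]`, because image 2 is already closer to the anchor than the positive. The FaceNet paper notes that selecting the hardest negatives early in training can lead to bad local minima (a collapsed model), which motivates the semi-hard variant.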

Reposted from: http://blog.csdn.net/baidu_27643275/article/details/79222206