(Hands-On) TensorFlow 2.0 + the Titanic CSV Dataset: Understanding dataset for Data Preprocessing

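What will we learn from this post?

- How to build a dataset from CSV data

- How to normalize the input data when using a dataset

- Commonly used dataset options, such as batch_size and num_epochs

Steps

- Download the Titanic passenger CSV files from the web and cache them locally

- Load the downloaded CSV files into a dataset

- Preprocess the data in the dataset

- Use the processed data for training and testing

First, import the required packages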

import functools

import numpy as np

import tensorflow as tf

import tensorflow_datasets as tfds

- If tensorflow_datasets is not installed, you can install it with this command: pip install tensorflow_datasets

Download the Titanic CSV dataset and take a look at it

TRAIN_DATA_URL = "https://storage.googleapis.com/tf-datasets/titanic/train.csv"

TEST_DATA_URL = "https://storage.googleapis.com/tf-datasets/titanic/eval.csv"

train_file_path = tf.keras.utils.get_file("train.csv", TRAIN_DATA_URL)

test_file_path = tf.keras.utils.get_file("eval.csv", TEST_DATA_URL)

np.set_printoptions(precision=3, suppress=True)  # make numpy output easier to read

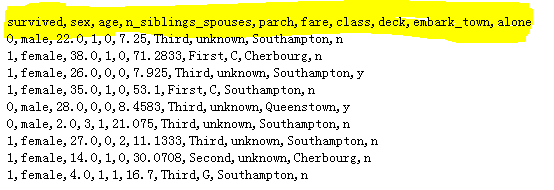

!head {train_file_path}

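- The screenshot shows what the dataset looks like: the first row holds the column names, and head prints the first 10 lines by default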
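The !head line only works inside Jupyter/IPython on a system that has the head command. Outside a notebook, a few lines of plain Python give the same preview. This is a minimal sketch: the helper name preview_lines is made up for illustration, and the demo runs on a throwaway file rather than the real train.csv.

```python
import os
import tempfile
from itertools import islice


def preview_lines(path, n=10):
    """Return the first n lines of a text file (hypothetical helper, not a TensorFlow API)."""
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in islice(f, n)]


# Demo on a temporary 15-line file; in this post you would pass train_file_path instead.
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False, encoding="utf-8") as tmp:
    tmp.write("\n".join(f"line {i}" for i in range(15)))
demo_lines = preview_lines(tmp.name)
os.remove(tmp.name)

for line in demo_lines:
    print(line)
```

Calling preview_lines(train_file_path) after the download step should show the header row followed by the first nine passenger records.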

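Loading the data into a dataset

Before the code, let's look at what we can control when building a dataset from a CSV file:

- If the CSV file has no header row, the column names can be supplied

- We specify which column is the label for each sample (the label is y; here it is the value that says whether the passenger survived)

- Training works in batches, and the dataset lets us set the number of samples per batch

- If missing values are marked with a special string, that string can be declared as an extra NA/NaN marker

- If a CSV record cannot be parsed, it can be skipped

- Columns that are not useful can be left out of the dataset

- The number of times the dataset is repeated can be set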

LABEL_COLUMN = 'survived'

LABELS = [0, 1]

def get_dataset(file_path):

    dataset = tf.data.experimental.make_csv_dataset(

        file_path,

        batch_size=12,

        label_name=LABEL_COLUMN,

        na_value="?",

        num_epochs=1,

        ignore_errors=True)

    return dataset

raw_train_data = get_dataset(train_file_path)

raw_test_data = get_dataset(test_file_path)

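- batch_size: the number of samples in each batch

- label_name: the column to use as the label (the target y that the model learns to predict)

- na_value: an additional string to treat as NA/NaN; here a "?" in the CSV is read as a missing value

- num_epochs: the number of times to repeat the dataset

- ignore_errors: whether to stop or carry on when a record cannot be parsed; True skips the bad record

- select_columns: keep only the listed columns; anything not listed is left out of the dataset

- column_names: the column names; use this when the file has no header row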
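To make these parameters concrete, here is a plain-Python sketch of the shape of the data such a dataset yields: each element is a (features, labels) pair, where features maps a column name to one batch of values. It is not how make_csv_dataset is implemented. The rows are invented for illustration, the helper iter_batches is hypothetical, values are left as strings, and missing values become None, whereas the real function parses types, fills type-dependent defaults, and shuffles by default.

```python
import csv
import io

# Illustrative rows only, not real records from train.csv.
SAMPLE_CSV = """survived,sex,age,fare
0,male,22.0,7.25
1,female,38.0,71.28
1,female,?,7.92
0,male,35.0,8.05
1,male,28.0,?
"""


def iter_batches(text, batch_size, label_name, na_value="?", select_columns=None):
    """Yield (features, labels) pairs, column-wise, one batch at a time."""
    rows = list(csv.DictReader(io.StringIO(text)))
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        # The label column is split off from the features.
        labels = [int(r[label_name]) for r in chunk]
        columns = [c for c in chunk[0]
                   if c != label_name and (select_columns is None or c in select_columns)]
        # Any cell equal to na_value is treated as missing.
        features = {c: [None if r[c] == na_value else r[c] for r in chunk]
                    for c in columns}
        yield features, labels


batches = list(iter_batches(SAMPLE_CSV, batch_size=2, label_name="survived"))
first_features, first_labels = batches[0]
print(first_features)
print(first_labels)
```

With five rows and batch_size=2 the sketch produces three batches, the last one holding a single row.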

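Data preprocessing

There are two kinds of data to preprocess: categorical data, such as sex, and continuous data, such as age

- Handling categorical data:

First, define the possible values of each categorical feature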

CATEGORIES = {

    'sex': ['male', 'female'],

    'class' : ['First', 'Second', 'Third'],

    'deck' : ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J'],

    'embark_town' : ['Cherbourg', 'Southampton', 'Queenstown'],

    'alone' : ['y', 'n']

}

Then add them to categorical_columns

categorical_columns = []

for feature, vocab in CATEGORIES.items():

    cat_col = tf.feature_column.categorical_column_with_vocabulary_list(

        key=feature, vocabulary_list=vocab)

    categorical_columns.append(tf.feature_column.indicator_column(cat_col))
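An indicator column built on a vocabulary list amounts to one-hot encoding: each string becomes a vector with a 1 at the position of its vocabulary entry. The NumPy sketch below illustrates the idea only and is not the TensorFlow implementation. The helper one_hot is hypothetical, and the all-zero row for a value outside the vocabulary reflects my understanding of the default settings, so treat that part as an assumption.

```python
import numpy as np

SEX_VOCAB = ['male', 'female']  # same vocabulary as CATEGORIES['sex']


def one_hot(values, vocab):
    """Encode each string as a one-hot row over vocab; unknown values stay all zeros."""
    index = {word: i for i, word in enumerate(vocab)}
    encoded = np.zeros((len(values), len(vocab)), dtype=np.float32)
    for row, value in enumerate(values):
        if value in index:
            encoded[row, index[value]] = 1.0
    return encoded


encoded_sex = one_hot(['female', 'male', 'unknown'], SEX_VOCAB)
print(encoded_sex)
```

This is why a misspelled vocabulary entry matters: a value that never matches the list contributes no signal to the model.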

- Handling continuous data

Define the normalization function

def process_continuous_data(mean, data):

    # Normalize the data: dividing by 2*mean maps the column mean to 0.5

    data = tf.cast(data, tf.float32) * 1/(2*mean)

    return tf.reshape(data, [-1, 1])
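To see what this scaling does to actual numbers, here is a NumPy stand-in for process_continuous_data. It is a sketch, not the TensorFlow code: scale_by_twice_mean is a hypothetical name, and AGE_MEAN is the mean age quoted in this post. Note that this is a simple rescaling by 1/(2*mean), not a z-score standardization.

```python
import numpy as np


def scale_by_twice_mean(mean, data):
    """NumPy stand-in for process_continuous_data: divide by 2*mean, return one column."""
    data = np.asarray(data, dtype=np.float32) / (2 * mean)
    return data.reshape(-1, 1)


AGE_MEAN = 29.631308  # mean age used in this post
scaled_age = scale_by_twice_mean(AGE_MEAN, [0.0, AGE_MEAN, 2 * AGE_MEAN])
print(scaled_age.ravel())
```

An age of zero stays at zero, the mean age lands at roughly 0.5, and twice the mean lands at roughly 1, so most values of a non-negative feature end up in a small range around 0.5.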

Then define the mean values that are passed to the function

MEANS = {

    'age' : 29.631308,

    'n_siblings_spouses' : 0.545455,

    'parch' : 0.379585,

    'fare' : 34.385399

}

Finally, add them to numerical_columns

numerical_columns = []

for feature in MEANS.keys():

    num_col = tf.feature_column.numeric_column(feature, normalizer_fn=functools.partial(process_continuous_data, MEANS[feature]))

    numerical_columns.append(num_col)
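The loop relies on functools.partial to turn the two-argument process_continuous_data into a one-argument function, which is the form normalizer_fn is called with. The sketch below shows that binding on plain Python numbers. The names scale, DEMO_MEANS and normalizers are made up for illustration, and the two means are copied from MEANS.

```python
import functools


def scale(mean, value):
    """Same arithmetic as process_continuous_data, applied to a plain number."""
    return value / (2 * mean)


DEMO_MEANS = {'age': 29.631308, 'fare': 34.385399}  # two entries copied from MEANS

# partial fixes the first argument (mean) and leaves a function of the data only.
normalizers = {name: functools.partial(scale, mean)
               for name, mean in DEMO_MEANS.items()}

print(normalizers['age'](29.631308))
print(normalizers['fare'](34.385399))
```

Each feature gets its own normalizer with its own mean baked in, so the mean must be the first parameter of the function being wrapped.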

- With both kinds of columns defined, combine them in a DenseFeatures layer to get preprocessing_layer

preprocessing_layer = tf.keras.layers.DenseFeatures(categorical_columns+numerical_columns)

With the data prepared, train and test

Put preprocessing_layer into the model

model = tf.keras.Sequential([

    preprocessing_layer,

    tf.keras.layers.Dense(128, activation='relu'),

    tf.keras.layers.Dense(128, activation='relu'),

    tf.keras.layers.Dense(1, activation='sigmoid'),

])

model.compile(

    loss='binary_crossentropy',

    optimizer='adam',

    metrics=['accuracy'])

model.fit(raw_train_data.shuffle(500), epochs=10)  # shuffle the data before training
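The model ends in a single sigmoid unit, so each output is a survival probability, and the compile call scores it with binary cross-entropy and accuracy. The NumPy sketch below spells out those two definitions on made-up numbers. It is an illustration under stated assumptions, not Keras's implementation: the function names, the eps clipping value, and the 0.5 threshold are all choices made for this example.

```python
import numpy as np


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; eps keeps log() away from zero."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1 - eps)
    losses = -(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
    return float(np.mean(losses))


def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the label."""
    predictions = (np.asarray(y_prob) > threshold).astype(int)
    return float(np.mean(predictions == np.asarray(y_true)))


# Made-up labels and predicted probabilities, purely for illustration.
demo_labels = [1, 0, 1, 0]
demo_probs = [0.9, 0.2, 0.4, 0.6]
demo_loss = binary_crossentropy(demo_labels, demo_probs)
demo_acc = accuracy(demo_labels, demo_probs)
print(demo_loss, demo_acc)
```

Two of the four predictions fall on the wrong side of the threshold, so accuracy is 0.5, while the loss also reflects how confident each prediction was.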