Deep Learning: A Concrete Implementation of Style Transfer

The npy file

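A pretrained VGG-16 model can be downloaded online as an npy file. The file stores the trained parameters, about 130 million of them. An npy file is a file saved by Python's NumPy library, so NumPy can read it back directly. Here the saved object is a pickled Python dict, which is why the code passes allow_pickle=True and then calls .item() on the loaded array.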

import numpy as np

data = np.load(r'D:\BaiduNetdiskDownload\vgg16.npy',allow_pickle=True,encoding='latin1')

dict_data = data.item()

print(dict_data.keys())

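'''
Result: the names of all the convolutional and fully connected layers are printed.
Each name is the key used to fetch that layer's weights and biases.

dict_keys(['conv5_1', 'fc6', 'conv5_3',
'conv5_2', 'fc8', 'fc7', 'conv4_1',
'conv4_2', 'conv4_3', 'conv3_3',
'conv3_2', 'conv3_1', 'conv1_1',
'conv1_2', 'conv2_2', 'conv2_1'])
'''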
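To make the file layout concrete without downloading the real model, here is a minimal sketch that saves and reloads a tiny stand-in dict. The name `fake_params` and the temporary file are made up for illustration; the sketch assumes, as the network code in this post does, that each layer name maps to a `[weights, biases]` pair.

```python
import os
import tempfile

import numpy as np

# Hypothetical stand-in for vgg16.npy: each layer name maps to [weights, biases].
# The shapes are the usual VGG-16 shapes for conv1_1 and conv1_2.
fake_params = {
    'conv1_1': [np.zeros((3, 3, 3, 64), np.float32), np.zeros(64, np.float32)],
    'conv1_2': [np.zeros((3, 3, 64, 64), np.float32), np.zeros(64, np.float32)],
}

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'fake_vgg16.npy')
    # Saving a dict wraps it in a 0-d object array, which NumPy stores via pickle.
    np.save(path, fake_params, allow_pickle=True)
    loaded = np.load(path, allow_pickle=True, encoding='latin1')
    dict_data = loaded.item()  # unwrap the 0-d array back into a dict

weights, biases = dict_data['conv1_1']
layer_shapes = {name: (w.shape, b.shape) for name, (w, b) in dict_data.items()}
print(layer_shapes)
```

Index 0 of each entry holds the kernel or weight matrix and index 1 holds the bias vector.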

Building the network architecture

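'''
The network code runs to a little over 200 lines. Its main steps are:
read the source images to be converted
define the model class
instantiate and call the model class
build the loss functions
set up gradient descent
open a session
run the training
save the images
'''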

import os

import numpy as np

import tensorflow as tf

from PIL import Image

import time

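# Hyperparameters
VGG_MEAN = [103.939, 116.778, 123.68]  # per-channel means, used in B, G, R order
path_Vgg16 = 'vgg16.npy'  # path to the vgg16.npy model file
output_dir = './run_style_trainsfer'
if not os.path.exists(output_dir):
    os.mkdir(output_dir)
num_steps = 201
learning_rate = 10
lambda_c = 1   # weight of the content loss
lambda_s = 20  # weight of the style loss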

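# Read an image, applying two preprocessing steps:
# 1. convert any non-RGB image to RGB
# 2. resize the image to 224 x 224, giving an array of shape (224, 224, 3)
def read_img(img_name):
    img = Image.open(img_name)
    # VGG-16 expects a 224 x 224 x 3 input, so other images must be converted first
    if img.mode != "RGB":
        img = img.convert("RGB")
    # Resize; Image.LANCZOS is the same filter as the ANTIALIAS alias removed in Pillow 10
    img = img.resize((224, 224), Image.LANCZOS)
    np_img = np.array(img)  # (224, 224, 3)
    np_img = np.asarray([np_img], dtype=np.int32)  # (1, 224, 224, 3)
    return np_img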
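The last two lines of read_img only add a batch axis and change the dtype. A minimal NumPy sketch of that step, using a random array as a hypothetical stand-in for a decoded image:

```python
import numpy as np

# Hypothetical 224 x 224 RGB image with 8-bit pixels, standing in for np.array(img).
rng = np.random.default_rng(0)
np_img = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)

# Wrapping the array in a list adds the leading batch axis, as read_img does.
batched = np.asarray([np_img], dtype=np.int32)

# np.expand_dims is an equivalent, more explicit way to add that axis.
batched_alt = np.expand_dims(np_img, axis=0).astype(np.int32)

print(batched.shape, batched.dtype)
```

Both forms give the (1, 224, 224, 3) shape that the network input expects.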

# Content image (a 4-D nested array)

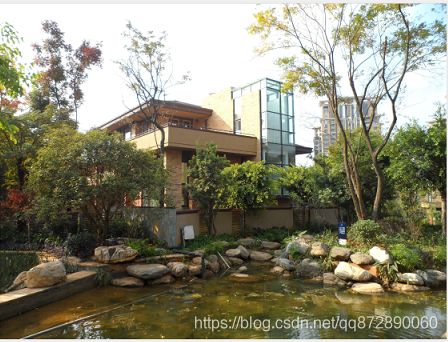

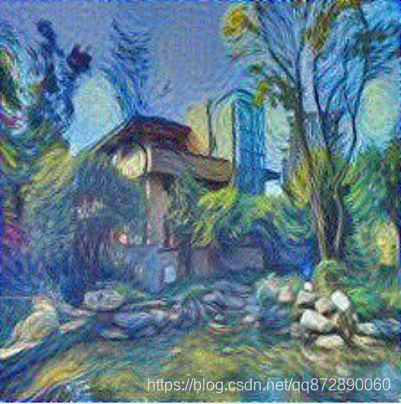

content_val = read_img(input('Enter the content image filename: '))

# Style image
style_val = read_img(input('Enter the style image filename: '))

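# Create an initial matrix drawn from a truncated normal distribution
def initial_result(shape, mean, stddev):
    initial = tf.truncated_normal(shape, mean=mean, stddev=stddev)
    return tf.Variable(initial)

# Initialize the pixel matrix of the synthesized image
result = initial_result((1, 224, 224, 3), 127.5, 20)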
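To see what this initialization produces, here is a rough NumPy sketch of truncated-normal sampling. It assumes the documented behaviour that values more than two standard deviations from the mean are dropped and re-drawn; the helper `truncated_normal` is written for illustration and is not TensorFlow's implementation.

```python
import numpy as np

def truncated_normal(shape, mean, stddev, seed=0):
    """Draw normal samples and re-draw any that fall outside mean +/- 2 * stddev."""
    rng = np.random.default_rng(seed)
    samples = rng.normal(mean, stddev, size=shape)
    outside = np.abs(samples - mean) > 2 * stddev
    while outside.any():
        # Replace only the out-of-range entries with fresh draws.
        samples[outside] = rng.normal(mean, stddev, size=int(outside.sum()))
        outside = np.abs(samples - mean) > 2 * stddev
    return samples.astype(np.float32)

initial = truncated_normal((1, 224, 224, 3), mean=127.5, stddev=20)
print(initial.shape, initial.min() >= 87.5, initial.max() <= 167.5)
```

With mean 127.5 and stddev 20, every starting pixel lies between 87.5 and 167.5, so the synthesized image begins as mid-gray noise.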

# Build the VGG neural network model
class VGGNet:
    def __init__(self, data_dict):
        self.data_dict = data_dict

    # Get the convolution kernel of a conv layer
    def get_conv_filter(self, name):
        return tf.constant(self.data_dict[name][0], name='conv')

    # Get the weights of a fully connected layer
    def get_fc_weight(self, name):
        return tf.constant(self.data_dict[name][0], name='fc')

    # Get the bias
    def get_bias(self, name):
        return tf.constant(self.data_dict[name][1], name='bias')

    # Build a convolutional layer
    def conv_layer(self, x, name):
        with tf.name_scope(name):
            conv_w = self.get_conv_filter(name)
            conv_b = self.get_bias(name)
            h = tf.nn.conv2d(x, conv_w, [1, 1, 1, 1], padding='SAME')
            h = tf.nn.bias_add(h, conv_b)
            h = tf.nn.relu(h)
            return h

    # Pooling layer
    def pooling_layer(self, x, name):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

    # Build a fully connected layer
    def fc_layer(self, x, name, activation=tf.nn.relu):
        with tf.name_scope(name):
            fc_w = self.get_fc_weight(name)
            fc_b = self.get_bias(name)
            h = tf.matmul(x, fc_w)
            h = tf.nn.bias_add(h, fc_b)
            if activation is None:
                return h
            else:
                return activation(h)

    # Build the flatten layer
    def flatten_layer(self, x, name):
        with tf.name_scope(name):
            x_shape = x.get_shape().as_list()
            dim = 1
            # Skip the batch dimension so only height, width and channels are multiplied
            for d in x_shape[1:]:
                dim *= d
            x = tf.reshape(x, [-1, dim])
            return x

    # Model-building method: calls the layer builders above to assemble the network
    def build(self, x_rgb):
        start_time = time.time()
        print('Build started')
        # Split into channels, subtract the per-channel means, and reorder RGB to BGR
        r, g, b = tf.split(x_rgb, [1, 1, 1], axis=3)
        x_bgr = tf.concat([b - VGG_MEAN[0],
                           g - VGG_MEAN[1],
                           r - VGG_MEAN[2]],
                          axis=3)
        assert x_bgr.get_shape().as_list()[1:] == [224, 224, 3]
        self.conv1_1 = self.conv_layer(x_bgr, 'conv1_1')
        self.conv1_2 = self.conv_layer(self.conv1_1, 'conv1_2')
        self.pool1 = self.pooling_layer(self.conv1_2, 'pool1')
        self.conv2_1 = self.conv_layer(self.pool1, 'conv2_1')
        self.conv2_2 = self.conv_layer(self.conv2_1, 'conv2_2')
        self.pool2 = self.pooling_layer(self.conv2_2, 'pool2')
        self.conv3_1 = self.conv_layer(self.pool2, 'conv3_1')
        self.conv3_2 = self.conv_layer(self.conv3_1, 'conv3_2')
        self.conv3_3 = self.conv_layer(self.conv3_2, 'conv3_3')
        self.pool3 = self.pooling_layer(self.conv3_3, 'pool3')
        self.conv4_1 = self.conv_layer(self.pool3, 'conv4_1')
        self.conv4_2 = self.conv_layer(self.conv4_1, 'conv4_2')
        self.conv4_3 = self.conv_layer(self.conv4_2, 'conv4_3')
        self.pool4 = self.pooling_layer(self.conv4_3, 'pool4')
        self.conv5_1 = self.conv_layer(self.pool4, 'conv5_1')
        self.conv5_2 = self.conv_layer(self.conv5_1, 'conv5_2')
        self.conv5_3 = self.conv_layer(self.conv5_2, 'conv5_3')
        self.pool5 = self.pooling_layer(self.conv5_3, 'pool5')
        # Style transfer does not use the fully connected layers,
        # so they are commented out to shorten graph construction
        '''
        self.flatten5 = self.flatten_layer(self.pool5, 'flatten')
        self.fc6 = self.fc_layer(self.flatten5, 'fc6')
        self.fc7 = self.fc_layer(self.fc6, 'fc7')
        self.fc8 = self.fc_layer(self.fc7, 'fc8', activation=None)
        self.prob = tf.nn.softmax(self.fc8, name='prob')
        '''
        print('Build finished', time.time() - start_time)
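It can help to know the size of each feature map before picking layers for the losses. The sketch below traces shapes through the five conv blocks for a 224 x 224 input. The channel counts are the standard VGG-16 ones; `VGG16_BLOCKS` and `trace_shapes` are illustrative names, not part of the model code.

```python
# Layer plan of the convolutional part: (block name, conv layers, output channels).
VGG16_BLOCKS = [
    ('block1', 2, 64),
    ('block2', 2, 128),
    ('block3', 3, 256),
    ('block4', 3, 512),
    ('block5', 3, 512),
]

def trace_shapes(height=224, width=224):
    """Return (height, width, channels) after each block's convs and after its pool."""
    shapes = {}
    for name, _num_convs, channels in VGG16_BLOCKS:
        # 3x3 convolutions with stride 1 and 'SAME' padding keep height and width.
        shapes[name + '_conv'] = (height, width, channels)
        # 2x2 max pooling with stride 2 and 'SAME' padding halves each side, rounding up.
        height, width = -(-height // 2), -(-width // 2)
        shapes[name + '_pool'] = (height, width, channels)
    return shapes

for layer, shape in trace_shapes().items():
    print(layer, shape)
```

For example, conv3_3 comes out at 56 x 56 x 256, so its Gram matrix is 256 x 256.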

# Placeholders
content = tf.placeholder(tf.float32, shape=[1, 224, 224, 3])
style = tf.placeholder(tf.float32, shape=[1, 224, 224, 3])

# Load the model parameters
data_dict = np.load(path_Vgg16, allow_pickle=True, encoding='latin1').item()

# Instantiate one network per image
vgg_for_content = VGGNet(data_dict)
vgg_for_style = VGGNet(data_dict)
vgg_for_result = VGGNet(data_dict)

# Build the graphs
vgg_for_content.build(content)
vgg_for_style.build(style)
vgg_for_result.build(result)
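Each build call starts by converting its RGB input into mean-centered BGR. A minimal NumPy sketch of that one step, tried on a single red pixel; `rgb_to_centered_bgr` is a name invented here for illustration.

```python
import numpy as np

VGG_MEAN = [103.939, 116.778, 123.68]  # B, G, R means, as in the hyperparameters

def rgb_to_centered_bgr(x_rgb):
    """Mirror the tf.split / tf.concat step of build on an (N, H, W, 3) array."""
    r, g, b = np.split(x_rgb.astype(np.float32), 3, axis=3)
    return np.concatenate([b - VGG_MEAN[0], g - VGG_MEAN[1], r - VGG_MEAN[2]], axis=3)

# Hypothetical single-pixel batch: one pure red pixel.
pixel = np.array([[[[255, 0, 0]]]], dtype=np.int32)
centered = rgb_to_centered_bgr(pixel)
print(centered)
```

The red value ends up in the last channel, after the blue and green channels, each with its own mean subtracted.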

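# Which features to extract
'''
In testing, the first and second conv blocks of the content image generally capture the actual content,
while blocks 3, 4 and 5 yield edge-and-corner features that are hard to interpret by eye.
For the style image, the fourth block generally blends well with the content.
Adjusting the weight coefficients changes which image carries more weight in the result.
'''
content_features = [
    # vgg_for_content.conv1_2,
    vgg_for_content.conv2_2,
    vgg_for_content.conv3_3,
    # vgg_for_content.conv4_3,
    # vgg_for_content.conv5_3
]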

# Layers from the five conv blocks can be combined freely to synthesize the image.
# Note that the content image and the generated image must use the same layers for content,
# and the style image and the generated image must use the same layers for style.
result_content_features = [
    # vgg_for_result.conv1_2,
    vgg_for_result.conv2_2,
    vgg_for_result.conv3_3,
    # vgg_for_result.conv4_3,
    # vgg_for_result.conv5_3
]

style_features = [
    # vgg_for_style.conv1_2,
    # vgg_for_style.conv2_2,
    vgg_for_style.conv3_3,
    # vgg_for_style.conv4_3,
    # vgg_for_style.conv5_3
]

result_style_features = [
    # vgg_for_result.conv1_2,
    # vgg_for_result.conv2_2,
    vgg_for_result.conv3_3,
    # vgg_for_result.conv4_3,
    # vgg_for_result.conv5_3
]

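# Compute the content loss
content_loss = tf.zeros(1, tf.float32)
# zip pairs up matching layers: a = [1, 2], b = [3, 4] gives (1, 3), (2, 4).
# Each feature map is 4-D, e.g. [1, 32, 32, 3], and is averaged over axes 1, 2, 3.
for c, c_ in zip(content_features, result_content_features):
    content_loss += tf.reduce_mean((c - c_) ** 2, [1, 2, 3])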
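The same computation can be checked by hand in NumPy. This is a small sketch with made-up feature maps, not the real VGG activations:

```python
import numpy as np

def content_loss(content_feats, result_feats):
    """Sum over layers of the squared difference, averaged over axes 1, 2, 3."""
    loss = np.zeros(1, np.float32)
    for c, c_ in zip(content_feats, result_feats):
        loss += np.mean((c - c_) ** 2, axis=(1, 2, 3))
    return loss

# Hypothetical feature maps for two layers, batch size 1.
content_feats = [np.ones((1, 4, 4, 2), np.float32), np.zeros((1, 2, 2, 3), np.float32)]
result_feats = [np.zeros((1, 4, 4, 2), np.float32), np.full((1, 2, 2, 3), 2.0, np.float32)]

print(content_loss(content_feats, result_feats))  # 1.0 + 4.0 -> [5.]
```

Because each layer is averaged rather than summed, a large feature map does not dominate the total just by having more elements.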

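# The Gram matrix measures the correlation between each pair of channels
def gram_matrix(x):
    # Feature maps are laid out as (batch, height, width, channels)
    b, h, w, ch = x.get_shape().as_list()
    features = tf.reshape(x, [b, h * w, ch])
    # Channel-by-channel inner products, normalized by the number of elements
    gram = tf.matmul(features, features, adjoint_a=True) / tf.constant(ch * w * h, tf.float32)
    return gram

style_gram = [gram_matrix(feature) for feature in style_features]
result_style_gram = [gram_matrix(feature) for feature in result_style_features]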
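For readers who want to inspect a Gram matrix directly, here is a NumPy sketch of the same formula on a tiny made-up feature map. For real-valued inputs, transposing the last two axes plays the role of adjoint_a=True.

```python
import numpy as np

def gram_matrix(x):
    """Gram matrix of a (batch, height, width, channels) feature map."""
    b, h, w, ch = x.shape
    features = x.reshape(b, h * w, ch)
    # (b, ch, h*w) @ (b, h*w, ch) -> (b, ch, ch)
    gram = np.matmul(features.transpose(0, 2, 1), features)
    return gram / np.float32(ch * w * h)

# Hypothetical feature map: batch 1, 2 x 2 spatial positions, 3 channels.
x = np.arange(12, dtype=np.float32).reshape(1, 2, 2, 3)
g = gram_matrix(x)
print(g.shape)  # (1, 3, 3): one channel-by-channel matrix per batch item
```

The spatial axes are summed away, so the matrix records which channels fire together but not where. That is why it works as a description of style rather than content.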

# Compute the style loss
style_loss = tf.zeros(1, tf.float32)
for s, s_ in zip(style_gram, result_style_gram):
    style_loss += tf.reduce_mean((s - s_) ** 2, [1, 2])

# Total loss
loss = content_loss * lambda_c + style_loss * lambda_s
# result is the only tf.Variable (the VGG weights are constants),
# so the optimizer updates the image pixels, not the network
train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss)
init_op = tf.global_variables_initializer()
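A learning rate of 10 looks large, but the variable being optimized is an image on a 0 to 255 scale. The sketch below is one Adam step in the textbook form, applied to a toy squared-error problem. It is meant only as an illustration: TensorFlow's optimizer applies epsilon slightly differently, and `adam_step` and the toy targets are made up here.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=10.0, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update in the textbook (Kingma and Ba) form."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** t)  # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)  # bias-corrected second moment
    return param - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

# Hypothetical toy problem: pull three "pixels" toward target values.
target = np.array([0.0, 127.5, 255.0])
pixels = np.array([127.5, 127.5, 127.5])
m = np.zeros_like(pixels)
v = np.zeros_like(pixels)

grad = 2 * (pixels - target)  # gradient of the squared error
pixels, m, v = adam_step(pixels, grad, m, v, t=1)
print(pixels)
```

On the first step, every pixel with a nonzero gradient moves by about the learning rate, here roughly 10 gray levels, whatever the size of its gradient.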

# Open a session
with tf.Session() as sess:
    sess.run(init_op)
    for step in range(num_steps):
        loss_value, content_loss_value, style_loss_value, _ \
            = sess.run([loss, content_loss, style_loss, train_op], feed_dict={
                content: content_val,
                style: style_val
            })
        if step % 10 == 0:
            print(step + 1, loss_value[0], content_loss_value[0], style_loss_value[0])
            # Path where the image is saved
            result_img_path = os.path.join(output_dir, 'result-%05d.jpg' % (step + 1))
            result_val = result.eval(sess)[0]
            # Clip to the valid pixel range before converting to 8-bit
            result_val = np.clip(result_val, 0, 255)
            img_arr = np.asarray(result_val, np.uint8)
            img = Image.fromarray(img_arr)
            img.save(result_img_path)

print('Conversion complete!')
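The clip before the uint8 conversion matters because nothing stops the optimizer from pushing pixel values outside the 0 to 255 range. A small NumPy sketch with made-up values:

```python
import numpy as np

# Hypothetical optimized pixels, some outside the displayable 0..255 range.
result_val = np.array([[-12.7, 0.0, 127.9], [255.0, 300.2, 64.5]], dtype=np.float32)

clipped = np.clip(result_val, 0, 255)    # limit every value to [0, 255]
img_arr = np.asarray(clipped, np.uint8)  # the cast drops the fractional part

print(img_arr)
```

Casting out-of-range floats straight to uint8 does not give dependable results, so clipping first keeps very bright and very dark regions from turning into artifacts.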