cs231n Assignment 1 kNN: Study Notes and Common Pitfalls

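I. The k-Nearest Neighbor (kNN) algorithm

1. Training: the classifier simply memorizes all the training data.

2. Prediction: compute the distance between each test point and every training point, take the k closest training points, and use a majority vote: the label that occurs most often among those k becomes the predicted label.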
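To make the two steps concrete, here is a minimal sketch on toy 2-D points. `TinyKNN` is a hypothetical helper written only for this illustration; it is not the assignment's `KNearestNeighbor` class, and it assumes labels are non-negative integers.

```python
import numpy as np


class TinyKNN:
    """Toy k-nearest-neighbor classifier (hypothetical, for illustration only)."""

    def train(self, X, y):
        # "Training" only stores the data.
        self.X_train = np.asarray(X, dtype=float)
        self.y_train = np.asarray(y)

    def predict(self, X, k=1):
        X = np.asarray(X, dtype=float)
        preds = np.empty(len(X), dtype=self.y_train.dtype)
        for i, x in enumerate(X):
            # Euclidean distance from x to every stored training point.
            dists = np.linalg.norm(self.X_train - x, axis=1)
            nearest = self.y_train[np.argsort(dists)[:k]]
            # Majority vote; np.bincount needs non-negative integer labels.
            preds[i] = np.argmax(np.bincount(nearest))
        return preds


knn = TinyKNN()
knn.train([[0, 0], [0, 1], [5, 5], [6, 5]], [0, 0, 1, 1])
toy_pred = knn.predict([[0.2, 0.1], [5.5, 5.2]], k=3)
print(toy_pred)  # [0 1]
```

With k=3 each test point sees two neighbors from its own cluster and one from the other, so the vote still picks the nearby cluster.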

II. Loading the dataset

1. About the CIFAR10 dataset

CIFAR10 contains 60,000 images in ten classes, 6,000 images per class.

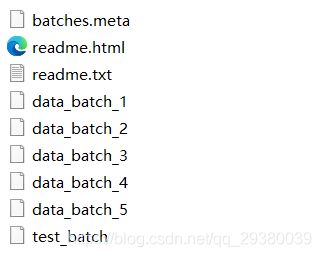

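After downloading and extracting the dataset, you get the files shown above:

batches.meta records the class name for each of the labels 0-9. In the Python version of the dataset, which the loading code in this post reads with pickle, this file is itself a pickle rather than plain ASCII text.

The six batch files (data_batch_1 through data_batch_5, plus test_batch) each hold 10,000 images. Each image is 32×32×3, so the data array in each file contains 10000*3072 byte values.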

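2. Code for loading the dataset

    import os
    import pickle
    import platform

    import numpy as np

    # Unpickle a batch file in a way that works on both Python 2 and Python 3.
    def load_pickle(f):
        version = platform.python_version_tuple()
        if version[0] == '2':
            return pickle.load(f)
        elif version[0] == '3':
            return pickle.load(f, encoding='latin1')
        raise ValueError("invalid python version: {}".format(version))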

    # Load a single batch.
    def load_CIFAR_batch(filename):
        """ load single batch of cifar """
        with open(filename, 'rb') as f:
            datadict = load_pickle(f)  # dict; the keys used here are 'data' and 'labels'
            X = datadict['data']       # 10000 x 3072 array (3072 = 32*32*3)
            Y = datadict['labels']     # 10000 labels, each in the range 0-9
            X = X.reshape(10000, 3, 32, 32).transpose(0, 2, 3, 1).astype("float")
            Y = np.array(Y)
            return X, Y

    # Main loading function: returns the data and labels of the training and test sets.
    def load_CIFAR10(ROOT):
        """ load all of cifar """
        xs = []
        ys = []
        for b in range(1, 6):
            f = os.path.join(ROOT, 'data_batch_%d' % (b, ))
            X, Y = load_CIFAR_batch(f)
            xs.append(X)
            ys.append(Y)
        # Build X_train and y_train.
        Xtr = np.concatenate(xs)  # join the list of five per-batch arrays into one array
        Ytr = np.concatenate(ys)  # same for the labels of the five batches
        del X, Y
        # Load X_test and y_test.
        Xte, Yte = load_CIFAR_batch(os.path.join(ROOT, 'test_batch'))
        return Xtr, Ytr, Xte, Yte
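The `reshape(10000, 3, 32, 32).transpose(0, 2, 3, 1)` step in `load_CIFAR_batch` is easy to get wrong, so here is a small check of what it does. It relies on the layout of the Python-version files, where each 3072-value row stores the red plane first, then green, then blue. The row below is synthetic (constant values per channel), not real CIFAR10 data, and uses a batch of one image instead of 10000.

```python
import numpy as np

# One synthetic CIFAR-style row: 1024 red values, then 1024 green, then 1024 blue.
row = np.concatenate([
    np.full(1024, 10, dtype=np.uint8),   # red plane
    np.full(1024, 20, dtype=np.uint8),   # green plane
    np.full(1024, 30, dtype=np.uint8),   # blue plane
])
batch = row[np.newaxis, :]               # shape (1, 3072)

# Same reshape + transpose as in load_CIFAR_batch, with N = 1 instead of 10000.
images = batch.reshape(1, 3, 32, 32).transpose(0, 2, 3, 1).astype("float")

print(images.shape)      # (1, 32, 32, 3)
print(images[0, 0, 0])   # [10. 20. 30.]
```

After the transpose the last axis is the channel axis, so every pixel carries its own (R, G, B) triple, which is the layout plotting code usually expects.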

III. Computing Euclidean distances

1. Two loops

    def compute_distances_two_loops(self, X):
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                # Two nested loops. np.linalg.norm defaults to ord=2, i.e. the
                # Euclidean distance: square root of the sum of squares.
                dists[i, j] = np.linalg.norm(X[i, :] - self.X_train[j, :])
        return dists

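This uses np.linalg.norm to compute the Euclidean distance.

Explicit Python loops are slow: the number of distance computations stays the same, but every iteration goes through the interpreter, so the next step is to remove loops wherever possible.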

2. One loop

    def compute_distances_one_loop(self, X):
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            # Fill only row i (dists[i, :]); dists[i:] would rewrite every later row too.
            dists[i, :] = np.linalg.norm(X[i, :] - self.X_train, axis=1)
        return dists

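NumPy broadcasting computes the distances between one test point and all training points in a single expression, which removes one level of looping.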
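A small example of the broadcasting step, using made-up points so the distances are easy to verify by hand (3-4-5 triangles):

```python
import numpy as np

X_train = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])   # 3 training points
x = np.array([0.0, 0.0])                                    # one test point

diff = x - X_train                   # (2,) broadcasts against (3, 2) -> (3, 2)
row = np.linalg.norm(diff, axis=1)   # one distance per training point

print(diff.shape)   # (3, 2)
print(row)          # [ 0.  5. 10.]
```

The `axis=1` argument matters: without it, `np.linalg.norm` would collapse the whole (3, 2) array into a single number.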

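3. No loops

    def compute_distances_no_loops(self, X):
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        # dists = sqrt(|x|^2 + |y|^2 - 2 x.y): row-wise squared norms of X, plus
        # row-wise squared norms of X_train, minus 2 * X @ X_train.T
        # (similar to a vector form of the law of cosines).
        # Shapes: (num_test, 1) + (1, num_train) + (num_test, num_train)
        dists += np.sum(np.multiply(X, X), axis=1, keepdims=True).reshape(num_test, 1)
        dists += np.sum(np.multiply(self.X_train, self.X_train), axis=1, keepdims=True).reshape(1, num_train)
        dists += -2 * np.dot(X, self.X_train.T)
        # Round-off can leave tiny negative values; clamp them so sqrt does not return NaN.
        dists = np.sqrt(np.maximum(dists, 0))
        return dists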
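A quick way to gain confidence in the vectorized formula is to compare it against the double loop on small random inputs. The sketch below is standalone (it does not use the classifier class) and the array sizes are arbitrary choices for the check.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 6))        # 4 hypothetical test points
X_train = rng.normal(size=(7, 6))  # 7 hypothetical training points

# Reference: explicit double loop.
ref = np.zeros((4, 7))
for i in range(4):
    for j in range(7):
        ref[i, j] = np.linalg.norm(X[i] - X_train[j])

# Vectorized: |x|^2 + |y|^2 - 2 x.y, then sqrt.
sq_test = np.sum(X ** 2, axis=1, keepdims=True)   # (4, 1)
sq_train = np.sum(X_train ** 2, axis=1)           # (7,), broadcasts as (1, 7)
sq_dists = sq_test + sq_train - 2 * X @ X_train.T
fast = np.sqrt(np.maximum(sq_dists, 0))           # clamp round-off negatives

print(np.allclose(ref, fast))
```

The two results agree up to floating-point round-off, which is why the comparison uses `np.allclose` instead of exact equality.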

IV. Prediction

    def predict_labels(self, dists, k=1):
        num_test = dists.shape[0]
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            # Labels of the k training points closest to test point i.
            closest_y = self.y_train[np.argsort(dists[i, :])[:k]]
            # Flatten to 1-D first; otherwise np.bincount complains the array is too deep.
            y_pred[i] = np.argmax(np.bincount(closest_y.reshape(len(closest_y))))
        return y_pred

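np.argsort sorts row i of the distance matrix; its first k indices select the k nearest neighbors of the i-th test point, and their labels are closest_y.

np.bincount counts how often each label occurs, and np.argmax picks the label with the highest count as the final prediction.

One thing to watch out for: after any NumPy operation, check the shape of the result and reshape it to what the next operation expects. For example, np.bincount only accepts a 1-D array, so if y_train has shape (N, 1) then closest_y must be flattened before counting.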
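The voting step can be traced on a single row of distances. The numbers below are invented for illustration; the second half shows the shape pitfall with column-vector labels.

```python
import numpy as np

y_train = np.array([2, 0, 2, 1, 2])
dists_row = np.array([0.9, 0.1, 0.3, 0.8, 0.2])   # distances from one test point

k = 3
nearest_idx = np.argsort(dists_row)[:k]    # indices of the k smallest distances
closest_y = y_train[nearest_idx]           # labels of those neighbors
label = np.argmax(np.bincount(closest_y))  # most frequent label
print(nearest_idx, closest_y, label)       # [1 4 2] [0 2 2] 2

# Pitfall: with column-vector labels of shape (N, 1), the selection is 2-D
# and must be flattened before np.bincount can count it.
closest_col = y_train.reshape(-1, 1)[nearest_idx]   # shape (3, 1)
label_col = np.argmax(np.bincount(closest_col.ravel()))
```

Note that `np.argmax` returns the first maximum, so a tie between labels is resolved in favor of the smaller label.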

V. Main kNN workflow on the dataset

1. Basic imports and plotting setup

    # Run some setup code for this notebook.
    import random
    import numpy as np
    from cs231n.data_utils import load_CIFAR10
    import matplotlib.pyplot as plt

    # This is a bit of magic to make matplotlib figures appear inline in the notebook
    # rather than in a new window.
    %matplotlib inline
    plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots
    plt.rcParams['image.interpolation'] = 'nearest'
    plt.rcParams['image.cmap'] = 'gray'

    # Some more magic so that the notebook will reload external python modules;
    # see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython
    %load_ext autoreload
    %autoreload 2

2. Keep only 5,000 training samples and 500 test samples

    # Subsample the data for more efficient code execution in this exercise
    # (only part of the data is used so that the algorithm runs faster).
    # X_train, y_train, X_test, y_test are assumed to have been loaded with load_CIFAR10.
    num_training = 5000
    mask = list(range(num_training))
    X_train = X_train[mask]
    y_train = y_train[mask]

    num_test = 500
    mask = list(range(num_test))
    X_test = X_test[mask]
    y_test = y_test[mask]

    # Reshape the image data into rows
    X_train = np.reshape(X_train, (X_train.shape[0], -1))
    X_test = np.reshape(X_test, (X_test.shape[0], -1))
    print(X_train.shape, X_test.shape)

3. Train the classifier (it just stores all the training data)

    from cs231n.classifiers import KNearestNeighbor

    # Create a kNN classifier instance.
    # Remember that training a kNN classifier is a noop:
    # the Classifier simply remembers the data and does no further processing
    classifier = KNearestNeighbor()
    classifier.train(X_train, y_train)

4. Compute the distance matrix and predict labels for the test samples

    dists = classifier.compute_distances_two_loops(X_test)
    print(dists.shape)
    y_test_pred = classifier.predict_labels(dists, k=5)
    num_correct = np.sum(y_test_pred == y_test)
    accuracy = float(num_correct) / num_test
    print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))
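One pitfall worth checking around the accuracy line `np.sum(y_test_pred == y_test)`: if one array has shape (N,) and the other (N, 1), the comparison silently broadcasts to an N×N table and the count is wrong. The labels below are invented just to show the effect.

```python
import numpy as np

y_true = np.array([1, 0, 2, 1])
y_pred_flat = np.array([1, 0, 1, 1])       # shape (4,)
y_pred_col = y_pred_flat.reshape(-1, 1)    # shape (4, 1)

good = np.sum(y_pred_flat == y_true)       # elementwise comparison
bad = np.sum(y_pred_col == y_true)         # broadcasts to a (4, 4) table
print(good, bad)                           # 3 7
print((y_pred_col == y_true).shape)        # (4, 4)
```

Printing the shapes of both arrays before comparing them is a cheap guard against this.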

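5. Cross-validation: split the 5,000 training samples into five folds, hold out each fold in turn as the validation set while the other four form the training set, and compute the accuracy for each value of k.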

    num_folds = 5
    k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

    X_train_folds = []
    y_train_folds = []
    ################################################################################
    # TODO: #
    # Split up the training data into folds. After splitting, X_train_folds and #
    # y_train_folds should each be lists of length num_folds, where #
    # y_train_folds[i] is the label vector for the points in X_train_folds[i]. #
    # Hint: Look up the numpy array_split function. #
    ################################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    X_train_folds = np.array_split(X_train, num_folds)
    y_train_folds = np.array_split(y_train, num_folds)
    print(len(X_train_folds))
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    # A dictionary holding the accuracies for different values of k that we find
    # when running cross-validation. After running cross-validation,
    # k_to_accuracies[k] should be a list of length num_folds giving the different
    # accuracy values that we found when using that value of k.
    k_to_accuracies = {}

    ################################################################################
    # TODO: #
    # Perform k-fold cross validation to find the best value of k. For each #
    # possible value of k, run the k-nearest-neighbor algorithm num_folds times, #
    # where in each case you use all but one of the folds as training data and the #
    # last fold as a validation set. Store the accuracies for all fold and all #
    # values of k in the k_to_accuracies dictionary. #
    ################################################################################

    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    for k in k_choices:
        k_to_accuracies[k] = np.zeros(num_folds)
        for i in range(num_folds):
            # Fold i is held out for validation; the other folds form the training set.
            X_test_temp = X_train_folds[i]
            y_test_temp = y_train_folds[i]
            # np.array_split returns lists, so list slicing and + skip fold i.
            X_train_temp = np.concatenate(X_train_folds[:i] + X_train_folds[i + 1:], axis=0)
            y_train_temp = np.concatenate(y_train_folds[:i] + y_train_folds[i + 1:], axis=0)
            classifier.train(X_train_temp, y_train_temp)
            y_test_pred_temp = classifier.predict(X_test_temp, k=k)
            # Keep both label arrays 1-D: comparing shape (N,) with (N, 1) would
            # broadcast to an (N, N) table and inflate num_correct.
            y_test_temp = y_test_temp.reshape(-1)
            y_test_pred_temp = y_test_pred_temp.reshape(-1)
            num_correct = np.sum(y_test_pred_temp == y_test_temp)
            num_test_temp = y_test_temp.shape[0]
            accuracy = float(num_correct) / num_test_temp
            k_to_accuracies[k][i] = accuracy
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    # Print out the computed accuracies
    for k in sorted(k_to_accuracies):
        for accuracy in k_to_accuracies[k]:
            print('k = %d, accuracy = %f' % (k, accuracy))
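The fold bookkeeping is easier to follow on a tiny synthetic dataset. The sketch below is standalone: `knn_predict` is a hypothetical stand-in for the assignment's `train` + `predict`, and the two Gaussian blobs, the fold count of 4, and the k values are all arbitrary choices for the demo.

```python
import numpy as np


def knn_predict(X_tr, y_tr, X_val, k):
    """Hypothetical stand-in for KNearestNeighbor.train + predict."""
    # Squared Euclidean distances, shape (num_val, num_train).
    d = ((X_val[:, None, :] - X_tr[None, :, :]) ** 2).sum(axis=2)
    nearest = y_tr[np.argsort(d, axis=1)[:, :k]]
    return np.array([np.argmax(np.bincount(row)) for row in nearest])


rng = np.random.default_rng(0)
# Two well-separated synthetic classes, 20 points each.
X_toy = np.vstack([rng.normal(0, 1, (20, 2)), rng.normal(8, 1, (20, 2))])
y_toy = np.array([0] * 20 + [1] * 20)
order = rng.permutation(len(X_toy))   # shuffle so every fold mixes both classes
X_toy, y_toy = X_toy[order], y_toy[order]

num_folds = 4
X_folds = np.array_split(X_toy, num_folds)
y_folds = np.array_split(y_toy, num_folds)

cv_acc = {}
for k in [1, 3, 5]:
    accs = []
    for i in range(num_folds):
        # Every fold except fold i goes into the training set.
        X_tr = np.concatenate(X_folds[:i] + X_folds[i + 1:])
        y_tr = np.concatenate(y_folds[:i] + y_folds[i + 1:])
        pred = knn_predict(X_tr, y_tr, X_folds[i], k)
        accs.append(float(np.mean(pred == y_folds[i])))
    cv_acc[k] = accs

for k, accs in cv_acc.items():
    print(k, np.mean(accs))
```

Because `np.array_split` returns plain Python lists, `X_folds[:i] + X_folds[i + 1:]` drops fold i without any reshaping, and the labels stay 1-D throughout.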

6. Plot accuracy as a function of k

    # plot the raw observations
    for k in k_choices:
        accuracies = k_to_accuracies[k]
        plt.scatter([k] * len(accuracies), accuracies)

    # plot the trend line with error bars that correspond to standard deviation
    accuracies_mean = np.array([np.mean(v) for k,v in sorted(k_to_accuracies.items())])
    accuracies_std = np.array([np.std(v) for k,v in sorted(k_to_accuracies.items())])
    plt.errorbar(k_choices, accuracies_mean, yerr=accuracies_std)
    plt.title('Cross-validation on k')
    plt.xlabel('k')
    plt.ylabel('Cross-validation accuracy')
    plt.show()

The plot shows that performance is best at k = 10.
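Instead of reading the best k off the plot, it can also be picked by mean accuracy. This is only a sketch: the dictionary below holds made-up numbers to keep the example self-contained, not the accuracies measured in this post.

```python
import numpy as np

# Hypothetical accuracies for illustration, NOT the results from this post.
k_to_accuracies = {
    1: [0.26, 0.25, 0.27],
    5: [0.27, 0.28, 0.28],
    10: [0.28, 0.30, 0.28],
    50: [0.27, 0.27, 0.26],
}

# Average over folds, then take the k with the highest mean.
mean_acc = {k: float(np.mean(v)) for k, v in k_to_accuracies.items()}
best_k = max(mean_acc, key=mean_acc.get)
print(best_k)  # 10
```

When several k values have nearly the same mean, the standard deviation across folds is worth a look before settling on one.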

7. Use k = 10 and train on the whole training set.

    best_k = 10

    classifier = KNearestNeighbor()
    classifier.train(X_train, y_train)
    y_test_pred = classifier.predict(X_test, k=best_k)

    # Compute and display the accuracy
    num_correct = np.sum(y_test_pred == y_test)
    accuracy = float(num_correct) / num_test
    print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 141 / 500 correct => accuracy: 0.282000

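VI. Reflections

This was my first time hand-writing a classic algorithm. I am still far from fluent with NumPy and stumbled into plenty of mistakes along the way, but the basic functionality works. I will keep at it, and I hope my experience is of some help to you. Let's keep working hard together.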