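Lab environment (every node must be able to resolve every other node's host name):

RHEL 7.3

server1 172.25.14.1 mfsmaster node

server2 172.25.14.2 chunkserver, i.e. the node that actually stores the data

server3 172.25.14.3 chunkserver, same as server2

server4 172.25.14.4 high-availability (standby master) node

Physical host: 172.25.14.250 client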
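Since every node needs the same name resolution, the host list above can be kept in one place and printed for each node. A minimal sketch; `lab_hosts` is a hypothetical helper, and the table is copied from the list above (server4 at 172.25.14.4, the address later used by `scp`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts entries for the lab nodes listed above.
# The IP-to-name table is copied from the environment list; adjust it to your lab.
lab_hosts() {
    # printf reuses its format for each "IP hostname" pair
    printf '%s %s\n' \
        172.25.14.1 server1 \
        172.25.14.2 server2 \
        172.25.14.3 server3 \
        172.25.14.4 server4
}
```

Usage: `lab_hosts >> /etc/hosts` on each node, run only once (running it twice appends duplicate lines).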

- On server4, install the master, set up name resolution, and start the service

[root@server4 3.0.103]# yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

[root@server4 3.0.103]# vim /etc/hosts

172.25.14.1 server1 mfsmaster

[root@server4 ~]# vim /usr/lib/systemd/system/moosefs-master.service

ExecStart=/usr/sbin/mfsmaster -a ##line 8 of the unit; -a makes mfsmaster restore its metadata from the changelogs automatically

[root@server4 ~]# systemctl daemon-reload

[root@server4 ~]# systemctl start moosefs-master
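Editing the unit under /usr/lib/systemd/system works, but a package update can overwrite it. A common alternative is a systemd drop-in that only overrides `ExecStart`. A minimal sketch, assuming the unit is named `moosefs-master.service` as above; `write_mfs_override` and its directory argument are hypothetical, the argument exists so the helper can be tried against a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a systemd drop-in that replaces ExecStart of
# moosefs-master.service with "mfsmaster -a" instead of editing the vendor unit.
# $1 = systemd configuration root (normally /etc/systemd/system).
write_mfs_override() {
    local root="${1:-/etc/systemd/system}"
    local dir="$root/moosefs-master.service.d"
    mkdir -p "$dir" || return 1
    # An empty ExecStart= clears the command inherited from the original unit;
    # the second line sets the new one.
    cat > "$dir/override.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/usr/sbin/mfsmaster -a
EOF
}
```

Usage: `write_mfs_override && systemctl daemon-reload && systemctl restart moosefs-master`; `systemctl cat moosefs-master` then shows the original unit followed by the override.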

- Configure the High Availability yum repositories on server1 and server4

[root@server1 ~]# vim /etc/yum.repos.d/yum.repo

[rhel7.3]

name=rhel7.3

baseurl=http://172.25.254.250/rhel7.3/x86_64/dvd

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.254.250/rhel7.3/x86_64/dvd/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.254.250/rhel7.3/x86_64/dvd/addons/ResilientStorage

gpgcheck=0

[root@server1 ~]# scp /etc/yum.repos.d/yum.repo 172.25.14.4:/etc/yum.repos.d/yum.repo

root@172.25.14.4's password:

yum.repo
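Because server1 and server4 must end up with identical repository definitions, the file can also be generated instead of copied. A minimal sketch; `make_ha_repo` is a hypothetical name and its default base URL is the one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the three repositories shown above for a given
# DVD tree URL, so server1 and server4 get identical definitions.
make_ha_repo() {
    local base="${1:-http://172.25.254.250/rhel7.3/x86_64/dvd}"
    local entry name path
    # "name|sub-path" pairs; an empty sub-path is the base DVD repository.
    for entry in "rhel7.3|" "HighAvailability|/addons/HighAvailability" \
                 "ResilientStorage|/addons/ResilientStorage"; do
        name="${entry%%|*}"
        path="${entry#*|}"
        printf '[%s]\nname=%s\nbaseurl=%s%s\ngpgcheck=0\n\n' \
            "$name" "$name" "$base" "$path"
    done
}
```

Usage: `make_ha_repo > /etc/yum.repos.d/yum.repo` on each node, or pass a different base URL as the first argument.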

- Install the cluster components on server1 and server4

[root@server1 ~]# yum install -y pacemaker corosync

[root@server4 ~]# yum install -y pacemaker corosync

- Set up passwordless SSH between server1 and server4

[root@server1 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

d7:92:3e:97:0a:d9:30:62:d5:00:52:b1:e6:23:ef:75 root@server1


[root@server1 ~]# ssh-copy-id server4

[root@server1 ~]# ssh-copy-id server1

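- Install the pcs resource management tool on both nodes, start and enable pcsd, and give the hacluster user the same password on both nodes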

[root@server1 ~]# yum install -y pcs

[root@server4 ~]# yum install -y pcs

[root@server1 ~]# systemctl start pcsd

[root@server4 ~]# systemctl start pcsd

[root@server1 ~]# systemctl enable pcsd

[root@server4 ~]# systemctl enable pcsd

[root@server1 ~]# passwd hacluster

[root@server4 ~]# passwd hacluster

- Create the cluster and start it

[root@server1 ~]# pcs cluster auth server1 server4

[root@server1 ~]# pcs cluster setup --name mycluster server1 server4

[root@server1 ~]# pcs cluster start --all

- Check the status

[root@server1 ~]# corosync-cfgtool -s

Printing ring status.

Local node ID 1

RING ID 0

id = 172.25.14.1

status = ring 0 active with no faults

[root@server1 ~]# pcs status corosync

Membership information

----------------------

Nodeid Votes Name

1 1 server1 (local)

2 1 server4
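The membership check can be automated, for example in a setup script that should only continue once both nodes have joined. A minimal sketch that parses `pcs status corosync` output like the one above; `count_members` is hypothetical and assumes member rows start with numeric node ID and vote columns.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count members in "pcs status corosync" output read from
# stdin. Member rows look like "<nodeid> <votes> <name> [(local)]".
count_members() {
    awk '$1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ { n++ } END { print n + 0 }'
}
```

Usage: `[ "$(pcs status corosync | count_members)" -eq 2 ] && echo "both nodes are members"`.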

- Fix the verification errors (STONITH is disabled for now; fencing is configured at the end)

[root@server1 ~]# crm_verify -L -V

error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

[root@server1 ~]# pcs property set stonith-enabled=false

[root@server1 ~]# crm_verify -L -V

[root@server1 ~]#

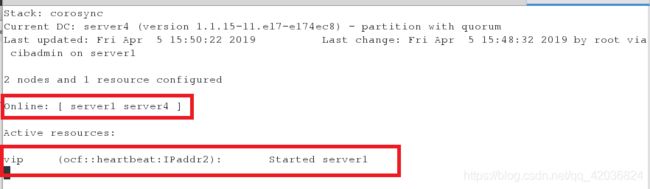

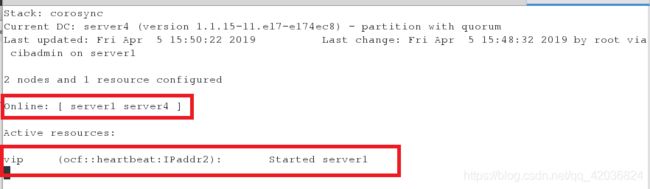

- Create a resource

[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.14.100 cidr_netmask=32 op monitor interval=30s

[root@server1 ~]# pcs resource show

vip (ocf::heartbeat:IPaddr2): Started server1
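When testing failover it helps to read off which node runs a resource without eyeballing the full status. A minimal sketch that parses lines in the format shown above; `resource_node` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "pcs resource show"-style lines from stdin, e.g.
#   vip (ocf::heartbeat:IPaddr2): Started server1
# and print the node on which resource $1 is started (nothing if it is stopped).
resource_node() {
    awk -v res="$1" '$1 == res && $(NF-1) == "Started" { print $NF }'
}
```

Usage: `pcs resource show | resource_node vip` prints `server1` for the output above.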

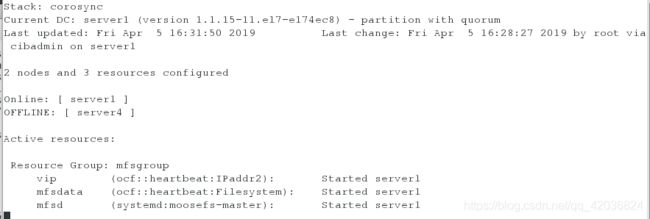

[root@server1 ~]# pcs status

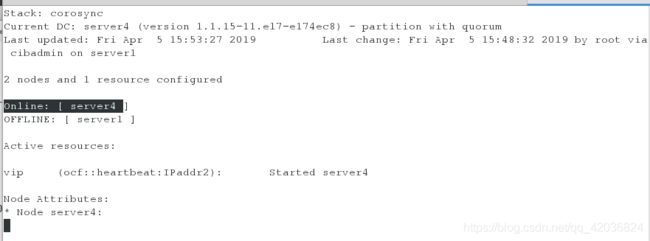

[root@server4 ~]# crm_mon

Verifying high availability

[root@server1 ~]# ip addr

```

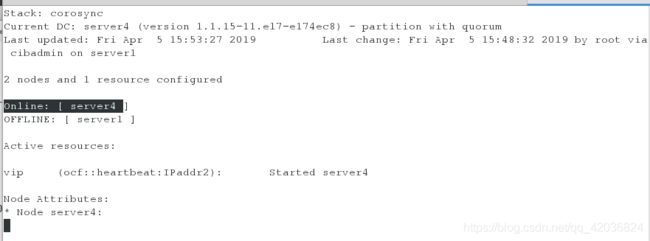

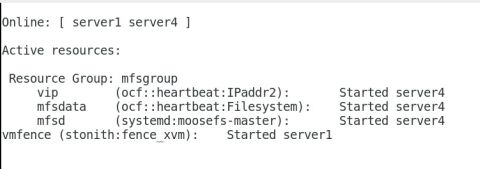

- 将server1,停掉,vip漂移到server4上

```javascript

[root@server1 ~]# pcs cluster stop server1

[root@server4 ~]# ip addr

- crm_mon now shows that only server4 is online

- Start server1 again; the VIP does not float back to it

[root@server1 ~]# pcs cluster start server1
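The `ip addr` check used in these failover tests can be scripted to confirm where the VIP actually lives. A minimal sketch, assuming the one-line output format of `ip -o -4 addr show` (address and prefix length in the fourth field); `has_ipv4` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if IPv4 address $1 appears in "ip -o -4 addr show"
# output read from stdin (field 4 is "ADDRESS/PREFIX").
has_ipv4() {
    awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) found = 1 } END { exit !found }'
}
```

Usage: `ip -o -4 addr show | has_ipv4 172.25.14.100 && echo "VIP is on $(hostname)"`.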

Sharing the master data

- Clean up the previous environment

[root@foundation14 ~]# umount /mnt/mfsmeta

[root@server1 ~]# systemctl stop moosefs-master

[root@server2 chunk1]# systemctl stop moosefs-chunkserver

[root@server3 3.0.103]# systemctl stop moosefs-chunkserver

- On all nodes (the physical host and server1-4), add the following entries so that mfsmaster now resolves to the VIP

[root@foundation14 ~]# vim /etc/hosts

[root@foundation14 ~]# cat /etc/hosts

172.25.14.100 mfsmaster

172.25.14.1 server1
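Re-running this step by hand is error-prone: server4's /etc/hosts from the first step still maps mfsmaster to 172.25.14.1. A minimal sketch of an idempotent helper that appends an entry only when the name is unmapped and refuses when it already points elsewhere; `add_host_entry` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" to a hosts file unless NAME is already mapped.
# Returns 0 if added or already correct, 2 if NAME maps to a different address.
# $1 = hosts file, $2 = IP address, $3 = host name.
add_host_entry() {
    local file="$1" ip="$2" name="$3" current
    # Address currently mapped to NAME (first match, comment lines ignored), if any.
    current="$(awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$file")"
    if [ -z "$current" ]; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    elif [ "$current" != "$ip" ]; then
        # e.g. the old "172.25.14.1 server1 mfsmaster" line from the first setup
        echo "$name already maps to $current in $file; fix it by hand" >&2
        return 2
    fi
}
```

Usage: `add_host_entry /etc/hosts 172.25.14.100 mfsmaster` on each node; a return code of 2 on server4 flags the old mfsmaster alias that has to be removed manually.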

- Add a virtual disk to server2

[root@server2 chunk1]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

- Install targetcli and export the disk over iSCSI

[root@server2 ~]# yum install -y targetcli

[root@server2 ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb41

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ...................................................................................... [...]

o- backstores ........................................................................... [...]

| o- block ............................................................... [Storage Objects: 0]

| o- fileio .............................................................. [Storage Objects: 0]

| o- pscsi ............................................................... [Storage Objects: 0]

| o- ramdisk ............................................................. [Storage Objects: 0]

o- iscsi ......................................................................... [Targets: 0]

o- loopback ...................................................................... [Targets: 0]

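/> cd backstores/block

/backstores/block> create my_disk1 /dev/vda

Created block storage object my_disk1 using /dev/vda.

/backstores/block> cd /iscsi

/iscsi> create iqn.2019-04.com.example:server2

/iscsi> cd iqn.2019-04.com.example:server2/tpg1/luns

/iscsi/iqn.20...er2/tpg1/luns> create /backstores/block/my_disk1

/iscsi/iqn.20...er2/tpg1/luns> cd ../acls

/iscsi/iqn.20...er2/tpg1/acls> create iqn.2019-04.com.example:client

/iscsi/iqn.20...er2/tpg1/acls> exit ##by default targetcli saves the configuration on exit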
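The same export can be scripted by passing a path and a command on the targetcli command line instead of using the interactive shell. A minimal sketch, assuming targetcli-fb's one-shot `targetcli <path> <command> [args]` form; `run`, `export_disk` and the `DRY_RUN` variable are hypothetical, and with `DRY_RUN` set the commands are only printed for review.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Non-interactive version of the targetcli session above.
# $1 = backing device, $2 = target IQN, $3 = initiator IQN allowed by the ACL.
export_disk() {
    local dev="$1" tgt="$2" init="$3"
    run targetcli /backstores/block create my_disk1 "$dev" &&
    run targetcli /iscsi create "$tgt" &&
    run targetcli "/iscsi/$tgt/tpg1/luns" create /backstores/block/my_disk1 &&
    run targetcli "/iscsi/$tgt/tpg1/acls" create "$init" &&
    run targetcli saveconfig   # persist explicitly in one-shot mode
}
```

Usage: `DRY_RUN=1 export_disk /dev/vda iqn.2019-04.com.example:server2 iqn.2019-04.com.example:client` prints the five commands; without `DRY_RUN` they run on server2.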

- server1

##Install the iSCSI initiator

[root@server1 ~]# yum install -y iscsi-*

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi ##edit this first so the name matches the ACL created on server2

InitiatorName=iqn.2019-04.com.example:client

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.14.2

172.25.14.2:3260,1 iqn.2019-04.com.example:server2

[root@server1 ~]# iscsiadm -m node -l
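If the login fails with an authorization error, the usual cause is an initiator name that does not match the ACL on server2. A minimal sketch of a check; `initiator_name` and `check_acl_match` are hypothetical helpers that read the file shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the InitiatorName from an initiatorname.iscsi file
# (default: the path used on server1/server4).
initiator_name() {
    sed -n 's/^[[:space:]]*InitiatorName=//p' "${1:-/etc/iscsi/initiatorname.iscsi}" | head -n 1
}

# Succeed only if the local initiator name equals the ACL IQN given in $1.
# $2 = optional path to the initiatorname file.
check_acl_match() {
    [ "$(initiator_name "$2")" = "$1" ]
}
```

Usage: `check_acl_match iqn.2019-04.com.example:client || echo "InitiatorName does not match the ACL"`. After editing the file, restart iscsid (`systemctl restart iscsid`) so the new name is picked up.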

##The shared disk is now visible on server1

[root@server1 ~]# fdisk -l

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

##Create a partition and format it

[root@server1 ~]# fdisk /dev/sdb

[root@server1 ~]# mkfs.xfs /dev/sdb1

##Mount it and copy the existing metadata onto the new file system

[root@server1 ~]# mount /dev/sdb1 /mnt/

[root@server1 ~]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.10.mfs changelog.6.mfs changelog.9.mfs metadata.mfs.back.1

changelog.1.mfs changelog.7.mfs metadata.crc metadata.mfs.empty

changelog.3.mfs changelog.8.mfs metadata.mfs stats.mfs

[root@server1 mfs]# cp -p * /mnt

[root@server1 mfs]# cd /mnt

[root@server1 mnt]# ls

changelog.10.mfs changelog.6.mfs changelog.9.mfs metadata.mfs.back.1

changelog.1.mfs changelog.7.mfs metadata.crc metadata.mfs.empty

changelog.3.mfs changelog.8.mfs metadata.mfs stats.mfs

[root@server1 mnt]# chown mfs.mfs /mnt/

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt

[root@server1 ~]# mount /dev/sdb1 /var/lib/mfs

[root@server1 ~]# systemctl start moosefs-master ##check that the master starts from the shared disk, then stop it again

[root@server1 ~]# systemctl stop moosefs-master

- On server4, repeat the steps from server1 (the partition already exists and is formatted, so only log in and mount)

[root@server4 ~]# yum install -y iscsi-*

[root@server4 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-04.com.example:client

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.14.2

172.25.14.2:3260,1 iqn.2019-04.com.example:server2

[root@server4 ~]# iscsiadm -m node -l

[root@server4 ~]# mount /dev/sdb1 /var/lib/mfs

[root@server4 ~]# systemctl start moosefs-master

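[root@server4 ~]# systemctl stop moosefs-master

Before handing the disk to Pacemaker, unmount /var/lib/mfs on both server1 and server4: XFS is not a cluster file system, so /dev/sdb1 must never be mounted on two nodes at the same time. From here on, the Filesystem resource mounts it on whichever node runs the master.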

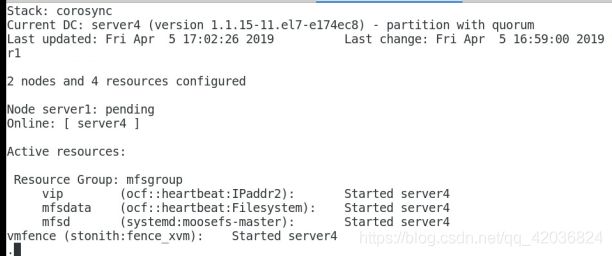

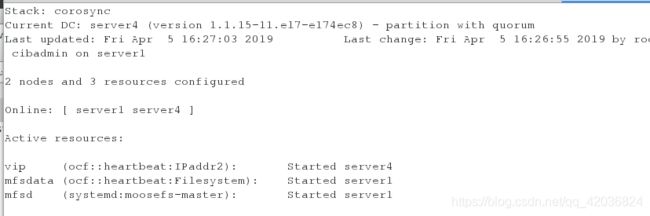
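Because the XFS partition on the shared disk may only be mounted on one node at a time, a quick local check before mounting it by hand is worth having. A minimal sketch; `is_mounted` is a hypothetical helper that reads a mounts table in /proc/mounts format, so it only sees the node it runs on and has to be run on each node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if block device $1 is currently mounted,
# according to a mounts table in /proc/mounts format (default: /proc/mounts).
is_mounted() {
    awk -v dev="$1" '$1 == dev { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}
```

Usage: `is_mounted /dev/sdb1 && echo "sdb1 is mounted here; unmount it before mounting it on another node"`.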

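- Create the remaining resources and put them in a group (a group keeps its members on the same node and starts them in the listed order)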

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs fstype=xfs op monitor interval=30s

[root@server1 ~]# pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsd

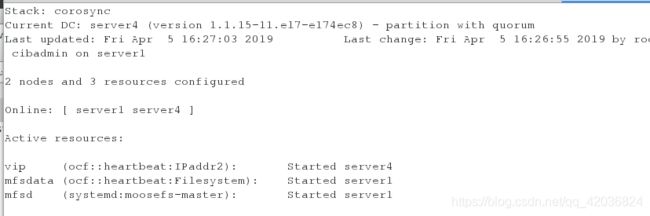

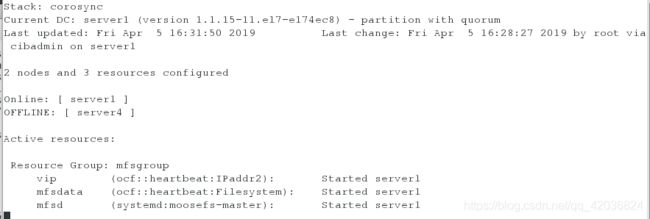
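After a failover, all three members of mfsgroup should be running together. A minimal sketch that checks this from `pcs status` output, assuming resource lines of the form `name (agent): Started node` as shown earlier; `same_node` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "pcs status"-style resource lines from stdin and
# succeed only if every named resource is Started, all on the same node.
same_node() {
    awk -v list="$*" '
        BEGIN { n = split(list, want, " "); for (i = 1; i <= n; i++) need[want[i]] = 1 }
        ($1 in need) && $(NF-1) == "Started" { node[$1] = $NF }
        END {
            for (i = 1; i <= n; i++) {
                if (!(want[i] in node)) exit 1           # not started anywhere
                if (node[want[i]] != node[want[1]]) exit 1  # split across nodes
            }
            exit 0
        }'
}
```

Usage: `pcs status | same_node vip mfsdata mfsd && echo "mfsgroup is colocated"`.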

- Test: stop the cluster on server4 and server1 takes over, then start server4 again

[root@server1 ~]# pcs cluster stop server4

[root@server1 ~]# pcs cluster start server4

Configuring fencing

- Install fence-virt on server1 and server4

[root@server1 mfs]# yum install -y fence-virt

[root@server4 mfs]# yum install -y fence-virt

[root@server1 mfs]# pcs stonith list

fence_virt - Fence agent for virtual machines

fence_xvm - Fence agent for virtual machines

- On the physical host (the client), install fence-virtd and configure it

[root@foundation14 ~]# yum install -y fence-virtd fence-virtd-libvirt fence-virtd-multicast

[root@foundation14 ~]# mkdir /etc/cluster

[root@foundation14 ~]# cd /etc/cluster

[root@foundation14 cluster]# fence_virtd -c

Interface [virbr0]: br0 ##change this to br0; press Enter to accept the defaults for everything else

[root@foundation14 cluster]# dd if=/dev/urandom of=fence_xvm.key bs=128 count=1

1+0 records in

1+0 records out

128 bytes (128 B) copied, 0.000205145 s, 624 kB/s

[root@foundation14 cluster]# ls

fence_xvm.key

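[root@server1 mfs]# mkdir /etc/cluster ##fence_xvm reads /etc/cluster/fence_xvm.key by default, so create the directory before copying the key

[root@server4 ~]# mkdir /etc/cluster

[root@foundation14 cluster]# scp fence_xvm.key root@172.25.14.1:/etc/cluster/

[root@foundation14 cluster]# scp fence_xvm.key root@172.25.14.4:/etc/cluster/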
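Fencing only works if the host and both nodes hold byte-identical copies of the key. A minimal sketch that wraps the `dd` step above and compares copies; `make_key` and `same_key` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the fence_xvm key.
# make_key: create a 128-byte random key, as the dd command above does,
# readable by root only.
make_key() {
    dd if=/dev/urandom of="$1" bs=128 count=1 2>/dev/null && chmod 600 "$1"
}

# same_key: succeed if two copies of the key are byte-for-byte identical.
# cmp accepts "-" for stdin, so one side can come from ssh.
same_key() {
    cmp -s "$1" "$2"
}
```

Usage on the physical host: `ssh root@172.25.14.1 cat /etc/cluster/fence_xvm.key | same_key - /etc/cluster/fence_xvm.key && echo "server1 has the right key"`.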

[root@foundation14 cluster]# systemctl start fence_virtd

[root@foundation14 images]# netstat -anulp | grep :1229 ##fence_virtd listens on its default port, UDP 1229

udp 0 0 0.0.0.0:1229 0.0.0.0:* 14972/fence_virtd

- Continue configuring the fencing policy on server1

[root@server1 mfs]# cd /etc/cluster

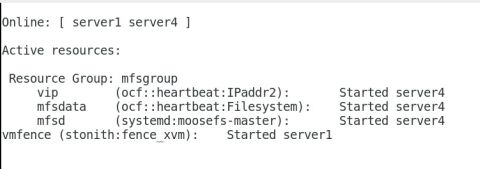

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:server1;server4:server4" op monitor interval=1min
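In `pcmk_host_map` each pair is `cluster-node-name:target`; for fence_xvm the target is the libvirt domain name of the VM on the physical host (as listed by `virsh list`), which in this lab happens to equal the node name. If the VM names differ, only the right-hand side changes. A minimal sketch that builds the value from pairs and rejects malformed ones; `host_map` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a pcmk_host_map value from "node:domain" pairs
# (cluster node name : libvirt domain name), joined with ";".
host_map() {
    local pair
    for pair in "$@"; do
        case "$pair" in
            ?*:?*) ;;   # non-empty node, colon, non-empty domain
            *) echo "bad pair: $pair (expected node:domain)" >&2; return 1 ;;
        esac
    done
    local IFS=';'
    printf '%s\n' "$*"
}
```

Usage: `pcs stonith create vmfence fence_xvm pcmk_host_map="$(host_map server1:server1 server4:server4)" op monitor interval=1min`.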

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server1 cluster]# crm_verify -L -V ##no errors

[root@server1 cluster]# fence_xvm -H server4 ##fence (reboot) server4 to test the device

[root@server4 ~]# pcs cluster start server4 ##rejoin the cluster once server4 is back up

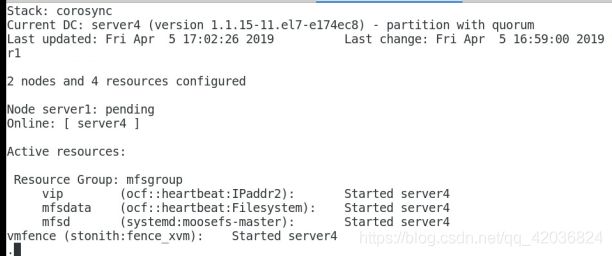

- Crash server1: it is fenced and reboots automatically, and server4 takes over the resources

[root@server1 ~]# echo c > /proc/sysrq-trigger
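Failover after a crash takes a while (the node has to be fenced and the resources restarted on the survivor), so scripted tests usually poll instead of sleeping for a fixed time. A minimal sketch of a generic retry helper; `wait_until` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command once per second until it succeeds or
# TIMEOUT seconds have passed. Returns 0 on success, 1 on timeout.
# Usage: wait_until TIMEOUT command [args...]
wait_until() {
    local timeout="$1" waited=0
    shift
    until "$@"; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
}
```

Usage on server4 after crashing server1: `wait_until 120 sh -c 'ip -o -4 addr show | grep -q "inet 172.25.14.100/"' && echo "VIP is on server4"`.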