Low-Level APIs of the Windows Audio System

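Compared with earlier versions, Windows Vista, Windows 7, Windows Server 2008 and later releases overhauled the audio system and introduced a new set of low-level APIs, the Core Audio APIs. These low-level APIs serve higher-level APIs such as Media Foundation (which is meant to replace higher-level APIs like DirectShow).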

Microsoft reference documentation

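A few concepts, adapted from the article "Windows下Core Audio APIS 音频应用开发" (Audio application development with the Core Audio APIs on Windows):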

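1. IMMDevice: represents an audio endpoint device; you can think of it simply as the device object.

2. IAudioClient: creates the object that manages an audio data stream. The application uses it to get at the audio device's data; picture it as a large pool full of data.

3. IAudioCaptureClient: as the name suggests, the object dedicated to retrieving captured data. Its sibling is IAudioRenderClient.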

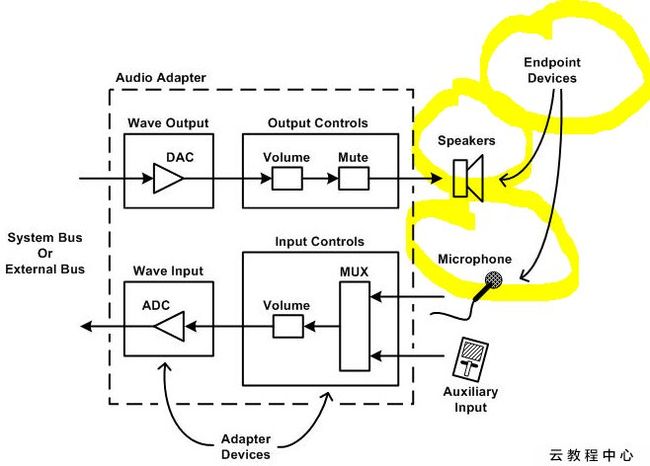

Where the Core Audio APIs sit in the system:

1 Components of the Core Audio APIs:

1) Multimedia Device (MMDevice) API: discovers audio endpoint devices

2) EndpointVolume API

3) Windows Audio Session API (WASAPI)

4) DeviceTopology API

1.1 Multimedia Device (MMDevice) API

What is MMDevice?

Full name: The Windows Multimedia Device (MMDevice) API

Header: Mmdeviceapi.h defines the interfaces in the MMDevice API.

Purpose:

The Windows Multimedia Device (MMDevice) API enables audio clients to discover audio endpoint devices, determine their capabilities, and create driver instances for those devices.

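In other words, the API enumerates the audio endpoint devices in the system. It tells audio clients which endpoints exist and what their capabilities are, and it creates driver instances for them. It is the most fundamental Core Audio API and provides services to the other three.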

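Main interfaces:

IMMDeviceEnumerator: enumerates audio endpoint devices.

IMMDevice: represents an audio device.

IMMEndpoint: represents an audio endpoint device. It has a single method, GetDataFlow, which tells whether the endpoint is a rendering (output) device or a capture (input) device.

IMMDeviceCollection: represents a collection of audio endpoint devices.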

Example 1: get the default speaker (render) device

```cpp
HRESULT hr = S_OK;
IMMDeviceEnumerator *pMMDeviceEnumerator;

// activate a device enumerator
hr = CoCreateInstance(
    __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
    __uuidof(IMMDeviceEnumerator),
    (void**)&pMMDeviceEnumerator
);
if (FAILED(hr)) {
    ERR(L"CoCreateInstance(IMMDeviceEnumerator) failed: hr = 0x%08x", hr);
    return hr;
}

// get the default render endpoint
// (ppMMDevice is the caller's IMMDevice** output parameter)
hr = pMMDeviceEnumerator->GetDefaultAudioEndpoint(eRender, eConsole, ppMMDevice);
if (FAILED(hr)) {
    ERR(L"IMMDeviceEnumerator::GetDefaultAudioEndpoint failed: hr = 0x%08x", hr);
    return hr;
}
```

```cpp
typedef enum __MIDL___MIDL_itf_mmdeviceapi_0000_0000_0001
{
    eRender = 0,                   // rendering: speakers
    eCapture = ( eRender + 1 ) ,   // capture: microphone
    eAll = ( eCapture + 1 ) ,      // both rendering and capture
    EDataFlow_enum_count = ( eAll + 1 )
} EDataFlow;
```

```cpp
HRESULT get_default_device(IMMDevice **ppMMDevice) {
    HRESULT hr = S_OK;
    IMMDeviceEnumerator *pMMDeviceEnumerator;

    // activate a device enumerator
    hr = CoCreateInstance(
        __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
        __uuidof(IMMDeviceEnumerator),
        (void**)&pMMDeviceEnumerator
    );
    if (FAILED(hr)) {
        ERR(L"CoCreateInstance(IMMDeviceEnumerator) failed: hr = 0x%08x", hr);
        return hr;
    }
    ReleaseOnExit releaseMMDeviceEnumerator(pMMDeviceEnumerator);

    // get the default render endpoint
    // eRender: speakers; eCapture: microphone
    hr = pMMDeviceEnumerator->GetDefaultAudioEndpoint(eRender, eConsole, ppMMDevice);
    if (FAILED(hr)) {
        ERR(L"IMMDeviceEnumerator::GetDefaultAudioEndpoint failed: hr = 0x%08x", hr);
        return hr;
    }

    return S_OK;
}
```

Example 2: enumerate all audio devices

```cpp
HRESULT list_devices() {
    HRESULT hr = S_OK;

    // get an enumerator
    IMMDeviceEnumerator *pMMDeviceEnumerator;
    hr = CoCreateInstance(
        __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
        __uuidof(IMMDeviceEnumerator),
        (void**)&pMMDeviceEnumerator
    );
    if (FAILED(hr)) {
        ERR(L"CoCreateInstance(IMMDeviceEnumerator) failed: hr = 0x%08x", hr);
        return hr;
    }
    ReleaseOnExit releaseMMDeviceEnumerator(pMMDeviceEnumerator);

    IMMDeviceCollection *pMMDeviceCollection;
    // get all the active render endpoints (eRender = playback devices)
    hr = pMMDeviceEnumerator->EnumAudioEndpoints(
        eRender, DEVICE_STATE_ACTIVE, &pMMDeviceCollection
    );
    if (FAILED(hr)) {
        ERR(L"IMMDeviceEnumerator::EnumAudioEndpoints failed: hr = 0x%08x", hr);
        return hr;
    }
    ReleaseOnExit releaseMMDeviceCollection(pMMDeviceCollection);

    UINT count;
    hr = pMMDeviceCollection->GetCount(&count);
    if (FAILED(hr)) {
        ERR(L"IMMDeviceCollection::GetCount failed: hr = 0x%08x", hr);
        return hr;
    }
    LOG(L"Active render endpoints found: %u", count);

    for (UINT i = 0; i < count; i++) {
        IMMDevice *pMMDevice;

        // get the "n"th device
        hr = pMMDeviceCollection->Item(i, &pMMDevice);
        if (FAILED(hr)) {
            ERR(L"IMMDeviceCollection::Item failed: hr = 0x%08x", hr);
            return hr;
        }
        ReleaseOnExit releaseMMDevice(pMMDevice);

        // open the property store on that device
        IPropertyStore *pPropertyStore;
        hr = pMMDevice->OpenPropertyStore(STGM_READ, &pPropertyStore);
        if (FAILED(hr)) {
            ERR(L"IMMDevice::OpenPropertyStore failed: hr = 0x%08x", hr);
            return hr;
        }
        ReleaseOnExit releasePropertyStore(pPropertyStore);

        // get the long name property
        PROPVARIANT pv; PropVariantInit(&pv);
        hr = pPropertyStore->GetValue(PKEY_Device_FriendlyName, &pv);
        if (FAILED(hr)) {
            ERR(L"IPropertyStore::GetValue failed: hr = 0x%08x", hr);
            return hr;
        }
        PropVariantClearOnExit clearPv(&pv);

        if (VT_LPWSTR != pv.vt) {
            ERR(L"PKEY_Device_FriendlyName variant type is %u - expected VT_LPWSTR", pv.vt);
            return E_UNEXPECTED;
        }

        LOG(L" %ls", pv.pwszVal);
    }

    return S_OK;
}
```
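These examples use two helpers, ReleaseOnExit and PropVariantClearOnExit, whose definitions are not shown in this article. Judging from how it is used, ReleaseOnExit is a scope guard: it calls Release() on a COM pointer when the enclosing block ends, so every early `return hr;` still releases the enumerator, collection, device and property store. Below is a minimal sketch of such a guard. It assumes only that the wrapped type has a Release() method. FakeComObject is a hypothetical stand-in so the sketch compiles without the Windows headers.

```cpp
#include <cassert>

// Hypothetical scope guard modeled on how ReleaseOnExit is used above:
// it stores an interface pointer and calls Release() when the scope ends.
template <typename T>
class ReleaseOnExit {
public:
    explicit ReleaseOnExit(T *p) : m_p(p) {}
    ~ReleaseOnExit() {
        if (m_p != nullptr) {
            m_p->Release();
        }
    }
    // Non-copyable: a copy would release the same pointer twice.
    ReleaseOnExit(const ReleaseOnExit &) = delete;
    ReleaseOnExit &operator=(const ReleaseOnExit &) = delete;

private:
    T *m_p;
};

// Hypothetical stand-in for a COM object: nothing but a reference count.
struct FakeComObject {
    unsigned long refCount = 1;   // a freshly obtained COM pointer holds one reference
    unsigned long AddRef() { return ++refCount; }
    unsigned long Release() { return --refCount; }
};
```

With this template, a declaration like `ReleaseOnExit<IMMDevice> guard(pMMDevice);` works everywhere. The call sites above omit the template argument, which compiles under C++17 class template argument deduction. On Windows, Microsoft::WRL::ComPtr or ATL's CComPtr give the same guarantee out of the box.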

Example 3: get a specific device by its friendly name

```cpp
HRESULT get_specific_device(LPCWSTR szLongName, IMMDevice **ppMMDevice) {
    HRESULT hr = S_OK;
    *ppMMDevice = NULL;

    // get an enumerator
    IMMDeviceEnumerator *pMMDeviceEnumerator;
    hr = CoCreateInstance(
        __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
        __uuidof(IMMDeviceEnumerator),
        (void**)&pMMDeviceEnumerator
    );
    if (FAILED(hr)) {
        ERR(L"CoCreateInstance(IMMDeviceEnumerator) failed: hr = 0x%08x", hr);
        return hr;
    }
    ReleaseOnExit releaseMMDeviceEnumerator(pMMDeviceEnumerator);

    IMMDeviceCollection *pMMDeviceCollection;
    // get all the active render endpoints
    hr = pMMDeviceEnumerator->EnumAudioEndpoints(
        eRender, DEVICE_STATE_ACTIVE, &pMMDeviceCollection
    );
    if (FAILED(hr)) {
        ERR(L"IMMDeviceEnumerator::EnumAudioEndpoints failed: hr = 0x%08x", hr);
        return hr;
    }
    ReleaseOnExit releaseMMDeviceCollection(pMMDeviceCollection);

    UINT count;
    hr = pMMDeviceCollection->GetCount(&count);
    if (FAILED(hr)) {
        ERR(L"IMMDeviceCollection::GetCount failed: hr = 0x%08x", hr);
        return hr;
    }

    for (UINT i = 0; i < count; i++) {
        IMMDevice *pMMDevice;

        // get the "n"th device
        hr = pMMDeviceCollection->Item(i, &pMMDevice);
        if (FAILED(hr)) {
            ERR(L"IMMDeviceCollection::Item failed: hr = 0x%08x", hr);
            return hr;
        }
        ReleaseOnExit releaseMMDevice(pMMDevice);

        // open the property store on that device
        IPropertyStore *pPropertyStore;
        hr = pMMDevice->OpenPropertyStore(STGM_READ, &pPropertyStore);
        if (FAILED(hr)) {
            ERR(L"IMMDevice::OpenPropertyStore failed: hr = 0x%08x", hr);
            return hr;
        }
        ReleaseOnExit releasePropertyStore(pPropertyStore);

        // get the long name property
        PROPVARIANT pv; PropVariantInit(&pv);
        hr = pPropertyStore->GetValue(PKEY_Device_FriendlyName, &pv);
        if (FAILED(hr)) {
            ERR(L"IPropertyStore::GetValue failed: hr = 0x%08x", hr);
            return hr;
        }
        PropVariantClearOnExit clearPv(&pv);

        if (VT_LPWSTR != pv.vt) {
            ERR(L"PKEY_Device_FriendlyName variant type is %u - expected VT_LPWSTR", pv.vt);
            return E_UNEXPECTED;
        }

        // is it a match?
        if (0 == _wcsicmp(pv.pwszVal, szLongName)) {
            // did we already find it?
            if (NULL == *ppMMDevice) {
                *ppMMDevice = pMMDevice;
                pMMDevice->AddRef();   // the caller's reference outlives releaseMMDevice
            } else {
                ERR(L"Found (at least) two devices named %ls", szLongName);
                (*ppMMDevice)->Release();   // don't leak the first match on failure
                *ppMMDevice = NULL;
                return E_UNEXPECTED;
            }
        }
    }

    if (NULL == *ppMMDevice) {
        ERR(L"Could not find a device named %ls", szLongName);
        return HRESULT_FROM_WIN32(ERROR_NOT_FOUND);
    }

    return S_OK;
}
```

Example 4:

To record the speaker output to a file, first create a sound file:

```cpp
HRESULT open_file(LPCWSTR szFileName, HMMIO *phFile) {
    MMIOINFO mi = {0};

    *phFile = mmioOpen(
        // some flags cause mmioOpen to write to this buffer
        // but not any that we're using
        const_cast<LPWSTR>(szFileName),
        &mi,
        MMIO_WRITE | MMIO_CREATE
    );

    if (NULL == *phFile) {
        ERR(L"mmioOpen(\"%ls\", ...) failed. wErrorRet == %u", szFileName, mi.wErrorRet);
        return E_FAIL;
    }

    return S_OK;
}
```
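mmioOpen only creates the file. For a playable .wav file, the samples must be wrapped in a RIFF structure: a "RIFF"/"WAVE" header, a "fmt " chunk holding the WAVEFORMATEX fields, and a "data" chunk. With the mmio API this is typically done with mmioCreateChunk and mmioAscend. The sketch below shows the bytes involved for the simplest case. It assumes plain PCM (a 16-byte fmt chunk, no WAVE_FORMAT_EXTENSIBLE fields) and a data size known in advance. make_wav_header and its helpers are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Append `value` as a little-endian integer of `bytes` bytes (RIFF is little-endian).
static void put_le(std::vector<uint8_t> &out, uint32_t value, int bytes) {
    for (int i = 0; i < bytes; ++i) {
        out.push_back(static_cast<uint8_t>((value >> (8 * i)) & 0xFFu));
    }
}

// Append a four-character chunk ID such as "RIFF" or "fmt ".
static void put_tag(std::vector<uint8_t> &out, const char *tag) {
    out.insert(out.end(), tag, tag + 4);
}

// Hypothetical helper: the canonical 44-byte header of a plain PCM .wav file
// whose sample data (dataBytes bytes) follows immediately after it.
std::vector<uint8_t> make_wav_header(uint16_t channels, uint32_t samplesPerSec,
                                     uint16_t bitsPerSample, uint32_t dataBytes) {
    const uint16_t blockAlign = static_cast<uint16_t>(channels * bitsPerSample / 8);
    const uint32_t avgBytesPerSec = samplesPerSec * blockAlign;

    std::vector<uint8_t> h;
    put_tag(h, "RIFF");
    put_le(h, 36 + dataBytes, 4);   // number of bytes that follow this field
    put_tag(h, "WAVE");
    put_tag(h, "fmt ");
    put_le(h, 16, 4);               // size of a plain PCM fmt chunk
    put_le(h, 1, 2);                // wFormatTag = WAVE_FORMAT_PCM
    put_le(h, channels, 2);         // nChannels
    put_le(h, samplesPerSec, 4);    // nSamplesPerSec
    put_le(h, avgBytesPerSec, 4);   // nAvgBytesPerSec
    put_le(h, blockAlign, 2);       // nBlockAlign
    put_le(h, bitsPerSample, 2);    // wBitsPerSample
    put_tag(h, "data");
    put_le(h, dataBytes, 4);        // size of the sample data
    return h;
}
```

During a live capture the total data size is not known until recording stops. In that case the two size fields are written as placeholders and patched at the end.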

1.2 WASAPI (Windows Audio Session API)

The Windows Audio Session API (WASAPI) enables client applications to manage the flow of audio data between the application and an audio endpoint device.

Header files Audioclient.h and Audiopolicy.h define the WASAPI interfaces.

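It creates and manages the audio streams flowing to and from audio endpoint devices.

Through the audio engine, an application can access an audio endpoint device (such as a microphone or speakers) in shared mode.

The audio engine transfers data between the endpoint buffer and the endpoint device.

When playing audio, the application periodically writes data into the rendering endpoint buffer.

When capturing audio, the application periodically reads data from the capture endpoint buffer.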

WASAPI interfaces:

IAudioClient interface

Creates the object that manages an audio data stream.

Methods involved:

1) Activate

To access the WASAPI interfaces, a client first obtains a reference to the IAudioClient interface of an audio endpoint device by calling IMMDevice::Activate.

```cpp
// activate an IAudioClient
IAudioClient *pAudioClient;
hr = pMMDevice->Activate(
    __uuidof(IAudioClient),
    CLSCTX_ALL, NULL,
    (void**)&pAudioClient
);
```

In Windows Vista, which supports endpoint devices, the process of connecting to the same endpoint device is much simpler:

- Select a microphone from a collection of endpoint devices.

- Activate an audio-capture interface on that microphone.

The operating system does all the work necessary to identify and enable the endpoint device. For example, if the data path from the microphone includes a multiplexer, the system automatically selects the microphone input to the multiplexer.

So on Windows Vista, taking audio capture as an example, connecting to an endpoint device comes down to picking a microphone from the device collection and calling Activate on it:

```cpp
// in Microsoft's sample: const IID IID_IAudioClient = __uuidof(IAudioClient);
IAudioClient *pAudioClient = NULL;
...
hr = pDevice->Activate(IID_IAudioClient, CLSCTX_ALL, NULL, (void**)&pAudioClient);
```

2) IAudioClient::GetDevicePeriod

The GetDevicePeriod method retrieves the length of the periodic interval separating successive processing passes by the audio engine on the data in the endpoint buffer.

```cpp
HRESULT GetDevicePeriod(
  REFERENCE_TIME *phnsDefaultDevicePeriod,
  REFERENCE_TIME *phnsMinimumDevicePeriod
);
```

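phnsDefaultDevicePeriod: Pointer to a REFERENCE_TIME variable into which the method writes a time value specifying the default interval between periodic processing passes by the audio engine. The time is expressed in 100-nanosecond units. For information about REFERENCE_TIME, see the Windows SDK documentation.

phnsMinimumDevicePeriod: Pointer to a REFERENCE_TIME variable into which the method writes the minimum interval between periodic processing passes by the audio endpoint device, also in 100-nanosecond units.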

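In other words, it is the period at which the audio engine processes the audio data.

The audio device has its own device period. Separately, when we initialize the Core Audio client, we can choose our own period for processing the audio data. Both values matter a great deal. If our processing period is too long relative to the device period, the endpoint buffer is not drained in time and the captured data drops frames. So the processing period should be chosen based on the device period.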
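Since REFERENCE_TIME counts 100-nanosecond units, 1 ms equals 10,000 units and 1 s equals 10,000,000. This is why the samples later multiply a latency in milliseconds by 10000 before passing it to IAudioClient::Initialize. Below is a small portable sketch of both conversions. REFERENCE_TIME is redeclared as a 64-bit integer (it is a LONGLONG in the Windows headers) only so the sketch compiles on its own; drop that line when the Windows headers are included.

```cpp
#include <cassert>
#include <cstdint>

// Same representation as the Windows typedef (LONGLONG): a count of 100-ns units.
using REFERENCE_TIME = int64_t;

constexpr REFERENCE_TIME kHnsPerMillisecond = 10000;   // 1 ms = 10,000 x 100 ns

// A period reported by GetDevicePeriod, converted to milliseconds.
constexpr double hns_to_ms(REFERENCE_TIME hns) {
    return static_cast<double>(hns) / kHnsPerMillisecond;
}

// A latency in milliseconds, converted to a buffer duration for Initialize.
constexpr REFERENCE_TIME ms_to_hns(int64_t ms) {
    return ms * kHnsPerMillisecond;
}
```

For instance, a device period of 100000 corresponds to 10 ms, and `_EngineLatencyInMS = 50` becomes a buffer duration of 500000.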

Example:

Getting the device period:

```cpp
// get the default device periodicity
REFERENCE_TIME hnsDefaultDevicePeriod;
hr = pAudioClient->GetDevicePeriod(&hnsDefaultDevicePeriod, NULL);
if (FAILED(hr)) {
    ERR(L"IAudioClient::GetDevicePeriod failed: hr = 0x%08x", hr);
    return hr;
}
```

Processing the audio data on our own period (here, every half device period):

```cpp
// set the waitable timer
// (hWakeUp is a waitable-timer handle created earlier, e.g. with CreateWaitableTimer)
LARGE_INTEGER liFirstFire;
liFirstFire.QuadPart = -hnsDefaultDevicePeriod / 2; // negative means relative time
LONG lTimeBetweenFires = (LONG)hnsDefaultDevicePeriod / 2 / (10 * 1000); // convert to milliseconds
BOOL bOK = SetWaitableTimer(
    hWakeUp,
    &liFirstFire,
    lTimeBetweenFires,
    NULL, NULL, FALSE
);
```
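The two values passed to SetWaitableTimer use different units. The due time is a count of 100-ns units, where a negative value means "relative to now". The period is in milliseconds. Firing at half the device period presumably gives the loop some margin to drain the buffer before the next engine pass. The portable sketch below performs the same arithmetic. TimerParams and timer_params_for are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical bundle of the two SetWaitableTimer arguments computed above.
struct TimerParams {
    int64_t dueTime;   // LARGE_INTEGER::QuadPart: 100-ns units, negative = relative
    long periodMs;     // lPeriod: milliseconds between later firings (0 = fire once)
};

// Fire half a device period from now, then every half device period.
TimerParams timer_params_for(int64_t devicePeriodHns) {
    TimerParams p;
    p.dueTime = -devicePeriodHns / 2;
    p.periodMs = static_cast<long>(devicePeriodHns / 2 / 10000);   // 100-ns -> ms, truncated
    return p;
}
```

Because of the integer division, a device period under 2 ms produces a period of 0. SetWaitableTimer treats a period of 0 as a one-shot timer, so very short periods would need finer handling.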

Another example:

```cpp
UINT32 BufferSizePerPeriod()
{
    REFERENCE_TIME defaultDevicePeriod, minimumDevicePeriod;
    HRESULT hr = _AudioClient->GetDevicePeriod(&defaultDevicePeriod, &minimumDevicePeriod);
    if (FAILED(hr))
    {
        WriteLog(hr, "Unable to retrieve device period: %x\n");
        return 0;
    }
    double devicePeriodInSeconds = defaultDevicePeriod / (10000.0*1000.0);
    // returns the device period in milliseconds, which is the time value we need here
    return static_cast<UINT32>(devicePeriodInSeconds * 1000);
}
```
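Despite its name, BufferSizePerPeriod above returns the device period in milliseconds. If you actually need a buffer size, the period converts to frames as sample rate × period in seconds, and to bytes as frames × nBlockAlign. The sketch below shows that conversion. frames_per_period and bytes_per_period are hypothetical names, and rounding to the nearest frame is a choice made for this sketch.

```cpp
#include <cassert>
#include <cstdint>

// Frames the engine processes per device period: sample rate x period (seconds).
uint32_t frames_per_period(uint32_t samplesPerSec, int64_t periodHns) {
    const double seconds = periodHns / (10000.0 * 1000.0);          // 100-ns units -> seconds
    return static_cast<uint32_t>(samplesPerSec * seconds + 0.5);    // round to nearest frame
}

// Bytes per device period: every frame occupies nBlockAlign bytes.
uint32_t bytes_per_period(uint32_t samplesPerSec, int64_t periodHns, uint16_t blockAlign) {
    return frames_per_period(samplesPerSec, periodHns) * blockAlign;
}
```

For example, a 10 ms period at 48 kHz is 480 frames. With stereo 32-bit float (nBlockAlign = 8), that is 3840 bytes.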

3) IAudioClient::GetMixFormat

The GetMixFormat method retrieves the stream format that the audio engine uses for its internal processing of shared-mode streams.

The method allocates the returned WAVEFORMATEX structure, and the caller frees it with CoTaskMemFree.

```cpp
// get the default device format
WAVEFORMATEX *pwfx;
hr = pAudioClient->GetMixFormat(&pwfx);
if (FAILED(hr)) {
    ERR(L"IAudioClient::GetMixFormat failed: hr = 0x%08x", hr);
    return hr;
}
```

The mix format is the format that the audio engine uses internally for digital processing of shared-mode streams. This format is not necessarily a format that the audio endpoint device supports. Thus, the caller might not succeed in creating an exclusive-mode stream with a format obtained by calling GetMixFormat.

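Formats are often described as "44100 Hz 16-bit stereo" or "22050 Hz 8-bit mono".

44100 Hz 16-bit stereo: 44,100 samples per second, each sample stored in 16 bits (2 bytes), two channels (stereo).

22050 Hz 8-bit mono: 22,050 samples per second, each sample stored in 8 bits (1 byte), one channel.

Other combinations, such as 16-bit mono or 8-bit stereo, are of course possible too.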
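The byte sizes follow directly from these three numbers. A frame holds one sample per channel, so it is channels × bits / 8 bytes (the WAVEFORMATEX field nBlockAlign). One second of audio is sample rate × frame size (nAvgBytesPerSec). The minimal sketch below covers plain PCM; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// nBlockAlign for PCM: one frame holds one sample for every channel.
uint16_t block_align(uint16_t channels, uint16_t bitsPerSample) {
    return static_cast<uint16_t>(channels * bitsPerSample / 8);
}

// nAvgBytesPerSec: frames per second x bytes per frame.
uint32_t avg_bytes_per_sec(uint32_t samplesPerSec, uint16_t channels, uint16_t bitsPerSample) {
    return samplesPerSec * block_align(channels, bitsPerSample);
}
```

So 44100 Hz 16-bit stereo is 4 bytes per frame and 176,400 bytes per second, while 22050 Hz 8-bit mono is 1 byte per frame and 22,050 bytes per second.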

如果需要将音频流格式,转成16位,可以进行如下操作

```cpp
// coerce int-16 wave format
// can do this in-place since we're not changing the size of the format
// also, the engine will auto-convert from float to int for us
switch (pwfx->wFormatTag) {
case WAVE_FORMAT_IEEE_FLOAT:
    pwfx->wFormatTag = WAVE_FORMAT_PCM;
    pwfx->wBitsPerSample = 16;
    pwfx->nBlockAlign = pwfx->nChannels * pwfx->wBitsPerSample / 8;
    pwfx->nAvgBytesPerSec = pwfx->nBlockAlign * pwfx->nSamplesPerSec;
    break;

case WAVE_FORMAT_EXTENSIBLE:
    {
        // naked scope for case-local variable
        PWAVEFORMATEXTENSIBLE pEx = reinterpret_cast<PWAVEFORMATEXTENSIBLE>(pwfx);
        if (IsEqualGUID(KSDATAFORMAT_SUBTYPE_IEEE_FLOAT, pEx->SubFormat)) {
            pEx->SubFormat = KSDATAFORMAT_SUBTYPE_PCM;
            pEx->Samples.wValidBitsPerSample = 16;
            pwfx->wBitsPerSample = 16;
            pwfx->nBlockAlign = pwfx->nChannels * pwfx->wBitsPerSample / 8;
            pwfx->nAvgBytesPerSec = pwfx->nBlockAlign * pwfx->nSamplesPerSec;
        } else {
            printf("Don't know how to coerce mix format to int-16\n");
            CoTaskMemFree(pwfx);
            pAudioClient->Release();
            return E_UNEXPECTED;
        }
    }
    break;

default:
    printf("Don't know how to coerce WAVEFORMATEX with wFormatTag = 0x%08x to int-16\n", pwfx->wFormatTag);
    CoTaskMemFree(pwfx);
    pAudioClient->Release();
    return E_UNEXPECTED;
}
```
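The switch above only relabels the format and relies on the audio engine to convert float to int. If you keep the float mix format instead and convert the samples yourself, each sample is clamped to [-1.0, 1.0] and scaled. The minimal sketch below assumes interleaved 32-bit float input. float_to_int16 is a hypothetical name, and scaling by 32767 (so +1.0 maps to the largest int16) is one common convention among several.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Convert interleaved 32-bit float samples (nominal range [-1.0, 1.0]) to 16-bit PCM.
// Out-of-range values are clamped; scaling by 32767 maps +1.0 to the largest int16.
std::vector<int16_t> float_to_int16(const std::vector<float> &in) {
    std::vector<int16_t> out;
    out.reserve(in.size());
    for (float s : in) {
        if (s > 1.0f) s = 1.0f;
        if (s < -1.0f) s = -1.0f;
        out.push_back(static_cast<int16_t>(s * 32767.0f));   // truncates toward zero
    }
    return out;
}
```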

4) IAudioClient::Initialize

The client calls IAudioClient::Initialize to initialize a stream on the endpoint device.

```cpp
// call IAudioClient::Initialize
// note that AUDCLNT_STREAMFLAGS_LOOPBACK and AUDCLNT_STREAMFLAGS_EVENTCALLBACK
// do not work together...
// the "data ready" event never gets set
// so we're going to do a timer-driven loop
hr = pAudioClient->Initialize(
    AUDCLNT_SHAREMODE_SHARED,
    AUDCLNT_STREAMFLAGS_LOOPBACK,
    0, 0, pwfx, 0
);
```

In Microsoft's official samples:

For capture:

Example 1: capturing from the microphone:

```cpp
HRESULT hr = _AudioClient->Initialize(AUDCLNT_SHAREMODE_SHARED,
    AUDCLNT_STREAMFLAGS_EVENTCALLBACK | AUDCLNT_STREAMFLAGS_NOPERSIST,
    _EngineLatencyInMS*10000, 0, _MixFormat, NULL);
if (FAILED(hr))
{
    printf("Unable to initialize audio client: %x.\n", hr);
    return false;
}
```

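AUDCLNT_STREAMFLAGS_EVENTCALLBACK selects event-driven buffering. Each time a buffer of audio data is ready, the system signals an event whose handle the client has registered with IAudioClient::SetEventHandle. The buffer duration `_EngineLatencyInMS*10000` is in 100-nanosecond units (milliseconds × 10,000).

Example 2: mixing

To capture both the default microphone and what the default render device is playing, the playback side is obtained by initializing the render device with the AUDCLNT_STREAMFLAGS_LOOPBACK flag. The microphone still needs its own ordinary capture stream, and the application mixes the two: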

```cpp
hr = pAudioClient->Initialize(
    AUDCLNT_SHAREMODE_SHARED,
    AUDCLNT_STREAMFLAGS_LOOPBACK,
    hnsRequestedDuration,
    0,
    pwfx,
    NULL);
```

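With AUDCLNT_STREAMFLAGS_LOOPBACK (loopback recording), the audio engine copies the audio stream being played by a rendering device into an audio endpoint buffer so that a WASAPI client can capture the stream.

If AUDCLNT_STREAMFLAGS_LOOPBACK is set, IAudioClient::Initialize attempts to open a capture buffer on the rendering device.

The flag is valid only for a rendering device, and only if Initialize sets the share mode to AUDCLNT_SHAREMODE_SHARED; otherwise Initialize fails.

If Initialize succeeds, the client can call IAudioClient::GetService to obtain an IAudioCaptureClient interface on the rendering device. For more information, see "Loopback Recording" in the Microsoft documentation.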


- For rendering:

```cpp
int _EngineLatencyInMS = 50;
...
HRESULT hr = _AudioClient->Initialize(AUDCLNT_SHAREMODE_SHARED,
    AUDCLNT_STREAMFLAGS_NOPERSIST,
    _EngineLatencyInMS*10000,
    0,
    _MixFormat,
    NULL);
if (FAILED(hr))
{
    printf("Unable to initialize audio client: %x.\n", hr);
    return false;
}
```

5) IAudioClient::GetService

After initializing the stream, the client can obtain references to other WASAPI interfaces by calling IAudioClient::GetService.

```cpp
// activate an IAudioCaptureClient
IAudioCaptureClient *pAudioCaptureClient;
hr = pAudioClient->GetService(
    __uuidof(IAudioCaptureClient),
    (void**)&pAudioCaptureClient
);
```

The same calls as they appear in the Microsoft documentation samples:

- For capture:

```cpp
IAudioCaptureClient *pCaptureClient = NULL;
...
hr = pAudioClient->GetService(
    IID_IAudioCaptureClient,
    (void**)&pCaptureClient);
EXIT_ON_ERROR(hr)
```

- For rendering:

```cpp
IAudioRenderClient *pRenderClient = NULL;
...
hr = pAudioClient->GetService(
    IID_IAudioRenderClient,
    (void**)&pRenderClient);
EXIT_ON_ERROR(hr)
```


6) IAudioClient::Start

Start is a control method that the client calls to start the audio stream.

Starting the stream causes the IAudioClient object to begin streaming data between the endpoint buffer and the audio engine.

It also causes the stream's audio clock to resume counting from its current position.

```cpp
// call IAudioClient::Start
hr = pAudioClient->Start();
if (FAILED(hr)) {
    ERR(L"IAudioClient::Start failed: hr = 0x%08x", hr);
    return hr;
}
```

7) IAudioCaptureClient::GetNextPacketSize

The GetNextPacketSize method retrieves the number of frames in the next data packet in the capture endpoint buffer.

Three points to note:

(1) The unit is audio frames.

(2) It refers to the capture buffer (capture endpoint buffer).

(3) Use this method only with shared-mode streams; it does not work with exclusive-mode streams.

Before calling the IAudioCaptureClient::GetBuffer method to retrieve the next data packet, the client can call GetNextPacketSize to retrieve the number of audio frames in the next packet.

The count reported by GetNextPacketSize matches the count retrieved in the GetBuffer call (through the pNumFramesToRead output parameter) that follows the GetNextPacketSize call.
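Taken together, the capture loop repeats three calls until GetNextPacketSize reports 0. It calls GetBuffer, consumes exactly that many frames, then calls ReleaseBuffer with the same count. ReleaseBuffer(0) instead leaves the packet in place for a later read. The portable model of this cycle below is written against a hypothetical MockCaptureClient, whose method names mirror IAudioCaptureClient but whose signatures are simplified (no HRESULTs, no data pointers). It is not the real interface, only a way to show the call order.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for IAudioCaptureClient: a queue of pending packets,
// each described only by its frame count.
class MockCaptureClient {
public:
    explicit MockCaptureClient(std::deque<uint32_t> packets) : m_packets(std::move(packets)) {}

    // Frames in the next packet, or 0 when nothing is waiting.
    uint32_t GetNextPacketSize() const {
        return m_packets.empty() ? 0 : m_packets.front();
    }

    // Locks the next packet and returns its frame count (pNumFramesToRead).
    uint32_t GetBuffer() {
        assert(!m_packets.empty());
        m_pending = m_packets.front();
        return m_pending;
    }

    // Like the real API, accepts only the full packet size or 0 ("read nothing").
    bool ReleaseBuffer(uint32_t framesRead) {
        if (framesRead != 0 && framesRead != m_pending) return false;
        if (framesRead != 0) m_packets.pop_front();   // packet consumed
        m_pending = 0;
        return true;
    }

private:
    std::deque<uint32_t> m_packets;
    uint32_t m_pending = 0;
};

// Drain every packet currently queued; returns the total number of frames consumed.
uint64_t drain(MockCaptureClient &client, std::vector<uint32_t> &packetSizes) {
    uint64_t total = 0;
    for (uint32_t n = client.GetNextPacketSize(); n > 0; n = client.GetNextPacketSize()) {
        const uint32_t frames = client.GetBuffer();   // equals n, per the documentation
        packetSizes.push_back(frames);                // "process" the packet here
        total += frames;
        client.ReleaseBuffer(frames);                 // release before the next GetBuffer
    }
    return total;
}
```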

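```cpp
UINT32 nNextPacketSize;
for (
    hr = pAudioCaptureClient->GetNextPacketSize(&nNextPacketSize);
    SUCCEEDED(hr) && nNextPacketSize > 0;
    hr = pAudioCaptureClient->GetNextPacketSize(&nNextPacketSize)
) {
    // GetBuffer -> process nNumFramesToRead frames -> ReleaseBuffer(nNumFramesToRead)
    // (the individual calls are covered in the next step)
}
if (FAILED(hr)) {
    ERR(L"IAudioCaptureClient::GetNextPacketSize failed: hr = 0x%08x", hr);
    return hr;
}
```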

8)IAudioCaptureClient::GetBuffer

https://msdn.microsoft.com/en-us/library/windows/desktop/dd370859(v=vs.85).aspx

Retrieves a pointer to the next available packet of data in the capture endpoint buffer.


```cpp
HRESULT GetBuffer(
  [out] BYTE   **ppData,
  [out] UINT32 *pNumFramesToRead,
  [out] DWORD  *pdwFlags,
  [out] UINT64 *pu64DevicePosition,
  [out] UINT64 *pu64QPCPosition
);
```

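ppData: receives the starting address of the next data packet available to read.

pNumFramesToRead: receives the size of the next data packet, in audio frames. The client must either read the whole packet or none of it.

pdwFlags: The method writes either 0 or the bitwise-OR combination of one or more of the following [**_AUDCLNT_BUFFERFLAGS**](https://msdn.microsoft.com/en-us/library/windows/desktop/dd371458(v=vs.85).aspx) enumeration values:

- AUDCLNT_BUFFERFLAGS_SILENT
- AUDCLNT_BUFFERFLAGS_DATA_DISCONTINUITY
- AUDCLNT_BUFFERFLAGS_TIMESTAMP_ERROR

pu64DevicePosition and pu64QPCPosition are optional and may be NULL, as in the code below.

The flags can be used to tell whether a packet is silent.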

```cpp
enum _AUDCLNT_BUFFERFLAGS
{
    AUDCLNT_BUFFERFLAGS_DATA_DISCONTINUITY = 0x1,
    AUDCLNT_BUFFERFLAGS_SILENT             = 0x2,
    AUDCLNT_BUFFERFLAGS_TIMESTAMP_ERROR    = 0x4
};
```

Of these, AUDCLNT_BUFFERFLAGS_SILENT means:

Treat all of the data in the packet as silence and ignore the actual data values.

```cpp
//
// The flags on capture tell us information about the data.
//
// We only really care about the silent flag
// since we want to put frames of silence into the buffer
// when we receive silence.
// We rely on the fact that a logical bit 0 is silence for both float and int formats.
//
if (flags & AUDCLNT_BUFFERFLAGS_SILENT)
{
    //
    // Fill 0s from the capture buffer to the output buffer.
    //
    ZeroMemory(&_CaptureBuffer[_CurrentCaptureIndex], framesToCopy*_FrameSize);
}
else
{
    //
    // Copy data from the audio engine buffer to the output buffer.
    //
    CopyMemory(&_CaptureBuffer[_CurrentCaptureIndex], pData, framesToCopy*_FrameSize);
}
```
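The branch above boils down to this: if the packet carries AUDCLNT_BUFFERFLAGS_SILENT, write framesToCopy × frame-size zero bytes; otherwise copy the bytes as delivered. Below is a portable sketch of the same logic. The flag value is redeclared locally (0x2, as in the enum above), and copy_packet is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

constexpr uint32_t kBufferFlagsSilent = 0x2;   // value of AUDCLNT_BUFFERFLAGS_SILENT

// Append one captured packet to `out`; frameSize is nBlockAlign (bytes per frame).
void copy_packet(std::vector<uint8_t> &out, const uint8_t *data,
                 uint32_t frames, uint32_t frameSize, uint32_t flags) {
    const std::size_t bytes = static_cast<std::size_t>(frames) * frameSize;
    const std::size_t start = out.size();
    out.resize(start + bytes);   // the new bytes are zero-initialized
    if ((flags & kBufferFlagsSilent) == 0 && data != nullptr && bytes > 0) {
        std::memcpy(out.data() + start, data, bytes);   // real samples
    }
    // Silent packet: keep the zeros. Zero is silence for float and signed PCM
    // (16-bit and up); 8-bit PCM is unsigned and centered on 128 instead.
}
```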

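Audio data handled per pass: the number of bytes in a packet is its frame count multiplied by the frame size, nBlockAlign.

nBlockAlign: the size of one frame of the audio stream, in bytes (for PCM, nChannels × wBitsPerSample / 8).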

```cpp
typedef struct tWAVEFORMATEX
{
    WORD    wFormatTag;        /* format type */
    WORD    nChannels;         /* number of channels (i.e. mono, stereo...) */
    DWORD   nSamplesPerSec;    /* sample rate */
    DWORD   nAvgBytesPerSec;   /* for buffer estimation */
    WORD    nBlockAlign;       /* block size of data */
    WORD    wBitsPerSample;    /* number of bits per sample of mono data */
    WORD    cbSize;            /* the count in bytes of the size of */
                               /* extra information (after cbSize) */
} WAVEFORMATEX, *PWAVEFORMATEX, NEAR *NPWAVEFORMATEX, FAR *LPWAVEFORMATEX;
```

```cpp
// get the captured data
BYTE *pData;
UINT32 nNumFramesToRead;
DWORD dwFlags;
hr = pAudioCaptureClient->GetBuffer(
    &pData,
    &nNumFramesToRead,
    &dwFlags,
    NULL,
    NULL
);
if (FAILED(hr)) {
    ERR(L"IAudioCaptureClient::GetBuffer failed on pass %u after %u frames: hr = 0x%08x", nPasses, *pnFrames, hr);
    return hr;
}

LONG lBytesToWrite = nNumFramesToRead * nBlockAlign; // size in bytes of the audio data to process
LONG lBytesWritten = mmioWrite(hFile, reinterpret_cast<PCHAR>(pData), lBytesToWrite);

// hand the packet back to the audio engine before the next GetBuffer
hr = pAudioCaptureClient->ReleaseBuffer(nNumFramesToRead);
```


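Postscript:

After capturing the local audio, I sent it to the remote side. Sending it in 10 ms chunks produced crackling noise; with larger chunks, such as 200 ms, playback sounded choppy.

Also, the audio SDK transmits mono data, so multi-channel audio has to be converted to mono.

The conversion below simply keeps the first channel of each frame and skips the others. That is only lossless when every channel carries the same signal; anything present only in the other channels is discarded.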

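```cpp
#include <cstdint>
#include <string>

// Keep only the first channel of each interleaved 16-bit frame.
// size is the total number of 16-bit samples (all channels together).
std::string MutliChannelSoundToSingleEx(const int16_t *buff, int32_t size, int32_t channels)
{
    std::string sample_data;
    for (int32_t i = 0; i < size && channels > 0; i += channels)
    {
        sample_data.append(reinterpret_cast<const char*>(&buff[i]), sizeof(int16_t));
    }
    return sample_data;
}

std::string MutliChannelSoundToSingle(const std::string &src_data, int32_t channels)
{
    if (channels == 1)
    {
        return src_data;
    }
    else
    {
        const int16_t *buffer = reinterpret_cast<const int16_t*>(src_data.data());
        int32_t size = static_cast<int32_t>(src_data.size() / 2);
        return MutliChannelSoundToSingleEx(buffer, size, channels);
    }
}
```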

```cpp
BYTE *pData;
UINT32 nNumFramesToRead;
DWORD dwFlags;
hr = pAudioCaptureClient->GetBuffer(
    &pData,
    &nNumFramesToRead,
    &dwFlags,
    NULL,
    NULL
);
if (FAILED(hr)) {
    return hr;
}

LONG lBytesToWrite = nNumFramesToRead * nBlockAlign;
if (pAudioCB)
{
    // assumes the mix format was coerced to 16-bit PCM earlier
    std::string pdata(reinterpret_cast<const char*>(pData), lBytesToWrite);
    std::string result_data = MutliChannelSoundToSingle(pdata, pwfx->nChannels);
    pAudioCB(result_data.c_str(), result_data.size(), netImNeedLength, pwfx->nSamplesPerSec);
}

// release the packet whether or not a callback consumed it
hr = pAudioCaptureClient->ReleaseBuffer(nNumFramesToRead);
```
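Keeping only the first channel works when the channels are identical, but it silently drops anything that exists only in the other channels. A common alternative is to average the channels of each frame. The minimal sketch below assumes interleaved 16-bit PCM (the coerced format) held in a std::vector rather than the std::string used above. downmix_average is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Average the channels of each interleaved 16-bit frame into one mono sample.
// A trailing partial frame, if any, is dropped.
std::vector<int16_t> downmix_average(const std::vector<int16_t> &interleaved, int channels) {
    assert(channels > 0);
    const std::size_t ch = static_cast<std::size_t>(channels);
    std::vector<int16_t> mono;
    mono.reserve(interleaved.size() / ch);
    for (std::size_t i = 0; i + ch <= interleaved.size(); i += ch) {
        int32_t sum = 0;   // wide accumulator: no overflow for realistic channel counts
        for (std::size_t c = 0; c < ch; ++c) {
            sum += interleaved[i + c];
        }
        mono.push_back(static_cast<int16_t>(sum / channels));   // truncates toward zero
    }
    return mono;
}
```

Averaging never clips, because the mean of int16 values is itself within the int16 range. The trade-off is that a signal present in only one of two channels comes out 6 dB quieter.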

Addendum:

For PCM audio data on no more than two channels and with 8-bit or 16-bit samples, use the WAVEFORMATEX structure to specify the data format.

The following example shows how to set up a WAVEFORMATEX structure for 11.025 kilohertz (kHz) 8-bit mono and for 44.1 kHz 16-bit stereo. After setting up WAVEFORMATEX, the example calls the IsFormatSupported function to verify that the PCM waveform output device supports the format. The source code for IsFormatSupported is shown in an example in Determining Nonstandard Format Support.

```cpp
UINT wReturn;
WAVEFORMATEX pcmWaveFormat;

// Set up WAVEFORMATEX for 11 kHz 8-bit mono.
pcmWaveFormat.wFormatTag = WAVE_FORMAT_PCM;
pcmWaveFormat.nChannels = 1;
pcmWaveFormat.nSamplesPerSec = 11025L;
pcmWaveFormat.nAvgBytesPerSec = 11025L;
pcmWaveFormat.nBlockAlign = 1;
pcmWaveFormat.wBitsPerSample = 8;
pcmWaveFormat.cbSize = 0;

// See if format is supported by any device in system.
wReturn = IsFormatSupported(&pcmWaveFormat, WAVE_MAPPER);

// Report results.
if (wReturn == 0)
    MessageBox(hMainWnd, "11 kHz 8-bit mono is supported.",
        "", MB_ICONINFORMATION);
else if (wReturn == WAVERR_BADFORMAT)
    MessageBox(hMainWnd, "11 kHz 8-bit mono NOT supported.",
        "", MB_ICONINFORMATION);
else
    MessageBox(hMainWnd, "Error opening waveform device.",
        "Error", MB_ICONEXCLAMATION);

// Set up WAVEFORMATEX for 44.1 kHz 16-bit stereo.
pcmWaveFormat.wFormatTag = WAVE_FORMAT_PCM;
pcmWaveFormat.nChannels = 2;
pcmWaveFormat.nSamplesPerSec = 44100L;
pcmWaveFormat.nAvgBytesPerSec = 176400L;
pcmWaveFormat.nBlockAlign = 4;
pcmWaveFormat.wBitsPerSample = 16;
pcmWaveFormat.cbSize = 0;

// See if format is supported by any device in the system.
wReturn = IsFormatSupported(&pcmWaveFormat, WAVE_MAPPER);

// Report results.
if (wReturn == 0)
    MessageBox(hMainWnd, "44.1 kHz 16-bit stereo is supported.",
        "", MB_ICONINFORMATION);
else if (wReturn == WAVERR_BADFORMAT)
    MessageBox(hMainWnd, "44.1 kHz 16-bit stereo NOT supported.",
        "", MB_ICONINFORMATION);
else
    MessageBox(hMainWnd, "Error opening waveform device.",
        "Error", MB_ICONEXCLAMATION);
```

References:

https://docs.microsoft.com/en-us/windows/desktop/multimedia/using-the-waveformatex-structure

WASAPI 01: capturing audio from the default device

About MMDevice API

Win7 low-level audio system APIs

Core Audio APIs in Vista/Win7: implementation

Core Audio APIs