Android Binder Mechanism: ServiceManager Startup

This article analyzes how ServiceManager starts up, based on the Android 7.0 source code.

The binder driver itself is initialized in the binder_init function.

I. Overview

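ServiceManager is the daemon at the heart of Binder IPC, and it is itself a Binder service. It does not talk to the Binder driver through the multithreaded model in libbinder. Instead, it ships its own binder.c that talks to the driver directly, and it reads and handles transactions in a single loop, binder_loop. This keeps it simple and efficient.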

ServiceManager's own job is fairly simple. It does two main things: it looks up services, and it registers services.

The ServiceManager code lives in the frameworks/native/cmds/servicemanager directory; see the Android.mk file in that directory for the build details. The entry point is the main function in frameworks/native/cmds/servicemanager/service_manager.c.

int main()

{

struct binder_state *bs;

bs = binder_open(128*1024); // 打开binder驱动

if (binder_become_context_manager(bs)) { // 成为上下文管理者

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

binder_loop(bs, svcmgr_handler); // 进入无限循环,处理客户端发来的请求。万分注意svcmgr_handler函数指针

return 0;

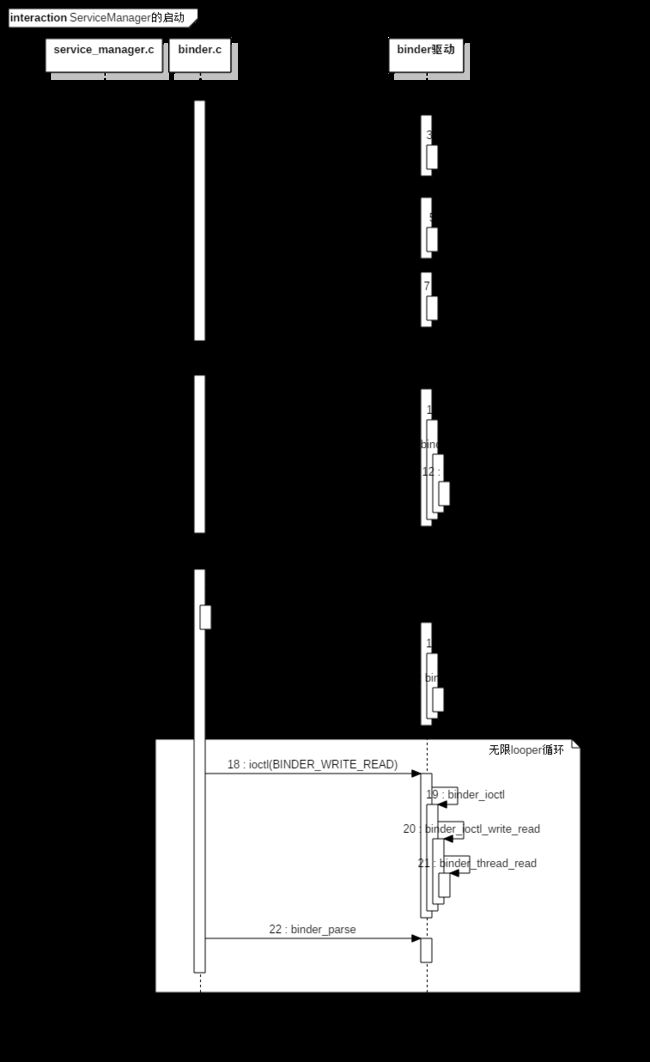

}1. 流程图

2. 时序图

II. Flow Analysis

1. binder_open

[===>frameworks/native/cmds/servicemanager/binder.c]

```c
struct binder_state *binder_open(size_t mapsize)
{
    struct binder_state *bs;
    struct binder_version vers;

    bs = malloc(sizeof(*bs)); // allocate the state structure
    if (!bs) {
        errno = ENOMEM;
        return NULL;
    }

    bs->fd = open("/dev/binder", O_RDWR | O_CLOEXEC); // open the binder device driver
    if (bs->fd < 0) {
        ...
        goto fail_open;
    }

    // query the driver's protocol version via ioctl
    if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||
        (vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {
        ...
        goto fail_open; // note: this path frees bs but does not close bs->fd
    }

    bs->mapsize = mapsize; // mapsize is 128*1024 here
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED) {
        ...
        goto fail_map;
    }

    return bs;

fail_map:
    close(bs->fd);
fail_open:
    free(bs);
    return NULL;
}
```

The function works in four steps:

- malloc allocates the state structure.
- open opens the binder device driver.
- ioctl fetches the binder protocol version.
- mmap maps memory.

Set aside for now how the system calls open, ioctl and mmap work internally. It is enough to know that they end up in the binder driver's binder_open, binder_ioctl and binder_mmap respectively (the driver source is kernel/drivers/staging/android/binder.c).

binder_open returns a binder_state structure. Once it has finished, the structure holds the following:

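| binder_state field | Value | Notes |
|---|---|---|
| int fd | descriptor returned by opening /dev/binder | file descriptor of the /dev/binder device |
| void *mapped | return value of mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0) | start address at which /dev/binder is mapped into the process address space |
| size_t mapsize | 128*1024 | size of that memory mapping |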
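binder_open follows a common C pattern. It acquires resources in order (malloc, open, mmap), and on failure it jumps to labels that release them in reverse order.

The minimal sketch below applies the same pattern to an ordinary file, so it can run without /dev/binder. Some assumptions:

- map_state, map_open and map_close are hypothetical names.
- The three fields simply mirror the binder_state table above.
- The BINDER_VERSION check is left out, because it only makes sense on the binder device.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical stand-in for binder_state: same three fields. */
struct map_state {
    int fd;
    void *mapped;
    size_t mapsize;
};

/* Same shape as binder_open: malloc -> open -> mmap, with goto labels
 * undoing the earlier steps in reverse order when a later step fails.
 * O_RDONLY is used because the mapping is read-only. */
struct map_state *map_open(const char *path, size_t mapsize)
{
    struct map_state *ms = malloc(sizeof(*ms));
    if (!ms) {
        errno = ENOMEM;
        return NULL;
    }

    ms->fd = open(path, O_RDONLY | O_CLOEXEC);
    if (ms->fd < 0)
        goto fail_open;

    ms->mapsize = mapsize;
    ms->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, ms->fd, 0);
    if (ms->mapped == MAP_FAILED)
        goto fail_map;

    return ms;

fail_map:
    close(ms->fd);      /* undo open */
fail_open:
    free(ms);           /* undo malloc */
    return NULL;
}

/* Releases everything map_open acquired. */
void map_close(struct map_state *ms)
{
    munmap(ms->mapped, ms->mapsize);
    close(ms->fd);
    free(ms);
}
```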

2. binder_become_context_manager

[===>frameworks/native/cmds/servicemanager/binder.c]

```c
int binder_become_context_manager(struct binder_state *bs)
{
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

This call makes the process the single context manager for the whole system. User space only passes BINDER_SET_CONTEXT_MGR to the binder driver; the ioctl is then handled by the driver's binder_ioctl.

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    struct binder_proc *proc = filp->private_data;
    struct binder_thread *thread;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    ...
    switch (cmd) {
    ...
    case BINDER_SET_CONTEXT_MGR:
        ret = binder_ioctl_set_ctx_mgr(filp);
        if (ret)
            goto err;
        ret = security_binder_set_context_mgr(proc->tsk);
        if (ret < 0)
            goto err;
        break;
    ...
    }
    ...
}
```

binder_ioctl in turn hands the job of setting the context manager to binder_ioctl_set_ctx_mgr.

```c
static int binder_ioctl_set_ctx_mgr(struct file *filp)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    kuid_t curr_euid = current_euid();

    if (binder_context_mgr_node != NULL) { // a context manager already exists
        pr_err("BINDER_SET_CONTEXT_MGR already set\n");
        ret = -EBUSY;
        goto out;
    }
    ...
    binder_context_mgr_node = binder_new_node(proc, 0, 0);
    if (binder_context_mgr_node == NULL) {
        ret = -ENOMEM;
        goto out;
    }
    binder_context_mgr_node->local_weak_refs++;
    binder_context_mgr_node->local_strong_refs++;
    binder_context_mgr_node->has_strong_ref = 1;
    binder_context_mgr_node->has_weak_ref = 1;
out:
    return ret;
}
```

This creates a binder_node and stores it in the static variable binder_context_mgr_node. Because the function returns -EBUSY whenever binder_context_mgr_node is already set, only one process can ever become the context manager.
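The uniqueness guarantee comes entirely from the check at the top of binder_ioctl_set_ctx_mgr. A user-space toy model of just that logic might look like the sketch below.

The names fake_node, ctx_mgr_node and set_ctx_mgr are hypothetical. The real driver also checks the caller's euid and SELinux permission, and this sketch omits both.

```c
#include <errno.h>
#include <stdlib.h>

/* Hypothetical, heavily simplified stand-in for struct binder_node. */
struct fake_node {
    int owner_pid;
    int local_strong_refs;
    int local_weak_refs;
};

/* Plays the role of the driver's static binder_context_mgr_node. */
static struct fake_node *ctx_mgr_node = NULL;

/* Same shape as binder_ioctl_set_ctx_mgr: the first caller wins,
 * every later caller gets -EBUSY. */
int set_ctx_mgr(int caller_pid)
{
    if (ctx_mgr_node != NULL)
        return -EBUSY;                  /* a context manager already exists */

    ctx_mgr_node = calloc(1, sizeof(*ctx_mgr_node));
    if (ctx_mgr_node == NULL)
        return -ENOMEM;

    ctx_mgr_node->owner_pid = caller_pid;
    ctx_mgr_node->local_strong_refs++;  /* take references, as the driver does */
    ctx_mgr_node->local_weak_refs++;
    return 0;
}
```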

3. binder_loop

[===>frameworks/native/cmds/servicemanager/binder.c]

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    readbuf[0] = BC_ENTER_LOOPER;
    binder_write(bs, readbuf, sizeof(uint32_t)); // tell the driver this thread is entering its loop

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); // read from the binder device, over and over

        if (res < 0) {
            ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
            break;
        }

        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            ALOGE("binder_loop: unexpected reply?!\n");
            break;
        }
        if (res < 0) {
            ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
            break;
        }
    }
}
```

Note that, as main shows, func here is the svcmgr_handler function pointer.

The function begins by declaring a binder_write_read structure, whose fields are:

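| binder_write_read field | Type | Notes |
|---|---|---|
| write_size | binder_size_t | size of the data to write to the binder device |
| write_consumed | binder_size_t | number of bytes of write_buffer the driver has consumed |
| write_buffer | binder_uintptr_t | buffer holding the data to write to the binder device |
| read_size | binder_size_t | capacity of read_buffer, i.e. the most data that can be read |
| read_consumed | binder_size_t | number of bytes the driver actually wrote into read_buffer |
| read_buffer | binder_uintptr_t | buffer that receives the data read from the binder device |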

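binder_write_read acts as the bookkeeping structure for reading from and writing to binder. The two directions are set up as follows:

- **Read only.** Set the "write"-related fields to 0. The driver stores the data it reads in read_buffer and reports how many bytes it wrote in read_consumed; read_size is only the buffer's capacity.
- **Write only.** Set the "read"-related fields to 0. write_buffer and write_size give the data to write and its size.

A single call may also carry both halves. In that case the driver handles the write half before the read half.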
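To make these conventions concrete, here is a minimal sketch of how the two kinds of request are filled in.

- bwr_mirror is a hypothetical local copy of the structure's layout.
- It assumes 64-bit binder_size_t and binder_uintptr_t, the case when BINDER_IPC_32BIT is not defined. Real code should include the kernel's binder UAPI header instead.
- bwr_write_only and bwr_read_only are hypothetical helpers.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical mirror of struct binder_write_read (64-bit field layout assumed). */
struct bwr_mirror {
    uint64_t write_size;
    uint64_t write_consumed;
    uint64_t write_buffer;
    uint64_t read_size;
    uint64_t read_consumed;
    uint64_t read_buffer;
};

/* Write-only request, the way binder_write() builds it. */
void bwr_write_only(struct bwr_mirror *bwr, const void *data, size_t len)
{
    memset(bwr, 0, sizeof(*bwr));                  /* every read-side field is 0 */
    bwr->write_size = len;
    bwr->write_buffer = (uint64_t)(uintptr_t)data;
}

/* Read-only request, the way each binder_loop() iteration builds it. */
void bwr_read_only(struct bwr_mirror *bwr, void *buf, size_t cap)
{
    memset(bwr, 0, sizeof(*bwr));                  /* every write-side field is 0 */
    bwr->read_size = cap;                          /* capacity, not bytes read */
    bwr->read_buffer = (uint64_t)(uintptr_t)buf;
}
```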

3.1 BC_ENTER_LOOPER

```c
readbuf[0] = BC_ENTER_LOOPER;
binder_write(bs, readbuf, sizeof(uint32_t));
```

3.1.1 binder_write

[===>frameworks/native/cmds/servicemanager/binder.c]

```c
int binder_write(struct binder_state *bs, void *data, size_t len)
{
    struct binder_write_read bwr;
    int res;

    bwr.write_size = len;
    bwr.write_consumed = 0;
    bwr.write_buffer = (uintptr_t) data;
    bwr.read_size = 0;        // write only: every "read" field is 0
    bwr.read_consumed = 0;
    bwr.read_buffer = 0;
    res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
    if (res < 0) {
        ...
    }
    return res;
}
```

binder_write fills in a binder_write_read with every "read" field set to 0. It then passes the BC_ENTER_LOOPER command to the binder driver through the BINDER_WRITE_READ ioctl.

3.1.2 binder_ioctl

[===>kernel/drivers/android/binder.c]

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    struct binder_proc *proc = filp->private_data;
    struct binder_thread *thread;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg; // the binder_write_read passed in from user space above
    ...
    thread = binder_get_thread(proc);
    if (thread == NULL) {
        ret = -ENOMEM;
        goto err;
    }

    switch (cmd) {
    case BINDER_WRITE_READ:
        ret = binder_ioctl_write_read(filp, cmd, arg, thread);
        if (ret)
            goto err;
        break;
    ...
    }
    ...
}
```

binder_get_thread looks up, or creates, the binder_thread that describes the calling thread. The argument arg carries the binder_write_read structure from user space. Its "read" part is 0, and its "write" part holds the BC_ENTER_LOOPER command.

3.1.3 binder_ioctl_write_read

[===>kernel/drivers/android/binder.c]

```c
static int binder_ioctl_write_read(struct file *filp,
                unsigned int cmd, unsigned long arg,
                struct binder_thread *thread)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto out;
    }
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { // copy the user-space binder_write_read into kernel space
        ret = -EFAULT;
        goto out;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d write %lld at %016llx, read %lld at %016llx\n",
             proc->pid, thread->pid,
             (u64)bwr.write_size, (u64)bwr.write_buffer,
             (u64)bwr.read_size, (u64)bwr.read_buffer);

    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread,
                      bwr.write_buffer,
                      bwr.write_size,
                      &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    if (bwr.read_size > 0) {
        ...
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
             proc->pid, thread->pid,
             (u64)bwr.write_consumed, (u64)bwr.write_size,
             (u64)bwr.read_consumed, (u64)bwr.read_size);
    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```

After copying binder_write_read in from user space, the driver checks both halves. The "write" part is non-zero and holds BC_ENTER_LOOPER, while the "read" part is 0. So only binder_thread_write is executed.
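The ordering in binder_ioctl_write_read can be modeled in plain C: the write half runs first, the read half second, and the read is skipped if the write fails.

The sketch below makes these simplifications:

- It drops copy_from_user and copy_to_user.
- It replaces binder_thread_write and binder_thread_read with hypothetical counting stubs.
- bwr_mirror, fake_thread_write, fake_thread_read and fake_write_read are all hypothetical names.

So it only shows which branch runs for the two kinds of request binder_loop makes.

```c
#include <stdint.h>

/* Hypothetical mirror of binder_write_read (64-bit layout assumed). */
struct bwr_mirror {
    uint64_t write_size, write_consumed, write_buffer;
    uint64_t read_size, read_consumed, read_buffer;
};

/* Hypothetical stubs standing in for binder_thread_write/_read. */
static int write_calls, read_calls;

static int fake_thread_write(struct bwr_mirror *b)
{
    write_calls++;
    b->write_consumed = b->write_size;  /* pretend every command was consumed */
    return 0;
}

static int fake_thread_read(struct bwr_mirror *b)
{
    read_calls++;
    b->read_consumed = 0;               /* pretend nothing was delivered */
    return 0;
}

/* Same ordering as binder_ioctl_write_read: write half first, then read
 * half; a failed write skips the read and reports read_consumed = 0. */
int fake_write_read(struct bwr_mirror *b)
{
    int ret = 0;

    if (b->write_size > 0) {
        ret = fake_thread_write(b);
        if (ret < 0) {
            b->read_consumed = 0;
            return ret;
        }
    }
    if (b->read_size > 0)
        ret = fake_thread_read(b);
    return ret;
}
```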

3.1.4 binder_thread_write

[===>kernel/drivers/android/binder.c]

```c
static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
{
    uint32_t cmd;
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    while (ptr < end && thread->return_error == BR_OK) {
        if (get_user(cmd, (uint32_t __user *)ptr)) // read the next 32-bit command
            return -EFAULT;
        ptr += sizeof(uint32_t);
        trace_binder_command(cmd);
        if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {
            binder_stats.bc[_IOC_NR(cmd)]++;
            proc->stats.bc[_IOC_NR(cmd)]++;
            thread->stats.bc[_IOC_NR(cmd)]++;
        }
        switch (cmd) {
        ...
        case BC_ENTER_LOOPER:
            binder_debug(BINDER_DEBUG_THREADS,
                     "%d:%d BC_ENTER_LOOPER\n",
                     proc->pid, thread->pid);
            if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {
                thread->looper |= BINDER_LOOPER_STATE_INVALID;
                binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",
                    proc->pid, thread->pid);
            }
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
        ...
        }
        *consumed = ptr - buffer; // record how far the write buffer has been processed
    }
    return 0;
}
```

With the unrelated commands removed and only BC_ENTER_LOOPER left, the logic is simple. It sets the BINDER_LOOPER_STATE_ENTERED bit in the looper state of thread, the binder_thread that describes the calling thread. This records that the thread has entered its looper loop.

If the thread had already registered through BC_REGISTER_LOOPER, two extra things happen: it is also marked BINDER_LOOPER_STATE_INVALID, and an error is logged.
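binder_thread_write treats write_buffer as a stream of 32-bit commands and tracks its progress through *consumed. The toy version below keeps only that walk plus the two looper commands.

It differs from the driver in these ways:

- The command codes and flag values are hypothetical.
- The REGISTER_LOOPER handling is simplified.
- It counts progress in 32-bit words, whereas the driver counts bytes.
- The names fake_thread and fake_thread_write are also hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical command codes and looper flags for this sketch only. */
enum { CMD_REGISTER_LOOPER = 1, CMD_ENTER_LOOPER = 2 };
enum {
    LOOPER_REGISTERED = 0x01,
    LOOPER_ENTERED    = 0x02,
    LOOPER_INVALID    = 0x08,
};

struct fake_thread { uint32_t looper; };

/* Walks a buffer of 32-bit commands like binder_thread_write: read one
 * command, advance, dispatch, then record progress in *consumed. */
int fake_thread_write(struct fake_thread *t, const uint32_t *buf,
                      size_t count, size_t *consumed)
{
    const uint32_t *ptr = buf + *consumed;
    const uint32_t *end = buf + count;

    while (ptr < end) {
        uint32_t cmd = *ptr++;
        switch (cmd) {
        case CMD_REGISTER_LOOPER:
            t->looper |= LOOPER_REGISTERED;
            break;
        case CMD_ENTER_LOOPER:
            if (t->looper & LOOPER_REGISTERED)
                t->looper |= LOOPER_INVALID;   /* ENTER after REGISTER is an error */
            t->looper |= LOOPER_ENTERED;
            break;
        default:
            return -1;                          /* unknown command */
        }
        *consumed = (size_t)(ptr - buf);
    }
    return 0;
}
```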

3.2 BINDER_WRITE_READ

```c
bwr.read_size = sizeof(readbuf);
bwr.read_consumed = 0;
bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
```

This time the "write"-related fields of binder_write_read are 0 and the "read"-related fields are not. The path is largely the same as in 3.1; only the code that actually runs differs.

3.2.1 binder_ioctl

Same as 3.1.2.

3.2.2 binder_ioctl_write_read

[===>kernel/drivers/android/binder.c]

```c
static int binder_ioctl_write_read(struct file *filp,
                unsigned int cmd, unsigned long arg,
                struct binder_thread *thread)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto out;
    }
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { // copy the user-space binder_write_read into kernel space
        ret = -EFAULT;
        goto out;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d write %lld at %016llx, read %lld at %016llx\n",
             proc->pid, thread->pid,
             (u64)bwr.write_size, (u64)bwr.write_buffer,
             (u64)bwr.read_size, (u64)bwr.read_buffer);

    if (bwr.write_size > 0) {
        ...
    }
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer,
                     bwr.read_size,
                     &bwr.read_consumed,
                     filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        if (!list_empty(&proc->todo))
            wake_up_interruptible(&proc->wait);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
             proc->pid, thread->pid,
             (u64)bwr.write_consumed, (u64)bwr.write_size,
             (u64)bwr.read_consumed, (u64)bwr.read_size);
    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```

This time write_size is 0 and read_size is non-zero, so binder_thread_read runs instead of binder_thread_write.

3.2.3 binder_thread_read

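I have not fully worked through this function, but its overall effect is clear.

ServiceManager opened /dev/binder without O_NONBLOCK, so the thread sleeps in binder_thread_read until there is work for it. The return commands are written straight into the user-space read_buffer, and they start with a BR_NOOP.

The number of bytes written is recorded in read_consumed of the kernel's copy of binder_write_read. copy_to_user then copies that structure back to user space.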
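The output side of binder_thread_read can at least be sketched. When nothing has been written yet, it puts a BR_NOOP at the start of the buffer, and it then appends return codes for the pending work.

The model below is simplified in several ways:

- It uses a hypothetical queue (fake_queue), hypothetical code values, and a hypothetical function name (fake_thread_read).
- It omits the payload that follows codes such as BR_TRANSACTION.
- It never sleeps. Where the real driver would block on an empty queue, this sketch simply returns.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical return codes for this sketch only. */
enum { RET_NOOP = 100, RET_TRANSACTION = 101 };

/* Hypothetical pending-work queue standing in for the todo lists. */
struct fake_queue {
    const uint32_t *items;
    size_t count;
    size_t next;
};

/* Simplified output side of binder_thread_read: emit a NOOP first when
 * the buffer is empty, then copy pending codes while they fit, and
 * report the total number of words written through *consumed. */
int fake_thread_read(struct fake_queue *q, uint32_t *buf, size_t cap_words,
                     size_t *consumed)
{
    uint32_t *ptr = buf + *consumed;
    uint32_t *end = buf + cap_words;

    if (*consumed == 0) {
        if (ptr >= end)
            return -1;              /* no room even for the NOOP */
        *ptr++ = RET_NOOP;
    }
    while (q->next < q->count && ptr < end)
        *ptr++ = q->items[q->next++];

    *consumed = (size_t)(ptr - buf);
    return 0;
}
```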

3.3 binder_parse

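[===>frameworks/native/cmds/servicemanager/binder.c]

```c
bwr.read_size = sizeof(readbuf);
bwr.read_consumed = 0;
bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {
    ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
    break;
}

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
```

Once binder_write_read has been copied back from kernel space, the data that was read sits in readbuf, the buffer that read_buffer points to. binder_parse then parses it.

In this scenario the bwr passed to ioctl carries no BC_XXX command, so the ioctl does not return right away. It blocks in binder_thread_read until some client sends ServiceManager a request. When it returns, readbuf contains:

- a leading BR_NOOP, which binder_parse simply skips;
- then the actual work, such as BR_TRANSACTION, for which binder_parse calls func (svcmgr_handler).

If readbuf holds nothing but BR_NOOP, binder_parse does nothing and returns 1, and binder_loop goes round again.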
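Before the full binder_parse source, it may help to see its skeleton in isolation: walk the 32-bit return codes, skip the no-op ones, and hand transactions to a callback. Its return value keeps binder_loop going.

The sketch below makes these assumptions:

- All codes and names (fake_handler, record_code, fake_parse) are hypothetical.
- A transaction code is followed by a single 32-bit value. In the real driver it is followed by a struct binder_transaction_data.
- The real code also sends a reply with binder_send_reply, which this sketch omits.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical return codes for this sketch only. */
enum {
    RET_NOOP = 100,
    RET_TRANSACTION = 101,
    RET_TRANSACTION_COMPLETE = 102,
    RET_REPLY = 103,
};

/* Plays the role of binder_handler (svcmgr_handler in ServiceManager). */
typedef int (*fake_handler)(uint32_t code);

/* Example handler: remembers the last transaction code it saw. */
static uint32_t last_code;
static int record_code(uint32_t code)
{
    last_code = code;
    return 0;
}

/* Returns 1 to keep looping, 0 on a reply, -1 on malformed/unknown input. */
int fake_parse(const uint32_t *ptr, size_t words, fake_handler func)
{
    const uint32_t *end = ptr + words;
    int r = 1;

    while (ptr < end) {
        uint32_t cmd = *ptr++;
        switch (cmd) {
        case RET_NOOP:
        case RET_TRANSACTION_COMPLETE:
            break;                  /* nothing to do */
        case RET_TRANSACTION:
            if (ptr >= end)
                return -1;          /* payload missing */
            if (func)
                func(*ptr);         /* dispatch to the handler */
            ptr++;
            break;
        case RET_REPLY:
            r = 0;                  /* binder_loop treats 0 as unexpected */
            break;
        default:
            return -1;              /* unknown code */
        }
    }
    return r;
}
```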

```c
int binder_parse(struct binder_state *bs, struct binder_io *bio,
                 uintptr_t ptr, size_t size, binder_handler func)
{
    int r = 1;
    uintptr_t end = ptr + (uintptr_t) size;

    while (ptr < end) {
        uint32_t cmd = *(uint32_t *) ptr;
        ptr += sizeof(uint32_t);
#if TRACE
        fprintf(stderr,"%s:\n", cmd_name(cmd));
#endif
        switch(cmd) {
        case BR_NOOP:
            break;
        case BR_TRANSACTION_COMPLETE:
            break;
        case BR_INCREFS:
        case BR_ACQUIRE:
        case BR_RELEASE:
        case BR_DECREFS:
#if TRACE
            fprintf(stderr,"  %p, %p\n", (void *)ptr, (void *)(ptr + sizeof(void *)));
#endif
            ptr += sizeof(struct binder_ptr_cookie);
            break;
        case BR_TRANSACTION: { // incoming request: hand it to func (svcmgr_handler), then reply
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            if ((end - ptr) < sizeof(*txn)) {
                ALOGE("parse: txn too small!\n");
                return -1;
            }
            binder_dump_txn(txn);
            if (func) {
                unsigned rdata[256/4];
                struct binder_io msg;
                struct binder_io reply;
                int res;

                bio_init(&reply, rdata, sizeof(rdata), 4);
                bio_init_from_txn(&msg, txn);
                res = func(bs, txn, &msg, &reply);
                binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);
            }
            ptr += sizeof(*txn);
            break;
        }
        case BR_REPLY: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            if ((end - ptr) < sizeof(*txn)) {
                ALOGE("parse: reply too small!\n");
                return -1;
            }
            binder_dump_txn(txn);
            if (bio) {
                bio_init_from_txn(bio, txn);
                bio = 0;
            } else {
                /* todo FREE BUFFER */
            }
            ptr += sizeof(*txn);
            r = 0;
            break;
        }
        case BR_DEAD_BINDER: {
            struct binder_death *death = (struct binder_death *)(uintptr_t) *(binder_uintptr_t *)ptr;
            ptr += sizeof(binder_uintptr_t);
            death->func(bs, death->ptr);
            break;
        }
        case BR_FAILED_REPLY:
            r = -1;
            break;
        case BR_DEAD_REPLY:
            r = -1;
            break;
        default:
            ALOGE("parse: OOPS %d\n", cmd);
            return -1;
        }
    }

    return r;
}
```

Back to binder_loop:

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    readbuf[0] = BC_ENTER_LOOPER;
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
        ...
        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        ...
    }
}
```

binder_loop reads data from the binder driver in an endless loop and parses it. When the parsed data calls for it, svcmgr_handler is invoked; according to binder_parse above, that happens when a BR_TRANSACTION arrives. Which concrete requests lead there will be analyzed with real use cases in later Binder articles. For reference, here is svcmgr_handler:

```c
int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;

    //ALOGI("target=%p code=%d pid=%d uid=%d\n",
    //      (void*) txn->target.ptr, txn->code, txn->sender_pid, txn->sender_euid);

    if (txn->target.ptr != BINDER_SERVICE_MANAGER)
        return -1;

    if (txn->code == PING_TRANSACTION)
        return 0;

    // Equivalent to Parcel::enforceInterface(), reading the RPC
    // header with the strict mode policy mask and the interface name.
    // Note that we ignore the strict_policy and don't propagate it
    // further (since we do no outbound RPCs anyway).
    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }

    if ((len != (sizeof(svcmgr_id) / 2)) ||
        memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
        fprintf(stderr,"invalid id %s\n", str8(s, len));
        return -1;
    }

    if (sehandle && selinux_status_updated() > 0) {
        struct selabel_handle *tmp_sehandle = selinux_android_service_context_handle();
        if (tmp_sehandle) {
            selabel_close(sehandle);
            sehandle = tmp_sehandle;
        }
    }

    switch(txn->code) {
    case SVC_MGR_GET_SERVICE:   // look up a service by name
    case SVC_MGR_CHECK_SERVICE:
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        handle = do_find_service(bs, s, len, txn->sender_euid, txn->sender_pid);
        if (!handle)
            break;
        bio_put_ref(reply, handle);
        return 0;

    case SVC_MGR_ADD_SERVICE:   // register a service under a name
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        handle = bio_get_ref(msg);
        allow_isolated = bio_get_uint32(msg) ? 1 : 0;
        if (do_add_service(bs, s, len, handle, txn->sender_euid,
            allow_isolated, txn->sender_pid))
            return -1;
        break;

    case SVC_MGR_LIST_SERVICES: {   // return the n-th registered service name
        uint32_t n = bio_get_uint32(msg);

        if (!svc_can_list(txn->sender_pid)) {
            ALOGE("list_service() uid=%d - PERMISSION DENIED\n",
                    txn->sender_euid);
            return -1;
        }
        si = svclist;
        while ((n-- > 0) && si)
            si = si->next;
        if (si) {
            bio_put_string16(reply, si->name);
            return 0;
        }
        return -1;
    }
    default:
        ALOGE("unknown code %d\n", txn->code);
        return -1;
    }

    bio_put_uint32(reply, 0);
    return 0;
}
```
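svcmgr_handler delegates the two core jobs from the overview, registration and lookup, to do_add_service and do_find_service. Neither function is shown in this article. The real code keeps its services in the svclist list of struct svcinfo, as the SVC_MGR_LIST_SERVICES case shows.

As a rough illustration only, here is a minimal name-to-handle registry kept in a singly linked list. It makes these assumptions:

- All fake_* names are hypothetical.
- Names are plain C strings instead of UTF-16.
- Permission checks, SELinux checks and death notifications are omitted.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Simplified, hypothetical stand-in for struct svcinfo. */
struct fake_svcinfo {
    struct fake_svcinfo *next;
    uint32_t handle;
    char *name;
};

static struct fake_svcinfo *fake_svclist = NULL;

static struct fake_svcinfo *find_svc(const char *name)
{
    for (struct fake_svcinfo *si = fake_svclist; si; si = si->next)
        if (strcmp(si->name, name) == 0)
            return si;
    return NULL;
}

/* Lookup, in the spirit of do_find_service: handle, or 0 if unknown. */
uint32_t fake_find_service(const char *name)
{
    struct fake_svcinfo *si = find_svc(name);
    return si ? si->handle : 0;
}

/* Registration, in the spirit of do_add_service: re-registering a name
 * replaces its handle; otherwise a new entry goes to the list head. */
int fake_add_service(const char *name, uint32_t handle)
{
    if (!handle || !name || !*name)
        return -1;

    struct fake_svcinfo *si = find_svc(name);
    if (si) {
        si->handle = handle;
        return 0;
    }
    si = malloc(sizeof(*si));
    if (!si)
        return -1;
    si->name = malloc(strlen(name) + 1);
    if (!si->name) {
        free(si);
        return -1;
    }
    strcpy(si->name, name);
    si->handle = handle;
    si->next = fake_svclist;
    fake_svclist = si;
    return 0;
}
```

A handle of 0 is rejected here because 0 is also used as the "not found" result, mirroring how svcmgr_handler treats a zero handle from do_find_service.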