Using Kafka in a Spring Boot Project


Step 1: Install the Kafka server

For details, see: https://blog.csdn.net/weixin_40990818/article/details/107793976

Step 2: Create the Kafka producer

a. Add the dependency (no <version> is needed when the project uses Spring Boot's dependency management)

<!-- Kafka dependency -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

b. spring-kafka-producer.xml: configure the producer in XML

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans.xsd">

<!-- Producer parameters -->

<bean id="producerProperties" class="java.util.HashMap">

<constructor-arg>

<map>

<entry key="bootstrap.servers" value="127.0.0.1:9092"/>

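<!-- acks: the number of replicas that must acknowledge a write. all (or -1) means every in-sync replica of the partition must respond: the highest reliability, but lower throughput;

1 means only the partition leader must respond;

0 means the producer does not wait for any response from the broker;

1 is recommended for most business scenarios, 0 for risk-control or security scenarios -->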

<entry key="acks" value="1"/>

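<!-- retries: number of retries. If retries are enabled, make sure the consumer side is idempotent at the business level, because a retried send can produce a duplicate message. Configure according to business needs -->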

<entry key="retries" value="1"/>

<!-- Timeout for a send request, in ms; the standard value is 2000 -->

<entry key="request.timeout.ms" value="2000"/>

<!-- Maximum time send() may block when the producer buffer is full or metadata cannot be fetched, in ms; the standard value is 2000 -->

<entry key="max.block.ms" value="2000"/>

<entry key="key.serializer" value="org.apache.kafka.common.serialization.StringSerializer"/>

<entry key="value.serializer" value="org.apache.kafka.common.serialization.StringSerializer"/>

</map>

</constructor-arg>

</bean>

<!-- producerFactory bean used to create the kafkaTemplate -->

<bean id="defaultKafkaProducerFactory" class="org.springframework.kafka.core.DefaultKafkaProducerFactory">

<constructor-arg ref="producerProperties"/>

</bean>

<!-- kafkaTemplate used to send messages -->

<bean id="kafkaTemplate" class="org.springframework.kafka.core.KafkaTemplate">

<constructor-arg ref="defaultKafkaProducerFactory"/>

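<!-- autoFlush=true makes the template flush after every send, so each send effectively waits for Kafka: more reliable but lower throughput. Use true for core scenarios; false is the usual setting -->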

<constructor-arg name="autoFlush" value="false"/>

</bean>

</beans>
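If you prefer Java configuration to XML, the producer parameters above can be assembled as a plain Map. The sketch below uses only the JDK, so it runs without a broker; the class and method names (ProducerPropsSketch, producerProperties) are hypothetical. In a Spring project, the returned map could be passed to new DefaultKafkaProducerFactory<>(map), and that factory to new KafkaTemplate<>(factory, false), mirroring the two beans defined in the XML.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical helper mirroring the producerProperties bean in spring-kafka-producer.xml.
// Uses only the JDK; the keys are the standard Kafka producer config names.
final class ProducerPropsSketch {

    // Values documented for the producer "acks" setting
    private static final Set<String> VALID_ACKS = Set.of("all", "-1", "0", "1");

    private ProducerPropsSketch() {
    }

    static Map<String, Object> producerProperties(String bootstrapServers, String acks) {
        if (!VALID_ACKS.contains(acks)) {
            throw new IllegalArgumentException("unsupported acks value: " + acks);
        }
        Map<String, Object> props = new HashMap<>();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("acks", acks);
        props.put("retries", 1);               // retry once; consumers must then be idempotent
        props.put("request.timeout.ms", 2000); // timeout for a single send request
        props.put("max.block.ms", 2000);       // max time send() may block on a full buffer or missing metadata
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return Collections.unmodifiableMap(props);
    }
}
```

Rejecting an unknown acks value early turns a typo into an immediate error at startup instead of a configuration exception later.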

c. Import spring-kafka-producer.xml in the application's startup class

@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})

@ImportResource("classpath:spring-kafka-producer.xml")

public class UsermanageAPP extends SpringBootServletInitializer {

private static Logger logger= LogManager.getLogger(LogManager.ROOT_LOGGER_NAME);

@Override

protected SpringApplicationBuilder configure(SpringApplicationBuilder application) {

return application.sources(UsermanageAPP.class);

}

/**

* Application entry point

* @param args

*/

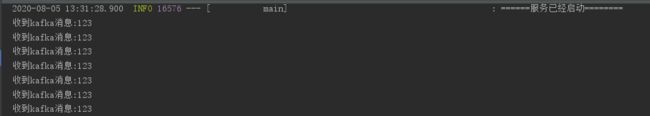

public static void main(String[] args) {

SpringApplication.run(UsermanageAPP.class, args);

logger.info("======服务已经启动========");

}

}

d. Send messages with kafkaTemplate

@RestController

public class UserManageController {

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

public static final String TOPIC_NAME = "my-replicated-topic";

@RequestMapping("/hello")

public void test(){

kafkaTemplate.send(TOPIC_NAME, "123");

}

}
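kafkaTemplate.send(...) is asynchronous: it returns a future (a ListenableFuture in spring-kafka 2.x, a CompletableFuture from 3.0), and the controller above discards it, so a failed send goes unnoticed. The JDK-only sketch below shows the two usual ways of handling such a future: blocking with a timeout, or attaching a callback. fakeSend is a hypothetical stand-in for KafkaTemplate.send that completes with a made-up offset instead of a real SendResult, so the patterns can run without a broker.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class SendResultSketch {

    // Hypothetical stand-in for KafkaTemplate.send(): succeeds with a fake offset
    // when brokerUp is true, otherwise fails. The value itself is ignored here.
    static CompletableFuture<Long> fakeSend(String value, boolean brokerUp) {
        if (brokerUp) {
            return CompletableFuture.completedFuture(42L);
        }
        CompletableFuture<Long> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalStateException("broker unavailable"));
        return failed;
    }

    // Pattern 1: block until the send completes or the timeout expires.
    static String sendSync(String value, boolean brokerUp) {
        try {
            long offset = fakeSend(value, brokerUp).get(2, TimeUnit.SECONDS);
            return "sent at offset " + offset;
        } catch (ExecutionException e) {
            return "send failed: " + e.getCause().getMessage();
        } catch (TimeoutException e) {
            return "send timed out";
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt flag
            return "interrupted";
        }
    }

    // Pattern 2: register a callback and return without blocking.
    static CompletableFuture<String> sendAsync(String value, boolean brokerUp) {
        return fakeSend(value, brokerUp).handle((offset, ex) ->
                ex == null ? "sent at offset " + offset : "send failed: " + ex.getMessage());
    }
}
```

Blocking with get(...) is the simplest option in a request handler such as /hello, at the cost of holding the request thread; the callback style keeps the request non-blocking but reports failures only in logs or metrics.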

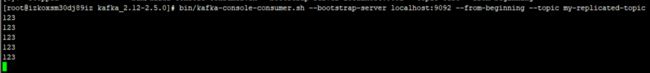

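e. Visit http://127.0.0.1:8080/hello, then check in Kafka that the message arrived on topic my-replicated-topic (for example with the kafka-console-consumer.sh tool shipped with Kafka)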

Step 3: Create the Kafka consumer

a. Add the dependency (the same one as for the producer)

<!-- Kafka dependency -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

b. spring-kafka-consumer.xml: configure the consumer in XML

groupId: a consumer group is Kafka's scalable, fault-tolerant mechanism for consuming messages. A group can contain multiple consumers (consumer instances) that share a common ID, the group ID.

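All consumers in the group work together to consume all partitions of the subscribed topics. Within one group, each partition is consumed by only one consumer at a time, so the messages of a partition are not handed to two consumers of the same group. This does not rule out duplicates entirely: after a consumer failure or a rebalance, messages whose offsets were not yet committed are delivered again.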
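To make the "one consumer per partition" rule concrete, the JDK-only sketch below spreads a topic's partitions across the consumers of one group in round-robin order. It is a simplification, not Kafka's own assignment algorithm (Kafka ships several assignors, such as range and cooperative-sticky), but it keeps the same invariant: every partition has exactly one owner in the group. The class name GroupAssignmentSketch is hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified round-robin assignment of partitions to the consumers of one group.
// Each partition is given to exactly one consumer; a consumer may get none.
final class GroupAssignmentSketch {

    static Map<String, List<Integer>> assign(List<String> consumers, int partitions) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("a group needs at least one consumer");
        }
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        for (String consumer : consumers) {
            result.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            String owner = consumers.get(p % consumers.size()); // single owner per partition
            result.get(owner).add(p);
        }
        return result;
    }
}
```

With three consumers and a two-partition topic, the third consumer receives no partition at all, which is why adding consumers beyond the partition count does not increase throughput.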

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:context="http://www.springframework.org/schema/context"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/context

http://www.springframework.org/schema/context/spring-context.xsd">

<!-- Consumer parameters -->

<bean id="consumerProperties" class="java.util.HashMap">

<constructor-arg>

<map>

<entry key="bootstrap.servers" value="127.0.0.1:9092" />

<!-- Disable Kafka's auto-commit; the Spring container commits offsets according to ackMode -->

<entry key="enable.auto.commit" value="false" />

<entry key="key.deserializer" value="org.apache.kafka.common.serialization.StringDeserializer" />

<entry key="value.deserializer" value="org.apache.kafka.common.serialization.StringDeserializer" />

</map>

</constructor-arg>

</bean>

<!-- consumerFactory bean -->

<bean id="consumerFactory"

class="org.springframework.kafka.core.DefaultKafkaConsumerFactory">

<constructor-arg ref="consumerProperties"/>

</bean>

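<!-- Payment acquiring (收单) is used as the example here -->

<!-- start: acquiring -->

<!-- Message listener: the class that actually consumes the messages (acquiring) -->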

<bean id="tradeprodMessageListener" class="com.luo.nacos.kafka.TradeprodMessageListener" />

<!-- Listener container properties (acquiring) -->

<bean id="tradeprodProperties" class="org.springframework.kafka.listener.ContainerProperties">

<constructor-arg value="my-replicated-topic" />

<!-- Commit the offset after each record has been processed by the listener; the most reliable mode -->

<property name="ackMode" value="RECORD" />

<property name="groupId" value="my-replicated-topic" />

<!-- Message listener -->

<property name="messageListener" ref="tradeprodMessageListener" />

</bean>

<!-- Listener container -->

<bean id="tradeprodListenerContainer" class="org.springframework.kafka.listener.ConcurrentMessageListenerContainer"

init-method="doStart">

<constructor-arg ref="consumerFactory" />

<constructor-arg ref="tradeprodProperties" />

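<!-- Multi-threaded consumption: 3 consumers in the group; threads beyond the topic's partition count stay idle -->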

<property name="concurrency" value="3" />

</bean>

<!-- end: acquiring -->

</beans>
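The ackMode setting decides when the container commits offsets. The JDK-only model below compares RECORD (commit after every record, as configured above) with BATCH (commit once all records returned by a poll() have been processed), counting how many already-processed records would be delivered again if the consumer crashed. It assumes processing starts at offset 0, records are handled in order, and the crash happens between records after any pending commit; AckModeSketch and its methods are hypothetical. It relies on one Kafka convention: the committed offset is the offset of the next record to read.

```java
// Simplified model of redelivery after a consumer crash for two ack modes.
final class AckModeSketch {

    enum Mode { RECORD, BATCH }

    // Offset the group would resume from after processing `processed` records.
    static long committedOffset(Mode mode, long processed, int batchSize) {
        switch (mode) {
            case RECORD:
                return processed;                           // committed after every record
            case BATCH:
                return (processed / batchSize) * batchSize; // committed only after full poll batches
            default:
                throw new IllegalArgumentException("unknown mode " + mode);
        }
    }

    // Records already processed that will be delivered again after the crash.
    static long redelivered(Mode mode, long processed, int batchSize) {
        return processed - committedOffset(mode, processed, batchSize);
    }
}
```

With 250 records processed and 100 records per poll, RECORD redelivers nothing while BATCH redelivers the 50 records of the unfinished batch; the price of RECORD is one commit request per record.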

c. Import spring-kafka-consumer.xml in the startup class

@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})

@ImportResource("classpath:spring-kafka-consumer.xml")

public class NacosApp extends SpringBootServletInitializer {

private static Logger logger= LogManager.getLogger(LogManager.ROOT_LOGGER_NAME);

@Override

protected SpringApplicationBuilder configure(SpringApplicationBuilder application) {

return application.sources(NacosApp.class);

}

/**

* Application entry point

* @param args

*/

public static void main(String[] args) {

SpringApplication.run(NacosApp.class, args);

logger.info("====== Service started ======");

}

}

d. TradeprodMessageListener: the class that consumes messages implements the MessageListener interface and overrides the onMessage method

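package com.luo.nacos.kafka;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.kafka.listener.MessageListener;

public class TradeprodMessageListener implements MessageListener<String, String> {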

@Override

public void onMessage(ConsumerRecord<String, String> record) {

try {

if (record != null && record.value() != null) {

System.out.println("Received Kafka message: " + record.value());

}

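} catch (Exception e) {

// The exception is swallowed, so with ackMode=RECORD the offset is still committed and this record will not be redelivered

System.out.println("Failed to consume Kafka message, cause: " + e.getMessage());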

}

}

}
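Because producer retries and consumer crashes can both deliver a message twice, the onMessage logic is safer when it is idempotent. The JDK-only sketch below skips messages whose business id has already been processed. IdempotentHandler and its parameters are hypothetical; the id should come from the message content (for example an order number), not from the record offset, because a duplicate caused by a producer retry is written at a new offset. The in-memory LRU set only catches duplicates that arrive close together and is lost on restart, so production code would usually record processed ids in a database, for example behind a unique constraint. handle is synchronized because, with concurrency=3, the single listener bean configured above is shared by the container's consumer threads.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical idempotent wrapper around the business logic of onMessage.
// Remembers the ids of recently processed messages and skips repeats.
final class IdempotentHandler {

    private final Map<String, Boolean> processedIds;
    private final Consumer<String> businessLogic;

    IdempotentHandler(int capacity, Consumer<String> businessLogic) {
        this.businessLogic = businessLogic;
        // Access-ordered LinkedHashMap that evicts the least recently used id beyond capacity
        this.processedIds = new LinkedHashMap<String, Boolean>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Boolean> eldest) {
                return size() > capacity;
            }
        };
    }

    /** Runs the business logic once per id; returns false for a duplicate. */
    synchronized boolean handle(String messageId, String payload) {
        if (processedIds.containsKey(messageId)) {
            return false; // already processed: skip the duplicate
        }
        businessLogic.accept(payload);             // if this throws, the id is not recorded
        processedIds.put(messageId, Boolean.TRUE); // mark only after successful processing
        return true;
    }
}
```

Inside onMessage, the listener would extract the business id from record.value() (or a header) and call handle(id, record.value()) instead of processing the record directly.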