Adding a NODE to a K8S Cluster: A Simple Walkthrough

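Prerequisite: this assumes a K8S cluster that is already deployed. The deployment itself is roughly as described in an earlier post of mine: https://blog.csdn.net/lsysafe/article/details/85851376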

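Before starting, set up name resolution (/etc/hosts and the like), and preferably also SSH key authentication between this node and each MASTER node.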

Components to deploy on the NODE:

kubectl command-line tool (optional)

flannel network

docker

kubelet

kube-proxy

Components the NODE does not need: kube-apiserver, kube-scheduler, kube-controller-manager
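Because the node only runs the components listed above, a quick pre-flight check can confirm the binaries are in place before any service is started. This is a minimal sketch, assuming everything is copied into /opt/k8s/bin as in this post; check_node_binaries is a hypothetical helper name, not part of any tool:

```shell
#!/bin/bash
# Hypothetical pre-flight helper: check that the node-side binaries are present and
# executable. Assumes they were copied into /opt/k8s/bin; pass another directory to override.
check_node_binaries() {
    local bindir="${1:-/opt/k8s/bin}"
    local rc=0 b
    for b in flanneld mk-docker-opts.sh dockerd kubelet kube-proxy; do
        if [ ! -x "$bindir/$b" ]; then
            echo "missing: $b"
            rc=1
        fi
    done
    # Control-plane binaries are not needed on a node; only point them out.
    for b in kube-apiserver kube-scheduler kube-controller-manager; do
        if [ -e "$bindir/$b" ]; then
            echo "not needed on a node: $b"
        fi
    done
    return "$rc"
}

# Usage: check_node_binaries || echo "copy the missing binaries from the MASTER first"
```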

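1. Initial setup: stop the firewall, disable SELinux, turn off swap, configure iptables, create the required directories, tune kernel parameters, install the necessary packages, and so on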

[root@k8s3 75]# cat stopfirewall addk8suser adddockeruser1 path2.sh yum3.sh iptables4.sh swap5.sh mod6.sh mk7.sh cfssl8.sh

#!/bin/bash

systemctl stop firewalld

chkconfig firewalld off

setenforce 0

sed -i s#SELINUX=enforcing#SELINUX=disabled# /etc/selinux/config

#!/bin/bash

useradd -m k8s

sh -c 'echo 123456 | passwd k8s --stdin'

gpasswd -a k8s wheel

#!/bin/bash

useradd -m docker

gpasswd -a k8s docker

mkdir -p /etc/docker/

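# Put the desired daemon.json settings between the two EOF markers (the original contents are not shown here)
cat > /etc/docker/daemon.json <<EOF

EOF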

#!/bin/bash

sh -c "echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>/root/.bashrc"

echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>~/.bashrc

#!/bin/bash

yum install -y epel-release

yum install -y conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

#!/bin/bash

iptables -F

iptables -X

iptables -F -t nat

iptables -X -t nat

iptables -P FORWARD ACCEPT

#!/bin/bash

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

modprobe br_netfilter

modprobe ip_vs

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

mount -t cgroup -o cpu,cpuacct none /sys/fs/cgroup/cpu,cpuacct

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

ntpdate cn.pool.ntp.org

#!/bin/bash

mkdir -p /opt/k8s/bin

chown -R k8s /opt/k8s

mkdir -p /etc/kubernetes/cert

chown -R k8s /etc/kubernetes

mkdir -p /etc/etcd/cert

chown -R k8s /etc/etcd/cert

mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd

#!/bin/bash

mkdir -p /opt/k8s/cert

chown -R k8s /opt/k8s

cd /opt/k8s

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 /opt/k8s/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 /opt/k8s/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /opt/k8s/bin/cfssl-certinfo

chmod +x /opt/k8s/bin/*

export PATH=/opt/k8s/bin:$PATH

Run each of these scripts once; if one of them reports an error, investigate and handle it case by case.
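The scripts can also be run in one go, stopping at the first one that fails. This is a minimal sketch; run_init_scripts is a hypothetical helper and the script names are the ones listed above. Since these scripts do not use set -e, "bash script" only reports the status of each script's last command, so errors in the middle of a script still have to be read from its output:

```shell
#!/bin/bash
# Hypothetical helper: run the init scripts in the given order and stop at the
# first script whose exit status is non-zero.
run_init_scripts() {
    local dir="$1" s
    shift
    for s in "$@"; do
        echo ">>> $s"
        if ! bash "$dir/$s"; then
            echo "failed: $s" >&2
            return 1
        fi
    done
}

# Usage (as root, in the directory holding the scripts; addk8suser must run before
# adddockeruser1 and mk7.sh, which refer to the k8s user):
# run_init_scripts . stopfirewall addk8suser adddockeruser1 path2.sh yum3.sh \
#     iptables4.sh swap5.sh mod6.sh mk7.sh cfssl8.sh
```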

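Then copy the MASTER's certificates to the same path on the node, /etc/kubernetes/cert/*; some of them are needed later (the flanneld unit below, for example, reads /etc/kubernetes/cert/ca.pem).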
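The copy can be scripted over the SSH trust set up at the start. This is a minimal sketch; copy_certs_from_master is a hypothetical helper, the SCP_CMD override is an assumption added for dry runs, and k8s1 in the usage line is one of the MASTER nodes in this post:

```shell
#!/bin/bash
# Hypothetical helper: copy the MASTER's certificate directory to the same path on this node.
# Relies on root SSH key trust to the MASTER; SCP_CMD may be overridden (e.g. SCP_CMD=echo).
copy_certs_from_master() {
    local master="$1"
    local dir="${2:-/etc/kubernetes/cert}"
    mkdir -p "$dir" || return 1
    # The remote glob is quoted so it is expanded on the MASTER, not locally.
    ${SCP_CMD:-scp -p} "root@${master}:${dir}/*" "$dir/"
}

# Usage:
# copy_certs_from_master k8s1 && chown -R k8s /etc/kubernetes/cert
```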

2. Deploy the kubectl command-line tool on the NODE (optional)

Copy the /opt/k8s/bin/kubectl binary from the MASTER, then copy ~/.kube/config as well:

[root@m1 ~]# ll /opt/k8s/bin/kubectl

-rwxr-xr-x 1 root root 54308597 Jun 12 15:24 /opt/k8s/bin/kubectl

[root@m1 ~]# ll /root/.kube/config

-rwxr-xr-x 1 root root 6286 Jun 12 15:36 /root/.kube/config

This simply reuses the way the K8S API was accessed when the cluster was deployed.

Once that is in place, kubectl can reach the API:

[root@m1 ~]# kubectl get namespace

NAME STATUS AGE

default Active 70d

ingress-nginx Active 46d

kube-public Active 70d

kube-system Active 70d

[root@m1 ~]#

3. Deploy the flannel network

Copy the binaries and certificates from the MASTER:

/opt/k8s/bin/flanneld and /opt/k8s/bin/mk-docker-opts.sh (both are used by the unit file below)

/etc/flanneld/cert/flanneld*.pem

source /opt/k8s/bin/environment.sh (if the file does not exist, copy it from the MASTER as well)

The flanneld.service file is as follows:

[root@m1 ~]# cat /etc/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/opt/k8s/bin/flanneld \

-etcd-cafile=/etc/kubernetes/cert/ca.pem \

-etcd-certfile=/etc/flanneld/cert/flanneld.pem \

-etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \

-etcd-endpoints=https://192.168.137.71:2379,https://192.168.137.72:2379,https://192.168.137.73:2379 \

-etcd-prefix=/kubernetes/network \

-iface=eno16777736

# Set -iface to this node's actual NIC name; systemd only treats whole lines starting with # as comments

ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

Start the service:

[root@m1 flannel]# systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /etc/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.requires/flanneld.service to /etc/systemd/system/flanneld.service.

Make sure you can ping the flannel interface IPs of the other nodes:

[root@m1 ~]# ping 172.30.46.0

PING 172.30.46.0 (172.30.46.0) 56(84) bytes of data.

64 bytes from 172.30.46.0: icmp_seq=1 ttl=64 time=0.485 ms

64 bytes from 172.30.46.0: icmp_seq=2 ttl=64 time=0.184 ms

64 bytes from 172.30.46.0: icmp_seq=3 ttl=64 time=0.304 ms
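To check every peer at once instead of one ping at a time, a small loop helps. This is a minimal sketch; check_flannel_peers and the PING_CMD override are hypothetical, and the peer list is whatever flannel addresses your other nodes have (172.30.46.0 above is one of them):

```shell
#!/bin/bash
# Hypothetical helper: ping each peer flannel IP once and report which ones fail.
# PING_CMD may be overridden; by default one packet with a 2-second timeout is sent.
check_flannel_peers() {
    local failed=0 ip
    for ip in "$@"; do
        if ${PING_CMD:-ping -c 1 -W 2} "$ip" >/dev/null 2>&1; then
            echo "ok: $ip"
        else
            echo "FAILED: $ip"
            failed=1
        fi
    done
    return "$failed"
}

# Usage: check_flannel_peers 172.30.46.0 <flannel IP of another node>
```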

4. Deploy Docker

yum install -y epel-release

yum install -y conntrack ipvsadm ipset jq iptables curl sysstat libseccomp && /usr/sbin/modprobe ip_vs

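Copy the docker* binaries from the MASTER's /opt/k8s/bin/docker/ into /opt/k8s/bin/ on the node (the unit file below starts /opt/k8s/bin/dockerd)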

Copy this unit file as well:

[root@m1 ~]# cat /etc/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/opt/k8s/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target
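The link between flannel and Docker is the EnvironmentFile line: flanneld's ExecStartPost runs mk-docker-opts.sh, which writes DOCKER_NETWORK_OPTIONS to /run/flannel/docker, and dockerd is started with $DOCKER_NETWORK_OPTIONS. This is a minimal sketch that pulls the --bip value out of that file so it can be compared with docker0; it assumes the file holds a DOCKER_NETWORK_OPTIONS="..." line containing --bip=..., and flannel_docker_bip is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: print the --bip value flannel passes to dockerd.
# Assumes /run/flannel/docker defines DOCKER_NETWORK_OPTIONS (written by mk-docker-opts.sh).
flannel_docker_bip() {
    local file="${1:-/run/flannel/docker}"
    local opts word
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 1
    fi
    # Source the file in a subshell so its variables do not leak into the caller.
    opts=$( . "$file"; printf '%s' "$DOCKER_NETWORK_OPTIONS" )
    for word in $opts; do
        case "$word" in
            --bip=*) echo "${word#--bip=}"; return 0 ;;
        esac
    done
    echo "no --bip in DOCKER_NETWORK_OPTIONS" >&2
    return 1
}

# Usage: flannel_docker_bip, then compare with: ip -4 addr show docker0
```

After docker restarts, docker0 should carry the address printed here, since --bip sets the bridge IP.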

Start the service:

systemctl stop firewalld && systemctl disable firewalld

/usr/sbin/iptables -F && /usr/sbin/iptables -X && /usr/sbin/iptables -F -t nat && /usr/sbin/iptables -X -t nat

/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload && systemctl enable docker && systemctl restart docker

for intf in /sys/devices/virtual/net/docker0/brif/*; do echo 1 > "$intf/hairpin_mode"; done

sysctl -p /etc/sysctl.d/kubernetes.conf

[root@m1 ~]# systemctl status docker|grep Active

Active: active (running) since Thu 2019-06-13 11:26:20 CST; 6h ago

5. Deploy kubelet

Create a token and generate kubelet-bootstrap-m1.kubeconfig. This must be done on a MASTER server, and the token is valid for 1 day:

[root@k8s1 ~]# cat /tmp/configonenode.sh

#!/bin/bash

source /opt/k8s/bin/environment.sh

# 创建 token

export BOOTSTRAP_TOKEN=$(kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:m1 \

--kubeconfig ~/.kube/config)

# 设置集群参数

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-m1.kubeconfig

# 设置客户端认证参数

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-m1.kubeconfig

# 设置上下文参数

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-m1.kubeconfig

# 设置默认上下文

kubectl config use-context default --kubeconfig=kubelet-bootstrap-m1.kubeconfig

Put the generated file on the node as /etc/kubernetes/kubelet-bootstrap.kubeconfig.

If anything goes wrong, compare it with the MASTER's /etc/kubernetes/kubelet-bootstrap.kubeconfig; note that the tokens are different.

List the issued tokens:

[root@k8s1 tmp]# kubeadm token list --kubeconfig ~/.kube/config

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

il6513.n2i31tezvsqdsdgd 23h 2019-06-13T17:20:24+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:m1

Likewise copy /opt/k8s/bin/kubelet, /etc/kubernetes/kubelet.config.json and /etc/systemd/system/kubelet.service.

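Note that a few values differ from node to node, such as "address" in kubelet.config.json and --hostname-override in kubelet.service: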

[root@m1 ~]# cat /etc/kubernetes/kubelet.config.json

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/cert/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.137.81",

"port": 10250,

"readOnlyPort": 0,

"cgroupDriver": "cgroupfs",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletClientCertificate": true,

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.254.0.2"]

}

[root@m1 ~]# cat /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/opt/k8s/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/cert \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet.config.json \

--hostname-override=m1 \

--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest \

--allow-privileged=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target
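The two node-specific fields ("address" in kubelet.config.json and --hostname-override in kubelet.service) can be rewritten with sed after copying. This is a minimal sketch, assuming the file layout shown above; customize_kubelet is a hypothetical helper, and the IP and hostname in the usage line are placeholders:

```shell
#!/bin/bash
# Hypothetical helper: set the node-specific kubelet values after copying the files.
# Arguments: node IP, node name, then optional paths to the JSON config and the unit file.
customize_kubelet() {
    local node_ip="$1" node_name="$2"
    local json="${3:-/etc/kubernetes/kubelet.config.json}"
    local unit="${4:-/etc/systemd/system/kubelet.service}"
    # Replace the value of the "address" key, keeping the spacing and trailing comma.
    sed -i -E "s#(\"address\": *)\"[^\"]*\"#\1\"${node_ip}\"#" "$json" || return 1
    # Replace the --hostname-override value, keeping the trailing line continuation.
    sed -i -E "s#--hostname-override=[^ ]*#--hostname-override=${node_name}#" "$unit"
}

# Usage (placeholder values): customize_kubelet 192.168.137.82 m2 && systemctl daemon-reload
```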

Grant the bootstrap group permission to create CSRs:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

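When kube-apiserver receives the CSR request, it authenticates the token in it (the token created earlier with kubeadm). Once authenticated, the request's user is set to system:bootstrap:<token-id> and its group to system:bootstrappers, which is the group bound to system:node-bootstrapper above.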
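The token format ties these names together: a bootstrap token is <token-id>.<token-secret>, six plus sixteen characters from [a-z0-9], and the authenticated user is system:bootstrap:<token-id>. This is a minimal sketch that derives the user name from a token such as the il6513... token listed above; bootstrap_user is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: map a bootstrap token (<token-id>.<token-secret>) to the user name
# kube-apiserver assigns after Bootstrap Token authentication: system:bootstrap:<token-id>.
bootstrap_user() {
    local token="$1"
    # Bootstrap tokens are 6 + 16 characters from [a-z0-9], separated by a dot.
    if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "not a bootstrap token: $token" >&2
        return 1
    fi
    echo "system:bootstrap:${token%%.*}"
}

# Usage: bootstrap_user il6513.n2i31tezvsqdsdgd   # prints system:bootstrap:il6513
```

The same request also carries the group system:bootstrappers, plus the extra group system:bootstrappers:m1 passed to kubeadm token create.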

Start the service:

mkdir -p /var/lib/kubelet

/usr/sbin/swapoff -a

mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes

systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet

Once the service is running and its CSR has been approved, kubelet generates /etc/kubernetes/kubelet.kubeconfig automatically.
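If the file does not show up, the CSR is usually still pending; on a MASTER, kubectl get csr lists it and kubectl certificate approve <name> approves it. This is a minimal sketch that waits for the kubeconfig with a timeout; wait_for_file is a hypothetical helper and its default timeout and interval are arbitrary:

```shell
#!/bin/bash
# Hypothetical helper: wait until a file exists and is non-empty, or give up after a timeout.
# Arguments: path, timeout in seconds (default 120), polling interval in seconds (default 5).
wait_for_file() {
    local file="$1" timeout="${2:-120}" interval="${3:-5}"
    local waited=0
    while [ ! -s "$file" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out waiting for $file" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    echo "found $file"
}

# Usage: wait_for_file /etc/kubernetes/kubelet.kubeconfig || journalctl -u kubelet -n 50
```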

6. Deploy kube-proxy

Copy the following files from the MASTER:

/opt/k8s/bin/kube-proxy

/etc/kubernetes/kube-proxy.config.yaml

/etc/kubernetes/kube-proxy.kubeconfig

/etc/systemd/system/kube-proxy.service

/etc/kubernetes/kube-proxy.config.yaml differs slightly per node:

[root@m1 ~]# cat /etc/kubernetes/kube-proxy.config.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 192.168.137.81

clientConnection:

  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

clusterCIDR:

healthzBindAddress: 192.168.137.81:10256

hostnameOverride: m1

kind: KubeProxyConfiguration

metricsBindAddress: 192.168.137.81:10249

mode: "ipvs"
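The per-node fields in this file (bindAddress, healthzBindAddress, metricsBindAddress and hostnameOverride) can be rewritten after copying. This is a minimal sketch, assuming the key layout shown above with each key at the start of its line; customize_kube_proxy is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: set the node-specific kube-proxy values after copying the config.
# Arguments: node IP, node name, optional config path. Ports in the *BindAddress values are kept.
customize_kube_proxy() {
    local node_ip="$1" node_name="$2"
    local cfg="${3:-/etc/kubernetes/kube-proxy.config.yaml}"
    sed -i -E \
        -e "s#^(bindAddress:).*#\1 ${node_ip}#" \
        -e "s#^(healthzBindAddress:).*:([0-9]+)\$#\1 ${node_ip}:\2#" \
        -e "s#^(metricsBindAddress:).*:([0-9]+)\$#\1 ${node_ip}:\2#" \
        -e "s#^(hostnameOverride:).*#\1 ${node_name}#" \
        "$cfg"
}

# Usage (placeholder values): customize_kube_proxy 192.168.137.82 m2
```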

Start the service:

mkdir -p /var/lib/kube-proxy

mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes

systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy

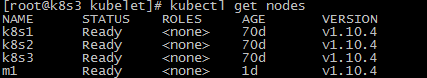

Verify that the node has joined

M1 has now joined the K8S cluster as a node.
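The same check can be scripted from any machine with kubectl access. This is a minimal sketch that reads kubectl get nodes output on stdin and succeeds only when the named node reports exactly Ready in the STATUS column; node_is_ready is a hypothetical helper and the check is a plain text match:

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and exit 0 only if the
# given node is listed with STATUS "Ready" (column 2, header line skipped).
node_is_ready() {
    local name="$1"
    awk -v n="$name" '
        NR > 1 && $1 == n { found = 1; if ($2 == "Ready") ready = 1 }
        END { exit !(found && ready) }'
}

# Usage: kubectl get nodes | node_is_ready m1 && echo "m1 joined and is Ready"
```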