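Flume to HDFS (file/dir/taildir) configuration: monitoring single or multiple appended log files, tracking content appended inside a directory, and resuming from a checkpoint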

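1. Preparing the Flume/Hadoop packages

(1) Reading a package version x.y.z: x is the major version (the entry point of a program generation), y is the minor version (feature updates), and z is the patch version (bug fixes).

(2) Example: Google's Chrome browser appeared after Firefox, yet it iterates faster than any other browser.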
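A minimal sketch of the x.y.z convention in shell, assuming purely numeric components (no suffixes such as `-bin` or `-SNAPSHOT`). `split_version` and `version_ge` are hypothetical helper names, not part of Flume or Hadoop:

```bash
#!/usr/bin/env bash
# split_version VERSION -> prints "major minor patch" (missing parts become 0)
split_version() {
  local major minor patch
  IFS=. read -r major minor patch <<< "$1"
  printf '%s %s %s\n' "${major:-0}" "${minor:-0}" "${patch:-0}"
}

# version_ge A B -> exit status 0 if version A >= version B.
# Components are compared numerically, so 1.10.0 counts as newer than 1.9.0.
version_ge() {
  local a1 a2 a3 b1 b2 b3
  read -r a1 a2 a3 <<< "$(split_version "$1")"
  read -r b1 b2 b3 <<< "$(split_version "$2")"
  if (( a1 != b1 )); then return $(( a1 > b1 ? 0 : 1 )); fi
  if (( a2 != b2 )); then return $(( a2 > b2 ? 0 : 1 )); fi
  return $(( a3 >= b3 ? 0 : 1 ))
}
```

For example, `split_version 1.7.0` prints `1 7 0`, and `version_ge 1.7.0 1.6.0 && echo newer` prints `newer`.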

2. Extract the archive and configure the Flume environment

[cevent@hadoop207 apache-flume-1.7.0]$ tar -zxvf apache-flume-1.7.0-bin.tar.gz -C /opt/module   # extract Flume
apache-flume-1.7.0-bin/docs/mail-lists.html
apache-flume-1.7.0-bin/docs/objects.inv
apache-flume-1.7.0-bin/docs/project-info.html
apache-flume-1.7.0-bin/docs/project-reports.html
apache-flume-1.7.0-bin/docs/search.html
apache-flume-1.7.0-bin/docs/searchindex.js
apache-flume-1.7.0-bin/docs/team-list.html

[cevent@hadoop207 soft]$ cd /opt/module/
[cevent@hadoop207 module]$ ll
total 24
drwxrwxr-x.  7 cevent cevent 4096 Jun 11 13:35 apache-flume-1.7.0-bin
drwxrwxr-x.  7 cevent cevent 4096 Jun 10 13:53 datas
drwxr-xr-x. 11 cevent cevent 4096 May 22  2017 hadoop-2.7.2
drwxrwxr-x.  3 cevent cevent 4096 Jun  5 13:27 hadoop-2.7.2-snappy
drwxrwxr-x. 10 cevent cevent 4096 May 22 13:34 hive-1.2.1
drwxr-xr-x.  8 cevent cevent 4096 Apr 11  2015 jdk1.7.0_79
[cevent@hadoop207 module]$ mv apache-flume-1.7.0-bin/ apache-flume-1.7.0   # rename the Flume directory
[cevent@hadoop207 module]$ ll
total 24
drwxrwxr-x.  7 cevent cevent 4096 Jun 11 13:35 apache-flume-1.7.0
drwxrwxr-x.  7 cevent cevent 4096 Jun 10 13:53 datas
drwxr-xr-x. 11 cevent cevent 4096 May 22  2017 hadoop-2.7.2
drwxrwxr-x.  3 cevent cevent 4096 Jun  5 13:27 hadoop-2.7.2-snappy
drwxrwxr-x. 10 cevent cevent 4096 May 22 13:34 hive-1.2.1
drwxr-xr-x.  8 cevent cevent 4096 Apr 11  2015 jdk1.7.0_79
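The extract-then-rename steps above can also be done in one go. A sketch, assuming a tar that supports `--strip-components` (GNU tar does) and an archive with a single top-level directory, as the `apache-flume-1.7.0-bin/...` entries above suggest. `install_flume` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# install_flume TARBALL DEST
# Extracts TARBALL straight into DEST and drops the archive's top-level
# directory (apache-flume-1.7.0-bin/), so no separate mv is needed.
install_flume() {
  local tarball=$1 dest=$2
  if [ ! -f "$tarball" ]; then
    echo "no such archive: $tarball" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  tar -xzf "$tarball" -C "$dest" --strip-components=1
}
```

Usage: `install_flume apache-flume-1.7.0-bin.tar.gz /opt/module/apache-flume-1.7.0`.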

[cevent@hadoop207 module]$ cd apache-flume-1.7.0/
[cevent@hadoop207 apache-flume-1.7.0]$ ll
total 148
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 bin
-rw-r--r--.  1 cevent cevent 77387 Oct 11  2016 CHANGELOG
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 conf
-rw-r--r--.  1 cevent cevent  6172 Sep 26  2016 DEVNOTES
-rw-r--r--.  1 cevent cevent  2873 Sep 26  2016 doap_Flume.rdf
drwxr-xr-x. 10 cevent cevent  4096 Oct 13  2016 docs
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 lib
-rw-r--r--.  1 cevent cevent 27625 Oct 13  2016 LICENSE
-rw-r--r--.  1 cevent cevent   249 Sep 26  2016 NOTICE
-rw-r--r--.  1 cevent cevent  2520 Sep 26  2016 README.md
-rw-r--r--.  1 cevent cevent  1585 Oct 11  2016 RELEASE-NOTES
[cevent@hadoop207 etc]$ cat profile   # view the profile configuration file

# /etc/profile

# System wide environment and startup programs, for login setup
# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you
# are doing. It's much better to create a custom.sh shell script in
# /etc/profile.d/ to make custom changes to your environment, as this
# will prevent the need for merging in future updates.

pathmunge () {
    case ":${PATH}:" in
        *:"$1":*)
            ;;
        *)
            if [ "$2" = "after" ] ; then
                PATH=$PATH:$1
            else
                PATH=$1:$PATH
            fi
    esac
}

if [ -x /usr/bin/id ]; then
    if [ -z "$EUID" ]; then
        # ksh workaround
        EUID=`id -u`
        UID=`id -ru`
    fi
    USER="`id -un`"
    LOGNAME=$USER
    MAIL="/var/spool/mail/$USER"
fi

# Path manipulation
if [ "$EUID" = "0" ]; then
    pathmunge /sbin
    pathmunge /usr/sbin
    pathmunge /usr/local/sbin
else
    pathmunge /usr/local/sbin after
    pathmunge /usr/sbin after
    pathmunge /sbin after
fi

HOSTNAME=`/bin/hostname 2>/dev/null`
HISTSIZE=1000
if [ "$HISTCONTROL" = "ignorespace" ] ; then
    export HISTCONTROL=ignoreboth
else
    export HISTCONTROL=ignoredups
fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell
# Current threshold for system reserved uid/gids is 200
# You could check uidgid reservation validity in
# /usr/share/doc/setup-*/uidgid file
if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then
    umask 002
else
    umask 022
fi

for i in /etc/profile.d/*.sh ; do
    if [ -r "$i" ]; then
        if [ "${-#*i}" != "$-" ]; then
            . "$i"
        else
            . "$i" >/dev/null 2>&1
        fi
    fi
done

unset i
unset -f pathmunge

#JAVA_HOME
export JAVA_HOME=/opt/module/jdk1.7.0_79
export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin

#HIVE_HOME
export HIVE_HOME=/opt/module/hive-1.2.1
export PATH=$PATH:$HIVE_HOME/bin
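Changes to /etc/profile only take effect in new login shells or after `source /etc/profile`. A small sketch for checking the *_HOME variables afterwards, assuming each of them points at a directory with a `bin/` subdirectory (true for the JDK, Hadoop and Hive layouts above). `check_home` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# check_home VAR -> prints VAR=value if $VAR is set and $VAR/bin exists;
#                   otherwise prints the reason to stderr and returns 1
check_home() {
  local name=$1
  local dir=${!name:-}   # indirect expansion: the value of the variable named $name
  if [ -z "$dir" ]; then
    echo "$name is not set" >&2
    return 1
  fi
  if [ ! -d "$dir/bin" ]; then
    echo "$name=$dir has no bin/ directory" >&2
    return 1
  fi
  echo "$name=$dir"
}
```

Usage: after `source /etc/profile`, run `for v in JAVA_HOME HADOOP_HOME HIVE_HOME; do check_home "$v"; done`.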

[cevent@hadoop207 etc]$ cd /opt/module/apache-flume-1.7.0/
[cevent@hadoop207 apache-flume-1.7.0]$ ll
total 148
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 bin
-rw-r--r--.  1 cevent cevent 77387 Oct 11  2016 CHANGELOG
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 conf
-rw-r--r--.  1 cevent cevent  6172 Sep 26  2016 DEVNOTES
-rw-r--r--.  1 cevent cevent  2873 Sep 26  2016 doap_Flume.rdf
drwxr-xr-x. 10 cevent cevent  4096 Oct 13  2016 docs
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 lib
-rw-r--r--.  1 cevent cevent 27625 Oct 13  2016 LICENSE
-rw-r--r--.  1 cevent cevent   249 Sep 26  2016 NOTICE
-rw-r--r--.  1 cevent cevent  2520 Sep 26  2016 README.md
-rw-r--r--.  1 cevent cevent  1585 Oct 11  2016 RELEASE-NOTES
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 tools

[cevent@hadoop207 apache-flume-1.7.0]$ cd conf/
[cevent@hadoop207 conf]$ ll
total 16
-rw-r--r--. 1 cevent cevent 1661 Sep 26  2016 flume-conf.properties.template
-rw-r--r--. 1 cevent cevent 1455 Sep 26  2016 flume-env.ps1.template
-rw-r--r--. 1 cevent cevent 1565 Sep 26  2016 flume-env.sh.template
-rw-r--r--. 1 cevent cevent 3107 Sep 26  2016 log4j.properties

[cevent@hadoop207 conf]$ vim flume-env.sh.template   # set JAVA_HOME for Flume


# If this file is placed at FLUME_CONF_DIR/flume-env.sh, it will be sourced
# during Flume startup.

# Enviroment variables can be set here.

export JAVA_HOME=/opt/module/jdk1.7.0_79

# Give Flume more memory and pre-allocate, enable remote monitoring via JMX
# export JAVA_OPTS="-Xms100m -Xmx2000m -Dcom.sun.management.jmxremote"

# Let Flume write raw event data and configuration information to its log files for debugging
# purposes. Enabling these flags is not recommended in production,
# as it may result in logging sensitive user information or encryption secrets.
# export JAVA_OPTS="$JAVA_OPTS -Dorg.apache.flume.log.rawdata=true -Dorg.apache.flume.log.printconfig=true "

# Note that the Flume conf directory is always included in the classpath.
#FLUME_CLASSPATH=""

"flume-env.sh.template" 34L, 1563C written

[cevent@hadoop207 conf]$ mv flume-env.sh.template flume-env.sh   # rename the file
[cevent@hadoop207 conf]$ ll
total 16
-rw-r--r--. 1 cevent cevent 1661 Sep 26  2016 flume-conf.properties.template
-rw-r--r--. 1 cevent cevent 1455 Sep 26  2016 flume-env.ps1.template
-rw-r--r--. 1 cevent cevent 1563 Jun 11 13:41 flume-env.sh
-rw-r--r--. 1 cevent cevent 3107 Sep 26  2016 log4j.properties
drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

[cevent@hadoop207 profile.d]$ cd /etc/
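Editing the template in place and then renaming it leaves no pristine template behind. A non-interactive sketch that copies the template instead, assuming GNU sed (for `-i`) and a template that carries a (possibly commented-out) `export JAVA_HOME=` line; if no such line exists, one is appended. `make_flume_env` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# make_flume_env CONF_DIR JAVA_HOME_PATH
# Creates CONF_DIR/flume-env.sh from flume-env.sh.template (the template is
# kept) and sets JAVA_HOME in it. Requires GNU sed for -i.
make_flume_env() {
  local conf=$1 jh=$2
  local env_file=$conf/flume-env.sh
  if [ ! -f "$env_file" ]; then
    cp "$conf/flume-env.sh.template" "$env_file" || return 1
  fi
  if grep -q '^[#[:space:]]*export JAVA_HOME=' "$env_file"; then
    # Replace the (possibly commented-out) line with an active one.
    sed -i "s|^[#[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=$jh|" "$env_file"
  else
    echo "export JAVA_HOME=$jh" >> "$env_file"
  fi
}
```

Usage: `make_flume_env /opt/module/apache-flume-1.7.0/conf /opt/module/jdk1.7.0_79`. Running it twice is harmless, because the second run just rewrites the same line.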

3. Install nc (netcat) for sending test data: sudo yum install -y nc

[cevent@hadoop207 conf]$ sudo yum install -y nc
[sudo] password for cevent:
Loaded plugins: fastestmirror, refresh-packagekit, security
Setting up Install Process
Loading mirror speeds from cached hostfile
base                    | 3.7 kB     00:00
extras                  | 3.4 kB     00:00
updates                 | 3.4 kB     00:00
updates/primary_db      |  10 MB     00:03
Resolving Dependencies
--> Running transaction check
---> Package nc.x86_64 0:1.84-24.el6 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

===========================================================================================
 Package        Arch          Version             Repository        Size
===========================================================================================
Installing:
 nc             x86_64        1.84-24.el6         base              57 k

Transaction Summary
===========================================================================================
Install       1 Package(s)

Total download size: 57 k
Installed size: 109 k
Downloading Packages:
nc-1.84-24.el6.x86_64.rpm    |  57 kB     00:00
Running rpm_check_debug
Running Transaction Test
Transaction Test Succeeded
Running Transaction
  Installing : nc-1.84-24.el6.x86_64        1/1
  Verifying  : nc-1.84-24.el6.x86_64        1/1

Installed:
  nc.x86_64 0:1.84-24.el6

Complete!

[cevent@hadoop207 conf]$ nc   # test the nc command
usage: nc [-46DdhklnrStUuvzC] [-i interval] [-p source_port]
          [-s source_ip_address] [-T ToS] [-w timeout] [-X proxy_version]
          [-x proxy_address[:port]] [hostname] [port[s]]

4. Write the Flume agent configuration file

Original version:

# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1

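Annotated version (each note sits on its own comment line, because in a properties file any text after a value becomes part of that value):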

# Name the components on this agent (all components of agent a1)
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
# source type: netcat
a1.sources.r1.type = netcat
# listen address: localhost
a1.sources.r1.bind = localhost
# listen port: 44444
a1.sources.r1.port = 44444

# Describe the sink
# write the received data to the logger (the agent's log output)
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
# channel type: memory
a1.channels.c1.type = memory
# capacity: at most 1000 events held in the channel
a1.channels.c1.capacity = 1000
# each transaction carries at most 100 events
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel, the buffer that carries data between them
# (a source may write to several channels, hence "channels"; a sink reads from exactly one, hence "channel")
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
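Flume wires components together purely by property name, so it can help to read values back out of the file before starting an agent. A small sketch that does this and checks one memory-channel rule: transactionCapacity must not exceed capacity (as far as I know, Flume refuses to start such a channel). It assumes simple `key = value` lines as above and falls back to the documented memory-channel default of 100 for a missing key. `prop` and `check_channel` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# prop FILE KEY -> prints the value of KEY from a Flume properties file.
# Whole-line "#" comments are skipped; the last occurrence of KEY wins.
prop() {
  awk -v key="$2" '
    /^[[:space:]]*#/ { next }
    index($0, "=") > 0 {
      k = substr($0, 1, index($0, "=") - 1)
      v = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) val = v
    }
    END { print val }
  ' "$1"
}

# check_channel FILE AGENT CHANNEL -> status 0 if the memory channel's
# transactionCapacity does not exceed its capacity
check_channel() {
  local cap tx
  cap=$(prop "$1" "$2.channels.$3.capacity")
  tx=$(prop "$1" "$2.channels.$3.transactionCapacity")
  cap=${cap:-100}
  tx=${tx:-100}
  if [ "$tx" -gt "$cap" ]; then
    echo "$2.channels.$3: transactionCapacity $tx > capacity $cap" >&2
    return 1
  fi
}
```

Usage: `prop job/flume-netcat-logger.conf a1.sources.r1.port` prints `44444`, and `check_channel job/flume-netcat-logger.conf a1 c1` succeeds for the 1000/100 values above.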

5. Create flume-netcat-logger.conf and start Flume

[cevent@hadoop207 ~]$ cd /opt/module/apache-flume-1.7.0/
[cevent@hadoop207 apache-flume-1.7.0]$ ll
total 148
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 bin
-rw-r--r--.  1 cevent cevent 77387 Oct 11  2016 CHANGELOG
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:42 conf
-rw-r--r--.  1 cevent cevent  6172 Sep 26  2016 DEVNOTES
-rw-r--r--.  1 cevent cevent  2873 Sep 26  2016 doap_Flume.rdf
drwxr-xr-x. 10 cevent cevent  4096 Oct 13  2016 docs
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 lib
-rw-r--r--.  1 cevent cevent 27625 Oct 13  2016 LICENSE
-rw-r--r--.  1 cevent cevent   249 Sep 26  2016 NOTICE
-rw-r--r--.  1 cevent cevent  2520 Sep 26  2016 README.md
-rw-r--r--.  1 cevent cevent  1585 Oct 11  2016 RELEASE-NOTES
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 tools
[cevent@hadoop207 apache-flume-1.7.0]$ mkdir job
[cevent@hadoop207 apache-flume-1.7.0]$ ll
total 152
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:35 bin
-rw-r--r--.  1 cevent cevent 77387 Oct 11  2016 CHANGELOG
drwxr-xr-x.  2 cevent cevent  4096 Jun 11 13:42 conf
-rw-r--r--.  1 cevent cevent  6172 Sep 26  2016 DEVNOTES
-rw-r--r--.  1 cevent cevent  2873 Sep 26  2016 doap_Flume.rdf
drwxr-xr-x. 10 cevent cevent  4096 Oct 13  2016 docs
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 16:52 job
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 lib
-rw-r--r--.  1 cevent cevent 27625 Oct 13  2016 LICENSE
-rw-r--r--.  1 cevent cevent   249 Sep 26  2016 NOTICE
-rw-r--r--.  1 cevent cevent  2520 Sep 26  2016 README.md
-rw-r--r--.  1 cevent cevent  1585 Oct 11  2016 RELEASE-NOTES
drwxrwxr-x.  2 cevent cevent  4096 Jun 11 13:35 tools

[cevent@hadoop207 apache-flume-1.7.0]$ vim job/flume-netcat-logger.conf
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1

"job/flume-netcat-logger.conf" [New] 22L, 495C written

[cevent@hadoop207 apache-flume-1.7.0]$ sudo netstat -nlp | grep 44444   # check whether port 44444 is already in use
[sudo] password for cevent:
# no output: nothing is listening on 44444, so the port is free

[cevent@hadoop207 apache-flume-1.7.0]$ ll bin
total 36
-rwxr-xr-x. 1 cevent cevent 12387 Sep 26  2016 flume-ng
-rw-r--r--. 1 cevent cevent   936 Sep 26  2016 flume-ng.cmd
-rwxr-xr-x. 1 cevent cevent 14176 Sep 26  2016 flume-ng.ps1

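[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent --name a1 --conf conf/ --conf-file job/flume-netcat-logger.conf
# bin/flume-ng agent: start an agent; --name a1: agent name; --conf conf/: Flume configuration directory; --conf-file: path to the custom agent configuration file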

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh
Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access
Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access
+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application --name a1 --conf-file job/flume-netcat-logger.conf
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
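The `--name` value must match the property prefix used in the configuration file (`a1` here); with a mismatched name the agent starts but, as I understand it, finds no components to run. A sketch of a start wrapper that checks this first, assuming the install path used in this post. `start_agent` and the `DRY_RUN` switch are hypothetical, not part of Flume:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around bin/flume-ng; FLUME_HOME defaults to this post's install path.
FLUME_HOME=${FLUME_HOME:-/opt/module/apache-flume-1.7.0}

# start_agent NAME CONF_FILE [ROOT_LOGGER]
#   NAME         agent name; must match the property prefix in CONF_FILE
#   CONF_FILE    agent config, absolute or relative to FLUME_HOME
#   ROOT_LOGGER  optional flume.root.logger override, e.g. INFO,console
# With DRY_RUN=1 the command line is printed instead of executed.
start_agent() {
  local name=$1 conf_file=$2 root_logger=${3:-} path
  case $conf_file in
    /*) path=$conf_file ;;
    *)  path=$FLUME_HOME/$conf_file ;;
  esac
  if ! grep -q "^[[:space:]]*$name\.sources[[:space:]]*=" "$path"; then
    echo "no '$name.sources' entry in $path" >&2
    return 1
  fi
  local cmd=("$FLUME_HOME/bin/flume-ng" agent --name "$name"
             --conf "$FLUME_HOME/conf" --conf-file "$conf_file")
  if [ -n "$root_logger" ]; then
    cmd+=("-Dflume.root.logger=$root_logger")
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    (cd "$FLUME_HOME" && exec "${cmd[@]}")   # run from FLUME_HOME so relative paths resolve
  fi
}
```

Usage: `start_agent a1 job/flume-netcat-logger.conf` reproduces the command above, and `start_agent a1 job/flume-netcat-logger.conf INFO,console` adds the console logger used in step 8.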

6. Where the logger output is stored

[cevent@hadoop207 apache-flume-1.7.0]$ cd conf/
[cevent@hadoop207 conf]$ ll
total 16
-rw-r--r--. 1 cevent cevent 1661 Sep 26  2016 flume-conf.properties.template
-rw-r--r--. 1 cevent cevent 1455 Sep 26  2016 flume-env.ps1.template
-rw-r--r--. 1 cevent cevent 1563 Jun 11 13:41 flume-env.sh
-rw-r--r--. 1 cevent cevent 3107 Sep 26  2016 log4j.properties
[cevent@hadoop207 conf]$ vim log4j.properties


# Define some default values that can be overridden by system properties.
#
# For testing, it may also be convenient to specify
# -Dflume.root.logger=DEBUG,console when launching flume.

#flume.root.logger=DEBUG,console
# By default, logs go to the LOGFILE appender rather than the console
flume.root.logger=INFO,LOGFILE
#flume.log.dir=./logs
flume.log.dir=/opt/module/apache-flume-1.7.0/loggers
flume.log.file=flume.log

log4j.logger.org.apache.flume.lifecycle = INFO
log4j.logger.org.jboss = WARN
log4j.logger.org.mortbay = INFO
log4j.logger.org.apache.avro.ipc.NettyTransceiver = WARN
log4j.logger.org.apache.hadoop = INFO
log4j.logger.org.apache.hadoop.hive = ERROR

# Define the root logger to the system property "flume.root.logger".
log4j.rootLogger=${flume.root.logger}

# Put all logs into the single flume.log.dir directory. Stock log4j rolling file appender
# Default log rotation configuration
log4j.appender.LOGFILE=org.apache.log4j.RollingFileAppender
log4j.appender.LOGFILE.MaxFileSize=100MB
log4j.appender.LOGFILE.MaxBackupIndex=10
log4j.appender.LOGFILE.File=${flume.log.dir}/${flume.log.file}
log4j.appender.LOGFILE.layout=org.apache.log4j.PatternLayout
log4j.appender.LOGFILE.layout.ConversionPattern=%d{dd MMM yyyy HH:mm:ss,SSS} %-5p [%t] (%C.%M:%L) %x - %m%n

# Warning: If you enable the following appender it will fill up your disk if you don't have a cleanup job!
# This uses the updated rolling file appender from log4j-extras that supports a reliable time-based rolling policy.
# See http://logging.apache.org/log4j/companions/extras/apidocs/org/apache/log4j/rolling/TimeBasedRollingPolicy.html
# Roll the log over once a day. Add "DAILY" to flume.root.logger above if you want to use this
log4j.appender.DAILY=org.apache.log4j.rolling.RollingFileAppender
log4j.appender.DAILY.rollingPolicy=org.apache.log4j.rolling.TimeBasedRollingPolicy
log4j.appender.DAILY.rollingPolicy.ActiveFileName=${flume.log.dir}/${flume.log.file}
log4j.appender.DAILY.rollingPolicy.FileNamePattern=${flume.log.dir}/${flume.log.file}.%d{yyyy-MM-dd}
log4j.appender.DAILY.layout=org.apache.log4j.PatternLayout
log4j.appender.DAILY.layout.ConversionPattern=%d{dd MMM yyyy HH:mm:ss,SSS} %-5p [%t] (%C.%M:%L) %x - %m%n

# Console output
# Add "console" to flume.root.logger above if you want to use this
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.target=System.err
log4j.appender.console.layout=org.apache.log4j.PatternLayout
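With flume.root.logger=INFO,LOGFILE the agent's own log goes to ${flume.log.dir}/${flume.log.file} rather than the terminal, and a relative directory such as ./logs is resolved against the directory the agent was started from. A sketch that reads the effective path out of log4j.properties, assuming no -Dflume.log.dir or -Dflume.log.file override on the command line; the ./logs and flume.log fallbacks mirror the stock values. `log_path` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# log_path LOG4J_PROPERTIES -> prints ${flume.log.dir}/${flume.log.file}
# Commented-out lines such as "#flume.log.dir=./logs" are ignored and the
# last active assignment wins; unset values fall back to ./logs and flume.log.
log_path() {
  local dir file
  dir=$(sed -n 's/^[[:space:]]*flume\.log\.dir[[:space:]]*=[[:space:]]*//p' "$1" | tail -n 1)
  file=$(sed -n 's/^[[:space:]]*flume\.log\.file[[:space:]]*=[[:space:]]*//p' "$1" | tail -n 1)
  echo "${dir:-./logs}/${file:-flume.log}"
}
```

Usage: `tail -f "$(log_path conf/log4j.properties)"` follows the agent log; with the settings above that is /opt/module/apache-flume-1.7.0/loggers/flume.log.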

7. Start Flume and send the log output to the console

[cevent@hadoop207 apache-flume-1.7.0]$ sudo netstat -nlp | grep 44444   # check whether port 44444 is in use
[sudo] password for cevent:

[cevent@hadoop207 apache-flume-1.7.0]$ ll bin
total 36
-rwxr-xr-x. 1 cevent cevent 12387 Sep 26  2016 flume-ng
-rw-r--r--. 1 cevent cevent   936 Sep 26  2016 flume-ng.cmd
-rwxr-xr-x. 1 cevent cevent 14176 Sep 26  2016 flume-ng.ps1

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent --name a1 --conf conf/ --conf-file job/flume-netcat-logger.conf
# agent: start an agent; --name: agent name; --conf: configuration directory; --conf-file: custom agent configuration file

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh
Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access
Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access
+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application --name a1 --conf-file job/flume-netcat-logger.conf
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

8. Port listening: nc localhost 44444

[cevent@hadoop207 apache-flume-1.7.0]$ cd conf/
[cevent@hadoop207 conf]$ ll
total 16
-rw-r--r--. 1 cevent cevent 1661 Sep 26  2016 flume-conf.properties.template
-rw-r--r--. 1 cevent cevent 1455 Sep 26  2016 flume-env.ps1.template
-rw-r--r--. 1 cevent cevent 1563 Jun 11 13:41 flume-env.sh
-rw-r--r--. 1 cevent cevent 3107 Sep 26  2016 log4j.properties
[cevent@hadoop207 conf]$ vim log4j.properties   # edit the log configuration


# Define some default values that can be overridden by system properties.
#
# For testing, it may also be convenient to specify
# -Dflume.root.logger=DEBUG,console when launching flume.

#flume.root.logger=DEBUG,console
# cevent: by default, output the log to LOGFILE, not the console
flume.root.logger=INFO,LOGFILE
#flume.log.dir=./logs
flume.log.dir=/opt/module/apache-flume-1.7.0/loggers
flume.log.file=flume.log

log4j.logger.org.apache.flume.lifecycle = INFO
log4j.logger.org.jboss = WARN
log4j.logger.org.mortbay = INFO
log4j.logger.org.apache.avro.ipc.NettyTransceiver = WARN
log4j.logger.org.apache.hadoop = INFO
log4j.logger.org.apache.hadoop.hive = ERROR

# Define the root logger to the system property "flume.root.logger".
log4j.rootLogger=${flume.root.logger}

# cevent: put all logs into flume.log.dir. Stock log4j rolling file appender
# Default log rotation configuration
log4j.appender.LOGFILE=org.apache.log4j.RollingFileAppender
log4j.appender.LOGFILE.MaxFileSize=100MB
log4j.appender.LOGFILE.MaxBackupIndex=10
log4j.appender.LOGFILE.File=${flume.log.dir}/${flume.log.file}
log4j.appender.LOGFILE.layout=org.apache.log4j.PatternLayout
log4j.appender.LOGFILE.layout.ConversionPattern=%d{dd MMM yyyy HH:mm:ss,SSS} %-5p [%t] (%C.%M:%L) %x - %m%n

# Warning: If you enable the following appender it will fill up your disk if you don't have a cleanup job!
# This uses the updated rolling file appender from log4j-extras that supports a reliable time-based rolling policy.
# See http://logging.apache.org/log4j/companions/extras/apidocs/org/apache/log4j/rolling/TimeBasedRollingPolicy.html
# cevent: roll the log every day. Add "DAILY" to flume.root.logger above if you want to use this
log4j.appender.DAILY=org.apache.log4j.rolling.RollingFileAppender
log4j.appender.DAILY.rollingPolicy=org.apache.log4j.rolling.TimeBasedRollingPolicy
log4j.appender.DAILY.rollingPolicy.ActiveFileName=${flume.log.dir}/${flume.log.file}
log4j.appender.DAILY.rollingPolicy.FileNamePattern=${flume.log.dir}/${flume.log.file}.%d{yyyy-MM-dd}
log4j.appender.DAILY.layout=org.apache.log4j.PatternLayout
log4j.appender.DAILY.layout.ConversionPattern=%d{dd MMM yyyy HH:mm:ss,SSS} %-5p [%t] (%C.%M:%L) %x - %m%n

# console
# Add "console" to flume.root.logger above if you want to use this
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.target=System.err

"log4j.properties" 71L, 3281C written

[cevent@hadoop207 conf]$ ll
total 16
-rw-r--r--. 1 cevent cevent 1661 Sep 26  2016 flume-conf.properties.template
-rw-r--r--. 1 cevent cevent 1455 Sep 26  2016 flume-env.ps1.template
-rw-r--r--. 1 cevent cevent 1563 Jun 11 13:41 flume-env.sh
-rw-r--r--. 1 cevent cevent 3281 Jun 11 17:18 log4j.properties
[cevent@hadoop207 conf]$ cd ..

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent --name a1 --conf conf/ --conf-file job/flume-netcat-logger.conf -Dflume.root.logger=INFO,console
# -Dflume.root.logger=INFO,console: print the log to the console

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh
Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access
Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access
+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -Dflume.root.logger=INFO,console -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application --name a1 --conf-file job/flume-netcat-logger.conf
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

2020-06-11 17:20:04,619 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.start(PollingPropertiesFileConfigurationProvider.java:62)] Configuration provider starting
2020-06-11 17:20:04,623 (conf-file-poller-0) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:134)] Reloading configuration file:job/flume-netcat-logger.conf
2020-06-11 17:20:04,629 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:930)] Added sinks: k1 Agent: a1
2020-06-11 17:20:04,629 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:1016)] Processing:k1
2020-06-11 17:20:04,629 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:1016)] Processing:k1
2020-06-11 17:20:04,640 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:140)] Post-validation flume configuration contains configuration for agents: [a1]
2020-06-11 17:20:04,641 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:147)] Creating channels
2020-06-11 17:20:04,653 (conf-file-poller-0) [INFO - org.apache.flume.channel.DefaultChannelFactory.create(DefaultChannelFactory.java:42)] Creating instance of channel c1 type memory
2020-06-11 17:20:04,656 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:201)] Created channel c1
2020-06-11 17:20:04,657 (conf-file-poller-0) [INFO - org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:41)] Creating instance of source r1, type netcat
2020-06-11 17:20:04,666 (conf-file-poller-0) [INFO - org.apache.flume.sink.DefaultSinkFactory.create(DefaultSinkFactory.java:42)] Creating instance of sink: k1, type: logger
2020-06-11 17:20:04,669 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:116)] Channel c1 connected to [r1, k1]
2020-06-11 17:20:04,675 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:137)] Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@6a8bda08 counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} }
2020-06-11 17:20:04,682 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:144)] Starting Channel c1
2020-06-11 17:20:04,724 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:119)] Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean.
2020-06-11 17:20:04,725 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: CHANNEL, name: c1 started
2020-06-11 17:20:04,726 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:171)] Starting Sink k1
2020-06-11 17:20:04,727 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:182)] Starting Source r1
2020-06-11 17:20:04,728 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:155)] Source starting
2020-06-11 17:20:04,762 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:169)] Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:44444]
2020-06-11 17:21:00,410 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:95)] Event: { headers:{} body: 63 65 76 65 6E 74 cevent }
2020-06-11 17:21:30,419 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:95)] Event: { headers:{} body: 65 63 68 6F echo }
^C2020-06-11 17:23:55,629 (agent-shutdown-hook) [INFO - org.apache.flume.lifecycle.LifecycleSupervisor.stop(LifecycleSupervisor.java:78)] Stopping lifecycle supervisor 10
2020-06-11 17:23:55,630 (agent-shutdown-hook) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.stop(PollingPropertiesFileConfigurationProvider.java:84)] Configuration provider stopping
2020-06-11 17:23:55,631 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:149)] Component type: CHANNEL, name: c1 stopped
2020-06-11 17:23:55,631 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:155)] Shutdown Metric for type: CHANNEL, name: c1. channel.start.time == 1591867204725
2020-06-11 17:23:55,631 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:161)] Shutdown Metric for type: CHANNEL, name: c1. channel.stop.time == 1591867435631
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.capacity == 1000
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.current.size == 0
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.event.put.attempt == 2
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.event.put.success == 2
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.event.take.attempt == 35
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.stop(MonitoredCounterGroup.java:177)] Shutdown Metric for type: CHANNEL, name: c1. channel.event.take.success == 2
2020-06-11 17:23:55,632 (agent-shutdown-hook) [INFO - org.apache.flume.source.NetcatSource.stop(NetcatSource.java:196)] Source stopping

9. Verify the result: send data with nc localhost 44444

[cevent@hadoop207 ~]$ nc localhost 44444

cevent

OK

echo

OK
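The LoggerSink lines at the top of this section show each event body twice, first as hex bytes and then as text. The bash sketch below is a quick sanity check that reproduces the hex column for a line typed into nc. `to_logger_hex` is a hypothetical helper, not part of Flume. It assumes that the trailing newline nc sends is not part of the event body; the 6-byte body shown for `cevent` above is consistent with that.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a string as space-separated upper-case hex bytes,
# the way the LoggerSink output above renders an event body.
to_logger_hex() {
  # od -An -tx1: one two-digit hex value per byte, no address column;
  # tr upper-cases a-f; xargs collapses od's spacing into single spaces.
  printf '%s' "$1" | od -An -tx1 | tr 'a-f' 'A-F' | xargs
}
```

For example, `to_logger_hex cevent` prints `63 65 76 65 6E 74`, which matches the first event above.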

10. Monitor a single appended file: configure flume-file-hdfs.conf

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 156

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 17:18 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:33 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

[cevent@hadoop207 apache-flume-1.7.0]$ vim job/flume-file-hdfs.conf    # edit flume-file-hdfs.conf

# 1. Monitor a single file and upload it to HDFS

# Name the components on this agent

a2.sources = r2

a2.sinks = k2

a2.channels = c2

# Describe/configure the source

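# The source runs this shell command: /bin/bash -c "tail -F /opt/module/hive-1.2.1/logs/hive.log"

a2.sources.r2.type = exec

a2.sources.r2.command = tail -F /opt/module/hive-1.2.1/logs/hive.log

a2.sources.r2.shell = /bin/bash -c

# Describe the sink

a2.sinks.k2.type = hdfs

a2.sinks.k2.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/%Y%m%d/%H

# Prefix of the files written to HDFS

a2.sinks.k2.hdfs.filePrefix = logs-

# Round the event timestamp down, so that a new directory is started for each time bucket

a2.sinks.k2.hdfs.round = true

# Number of time units per bucket (new directory)

a2.sinks.k2.hdfs.roundValue = 1

# Time unit used for rounding: one new directory per hour

a2.sinks.k2.hdfs.roundUnit = hour

# Use the agent's local time instead of a timestamp header on the event

a2.sinks.k2.hdfs.useLocalTimeStamp = true

# Number of events taken from the channel per batch before flushing to HDFS; must not exceed the channel's transactionCapacity (100 below)

a2.sinks.k2.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a2.sinks.k2.hdfs.fileType = DataStream

# Roll (start) a new file every 60 seconds

a2.sinks.k2.hdfs.rollInterval = 60

# Also roll when a file reaches 134217700 bytes, just under 128 MB (134217728 bytes)

a2.sinks.k2.hdfs.rollSize = 134217700

# 0 disables rolling by event count (files roll only by time or size)

a2.sinks.k2.hdfs.rollCount = 0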

# Use a channel which buffers events in memory

a2.channels.c2.type = memory

a2.channels.c2.capacity = 1000

a2.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r2.channels = c2

a2.sinks.k2.channel = c2
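In Flume, the HDFS sink takes up to `hdfs.batchSize` events from its channel inside a single transaction. A memory channel's `transactionCapacity` caps how many events one transaction may hold. A batch size larger than the transaction capacity can therefore make the sink fail once more events pile up than the capacity allows. The bash sketch below compares the two values in a config file. `prop` and `check_batch` are hypothetical helpers. The fallback value of 100 for unset keys is an assumption taken from the Flume user guide defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of Flume. Assumes one "key = value" per line.

# prop FILE KEY: print the value of KEY (last occurrence wins), or nothing
prop() {
  awk -v k="$2" '
    /^[ \t]*#/ { next }                       # skip comment lines
    index($0, "=") > 0 {
      key = substr($0, 1, index($0, "=") - 1)
      gsub(/[ \t]/, "", key)                  # trim spaces around the key
      if (key == k) {
        val = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)      # trim spaces around the value
        last = val
      }
    }
    END { if (last != "") print last }
  ' "$1"
}

# check_batch FILE AGENT SINK: fail if the sink's hdfs.batchSize is larger
# than the transactionCapacity of the channel the sink reads from.
check_batch() {
  local file=$1 agent=$2 sink=$3 channel batch txcap
  channel=$(prop "$file" "$agent.sinks.$sink.channel")
  batch=$(prop "$file" "$agent.sinks.$sink.hdfs.batchSize")
  txcap=$(prop "$file" "$agent.channels.$channel.transactionCapacity")
  batch=${batch:-100}                         # assumed default when unset
  txcap=${txcap:-100}                         # assumed default when unset
  if (( batch > txcap )); then
    echo "$agent.$sink: hdfs.batchSize=$batch > $channel.transactionCapacity=$txcap" >&2
    return 1
  fi
}
```

Usage: `check_batch job/flume-file-hdfs.conf a2 k2` prints a warning and returns 1 when hdfs.batchSize is 1000 and transactionCapacity is 100.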

Start Hadoop:

[cevent@hadoop207 ~]$ cd /opt/module/hadoop-2.7.2

[cevent@hadoop207 hadoop-2.7.2]$ ll

total 60

drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 bin

drwxrwxr-x. 3 cevent cevent 4096 Apr 30 14:16 data

drwxr-xr-x. 3 cevent cevent 4096 May 22 2017 etc

drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 include

drwxr-xr-x. 3 cevent cevent 4096 May 22 2017 lib

drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 libexec

-rw-r--r--. 1 cevent cevent 15429 May 22 2017 LICENSE.txt

drwxrwxr-x. 3 cevent cevent 4096 Jun 10 21:36 logs

-rw-r--r--. 1 cevent cevent 101 May 22 2017 NOTICE.txt

-rw-r--r--. 1 cevent cevent 1366 May 22 2017 README.txt

drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 sbin

drwxr-xr-x. 4 cevent cevent 4096 May 22 2017 share

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-dfs.sh

Starting namenodes on [hadoop207.cevent.com]

hadoop207.cevent.com: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-namenode-hadoop207.cevent.com.out

hadoop207.cevent.com: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-datanode-hadoop207.cevent.com.out

Starting secondary namenodes [hadoop207.cevent.com]

hadoop207.cevent.com: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-secondarynamenode-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-resourcemanager-hadoop207.cevent.com.out

hadoop207.cevent.com: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-nodemanager-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ jps

4034 DataNode

4526 NodeManager

4243 SecondaryNameNode

4789 Jps

4411 ResourceManager

3920 NameNode

Start Flume:

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent -n a2 -c conf/ -f job/flume-file-hdfs.conf

Startup output:

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh

Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access
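If the agent starts but nothing shows up in HDFS, one cause worth ruling out is a value that ended up on its own line. An example is `a2.sinks.k2.hdfs.path =` followed by `hdfs://...` on the next line. Flume reads the file with Java properties rules, so it would then see an empty path plus a stray key. The bash sketch below lists such lines with their line numbers. `lint_conf` is a hypothetical helper, and the sketch assumes GNU grep.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (with line numbers) every line of a Flume
# properties file that is not blank, not a "#" comment and not
# "key = non-empty value". Returns 1 if any such line exists.
lint_conf() {
  [ -r "$1" ] || return 2
  if grep -nvE '^[[:space:]]*($|#)|^[[:space:]]*[A-Za-z0-9_.-]+[[:space:]]*=[[:space:]]*[^[:space:]]' "$1"; then
    return 1    # grep -v selected at least one suspicious line
  fi
  return 0
}
```

Usage: `lint_conf job/flume-file-hdfs.conf` prints nothing and returns 0 for a clean file.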

11. Flume produced no tracking files on HDFS: reconfigure flume-env.sh

[cevent@hadoop207 apache-flume-1.7.0]$ cd conf/

[cevent@hadoop207 conf]$ ll

total 16

-rw-r--r--. 1 cevent cevent 1661 Sep 26 2016 flume-conf.properties.template

-rw-r--r--. 1 cevent cevent 1455 Sep 26 2016 flume-env.ps1.template

-rw-r--r--. 1 cevent cevent 1563 Jun 11 13:41 flume-env.sh

-rw-r--r--. 1 cevent cevent 3281 Jun 11 17:18 log4j.properties

[cevent@hadoop207 conf]$ vim flume-env.sh

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# If this file is placed at FLUME_CONF_DIR/flume-env.sh, it will be sourced

# during Flume startup.

# Environment variables can be set here.

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.7.0_79

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

#HIVE_HOME

export HIVE_HOME=/opt/module/hive-1.2.1

export PATH=$PATH:$HIVE_HOME/bin

# Give Flume more memory and pre-allocate, enable remote monitoring via JMX

# export JAVA_OPTS="-Xms100m -Xmx2000m -Dcom.sun.management.jmxremote"

# Let Flume write raw event data and configuration information to its log files for debugging

# purposes. Enabling these flags is not recommended in production,

# as it may result in logging sensitive user information or encryption secrets.

# export JAVA_OPTS="$JAVA_OPTS -Dorg.apache.flume.log.rawdata=true -Dorg.apache.flume.log.printconfig=true "

# Note that the Flume conf directory is always included in the classpath.

#FLUME_CLASSPATH=""

12. Configure /etc/profile (add FLUME_HOME)

[cevent@hadoop207 logs]$ sudo vim /etc/profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`id -u`

UID=`id -ru`

fi

USER="`id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /sbin

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

pathmunge /sbin after

fi

HOSTNAME=`/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then

umask 002

else

umask 022

fi


for i in /etc/profile.d/*.sh ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null 2>&1

fi

fi

done

unset i

unset -f pathmunge

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.7.0_79

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

#HIVE_HOME

export HIVE_HOME=/opt/module/hive-1.2.1

export PATH=$PATH:$HIVE_HOME/bin

#FLUME_HOME

export FLUME_HOME=/opt/module/apache-flume-1.7.0

export PATH=$PATH:$FLUME_HOME/bin
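Changes to /etc/profile only take effect in new login shells or after `source /etc/profile`. The bash sketch below checks that each *_HOME variable points at an existing directory and that its bin/ directory is on PATH. `check_homes` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each variable name given, check that it names an
# existing directory and that "<dir>/bin" is on PATH.
check_homes() {
  local var dir rc=0
  for var in "$@"; do
    dir=${!var}                                # indirect expansion: value of $var
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$var is unset or not a directory: '$dir'" >&2
      rc=1
      continue
    fi
    case ":$PATH:" in
      *":$dir/bin:"*) ;;                       # same ":PATH:" trick as pathmunge
      *) echo "$dir/bin is not on PATH ($var)" >&2; rc=1 ;;
    esac
  done
  return "$rc"
}
```

Usage: `source /etc/profile && check_homes JAVA_HOME HADOOP_HOME HIVE_HOME FLUME_HOME` stays silent and returns 0 when all four are set up as above.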

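13. Fix: Flume did not upload to HDFS because the port was wrong. The hdfs:// address in hdfs.path must match the NameNode address (fs.defaultFS in core-site.xml), which is port 8020 here.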

Start DFS (and YARN):

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-dfs.sh

Starting namenodes on [hadoop207.cevent.com]

hadoop207.cevent.com: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-namenode-hadoop207.cevent.com.out

hadoop207.cevent.com: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-datanode-hadoop207.cevent.com.out

Starting secondary namenodes [hadoop207.cevent.com]

hadoop207.cevent.com: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-secondarynamenode-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-resourcemanager-hadoop207.cevent.com.out

hadoop207.cevent.com: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-nodemanager-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ jps

11836 SecondaryNameNode

12003 ResourceManager

11653 DataNode

12462 Application

12568 Jps

12116 NodeManager

11515 NameNode

Start the agent with flume-file-hdfs.conf:

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent -n a2 -c conf/ -f job/flume-file-hdfs.conf

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh

Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access

Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access

+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application -n a2 -f job/flume-file-hdfs.conf

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
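The fix in this step comes down to making the `hdfs://host:port` prefix of `hdfs.path` match the NameNode address the cluster actually uses. On the cluster, `hdfs getconf -confKey fs.defaultFS` prints that address. The bash sketch below compares it with the first `*.hdfs.path` line of a config file. `check_hdfs_path` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the first "*.hdfs.path" value in a Flume config
# with the cluster's fs.defaultFS (e.g. hdfs://hadoop207.cevent.com:8020).
check_hdfs_path() {
  local file=$1 default_fs=${2%/}              # drop one trailing slash, if any
  local path
  # strip "<prefix>.hdfs.path =" and keep the value; comment lines never match
  path=$(sed -n 's/^[[:space:]]*[^#=]*\.hdfs\.path[[:space:]]*=[[:space:]]*//p' "$file" | head -n 1)
  case "$path" in
    "$default_fs"/*) echo "ok: $path" ;;
    *) echo "mismatch: hdfs.path='$path' vs fs.defaultFS='$default_fs'" >&2
       return 1 ;;
  esac
}
```

Usage: `check_hdfs_path job/flume-file-hdfs.conf "$(hdfs getconf -confKey fs.defaultFS)"`.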

14. Result: new lines of the log file are tracked on HDFS

Link: http://hadoop207.cevent.com:50070/explorer.html#/flume/20200612/13

Start Hive (or do anything else that writes to hive.log) to produce log entries:

[cevent@hadoop207 logs]$ hive

Logging initialized using configuration in file:/opt/module/hive-1.2.1/conf/hive-log4j.properties

hive (default)> exit;
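The link in this step ends in /flume/20200612/13 because of how the sink is configured: round = true, roundValue = 1, roundUnit = hour and useLocalTimeStamp = true. With these settings, each event goes into the %Y%m%d/%H directory for its rounded-down local time. The bash sketch below shows that mapping. `bucket_dir` is a hypothetical helper, and the sketch assumes GNU date. It rounds on epoch seconds. For roundValue = 1, that agrees with Flume's hour rounding in any time zone whose UTC offset is a whole number of hours.

```shell
#!/usr/bin/env bash
# Hypothetical helper: HDFS directory for an event time when the sink uses
# hdfs.path=.../flume/%Y%m%d/%H, round=true, roundUnit=hour and roundValue=$2.
# Rounds on epoch seconds and formats in the current TZ (GNU date).
bucket_dir() {
  local epoch=$1 round_hours=$2
  local rounded=$(( epoch - epoch % (round_hours * 3600) ))   # round down
  date -d "@$rounded" +'/flume/%Y%m%d/%H'
}
```

Example: with `export TZ=UTC`, `bucket_dir 1591968300 1` (2020-06-12 13:25:00 UTC) prints /flume/20200612/13. With a roundValue of 2, the same event would land in /flume/20200612/12.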

15. Monitor a directory to track logs from multiple new files: configure flume-dir-hdfs.conf

a3.sources = r3

a3.sinks = k3

a3.channels = c3

# Describe/configure the source

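# Once a file has been fully read, the .COMPLETED suffix is appended to its name

a3.sources.r3.type = spooldir

a3.sources.r3.spoolDir = /opt/module/apache-flume-1.7.0/upload

a3.sources.r3.fileSuffix = .COMPLETED

a3.sources.r3.fileHeader = true

# Ignore (do not upload) files whose name ends in .tmp: [^ ] is any non-space character, * repeats it zero or more times, and \.tmp is a literal ".tmp"

a3.sources.r3.ignorePattern = ([^ ]*\.tmp)

# Describe the sink

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/upload/%Y%m%d/%H

# Prefix of the files written to HDFS

a3.sinks.k3.hdfs.filePrefix = upload-

# Whether to roll directories by time (round the timestamp down)

a3.sinks.k3.hdfs.round = true

# Number of time units per new directory

a3.sinks.k3.hdfs.roundValue = 1

# Time unit used for rounding

a3.sinks.k3.hdfs.roundUnit = hour

# Use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# Number of events accumulated before each flush to HDFS

a3.sinks.k3.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a3.sinks.k3.hdfs.fileType = DataStream

# Roll a new file every 60 seconds

a3.sinks.k3.hdfs.rollInterval = 60

# Roll size of each file, roughly 128 MB

a3.sinks.k3.hdfs.rollSize = 134217700

# 0: rolling does not depend on the number of events

a3.sinks.k3.hdfs.rollCount = 0

# Use a channel which buffers events in memory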

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3
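To check what the ignorePattern above actually excludes, here is a bash sketch built around a hypothetical helper, `is_ignored`. It rests on three assumptions. First, the regex must match the whole file name, as Java's `Matcher.matches()` does. Second, the spooldir source also skips names that start with a dot or already end in the fileSuffix. Third, bash's `=~` with `^...$` anchors stands in for the Java regex engine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the spooldir source above would skip a file.
# Assumptions: ignorePattern must match the whole name (anchored with ^...$
# here), and dot-files and names already ending in .COMPLETED are skipped too.
IGNORE_RE='^([^ ]*\.tmp)$'     # a3.sources.r3.ignorePattern = ([^ ]*\.tmp)

is_ignored() {
  local name=$1
  if [[ $name == .* || $name == *.COMPLETED ]]; then
    return 0
  fi
  [[ $name =~ $IGNORE_RE ]]
}
```

For example, `is_ignored app.tmp` succeeds. `is_ignored 'my file.tmp'` fails, because `[^ ]*` cannot match across the space.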

16. Start monitoring

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent -n a3 -c conf/ -f job/flume-dir-hdfs.conf

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh

Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access

Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access

+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application -n a3 -f job/flume-dir-hdfs.conf

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

17. Verify the result

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 164

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

drwxr-xr-x. 2 cevent cevent 4096 Jun 12 12:11 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 14:12 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:48 loggers

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 14:21 upload

[cevent@hadoop207 apache-flume-1.7.0]$ cp README.md upload/

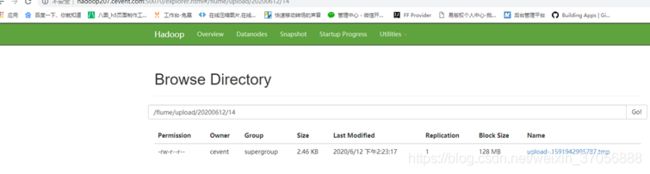

Link: http://hadoop207.cevent.com:50070/explorer.html#/flume/upload/20200612/14
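The spooldir source renames every file it has finished reading to *.COMPLETED. The files still waiting in upload/ are therefore the ones without that suffix. The sketch below lists them. `pending_spool_files` is a hypothetical helper, and it uses GNU find. Its `*.tmp` glob only approximates the `([^ ]*\.tmp)` regex, since the regex does not match names that contain spaces.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list files in a spool directory that have not been
# renamed to *.COMPLETED yet, skipping *.tmp files and dot-files (GNU find).
pending_spool_files() {
  find "$1" -maxdepth 1 -type f \
    ! -name '*.COMPLETED' ! -name '*.tmp' ! -name '.*' \
    -printf '%f\n' | sort
}
```

Usage: `pending_spool_files /opt/module/apache-flume-1.7.0/upload`. After the `cp README.md upload/` above, README.md should drop out of this list once it has been renamed to README.md.COMPLETED.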

18. Monitor multiple appended files in a directory in real time: TAILDIR source configuration

[cevent@hadoop207 apache-flume-1.7.0]$ vim job/flume-taildir-hdfs.conf    # edit the taildir config

a3.sources = r3

a3.sinks = k3

a3.channels = c3

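# Describe/configure the source: define the filegroups (sets of files to tail)

# .*file.* selects every file whose name contains "file"; .*log.* every file whose name contains "log"

# positionFile records, as JSON, the inode and last read offset (pos) of every tailed file

a3.sources.r3.type = TAILDIR

a3.sources.r3.positionFile = /opt/module/apache-flume-1.7.0/tail_dir.json

a3.sources.r3.filegroups = f1 f2

a3.sources.r3.filegroups.f1 = /opt/module/apache-flume-1.7.0/files/.*file.*

a3.sources.r3.filegroups.f2 = /opt/module/apache-flume-1.7.0/files/.*log.*

# Describe the sink

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/upload2/%Y%m%d/%H

# Prefix of the files written to HDFS

a3.sinks.k3.hdfs.filePrefix = upload-

# Whether to roll directories by time (round the timestamp down)

a3.sinks.k3.hdfs.round = true

# Number of time units per new directory

a3.sinks.k3.hdfs.roundValue = 1

# Time unit used for rounding

a3.sinks.k3.hdfs.roundUnit = hour

# Use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# Number of events accumulated before each flush to HDFS

a3.sinks.k3.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a3.sinks.k3.hdfs.fileType = DataStream

# Roll a new file every 60 seconds

a3.sinks.k3.hdfs.rollInterval = 60

# Roll size of each file, roughly 128 MB

a3.sinks.k3.hdfs.rollSize = 134217700

# 0: rolling does not depend on the number of events

a3.sinks.k3.hdfs.rollCount = 0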

# Use a channel which buffers events in memory

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3

"job/flume-taildir-hdfs.conf" [New] 44L, 1522C written

[cevent@hadoop207 apache-flume-1.7.0]$ mkdir files/

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 168

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

drwxr-xr-x. 2 cevent cevent 4096 Jun 12 12:11 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:41 files

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:40 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:48 loggers

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

drwxrwxr-x. 3 cevent cevent 4096 Jun 12 14:23 upload

[cevent@hadoop207 apache-flume-1.7.0]$ cp CHANGELOG LICENSE files/

[cevent@hadoop207 apache-flume-1.7.0]$ ll files/

total 104

-rw-r--r--. 1 cevent cevent 77387 Jun 12 16:41 CHANGELOG

-rw-r--r--. 1 cevent cevent 27625 Jun 12 16:41 LICENSE

[cevent@hadoop207 apache-flume-1.7.0]$ mv files/CHANGELOG CHANGELOG.log

[cevent@hadoop207 apache-flume-1.7.0]$ mv files/LICENSE LICENSE.log

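[cevent@hadoop207 apache-flume-1.7.0]$ ll files/

total 0

(The two mv commands above put the renamed files in the current directory rather than inside files/, which is why files/ is now empty. They are moved back into files/ below.)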

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 272

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

-rw-r--r--. 1 cevent cevent 77387 Jun 12 16:41 CHANGELOG.log

drwxr-xr-x. 2 cevent cevent 4096 Jun 12 12:11 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:43 files

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:40 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

-rw-r--r--. 1 cevent cevent 27625 Jun 12 16:41 LICENSE.log

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:48 loggers

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

drwxrwxr-x. 3 cevent cevent 4096 Jun 12 14:23 upload

[cevent@hadoop207 apache-flume-1.7.0]$ mv CHANGELOG.log LICENSE.log files/

[cevent@hadoop207 apache-flume-1.7.0]$ ll files/

total 104

-rw-r--r--. 1 cevent cevent 77387 Jun 12 16:41 CHANGELOG.log

-rw-r--r--. 1 cevent cevent 27625 Jun 12 16:41 LICENSE.log

[cevent@hadoop207 apache-flume-1.7.0]$ vim tutu.txt files/

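2 files to edit

tutu is rabbit!

"tutu.txt" 1L, 16C written

Note: vim treats tutu.txt and files/ as two separate arguments. As a result, tutu.txt is created in the current directory rather than in files/, and the listing below shows it next to README.md. Its name also matches neither the .*file.* nor the .*log.* filegroup, so the TAILDIR source would not tail it even inside files/.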

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 172

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

drwxr-xr-x. 2 cevent cevent 4096 Jun 12 12:11 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:43 files

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:40 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:48 loggers

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

-rw-rw-r--. 1 cevent cevent 16 Jun 12 16:45 tutu.txt

drwxrwxr-x. 3 cevent cevent 4096 Jun 12 14:23 upload
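Before starting the agent, it can help to preview which files each filegroup will pick up. The bash sketch below does that with a hypothetical helper, `match_filegroup`. It assumes that the part of `filegroups.<name>` after the last `/` is a regex that must match the entire file name. It uses bash's `=~` as a stand-in for Java's regex engine; the two agree on simple patterns such as `.*log.*`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of regular files in DIR whose whole
# name matches REGEX, approximating one TAILDIR filegroup (bash =~ used as a
# stand-in for Java regex matching).
match_filegroup() {
  local dir=$1 re="^($2)\$" f name
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    name=${f##*/}
    if [[ $name =~ $re ]]; then
      echo "$name"
    fi
  done
}
```

Usage: `match_filegroup /opt/module/apache-flume-1.7.0/files '.*log.*'` should list CHANGELOG.log and LICENSE.log. With `'.*file.*'` it lists nothing here, since no file in files/ has "file" in its name.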

[cevent@hadoop207 apache-flume-1.7.0]$ bin/flume-ng agent -n a3 -c conf/ -f job/flume-taildir-hdfs.conf

Output (TAILDIR source with resumable tailing):

Info: Sourcing environment configuration script /opt/module/apache-flume-1.7.0/conf/flume-env.sh

Info: Including Hadoop libraries found via (/opt/module/hadoop-2.7.2/bin/hadoop) for HDFS access

Info: Including Hive libraries found via (/opt/module/hive-1.2.1) for Hive access

+ exec /opt/module/jdk1.7.0_79/bin/java -Xmx20m -cp '/opt/module/apache-flume-1.7.0/conf:/opt/module/apache-flume-1.7.0/lib/*:/opt/module/hadoop-2.7.2/etc/hadoop:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/common/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/hdfs/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/yarn/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-2.7.2/share/hadoop/mapreduce/*:/opt/module/hadoop-2.7.2/contrib/capacity-scheduler/*.jar:/opt/module/hive-1.2.1/lib/*' -Djava.library.path=:/opt/module/hadoop-2.7.2/lib/native org.apache.flume.node.Application -n a3 -f job/flume-taildir-hdfs.conf

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/apache-flume-1.7.0/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

19. Resuming from the last read position

[cevent@hadoop207 apache-flume-1.7.0]$ ll

total 176

drwxr-xr-x. 2 cevent cevent 4096 Jun 11 13:35 bin

-rw-r--r--. 1 cevent cevent 77387 Oct 11 2016 CHANGELOG

drwxr-xr-x. 2 cevent cevent 4096 Jun 12 12:11 conf

-rw-r--r--. 1 cevent cevent 6172 Sep 26 2016 DEVNOTES

-rw-r--r--. 1 cevent cevent 2873 Sep 26 2016 doap_Flume.rdf

drwxr-xr-x. 10 cevent cevent 4096 Oct 13 2016 docs

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:43 files

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 16:40 job

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 09:30 lib

-rw-r--r--. 1 cevent cevent 27625 Oct 13 2016 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 Jun 12 11:48 loggers

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 17:05 logs

-rw-r--r--. 1 cevent cevent 249 Sep 26 2016 NOTICE

-rw-r--r--. 1 cevent cevent 2520 Sep 26 2016 README.md

-rw-r--r--. 1 cevent cevent 1585 Oct 11 2016 RELEASE-NOTES

-rw-rw-r--. 1 cevent cevent 177 Jun 12 16:48 tail_dir.json

drwxrwxr-x. 2 cevent cevent 4096 Jun 11 13:35 tools

-rw-rw-r--. 1 cevent cevent 16 Jun 12 16:45 tutu.txt

drwxrwxr-x. 3 cevent cevent 4096 Jun 12 14:23 upload

[cevent@hadoop207 apache-flume-1.7.0]$ cat tail_dir.json    # view the position file

[{"inode":151788,"pos":77387,"file":"/opt/module/apache-flume-1.7.0/files/CHANGELOG.log"},{"inode":151791,"pos":27625,"file":"/opt/module/apache-flume-1.7.0/files/LICENSE.log"}]


[cevent@hadoop207 apache-flume-1.7.0]$

[cevent@hadoop207 apache-flume-1.7.0]$ cat >> files/LICENSE.log    # append to the file (finish input with Ctrl+D)

ccccccccccc

cccccccc

cccc

cccccccccccccc

Link: http://hadoop207.cevent.com:50070/explorer.html#/flume/upload2/20200612/16
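The position file is what makes resuming possible. For each tailed file it stores the inode and the byte offset (`pos`) already read, and after a restart TAILDIR continues from that offset instead of starting over. In the listing above, `pos` equals each file's size (77387 and 27625), so both files had been read completely before the append. The bash sketch below compares every recorded `pos` with the current file size. `unread_bytes` is a hypothetical helper that uses GNU stat, and it assumes the compact one-line JSON layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of Flume: print "<file> <bytes not yet read>"
# for every entry of a TAILDIR position file, assuming the compact one-line
# JSON layout {"inode":...,"pos":...,"file":"..."} shown above.
unread_bytes() {
  local pos file size
  # pull out each "pos"/"file" pair, then turn it into "pos path"
  grep -oE '"pos":[0-9]+,"file":"[^"]+"' "$1" |
    sed -E 's/^"pos":([0-9]+),"file":"(.*)"$/\1 \2/' |
    while read -r pos file; do
      if [ -f "$file" ]; then
        size=$(stat -c %s "$file")           # GNU stat: size in bytes
        echo "$file $((size - pos))"
      else
        echo "$file missing"
      fi
    done
}
```

Usage: `unread_bytes tail_dir.json`. Right after the `cat >> files/LICENSE.log` above, LICENSE.log would show a positive number until the source has shipped the new lines and rewritten tail_dir.json.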

20. Configuration walkthrough

// Flume: a system for collecting, aggregating and transporting logs

//1. Config for monitoring a single file and uploading it to HDFS

# Name the components on this agent

a2.sources = r2

a2.sinks = k2

a2.channels = c2

# Describe/configure the source

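# The source runs this shell command: /bin/bash -c "tail -F /opt/module/hive-1.2.1/logs/hive.log"

a2.sources.r2.type = exec

a2.sources.r2.command = tail -F /opt/module/hive-1.2.1/logs/hive.log

a2.sources.r2.shell = /bin/bash -c

# Describe the sink

a2.sinks.k2.type = hdfs

a2.sinks.k2.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/%Y%m%d/%H

# Prefix of the files written to HDFS

a2.sinks.k2.hdfs.filePrefix = logs-

# Round the event timestamp down, so that a new directory is started for each time bucket

a2.sinks.k2.hdfs.round = true

# Number of time units per bucket (new directory)

a2.sinks.k2.hdfs.roundValue = 1

# Time unit used for rounding: one new directory per hour

a2.sinks.k2.hdfs.roundUnit = hour

# Use the agent's local time instead of a timestamp header on the event

a2.sinks.k2.hdfs.useLocalTimeStamp = true

# Number of events taken from the channel per batch before flushing to HDFS; must not exceed the channel's transactionCapacity (100 below)

a2.sinks.k2.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a2.sinks.k2.hdfs.fileType = DataStream

# Roll (start) a new file every 60 seconds

a2.sinks.k2.hdfs.rollInterval = 60

# Also roll when a file reaches 134217700 bytes, just under 128 MB (134217728 bytes)

a2.sinks.k2.hdfs.rollSize = 134217700

# 0 disables rolling by event count (files roll only by time or size)

a2.sinks.k2.hdfs.rollCount = 0

# Use a channel which buffers events in memory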

a2.channels.c2.type = memory

a2.channels.c2.capacity = 1000

a2.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r2.channels = c2

a2.sinks.k2.channel = c2

//2. Monitor multiple new files in a directory (spooldir)

a3.sources = r3

a3.sinks = k3

a3.channels = c3

# Describe/configure the source

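# Once a file has been fully read, the .COMPLETED suffix is appended to its name

a3.sources.r3.type = spooldir

a3.sources.r3.spoolDir = /opt/module/apache-flume-1.7.0/upload

a3.sources.r3.fileSuffix = .COMPLETED

a3.sources.r3.fileHeader = true

# Ignore (do not upload) files whose name ends in .tmp: [^ ] is any non-space character, * repeats it zero or more times, and \.tmp is a literal ".tmp"

a3.sources.r3.ignorePattern = ([^ ]*\.tmp)

# Describe the sink

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/upload/%Y%m%d/%H

# Prefix of the files written to HDFS

a3.sinks.k3.hdfs.filePrefix = upload-

# Whether to roll directories by time (round the timestamp down)

a3.sinks.k3.hdfs.round = true

# Number of time units per new directory

a3.sinks.k3.hdfs.roundValue = 1

# Time unit used for rounding

a3.sinks.k3.hdfs.roundUnit = hour

# Use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# Number of events accumulated before each flush to HDFS

a3.sinks.k3.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a3.sinks.k3.hdfs.fileType = DataStream

# Roll a new file every 60 seconds

a3.sinks.k3.hdfs.rollInterval = 60

# Roll size of each file, roughly 128 MB

a3.sinks.k3.hdfs.rollSize = 134217700

# 0: rolling does not depend on the number of events

a3.sinks.k3.hdfs.rollCount = 0

# Use a channel which buffers events in memory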

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3

//3. Monitor multiple appended files in a directory, with resumable tailing (TAILDIR)

a3.sources = r3

a3.sinks = k3

a3.channels = c3

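# Describe/configure the source: define the filegroups (sets of files to tail)

# .*file.* selects every file whose name contains "file"; .*log.* every file whose name contains "log"

# positionFile records, as JSON, the inode and last read offset (pos) of every tailed file

a3.sources.r3.type = TAILDIR

a3.sources.r3.positionFile = /opt/module/apache-flume-1.7.0/tail_dir.json

a3.sources.r3.filegroups = f1 f2

a3.sources.r3.filegroups.f1 = /opt/module/apache-flume-1.7.0/files/.*file.*

a3.sources.r3.filegroups.f2 = /opt/module/apache-flume-1.7.0/files/.*log.*

# Describe the sink

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop207.cevent.com:8020/flume/upload2/%Y%m%d/%H

# Prefix of the files written to HDFS

a3.sinks.k3.hdfs.filePrefix = upload-

# Whether to roll directories by time (round the timestamp down)

a3.sinks.k3.hdfs.round = true

# Number of time units per new directory

a3.sinks.k3.hdfs.roundValue = 1

# Time unit used for rounding

a3.sinks.k3.hdfs.roundUnit = hour

# Use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# Number of events accumulated before each flush to HDFS

a3.sinks.k3.hdfs.batchSize = 100

# File type: DataStream writes uncompressed data (CompressedStream supports compression)

a3.sinks.k3.hdfs.fileType = DataStream

# Roll a new file every 60 seconds

a3.sinks.k3.hdfs.rollInterval = 60

# Roll size of each file, roughly 128 MB

a3.sinks.k3.hdfs.rollSize = 134217700

# 0: rolling does not depend on the number of events

a3.sinks.k3.hdfs.rollCount = 0

# Use a channel which buffers events in memory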

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3