Hadoop HA Cluster Based on Zookeeper

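Prerequisite: the basic Zookeeper and Hadoop clusters are already set up.

Zookeeper: a fast coordination store, used here to coordinate failover between the two Masters.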

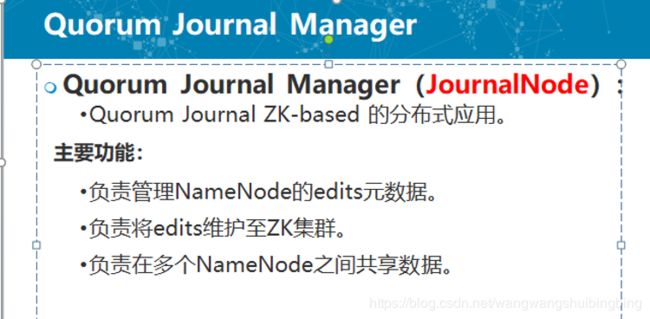

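JournalNode holds the shared edits log: the active Master writes every edit to the JournalNode quorum and the standby Master reads it back, which keeps the two Masters in sync. Zookeeper itself is used by the ZKFC processes to detect failures and elect the active Master. In this setup the JournalNodes run on the same three hosts as the Zookeeper ensemble.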

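Note: main differences between HA on ZK and the original cluster

Original cluster: the Secondary NameNode periodically pulls the metadata image and the edits log from the Master, merges them, and sends the merged image back to the Master.

HA cluster: the metadata image is stored on the Masters (as in the basic cluster), and the edits log is stored on the JournalNode cluster, which here runs on the zk hosts.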

Responsibilities of the JournalNode process

Step 1: HA configuration

core-site.xml

| Property | Value |
| --- | --- |
| hadoop.tmp.dir | file:/usr/hadoop/hadoop-3.1.2 |
| fs.defaultFS | hdfs://nnc1/ |
| ha.zookeeper.quorum | 192.168.150.111:2181,192.168.150.112:2181,192.168.150.113:2181 |
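Each name/value pair above corresponds to one `<property>` element in core-site.xml. The following is a minimal sketch of that mapping, assuming a POSIX shell; the helper names `prop` and `render_core_site` are made up for this example and are not part of Hadoop.

```shell
#!/bin/sh
# Print one Hadoop <property> element for a name/value pair.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Emit a core-site.xml body from the three pairs listed in this section.
render_core_site() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  prop hadoop.tmp.dir 'file:/usr/hadoop/hadoop-3.1.2'
  prop fs.defaultFS 'hdfs://nnc1/'
  prop ha.zookeeper.quorum '192.168.150.111:2181,192.168.150.112:2181,192.168.150.113:2181'
  echo '</configuration>'
}

# Print to stdout; redirect to a file once the output looks right.
render_core_site
```

The same `prop` helper could be reused for the hdfs-site.xml, mapred-site.xml and yarn-site.xml pairs.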

hdfs-site.xml

| Property | Value |
| --- | --- |
| dfs.replication | 1 |
| dfs.namenode.name.dir | file:/usr/hadoop/hadoop-3.1.2/dfs/name |
| dfs.blocksize | 1048576 |
| dfs.nameservices | nnc1 |
| dfs.ha.namenodes.nnc1 | nn1,nn2 |
| dfs.namenode.rpc-address.nnc1.nn1 | HadoopMaster01:9000 |
| dfs.namenode.rpc-address.nnc1.nn2 | HadoopMaster02:9000 |
| dfs.namenode.shared.edits.dir | qjournal://192.168.150.111:8485;192.168.150.112:8485;192.168.150.113:8485/nnc1 |
| dfs.journalnode.edits.dir | /usr/hadoop/hadoop-3.1.2/dfs/journalData |
| dfs.ha.automatic-failover.enabled | true |
| dfs.client.failover.proxy.provider.nnc1 | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| dfs.ha.fencing.methods | sshfence, shell(/bin/true), shell(/usr/hadoop/hadoop-3.1.2/sbin/masterSwitchRecord.sh) (one method per line inside the value) |
| dfs.ha.fencing.ssh.private-key-files | /root/.ssh/id_rsa |
| dfs.ha.fencing.ssh.connect-timeout | 30000 |
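The fencing entry is the one value in hdfs-site.xml that spans several lines: the methods are listed one per line inside a single `<value>` and are tried in order until one reports success. The sketch below prints how that part of the file might look; `fencing_properties` is a hypothetical helper name. Note that `shell(/bin/true)` always succeeds, so with this ordering the `masterSwitchRecord.sh` entry after it would likely never be reached.

```shell
#!/bin/sh
# Print the fencing-related <property> elements for hdfs-site.xml.
# All three fencing methods share one <value>, one method per line.
fencing_properties() {
  cat <<'EOF'
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence
shell(/bin/true)
shell(/usr/hadoop/hadoop-3.1.2/sbin/masterSwitchRecord.sh)</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
EOF
}

fencing_properties
```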

mapred-site.xml

| Property | Value |
| --- | --- |
| mapreduce.framework.name | yarn |

yarn-site.xml

| Property | Value |
| --- | --- |
| yarn.resourcemanager.ha.enabled | true |
| yarn.resourcemanager.cluster-id | yrmc1 |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 |
| yarn.resourcemanager.hostname.rm1 | 192.168.150.101 |
| yarn.resourcemanager.hostname.rm2 | 192.168.150.102 |
| yarn.resourcemanager.zk-address | 192.168.150.111:2181,192.168.150.112:2181,192.168.150.113:2181 |
| yarn.nodemanager.aux-services | mapreduce_shuffle |
| yarn.nodemanager.aux-services.mapreduce_shuffle.class | org.apache.hadoop.mapred.ShuffleHandler |
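The Zookeeper address list appears in both core-site.xml and yarn-site.xml, and a comma typed in place of a dot (for example `192,168.150.113`) is easy to miss. A small sanity check might look like the sketch below. It assumes the entries are IPv4 literals with ports, as in this setup, and would reject hostnames; `valid_quorum` is a hypothetical helper name.

```shell
#!/bin/sh
# Return 0 if $1 is a comma-separated list of IPv4:port entries, 1 otherwise.
# Only the shape is checked, not whether the hosts are reachable.
valid_quorum() {
  printf '%s\n' "$1" | grep -Eq \
    '^[0-9]{1,3}(\.[0-9]{1,3}){3}:[0-9]+(,[0-9]{1,3}(\.[0-9]{1,3}){3}:[0-9]+)*$'
}

# First string is well-formed; second has a comma inside the last address.
for q in \
  '192.168.150.111:2181,192.168.150.112:2181,192.168.150.113:2181' \
  '192.168.150.111:2181,192.168.150.112:2181,192,168.150.113:2181'
do
  if valid_quorum "$q"; then echo "ok:  $q"; else echo "bad: $q"; fi
done
```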

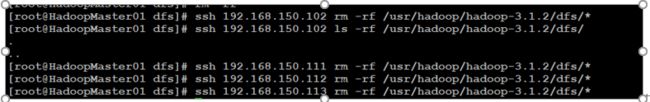

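Step 2: Clean up the historical partition data

Step 3: Sync files

(Sync the entire Hadoop etc/hadoop/* directory to the other nodes)

Step 4: Start the ZK cluster and the JournalNode cluster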
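One way to do the sync is a loop over the target hosts with `scp`. This is only a sketch: the install path and the `root` user follow the configuration in this document, `HadoopMaster02` is the only target host named there, and the remaining node names have to be added to `HOSTS` by hand. `run` and `sync_conf` are helper names invented for this example, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
HADOOP_HOME=${HADOOP_HOME:-/usr/hadoop/hadoop-3.1.2}
# Space-separated target hosts; extend with the remaining nodes of the cluster.
HOSTS=${HOSTS:-HadoopMaster02}
DRY_RUN=${DRY_RUN:-1}   # 1 = print the commands only, 0 = execute them

# Echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Copy the whole etc/hadoop directory to the same location on every host.
sync_conf() {
  for h in $HOSTS; do
    run scp -r "$HADOOP_HOME/etc/hadoop" "root@$h:$HADOOP_HOME/etc/"
  done
}

sync_conf
```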

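./zkServer.sh start (starts the server; run on every ZK node)

hadoop-daemon.sh start journalnode

hdfs --daemon start journalnode

Use either of the two commands above to start JournalNode on each JournalNode host. In Hadoop 3.x, hadoop-daemon.sh is deprecated in favor of hdfs --daemon.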

Step 5: Pick one Master and reformat it

hdfs namenode -format
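These notes do not say how the second Master obtains its metadata. A common approach, shown here as an assumption rather than as part of the original procedure, is to start the freshly formatted NameNode and then run `hdfs namenode -bootstrapStandby` on the other Master so that it copies the metadata instead of formatting again. The sketch assumes passwordless `ssh` as `root` and that `hdfs` is on the remote PATH; `run` and `init_namenodes` are hypothetical helper names, and nothing is executed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
DRY_RUN=${DRY_RUN:-1}   # 1 = print the commands only, 0 = execute them

# Echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

init_namenodes() {
  # First Master: format, then start the NameNode so the second one can copy from it.
  run ssh root@HadoopMaster01 'hdfs namenode -format && hdfs --daemon start namenode'
  # Second Master: pull the formatted metadata instead of formatting a second time.
  run ssh root@HadoopMaster02 'hdfs namenode -bootstrapStandby'
}

init_namenodes
```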

Step 6: Initialize the ZKFC process

Pick one master and run:

hdfs zkfc -formatZK

Add these variables to start-dfs.sh:

HDFS_JOURNALNODE_USER=root

HDFS_ZKFC_USER=root

Step 7: Start the cluster and test HA

start-dfs.sh
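Once the daemons are up, the HA state can be queried with `hdfs haadmin` and `yarn rmadmin`. The sketch below uses the NameNode ids `nn1`/`nn2` and ResourceManager ids `rm1`/`rm2` from the configuration in this document. It assumes YARN has been started separately, since start-dfs.sh only starts the HDFS daemons. `run` and `check_ha` are helper names invented for this example, and the commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
DRY_RUN=${DRY_RUN:-1}   # 1 = print the commands only, 0 = execute them

# Echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Ask each NameNode and ResourceManager whether it is active or standby.
check_ha() {
  for nn in nn1 nn2; do
    run hdfs haadmin -getServiceState "$nn"
  done
  for rm in rm1 rm2; do
    run yarn rmadmin -getServiceState "$rm"
  done
}

check_ha
```

A simple failover test would be to stop the NameNode on the active Master (hdfs --daemon stop namenode), run the check again, and confirm that the other NameNode now reports active.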