Flume 1.6.0 Installation and Configuration

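Flume is a log collection system that lets you define both where the data comes from and where it goes. A Baidu search turns up plenty of detailed introductions.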

The "ng" in flume-ng stands for "next generation".

Correspondingly, flume-og refers to the old generation.

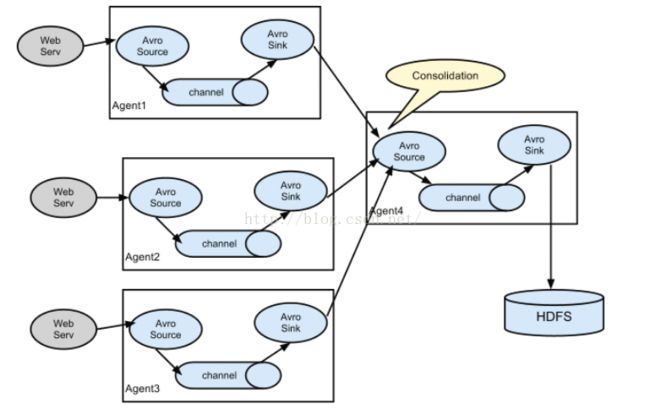

As the figure shows, you can chain Flume nodes together however you like and finally write the logs to HDFS, Kafka, or wherever else you need them.

Below I walk through a basic installation and configuration.

Step 1: Download and install

First, download apache-flume-1.6.0-bin.tar.gz from the official Apache Flume website (the user guide is at http://flume.apache.org/FlumeUserGuide.html).

Then extract it with tar -zxvf apache-flume-1.6.0-bin.tar.gz
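As a shell function, this step might look like the minimal sketch below. It assumes the tarball is already downloaded (old releases are kept on the Apache archive, e.g. under https://archive.apache.org/dist/flume/1.6.0/; please verify the exact URL yourself). The name `extract_flume` and the check for `bin/flume-ng` are my own additions, not part of Flume.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract a Flume binary tarball into a target directory
# and confirm that the launcher script bin/flume-ng is present afterwards.
extract_flume() {
  local tarball="$1"      # e.g. apache-flume-1.6.0-bin.tar.gz
  local dest="${2:-.}"    # directory to extract into (default: current dir)
  mkdir -p "$dest" || return 1
  tar -zxf "$tarball" -C "$dest" || return 1
  # The 1.6.0 binary tarball unpacks into apache-flume-1.6.0-bin/
  if [ ! -f "$dest/apache-flume-1.6.0-bin/bin/flume-ng" ]; then
    echo "bin/flume-ng not found under $dest/apache-flume-1.6.0-bin" >&2
    return 1
  fi
  # Print the install directory so callers can capture it
  echo "$dest/apache-flume-1.6.0-bin"
}
```

Usage: `extract_flume apache-flume-1.6.0-bin.tar.gz /usr/local`.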

Next, configure the JDK (I'll skip the JDK installation itself here).

After that, go into apache-flume-1.6.0-bin/conf.

Copy flume-env.sh.template to a new file named flume-env.sh.

Open flume-env.sh (for example with vim flume-env.sh) and add the line export JAVA_HOME=/usr/local/jdk7/ (adjust it to your own JDK install path).
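The same two edits can be scripted. This is a minimal sketch with a hypothetical function `setup_flume_env`, assuming the first argument is the unpacked Flume directory; it is written so that rerunning it does not duplicate the JAVA_HOME line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create conf/flume-env.sh from the shipped template
# and append a JAVA_HOME export (pass your own JDK location).
setup_flume_env() {
  local flume_home="$1"   # e.g. /usr/local/apache-flume-1.6.0-bin
  local java_home="$2"    # e.g. /usr/local/jdk7/
  local conf="$flume_home/conf"
  # Copy the template only once, so rerunning does not clobber earlier edits
  if [ ! -f "$conf/flume-env.sh" ]; then
    cp "$conf/flume-env.sh.template" "$conf/flume-env.sh" || return 1
  fi
  # Append the export line unless an identical line is already present
  grep -qxF "export JAVA_HOME=$java_home" "$conf/flume-env.sh" \
    || echo "export JAVA_HOME=$java_home" >> "$conf/flume-env.sh"
}
```

Usage: `setup_flume_env /usr/local/apache-flume-1.6.0-bin /usr/local/jdk7/`.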

Step 2: Configure

Here I set up three Flume agents: two collect logs and the third aggregates them.

The structure looks like this:

z0 → z2 ← z1

(z0 and z1 collect logs; z2 aggregates what they send.)

Copy apache-flume-1.6.0-bin three times, as flume-z0, flume-z1 and flume-z2 (the paths in the configs below place them under /usr/local).

In each copy's conf directory, copy flume-conf.properties.template to agent0.conf, agent1.conf and agent2.conf respectively.
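Both copy steps fit in one loop. A minimal sketch, assuming apache-flume-1.6.0-bin sits in the directory you pass in (e.g. /usr/local); `make_flume_copies` is a hypothetical name, and it refuses to run twice because `cp -r` into an existing directory would nest the copy instead of replacing it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make three copies of the unpacked distribution
# (flume-z0, flume-z1, flume-z2) and give each its own agentN.conf.
make_flume_copies() {
  local base="$1"   # directory containing apache-flume-1.6.0-bin, e.g. /usr/local
  local i
  for i in 0 1 2; do
    # Refuse to overwrite: cp -r into an existing directory would nest the copy
    if [ -e "$base/flume-z$i" ]; then
      echo "$base/flume-z$i already exists" >&2
      return 1
    fi
    cp -r "$base/apache-flume-1.6.0-bin" "$base/flume-z$i" || return 1
    # Each copy gets its own config file, seeded from the shipped template
    cp "$base/flume-z$i/conf/flume-conf.properties.template" \
       "$base/flume-z$i/conf/agent$i.conf" || return 1
  done
}
```

Usage: `make_flume_copies /usr/local`.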

agent0 / agent1 (agent0.conf is shown; agent1.conf follows the same pattern for flume-z1):

a0.sources = r1

a0.channels = c1

a0.sinks = k1

# Describe/configure the source

a0.sources.r1.type = exec

# a0.sources.r1.bind = localhost

# a0.sources.r1.port = 44444

a0.sources.r1.command = tail -f /usr/local/flume-z0/testlog/test.log

# Describe the sink

a0.sinks.k1.type = avro

a0.sinks.k1.hostname = localhost

a0.sinks.k1.port = 44444

# Use a channel which buffers events in memory

a0.channels.c1.type = memory

a0.channels.c1.capacity = 1000

a0.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a0.sources.r1.channels = c1

a0.sinks.k1.channel = c1
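Only agent0.conf is shown above. Assuming agent1.conf differs just in the agent prefix (a1 instead of a0, so it is launched with --name a1) and in the tailed log path (flume-z1 instead of flume-z0), it could be generated with sed. `derive_agent1_conf` is a hypothetical helper; the avro sink target stays localhost:44444 because both collectors feed the same aggregator.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive agent1.conf from agent0.conf by renaming the
# agent prefix (a0. -> a1., also in commented-out lines) and pointing the
# tail command at flume-z1's log. The avro sink target is left unchanged.
derive_agent1_conf() {
  local src="$1"   # e.g. /usr/local/flume-z0/conf/agent0.conf
  local dst="$2"   # e.g. /usr/local/flume-z1/conf/agent1.conf
  sed -e 's/^\(#* *\)a0\./\1a1./' \
      -e 's#/flume-z0/#/flume-z1/#g' \
      "$src" > "$dst"
}
```

Usage: `derive_agent1_conf /usr/local/flume-z0/conf/agent0.conf /usr/local/flume-z1/conf/agent1.conf`.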

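The config above defines all three components: a source, a channel and a sink. The source uses type = exec, which runs a Unix command; here the command is tail -f, so the agent monitors test.log.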

Another commonly used source is spooldir, which watches a directory for newly added files:

- a1.sources.r1.type = spooldir

- a1.sources.r1.channels = c1

- a1.sources.r1.spoolDir = /home/hadoop/flume-1.5.0-bin/logs

- a1.sources.r1.fileHeader = true
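The Flume user guide warns that the spooling directory source expects a file to be complete and unchanged once it appears in the directory, and file names must not be reused; otherwise Flume logs an error and stops processing. A common pattern is to stage the file elsewhere and then mv it in. This is a minimal sketch with a hypothetical `drop_into_spool` function; staging in the spool directory's parent is my assumption (it is usually the same filesystem, where mv is a plain rename).

```bash
#!/usr/bin/env bash
# Hypothetical helper: hand a finished file over to a spooldir source.
# The file is staged in the spool directory's parent and then moved in,
# so Flume never sees a half-written file.
drop_into_spool() {
  local src="$1"        # finished file to hand over
  local spool_dir="$2"  # the directory configured as spoolDir
  local staging name
  # mktemp creates a uniquely named staging file next to the spool directory
  staging="$(mktemp "$(dirname "$spool_dir")/.spool.XXXXXX")" || return 1
  cp "$src" "$staging" || { rm -f "$staging"; return 1; }
  # Reuse mktemp's random suffix so repeated drops get different names
  name="$(basename "$src").${staging##*.}"
  mv "$staging" "$spool_dir/$name" || { rm -f "$staging"; return 1; }
  echo "$spool_dir/$name"
}
```

Usage: `drop_into_spool /tmp/app.log /home/hadoop/flume-1.5.0-bin/logs`.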

There are other sources as well, such as avro.

The sink here is of type avro. Avro is a data serialization and RPC system.

This sink points at agent2's source (localhost:44444).
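Because the collectors' avro sink must target the port that agent2's avro source listens on, a small consistency check can catch typos before starting anything. A sketch with two hypothetical helpers, `conf_get` and `check_avro_link`. The parser is deliberately simplified: it only understands `key = value` lines (Java properties files also allow other separators), and, as with java.util.Properties, a later entry for the same key wins. Only ports are compared, since the source's bind address may legitimately differ from the sink's hostname.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of one key from a Flume config.
# Simplified: only "key = value" lines; comment lines are skipped.
conf_get() {
  local file="$1" key="$2"
  awk -v k="$key" '
    /^[ \t]*#/ { next }                          # skip comment lines
    {
      i = index($0, "=")
      if (i == 0) next                           # not a key = value line
      name = substr($0, 1, i - 1)
      val = substr($0, i + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)          # trim surrounding blanks
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      if (name == k) { found = 1; last = val }   # later entries win
    }
    END { if (found) print last }
  ' "$file"
}

# Hypothetical check: does the avro sink's port match the avro source's port?
check_avro_link() {
  local sink_conf="$1" sink="$2"   # e.g. agent0.conf, a0.sinks.k1
  local src_conf="$3" src="$4"     # e.g. agent2.conf, a2.sources.r1
  local sink_port src_port
  sink_port="$(conf_get "$sink_conf" "$sink.port")"
  src_port="$(conf_get "$src_conf" "$src.port")"
  if [ -n "$sink_port" ] && [ "$sink_port" = "$src_port" ]; then
    echo "ok: $sink -> $src on port $sink_port"
  else
    echo "mismatch: $sink port '$sink_port' vs $src port '$src_port'" >&2
    return 1
  fi
}
```

Usage: `check_avro_link /usr/local/flume-z0/conf/agent0.conf a0.sinks.k1 /usr/local/flume-z2/conf/agent2.conf a2.sources.r1`.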

agent2:

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Describe/configure the source

a2.sources.r1.type = avro

a2.sources.r1.bind = localhost

a2.sources.r1.port = 44444

a2.sources.r1.interceptors = i1

a2.sources.r1.interceptors.i1.type = timestamp

# a2.sources.r1.command = tail -f /usr/local/flume-z0/testlog/test.log

# Describe the sink

a2.sinks.k1.type = logger

# Use a channel which buffers events in memory

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

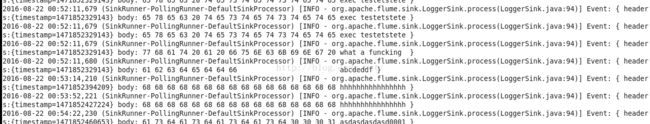

With this configuration, when I append to test.log under z0 or z1, z0/z1 detect the change and forward the log events to z2.

Now start z0, z1 and z2.
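The start commands aren't shown here. This is a sketch assuming the standard `bin/flume-ng agent` launcher with `--conf`, `--conf-file` and `--name`, the three copies under /usr/local, and agentN.conf using the prefix aN (if your agent1.conf still uses the a0 prefix, launch it with --name a0 instead). `start_agent`, `run` and the DRY_RUN switch are hypothetical. Starting z2 first means its avro source is already listening on 44444 when z0/z1's avro sinks try to connect; it also creates test.log beforehand, since `tail -f` fails on a missing file.

```bash
#!/usr/bin/env bash
# Hypothetical launcher: source this file, then run "start_agent N" in its own
# terminal, N = 2 first, then 0 and 1. With DRY_RUN=1 commands are only printed.
FLUME_BASE="${FLUME_BASE:-/usr/local}"   # where flume-z0..z2 live (assumption)

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"        # dry run: show the command only
  else
    "$@"
  fi
}

start_agent() {
  local n="$1"
  local home="$FLUME_BASE/flume-z$n"
  case "$n" in
    0|1)
      # The exec source runs "tail -f" on this file, so make sure it exists
      run mkdir -p "$home/testlog" || return 1
      run touch "$home/testlog/test.log" || return 1
      ;;
    2) ;;
    *) echo "usage: start_agent 0|1|2" >&2; return 1 ;;
  esac
  # Assumes agentN.conf uses the property prefix aN, hence --name aN;
  # the logger sink on z2 prints events to this console
  run "$home/bin/flume-ng" agent \
    --conf "$home/conf" \
    --conf-file "$home/conf/agent$n.conf" \
    --name "a$n" \
    -Dflume.root.logger=INFO,console
}
```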

Append a line to the file under z0: echo "asdasdasdasd000102002102102" >> /usr/local/flume-z0/testlog/test.log
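To push several lines through both collectors at once, a hypothetical helper like the one below appends numbered, timestamped test lines to a log. If the pipeline is working, z2's logger sink should print one event per appended line, carrying the timestamp header added by its interceptor.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append COUNT numbered, timestamped test lines to LOG.
append_test_lines() {
  local log="$1" count="${2:-1}" tag="${3:-test}"
  local i=1
  while [ "$i" -le "$count" ]; do
    # Each line: <tag> <sequence number> <local date and time>
    echo "$tag $i $(date '+%Y-%m-%d %H:%M:%S')" >> "$log" || return 1
    i=$((i + 1))
  done
}
```

Usage: `append_test_lines /usr/local/flume-z0/testlog/test.log 3 z0` and `append_test_lines /usr/local/flume-z1/testlog/test.log 3 z1`.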

z2 prints the event on its console.

The experiment works.

Summary of sources, channels, sinks and other components

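`*` = `org.apache.flume` (an abbreviation used in the table below). An alias of – means the component has no short alias and is referenced by its fully qualified class name; the `org.example.*` rows stand for your own custom implementations.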

| Component Interface | Type Alias | Implementation Class |
| --- | --- | --- |
| `*.Channel` | memory | `*.channel.MemoryChannel` |
| `*.Channel` | jdbc | `*.channel.jdbc.JdbcChannel` |
| `*.Channel` | file | `*.channel.file.FileChannel` |
| `*.Channel` | – | `*.channel.PseudoTxnMemoryChannel` |
| `*.Channel` | – | `org.example.MyChannel` |
| `*.Source` | avro | `*.source.AvroSource` |
| `*.Source` | netcat | `*.source.NetcatSource` |
| `*.Source` | seq | `*.source.SequenceGeneratorSource` |
| `*.Source` | exec | `*.source.ExecSource` |
| `*.Source` | syslogtcp | `*.source.SyslogTcpSource` |
| `*.Source` | multiport_syslogtcp | `*.source.MultiportSyslogTCPSource` |
| `*.Source` | syslogudp | `*.source.SyslogUDPSource` |
| `*.Source` | spooldir | `*.source.SpoolDirectorySource` |
| `*.Source` | http | `*.source.http.HTTPSource` |
| `*.Source` | thrift | `*.source.ThriftSource` |
| `*.Source` | jms | `*.source.jms.JMSSource` |
| `*.Source` | – | `*.source.avroLegacy.AvroLegacySource` |
| `*.Source` | – | `*.source.thriftLegacy.ThriftLegacySource` |
| `*.Source` | – | `org.example.MySource` |
| `*.Sink` | null | `*.sink.NullSink` |
| `*.Sink` | logger | `*.sink.LoggerSink` |
| `*.Sink` | avro | `*.sink.AvroSink` |
| `*.Sink` | hdfs | `*.sink.hdfs.HDFSEventSink` |
| `*.Sink` | hbase | `*.sink.hbase.HBaseSink` |
| `*.Sink` | asynchbase | `*.sink.hbase.AsyncHBaseSink` |
| `*.Sink` | elasticsearch | `*.sink.elasticsearch.ElasticSearchSink` |
| `*.Sink` | file_roll | `*.sink.RollingFileSink` |
| `*.Sink` | irc | `*.sink.irc.IRCSink` |
| `*.Sink` | thrift | `*.sink.ThriftSink` |
| `*.Sink` | – | `org.example.MySink` |
| `*.ChannelSelector` | replicating | `*.channel.ReplicatingChannelSelector` |
| `*.ChannelSelector` | multiplexing | `*.channel.MultiplexingChannelSelector` |
| `*.ChannelSelector` | – | `org.example.MyChannelSelector` |
| `*.SinkProcessor` | default | `*.sink.DefaultSinkProcessor` |
| `*.SinkProcessor` | failover | `*.sink.FailoverSinkProcessor` |
| `*.SinkProcessor` | load_balance | `*.sink.LoadBalancingSinkProcessor` |
| `*.SinkProcessor` | – | |
| `*.interceptor.Interceptor` | timestamp | `*.interceptor.TimestampInterceptor$Builder` |
| `*.interceptor.Interceptor` | host | `*.interceptor.HostInterceptor$Builder` |
| `*.interceptor.Interceptor` | static | `*.interceptor.StaticInterceptor$Builder` |
| `*.interceptor.Interceptor` | regex_filter | `*.interceptor.RegexFilteringInterceptor$Builder` |
| `*.interceptor.Interceptor` | regex_extractor | `*.interceptor.RegexExtractorInterceptor$Builder` |
| `*.channel.file.encryption.KeyProvider$Builder` | jceksfile | `*.channel.file.encryption.JCEFileKeyProvider` |
| `*.channel.file.encryption.KeyProvider$Builder` | – | `org.example.MyKeyProvider` |
| `*.channel.file.encryption.CipherProvider` | aesctrnopadding | `*.channel.file.encryption.AESCTRNoPaddingProvider` |
| `*.channel.file.encryption.CipherProvider` | – | `org.example.MyCipherProvider` |
| `*.serialization.EventSerializer$Builder` | text | `*.serialization.BodyTextEventSerializer$Builder` |
| `*.serialization.EventSerializer$Builder` | avro_event | `*.serialization.FlumeEventAvroEventSerializer$Builder` |
| `*.serialization.EventSerializer$Builder` | – | `org.example.MyEventSerializer$Builder` |