Kafka producer example

Kafka cluster setup and topic creation: see "Kafka cluster setup and Kafka command usage"

ZooKeeper cluster setup: see "ZooKeeper cluster setup and usage"

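1. Create the topic user-info with 3 partitions and a replication factor of 3 (one leader plus 2 follower replicas per partition)

kafka-topics.sh --zookeeper 192.168.34.128:2181 --create --topic user-info --partitions 3 --replication-factor 3

2. Producer: KafkaProducerDemo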

package cn.dl.main;

import com.alibaba.fastjson.JSONObject;

import cn.dl.utils.ReadPropertiesUtils;

import java.util.*;

import kafka.javaapi.producer.Producer;

import kafka.producer.KeyedMessage;

import kafka.producer.ProducerConfig;

import kafka.serializer.StringEncoder;

/**

* Created by Tiger on 2018/1/28.

*/

public class KafkaProducerDemo extends Thread{

// Message topic

private String topic;

// Producer instance

private Producer<String, String> producer;

private static Map<String, String> config = new HashMap<String, String>();

public KafkaProducerDemo(String topic){

this.topic = topic;

this.producer = createProducer();

}

public static void main(String[] args) throws Exception {

// Load the configuration file

config.putAll(ReadPropertiesUtils.readConfig("config.properties"));

System.out.println(config);

new KafkaProducerDemo(config.get("topic")).start();

}

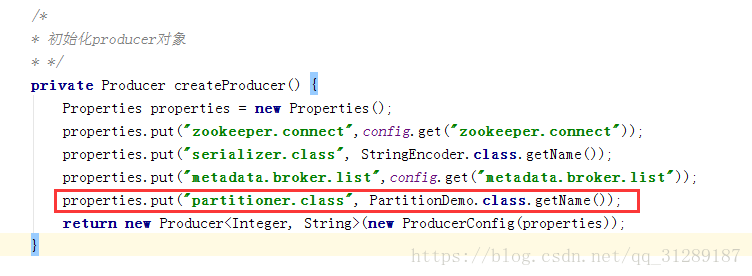

/*

* Initialize the producer instance

* */

private Producer<String, String> createProducer() {

Properties properties = new Properties();

properties.put("zookeeper.connect",config.get("zookeeper.connect"));

properties.put("serializer.class", StringEncoder.class.getName());

properties.put("metadata.broker.list",config.get("metadata.broker.list"));

properties.put("partitioner.class", PartitionDemo.class.getName());

return new Producer<String, String>(new ProducerConfig(properties));

}

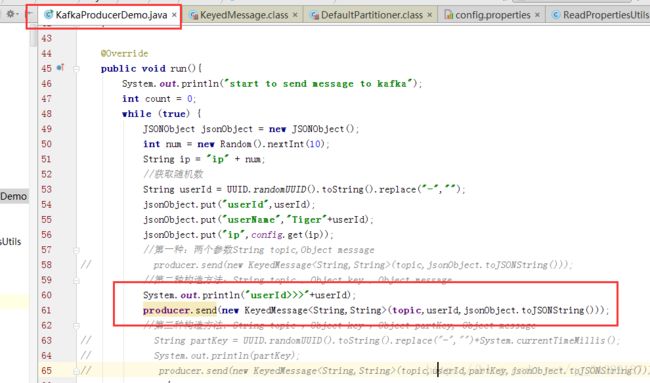

@Override

public void run(){

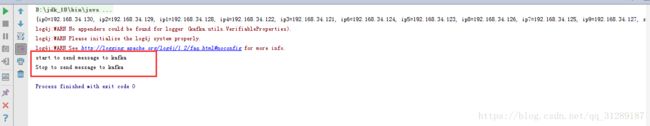

System.out.println("start to send message to kafka");

int count = 0;

while (true) {

JSONObject jsonObject = new JSONObject();

int num = new Random().nextInt(10);

String ip = "ip" + num;

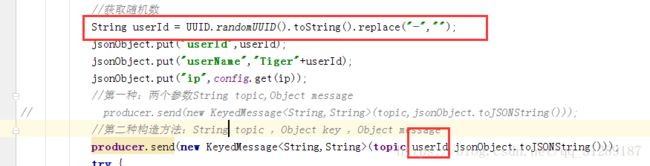

// Generate a random user ID

String userId = UUID.randomUUID().toString().replace("-","");

jsonObject.put("userId",userId);

jsonObject.put("userName","Tiger"+userId);

jsonObject.put("ip",config.get(ip));

// Option 1: two arguments, String topic, Object message

// producer.send(new KeyedMessage<String, String>(topic,jsonObject.toJSONString()));

// Option 2: String topic, Object key, Object message

System.out.println("userId>>>"+userId);

producer.send(new KeyedMessage<String, String>(topic,userId,jsonObject.toJSONString()));

// Option 3: String topic, Object key, Object partKey, Object message

// String partKey = UUID.randomUUID().toString().replace("-","")+System.currentTimeMillis();

// System.out.println(partKey);

// producer.send(new KeyedMessage<String, String>(topic,userId,partKey,jsonObject.toJSONString()));

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

count ++;

if(count == 500){

System.out.println("Stop to send message to kafka");

break;

}

}

}

}

3. Reading the configuration file: ReadPropertiesUtils

package cn.dl.utils;

import java.io.IOException;

import java.io.InputStream;

import java.util.HashMap;

import java.util.Map;

import java.util.Properties;

import java.util.Set;

/**

* Created by Tiger on 2018/8/18.

*/

public class ReadPropertiesUtils {

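/**

* Reads a properties file from the classpath.

* @param configFileName name of the properties file on the classpath

* @exception IOException if the file cannot be read

* @return the configuration as a key/value map

* */

public static Map<String, String> readConfig(String configFileName) throws IOException {

Map<String, String> config = new HashMap<String, String>();

InputStream in = ReadPropertiesUtils.class.getClassLoader().getResourceAsStream(configFileName);

Properties properties = new Properties();

properties.load(in);

Set<Map.Entry<Object, Object>> entrySet = properties.entrySet();

for(Map.Entry<Object, Object> entry : entrySet){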

String key = String.valueOf(entry.getKey());

String value = String.valueOf(entry.getValue());

config.put(key,value);

}

return config;

}

}
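The reader above never closes its input stream and throws a NullPointerException when the file is missing. Below is a minimal sketch of a more defensive variant, assuming Java 7 or later. The class name `ConfigLoaderSketch` and the helpers `toMap` and `parse` are hypothetical names used for illustration only; they are not part of the original project.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical helper for illustration; not part of the original project.
class ConfigLoaderSketch {

    // Copies all entries of a Properties object into a String-to-String map.
    static Map<String, String> toMap(Properties properties) {
        Map<String, String> config = new HashMap<>();
        for (String name : properties.stringPropertyNames()) {
            config.put(name, properties.getProperty(name));
        }
        return config;
    }

    // Loads a properties file from the classpath; the stream is closed automatically.
    static Map<String, String> readConfig(String fileName) throws IOException {
        ClassLoader loader = ConfigLoaderSketch.class.getClassLoader();
        try (InputStream in = loader.getResourceAsStream(fileName)) {
            if (in == null) {
                throw new IOException("Not found on the classpath: " + fileName);
            }
            Properties properties = new Properties();
            properties.load(in);
            return toMap(properties);
        }
    }

    // Parses properties from an in-memory string, handy for quick checks.
    static Map<String, String> parse(String text) {
        try {
            Properties properties = new Properties();
            properties.load(new StringReader(text));
            return toMap(properties);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> config = parse("topic=user-info\nip0=192.168.34.130\n");
        System.out.println(config.get("topic")); // prints user-info
    }
}
```

Failing early with a clear message makes a misplaced config.properties easier to diagnose than a NullPointerException raised inside `Properties.load`.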

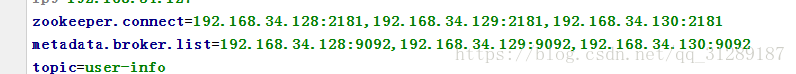

4. Configuration file: config.properties

ip0=192.168.34.130

ip1=192.168.34.128

ip2=192.168.34.129

ip3=192.168.34.121

ip4=192.168.34.122

ip5=192.168.34.123

ip6=192.168.34.124

ip7=192.168.34.125

ip8=192.168.34.126

ip9=192.168.34.127

zookeeper.connect=192.168.34.128:2181,192.168.34.129:2181,192.168.34.130:2181

metadata.broker.list=192.168.34.128:9092,192.168.34.129:9092,192.168.34.130:9092

topic=user-info

private Producer<String, String> createProducer() {

Properties properties = new Properties();

properties.put("zookeeper.connect",config.get("zookeeper.connect"));

properties.put("serializer.class", StringEncoder.class.getName());

properties.put("metadata.broker.list",config.get("metadata.broker.list"));

// properties.put("partitioner.class", PartitionDemo.class.getName());

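return new Producer<String, String>(new ProducerConfig(properties));

}

This method initializes the Producer object:

zookeeper.connect: the ZooKeeper server list, in the form ip:port,ip:port or host:port

metadata.broker.list: the Kafka broker list, in the form ip:port,ip:port

serializer.class: the serializer class; the default is kafka.serializer.DefaultEncoder

partitioner.class: the fully qualified class name of a custom partitioner; the default is kafka.producer.DefaultPartitioner. Its purpose is to balance data across the partitions

PS: each run writes 500 messages to Kafka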

KeyedMessage has three constructors:

The first takes two arguments: String topic, Object message

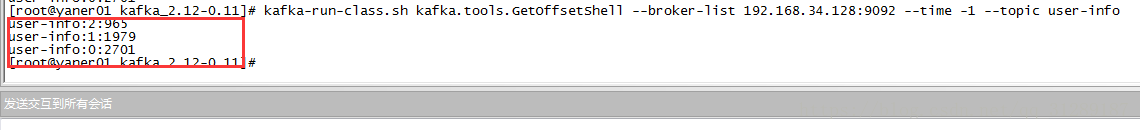

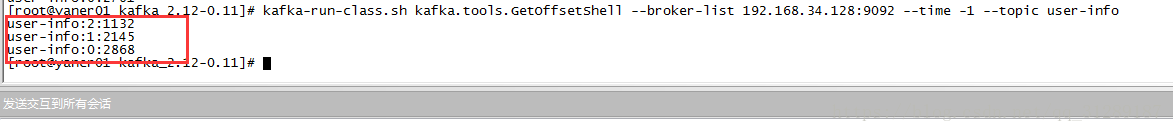

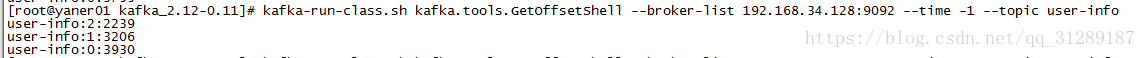

Offsets per partition before the run (topic:partition:offset)

user-info:2:965

user-info:1:979

user-info:0:2701

5.1 Result with the first constructor

user-info:2:965

user-info:1:1479

user-info:0:2701

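All 500 messages went to user-info:1, so the data is not evenly distributed. Over several more runs, each run added 500 messages to a single partition. This is consistent with how the old producer treats messages without a key: it picks a partition at random and keeps using it until the next metadata refresh (topic.metadata.refresh.interval.ms).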

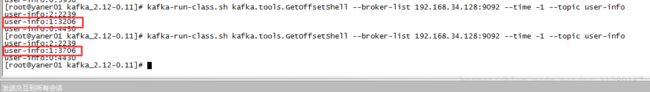

5.2 Second constructor: String topic, Object key, Object message

Offsets before the run:

user-info:2:965

user-info:1:1979

user-info:0:2701

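Offsets after the run:

user-info:2:1132 (167 more than before)

user-info:1:2145 (166 more than before)

user-info:0:2868 (167 more than before)

That is 500 in total, spread almost evenly across the partitions. Further runs add a similar number to each partition every time. 500 messages only give a rough picture; running with 5,000, 50,000 or more messages shows that the partitions stay relatively balanced.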
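The before/after comparison can also be computed rather than read off by hand. The following is a small sketch that parses lines in the `topic:partition:offset` form shown above and reports how many messages each partition gained. `OffsetDeltaSketch` and its methods are hypothetical names, and the sketch assumes the topic name itself contains no colon.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper for illustration; it only processes text, it does not talk to Kafka.
class OffsetDeltaSketch {

    // Parses lines of the form "topic:partition:offset" into partition -> offset.
    static Map<Integer, Long> parse(String... lines) {
        Map<Integer, Long> offsets = new TreeMap<>();
        for (String line : lines) {
            String[] parts = line.trim().split(":");
            offsets.put(Integer.parseInt(parts[1]), Long.parseLong(parts[2]));
        }
        return offsets;
    }

    // Returns partition -> number of messages added between the two snapshots.
    static Map<Integer, Long> delta(Map<Integer, Long> before, Map<Integer, Long> after) {
        Map<Integer, Long> added = new TreeMap<>();
        for (Map.Entry<Integer, Long> entry : after.entrySet()) {
            long previous = before.getOrDefault(entry.getKey(), 0L);
            added.put(entry.getKey(), entry.getValue() - previous);
        }
        return added;
    }

    public static void main(String[] args) {
        Map<Integer, Long> before = parse("user-info:2:965", "user-info:1:1979", "user-info:0:2701");
        Map<Integer, Long> after = parse("user-info:2:1132", "user-info:1:2145", "user-info:0:2868");
        System.out.println(delta(before, after)); // prints {0=167, 1=166, 2=167}
    }
}
```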

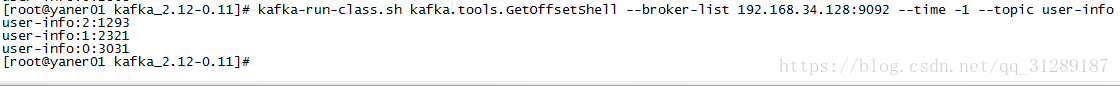

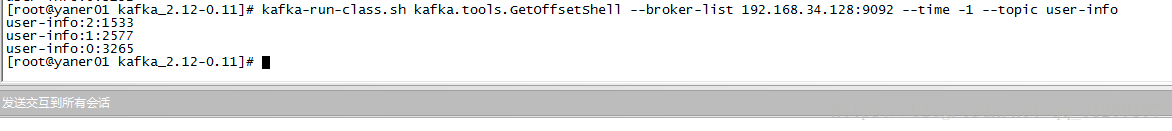

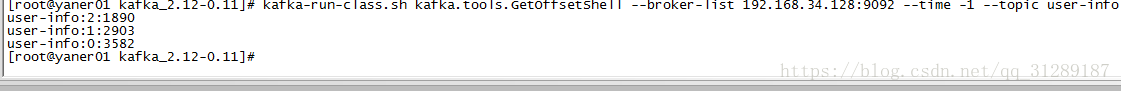

5.3 Third constructor: String topic, Object key, Object partKey, Object message

Offsets before the run:

user-info:2:1533

user-info:1:2577

user-info:0:3265

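Offsets after the run:

user-info:2:1716 (183 more than before)

user-info:1:2747 (170 more than before)

user-info:0:3412 (147 more than before)

The data is evenly distributed; run it a few more times to confirm.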

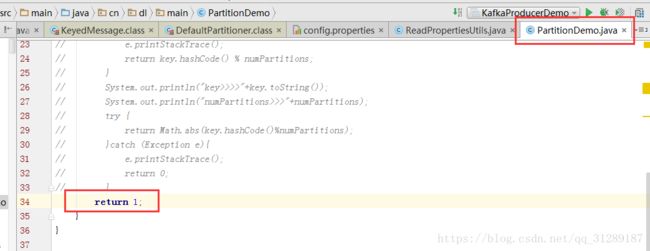

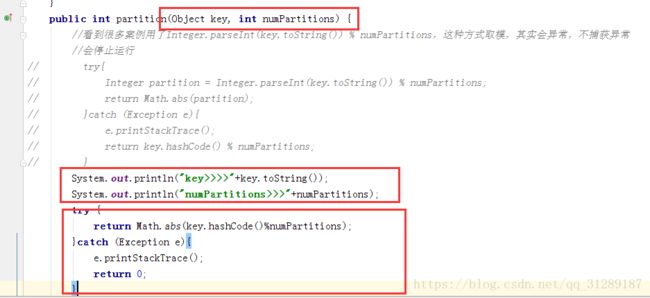

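6. Why is the load balanced across Kafka partitions?

1. Define a custom partitioning rule and pass the partitioner's class name when initializing the Producer

2. Create the partitioner class PartitionDemo, implement Partitioner, and override partition(Object key, int numPartitions)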

package cn.dl.main;

import kafka.producer.Partitioner;

import kafka.utils.VerifiableProperties;

/**

* Created by Tiger on 2018/8/18.

*/

public class PartitionDemo implements Partitioner {

// This constructor is required; without it the Producer cannot be initialized

public PartitionDemo(VerifiableProperties props){

}

public int partition(Object key, int numPartitions) {

// Many examples compute the partition as Integer.parseInt(key.toString()) % numPartitions.

// That throws for non-numeric keys, and sending stops if the exception is not caught

// try{

// Integer partition = Integer.parseInt(key.toString()) % numPartitions;

// return Math.abs(partition);

// }catch (Exception e){

// e.printStackTrace();

// return key.hashCode() % numPartitions;

// }

// System.out.println("key>>>>"+key.toString());

// System.out.println("numPartitions>>>"+numPartitions);

// try {

// return Math.abs(key.hashCode()%numPartitions);

// }catch (Exception e){

// e.printStackTrace();

// return 0;

// }

return 0;

}

}

Offsets per partition before the run:

user-info:2:2239

user-info:1:3206

user-info:0:3930

Offsets per partition after the run:

user-info:2:2239

user-info:1:3206

user-info:0:4430

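All 500 messages went to partition user-info:0.

With the return value changed to 1, all 500 messages go to partition user-info:1; with a return value of 2, they all go to user-info:2.

If the return value satisfies num<0 || num>=numPartitions, kafka.common.FailedToSendMessageException is thrown: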

Exception in thread "Thread-0" kafka.common.FailedToSendMessageException: Failed to send messages after 3 tries.

at kafka.producer.async.DefaultEventHandler.handle(DefaultEventHandler.scala:90)

at kafka.producer.Producer.send(Producer.scala:77)

at kafka.javaapi.producer.Producer.send(Producer.scala:33)

at cn.dl.main.KafkaProducerDemo.run(KafkaProducerDemo.java:64)

Note: print key and numPartitions to observe their values. key.hashCode()%numPartitions can be negative, so take the absolute value; if an exception is caught, return 0.

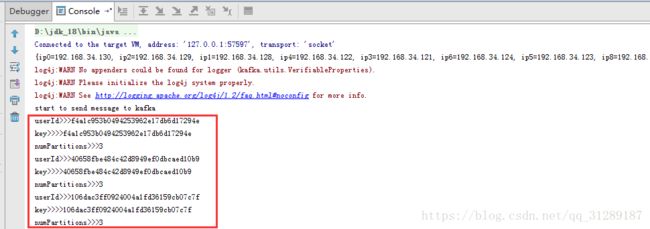

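Debug output of userId, followed by key and numPartitions:

The key is simply the userId, and numPartitions equals the partition count specified when the topic was created.

Conclusion: Kafka applies a default partitioner class when the Producer is initialized, and the userId we pass in as the key is what spreads the data evenly across the partitions.

With the third constructor (String topic, Object key, Object partKey, Object message), the key received by the custom partitioner is the partKey that was passed in.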
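To make the key-to-partition mapping concrete, here is a minimal standalone sketch of the hash-modulo rule from the commented-out code in PartitionDemo. It runs without any Kafka dependency; `PartitionSketch` and `partitionFor` are hypothetical names, and the sketch is not claimed to be the code of Kafka's default partitioner. Sending keyless messages to partition 0 is an assumption made only for this example.

```java
import java.util.UUID;

// Hypothetical standalone model of a hash-based partitioning rule.
class PartitionSketch {

    // Maps a key to a partition index in the range [0, numPartitions).
    static int partitionFor(Object key, int numPartitions) {
        if (key == null) {
            return 0; // assumption for this sketch: keyless messages go to partition 0
        }
        // The remainder can be negative for negative hash codes, but its absolute
        // value is always smaller than numPartitions, so Math.abs is safe here.
        return Math.abs(key.hashCode() % numPartitions);
    }

    public static void main(String[] args) {
        int numPartitions = 3;
        int[] counts = new int[numPartitions];
        // Simulate 500 sends keyed by a random userId, as in KafkaProducerDemo.
        for (int i = 0; i < 500; i++) {
            String userId = UUID.randomUUID().toString().replace("-", "");
            counts[partitionFor(userId, numPartitions)]++;
        }
        for (int p = 0; p < numPartitions; p++) {
            System.out.println("partition " + p + ": " + counts[p]);
        }
    }
}
```

Because the keys are random, the exact counts differ from run to run, but every index stays inside the valid range, which avoids the FailedToSendMessageException described above.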

7. Exceptions encountered in the Kafka producer example

a. kafka.common.FailedToSendMessageException

Exception in thread "Thread-0" kafka.common.FailedToSendMessageException: Failed to send messages after 3 tries.

at kafka.producer.async.DefaultEventHandler.handle(DefaultEventHandler.scala:90)

at kafka.producer.Producer.send(Producer.scala:77)

at kafka.javaapi.producer.Producer.send(Producer.scala:33)

at cn.dl.main.KafkaProducerDemo.run(KafkaProducerDemo.java:57)

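Cause: the custom partitioner class lacks the required constructor, or partition() returns a value with num<0 || num>=numPartitions. In short, whenever public int partition(Object key, int numPartitions) of a custom partitioner fails, this exception is reported.

// This constructor is required; without it the Producer cannot be initialized

public PartitionDemo(VerifiableProperties props){}

Many custom partitioner examples found online follow the same idea: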

try{

Integer partition = Integer.parseInt(key.toString()) % numPartitions;

return Math.abs(partition);

}catch (Exception e){

e.printStackTrace();

return key.hashCode() % numPartitions;

}

java.lang.NumberFormatException: For input string: "d335c02ebdf144f69e1e101ca9b262ec"

at java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)

at java.lang.Integer.parseInt(Integer.java:580)

at java.lang.Integer.parseInt(Integer.java:615)

at cn.dl.main.PartitionDemo.partition(PartitionDemo.java:18)

at kafka.producer.async.DefaultEventHandler.kafka$producer$async$DefaultEventHandler$$getPartition(DefaultEventHandler.scala:227)

at kafka.producer.async.DefaultEventHandler$$anonfun$partitionAndCollate$1.apply(DefaultEventHandler.scala:151)

at kafka.producer.async.DefaultEventHandler$$anonfun$partitionAndCollate$1.apply(DefaultEventHandler.scala:149)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

at kafka.producer.async.DefaultEventHandler.partitionAndCollate(DefaultEventHandler.scala:149)

at kafka.producer.async.DefaultEventHandler.dispatchSerializedData(DefaultEventHandler.scala:95)

at kafka.producer.async.DefaultEventHandler.handle(DefaultEventHandler.scala:72)

at kafka.producer.Producer.send(Producer.scala:77)

at kafka.javaapi.producer.Producer.send(Producer.scala:33)

at cn.dl.main.KafkaProducerDemo.run(KafkaProducerDemo.java:57)

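This code uses Integer.parseInt(key.toString()) % numPartitions, so the key must be convertible to a number; otherwise the exception above is thrown. In production the key is usually not numeric; it is typically a unique string such as a UUID.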
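Note also that the fallback in the catch block above, key.hashCode() % numPartitions, can itself be negative. A hedged sketch of a variant that handles both numeric and non-numeric keys follows. It swaps in `Math.floorMod` (Java 8+) in place of the `Math.abs` approach so that the result is never negative; `NumericKeyPartitionSketch` and `partitionFor` are hypothetical names, and the class does not depend on Kafka.

```java
// Hypothetical standalone sketch; not the original PartitionDemo.
class NumericKeyPartitionSketch {

    // Returns a partition index in the range [0, numPartitions) for any key.
    static int partitionFor(Object key, int numPartitions) {
        String text = String.valueOf(key);
        try {
            // Numeric keys: use the numeric value itself.
            long value = Long.parseLong(text);
            return (int) Math.floorMod(value, (long) numPartitions);
        } catch (NumberFormatException e) {
            // Non-numeric keys such as UUID strings: fall back to the hash code.
            // floorMod never returns a negative result for a positive divisor.
            return Math.floorMod(text.hashCode(), numPartitions);
        }
    }

    public static void main(String[] args) {
        System.out.println(partitionFor("7", 3)); // prints 1
        int p = partitionFor("d335c02ebdf144f69e1e101ca9b262ec", 3);
        System.out.println(p >= 0 && p < 3); // prints true
    }
}
```

Catching only NumberFormatException keeps unrelated failures visible instead of hiding them behind a generic catch.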

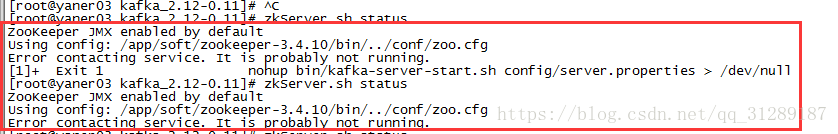

b. java.net.SocketTimeoutException

[root@yaner01 kafka_2.12-0.11]# kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.34.128:9092 --time -1 --topic user-info

[2018-08-18 01:04:56,205] WARN Fetching topic metadata with correlation id 0 for topics [Set(user-info)] from broker [BrokerEndPoint(0,192.168.34.128,9092)] failed (kafka.client.ClientUtils$)

java.net.SocketTimeoutException

at sun.nio.ch.SocketAdaptor$SocketInputStream.read(SocketAdaptor.java:211)

at sun.nio.ch.ChannelInputStream.read(ChannelInputStream.java:103)

at java.nio.channels.Channels$ReadableByteChannelImpl.read(Channels.java:385)

at org.apache.kafka.common.network.NetworkReceive.readFromReadableChannel(NetworkReceive.java:85)

at kafka.network.BlockingChannel.readCompletely(BlockingChannel.scala:131)

at kafka.network.BlockingChannel.receive(BlockingChannel.scala:122)

at kafka.producer.SyncProducer.liftedTree1$1(SyncProducer.scala:82)

at kafka.producer.SyncProducer.doSend(SyncProducer.scala:79)

at kafka.producer.SyncProducer.send(SyncProducer.scala:124)

at kafka.client.ClientUtils$.fetchTopicMetadata(ClientUtils.scala:61)

at kafka.client.ClientUtils$.fetchTopicMetadata(ClientUtils.scala:96)

at kafka.tools.GetOffsetShell$.main(GetOffsetShell.scala:79)

at kafka.tools.GetOffsetShell.main(GetOffsetShell.scala)

Exception in thread "main" kafka.common.KafkaException: fetching topic metadata for topics [Set(user-info)] from broker [ArrayBuffer(BrokerEndPoint(0,192.168.34.128,9092))] failed

at kafka.client.ClientUtils$.fetchTopicMetadata(ClientUtils.scala:75)

at kafka.client.ClientUtils$.fetchTopicMetadata(ClientUtils.scala:96)

at kafka.tools.GetOffsetShell$.main(GetOffsetShell.scala:79)

at kafka.tools.GetOffsetShell.main(GetOffsetShell.scala)

Caused by: java.net.SocketTimeoutException

at sun.nio.ch.SocketAdaptor$SocketInputStream.read(SocketAdaptor.java:211)

at sun.nio.ch.ChannelInputStream.read(ChannelInputStream.java:103)

at java.nio.channels.Channels$ReadableByteChannelImpl.read(Channels.java:385)

at org.apache.kafka.common.network.NetworkReceive.readFromReadableChannel(NetworkReceive.java:85)

at kafka.network.BlockingChannel.readCompletely(BlockingChannel.scala:131)

at kafka.network.BlockingChannel.receive(BlockingChannel.scala:122)

at kafka.producer.SyncProducer.liftedTree1$1(SyncProducer.scala:82)

at kafka.producer.SyncProducer.doSend(SyncProducer.scala:79)

at kafka.producer.SyncProducer.send(SyncProducer.scala:124)

at kafka.client.ClientUtils$.fetchTopicMetadata(ClientUtils.scala:61)

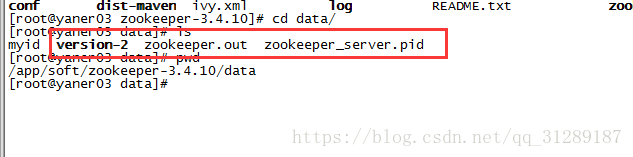

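... 3 more

Cause: the ZooKeeper cluster was down. The fix is to restart it.

Run zkServer.sh status to check the state of the ZooKeeper cluster.

Run zkServer.sh stop to stop the ZooKeeper process first (or use kill), then run zkServer.sh start and check the ZooKeeper status again.

Sometimes several restarts do not help. In that case, delete every file except myid in the directory that holds the myid file, then restart. Use this with caution in production and check the ZooKeeper logs first: after the other files are deleted, ZooKeeper may start normally while some topics become unusable. I do not know the exact cause.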

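8. Open questions:

a. Must a custom partitioner class have a constructor that takes parameters, and must that parameter be VerifiableProperties props?

b. When sending asynchronously, what is a good way to handle messages that fail?

c. Does the third constructor serve any other purpose?

producer.send(new KeyedMessage(topic,userId,partKey,jsonObject.toJSONString()));

9. Next goals

a. Resolve the three open questions;

b. Consume data with multiple threads;

c. How to fetch data at a specified offset;

d. What each parameter in server.properties does;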