DataX实现:从Hive到MySQL数据抽取(含完整json配置)

1.需求

从离线Hive数仓ads层抽取数据到Mysql

2.参考DataX官方Github实例

DataX官网

从hive读数据

{

"job": {

"setting": {

"speed": {

"channel": 3

}

},

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "/user/hive/warehouse/mytable01/*",

"defaultFS": "hdfs://xxx:port",

"column": [

{

"index": 0,

"type": "long"

},

{

"index": 1,

"type": "boolean"

},

{

"type": "string",

"value": "hello"

},

{

"index": 2,

"type": "double"

}

],

"fileType": "orc",

"encoding": "UTF-8",

"fieldDelimiter": ","

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print": true

}

}

}

]

}

}

写入MySQL

{

"job": {

"setting": {

"speed": {

"channel": 1

}

},

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"column" : [

{

"value": "DataX",

"type": "string"

},

{

"value": 19880808,

"type": "long"

},

{

"value": "1988-08-08 08:08:08",

"type": "date"

},

{

"value": true,

"type": "bool"

},

{

"value": "test",

"type": "bytes"

}

],

"sliceRecordCount": 1000

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"writeMode": "insert",

"username": "root",

"password": "root",

"column": [

"id",

"name"

],

"session": [

"set session sql_mode='ANSI'"

],

"preSql": [

"delete from test"

],

"connection": [

{

"jdbcUrl": "jdbc:mysql://127.0.0.1:3306/datax?useUnicode=true&characterEncoding=gbk",

"table": [

"test"

]

}

]

}

}

}

]

}

}

HA设置

"hadoopConfig":{

"dfs.nameservices": "testDfs",

"dfs.ha.namenodes.testDfs": "namenode1,namenode2",

"dfs.namenode.rpc-address.aliDfs.namenode1": "",

"dfs.namenode.rpc-address.aliDfs.namenode2": "",

"dfs.client.failover.proxy.provider.testDfs": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

}

3.最终json配置

{

"job": {

"setting": {

"speed": {

"channel": 3

}

},

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "hdfs://mycluster/user/hive/warehouse/ads.db/ads_paper_avgtimeandscore/dt=${dt}/dn=${dn}",

"defaultFS": "hdfs://mycluster",

"hadoopConfig":{

"dfs.nameservices": "mycluster",

"dfs.ha.namenodes.mycluster": "nn1,nn2,nn3",

"dfs.namenode.rpc-address.mycluster.nn1": "hadoop101:8020",

"dfs.namenode.rpc-address.mycluster.nn2": "hadoop102:8020",

"dfs.namenode.rpc-address.mycluster.nn3": "hadoop103:8020",

"dfs.client.failover.proxy.provider.mycluster": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

},

"column": [

{

"index": 0,

"type": "string"

},

{

"index": 1,

"type": "string"

},

{

"index": 2,

"type": "string"

},

{

"index": 3,

"type": "string"

}

,

{

"value": "${dt}",

"type": "string"

},

{

"value": "${dn}",

"type": "string"

}

],

"fileType": "text",

"encoding": "UTF-8",

"fieldDelimiter": "\t"

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"writeMode": "insert",

"username": "root",

"password": "123456",

"column": [

"paperviewid",

"paperviewname",

"avgscore",

"avgspendtime",

"dt",

"dn"

],

"preSql": [

"delete from paper_avgtimeandscore where dt=${dt}"

],

"connection": [

{

"jdbcUrl": "jdbc:mysql://hadoop101:3306/qz_paper?useUnicode=true&characterEncoding=utf8",

"table": [

"paper_avgtimeandscore"

]

}

]

}

}

}

]

}

}

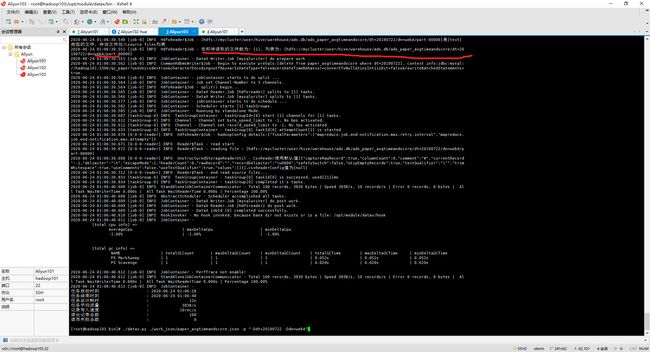

4.执行脚本

./datax.py ./work_json/paper_avgtimeandscore.json -p "-Ddt=20190722 -Ddn=webA"

6.注意

向MySQL中导入数据前,要先建好表,不然会报错!找不到表!

CREATE TABLE paper_avgtimeandscore(

paperviewid INT,

paperviewname VARCHAR(100),

avgscore decimal(4,1),

avgspendtime decimal(10,1),

dt VARCHAR(100),

dn VARCHAR(100)

);