3-Tensorflow-Linear Regression

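```python
import warnings
warnings.filterwarnings('ignore')  # silence library warnings in the notebook output

import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
from sklearn.linear_model import LinearRegression
import tensorflow as tf

# Version 1.50 (the code below uses the TensorFlow 1.x API: placeholders and sessions)
```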

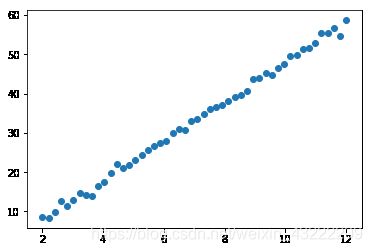

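```python
X = np.linspace(2, 12, 50).reshape(-1, 1)      # 50 evenly spaced inputs, shape (50, 1)
w = np.random.randint(1, 6, size=1)[0]         # true slope: random integer in [1, 5]
b = np.random.randint(-5, 5, size=1)[0]        # true intercept: random integer in [-5, 4]
y = X * w + b + np.random.randn(50, 1) * 0.7   # add Gaussian noise with std 0.7
plt.scatter(X, y)
print(X.shape, y.shape)
```

```
(50, 1) (50, 1)
```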
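Because `w` and `b` are drawn at random, every run of the cell above produces a different line. Coefficients printed in different runs therefore need not match. The sketch below is a reproducible variant that could replace that cell. It assumes NumPy 1.17 or later for `np.random.default_rng`, and the seed 42 is an arbitrary choice:

```python
import numpy as np

# Seeded generator: re-running this cell reproduces the same w, b and noise
rng = np.random.default_rng(42)  # the seed value 42 is arbitrary

X = np.linspace(2, 12, 50).reshape(-1, 1)           # 50 samples, 1 feature
w = int(rng.integers(1, 6))                          # true slope, 1..5
b = int(rng.integers(-5, 5))                         # true intercept, -5..4
y = X * w + b + rng.standard_normal((50, 1)) * 0.7   # Gaussian noise, std 0.7

print(X.shape, y.shape, w, b)
```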

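```python
# Reference fit with scikit-learn's ordinary least squares
linear = LinearRegression()
linear.fit(X, y)
print(linear.coef_, linear.intercept_)
```

```
[[5.01746526]] [-2.14868063]
```

```python
# The true slope and intercept used to generate the data
print(w, b)
```

```
5 -2
```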
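`LinearRegression` fits ordinary least squares, which has a closed-form solution. The sketch below uses NumPy and is not scikit-learn's internal code. It appends a column of ones for the intercept and solves with `np.linalg.lstsq`. The demo data are regenerated with a fixed seed and a fixed slope and intercept (5 and -2), so the numbers will differ slightly from the run above:

```python
import numpy as np

# Hypothetical demo data with a fixed seed: true slope 5, intercept -2
rng = np.random.default_rng(0)
X_demo = np.linspace(2, 12, 50).reshape(-1, 1)
y_demo = X_demo * 5 - 2 + rng.standard_normal((50, 1)) * 0.7

# Design matrix [x, 1]; the column of ones lets the solver estimate the intercept
A = np.hstack([X_demo, np.ones_like(X_demo)])

# Least-squares solution of A @ theta ~ y_demo, with theta = [[w], [b]]
theta, *_ = np.linalg.lstsq(A, y_demo, rcond=None)
w_hat, b_hat = theta[0, 0], theta[1, 0]
print('slope: %0.3f, intercept: %0.3f' % (w_hat, b_hat))
```

The same `theta` could be obtained from the normal equations, `np.linalg.solve(A.T @ A, A.T @ y_demo)`. However, `lstsq` is numerically safer when the columns of `A` are nearly collinear.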

Linear regression with TensorFlow

1. Define placeholders and variables

```python
# Linear regression is grounded in the least-squares method
X_train = tf.placeholder(dtype=tf.float32, shape=[50, 1], name='data')          # features, fed at run time
y_train = tf.placeholder(dtype=tf.float32, shape=[50, 1], name='target')        # targets, fed at run time
w_ = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]), name='weight')   # trainable slope
b_ = tf.Variable(initial_value=tf.random_normal(shape=[1]), name='bias')        # trainable intercept
```

2. Build the model (a linear equation, computed with matrix multiplication)

```python
# Model: f(X) = Xw + b
# The output of this equation is the prediction
y_pred = tf.matmul(X_train, w_) + b_
# shape = (50, 1)
y_pred
```
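The shapes combine as `(50, 1) @ (1, 1) -> (50, 1)`, and the bias of shape `(1,)` is broadcast across all 50 rows. The sketch below does the same computation in NumPy to show what `tf.matmul(X_train, w_) + b_` evaluates to. The parameter values are arbitrary, and the names are distinct so the notebook's TensorFlow objects are not overwritten:

```python
import numpy as np

X_np = np.linspace(2, 12, 50).reshape(-1, 1)  # (50, 1), like X_train
w_np = np.array([[3.0]])                      # (1, 1), like w_ (arbitrary value)
b_np = np.array([-1.0])                       # (1,),   like b_ (arbitrary value)

# (50, 1) @ (1, 1) -> (50, 1); b_np is then broadcast onto every row
pred_np = X_np @ w_np + b_np
print(pred_np.shape)  # (50, 1)
```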

3. Least squares (mean squared error)

$$cost = \frac{1}{m}\sum_{i=1}^{m}\left(y_i - y_{pred,i}\right)^2$$

where $m$ is the number of samples (here $m = 50$).

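```python
# Squaring alone, (y_pred - y_train)**2, gives one error per sample (a (50, 1) tensor),
# which cannot be compared as a single number
# Taking the mean reduces it to one scalar: the mean squared error
# The mean also takes every sample into account
cost = tf.reduce_mean(tf.pow(y_pred - y_train, 2))
cost
```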
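This tiny NumPy sketch shows why the mean is taken. The element-wise squared error has one entry per sample, while the mean collapses it into a single scalar that an optimizer can minimize. The three target and prediction values are made up for illustration:

```python
import numpy as np

target = np.array([[1.0], [2.0], [3.0]])
pred = np.array([[1.5], [2.0], [2.0]])

squared = (pred - target) ** 2   # one error per sample, shape (3, 1)
mse = np.mean(squared)           # (0.25 + 0.0 + 1.0) / 3, a single scalar

print(squared.shape, mse)
```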

4. Gradient descent (built into TensorFlow)

```python
# Optimize: minimize the cost (loss) function; the smaller, the better
opt = tf.train.GradientDescentOptimizer(0.01).minimize(cost)  # learning rate 0.01
opt
```
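`minimize(cost)` computes the gradients of the cost automatically and applies the plain gradient-descent update with learning rate $\eta = 0.01$. Written out by hand for this one-feature model, with $y_{pred,i} = w x_i + b$ and $m$ samples, the gradients and update are:

$$
\frac{\partial\, cost}{\partial w} = \frac{2}{m}\sum_{i=1}^{m}\left(y_{pred,i} - y_i\right)x_i,
\qquad
\frac{\partial\, cost}{\partial b} = \frac{2}{m}\sum_{i=1}^{m}\left(y_{pred,i} - y_i\right)
$$

$$
w \leftarrow w - \eta\,\frac{\partial\, cost}{\partial w},
\qquad
b \leftarrow b - \eta\,\frac{\partial\, cost}{\partial b}
$$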

5. Train in a session: loop over `sess.run()`, feeding values into the placeholders

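```python
with tf.Session() as sess:
    # Initialize the variables
    sess.run(tf.global_variables_initializer())
    for i in range(1000):
        # One gradient-descent step; feed_dict supplies the placeholder values
        _, cost_ = sess.run([opt, cost], feed_dict={y_train: y, X_train: X})
        if i % 50 == 0:
            print('Iteration: %d. Loss: %0.4f' % (i + 1, cost_))
    # The for loop has finished, so training is done
    # Fetch the slope and intercept (W has shape (1, 1), B has shape (1,))
    W, B = sess.run([w_, b_])
    print('After 1000 training steps, TensorFlow gives slope: %0.3f, intercept: %0.3f' % (W[0, 0], B[0]))
```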

```
Iteration: 1. Loss: 581.9765
Iteration: 51. Loss: 1.3099
Iteration: 101. Loss: 1.0826
Iteration: 151. Loss: 0.9136
Iteration: 201. Loss: 0.7881
Iteration: 251. Loss: 0.6947
Iteration: 301. Loss: 0.6254
Iteration: 351. Loss: 0.5739
Iteration: 401. Loss: 0.5356
Iteration: 451. Loss: 0.5071
Iteration: 501. Loss: 0.4859
Iteration: 551. Loss: 0.4702
Iteration: 601. Loss: 0.4585
Iteration: 651. Loss: 0.4499
Iteration: 701. Loss: 0.4434
Iteration: 751. Loss: 0.4386
Iteration: 801. Loss: 0.4350
Iteration: 851. Loss: 0.4324
Iteration: 901. Loss: 0.4304
Iteration: 951. Loss: 0.4290
After 1000 training steps, TensorFlow gives slope: 3.033, intercept: -1.194
```

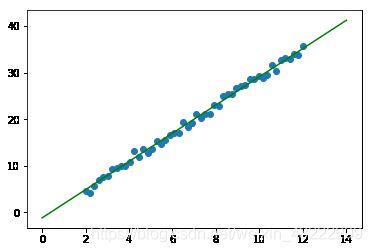
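For comparison, the training loop can be reproduced in NumPy with the gradients written out by hand. This is a sketch of the same plain gradient-descent update, not TensorFlow's implementation. It makes three assumptions:

- fixed-seed demo data (true w = 5, b = -2),
- a zero initialization in place of `tf.random_normal`,
- the same learning rate (0.01) and number of steps (1000).

```python
import numpy as np

# Hypothetical demo data with a fixed seed: true slope 5, intercept -2
rng = np.random.default_rng(1)
X_np = np.linspace(2, 12, 50).reshape(-1, 1)
y_np = X_np * 5 - 2 + rng.standard_normal((50, 1)) * 0.7

w_np, b_np = 0.0, 0.0   # zero init (the TensorFlow version draws from a normal)
lr, m = 0.01, len(X_np)
history = []

for i in range(1000):
    err = (X_np * w_np + b_np) - y_np       # y_pred - y, shape (50, 1)
    history.append(np.mean(err ** 2))       # cost before this step
    grad_w = 2.0 / m * np.sum(err * X_np)   # d cost / d w
    grad_b = 2.0 / m * np.sum(err)          # d cost / d b
    w_np -= lr * grad_w
    b_np -= lr * grad_b
    if i % 50 == 0:
        print('Iteration: %d. Loss: %0.4f' % (i + 1, history[-1]))

print('Slope: %0.3f. Intercept: %0.3f' % (w_np, b_np))
```

With these settings the slope settles quickly, but the intercept converges much more slowly. The inputs lie between 2 and 12, far from 0, so the cost surface is stretched along the intercept direction. After 1000 steps `b` can therefore still be noticeably off. Centering `X` or running more steps usually helps.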

6. Visualization

```python
# Scatter the data and overlay the fitted line from TensorFlow
plt.scatter(X, y)
x = np.linspace(0, 14, 100)
plt.plot(x, W[0, 0] * x + B[0], color='green')
plt.show()
```
