Web Scraping Basics: Notes

Contents

- Basic usage of the requests library

- Basic usage of the beautifulsoup library

- Basic usage of the re library

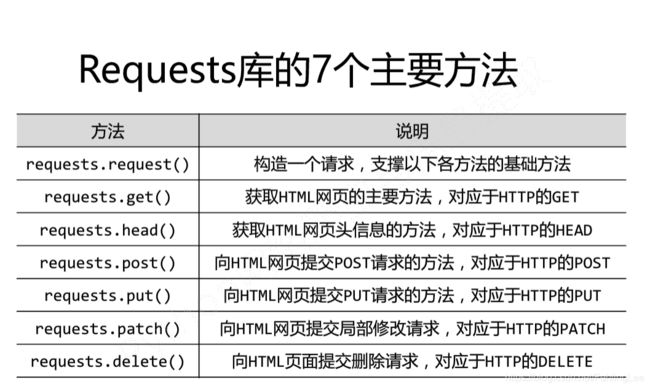

Basic usage of the requests library

#_author: 86138

#date: 2020/3/29

# import requests  # fetch an Amazon product page
# url = "https://www.amazon.cn/dp/B07CRHCK77?smid=A3CQWPW49OI3BQ&ref_=Oct_CBBBCard_dsk_asin2&pf_rd_r=N8WT13Q2P3085ZPV093X&pf_rd_p=5a0738df-7719-4914-81ee-278221dce082&pf_rd_m=A1AJ19PSB66TGU&pf_rd_s=desktop-3"
# try:
#     kv = {'user-agent': 'Mozilla/5.0'}  # send a browser-like User-Agent header
#     r = requests.get(url, headers=kv)
#     r.raise_for_status()  # raise on 4xx/5xx responses
#     r.encoding = r.apparent_encoding
#     print(r.text[1000:2000])
# except requests.RequestException:
#     print("Request failed")

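# import requests  # Baidu search
# keyword = "python"
# try:
#     kv = {'wd': keyword}  # for 360 search, use 'q' instead of 'wd'
#     r = requests.get("http://www.baidu.com/s", params=kv)
#     print(r.request.url)  # the URL actually requested, including the query string
#     r.raise_for_status()
#     print(len(r.text))
# except requests.RequestException:
#     print("Request failed")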
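The `params` dictionary above ends up as the query string that `r.request.url` prints. As a minimal offline sketch of that encoding, the standard library's `urllib.parse.urlencode` can build a comparable URL. Note that this uses urllib rather than requests itself, and `build_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def build_search_url(base, params):
    """Append params to base as a percent-encoded query string."""
    return base + "?" + urlencode(params)


# Baidu expects the keyword under 'wd'; non-ASCII keywords are
# percent-encoded as UTF-8 bytes.
print(build_search_url("http://www.baidu.com/s", {"wd": "python"}))
print(build_search_url("http://www.baidu.com/s", {"wd": "书包"}))
```

Printing the URL before sending anything is a quick way to check that the keyword sits under the parameter name the search engine expects.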

# import requests  # download an image
# import os
# url = "https://tvax1.sinaimg.cn/crop.0.0.1002.1002.180/b59af28fly8fodpz8zxejj20ru0rudh6.jpg?KID=imgbed,tva&Expires=1586155537&ssig=wNM13DZqCW"
# root = "D://pics//"
# path = root + "abc.jpg"  # directory plus file name; url.split('/')[-1] is an alternative name
# try:
#     if not os.path.exists(root):  # create the directory if it does not exist
#         os.mkdir(root)
#     if not os.path.exists(path):  # download only if the file does not exist yet
#         r = requests.get(url)
#         with open(path, 'wb') as f:  # write the binary response body; the with block closes the file
#             f.write(r.content)
#         print("File saved")
#     else:
#         print("File already exists")
# except (requests.RequestException, OSError):
#     print("Download failed")
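The inline comment above suggests `url.split('/')[-1]` as the file name, but for a URL with a query string that would keep everything after the `?` as well. The sketch below shows one way to derive the name from the URL path only and to save bytes without overwriting an existing file. The helper names, the placeholder URL, and the temporary directory are assumptions for illustration, and no network request is made.

```python
import os
import posixpath
import tempfile
from urllib.parse import urlsplit


def filename_from_url(url):
    """Return the last path segment of url, ignoring any ?query part."""
    return posixpath.basename(urlsplit(url).path)


def save_bytes(root, name, data):
    """Write data to root/name unless the file exists; return True if written."""
    os.makedirs(root, exist_ok=True)  # also creates missing parent directories
    path = os.path.join(root, name)
    if os.path.exists(path):
        return False
    with open(path, "wb") as f:  # the with block closes the file on exit
        f.write(data)
    return True


url = "https://example.com/images/photo.jpg?size=180"  # placeholder URL
name = filename_from_url(url)
with tempfile.TemporaryDirectory() as root:
    print(name, save_bytes(root, name, b"fake image bytes"))  # first call writes
    print(name, save_bytes(root, name, b"fake image bytes"))  # second call skips
```

In a real download, `data` would be `r.content` from the response.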

# # Download a song or video
# import requests
# import os
# url = "https://sharefs.yun.kugou.com/202004061144/6f43c939cfe9a79cd90dfb58cb6f00ad/G200/M03/04/18/CA4DAF5FCFeAR0zvADDFozYoPDI733.mp3"
# root = "D://pics//"
# path = root + "abc.mp3"  # directory plus file name; url.split('/')[-1] is an alternative name
# try:
#     if not os.path.exists(root):  # create the directory if it does not exist
#         os.mkdir(root)
#     if not os.path.exists(path):  # download only if the file does not exist yet
#         r = requests.get(url)
#         with open(path, 'wb') as f:  # write the binary response body; the with block closes the file
#             f.write(r.content)
#         print("File saved")
#     else:
#         print("File already exists")
# except (requests.RequestException, OSError):
#     print("Download failed")

#

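# import requests  # look up an IP address
# url = "http://m.ip138.com/ip.asp?ip="
# try:
#     r = requests.get(url + "IP address")  # replace "IP address" with the address to look up
#     r.raise_for_status()
#     r.encoding = r.apparent_encoding
#     print(r.text[-500:])
# except requests.RequestException:
#     print("Request failed")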

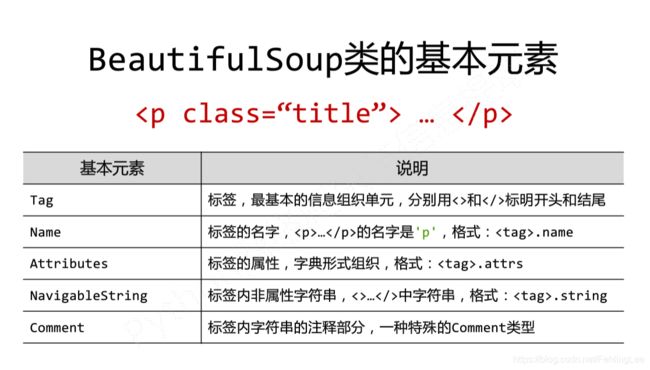

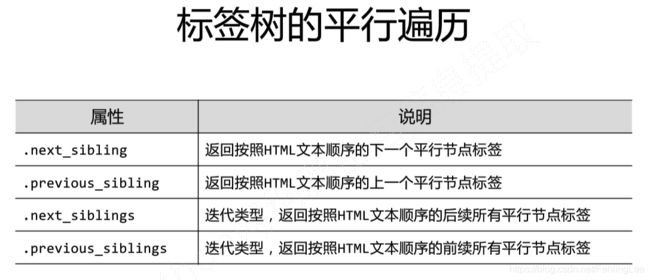

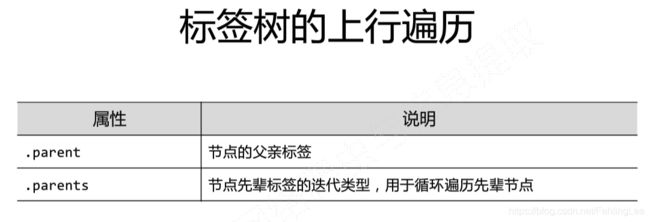

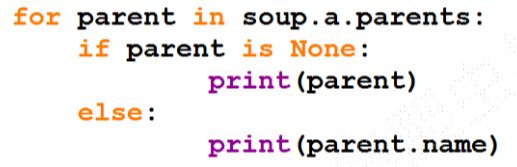

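Basic usage of the beautifulsoup library

Sibling traversal only works between nodes that share the same parent.

Traversal implementation (the other directions work the same way)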
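As a small sketch of the same-parent rule, the snippet below walks the siblings of the first `<a>` tag in an inline HTML string. The markup is a made-up sample, not the demo page itself. Only nodes inside the same `<p>` show up, and plain text between tags counts as a sibling too.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Made-up sample markup: two links inside one <p>, plus a separate <div>.
html = (
    "<p><a id='link1'>Basic Python</a> and "
    "<a id='link2'>Advanced Python</a>.</p><div>other</div>"
)
soup = BeautifulSoup(html, "html.parser")

# next_siblings yields every following node with the same parent (<p>).
siblings = list(soup.a.next_siblings)
for node in siblings:
    print(type(node).__name__, repr(node))

# Keep only real tags; the text nodes ' and ' and '.' are filtered out.
tag_names = [node.name for node in siblings if isinstance(node, Tag)]
print(tag_names)
```

The `<div>` never appears in the loop because its parent is the document, not the `<p>`; it is a sibling of `soup.p` instead.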

Hands-on practice

# >>> import requests
# >>> r = requests.get("http://python123.io/ws/demo.html")
# >>> r.text
# 'This is a python demo page \r\n\r\nThe demo python introduces several python courses.\r\nPython is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:\r\nBasic Python and Advanced Python.\r\n'
# >>> demo = r.text
# >>> from bs4 import BeautifulSoup  # using the bs4 library
# >>> soup = BeautifulSoup(demo, "html.parser")
# >>> print(soup.prettify())

# This is a python demo page
# The demo python introduces several python courses.
# Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:
# Basic Python
# and
# Advanced Python
# .

# Find every link in an HTML page
# import requests
# from bs4 import BeautifulSoup
# r = requests.get("http://python123.io/ws/demo.html")
# demo = r.text
# soup = BeautifulSoup(demo, "html.parser")
# # soup('a') is shorthand for soup.find_all('a')
# # Signature: find_all(name, attrs, recursive, string, **kwargs); recursive defaults to True
# for link in soup.find_all('a'):
#     print(link.get('href'))

### Scrape university rankings
# import requests
# from bs4 import BeautifulSoup
# import bs4
#
# def getHTMLText(url):
#     try:
#         r = requests.get(url, timeout=30)
#         r.raise_for_status()
#         r.encoding = r.apparent_encoding
#         return r.text
#     except requests.RequestException:
#         return ""
#
# def fillUnivList(ulist, html):
#     soup = BeautifulSoup(html, "html.parser")
#     for tr in soup.find('tbody').children:
#         if isinstance(tr, bs4.element.Tag):  # skip the text nodes between rows
#             tds = tr('td')
#             ulist.append([tds[0].string, tds[1].string, tds[2].string, tds[3].string])
#
# def printUnivList(ulist, num):
#     # Header columns: rank, university name, total score
#     print("{:^10}\t{:^6}\t{:^10}".format("排名", "学校名称", "总分"))
#     for i in range(num):
#         u = ulist[i]
#         print("{:^10}\t{:^6}\t{:^10}".format(u[0], u[1], u[2]))
## Improved version
# def printUnivList(ulist, num):
#     # Pad the name column with the fullwidth space chr(12288) so that
#     # mixed Chinese and English text lines up
#     tplt = "{0:^10}\t{1:{3}^10}\t{2:^10}"
#     print(tplt.format("排名", "学校名称", "总分", chr(12288)))
#     for i in range(num):
#         u = ulist[i]
#         print(tplt.format(u[0], u[1], u[2], chr(12288)))
#
# def main():
#     unifo = []
#     url = 'https://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html'
#     html = getHTMLText(url)
#     fillUnivList(unifo, html)
#     printUnivList(unifo, 20)  # print the first 20 universities
# main()
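The second `printUnivList` swaps the default ASCII space for `chr(12288)` as the fill character of the name column. The sketch below isolates that trick so it can be tried without fetching the page. The rows are made-up sample data and `format_row` is a hypothetical helper name.

```python
FULLWIDTH_SPACE = chr(12288)  # U+3000, as wide as one CJK character

# {3} is replaced by the fill character passed as the fourth argument.
TEMPLATE = "{0:^10}\t{1:{3}^10}\t{2:^10}"


def format_row(rank, name, score):
    """Center three columns, padding the name with fullwidth spaces."""
    return TEMPLATE.format(rank, name, score, FULLWIDTH_SPACE)


# Made-up sample rows, only to show the alignment.
for row in [("1", "示例大学", "90.0"), ("2", "另一所示例学院", "85.5")]:
    print(format_row(*row))
```

Because the padding is as wide as the Chinese characters it surrounds, the name column keeps a constant display width in a monospaced CJK font, which ASCII spaces cannot guarantee.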

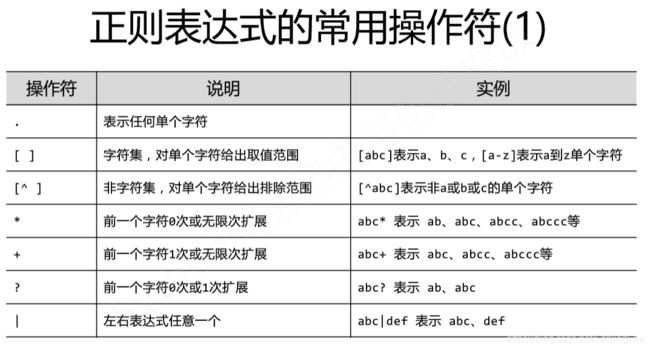

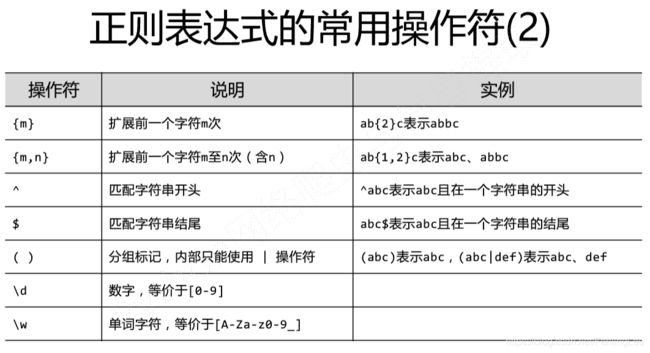

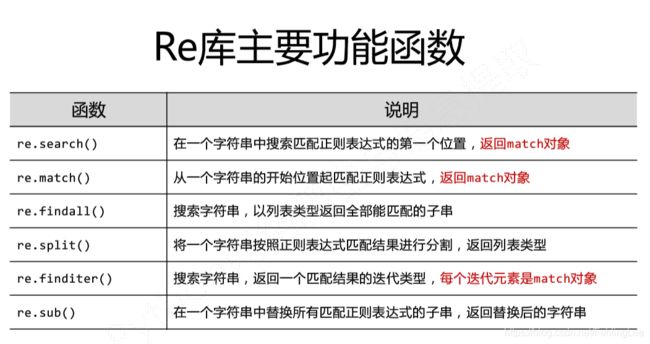

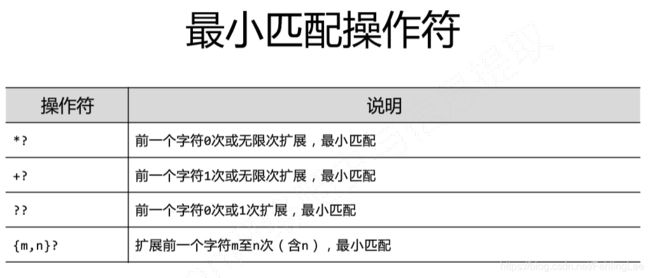

Basic usage of the re library

# Regular expressions

## re.search()
# >>> import re
# >>> match = re.search(r'[1-9]\d{5}', 'BIT 100081')
# >>> if match:
# ...     print(match.group(0))
# ...
# 100081

## re.match()
# >>> import re
# >>> match = re.match(r'[1-9]\d{5}', 'BIT 100081')
# >>> if match:
# ...     match.group(0)
# ...
# >>> match.group(0)
# Traceback (most recent call last):
#   File "<stdin>", line 1, in <module>
# AttributeError: 'NoneType' object has no attribute 'group'
# >>> import re
# >>> match = re.match(r'[1-9]\d{5}', '100081 BIT')
# >>> if match:
# ...     match.group(0)
# ...
# '100081'
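The traceback above appears because `re.match` only tries the pattern at the start of the string and returns `None` otherwise, while `re.search` scans the whole string. A minimal sketch that wraps both calls with the `None` check (`first_zip` and `leading_zip` are hypothetical helper names):

```python
import re

ZIP_PATTERN = r"[1-9]\d{5}"


def first_zip(text):
    """Return the first six-digit code anywhere in text, or None."""
    m = re.search(ZIP_PATTERN, text)
    return m.group(0) if m else None


def leading_zip(text):
    """Return a six-digit code only if text starts with one, else None."""
    m = re.match(ZIP_PATTERN, text)
    return m.group(0) if m else None


print(first_zip("BIT 100081"))    # found in the middle of the string
print(leading_zip("BIT 100081"))  # None: the string starts with 'BIT'
print(leading_zip("100081 BIT"))  # found at the start
```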

# re.findall()

# >>> import re

# >>> ls = re.findall(r'[1-9]\d{5}', 'BIT100081 TSU100084')

# >>> ls

# ['100081', '100084']

# #re.split()

# >>> import re

# >>> re.split(r'[1-9]\d{5}', 'BIT100081 TSU100081')

# ['BIT', ' TSU', '']

# >>> re.split(r'[1-9]\d{5}', 'BIT100081 TSU100081', maxsplit = 1)

# ['BIT', ' TSU100081']

## re.finditer()
# >>> import re
# >>> for m in re.finditer(r'[1-9]\d{5}', 'BIT100081 TSU100084'):  # returns an iterator of match objects
# ...     if m:
# ...         print(m.group(0))
# ...
# 100081
# 100084

## re.sub()

# >>> import re

# >>> re.sub(r'[1-9]\d{5}', ':zipcode', 'BIT100081 TSU100084')

# 'BIT:zipcode TSU:zipcode'
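Each module-level call above has an equivalent method on a compiled pattern object, which avoids repeating the pattern string when it is used several times. A short sketch with the same inputs as the sessions above (the variable names are assumptions):

```python
import re

zip_re = re.compile(r"[1-9]\d{5}")  # compile once, reuse below
text = "BIT100081 TSU100084"

found = zip_re.findall(text)                     # all matches as strings
pieces = zip_re.split(text, maxsplit=1)          # split at the first match only
masked = zip_re.sub(":zipcode", text)            # replace every match
masked_once = zip_re.sub(":zipcode", text, count=1)  # replace the first match only

print(found)
print(pieces)
print(masked)
print(masked_once)
```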

#

# >>> import re

# >>> m = re.search(r'[1-9]\d{5}', 'BIT100081 TSU100084')

# >>> m.string

# 'BIT100081 TSU100084'

# >>> m.re

# re.compile('[1-9]\\d{5}')

# >>> m.pos

# 0

# >>> m.endpos

# 19

# >>> m.group(0)

# '100081'

# >>> m.start()

# 3

# >>> m.end()

# 9

# >>> m.span()

# (3, 9)
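The `start()`, `end()` and `span()` values above are indexes into `m.string`, so slicing the searched text with them returns the matched substring. A small sketch that collects the span of every match (`zip_spans` is a hypothetical helper name):

```python
import re


def zip_spans(text):
    """Return (start, end, code) for every six-digit code in text."""
    spans = []
    for m in re.finditer(r"[1-9]\d{5}", text):
        start, end = m.span()
        # Slicing the searched string with the span gives back the match.
        spans.append((start, end, text[start:end]))
    return spans


print(zip_spans("BIT100081 TSU100084"))
```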

#

# >>> match = re.search(r'PY.*N', 'PYANBNCNDN')  # greedy: longest possible match
# >>> match.group(0)
# 'PYANBNCNDN'
# >>> match = re.search(r'PY.*?N', 'PYANBNCNDN')  # lazy: shortest possible match
# >>> match.group(0)
# 'PYAN'
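Appending `?` makes the other quantifiers lazy in the same way as `*?`. The sketch below runs a few variants against the same string; the dictionary labels are only for illustration.

```python
import re

text = "PYANBNCNDN"
patterns = {
    "greedy *": r"PY.*N",        # as many characters as possible
    "lazy *?": r"PY.*?N",        # as few as possible, zero allowed
    "lazy +?": r"PY.+?N",        # as few as possible, at least one
    "lazy {3,}?": r"PY.{3,}?N",  # as few as possible, at least three
}

results = {label: re.search(p, text).group(0) for label, p in patterns.items()}
for label, matched in results.items():
    print(label, matched)
```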

## Scrape product prices from Taobao
# import requests
# import re
#
# def getHTMLText(url):
#     try:
#         r = requests.get(url, timeout=30)
#         r.raise_for_status()
#         r.encoding = r.apparent_encoding
#         return r.text
#     except requests.RequestException:
#         return ""  # return an empty page so parsePage still receives a string
#
# def parsePage(ilt, html):
#     try:
#         plt = re.findall(r'\"view_price\"\:\"[\d\.]*\"', html)
#         tlt = re.findall(r'\"raw_title\"\:\".*?\"', html)
#         for i in range(len(plt)):
#             # eval() is used here only to strip the surrounding quotes;
#             # it is unsafe on untrusted input
#             price = eval(plt[i].split(':')[1])
#             title = eval(tlt[i].split(':')[1])
#             ilt.append([price, title])
#     except Exception:
#         print("Failed to parse page")
#
# def printGoodsList(ilt):
#     tplt = "{:4}\t{:8}\t{:16}"
#     print(tplt.format("No.", "Price", "Product name"))
#     count = 0
#     for g in ilt:
#         count += 1
#         print(tplt.format(count, g[0], g[1]))
#
# def main():
#     goods = '书包'  # search keyword: "backpack"
#     depth = 3  # number of result pages to fetch
#     start_url = 'https://s.taobao.com/search?q=' + goods
#     infoList = []
#     for i in range(depth):
#         try:
#             url = start_url + '&s=' + str(44 * i)  # 44 items per page
#             html = getHTMLText(url)
#             parsePage(infoList, html)
#         except Exception:
#             continue
#     printGoodsList(infoList)
#
# main()
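`parsePage` splits each match on `':'` and passes the second piece to `eval()`, which fails as soon as a title itself contains a colon. A possible alternative, sketched below, is to put capture groups in the patterns so that `findall` returns the values directly. The sample string is made up for illustration and is not real page content, and `parse_items` is a hypothetical helper name.

```python
import re

# Capture groups return only the value between the quotes.
PRICE_RE = re.compile(r'"view_price":"([\d.]*)"')
TITLE_RE = re.compile(r'"raw_title":"(.*?)"')


def parse_items(html):
    """Return (price, title) pairs found in html, paired by position."""
    prices = PRICE_RE.findall(html)
    titles = TITLE_RE.findall(html)
    return list(zip(prices, titles))


# Made-up sample resembling the fields the patterns look for.
sample = (
    '{"raw_title":"sample backpack: blue","view_price":"59.00"},'
    '{"raw_title":"sample bag","view_price":"128.50"}'
)
print(parse_items(sample))
```

`zip` stops at the shorter list, so a page with unequal numbers of prices and titles does not raise an `IndexError`.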

# Scrape stock information
# import requests
# from bs4 import BeautifulSoup
# import traceback
# import re
#
#
# def getHTMLText(url):
#     try:
#         r = requests.get(url)
#         r.raise_for_status()
#         r.encoding = r.apparent_encoding
#         return r.text
#     except requests.RequestException:
#         return ""
#
#
# def getStockList(lst, stockURL):
#     html = getHTMLText(stockURL)
#     soup = BeautifulSoup(html, 'html.parser')
#     a = soup.find_all('a')
#     for i in a:
#         try:
#             href = i.attrs['href']
#             lst.append(re.findall(r"[s][hz]\d{6}", href)[0])  # e.g. sh or sz followed by six digits
#         except (KeyError, IndexError):  # no href, or no stock code in it
#             continue
#
#
# def getStockInfo(lst, stockURL, fpath):
#     for stock in lst:
#         url = stockURL + stock + ".html"
#         html = getHTMLText(url)
#         try:
#             if html == "":
#                 continue
#             infoDict = {}
#             soup = BeautifulSoup(html, 'html.parser')
#             stockInfo = soup.find('div', attrs={'class': 'stock-bets'})
#
#             name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
#             infoDict.update({'股票名称': name.text.split()[0]})  # key means "stock name"
#
#             keyList = stockInfo.find_all('dt')
#             valueList = stockInfo.find_all('dd')
#             for i in range(len(keyList)):
#                 key = keyList[i].text
#                 val = valueList[i].text
#                 infoDict[key] = val
#
#             with open(fpath, 'a', encoding='utf-8') as f:
#                 f.write(str(infoDict) + '\n')
#         except Exception:
#             traceback.print_exc()
#             continue
#
#
# def main():
#     stock_list_url = 'https://quote.eastmoney.com/stocklist.html'
#     stock_info_url = 'https://gupiao.baidu.com/stock/'
#     output_file = 'D:/BaiduStockInfo.txt'
#     slist = []
#     getStockList(slist, stock_list_url)
#     getStockInfo(slist, stock_info_url, output_file)
#
#
# main()
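`getStockList` relies on the exception raised by `[0]` to skip links that contain no stock code. The sketch below does the same filtering with an explicit check, on a made-up list of hrefs. The example.com links and the `extract_codes` name are assumptions for illustration.

```python
import re

STOCK_CODE_RE = re.compile(r"s[hz]\d{6}")  # 'sh' or 'sz' followed by six digits


def extract_codes(hrefs):
    """Return the first stock code found in each href, skipping the rest."""
    codes = []
    for href in hrefs:
        m = STOCK_CODE_RE.search(href or "")  # treat a missing href as empty
        if m:
            codes.append(m.group(0))
    return codes


# Made-up hrefs: two with codes, one without, one missing entirely.
sample_hrefs = [
    "http://example.com/sh600000.html",
    "http://example.com/about.html",
    None,
    "http://example.com/sz000001.html",
]
print(extract_codes(sample_hrefs))
```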

## Faster version with a progress indicator
# import requests
# from bs4 import BeautifulSoup
# import traceback
# import re
#
#
# def getHTMLText(url, code="utf-8"):  # changed: the caller passes the encoding
#     try:
#         r = requests.get(url)
#         r.raise_for_status()
#         r.encoding = code  # changed: skip the slow apparent_encoding detection
#         return r.text
#     except requests.RequestException:
#         return ""
#
#
# def getStockList(lst, stockURL):
#     html = getHTMLText(stockURL, "GB2312")  # changed: the list page is GB2312-encoded
#     soup = BeautifulSoup(html, 'html.parser')
#     a = soup.find_all('a')
#     for i in a:
#         try:
#             href = i.attrs['href']
#             lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
#         except (KeyError, IndexError):  # no href, or no stock code in it
#             continue
#
#
# def getStockInfo(lst, stockURL, fpath):
#     count = 0  # changed: number of stocks processed so far
#     for stock in lst:
#         url = stockURL + stock + ".html"
#         html = getHTMLText(url)
#         try:
#             if html == "":
#                 continue
#             infoDict = {}
#             soup = BeautifulSoup(html, 'html.parser')
#             stockInfo = soup.find('div', attrs={'class': 'stock-bets'})
#
#             name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
#             infoDict.update({'股票名称': name.text.split()[0]})  # key means "stock name"
#
#             keyList = stockInfo.find_all('dt')
#             valueList = stockInfo.find_all('dd')
#             for i in range(len(keyList)):
#                 key = keyList[i].text
#                 val = valueList[i].text
#                 infoDict[key] = val
#
#             with open(fpath, 'a', encoding='utf-8') as f:
#                 f.write(str(infoDict) + '\n')
#                 count = count + 1  # changed
#                 # changed: '\r' returns to the start of the line so the percentage is redrawn in place
#                 print("\rProgress: {:.2f}%".format(count * 100 / len(lst)), end="")
#         except Exception:
#             count = count + 1  # changed: failures count as processed too
#             print("\rProgress: {:.2f}%".format(count * 100 / len(lst)), end="")
#             continue
#
#
# def main():
#     stock_list_url = 'https://quote.eastmoney.com/stocklist.html'
#     stock_info_url = 'https://gupiao.baidu.com/stock/'
#     output_file = 'D:/BaiduStockInfo.txt'
#     slist = []
#     getStockList(slist, stock_list_url)
#     getStockInfo(slist, stock_info_url, output_file)
#
# main()
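The progress line works because `\r` moves the cursor back to the start of the line without a newline, so each print overwrites the previous one. The sketch below isolates that pattern and updates the counter in a single place, so that skipped or failed items still advance it. `progress_text` and `run_with_progress` are hypothetical helper names.

```python
import sys


def progress_text(done, total):
    """Format a one-line progress message that starts with a carriage return."""
    return "\rProgress: {:.2f}%".format(done * 100 / total)


def run_with_progress(items, work):
    """Call work(item) for each item, redrawing one progress line."""
    failures = 0
    for done, item in enumerate(items, start=1):
        try:
            work(item)
        except Exception:
            failures += 1  # count the failure but keep going
        sys.stdout.write(progress_text(done, len(items)))
        sys.stdout.flush()  # show the update even without a newline
    sys.stdout.write("\n")
    return failures


run_with_progress(["a", "b", "c", "d"], lambda item: item.upper())
```

In the scraper, `work` would be the per-stock fetch-and-save step. Some IDE consoles do not honor `\r`, in which case every update shows on its own line.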