Implementing SENet in PyTorch: A Detailed Code Walkthrough

Advantages of SENet

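The advantage of SENet is that it improves a model's accuracy to some extent while adding only a small number of parameters. Instead of designing a new network from scratch, it adds a module on top of an existing, proven architecture. The idea is very clever, and it is a handy technique for pushing up accuracy when you build your own models.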
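The "small number of parameters" can be quantified. The sketch below counts only the parameters of the SE branches added to ResNet-50 in this article (reduction ratio 16, 1×1 convolutions with bias), using the per-stage output channels and block counts from the full code further down. se_branch is a hypothetical helper written just for this count.

```python
import torch.nn as nn


def se_branch(channels, reduction=16):
    # Same layer sequence as the SE branch used in this article
    return nn.Sequential(
        nn.AdaptiveAvgPool2d((1, 1)),
        nn.Conv2d(channels, channels // reduction, kernel_size=1),
        nn.ReLU(),
        nn.Conv2d(channels // reduction, channels, kernel_size=1),
        nn.Sigmoid(),
    )


# (output channels filter3, number of Blocks) for each ResNet-50 stage
stages = [(256, 3), (512, 4), (1024, 6), (2048, 3)]

extra = 0
for channels, blocks in stages:
    per_block = sum(p.numel() for p in se_branch(channels).parameters())
    extra += per_block * blocks
    print(f"C={channels}: {per_block} params per SE branch x {blocks} blocks")

print("total extra parameters:", extra)
```

This comes to about 2.5 million extra parameters, roughly 10% on top of the approximately 25.6 million in a plain ResNet-50. Most of the overhead sits in the last stage, because each SE branch grows with the square of the channel count.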

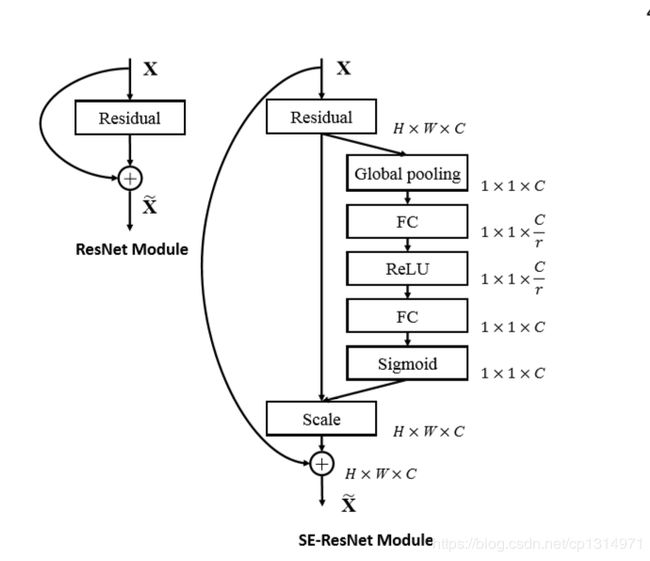

SENet architecture

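This article explains the code using ResNet-50 as the base network. The figure above shows the SENet structure: it is applied to an already-built ResNet model and simply adds an SE branch made of convolutions. In each residual block, the SE branch takes the feature map produced by the block's last convolution (out_channels channels, filter3 in the code below) as its input. It squeezes this map into one value per channel with global average pooling, reduces the channel count by a factor of 16 with a 1×1 convolution, restores it with a second 1×1 convolution, and applies a sigmoid to obtain one weight per channel. This compress-then-expand process can be seen as mixing information across channels; 1×1 convolutions on their own already add non-linearity and cross-channel interaction.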
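To make the squeeze, excitation and scale steps concrete, here is a functional sketch that tracks tensor shapes. The random weights w1 and w2 stand in for the learned 1×1 convolution weights, and the sizes (N=2, C=256, 56×56, r=16) are illustrative assumptions.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
N, C, H, W, r = 2, 256, 56, 56, 16
u = torch.rand(N, C, H, W)  # stand-in for the output of a block's last conv

# Squeeze: global average pooling leaves one value per channel
z = u.mean(dim=(2, 3), keepdim=True)  # (N, C, 1, 1)

# Excitation: bottleneck C -> C/r -> C, then sigmoid gives weights in (0, 1)
w1 = torch.randn(C // r, C, 1, 1) * 0.01  # placeholder for learned weights
w2 = torch.randn(C, C // r, 1, 1) * 0.01
s = torch.sigmoid(F.conv2d(F.relu(F.conv2d(z, w1)), w2))  # (N, C, 1, 1)

# Scale: each channel of u is multiplied by its own weight
out = u * s  # (N, C, H, W)
print(z.shape, s.shape, out.shape)
```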

SE block code

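```python
# SE branch, defined inside Block.__init__; filter3 is the block's output channel count
self.se = nn.Sequential(
    nn.AdaptiveAvgPool2d((1, 1)),                      # squeeze: (N, C, H, W) -> (N, C, 1, 1)
    nn.Conv2d(filter3, filter3 // 16, kernel_size=1),  # reduce channels by a ratio of 16
    nn.ReLU(),
    nn.Conv2d(filter3 // 16, filter3, kernel_size=1),  # restore the original channel count
    nn.Sigmoid()                                       # per-channel weights in (0, 1)
)
```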
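On a 1×1 input, a 1×1 convolution performs the same computation as a fully connected layer, which is why the SE branch can be written with Conv2d here even though the SE paper describes it with two FC layers. The sketch below checks this numerically by copying the convolution weights into nn.Linear layers; the names se_conv, fc1, fc2 and se_linear are illustrative.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
C, r = 256, 16

# SE branch written with 1x1 convolutions, as in this article
se_conv = nn.Sequential(
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Conv2d(C, C // r, kernel_size=1),
    nn.ReLU(),
    nn.Conv2d(C // r, C, kernel_size=1),
    nn.Sigmoid(),
)

# Equivalent fully connected layers
fc1 = nn.Linear(C, C // r)
fc2 = nn.Linear(C // r, C)
with torch.no_grad():
    # A 1x1 conv weight has shape (out, in, 1, 1); Linear expects (out, in)
    fc1.weight.copy_(se_conv[1].weight.view(C // r, C))
    fc1.bias.copy_(se_conv[1].bias)
    fc2.weight.copy_(se_conv[3].weight.view(C, C // r))
    fc2.bias.copy_(se_conv[3].bias)


def se_linear(x):
    z = x.mean(dim=(2, 3))                       # squeeze: (N, C)
    s = torch.sigmoid(fc2(torch.relu(fc1(z))))   # excitation: (N, C)
    return s.view(x.size(0), -1, 1, 1)           # (N, C, 1, 1) so it broadcasts over H, W


x = torch.rand(4, C, 14, 14)
print(torch.allclose(se_conv(x), se_linear(x), atol=1e-5))
```

The Conv2d form avoids reshaping between (N, C, 1, 1) and (N, C), which keeps the whole branch inside a single nn.Sequential.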

Overall structure

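SE-ResNet-50 is essentially the same as ResNet-50; the only change is the added SE branch. You can compare it with my earlier ResNet walkthrough, because the code is almost identical. Since the same convolution pattern is reused many times, it is written as a single module, Block: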

```python
import torch.nn as nn


class Block(nn.Module):
    """Bottleneck residual block (1x1 -> 3x3 -> 1x1) with an SE branch."""

    def __init__(self, in_channels, filters, stride=1, is_1x1conv=False):
        super(Block, self).__init__()
        filter1, filter2, filter3 = filters
        self.is_1x1conv = is_1x1conv
        self.relu = nn.ReLU(inplace=True)
        # 1x1 conv: reduce channels (and downsample when stride=2)
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels, filter1, kernel_size=1, stride=stride, bias=False),
            nn.BatchNorm2d(filter1),
            nn.ReLU()
        )
        # 3x3 conv: spatial feature extraction, size preserved by padding=1
        self.conv2 = nn.Sequential(
            nn.Conv2d(filter1, filter2, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(filter2),
            nn.ReLU()
        )
        # 1x1 conv: expand to filter3 channels (no ReLU before the residual addition)
        self.conv3 = nn.Sequential(
            nn.Conv2d(filter2, filter3, kernel_size=1, stride=1, bias=False),
            nn.BatchNorm2d(filter3),
        )
        # Projection shortcut, used when the channel count or spatial size changes
        if is_1x1conv:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, filter3, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(filter3)
            )
        # SE branch: squeeze (global pooling) and excitation (bottleneck + sigmoid)
        self.se = nn.Sequential(
            nn.AdaptiveAvgPool2d((1, 1)),
            nn.Conv2d(filter3, filter3 // 16, kernel_size=1),
            nn.ReLU(),
            nn.Conv2d(filter3 // 16, filter3, kernel_size=1),
            nn.Sigmoid()
        )

    def forward(self, x):
        x_shortcut = x
        x1 = self.conv1(x)
        x1 = self.conv2(x1)
        x1 = self.conv3(x1)
        x2 = self.se(x1)   # (N, filter3, 1, 1) channel weights
        x1 = x1 * x2       # rescale each channel, broadcast over H and W
        if self.is_1x1conv:
            x_shortcut = self.shortcut(x_shortcut)
        x1 = x1 + x_shortcut
        x1 = self.relu(x1)
        return x1
```

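The key line is x1 = x1*x2, which relies on broadcasting in PyTorch (the same rules NumPy uses): x2 has shape (N, C, 1, 1), so every spatial position of channel c in x1 is multiplied by the same weight. The result is a recalibrated feature map in which each channel is scaled according to global information gathered by the SE branch. After the shortcut is added, the block's output carries both the features coming from earlier layers and these channel-reweighted features.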
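A tiny example makes the broadcasting in x1 = x1*x2 visible. The tensors here are made up: a 2-channel, 2×2 feature map of ones and hand-picked channel weights 0.5 and 2.0 standing in for the SE output.

```python
import torch

x1 = torch.ones(1, 2, 2, 2)                     # (N, C, H, W) feature map
x2 = torch.tensor([0.5, 2.0]).view(1, 2, 1, 1)  # (N, C, 1, 1) per-channel weights

y = x1 * x2  # x2 is broadcast over H and W
print(y[0, 0])  # every entry of channel 0 is scaled by 0.5
print(y[0, 1])  # every entry of channel 1 is scaled by 2.0
```

Broadcasting gives the same result as explicitly expanding x2 to the shape of x1, but without materializing the expanded tensor.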

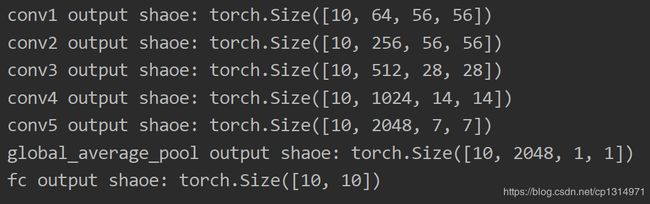

The resulting network structure corresponds to the figure above.
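The stage configuration in the full code, cfg['num'] = (3, 4, 6, 3), gives the number of bottleneck Blocks in conv2 to conv5. As a quick sanity check of where the "50" comes from, the sketch counts the weighted layers on the main path. By the usual convention, the shortcut projections (and here also the SE convolutions) are not included in the count.

```python
# Stage configuration passed to senet() through cfg['num'] in Senet()
num = (3, 4, 6, 3)   # Blocks in conv2, conv3, conv4, conv5
convs_per_block = 3  # conv1 (1x1), conv2 (3x3), conv3 (1x1) inside each Block

# stem 7x7 conv + all bottleneck convs + final fully connected layer
depth = 1 + sum(num) * convs_per_block + 1
print(depth)  # 50
```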

Full code

```python
import torch
import torch.nn as nn


class Block(nn.Module):
    """Bottleneck residual block (1x1 -> 3x3 -> 1x1) with an SE branch."""

    def __init__(self, in_channels, filters, stride=1, is_1x1conv=False):
        super(Block, self).__init__()
        filter1, filter2, filter3 = filters
        self.is_1x1conv = is_1x1conv
        self.relu = nn.ReLU(inplace=True)
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels, filter1, kernel_size=1, stride=stride, bias=False),
            nn.BatchNorm2d(filter1),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(filter1, filter2, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(filter2),
            nn.ReLU()
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(filter2, filter3, kernel_size=1, stride=1, bias=False),
            nn.BatchNorm2d(filter3),
        )
        # Projection shortcut, used when the channel count or spatial size changes
        if is_1x1conv:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, filter3, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(filter3)
            )
        # SE branch: squeeze (global pooling) and excitation (bottleneck + sigmoid)
        self.se = nn.Sequential(
            nn.AdaptiveAvgPool2d((1, 1)),
            nn.Conv2d(filter3, filter3 // 16, kernel_size=1),
            nn.ReLU(),
            nn.Conv2d(filter3 // 16, filter3, kernel_size=1),
            nn.Sigmoid()
        )

    def forward(self, x):
        x_shortcut = x
        x1 = self.conv1(x)
        x1 = self.conv2(x1)
        x1 = self.conv3(x1)
        x2 = self.se(x1)   # (N, filter3, 1, 1) channel weights
        x1 = x1 * x2       # rescale each channel, broadcast over H and W
        if self.is_1x1conv:
            x_shortcut = self.shortcut(x_shortcut)
        x1 = x1 + x_shortcut
        x1 = self.relu(x1)
        return x1


class senet(nn.Module):
    def __init__(self, cfg):
        super(senet, self).__init__()
        classes = cfg['classes']
        num = cfg['num']
        # Stem: 7x7 conv + max pool, 224x224 -> 56x56
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        # Four stages of SE bottleneck Blocks; conv3 to conv5 halve the spatial size
        self.conv2 = self._make_layer(64, (64, 64, 256), num[0], 1)
        self.conv3 = self._make_layer(256, (128, 128, 512), num[1], 2)
        self.conv4 = self._make_layer(512, (256, 256, 1024), num[2], 2)
        self.conv5 = self._make_layer(1024, (512, 512, 2048), num[3], 2)
        self.global_average_pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Sequential(
            nn.Linear(2048, classes)
        )

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.global_average_pool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)
        return x

    def _make_layer(self, in_channels, filters, num, stride=1):
        layers = []
        # The first Block changes the channels/stride, so it needs a projection shortcut
        block_1 = Block(in_channels, filters, stride=stride, is_1x1conv=True)
        layers.append(block_1)
        # The remaining Blocks keep the shape and use an identity shortcut
        for i in range(1, num):
            layers.append(Block(filters[2], filters, stride=1, is_1x1conv=False))
        return nn.Sequential(*layers)


def Senet():
    # ResNet-50 block counts per stage, with 10 output classes
    cfg = {
        'num': (3, 4, 6, 3),
        'classes': 10
    }
    return senet(cfg)


if __name__ == "__main__":
    net = Senet()
    x = torch.rand((10, 3, 224, 224))
    # Run the stages one by one and print each output shape
    with torch.no_grad():
        for name, layer in net.named_children():
            if name != "fc":
                x = layer(x)
                print(name, 'output shape:', x.shape)
            else:
                x = x.view(x.size(0), -1)  # flatten before the fully connected layer
                x = layer(x)
                print(name, 'output shape:', x.shape)
```
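The spatial sizes printed by the loop above can be checked by hand with the usual output-size formula floor((size + 2 × padding − kernel) / stride) + 1. This sketch, in which conv_out is a hypothetical helper, applies it to the stem and to the first, strided Block of each stage for a 224×224 input.

```python
def conv_out(size, kernel, stride, padding):
    # Output size of Conv2d / MaxPool2d with dilation=1 (floor division)
    return (size + 2 * padding - kernel) // stride + 1


size = 224
size = conv_out(size, kernel=7, stride=2, padding=3)  # stem 7x7 conv
size = conv_out(size, kernel=3, stride=2, padding=1)  # stem max pool
shapes = {"conv1": (64, size)}

# (stage name, output channels filter3, stride of the stage's first Block)
for name, channels, stride in [("conv2", 256, 1), ("conv3", 512, 2),
                               ("conv4", 1024, 2), ("conv5", 2048, 2)]:
    # Only the first 1x1 conv of a stage is strided; the 3x3 conv (padding=1)
    # and the remaining Blocks keep the spatial size unchanged
    size = conv_out(size, kernel=1, stride=stride, padding=0)
    shapes[name] = (channels, size)

for name, (channels, hw) in shapes.items():
    print(f"{name}: (N, {channels}, {hw}, {hw})")
```

This gives 56×56 after conv1 and conv2, then 28×28, 14×14 and 7×7 after conv3, conv4 and conv5; global average pooling then reduces the 7×7 map to 1×1 before fc.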