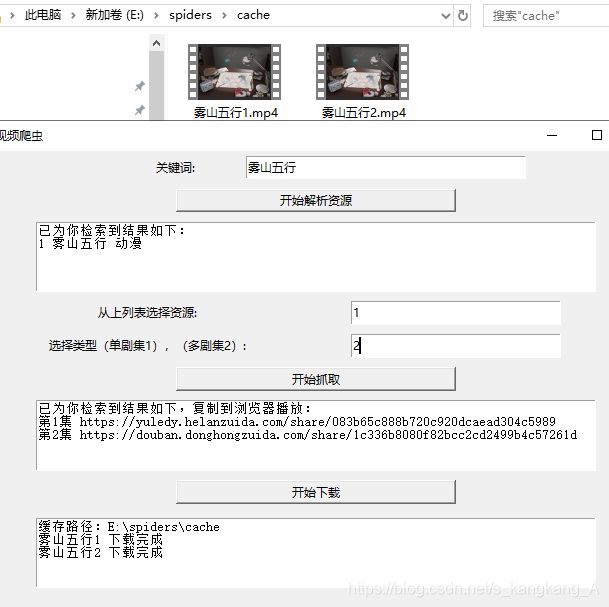

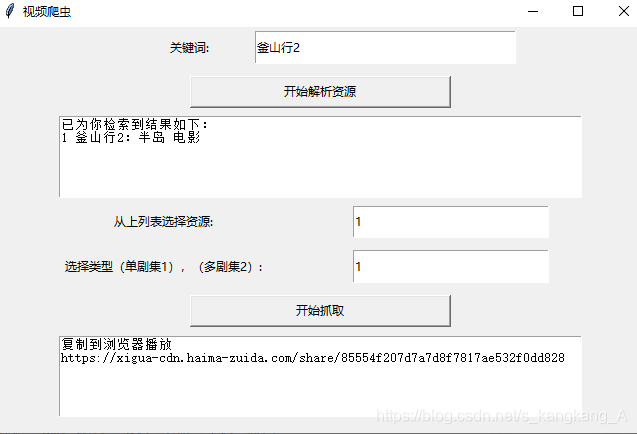

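Combining tkinter with a web crawler: a GUI video crawler (download feature added)

The GUI version now has a download feature. Compared with the GitHub version of the code, the GUI version drops some of the sources, which may affect the search results to some extent.

This is the source of the non-GUI crawler. It is more capable, supports video download, and has more complete logic. Take a look if you are interested.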

https://github.com/iskangkanga/PiracyVideo

A simple GUI implementation of the video crawler

Screenshots:

Code:

import re
import traceback
from tkinter import *

import requests

from service.geter import get_response

def get_source():
    # Handler for the first button: fetch the search results and print them to the GUI.
    result = ''
    search_url = 'https://www.yszxwang.com/search.php'
    data = {
        'searchword': inp1.get()
    }
    resp = requests.post(search_url, data=data, timeout=15)
    # print(resp.text)
    # If the site returns no results, show an error and stop.
    if '对不起,没有找到' in resp.text:
        txt.insert(END, 'ERROR, 网站检索无此结果!' + '\n')
        return
    # Extract the detail-page links from the search results.
    urls = re.findall(r'href="(/[a-z]+?/.*?\d+/)"', resp.text)
    # NOTE: the HTML tags in the next two patterns were lost when this listing
    # was published; restore them from the site's markup before running.
    titles = re.findall('(.*?)', resp.text)
    types = re.findall('.*?', resp.text)
    type_list = []
    for t in types:
        if t == 'tv':
            t = '电视剧'
        elif t == 'dm':
            t = '动漫'
        elif t == 'dy':
            t = '电影'
        elif t == 'zy':
            t = '综艺'
        type_list.append(t)
    # Categories seen so far: tv = TV series, dm = anime, dy = movie, zy = variety show.
    # print(titles)
    # Drop overlong links. Build a new list instead of removing items while
    # iterating, which would skip elements.
    urls = [url for url in urls if len(url) <= 60]
    r_urls = []
    for u in urls:
        if u not in r_urls:
            r_urls.append(u)
    txt.insert(END, '已为你检索到结果如下:' + '\n')
    for i, title in enumerate(titles):
        txt.insert(END, str(i+1) + ' ' + title + ' ' + type_list[i] + '\n')

def get_source1():
    # Fetch the search results and return the detail-page links to the caller.
    result = ''
    search_url = 'https://www.yszxwang.com/search.php'
    data = {
        'searchword': inp1.get()
    }
    resp = requests.post(search_url, data=data, timeout=15)
    urls = re.findall(r'href="(/[a-z]+?/.*?\d+/)"', resp.text)
    # Drop overlong links without mutating the list while iterating over it.
    urls = [url for url in urls if len(url) <= 60]
    r_urls = []
    for u in urls:
        if u not in r_urls:
            r_urls.append(u)
    return r_urls

def begin_parse():
    # Handler for the second button: start parsing.
    f_type = inp3.get()
    choice = int(inp2.get())
    r_urls = get_source1()
    detail_url = 'https://www.yszxwang.com' + r_urls[choice - 1]
    if f_type == '1':
        # Single-episode parsing
        parse_alone(detail_url)
    else:
        # Multi-episode parsing
        parse_many(detail_url)

def parse_alone(detail_url):
    resp = get_response(detail_url)
    if not resp:
        txt2.insert(END, '失败' + '\n')
        return
    play_urls = re.findall('a title=.*? href=\'(.*?)\' target="_self"', resp)
    play_url = get_real_url(play_urls)
    if 'http' in play_url:
        txt2.insert(END, "复制到浏览器播放" + '\n')
        txt2.insert(END, play_url + '\n')
    else:
        txt2.insert(END, "解析失败" + '\n')

def get_real_url(play_urls):
    # Return the first playable link, or 'defeat' if none of the pages works.
    for i, play_page_url in enumerate(play_urls):
        play_page_url = 'https://www.yszxwang.com' + play_page_url
        resp1 = get_response(play_page_url)
        if resp1:
            data_url = re.search('var now="(http.*?)"', resp1).group(1).strip()
            resp2 = get_response(data_url)
            if resp2:
                return data_url
    return 'defeat'

def parse_many(detail_url):
    all_play_list, play_num = get_all_source(detail_url)
    many_real_url = get_many_real_url(all_play_list, play_num)
    if isinstance(many_real_url, list):
        txt2.insert(END, '已为你检索到结果如下:' + '\n')
        txt2.insert(END, "复制到浏览器播放" + '\n')
        for i, r in enumerate(many_real_url):
            txt2.insert(END, "第" + str(i+1) + '集' + ' ' + r + '\n')
    else:
        txt2.insert(END, "解析失败" + '\n')

def get_many_real_url(all_play_list, play_num):
    # Try each source in turn and return the first one that yields every episode.
    for i, play_list in enumerate(all_play_list):
        many_data_url = []
        for j, play_page_url in enumerate(play_list):
            play_page_url = 'https://www.yszxwang.com' + play_page_url
            resp1 = get_response(play_page_url)
            if resp1:
                data_url = re.search('var now="(http.*?)"', resp1).group(1).strip()
                resp2 = get_response(data_url)
                if resp2:
                    # A data_url containing 'm3u8' can be downloaded but not played.
                    if 'm3u8' in data_url:
                        break
                    else:
                        # This is a video page link. The video is still m3u8 underneath,
                        # but the page is a plain player without ads.
                        many_data_url.append(data_url)
                else:
                    break
            else:
                break
        if len(many_data_url) == play_num:
            return many_data_url
    return 'defeat'

def get_all_source(detail_url):
    max_num = 0
    max_play = 0
    resp = get_response(detail_url)
    all_source = re.findall("href='(/video.*?)'", resp)
    for s in all_source:
        num = int(re.search(r'-(\d+?)-', s).group(1).strip())
        play_num = int(re.search(r'-\d+?-(\d+?)\.html', s).group(1).strip())
        if num > max_num:
            max_num = num
        if play_num > max_play:
            max_play = play_num
    # Number of sources
    source_num = max_num + 1
    # Largest episode count; sources that update slowly and lack episodes are discarded
    play_num = max_play + 1
    all_play_list = []
    for i in range(source_num):
        source_list = []
        for s in all_source:
            # Group the links by source index
            cate = int(re.search(r'-(\d+?)-', s).group(1).strip())
            if cate == i:
                source_list.append(s)
        # Keep only the sources that have the full episode count
        if len(source_list) == play_num:
            all_play_list.append(source_list)
        # Sources with fewer episodes are discarded
    return all_play_list, play_num

if __name__ == '__main__':
    # Build the window
    try:
        root = Tk()
        root.geometry('600x500')
        root.title('视频爬虫')
        lb1 = Label(root, text='关键词:')
        lb1.place(relx=0.1, rely=0.01, relwidth=0.4, relheight=0.08)
        inp1 = Entry(root)
        inp1.place(relx=0.4, rely=0.01, relwidth=0.4, relheight=0.08)
        btn1 = Button(root, text='开始解析资源', command=get_source)
        btn1.place(relx=0.3, rely=0.12, relwidth=0.4, relheight=0.08)
        txt = Text(root)
        txt.place(relx=0.1, rely=0.22, relwidth=0.8, relheight=0.2)
        lb2 = Label(root, text='从上列表选择资源:')
        lb2.place(relx=0.01, rely=0.44, relwidth=0.5, relheight=0.08)
        inp2 = Entry(root)
        inp2.place(relx=0.55, rely=0.44, relwidth=0.3, relheight=0.08)
        lb3 = Label(root, text='选择类型(单剧集1),(多剧集2):')
        lb3.place(relx=0.01, rely=0.55, relwidth=0.5, relheight=0.08)
        inp3 = Entry(root)
        inp3.place(relx=0.55, rely=0.55, relwidth=0.3, relheight=0.08)
        btn2 = Button(root, text='开始抓取', command=begin_parse)
        btn2.place(relx=0.3, rely=0.66, relwidth=0.4, relheight=0.08)
        txt2 = Text(root)
        txt2.place(relx=0.1, rely=0.76, relwidth=0.8, relheight=0.2)
        root.mainloop()
    except Exception as e:
        print(e, traceback.format_exc())

The code above uses a hand-written request helper (from service.geter import get_response):

# GET requests only; POST requests are made directly in the parsing code.
import requests

def get_response(url, headers=None):
    # Number of retries
    times = 3
    info = ''
    while times:
        try:
            resp = requests.get(url, headers=headers, timeout=9)
            content = resp.content.decode('utf-8')
            # Treat HTTP errors and near-empty bodies as failures and retry.
            if resp.status_code >= 400 or len(content) <= 50:
                info = ''
                times -= 1
            else:
                info = content
                break
        except Exception as e:
            print(e)
            info = ''
            times -= 1
    return info
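The retry loop in get_response can also be pulled out into a small reusable helper. The following is only a sketch of that idea, not part of the original project: the names call_with_retries and flaky_fetch are hypothetical, and the network call is replaced by a stand-in function so that the example runs offline.

```python
import time


def call_with_retries(func, times=3, delay=0.0, is_valid=bool):
    """Call func() up to `times` times and return the first valid result.

    Returns '' when every attempt fails, mirroring get_response.
    """
    for attempt in range(times):
        try:
            result = func()
        except Exception as exc:  # broad on purpose, like get_response
            print(f'attempt {attempt + 1} failed: {exc}')
        else:
            if is_valid(result):
                return result
        # Optionally wait before the next attempt (not after the last one).
        if delay and attempt < times - 1:
            time.sleep(delay)
    return ''


# Stand-in for a flaky network call (hypothetical): fails twice, then succeeds.
calls = {'n': 0}


def flaky_fetch():
    calls['n'] += 1
    if calls['n'] < 3:
        raise TimeoutError('simulated timeout')
    return '<html>ok</html>'


page = call_with_retries(flaky_fetch, times=3)
print(page)
```

With a helper like this, the validity check (status code, minimum body length) could be passed in as is_valid instead of being hard-coded in the loop.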

Update 2020-08-07

Added the download feature. The screenshots are no longer updated. The updated code and the downloader source follow:

import os
import re
import traceback
from tkinter import *

import requests

from service.downloader import down_m3u8_thread
from service.geter import get_response

def get_source():
    # Handler for the first button: fetch the search results and print them to the GUI.
    result = ''
    search_url = 'https://www.yszxwang.com/search.php'
    data = {
        'searchword': inp1.get()
    }
    resp = requests.post(search_url, data=data, timeout=15)
    # print(resp.text)
    # If the site returns no results, show an error and stop.
    if '对不起,没有找到' in resp.text:
        txt.insert(END, 'ERROR, 网站检索无此结果!' + '\n')
        return
    # Extract the detail-page links from the search results.
    urls = re.findall(r'href="(/[a-z]+?/.*?\d+/)"', resp.text)
    # NOTE: the HTML tags in the next two patterns were lost when this listing
    # was published; restore them from the site's markup before running.
    titles = re.findall('(.*?)', resp.text)
    types = re.findall('.*?', resp.text)
    type_list = []
    for t in types:
        if t == 'tv':
            t = '电视剧'
        elif t == 'dm':
            t = '动漫'
        elif t == 'dy':
            t = '电影'
        elif t == 'zy':
            t = '综艺'
        type_list.append(t)
    # Categories seen so far: tv = TV series, dm = anime, dy = movie, zy = variety show.
    # print(titles)
    # Drop overlong links. Build a new list instead of removing items while
    # iterating, which would skip elements.
    urls = [url for url in urls if len(url) <= 60]
    r_urls = []
    for u in urls:
        if u not in r_urls:
            r_urls.append(u)
    txt.insert(END, '已为你检索到结果如下:' + '\n')
    for i, title in enumerate(titles):
        txt.insert(END, str(i+1) + ' ' + title + ' ' + type_list[i] + '\n')

def get_source1():
    # Fetch the search results and return the detail-page links to the caller.
    result = ''
    search_url = 'https://www.yszxwang.com/search.php'
    data = {
        'searchword': inp1.get()
    }
    resp = requests.post(search_url, data=data, timeout=15)
    urls = re.findall(r'href="(/[a-z]+?/.*?\d+/)"', resp.text)
    # Drop overlong links without mutating the list while iterating over it.
    urls = [url for url in urls if len(url) <= 60]
    r_urls = []
    for u in urls:
        if u not in r_urls:
            r_urls.append(u)
    return r_urls

def begin_parse():
    # Handler for the second button: start parsing.
    f_type = inp3.get()
    choice = int(inp2.get())
    r_urls = get_source1()
    detail_url = 'https://www.yszxwang.com' + r_urls[choice - 1]
    if f_type == '1':
        # Single-episode parsing
        parse_alone(detail_url)
        urls = parse_alone2(detail_url)
    else:
        # Multi-episode parsing
        urls = parse_many2(detail_url)
        parse_many(detail_url)
    return urls

def begin_parse2():
    # Same as begin_parse but without GUI output, so that the download
    # handler does not print the results a second time.
    f_type = inp3.get()
    choice = int(inp2.get())
    r_urls = get_source1()
    detail_url = 'https://www.yszxwang.com' + r_urls[choice - 1]
    if f_type == '1':
        # Single-episode parsing
        urls = parse_alone2(detail_url)
    else:
        # Multi-episode parsing
        urls = parse_many2(detail_url)
    return urls

def parse_alone(detail_url):
    resp = get_response(detail_url)
    if not resp:
        txt2.insert(END, '失败' + '\n')
        return
    play_urls = re.findall('a title=.*? href=\'(.*?)\' target="_self"', resp)
    play_url = get_real_url(play_urls)
    if 'http' in play_url:
        txt2.insert(END, "复制到浏览器播放" + '\n')
        txt2.insert(END, play_url + '\n')
    else:
        txt2.insert(END, "解析失败" + '\n')
    return play_url

def parse_alone2(detail_url):
    # Same as parse_alone but without GUI output, to avoid printing twice.
    resp = get_response(detail_url)
    play_urls = re.findall('a title=.*? href=\'(.*?)\' target="_self"', resp)
    play_url = get_real_url(play_urls)
    return play_url

def get_real_url(play_urls):
    # Return the first playable link, or 'defeat' if none of the pages works.
    for i, play_page_url in enumerate(play_urls):
        play_page_url = 'https://www.yszxwang.com' + play_page_url
        resp1 = get_response(play_page_url)
        if resp1:
            data_url = re.search('var now="(http.*?)"', resp1).group(1).strip()
            resp2 = get_response(data_url)
            if resp2:
                return data_url
    return 'defeat'

def parse_many(detail_url):
    all_play_list, play_num = get_all_source(detail_url)
    many_real_url = get_many_real_url(all_play_list, play_num)
    if isinstance(many_real_url, list):
        txt2.insert(END, "已为你检索到结果如下,复制到浏览器播放:" + '\n')
        for i, r in enumerate(many_real_url):
            txt2.insert(END, "第" + str(i+1) + '集' + ' ' + r + '\n')
    else:
        txt2.insert(END, "解析失败" + '\n')
    return many_real_url

def parse_many2(detail_url):
    # Same as parse_many but without GUI output, to avoid printing twice.
    all_play_list, play_num = get_all_source(detail_url)
    many_real_url = get_many_real_url(all_play_list, play_num)
    return many_real_url

def get_many_real_url(all_play_list, play_num):
    # Try each source in turn and return the first one that yields every episode.
    for i, play_list in enumerate(all_play_list):
        many_data_url = []
        for j, play_page_url in enumerate(play_list):
            play_page_url = 'https://www.yszxwang.com' + play_page_url
            resp1 = get_response(play_page_url)
            if resp1:
                data_url = re.search('var now="(http.*?)"', resp1).group(1).strip()
                resp2 = get_response(data_url)
                if resp2:
                    # A data_url containing 'm3u8' can be downloaded but not played.
                    if 'm3u8' in data_url:
                        break
                    else:
                        # This is a video page link. The video is still m3u8 underneath,
                        # but the page is a plain player without ads.
                        many_data_url.append(data_url)
                else:
                    break
            else:
                break
        if len(many_data_url) == play_num:
            return many_data_url
    return 'defeat'

def get_all_source(detail_url):
    max_num = 0
    max_play = 0
    resp = get_response(detail_url)
    all_source = re.findall("href='(/video.*?)'", resp)
    for s in all_source:
        num = int(re.search(r'-(\d+?)-', s).group(1).strip())
        play_num = int(re.search(r'-\d+?-(\d+?)\.html', s).group(1).strip())
        if num > max_num:
            max_num = num
        if play_num > max_play:
            max_play = play_num
    # Number of sources
    source_num = max_num + 1
    # Largest episode count; sources that update slowly and lack episodes are discarded
    play_num = max_play + 1
    all_play_list = []
    for i in range(source_num):
        source_list = []
        for s in all_source:
            # Group the links by source index
            cate = int(re.search(r'-(\d+?)-', s).group(1).strip())
            if cate == i:
                source_list.append(s)
        # Keep only the sources that have the full episode count
        if len(source_list) == play_num:
            all_play_list.append(source_list)
        # Sources with fewer episodes are discarded
    return all_play_list, play_num

def get_down():
    # Handler for btn3: resolve the m3u8 links and download them.
    urls = begin_parse2()
    m3u8_urls = get_m3u8(urls)
    if m3u8_urls:
        if isinstance(m3u8_urls, list):
            down_many(m3u8_urls)
        else:
            down(m3u8_urls)

def get_m3u8(urls):
    # Resolve the downloadable m3u8 file links.
    if isinstance(urls, list):
        m3u8_urls = []
        for u in urls:
            resp2 = get_response(u)
            if resp2:
                u2 = ''
                # Reset per episode so a stale link from the previous one is never reused.
                real_url = ''
                s = u.split('/')
                host1 = s[0] + '//' + s[2]
                u1 = re.search('var main = "(.*?)"', resp2).group(1).strip()
                m3u8_url1 = host1 + u1
                host = re.sub('index.*', '', m3u8_url1)
                # Read the first m3u8 file to find the real m3u8 link.
                resp3 = get_response(m3u8_url1)
                if resp3:
                    m3u8text = resp3.split('\n')
                    for text in m3u8text:
                        if 'm3u8' in text:
                            u2 = text
                else:
                    break
                if u2:
                    if u2[0] == '/':
                        real_url = host + u2[1:]
                    else:
                        real_url = host + u2
                if not real_url:
                    break
                resp = get_response(real_url)
                # Quick check that the link is reachable.
                if resp:
                    m3u8_urls.append(real_url)
                else:
                    break
            else:
                break
    else:
        m3u8_urls = ''
        u2 = ''
        resp2 = get_response(urls)
        # Parsing may have failed upstream ('defeat') or the page may be unreachable.
        if not resp2:
            return m3u8_urls
        s = urls.split('/')
        host1 = s[0] + '//' + s[2]
        u1 = re.search('var main = "(.*?)"', resp2).group(1).strip()
        m3u8_url1 = host1 + u1
        host = re.sub('index.*', '', m3u8_url1)
        resp3 = get_response(m3u8_url1)
        if resp3:
            m3u8text = resp3.split('\n')
            for text in m3u8text:
                if 'm3u8' in text:
                    u2 = text
            if u2:
                if u2[0] == '/':
                    real_url = host + u2[1:]
                else:
                    real_url = host + u2
                resp = get_response(real_url)
                if resp:
                    m3u8_urls = real_url
    return m3u8_urls

def down_many(urls):
    # Download multiple episodes.
    path = os.path.abspath('cache/')
    txt3.insert(END, "缓存路径:" + path + '\n')
    for i, url in enumerate(urls):
        host = re.sub('/index.*', '', url)
        name = inp1.get()
        name = name + str(i+1)
        result = down_m3u8_thread(url, name, host)
        if result == 'succeed':
            txt3.insert(END, name + ' 下载完成' + '\n')
        else:
            txt3.insert(END, name + ' 下载失败' + '\n')

def down(urls):
    # Download a single episode.
    path = os.path.abspath('cache/')
    txt3.insert(END, "缓存路径:" + path + '\n')
    name = inp1.get()
    host = re.sub('/index.*', '', urls)
    result = down_m3u8_thread(urls, name, host)
    if result == 'succeed':
        txt3.insert(END, name + ' 下载完成' + '\n')
    else:
        txt3.insert(END, name + ' 下载失败' + '\n')

if __name__ == '__main__':
    # Build the window
    try:
        root = Tk()
        root.geometry('700x550')
        root.title('视频爬虫')
        lb1 = Label(root, text='关键词:')
        lb1.place(relx=0.1, rely=0.01, relwidth=0.4, relheight=0.05)
        inp1 = Entry(root)
        inp1.place(relx=0.4, rely=0.01, relwidth=0.4, relheight=0.05)
        btn1 = Button(root, text='开始解析资源', command=get_source)
        btn1.place(relx=0.3, rely=0.08, relwidth=0.4, relheight=0.05)
        txt = Text(root)
        txt.place(relx=0.1, rely=0.15, relwidth=0.8, relheight=0.15)
        lb2 = Label(root, text='从上列表选择资源:')
        lb2.place(relx=0.01, rely=0.32, relwidth=0.5, relheight=0.05)
        inp2 = Entry(root)
        inp2.place(relx=0.55, rely=0.32, relwidth=0.3, relheight=0.05)
        lb3 = Label(root, text='选择类型(单剧集1),(多剧集2):')
        lb3.place(relx=0.01, rely=0.39, relwidth=0.5, relheight=0.05)
        inp3 = Entry(root)
        inp3.place(relx=0.55, rely=0.39, relwidth=0.3, relheight=0.05)
        btn2 = Button(root, text='开始抓取', command=begin_parse)
        btn2.place(relx=0.3, rely=0.46, relwidth=0.4, relheight=0.05)
        txt2 = Text(root)
        txt2.place(relx=0.1, rely=0.53, relwidth=0.8, relheight=0.15)
        btn3 = Button(root, text='开始下载', command=get_down)
        btn3.place(relx=0.3, rely=0.7, relwidth=0.4, relheight=0.05)
        txt3 = Text(root)
        txt3.place(relx=0.1, rely=0.78, relwidth=0.8, relheight=0.15)
        root.mainloop()
    except Exception as e:
        print(e, traceback.format_exc())

Downloader:

import logging
import os
import re
import threading
import time
from queue import Empty, Queue

import requests
from moviepy.video.io.VideoFileClip import VideoFileClip

from service.geter import get_response

logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s - %(name)s - %(levelname)s - %(filename)s - %(lineno)d - %(message)s')

def down_m3u8_thread(url, file_name, host=None, headers=None):
    mkdir()
    file_name = file_name + '.mp4'
    logging.info('[url] %s [file_name] %s', url, file_name)
    # Pre-download: fetch the playlist, collect the segment links and write the concat file.
    resp = get_response(url)
    if not resp:
        # The playlist could not be fetched, so there is nothing to download.
        return 'defeat'
    m3u8_text = resp
    # Queue of segment links
    ts_queue = Queue(10000)
    lines = m3u8_text.split('\n')
    concatfile = 'cache/' + "s" + '.txt'
    # Open the concat file once; closing it here guarantees it is flushed before ffmpeg reads it.
    with open(concatfile, 'a+') as concat:
        for i, line in enumerate(lines):
            line = line.strip()
            if '.ts' in line:
                if 'http' in line:
                    ts_queue.put(line)
                else:
                    if line[0] == '/':
                        line = host + line
                    else:
                        line = host + '/' + line
                    ts_queue.put(line)
                filename = re.search(r'([a-zA-Z0-9_-]+\.ts)', line).group(1).strip()
                concat.write('file %s\n' % filename)
    num = ts_queue.qsize()
    logging.info('[下载开始,队列任务数:] %s', num)
    # One thread per five segments, capped at 50 threads.
    if num > 5:
        t_num = num // 5
    else:
        t_num = 1
    if t_num > 50:
        t_num = 50
    threads = []
    logging.info('下载开始')
    for i in range(t_num):
        t = threading.Thread(target=down, name='th-' + str(i),
                             kwargs={'ts_queue': ts_queue, 'headers': headers})
        t.daemon = True
        threads.append(t)
    for t in threads:
        logging.info('[线程开始]')
        time.sleep(0.4)
        t.start()
    for t in threads:
        logging.info('[线程停止]')
        t.join()
    logging.info('下载完成,合并开始')
    merge(concatfile, file_name)
    logging.info('合并完成,删除冗余文件')
    remove()
    result = getLength(file_name)
    return result

# I covered multi-threaded download and merging of m3u8 video in detail in an earlier blog post.
# Recently I decided on a whim to build downloaders for some unofficial movie sites. On the first
# site, possibly because of its poor connection quality, my worker threads hung and blocked.
# After going through online material and my own code, I solved it; the reasoning follows.
# When a thread hangs or blocks, first suspect that a network or other fault kept the request
# from ever timing out on its own, so the thread never fails and just stays stuck.
# Setting an explicit timeout fixes this in most cases.
# More generally, every request should carry a timeout; otherwise the code hangs in odd places
# and takes a long time to diagnose.
def down(ts_queue, headers):
    tt_name = threading.current_thread().name
    while True:
        # get_nowait avoids blocking forever when another thread takes the last
        # item between an empty() check and a blocking get().
        try:
            url = ts_queue.get_nowait()
        except Empty:
            break
        filename = re.search(r'([a-zA-Z0-9_-]+\.ts)', url).group(1).strip()
        try:
            requests.packages.urllib3.disable_warnings()
            r = requests.get(url, stream=True, headers=headers, verify=False, timeout=5)
            with open('cache/' + filename, 'wb') as fp:
                for chunk in r.iter_content(5424):
                    if chunk:
                        fp.write(chunk)
            logging.info('[url] %s [线程] %s [文件下载成功] %s', url, tt_name, filename)
        except Exception as e:
            # Put the link back so that it is retried.
            ts_queue.put(url)
            logging.info('[ERROR] %s [文件下载失败,入队重试] %s', e, filename)

def merge(concatfile, file_name):
    try:
        path = 'cache/' + file_name
        command = 'ffmpeg -y -f concat -i %s -bsf:a aac_adtstoasc -c copy %s' % (concatfile, path)
        os.system(command)
    except Exception:
        logging.info('Error')

def remove():
    dir = 'cache/'
    # Delete every segment listed in the concat file, then the concat file itself.
    with open('cache/s.txt') as concat:
        for line in concat:
            line = re.search('file (.*?ts)', line).group(1).strip()
            # print(line)
            os.remove(dir + line)
    logging.info('ts文件全部删除')
    try:
        os.remove('cache/s.txt')
        logging.info('文件已删除')
    except OSError:
        logging.info('部分文件删除失败,请手动删除')

def getLength(file_name):
    # A merged file shorter than one second is treated as a failed download.
    video_path = 'cache/' + file_name
    clip = VideoFileClip(video_path)
    length = clip.duration
    logging.info('[视频时长] %s s', length)
    # close() releases the video reader and the audio reader, if there is one.
    clip.close()
    if length < 1:
        return 'defeat'
    else:
        return 'succeed'

# Before any download runs, make sure the cache folder exists.
def mkdir():
    root = 'cache/'
    os.makedirs(root, exist_ok=True)
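The downloader builds each segment link by string concatenation (host + line). As a sketch of an alternative, the standard library's urljoin can resolve playlist entries against the playlist URL. This is not the original method: the function name resolve_segments and the example.com URLs are made up for illustration, and urljoin follows standard URL resolution, so a root-relative entry such as /abs/seg1.ts resolves against the site root, which may differ from what the concatenation above produces for this particular site.

```python
from urllib.parse import urljoin


def resolve_segments(playlist_url, playlist_text):
    """Return absolute URLs for every non-comment line of an m3u8 playlist."""
    segments = []
    for line in playlist_text.splitlines():
        line = line.strip()
        # Skip blank lines and m3u8 tags/comments, which start with '#'.
        if not line or line.startswith('#'):
            continue
        # urljoin handles relative, root-relative and absolute entries.
        segments.append(urljoin(playlist_url, line))
    return segments


# Hypothetical playlist covering the three kinds of entries.
sample = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXTINF:10.0,
seg0.ts
#EXTINF:10.0,
/abs/seg1.ts
#EXTINF:10.0,
https://cdn.example.com/seg2.ts
#EXT-X-ENDLIST
"""

urls = resolve_segments('https://video.example.com/a/b/index.m3u8', sample)
for u in urls:
    print(u)
```

Before swapping this in, it would be worth checking against a real playlist from the target site that the resolved links match the ones the concatenation produces.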