Deep learning recommendation algorithms: YouTubeDNN for recall (candidate retrieval) and DeepFM for ranking

Reference: https://github.com/shenweichen/DeepCTR

1. YouTubeDNN recall algorithm

Other deep recall algorithms: DSSM, FM, NCF, SDM, MIND.

Use TensorFlow 1.x; otherwise DeepMatch raises an eager-execution error.

import pandas as pd
from sklearn.preprocessing import LabelEncoder
from deepctr.inputs import SparseFeat, VarLenSparseFeat
from deepmatch.models import YoutubeDNN  # MIND (commented out below) is imported from deepmatch.models too
from deepmatch.utils import sampledsoftmaxloss
from tensorflow.python.keras.models import Model
from tensorflow.python.keras.preprocessing.sequence import pad_sequences
import tensorflow as tf

print(tf.__version__)

# Written for TensorFlow 1.x. Under TF 2.x DeepMatch may raise an eager-execution
# error; disabling eager execution is one workaround to try:
# tf.compat.v1.disable_eager_execution()

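data = pd.read_csv("/Users/lonng/Desktop/推荐学习/deep_rec/movielens_sample.txt")

sparse_features = ["movie_id", "user_id",
                   "gender", "age", "occupation", "zip", ]
SEQ_LEN = 50  # maximum length of the watch-history sequence

# 1. Label-encode the categorical features
features = ['user_id', 'movie_id', 'gender', 'age', 'occupation', 'zip']
feature_max_idx = {}
for feature in features:
    lbe = LabelEncoder()
    # Shift by 1 so that id 0 is never used by a real value (0 is the padding id)
    data[feature] = lbe.fit_transform(data[feature]) + 1
    # Vocabulary size for the embedding layer: ids 1..max plus the padding id 0
    feature_max_idx[feature] = data[feature].max() + 1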
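The `+ 1` shift means no real value is ever encoded as 0. That leaves 0 free as the padding id that `pad_sequences(..., value=0)` inserts later. `max() + 1` is then the vocabulary size the embedding layer needs.

Below is a minimal stdlib sketch of the same mapping. It assumes LabelEncoder's behaviour of numbering the sorted distinct values from 0. The helper name `encode_with_padding` is hypothetical, chosen for illustration only.

```python
def encode_with_padding(values):
    """Map distinct values to ids 1..n, leaving 0 free for padding.

    Mirrors LabelEncoder().fit_transform(values) + 1, which numbers the
    sorted distinct values from 0 before the shift.
    """
    classes = sorted(set(values))
    mapping = {v: i + 1 for i, v in enumerate(classes)}
    codes = [mapping[v] for v in values]
    vocab_size = max(codes) + 1  # ids 1..n plus the padding id 0
    return codes, vocab_size


codes, vocab_size = encode_with_padding(["M", "F", "M", "M", "F"])
print(codes, vocab_size)  # [2, 1, 2, 2, 1] 3
```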

user_profile = data[["user_id", "gender", "age", "occupation", "zip"]].drop_duplicates('user_id')
item_profile = data[["movie_id"]].drop_duplicates('movie_id')
user_profile.set_index("user_id", inplace=True)
user_item_list = data.groupby("user_id")['movie_id'].apply(list)

from tqdm import tqdm
import random
import numpy as np


def gen_data_set(data, negsample=0):
    # Sort by time so that each user's history is in chronological order
    data.sort_values("timestamp", inplace=True)
    item_ids = data['movie_id'].unique()

    train_set = []
    test_set = []
    for reviewerID, user_df in tqdm(data.groupby('user_id')):
        pos_list = user_df['movie_id'].tolist()
        rating_list = user_df['rating'].tolist()

        if negsample > 0:
            # Sample negatives from items this user has not interacted with
            candidate_set = list(set(item_ids) - set(pos_list))
            neg_list = np.random.choice(candidate_set, size=len(pos_list) * negsample, replace=True)

        for i in range(1, len(pos_list)):
            hist = pos_list[:i][::-1]  # items before position i, most recent first
            if i != len(pos_list) - 1:
                # (user, history, target item, label, history length, rating)
                train_set.append((reviewerID, hist, pos_list[i], 1, len(hist), rating_list[i]))
                for negi in range(negsample):
                    train_set.append((reviewerID, hist, neg_list[i * negsample + negi], 0, len(hist)))
            else:
                # The user's last interaction is held out for testing
                test_set.append((reviewerID, hist, pos_list[i], 1, len(hist), rating_list[i]))

    random.shuffle(train_set)
    random.shuffle(test_set)

    print(len(train_set[0]), len(test_set[0]))

    return train_set, test_set


# negsample=0: no explicit negatives, YoutubeDNN samples them inside its sampled-softmax loss
train_set, test_set = gen_data_set(data, 0)
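`gen_data_set` turns each user's time-ordered watch list into samples:

- for every position i, the items before i (most recent first) form the history;
- item i is the target;
- each user's last target is held out as the test sample.

With the user id, label and rating fields stripped out, the split can be sketched as follows. `split_user_sequence` is a hypothetical helper, not part of DeepMatch.

```python
def split_user_sequence(items):
    """Leave-last-out split of one user's chronologically ordered items.

    Returns (history, target, history_length) tuples; histories are most recent first.
    """
    train_samples, test_samples = [], []
    for i in range(1, len(items)):
        hist = items[:i][::-1]
        sample = (hist, items[i], len(hist))
        if i != len(items) - 1:
            train_samples.append(sample)
        else:
            test_samples.append(sample)  # the last interaction is held out
    return train_samples, test_samples


train_samples, test_samples = split_user_sequence([10, 20, 30, 40])
print(train_samples)  # [([10], 20, 1), ([20, 10], 30, 2)]
print(test_samples)   # [([30, 20, 10], 40, 3)]
```

A user with a single interaction produces no samples at all, since there is no history to predict from.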

def gen_model_input(train_set, user_profile, seq_max_len):
    train_uid = np.array([line[0] for line in train_set])
    train_seq = [line[1] for line in train_set]
    train_iid = np.array([line[2] for line in train_set])
    train_label = np.array([line[3] for line in train_set])
    # Clip to seq_max_len so that mean pooling divides by the number of items actually kept
    train_hist_len = np.array([min(line[4], seq_max_len) for line in train_set])

    # Pad/truncate every history to seq_max_len; 0 is the padding id
    train_seq_pad = pad_sequences(train_seq, maxlen=seq_max_len, padding='post', truncating='post', value=0)
    train_model_input = {"user_id": train_uid, "movie_id": train_iid, "hist_movie_id": train_seq_pad,
                         "hist_len": train_hist_len}

    # Attach each sample's user-side features from the user profile table
    for key in ["gender", "age", "occupation", "zip"]:
        train_model_input[key] = user_profile.loc[train_model_input['user_id']][key].values

    return train_model_input, train_label


# (Under TF 2.x, pad_sequences can also be imported from tensorflow.keras.preprocessing.sequence.)
train_model_input, train_label = gen_model_input(train_set, user_profile, SEQ_LEN)
test_model_input, test_label = gen_model_input(test_set, user_profile, SEQ_LEN)
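Each history is stored most-recent-first, so `padding='post', truncating='post'` keeps the newest `SEQ_LEN` items and fills the tail with 0.

Below is a pure-Python sketch of that rule. The name `pad_post` is hypothetical. Keras' `pad_sequences` also returns a NumPy array and supports `'pre'` modes, which this sketch leaves out.

```python
def pad_post(seqs, maxlen, value=0):
    """Pad or truncate each sequence at the end to exactly maxlen items."""
    out = []
    for seq in seqs:
        kept = list(seq)[:maxlen]  # truncating='post': drop items beyond maxlen from the end
        out.append(kept + [value] * (maxlen - len(kept)))  # padding='post': append the pad value
    return out


# Histories are most-recent-first, so truncation drops the oldest items
print(pad_post([[30, 20, 10], [7]], maxlen=2))  # [[30, 20], [7, 0]]
```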

# 2. Count the unique ids of each sparse field and build the feature columns,
#    including the variable-length sequence feature
embedding_dim = 16

user_feature_columns = [SparseFeat('user_id', feature_max_idx['user_id'], embedding_dim),
                        SparseFeat("gender", feature_max_idx['gender'], embedding_dim),
                        SparseFeat("age", feature_max_idx['age'], embedding_dim),
                        SparseFeat("occupation", feature_max_idx['occupation'], embedding_dim),
                        SparseFeat("zip", feature_max_idx['zip'], embedding_dim),
                        # Watch history: shares the movie_id embedding table, mean-pooled over hist_len items
                        VarLenSparseFeat(SparseFeat('hist_movie_id', feature_max_idx['movie_id'], embedding_dim,
                                                    embedding_name="movie_id"), SEQ_LEN, 'mean', 'hist_len'),
                        ]

item_feature_columns = [SparseFeat('movie_id', feature_max_idx['movie_id'], embedding_dim)]
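The `VarLenSparseFeat` with combiner `'mean'` and length name `'hist_len'` turns the padded history into a single vector:

1. it looks up the history ids in the `movie_id` embedding table, which is shared through `embedding_name="movie_id"`;
2. it averages only the first `hist_len` of them, so padding does not dilute the mean.

Below is a rough stdlib sketch of that pooling for one sample. A toy dict stands in for the embedding table, and `mean_pool` is a hypothetical name. DeepCTR does the same on batched tensors.

```python
def mean_pool(embedding_table, padded_ids, length):
    """Average the embeddings of the first `length` ids; padding ids after them are ignored."""
    dim = len(embedding_table[padded_ids[0]])
    total = [0.0] * dim
    for item_id in padded_ids[:length]:
        for d, x in enumerate(embedding_table[item_id]):
            total[d] += x
    return [x / length for x in total]  # assumes length >= 1; every history here is non-empty


table = {0: [0.0, 0.0], 1: [1.0, 2.0], 2: [3.0, 4.0]}  # row 0 is the padding id
print(mean_pool(table, [2, 1, 0, 0], length=2))  # [2.0, 3.0]
```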

# 3. Define the model and train it
model = YoutubeDNN(user_feature_columns, item_feature_columns, num_sampled=5, user_dnn_hidden_units=(64, embedding_dim))
# model = MIND(user_feature_columns, item_feature_columns, dynamic_k=False, p=1, k_max=2, num_sampled=5,
#              user_dnn_hidden_units=(64, embedding_dim), init_std=0.001)

# In this DeepMatch version the model's output is already the per-sample sampled-softmax loss and
# sampledsoftmaxloss just averages it, so an accuracy metric would not be meaningful here.
model.compile(optimizer="adam", loss=sampledsoftmaxloss)

# train_label is all ones (only positives, negsample=0); it serves as a placeholder target
history = model.fit(train_model_input, train_label,
                    batch_size=256, epochs=10, verbose=1, validation_split=0.0)
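With `num_sampled=5`, the model does not score the target against every movie. It takes a softmax over the true item plus a few sampled negatives. The logits are inner products between the user vector and each item embedding. This is why no explicit negatives are generated (`negsample=0`).

The sketch below shows the idea for a single example in plain Python. It swaps in uniform sampling without replacement and applies no sampling-probability correction. By contrast, `tf.nn.sampled_softmax_loss`, which DeepMatch's sampled-softmax layer is built on, defaults to a log-uniform sampler and corrects for sampling probability. The function name is hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sampled_softmax_loss(user_vec, item_table, pos_id, num_sampled, rng):
    """-log softmax of the positive item over itself plus `num_sampled` sampled negatives.

    Simplified: negatives are drawn uniformly without replacement from the other
    items, and no log-probability correction is applied.
    """
    candidates = [i for i in item_table if i != pos_id]
    neg_ids = rng.sample(candidates, num_sampled)
    logits = [dot(user_vec, item_table[pos_id])]
    logits += [dot(user_vec, item_table[i]) for i in neg_ids]
    m = max(logits)  # shift by the max logit for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


items = {i: [float(i % 3), 1.0] for i in range(20)}
loss = sampled_softmax_loss([0.5, -0.2], items, pos_id=4, num_sampled=5, rng=random.Random(0))
print(round(loss, 4))
```

If all item embeddings were identical, the loss would be log(num_sampled + 1): the model could not tell the target from the negatives.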

# 4. Generate user features for testing and full item features for retrieval
test_user_model_input = test_model_input
all_item_model_input = {"movie_id": item_profile['movie_id'].values}

# Sub-models that output the user embedding and the item embedding of the trained model
user_embedding_model = Model(inputs=model.user_input, outputs=model.user_embedding)
item_embedding_model = Model(inputs=model.item_input, outputs=model.item_embedding)

print(all_item_model_input)

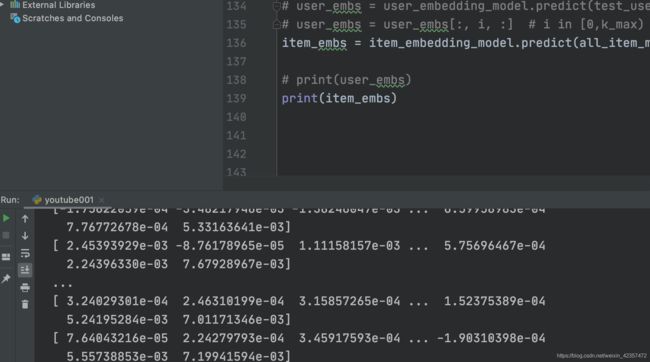

# user_embs = user_embedding_model.predict(test_user_model_input, batch_size=2 ** 12)
# user_embs = user_embs[:, i, :]  # i in [0, k_max) if MIND
item_embs = item_embedding_model.predict(all_item_model_input, batch_size=2 ** 12)

# print(user_embs)
print(item_embs)
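The code above stops once the item embeddings are computed; the user-embedding lines are commented out. Retrieval itself would then be a nearest-neighbour search: for each test user embedding, find the items whose embeddings have the largest inner product with it. This is the same score the sampled softmax was trained on. Rows of `item_embs` follow the order of `item_profile['movie_id'].values`, so a row index maps back to a movie id.

Below is a brute-force sketch in plain Python. `top_k_items` is a hypothetical helper. For a real catalogue, an approximate-nearest-neighbour library such as Faiss is the usual choice.

```python
import heapq


def top_k_items(user_emb, item_embs, item_ids, k):
    """Return the ids of the k items with the largest inner product with user_emb."""
    scored = ((sum(u * v for u, v in zip(user_emb, emb)), iid)
              for emb, iid in zip(item_embs, item_ids))
    return [iid for _, iid in heapq.nlargest(k, scored)]


toy_item_embs = [[0.9, 0.0], [0.1, 0.0], [0.5, 0.0]]
print(top_k_items([1.0, 0.0], toy_item_embs, item_ids=[7, 8, 9], k=2))  # [7, 9]
```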

2. DeepFM ranking algorithm

Deep ranking algorithms: Wide&Deep, DCN, AFM, NFM, FNN, PNN, DIN, DIEN, etc.

import pandas as pd
from sklearn.metrics import log_loss, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder, MinMaxScaler
from deepctr.models import DeepFM
from deepctr.inputs import SparseFeat, DenseFeat, get_feature_names

data = pd.read_csv('/Users/lonng/Desktop/推荐学习/deep_rec/criteo_sample.txt')

sparse_features = ['C' + str(i) for i in range(1, 27)]  # 26 categorical fields C1..C26
dense_features = ['I' + str(i) for i in range(1, 14)]   # 13 numeric fields I1..I13

# Fill missing values: '-1' for categorical fields, 0 for numeric fields
data[sparse_features] = data[sparse_features].fillna('-1')
data[dense_features] = data[dense_features].fillna(0)
target = ['label']

# 1. Label-encode the sparse features and apply a simple transformation (min-max scaling) to the dense features
for feat in sparse_features:
    lbe = LabelEncoder()
    data[feat] = lbe.fit_transform(data[feat])

mms = MinMaxScaler(feature_range=(0, 1))
data[dense_features] = mms.fit_transform(data[dense_features])
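`MinMaxScaler(feature_range=(0, 1))` rescales each dense column independently as (x - min) / (max - min). This happens after missing values have been filled with 0.

Below is a stdlib sketch for a single column, using the hypothetical helper name `min_max_scale`. Like scikit-learn, it avoids dividing by zero when a column is constant and maps such a column to zeros.

```python
def min_max_scale(column):
    """Rescale values to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    span = (hi - lo) or 1  # avoid division by zero for constant columns
    return [(x - lo) / span for x in column]


print(min_max_scale([0, 5, 10]))  # [0.0, 0.5, 1.0]
```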

# 2. Count the unique values of each sparse field and record the dense feature names
fixlen_feature_columns = ([SparseFeat(feat, vocabulary_size=data[feat].nunique(), embedding_dim=4)
                           for feat in sparse_features]
                          + [DenseFeat(feat, 1) for feat in dense_features])

# The linear part and the deep part of the model use the same feature columns here
dnn_feature_columns = fixlen_feature_columns
linear_feature_columns = fixlen_feature_columns

feature_names = get_feature_names(linear_feature_columns + dnn_feature_columns)

# 3. Generate input data for the model
train, test = train_test_split(data, test_size=0.2)
train_model_input = {name: train[name] for name in feature_names}
test_model_input = {name: test[name] for name in feature_names}
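DeepFM sums three parts built on the same features:

- a linear (first-order) term;
- an FM (second-order) term over the field embeddings;
- a DNN.

The FM term adds up the inner products of all pairs of field embeddings. It can be computed in linear time with the identity `sum_{i<j} <v_i, v_j> = 0.5 * sum_f [(sum_i v_if)^2 - sum_i v_if^2]`.

Below is a plain-Python sketch that checks the identity against the naive pairwise sum. The helper names are hypothetical; DeepCTR's FM layer works on batched tensors.

```python
from itertools import combinations


def fm_pairwise_naive(vecs):
    """Sum of <v_i, v_j> over all field pairs i < j (O(n^2 * k))."""
    return sum(sum(a * b for a, b in zip(u, v)) for u, v in combinations(vecs, 2))


def fm_pairwise_fast(vecs):
    """Same quantity via 0.5 * sum_f [(sum_i v_if)^2 - sum_i v_if^2] (O(n * k))."""
    total = 0.0
    for f in range(len(vecs[0])):
        s = sum(v[f] for v in vecs)
        sq = sum(v[f] ** 2 for v in vecs)
        total += s * s - sq
    return 0.5 * total


field_embs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # one embedding per field
print(fm_pairwise_naive(field_embs), fm_pairwise_fast(field_embs))  # 67.0 67.0
```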

# 4. Define the model, train, predict and evaluate
model = DeepFM(linear_feature_columns, dnn_feature_columns, task='binary')
model.compile("adam", "binary_crossentropy",
              metrics=['accuracy'])

history = model.fit(train_model_input, train[target].values,
                    batch_size=256, epochs=10, verbose=2, validation_split=0.2)
pred_ans = model.predict(test_model_input, batch_size=256)

print("test LogLoss", round(log_loss(test[target].values, pred_ans), 4))
print("test AUC", round(roc_auc_score(test[target].values, pred_ans), 4))
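Computing the two reported metrics by hand shows what they measure:

- **Log loss** is the average negative log-likelihood of the labels under the predicted click probabilities.
- **AUC** is the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting half.

Below is a stdlib sketch. The names `binary_log_loss` and `pairwise_auc` are hypothetical, chosen to avoid clashing with the sklearn imports. The clipping epsilon is an assumption. The pairwise AUC is O(P·N), so it only suits small samples.

```python
import math


def binary_log_loss(y_true, y_pred, eps=1e-15):
    """Mean negative log-likelihood of 0/1 labels under predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


def pairwise_auc(y_true, y_pred):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [p for y, p in zip(y_true, y_pred) if y == 1]
    neg = [p for y, p in zip(y_true, y_pred) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


print(round(binary_log_loss([1, 0], [0.5, 0.5]), 4))     # 0.6931
print(pairwise_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```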

from deepctr.models import xDeepFM

# 4. xDeepFM: define, train, predict and evaluate on the same inputs
model = xDeepFM(linear_feature_columns, dnn_feature_columns, task='binary')
model.compile("adam", "binary_crossentropy",
              metrics=['binary_crossentropy'])

history = model.fit(train_model_input, train[target].values,
                    batch_size=256, epochs=10, verbose=2, validation_split=0.2)
pred_ans = model.predict(test_model_input, batch_size=256)

print("test LogLoss", round(log_loss(test[target].values, pred_ans), 4))
print("test AUC", round(roc_auc_score(test[target].values, pred_ans), 4))
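xDeepFM adds a Compressed Interaction Network (CIN) for explicit vector-wise feature interactions. Each CIN layer builds its h-th feature map as a weighted sum of element-wise products. Every row of the previous layer is multiplied with every field embedding: `X^k_h = sum_{i,j} W^{k,h}_{ij} (X^{k-1}_i * X^0_j)`. The maps are then sum-pooled over the embedding dimension before the output layer.

Below is a plain-Python sketch of one layer. The names are hypothetical and the shapes are given in the docstring.

```python
def cin_layer(x_prev, x0, weights):
    """One CIN layer.

    x_prev:  H_prev rows of length D (previous layer's output; x0 itself for the first layer)
    x0:      m rows of length D (the field embeddings)
    weights: H_out x H_prev x m
    Row h of the result is sum_{i,j} weights[h][i][j] * (x_prev[i] * x0[j]) element-wise.
    """
    dim = len(x0[0])
    out = []
    for w_h in weights:
        row = [0.0] * dim
        for i, xi in enumerate(x_prev):
            for j, xj in enumerate(x0):
                for d in range(dim):
                    row[d] += w_h[i][j] * xi[d] * xj[d]
        out.append(row)
    return out


def sum_pool(feature_maps):
    """Sum each feature map over the embedding dimension."""
    return [sum(row) for row in feature_maps]


x0 = [[1.0, 2.0], [3.0, 4.0]]   # m = 2 fields, D = 2
w = [[[1.0, 1.0], [1.0, 1.0]]]  # H_out = 1, all weights 1
layer1 = cin_layer(x0, x0, w)
print(layer1, sum_pool(layer1))  # [[16.0, 36.0]] [52.0]
```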

from deepctr.models import DCN

# 4. DCN: define, train, predict and evaluate on the same inputs
model = DCN(linear_feature_columns, dnn_feature_columns, task='binary')
model.compile("adam", "binary_crossentropy",
              metrics=['binary_crossentropy'])

history = model.fit(train_model_input, train[target].values,
                    batch_size=256, epochs=10, verbose=2, validation_split=0.2)
pred_ans = model.predict(test_model_input, batch_size=256)

print("test LogLoss", round(log_loss(test[target].values, pred_ans), 4))
print("test AUC", round(roc_auc_score(test[target].values, pred_ans), 4))
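DCN's cross network learns explicit feature crosses with layers of the form `x_{l+1} = x_0 (x_l^T w_l) + b_l + x_l`. Here x_0 is the concatenated input vector and w_l, b_l are vectors. The residual x_l term keeps the lower-order terms, so stacking layers raises the highest interaction degree by one per layer.

Below is a minimal sketch of one layer in the original vector parameterization. The helper name is hypothetical.

```python
def cross_layer(x0, xl, w, b):
    """One DCN cross layer: x_{l+1} = x0 * (xl . w) + b + xl."""
    s = sum(a * c for a, c in zip(xl, w))  # scalar xl^T w
    return [x0_i * s + b_i + xl_i for x0_i, b_i, xl_i in zip(x0, b, xl)]


x0 = [1.0, 2.0]
x1 = cross_layer(x0, x0, w=[1.0, 1.0], b=[0.0, 0.0])
x2 = cross_layer(x0, x1, w=[0.5, 0.0], b=[0.5, 0.5])
print(x1, x2)  # [4.0, 8.0] [6.5, 12.5]
```

Each layer adds only about 2·d parameters for a d-dimensional input, which keeps the cross network cheap next to the DNN part.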