Kaggle Competition: Quora Question Pairs

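Contents

- Data Source
- Data Analysis
- Training Set
- Test Set
- Analysis of the Training Set
- Character Count
- Word Count
- Word Cloud
- Logistic Regression
- Feature Extraction
- Training
- ROC Evaluation
- Precision-Recall Curve Evaluation
- XGBoost
- Number of Words Shared by the Two Questions
- TF-IDF
- Word Statistics
- Computing TF-IDF
- Rebalancing the Data
- Splitting the Data
- XGBoost
- Another Approach
- Random Forest
- Bag-of-Words Model
- LSTM
- Decomposable attention
- Time-distributed CNN
- References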

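Data Source

Quora is a platform for asking questions and connecting with people who contribute unique insights and quality answers. This lets people learn from each other and understand the world better. More than 100 million people visit Quora every month, so it is no surprise that many of them ask similarly worded questions. Multiple questions with the same intent can make seekers spend more time finding the best answer, and make writers feel they have to answer several versions of the same question. Quora currently uses a Random Forest model to identify duplicate questions. In this competition, Kagglers are challenged to tackle this natural language processing problem by applying advanced techniques to classify whether a pair of questions is a duplicate.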

数据分析

训练集

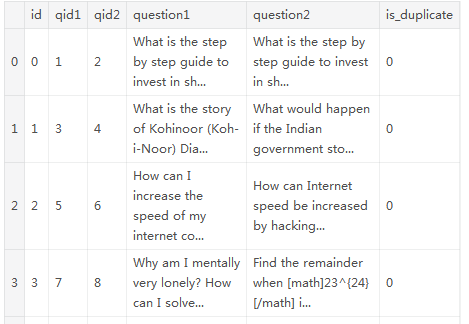

id:训练集中question pair的id

qid1, qid2:每个问题唯一的id

question1, question2:问题的具体内容

is_duplicate:目标变量, 1表示question1和question2有相同的意思,0表示不相同

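import numpy as np
import pandas as pd

df_train = pd.read_csv('./train.csv')
df_train.head()

print('Number of training samples: {}'.format(len(df_train)))
print('Share of duplicate pairs: {}%'.format(round(df_train['is_duplicate'].mean()*100, 2)))

# Stack qid1 and qid2 so that every question occurrence is counted
qids = pd.Series(df_train['qid1'].tolist() + df_train['qid2'].tolist())
print('Number of unique questions in the training set: {}'.format(len(np.unique(qids))))
print('Number of questions that appear more than once: {}'.format(np.sum(qids.value_counts() > 1)))

Number of training samples: 404290

Share of duplicate pairs: 36.92%

Number of unique questions in the training set: 537933

Number of questions that appear more than once: 111780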

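import matplotlib.pyplot as plt

plt.figure(figsize=(12, 5))
plt.hist(qids.value_counts(), bins=50)
# Matplotlib 3.3+ uses `nonpositive`; older releases called this argument `nonposy`
plt.yscale('log', nonpositive='clip')
plt.title('Log-Histogram of question appearance counts')
plt.xlabel('Number of occurrences of question')
plt.ylabel('Number of questions')

The plot shows that most questions appear only a few times and very few appear many times. One question appears more than 160 times, which makes it an outlier.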
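The counting behind this histogram can be reproduced on a small scale. The following is a minimal sketch with a made-up list of question ids (`toy_qids` is hypothetical and not taken from the dataset); it derives the number of distinct questions, the number of repeated questions, and the histogram data.

```python
from collections import Counter

# Hypothetical question ids standing in for qid1 + qid2 of a few pairs.
toy_qids = [1, 2, 3, 4, 1, 5, 1, 6, 2, 7]

# How many times each question id appears across all pairs.
appearances = Counter(toy_qids)

# Number of distinct questions, and how many of them appear more than once.
n_unique = len(appearances)
n_repeated = sum(1 for count in appearances.values() if count > 1)

# Histogram data: appearance count -> number of questions with that count.
count_histogram = Counter(appearances.values())

print(n_unique, n_repeated, dict(count_histogram))
```

On the real data, `qids.value_counts()` plays the role of `appearances`.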

Test Set

df_test = pd.read_csv('./test.csv')
df_test.head()
print('Number of test samples: {}'.format(len(df_test)))

Number of test samples: 3563475

Analysis of the Training Set

Character Count

train_qs = pd.Series(df_train['question1'].tolist() + df_train['question2'].tolist()).astype(str)

test_qs = pd.Series(df_test['question1'].tolist() + df_test['question2'].tolist()).astype(str)

print(train_qs[0])

print(train_qs.shape)

dist_train = train_qs.apply(len) # get all the length of qs

dist_test = test_qs.apply(len)

print(dist_train[0])

What is the step by step guide to invest in share market in india?

(808580,)

66

import seaborn as sns
pal = sns.color_palette()  # palette used for the histogram colours

plt.figure(figsize=(15, 10))
# `density=True` replaces the `normed=True` argument removed from recent Matplotlib releases
plt.hist(dist_train, bins=200, range=[0, 200], color=pal[2], density=True, label='train')
plt.hist(dist_test, bins=200, range=[0, 200], color=pal[1], density=True, alpha=0.5, label='test')
plt.title('Normalised histogram of character count in questions', fontsize=15)
plt.legend()
plt.xlabel('Number of characters', fontsize=15)
plt.ylabel('Probability', fontsize=15)

print('mean-train {:.2f} std-train {:.2f} mean-test {:.2f} std-test {:.2f} max-train {:.2f} max-test {:.2f}'.format(
    dist_train.mean(), dist_train.std(), dist_test.mean(), dist_test.std(), dist_train.max(), dist_test.max()))

mean-train 59.82 std-train 31.96

mean-test 60.07 std-test 31.63

max-train 1169.00 max-test 1176.00

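Most questions have between 15 and 150 characters. The character-count distribution of the test set differs somewhat from that of the training set. Every question is shorter than 1,200 characters, and questions longer than 200 characters are already rare.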
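As a small illustration of these length statistics, the sketch below computes character counts and the share of long questions for three sample strings. Only the first string comes from the output above; the other two, and the threshold of 50 characters, are made up for the example.

```python
from statistics import mean

toy_questions = [
    "What is the step by step guide to invest in share market in india?",
    "How do I learn Python?",   # hypothetical question
    "Why is the sky blue?",     # hypothetical question
]

# Character count of every question, as in train_qs.apply(len).
lengths = [len(q) for q in toy_questions]

# Share of questions longer than an illustrative threshold.
threshold = 50
share_long = sum(1 for n in lengths if n > threshold) / len(lengths)

print(lengths, mean(lengths), share_long)
```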

Word Count

dist_train = train_qs.apply(lambda x: len(x.split(' ')))
dist_test = test_qs.apply(lambda x: len(x.split(' ')))

plt.figure(figsize=(15, 10))
# `density=True` replaces the `normed=True` argument removed from recent Matplotlib releases
plt.hist(dist_train, bins=50, range=[0, 50], color=pal[2], density=True, label='train')
plt.hist(dist_test, bins=50, range=[0, 50], color=pal[1], density=True, alpha=0.5, label='test')
plt.title('Normalised histogram of word count in questions', fontsize=15)
plt.legend()
plt.xlabel('Number of words', fontsize=15)
plt.ylabel('Probability', fontsize=15)

print('mean-train {:.2f} std-train {:.2f} mean-test {:.2f} std-test {:.2f} max-train {:.2f} max-test {:.2f}'.format(
    dist_train.mean(), dist_train.std(), dist_test.mean(), dist_test.std(), dist_train.max(), dist_test.max()))

mean-train 11.06 std-train 5.89

mean-test 11.02 std-test 5.84

max-train 237.00 max-test 238.00

Word Cloud

The word cloud shows the words that occur most frequently.

from wordcloud import WordCloud

cloud = WordCloud(width=1440, height=1080).generate(" ".join(train_qs.astype(str)))

plt.figure(figsize=(20, 15))

plt.imshow(cloud)

plt.axis('off')

Logistic Regression

- 1 Import the data

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from subprocess import check_output

%matplotlib inline

import plotly.offline as py

py.init_notebook_mode(connected=True)

import plotly.graph_objs as go

import plotly.tools as tls

df = pd.read_csv("./train.csv").fillna("")

df.head()

- 2 Process the data

df['q1len'] = df['question1'].str.len()

df['q2len'] = df['question2'].str.len()

df['q1_n_words'] = df['question1'].apply(lambda row: len(row.split(" ")))

df['q2_n_words'] = df['question2'].apply(lambda row: len(row.split(" ")))

def normalized_word_share(row):
    # Distinct lower-cased words of each question
    w1 = set(map(lambda word: word.lower().strip(), row['question1'].split(" ")))
    w2 = set(map(lambda word: word.lower().strip(), row['question2'].split(" ")))
    # Shared words divided by the sum of both set sizes
    return 1.0 * len(w1 & w2)/(len(w1) + len(w2))

df['word_share'] = df.apply(normalized_word_share, axis=1)

df.head()

plt.figure(figsize=(12, 8))

plt.subplot(1,2,1)

sns.violinplot(x = 'is_duplicate', y = 'word_share', data = df[0:50000])

plt.subplot(1,2,2)

sns.distplot(df[df['is_duplicate'] == 1.0]['word_share'][0:10000], color = 'green')

sns.distplot(df[df['is_duplicate'] == 0.0]['word_share'][0:10000], color = 'red')
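To make the `word_share` feature concrete, here is a minimal sketch that applies the same formula to two plain strings instead of a DataFrame row. The two questions are made up for illustration.

```python
def normalized_word_share(question1, question2):
    """Shared distinct words divided by the sum of both distinct-word counts."""
    w1 = {word.lower().strip() for word in question1.split(" ")}
    w2 = {word.lower().strip() for word in question2.split(" ")}
    return len(w1 & w2) / (len(w1) + len(w2))

q1 = "How do I learn Python"   # hypothetical question
q2 = "How can I learn Java"    # hypothetical question

# Shared words: how, i, learn -> 3 / (5 + 5)
share = normalized_word_share(q1, q2)
identical = normalized_word_share(q1, q1)
print(share, identical)
```

Because the denominator adds both set sizes, two identical questions score 0.5 rather than 1, so the feature ranges from 0 to 0.5.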

Feature Extraction

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import precision_recall_curve, auc, roc_curve

from sklearn.model_selection import GridSearchCV, train_test_split

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler().fit(df[['q1len', 'q2len', 'q1_n_words', 'q2_n_words', 'word_share']])

X = scaler.transform(df[['q1len', 'q2len', 'q1_n_words', 'q2_n_words', 'word_share']])

y = df['is_duplicate']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)

X_train.shape, X_test.shape, y_train.shape, y_test.shape

Training

# liblinear supports both the l1 and the l2 penalty; the default solver
# in recent scikit-learn releases (lbfgs) rejects penalty='l1'
clf = LogisticRegression(solver='liblinear')
grid = {
    'C': [1e-6, 1e-3, 1e0],
    'penalty': ['l1', 'l2']
}
cv = GridSearchCV(clf, grid, scoring='neg_log_loss', n_jobs=-1, verbose=1)
cv.fit(X_train, y_train)

for i in range(1, len(cv.cv_results_['params'])+1):
    rank = cv.cv_results_['rank_test_score'][i-1]
    s = cv.cv_results_['mean_test_score'][i-1]
    sd = cv.cv_results_['std_test_score'][i-1]
    params = cv.cv_results_['params'][i-1]
    print("{0}. Mean validation neg log loss: {1:.3f} (std: {2:.3f}) - {3}".format(
        rank,
        s,
        sd,
        params
    ))

Output

6. Mean validation neg log loss: -0.693 (std: 0.000) - {'C': 1e-06, 'penalty': 'l1'}

5. Mean validation neg log loss: -0.690 (std: 0.000) - {'C': 1e-06, 'penalty': 'l2'}

3. Mean validation neg log loss: -0.582 (std: 0.001) - {'C': 0.001, 'penalty': 'l1'}

4. Mean validation neg log loss: -0.586 (std: 0.001) - {'C': 0.001, 'penalty': 'l2'}

1. Mean validation neg log loss: -0.568 (std: 0.001) - {'C': 1.0, 'penalty': 'l1'}

2. Mean validation neg log loss: -0.569 (std: 0.001) - {'C': 1.0, 'penalty': 'l2'}

print(cv.best_params_)
print(cv.best_estimator_.coef_)

{'C': 1.0, 'penalty': 'l1'}

[[-13.54349298 4.32508628 10.35869457 -13.07001066 3.29722094]]
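The score of -0.693 for `C = 1e-06` with the l1 penalty has a simple reading: it is the log loss of a model that always predicts 0.5, since ln 2 ≈ 0.693. The sketch below works through that arithmetic and compares it with always predicting the base rate. The helper `constant_log_loss` is a hypothetical name introduced for this example, and 0.3692 is the duplicate share reported for the full training set, used here as an approximation of the share in each fold.

```python
import math

def constant_log_loss(p_pred, positive_rate):
    """Log loss of always predicting probability p_pred for the positive class."""
    return -(positive_rate * math.log(p_pred)
             + (1 - positive_rate) * math.log(1 - p_pred))

positive_rate = 0.3692  # share of duplicates reported for the training set

# A fully regularised model outputs 0.5 for every pair.
loss_half = constant_log_loss(0.5, positive_rate)

# Always predicting the base rate is the best constant prediction.
loss_prior = constant_log_loss(positive_rate, positive_rate)

print(round(loss_half, 3), round(loss_prior, 3))
```

Any useful model should beat the second number, which is what the fits with `C = 0.001` and `C = 1.0` do.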

ROC Evaluation

colors = ['r', 'g', 'b', 'y', 'k', 'c', 'm', 'brown', 'r']

lw = 1

Cs = [1e-6, 1e-4, 1e0]

plt.figure(figsize=(12,8))

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('ROC Curve for different classifiers')

plt.plot([0, 1], [0, 1], color='navy', lw=lw, linestyle='--')

labels = []

for idx, C in enumerate(Cs):
    clf = LogisticRegression(C = C)
    clf.fit(X_train, y_train)
    print("C: {}, parameters {} and intercept {}".format(C, clf.coef_, clf.intercept_))
    # ROC curve from the predicted probability of the positive class
    fpr, tpr, _ = roc_curve(y_test, clf.predict_proba(X_test)[:,1])
    roc_auc = auc(fpr, tpr)
    plt.plot(fpr, tpr, lw=lw, color=colors[idx])
    labels.append("C: {}, AUC = {}".format(C, np.round(roc_auc, 4)))

plt.legend(['random AUC = 0.5'] + labels)

Output

C: 1e-06, parameters [[-0.00419999 -0.00232428 -0.00354653 -0.00199889 -0.0018606 ]] and intercept [-0.03324753]

C: 0.0001, parameters [[-0.15977647 -0.09050407 -0.13253665 -0.08000122 0.68634253]] and intercept [-0.70425466]

C: 1.0, parameters [[-10.24038938 -0.91761175 6.78291511 -7.1645702 3.29874314]] and intercept [-1.35659649]
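The AUC reported in the legend equals the probability that a randomly chosen duplicate pair receives a higher score than a randomly chosen non-duplicate pair. The sketch below computes it directly from that definition on four made-up predictions; `roc_auc` is a hypothetical helper, not a scikit-learn function, and its quadratic loop is only suitable for small inputs.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

labels = [0, 0, 1, 1]            # hypothetical ground truth
scores = [0.1, 0.4, 0.35, 0.8]   # hypothetical predicted probabilities

# Three of the four (positive, negative) pairs are ordered correctly.
auc_value = roc_auc(labels, scores)
print(auc_value)
```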

Precision-Recall Curve Evaluation

pr, re, _ = precision_recall_curve(y_test, cv.best_estimator_.predict_proba(X_test)[:,1])

plt.figure(figsize=(12,8))

plt.plot(re, pr)

plt.title('PR Curve (AUC {})'.format(auc(re, pr)))

plt.xlabel('Recall')

plt.ylabel('Precision')

XGBoost

Number of Words Shared by the Two Questions

from nltk.corpus import stopwords

stops = set(stopwords.words("english"))

def word_match_share(row):
    q1words = {}
    q2words = {}
    # Drop stopwords
    for word in str(row['question1']).lower().split():
        if word not in stops:
            q1words[word] = 1
    for word in str(row['question2']).lower().split():
        if word not in stops:
            q2words[word] = 1
    if len(q1words) == 0 or len(q2words) == 0:
        # The computer-generated chaff includes a few questions that are nothing but stopwords
        return 0
    # Words of each question that also occur in the other question
    shared_words_in_q1 = [w for w in q1words.keys() if w in q2words]
    shared_words_in_q2 = [w for w in q2words.keys() if w in q1words]
    # Shared words / all words
    R = (len(shared_words_in_q1) + len(shared_words_in_q2))/(len(q1words) + len(q2words))
    return R

plt.figure(figsize=(15, 5))
# raw=True is dropped: it passes NumPy arrays, which cannot be indexed by column name
train_word_match = df_train.apply(word_match_share, axis=1)
# `density=True` replaces the `normed=True` argument removed from recent Matplotlib releases
plt.hist(train_word_match[df_train['is_duplicate'] == 0], bins=20, density=True, label='Not Duplicate')
plt.hist(train_word_match[df_train['is_duplicate'] == 1], bins=20, density=True, alpha=0.7, label='Duplicate')
plt.legend()
plt.title('Label distribution over word_match_share', fontsize=15)
plt.xlabel('word_match_share', fontsize=15)
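A worked example may help to read the feature. The sketch below reimplements `word_match_share` for two plain strings and a tiny stand-in stopword set (`STOPS` is an assumption made for the example; the article uses NLTK's English list). Since the shared words are counted once per question, the feature equals twice the number of shared words divided by the total number of non-stopwords.

```python
# A tiny stand-in stopword list (assumption); the article uses NLTK's English list.
STOPS = {"the", "is", "a", "of", "in", "what", "how", "do", "i", "to"}

def word_match_share(question1, question2, stops=STOPS):
    """Share of non-stopwords that the two questions have in common."""
    q1words = {w for w in question1.lower().split() if w not in stops}
    q2words = {w for w in question2.lower().split() if w not in stops}
    if not q1words or not q2words:
        return 0.0
    shared = q1words & q2words
    # Each shared word is counted once per question, hence the factor 2.
    return 2 * len(shared) / (len(q1words) + len(q2words))

# q1 keeps {capital, france}, q2 keeps {capital, city, france} -> 2 * 2 / (2 + 3)
share = word_match_share("What is the capital of France",
                         "What is the capital city of France")
only_stopwords = word_match_share("What is the", "How do I")
print(share, only_stopwords)
```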

TF-IDF

Word Statistics
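The code in this section relies on a `weights` dictionary whose definition does not appear in this article. One definition that is consistent with the printed values is an inverse smoothed word count: the largest weight shown, 9.998000399920016e-05, equals 1/(2 + 10000). The following is a sketch under that assumption; the function name `get_weight` and the parameters `eps=10000` and `min_count=2` are inferred from the output and are not taken from the text, and the three sample questions are made up.

```python
from collections import Counter

def get_weight(count, eps=10000, min_count=2):
    """Inverse smoothed count; words seen fewer than min_count times get weight 0."""
    if count < min_count:
        return 0.0
    return 1.0 / (count + eps)

# Hypothetical mini-corpus; in the article this would be the questions in train_qs.
toy_questions = ["what is the time", "what is the date", "the time is now"]

words = " ".join(toy_questions).lower().split()
counts = Counter(words)

# Frequent words get small weights, rare words get larger ones,
# and words seen only once are ignored (weight 0).
weights = {word: get_weight(count) for word, count in counts.items()}
print(weights)
```

With this definition a very frequent word such as "the" receives a tiny weight, which matches the ordering of the lists printed in this section.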

print('Most common words and weights:')
print(sorted(weights.items(), key=lambda x: x[1] if x[1] > 0 else 9999)[:10])
print('Least common words and weights: ')
print(sorted(weights.items(), key=lambda x: x[1], reverse=True)[:10])

Most common words and weights:

[('the', 2.5891040146646852e-06), ('what', 3.115623919267953e-06), ('is', 3.5861702928825277e-06), ('how', 4.366449945201053e-06), ('i', 4.4805878531263305e-06), ('a', 4.540645588989843e-06), ('to', 4.671434644293609e-06), ('in', 4.884625153865692e-06), ('of', 5.920242493132519e-06), ('do', 6.070908207867897e-06)]

Least common words and weights:

[('シ', 9.998000399920016e-05), ('し?', 9.998000399920016e-05), ('19-year-old.', 9.998000399920016e-05), ('1-855-425-3768', 9.998000399920016e-05), ('confederates', 9.998000399920016e-05), ('asahi', 9.998000399920016e-05), ('fab', 9.998000399920016e-05), ('109?', 9.998000399920016e-05), ('samrudi', 9.998000399920016e-05), ('fulfill?', 9.998000399920016e-05)]

Computing TF-IDF

def tfidf_word_match_share(row):
    q1words = {}
    q2words = {}
    for word in str(row['question1']).lower().split():
        if word not in stops:
            q1words[word] = 1
    for word in str(row['question2']).lower().split():
        if word not in stops:
            q2words[word] = 1
    if len(q1words) == 0 or len(q2words) == 0:
        # The computer-generated chaff includes a few questions that are nothing but stopwords
        return 0
    # Same ratio as word_match_share, but every word contributes its weight instead of 1
    shared_weights = [weights.get(w, 0) for w in q1words.keys() if w in q2words] + [weights.get(w, 0) for w in q2words.keys() if w in q1words]
    total_weights = [weights.get(w, 0) for w in q1words] + [weights.get(w, 0) for w in q2words]
    R = np.sum(shared_weights) / np.sum(total_weights)
    return R

plt.figure(figsize=(15, 5))
# raw=True is dropped: it passes NumPy arrays, which cannot be indexed by column name
tfidf_train_word_match = df_train.apply(tfidf_word_match_share, axis=1)
# `density=True` replaces the `normed=True` argument removed from recent Matplotlib releases
plt.hist(tfidf_train_word_match[df_train['is_duplicate'] == 0].fillna(0), bins=20, density=True, label='Not Duplicate')
plt.hist(tfidf_train_word_match[df_train['is_duplicate'] == 1].fillna(0), bins=20, density=True, alpha=0.7, label='Duplicate')
plt.legend()
plt.title('Label distribution over tfidf_word_match_share', fontsize=15)
plt.xlabel('word_match_share', fontsize=15)

# roc_auc_score(y_true, y_score, average='macro', sample_weight=None, max_fpr=None)
from sklearn.metrics import roc_auc_score
print('Original AUC:', roc_auc_score(df_train['is_duplicate'], train_word_match))
print(' TFIDF AUC:', roc_auc_score(df_train['is_duplicate'], tfidf_train_word_match.fillna(0)))

Original AUC: 0.7804327049353577

TFIDF AUC: 0.7704802292218704

Taken on its own, the TF-IDF feature performs slightly worse than the plain word-match share (AUC 0.770 versus 0.780).

Rebalancing the Data

# First we create our training and testing data
x_train = pd.DataFrame()
x_test = pd.DataFrame()
x_train['word_match'] = train_word_match
x_train['tfidf_word_match'] = tfidf_train_word_match
# raw=True is dropped: it passes NumPy arrays, which cannot be indexed by column name
x_test['word_match'] = df_test.apply(word_match_share, axis=1)
x_test['tfidf_word_match'] = df_test.apply(tfidf_word_match_share, axis=1)

y_train = df_train['is_duplicate'].values

pos_train = x_train[y_train == 1]
neg_train = x_train[y_train == 0]

# Oversample the negative class
# There is likely a much more elegant way to do this...
p = 0.165
scale = ((len(pos_train) / (len(pos_train) + len(neg_train))) / p) - 1
while scale > 1:
    neg_train = pd.concat([neg_train, neg_train])
    scale -=1
neg_train = pd.concat([neg_train, neg_train[:int(scale * len(neg_train))]])
print(len(pos_train) / (len(pos_train) + len(neg_train)))

x_train = pd.concat([pos_train, neg_train])
y_train = (np.zeros(len(pos_train)) + 1).tolist() + np.zeros(len(neg_train)).tolist()
del pos_train, neg_train
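The effect of the duplication loop is easier to follow on counts alone. The sketch below replays the same arithmetic without any DataFrame; `oversampled_positive_share` is a hypothetical helper, and the counts 3692 and 6308 are made up to mirror the 36.92% duplicate share reported earlier.

```python
def oversampled_positive_share(n_pos, n_neg, p):
    """Replay the duplication loop on counts only and return the resulting positive share."""
    scale = (n_pos / (n_pos + n_neg)) / p - 1
    while scale > 1:
        n_neg *= 2               # pd.concat([neg_train, neg_train]) doubles the negatives
        scale -= 1
    n_neg += int(scale * n_neg)  # append a fractional extra copy of the negatives
    return n_pos / (n_pos + n_neg)

# Hypothetical counts with a 36.92% positive share.
share = oversampled_positive_share(n_pos=3692, n_neg=6308, p=0.165)
print(round(share, 4))
```

On these counts the loop ends at a positive share of roughly 0.19 rather than exactly `p`, which is why the script prints the achieved ratio after resampling.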

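Splitting the Data

# Split the training data into a train part and a validation part
# (sklearn.cross_validation was removed; train_test_split now lives in sklearn.model_selection)
from sklearn.model_selection import train_test_split

x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.2, random_state=4242)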

XGBoost

import xgboost as xgb

# Set our parameters for xgboost

params = {}

params['objective'] = 'binary:logistic'

params['eval_metric'] = 'logloss'

params['eta'] = 0.02

params['max_depth'] = 4

d_train = xgb.DMatrix(x_train, label=y_train)

d_valid = xgb.DMatrix(x_valid, label=y_valid)

watchlist = [(d_train, 'train'), (d_valid, 'valid')]

bst = xgb.train(params, d_train, 400, watchlist, early_stopping_rounds=50, verbose_eval=10)

Saving the Predictions

d_test = xgb.DMatrix(x_test)

p_test = bst.predict(d_test)

sub = pd.DataFrame()

sub['test_id'] = df_test['test_id']

sub['is_duplicate'] = p_test

sub.to_csv('simple_xgb.csv', index=False)

Another Approach

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

from scipy.sparse import csr_matrix

train_data = pd.read_csv('../input/train.csv')

print (train_data.shape)

train_data.head()

test_data = pd.read_csv('../input/test.csv')

print (test_data.shape)

test_data.head()

# Process the data

train_data = train_data.drop(['id', 'qid1', 'qid2'], axis=1)

test_data = test_data.drop(['test_id'], axis=1)

train_data.isnull().sum()

train_data = train_data.fillna('empty question')

test_data.isnull().sum()

test_data = test_data.fillna('empty question')

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf = TfidfVectorizer(analyzer = 'word', stop_words = 'english', lowercase = True, norm = 'l1')

# Note: every fit_transform call below learns its own vocabulary, so a given
# column index refers to different words in each of the four matrices.
train_data_q1_tfidf = tfidf.fit_transform(train_data.question1.values)
train_data_q2_tfidf = tfidf.fit_transform(train_data.question2.values)
test_data_q1_tfidf = tfidf.fit_transform(test_data.question1.values)
test_data_q2_tfidf = tfidf.fit_transform(test_data.question2.values)

print (train_data_q1_tfidf.shape)

print (train_data_q2_tfidf.shape)

# (404290, 67533)

# (404290, 62375)

train_data_q1_tfidf = csr_matrix((train_data_q1_tfidf.data, train_data_q1_tfidf.indices, train_data_q1_tfidf.indptr), shape=(404290,90824))

train_data_q2_tfidf = csr_matrix((train_data_q2_tfidf.data, train_data_q2_tfidf.indices, train_data_q2_tfidf.indptr), shape=(404290,90824))

print (train_data_q1_tfidf.shape)

print (train_data_q2_tfidf.shape)

# (404290, 90824)

# (404290, 90824)

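# Pad both test matrices to the common width, mirroring the training matrices above
test_data_q1_tfidf = csr_matrix((test_data_q1_tfidf.data, test_data_q1_tfidf.indices, test_data_q1_tfidf.indptr), shape=(2345796,90824))

test_data_q2_tfidf = csr_matrix((test_data_q2_tfidf.data, test_data_q2_tfidf.indices, test_data_q2_tfidf.indptr), shape=(2345796,90824))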

print (test_data_q1_tfidf.shape)

print (test_data_q2_tfidf.shape)

# (2345796, 90824)

# (2345796, 90824)

X = abs(train_data_q1_tfidf-train_data_q2_tfidf)

y = train_data[['is_duplicate']]

X_test = abs(test_data_q1_tfidf-test_data_q2_tfidf)

from xgboost import XGBClassifier

xg_model = XGBClassifier()

xg_model.fit(X, y)

xg_pred = xg_model.predict(X_test)

xg_pred = pd.Series(xg_pred, name='is_duplicate')

submission = pd.concat([pd.Series(range(2345796), name='test_id'),xg_pred], axis = 1)

submission.to_csv('xg_tfidf_submission_file.csv', index=False)
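One caveat of this approach is that the vectorizer is fitted separately on each column of questions, so the columns of the resulting matrices are not aligned and the element-wise difference compares unrelated words. The sketch below illustrates the effect with a hand-written vocabulary builder; `fit_vocabulary` and the sample texts are hypothetical and only stand in for what a vectorizer does when it is fitted.

```python
def fit_vocabulary(texts):
    """Map each distinct word to a column index (sorted here to keep the example deterministic)."""
    words = sorted({w for text in texts for w in text.lower().split()})
    return {w: i for i, w in enumerate(words)}

q1_texts = ["apple banana", "cherry apple"]   # hypothetical question1 column
q2_texts = ["banana date", "apple date"]      # hypothetical question2 column

# Fitting separately gives each side its own column numbering.
vocab_q1 = fit_vocabulary(q1_texts)
vocab_q2 = fit_vocabulary(q2_texts)

# Fitting once on everything gives one numbering shared by both sides.
vocab_shared = fit_vocabulary(q1_texts + q2_texts)

# The same column index points at different words in the two separate vocabularies.
col = 2
word_q1 = [w for w, i in vocab_q1.items() if i == col][0]
word_q2 = [w for w, i in vocab_q2.items() if i == col][0]
print(word_q1, word_q2, vocab_shared)
```

With scikit-learn, the corresponding change would be to call `tfidf.fit` once on all questions and then `tfidf.transform` on each column, which also makes the manual `csr_matrix` reshaping unnecessary.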

reference:

https://www.kaggle.com/ananthreddy/only-tf-idf-vectors

Random Forest

import numpy as np

import pandas as pd

import os

from sklearn.model_selection import train_test_split

def read_data():
    df = pd.read_csv("./train.csv")
    print ("Shape of base training File = ", df.shape)
    # Remove missing values and duplicates from training data
    df.drop_duplicates(inplace=True)
    df.dropna(inplace=True)
    print("Shape of base training data after cleaning = ", df.shape)
    return df

df = read_data()

df_train, df_test = train_test_split(df, test_size = 0.02)

print ("\n\n", df_train.head(10))

print ("\nTrain Shape : ", df_train.shape)

print ("Test Shape : ", df_test.shape)

# Shape of base training File = (404290, 6)

#Shape of base training data after cleaning = (404287, 6)

# Train Shape : (396201, 6)

# Test Shape : (8086, 6)

Bag-of-Words Model

import re

import gensim

from gensim import corpora

from nltk.corpus import stopwords

from nltk.stem.porter import *

words = re.compile(r"\w+",re.I)

stopword = stopwords.words('english')

stemmer = PorterStemmer()

# Cleaning and tokenizing the queries.
def tokenize_questions(df):
    question_1_tokenized = []
    question_2_tokenized = []

    for q in df.question1.tolist():
        question_1_tokenized.append([stemmer.stem(i.lower()) for i in words.findall(q)
                                     if i not in stopword])

    for q in df.question2.tolist():
        question_2_tokenized.append([stemmer.stem(i.lower()) for i in words.findall(q)
                                     if i not in stopword])

    df["Question_1_tok"] = question_1_tokenized
    df["Question_2_tok"] = question_2_tokenized
    return df

df_train = tokenize_questions(df_train)

df_test = tokenize_questions(df_test)

Dictionary

def train_dictionary(df):
    questions_tokenized = df.Question_1_tok.tolist() + df.Question_2_tok.tolist()
    dictionary = corpora.Dictionary(questions_tokenized)
    # Drop tokens that occur in fewer than 5 questions
    dictionary.filter_extremes(no_below=5)
    dictionary.compactify()
    return dictionary

dictionary = train_dictionary(df_train)

print ("No of words in the dictionary = %s" %len(dictionary.token2id))

def get_vectors(df, dictionary):
    # Bag-of-words vectors as (token_id, count) pairs
    question1_vec = [dictionary.doc2bow(text) for text in df.Question_1_tok.tolist()]
    question2_vec = [dictionary.doc2bow(text) for text in df.Question_2_tok.tolist()]

    # Sparse term-by-document matrices, transposed to one row per question
    question1_csc = gensim.matutils.corpus2csc(question1_vec, num_terms=len(dictionary.token2id))
    question2_csc = gensim.matutils.corpus2csc(question2_vec, num_terms=len(dictionary.token2id))

    return question1_csc.transpose(),question2_csc.transpose()

q1_csc, q2_csc = get_vectors(df_train, dictionary)

print (q1_csc.shape)

print (q2_csc.shape)

# (396201, 21254)

# (396201, 21254)

q1_csc_test, q2_csc_test = get_vectors(df_test, dictionary)

Similarity Computation

'''

Similarity Measures:

Cosine Similarity

Manhattan Distance

Euclidean Distance

'''

from sklearn.metrics.pairwise import cosine_similarity as cs

from sklearn.metrics.pairwise import manhattan_distances as md

from sklearn.metrics.pairwise import euclidean_distances as ed

def get_similarity_values(q1_csc, q2_csc):
    cosine_sim = []
    manhattan_dis = []
    eucledian_dis = []

    # Compare the two questions of each pair row by row
    for i,j in zip(q1_csc, q2_csc):
        sim = cs(i,j)
        cosine_sim.append(sim[0][0])
        sim = md(i,j)
        manhattan_dis.append(sim[0][0])
        sim = ed(i,j)
        eucledian_dis.append(sim[0][0])

    return cosine_sim, manhattan_dis, eucledian_dis

cosine_sim, manhattan_dis, eucledian_dis = get_similarity_values(q1_csc, q2_csc)

y_pred_cos, y_pred_man, y_pred_euc = get_similarity_values(q1_csc_test, q2_csc_test)

print ("cosine_sim sample= \n", cosine_sim[0:5])

print ("\nmanhattan_dis sample = \n", manhattan_dis[0:5])

print ("\neucledian_dis sample = \n", eucledian_dis[0:5])

# cosine_sim sample= [0.5773502691896258, 0.3086066999241839, 0.3086066999241838, 0.40089186286863654, 0.2886751345948129]

# manhattan_dis sample = [6.0, 11.0, 18.0, 9.0, 10.0]

# eucledian_dis sample = [2.449489742783178, 3.605551275463989, 5.0990195135927845, 3.0, 3.1622776601683795]
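The three measures are simple to compute by hand for a single pair. The following is a minimal sketch in plain Python on two made-up count vectors over a four-word dictionary; the function names are hypothetical and the code does not call scikit-learn.

```python
import math

def cosine_similarity(u, v):
    """Dot product divided by the product of the vector norms (0.0 for a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def manhattan_distance(u, v):
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(u, v))

def euclidean_distance(u, v):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Hypothetical bag-of-words counts: the questions share two of their three words.
q1_vec = [1, 1, 1, 0]
q2_vec = [1, 1, 0, 1]

cos = cosine_similarity(q1_vec, q2_vec)
man = manhattan_distance(q1_vec, q2_vec)
euc = euclidean_distance(q1_vec, q2_vec)
print(cos, man, euc)
```

Cosine similarity grows with overlap, while the two distances shrink, so the classifier receives three differently scaled views of the same word overlap.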

Classification Models

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import LogisticRegression

xtrain = pd.DataFrame({"cosine" : cosine_sim, "manhattan" : manhattan_dis,

"eucledian" : eucledian_dis})

ytrain = df_train.is_duplicate

xtest = pd.DataFrame({"cosine" : y_pred_cos, "manhattan" : y_pred_man,

"eucledian" : y_pred_euc})

ytest = df_test.is_duplicate

rf = RandomForestClassifier()

rf.fit(xtrain, ytrain)

rf_predicted = rf.predict(xtest)

logist = LogisticRegression(random_state=0)

logist.fit(xtrain, ytrain)

logist_predicted = logist.predict(xtest)

from sklearn.metrics import log_loss

def calculate_logloss(y_true, y_pred):
    loss_cal = log_loss(y_true, y_pred)
    return loss_cal

logloss_rf = calculate_logloss(ytest, rf_predicted)

log_loss_logist = calculate_logloss(ytest, logist_predicted)

print ("Log loss value using Random Forest is = %f" %logloss_rf)

print ("Log loss value using Logistic Regression is = %f" %log_loss_logist)

from sklearn.metrics import accuracy_score

test_acc_rf = accuracy_score(ytest, rf_predicted) * 100

test_acc_logist = accuracy_score(ytest, logist_predicted) * 100

print ("Accuracy of Random Forest Model : ", test_acc_rf)

print ("Accuracy of Logistic Regression Model : ", test_acc_logist)

Log loss value using Random Forest is = 11.067395

Log loss value using Logistic Regression is = 12.071184

Accuracy of Random Forest Model : 67.95696265149641

Accuracy of Logistic Regression Model : 65.05070492208756
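The log loss values above are far larger than the scores seen earlier because `rf.predict` and `logist.predict` return hard 0/1 labels rather than probabilities. When probabilities are clipped at 1e-15 (an assumption about the scikit-learn version used), every wrong hard prediction costs about 34.54, and an error rate of 32.04% times 34.54 gives roughly 11.07, in line with the Random Forest figure. The sketch below shows the difference on four made-up predictions; `log_loss` here is a small stand-in written for the example, not the scikit-learn function.

```python
import math

def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to [eps, 1 - eps]."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

y_true = [1, 0, 1, 0]                  # hypothetical labels
hard_labels = [1, 0, 0, 0]             # hard predictions, one wrong out of four
probabilities = [0.8, 0.2, 0.4, 0.1]   # soft predictions with the same ranking

loss_hard = log_loss(y_true, hard_labels)
loss_prob = log_loss(y_true, probabilities)
print(round(loss_hard, 3), round(loss_prob, 3))
```

For a comparable score, the models in this section would be evaluated on `predict_proba(xtest)[:, 1]` instead of `predict(xtest)`.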

LSTM

'''

Single model may achieve LB scores at around 0.29+ ~ 0.30+

Average ensembles can easily get 0.28+ or less

Don't need to be an expert of feature engineering

All you need is a GPU!!!!!!!

The code is tested on Keras 2.0.0 using Tensorflow backend, and Python 2.7

According to experiments by kagglers, Theano backend with GPU may give bad LB scores while

the val_loss seems to be fine, so try Tensorflow backend first please

'''

########################################

## import packages

########################################

import os

import re

import csv

import codecs

import numpy as np

import pandas as pd

from nltk.corpus import stopwords

from nltk.stem import SnowballStemmer

from string import punctuation

from gensim.models import KeyedVectors

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

from keras.layers import Dense, Input, LSTM, Embedding, Dropout, Activation

from keras.layers.merge import concatenate

from keras.models import Model

from keras.layers.normalization import BatchNormalization

from keras.callbacks import EarlyStopping, ModelCheckpoint

import sys

reload(sys)

sys.setdefaultencoding('utf-8')

########################################

## set directories and parameters

########################################

BASE_DIR = '../input/'

EMBEDDING_FILE = BASE_DIR + 'GoogleNews-vectors-negative300.bin'

TRAIN_DATA_FILE = BASE_DIR + 'train.csv'

TEST_DATA_FILE = BASE_DIR + 'test.csv'

MAX_SEQUENCE_LENGTH = 30

MAX_NB_WORDS = 200000

EMBEDDING_DIM = 300

VALIDATION_SPLIT = 0.1

num_lstm = np.random.randint(175, 275)

num_dense = np.random.randint(100, 150)

rate_drop_lstm = 0.15 + np.random.rand() * 0.25

rate_drop_dense = 0.15 + np.random.rand() * 0.25

act = 'relu'

re_weight = True # whether to re-weight classes to fit the 17.5% share in test set

STAMP = 'lstm_%d_%d_%.2f_%.2f'%(num_lstm, num_dense, rate_drop_lstm, \

rate_drop_dense)

########################################

## index word vectors

########################################

print('Indexing word vectors')

word2vec = KeyedVectors.load_word2vec_format(EMBEDDING_FILE, \

binary=True)

print('Found %s word vectors of word2vec' % len(word2vec.vocab))

########################################

## process texts in datasets

########################################

print('Processing text dataset')

# The function "text_to_wordlist" is from

# https://www.kaggle.com/currie32/quora-question-pairs/the-importance-of-cleaning-text

def text_to_wordlist(text, remove_stopwords=False, stem_words=False):
    # Clean the text, with the option to remove stopwords and to stem words.

    # Convert words to lower case and split them
    text = text.lower().split()

    # Optionally, remove stop words
    if remove_stopwords:
        stops = set(stopwords.words("english"))
        text = [w for w in text if not w in stops]

    text = " ".join(text)

    # Clean the text
    text = re.sub(r"[^A-Za-z0-9^,!.\/'+-=]", " ", text)
    text = re.sub(r"what's", "what is ", text)
    text = re.sub(r"\'s", " ", text)
    text = re.sub(r"\'ve", " have ", text)
    text = re.sub(r"can't", "cannot ", text)
    text = re.sub(r"n't", " not ", text)
    text = re.sub(r"i'm", "i am ", text)
    text = re.sub(r"\'re", " are ", text)
    text = re.sub(r"\'d", " would ", text)
    text = re.sub(r"\'ll", " will ", text)
    text = re.sub(r",", " ", text)
    text = re.sub(r"\.", " ", text)
    text = re.sub(r"!", " ! ", text)
    text = re.sub(r"\/", " ", text)
    text = re.sub(r"\^", " ^ ", text)
    text = re.sub(r"\+", " + ", text)
    text = re.sub(r"\-", " - ", text)
    text = re.sub(r"\=", " = ", text)
    text = re.sub(r"'", " ", text)
    text = re.sub(r"(\d+)(k)", r"\g<1>000", text)
    text = re.sub(r":", " : ", text)
    text = re.sub(r" e g ", " eg ", text)
    text = re.sub(r" b g ", " bg ", text)
    text = re.sub(r" u s ", " american ", text)
    text = re.sub(r"\0s", "0", text)
    text = re.sub(r" 9 11 ", "911", text)
    text = re.sub(r"e - mail", "email", text)
    text = re.sub(r"j k", "jk", text)
    text = re.sub(r"\s{2,}", " ", text)

    # Optionally, shorten words to their stems
    if stem_words:
        text = text.split()
        stemmer = SnowballStemmer('english')
        stemmed_words = [stemmer.stem(word) for word in text]
        text = " ".join(stemmed_words)

    # Return the cleaned text as a single string
    return(text)

texts_1 = []

texts_2 = []

labels = []

with codecs.open(TRAIN_DATA_FILE, encoding='utf-8') as f:
    reader = csv.reader(f, delimiter=',')
    header = next(reader)
    # Columns: id, qid1, qid2, question1, question2, is_duplicate
    for values in reader:
        texts_1.append(text_to_wordlist(values[3]))
        texts_2.append(text_to_wordlist(values[4]))
        labels.append(int(values[5]))

print('Found %s texts in train.csv' % len(texts_1))

test_texts_1 = []

test_texts_2 = []

test_ids = []

with codecs.open(TEST_DATA_FILE, encoding='utf-8') as f:
    reader = csv.reader(f, delimiter=',')
    header = next(reader)
    # Columns: test_id, question1, question2
    for values in reader:
        test_texts_1.append(text_to_wordlist(values[1]))
        test_texts_2.append(text_to_wordlist(values[2]))
        test_ids.append(values[0])

print('Found %s texts in test.csv' % len(test_texts_1))

tokenizer = Tokenizer(num_words=MAX_NB_WORDS)

tokenizer.fit_on_texts(texts_1 + texts_2 + test_texts_1 + test_texts_2)

sequences_1 = tokenizer.texts_to_sequences(texts_1)

sequences_2 = tokenizer.texts_to_sequences(texts_2)

test_sequences_1 = tokenizer.texts_to_sequences(test_texts_1)

test_sequences_2 = tokenizer.texts_to_sequences(test_texts_2)

word_index = tokenizer.word_index

print('Found %s unique tokens' % len(word_index))

data_1 = pad_sequences(sequences_1, maxlen=MAX_SEQUENCE_LENGTH)

data_2 = pad_sequences(sequences_2, maxlen=MAX_SEQUENCE_LENGTH)

labels = np.array(labels)

print('Shape of data tensor:', data_1.shape)

print('Shape of label tensor:', labels.shape)

test_data_1 = pad_sequences(test_sequences_1, maxlen=MAX_SEQUENCE_LENGTH)

test_data_2 = pad_sequences(test_sequences_2, maxlen=MAX_SEQUENCE_LENGTH)

test_ids = np.array(test_ids)

########################################

## prepare embeddings

########################################

print('Preparing embedding matrix')

nb_words = min(MAX_NB_WORDS, len(word_index))+1

embedding_matrix = np.zeros((nb_words, EMBEDDING_DIM))

for word, i in word_index.items():
    # Words without a pretrained vector keep an all-zero row
    if word in word2vec.vocab:
        embedding_matrix[i] = word2vec.word_vec(word)

print('Null word embeddings: %d' % np.sum(np.sum(embedding_matrix, axis=1) == 0))

########################################

## sample train/validation data

########################################

#np.random.seed(1234)

perm = np.random.permutation(len(data_1))

idx_train = perm[:int(len(data_1)*(1-VALIDATION_SPLIT))]

idx_val = perm[int(len(data_1)*(1-VALIDATION_SPLIT)):]

data_1_train = np.vstack((data_1[idx_train], data_2[idx_train]))

data_2_train = np.vstack((data_2[idx_train], data_1[idx_train]))

labels_train = np.concatenate((labels[idx_train], labels[idx_train]))

data_1_val = np.vstack((data_1[idx_val], data_2[idx_val]))

data_2_val = np.vstack((data_2[idx_val], data_1[idx_val]))

labels_val = np.concatenate((labels[idx_val], labels[idx_val]))

weight_val = np.ones(len(labels_val))

if re_weight:
    weight_val *= 0.472001959
    weight_val[labels_val==0] = 1.309028344

########################################

## define the model structure

########################################

embedding_layer = Embedding(nb_words,

EMBEDDING_DIM,

weights=[embedding_matrix],

input_length=MAX_SEQUENCE_LENGTH,

trainable=False)

lstm_layer = LSTM(num_lstm, dropout=rate_drop_lstm, recurrent_dropout=rate_drop_lstm)

sequence_1_input = Input(shape=(MAX_SEQUENCE_LENGTH,), dtype='int32')

embedded_sequences_1 = embedding_layer(sequence_1_input)

x1 = lstm_layer(embedded_sequences_1)

sequence_2_input = Input(shape=(MAX_SEQUENCE_LENGTH,), dtype='int32')

embedded_sequences_2 = embedding_layer(sequence_2_input)

y1 = lstm_layer(embedded_sequences_2)

merged = concatenate([x1, y1])

merged = Dropout(rate_drop_dense)(merged)

merged = BatchNormalization()(merged)

merged = Dense(num_dense, activation=act)(merged)

merged = Dropout(rate_drop_dense)(merged)

merged = BatchNormalization()(merged)

preds = Dense(1, activation='sigmoid')(merged)

########################################

## add class weight

########################################

if re_weight:
    class_weight = {0: 1.309028344, 1: 0.472001959}
else:
    class_weight = None

########################################

## train the model

########################################

model = Model(inputs=[sequence_1_input, sequence_2_input], \

outputs=preds)

model.compile(loss='binary_crossentropy',

optimizer='nadam',

metrics=['acc'])

#model.summary()

print(STAMP)

early_stopping =EarlyStopping(monitor='val_loss', patience=3)

bst_model_path = STAMP + '.h5'

model_checkpoint = ModelCheckpoint(bst_model_path, save_best_only=True, save_weights_only=True)

hist = model.fit([data_1_train, data_2_train], labels_train, \

validation_data=([data_1_val, data_2_val], labels_val, weight_val), \

epochs=200, batch_size=2048, shuffle=True, \

class_weight=class_weight, callbacks=[early_stopping, model_checkpoint])

model.load_weights(bst_model_path)

bst_val_score = min(hist.history['val_loss'])

########################################

## make the submission

########################################

print('Start making the submission before fine-tuning')

preds = model.predict([test_data_1, test_data_2], batch_size=8192, verbose=1)

preds += model.predict([test_data_2, test_data_1], batch_size=8192, verbose=1)

preds /= 2

submission = pd.DataFrame({'test_id':test_ids, 'is_duplicate':preds.ravel()})

submission.to_csv('%.4f_'%(bst_val_score)+STAMP+'.csv', index=False)
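The class weights 1.309028344 and 0.472001959 in the script appear to follow from rescaling each class from its share in the training data to its assumed share in the test data. The sketch below shows that arithmetic; `class_weights` is a hypothetical helper, 0.3692 is the duplicate share reported for train.csv, and the target share of 0.1743 is an inference from the two constants (the script's own comment rounds it to 17.5%).

```python
def class_weights(train_positive_share, target_positive_share):
    """Weights that rescale each class from its training share to a target share."""
    w_pos = target_positive_share / train_positive_share
    w_neg = (1 - target_positive_share) / (1 - train_positive_share)
    return {0: w_neg, 1: w_pos}

train_share = 0.3692   # duplicate share reported for train.csv above
target_share = 0.1743  # assumption: inferred from the weights used in the script

weights = class_weights(train_share, target_share)
print(weights)
```

Duplicates are weighted down and non-duplicates are weighted up, so the training loss and the weighted validation loss both reflect the class balance expected on the leaderboard.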

Decomposable attention

import numpy as np

import pandas as pd

from keras.layers import *

from keras.activations import softmax

from keras.models import Model

from keras.optimizers import Nadam, Adam

from keras.regularizers import l2

import keras.backend as K

MAX_LEN = 30

def create_pretrained_embedding(pretrained_weights_path, trainable=False, **kwargs):

"Create embedding layer from a pretrained weights array"

pretrained_weights = np.load(pretrained_weights_path)

in_dim, out_dim = pretrained_weights.shape

embedding = Embedding(in_dim, out_dim, weights=[pretrained_weights], trainable=False, **kwargs)

return embedding

def unchanged_shape(input_shape):

"Function for Lambda layer"

return input_shape

def substract(input_1, input_2):

"Substract element-wise"

neg_input_2 = Lambda(lambda x: -x, output_shape=unchanged_shape)(input_2)

out_ = Add()([input_1, neg_input_2])

return out_

def submult(input_1, input_2):

    "Get multiplication and subtraction then concatenate results"

    mult = Multiply()([input_1, input_2])

    sub = substract(input_1, input_2)

    out_ = Concatenate()([sub, mult])

    return out_

def apply_multiple(input_, layers):

    "Apply layers to input then concatenate result"

    if not len(layers) > 1:

        raise ValueError('Layers list should contain more than 1 layer')

    else:

        agg_ = []

        for layer in layers:

            agg_.append(layer(input_))

        out_ = Concatenate()(agg_)

    return out_
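
In the models in this section the helper is called with `GlobalAvgPool1D()` and `GlobalMaxPool1D()`, which reduces any number of time steps to one fixed-size vector. A minimal pure-Python sketch of that average-plus-max pooling, using a hypothetical helper name and made-up numbers:

```python
def avg_and_max_pool(sequence):
    """Concatenate the per-dimension mean and max over the time axis."""
    steps = len(sequence)
    dims = range(len(sequence[0]))
    avg = [sum(step[d] for step in sequence) / steps for d in dims]
    peak = [max(step[d] for step in sequence) for d in dims]
    return avg + peak


# Two time steps with two features each.
pooled = avg_and_max_pool([[1.0, 4.0], [3.0, 0.0]])
print(pooled)
```

The output is twice as wide as a single time step: the first half holds the averages, the second half the maxima.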

def time_distributed(input_, layers):

    "Apply a list of layers in TimeDistributed mode"

    node_ = input_

    for layer_ in layers:

        node_ = TimeDistributed(layer_)(node_)

    return node_

def soft_attention_alignment(input_1, input_2):

    "Align text representation with neural soft attention"

    attention = Dot(axes=-1)([input_1, input_2])

    w_att_1 = Lambda(lambda x: softmax(x, axis=1),

                     output_shape=unchanged_shape)(attention)

    w_att_2 = Permute((2,1))(Lambda(lambda x: softmax(x, axis=2),

                             output_shape=unchanged_shape)(attention))

    in1_aligned = Dot(axes=1)([w_att_1, input_1])

    in2_aligned = Dot(axes=1)([w_att_2, input_2])

    return in1_aligned, in2_aligned
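
The alignment above can be read as follows: every token of one question takes a softmax over its dot products with all tokens of the other question, then uses those weights to average the other question's vectors. A minimal pure-Python sketch of that computation, with hypothetical helper names and toy vectors (it is not the Keras code itself):

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def align(queries, keys):
    """For each query vector, return the attention-weighted average of `keys`."""
    dim = len(keys[0])
    aligned = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        aligned.append([sum(w * k[d] for w, k in zip(weights, keys)) for d in range(dim)])
    return aligned


# Toy sequences: 2 tokens vs. 3 tokens, 2-dimensional vectors (made-up values).
seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

b_for_a = align(seq_a, seq_b)  # one summary of seq_b per token of seq_a
a_for_b = align(seq_b, seq_a)  # one summary of seq_a per token of seq_b
print(len(b_for_a), len(a_for_b))
```

In the function above, `in2_aligned` plays the role of `b_for_a`: it has one row per token of `input_1`, which is why it can be concatenated with `q1_encoded` in the compare step.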

def decomposable_attention(pretrained_embedding='../data/fasttext_matrix.npy',

                           projection_dim=300, projection_hidden=0, projection_dropout=0.2,

                           compare_dim=500, compare_dropout=0.2,

                           dense_dim=300, dense_dropout=0.2,

                           lr=1e-3, activation='elu', maxlen=MAX_LEN):

    # Based on: https://arxiv.org/abs/1606.01933

    q1 = Input(name='q1',shape=(maxlen,))

    q2 = Input(name='q2',shape=(maxlen,))

    # Embedding

    embedding = create_pretrained_embedding(pretrained_embedding,

                                            mask_zero=False)

    q1_embed = embedding(q1)

    q2_embed = embedding(q2)

    # Projection

    projection_layers = []

    if projection_hidden > 0:

        projection_layers.extend([

            Dense(projection_hidden, activation=activation),

            Dropout(rate=projection_dropout),

        ])

    projection_layers.extend([

        Dense(projection_dim, activation=None),

        Dropout(rate=projection_dropout),

    ])

    q1_encoded = time_distributed(q1_embed, projection_layers)

    q2_encoded = time_distributed(q2_embed, projection_layers)

    # Attention

    q1_aligned, q2_aligned = soft_attention_alignment(q1_encoded, q2_encoded)

    # Compare

    q1_combined = Concatenate()([q1_encoded, q2_aligned, submult(q1_encoded, q2_aligned)])

    q2_combined = Concatenate()([q2_encoded, q1_aligned, submult(q2_encoded, q1_aligned)])

    compare_layers = [

        Dense(compare_dim, activation=activation),

        Dropout(compare_dropout),

        Dense(compare_dim, activation=activation),

        Dropout(compare_dropout),

    ]

    q1_compare = time_distributed(q1_combined, compare_layers)

    q2_compare = time_distributed(q2_combined, compare_layers)

    # Aggregate

    q1_rep = apply_multiple(q1_compare, [GlobalAvgPool1D(), GlobalMaxPool1D()])

    q2_rep = apply_multiple(q2_compare, [GlobalAvgPool1D(), GlobalMaxPool1D()])

    # Classifier

    merged = Concatenate()([q1_rep, q2_rep])

    dense = BatchNormalization()(merged)

    dense = Dense(dense_dim, activation=activation)(dense)

    dense = Dropout(dense_dropout)(dense)

    dense = BatchNormalization()(dense)

    dense = Dense(dense_dim, activation=activation)(dense)

    dense = Dropout(dense_dropout)(dense)

    out_ = Dense(1, activation='sigmoid')(dense)

    model = Model(inputs=[q1, q2], outputs=out_)

    model.compile(optimizer=Adam(lr=lr), loss='binary_crossentropy',

                  metrics=['binary_crossentropy','accuracy'])

    return model

def esim(pretrained_embedding='../data/fasttext_matrix.npy',

         maxlen=MAX_LEN,

         lstm_dim=300,

         dense_dim=300,

         dense_dropout=0.5):

    # Based on arXiv:1609.06038

    q1 = Input(name='q1',shape=(maxlen,))

    q2 = Input(name='q2',shape=(maxlen,))

    # Embedding

    embedding = create_pretrained_embedding(pretrained_embedding, mask_zero=False)

    bn = BatchNormalization(axis=2)

    q1_embed = bn(embedding(q1))

    q2_embed = bn(embedding(q2))

    # Encode

    encode = Bidirectional(LSTM(lstm_dim, return_sequences=True))

    q1_encoded = encode(q1_embed)

    q2_encoded = encode(q2_embed)

    # Attention

    q1_aligned, q2_aligned = soft_attention_alignment(q1_encoded, q2_encoded)

    # Compose

    q1_combined = Concatenate()([q1_encoded, q2_aligned, submult(q1_encoded, q2_aligned)])

    q2_combined = Concatenate()([q2_encoded, q1_aligned, submult(q2_encoded, q1_aligned)])

    compose = Bidirectional(LSTM(lstm_dim, return_sequences=True))

    q1_compare = compose(q1_combined)

    q2_compare = compose(q2_combined)

    # Aggregate

    q1_rep = apply_multiple(q1_compare, [GlobalAvgPool1D(), GlobalMaxPool1D()])

    q2_rep = apply_multiple(q2_compare, [GlobalAvgPool1D(), GlobalMaxPool1D()])

    # Classifier

    merged = Concatenate()([q1_rep, q2_rep])

    dense = BatchNormalization()(merged)

    dense = Dense(dense_dim, activation='elu')(dense)

    dense = BatchNormalization()(dense)

    dense = Dropout(dense_dropout)(dense)

    dense = Dense(dense_dim, activation='elu')(dense)

    dense = BatchNormalization()(dense)

    dense = Dropout(dense_dropout)(dense)

    out_ = Dense(1, activation='sigmoid')(dense)

    model = Model(inputs=[q1, q2], outputs=out_)

    model.compile(optimizer=Adam(lr=1e-3), loss='binary_crossentropy', metrics=['binary_crossentropy','accuracy'])

    return model

time distributed cnn

# coding: utf-8

# # Predicting Duplicate Questions

import pandas as pd

import numpy as np

import nltk

from nltk.corpus import stopwords

from nltk.stem import SnowballStemmer

import re

from sklearn.metrics import accuracy_score

import matplotlib.pyplot as plt

import datetime, time, json

from string import punctuation

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

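# Note: `Merge` is only available in older Keras releases; later versions removed it in favour of the functional API (e.g. `Concatenate`).

from keras.layers import Embedding, Dense, Dropout, Reshape, Merge, BatchNormalization, TimeDistributed, Lambda, Activation, LSTM, Flatten, Convolution1D, GRU, MaxPooling1D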

from keras.regularizers import l2

from keras.callbacks import Callback, ModelCheckpoint, EarlyStopping

from keras import initializers

from keras import backend as K

from keras.optimizers import SGD

from collections import defaultdict

train = pd.read_csv("../input/train.csv")[:100]

test = pd.read_csv("../input/test.csv")[:100]

train.head(6)

test.head()

print(train.shape)

print(test.shape)

# Check for any null values

print(train.isnull().sum())

print(test.isnull().sum())

# Replace missing values (NaN) with the string 'empty'

train = train.fillna('empty')

test = test.fillna('empty')

print(train.isnull().sum())

print(test.isnull().sum())

# Preview some of the pairs of questions

for i in range(6):

    print(train.question1[i])

    print(train.question2[i])

    print()

stop_words = ['the','a','an','and','but','if','or','because','as','what','which','this','that','these','those','then',

'just','so','than','such','both','through','about','for','is','of','while','during','to','What','Which',

'Is','If','While','This']

def text_to_wordlist(text, remove_stop_words=True, stem_words=False):

    # Clean the text, with the option to remove stop_words and to stem words.

    # Lower-casing is left disabled, so the patterns below are case-sensitive

    #text = text.lower()

    # Clean the text. Every non-alphanumeric character becomes a space first, so the

    # later patterns that contain an apostrophe or a hyphen can no longer match.

    text = re.sub(r"[^A-Za-z0-9]", " ", text)

    text = re.sub(r"what's", "", text)

    text = re.sub(r"What's", "", text)

    text = re.sub(r"\'s", " ", text)

    text = re.sub(r"\'ve", " have ", text)

    text = re.sub(r"can't", "cannot ", text)

    text = re.sub(r"n't", " not ", text)

    text = re.sub(r"I'm", "I am", text)

    text = re.sub(r" m ", " am ", text)

    text = re.sub(r"\'re", " are ", text)

    text = re.sub(r"\'d", " would ", text)

    text = re.sub(r"\'ll", " will ", text)

    # NOTE: "\0" in a regular expression is the NUL character, so the original "\0k ", "\0s"

    # and "\0rs " patterns never matched; a literal 0 is assumed to be the intent here.

    text = re.sub(r"0k ", "0000 ", text)

    text = re.sub(r" e g ", " eg ", text)

    text = re.sub(r" b g ", " bg ", text)

    text = re.sub(r"0s", "0", text)

    text = re.sub(r" 9 11 ", "911", text)

    text = re.sub(r"e-mail", "email", text)

    text = re.sub(r"\s{2,}", " ", text)

    text = re.sub(r"quikly", "quickly", text)

    text = re.sub(r" usa ", " America ", text)

    text = re.sub(r" USA ", " America ", text)

    text = re.sub(r" u s ", " America ", text)

    text = re.sub(r" uk ", " England ", text)

    text = re.sub(r" UK ", " England ", text)

    text = re.sub(r"india", "India", text)

    text = re.sub(r"china", "China", text)

    text = re.sub(r"chinese", "Chinese", text)

    text = re.sub(r"imrovement", "improvement", text)

    text = re.sub(r"intially", "initially", text)

    text = re.sub(r"quora", "Quora", text)

    text = re.sub(r" dms ", "direct messages ", text)

    text = re.sub(r"demonitization", "demonetization", text)

    text = re.sub(r"actived", "active", text)

    text = re.sub(r"kms", " kilometers ", text)

    text = re.sub(r"KMs", " kilometers ", text)

    text = re.sub(r" cs ", " computer science ", text)

    text = re.sub(r" upvotes ", " up votes ", text)

    text = re.sub(r" iPhone ", " phone ", text)

    text = re.sub(r"0rs ", "0 rs ", text)

    text = re.sub(r"calender", "calendar", text)

    text = re.sub(r"ios", "operating system", text)

    text = re.sub(r"gps", "GPS", text)

    text = re.sub(r"gst", "GST", text)

    text = re.sub(r"programing", "programming", text)

    text = re.sub(r"bestfriend", "best friend", text)

    text = re.sub(r"dna", "DNA", text)

    text = re.sub(r"III", "3", text)

    text = re.sub(r"the US", "America", text)

    text = re.sub(r"Astrology", "astrology", text)

    text = re.sub(r"Method", "method", text)

    text = re.sub(r"Find", "find", text)

    text = re.sub(r"banglore", "Banglore", text)

    text = re.sub(r" J K ", " JK ", text)

    # Remove punctuation from text

    text = ''.join([c for c in text if c not in punctuation])

    # Optionally, remove stop words

    if remove_stop_words:

        text = text.split()

        text = [w for w in text if w not in stop_words]

        text = " ".join(text)

    # Optionally, shorten words to their stems

    if stem_words:

        text = text.split()

        stemmer = SnowballStemmer('english')

        stemmed_words = [stemmer.stem(word) for word in text]

        text = " ".join(stemmed_words)

    # Return the cleaned text as a single string

    return text
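
The first substitution in `text_to_wordlist` replaces every non-alphanumeric character with a space, so the later patterns that contain an apostrophe or a hyphen (for example `n't` or `e-mail`) have nothing left to match. A minimal sketch of the effect, using two hypothetical helper functions that differ only in the order of the steps:

```python
import re


def strip_then_expand(text):
    # Same order as text_to_wordlist: punctuation is removed first.
    text = re.sub(r"[^A-Za-z0-9]", " ", text)
    text = re.sub(r"n't", " not ", text)  # never matches: the apostrophe is gone
    return re.sub(r"\s{2,}", " ", text).strip()


def expand_then_strip(text):
    # Alternative order: expand contractions while the apostrophe still exists.
    text = re.sub(r"n't", " not ", text)
    text = re.sub(r"[^A-Za-z0-9]", " ", text)
    return re.sub(r"\s{2,}", " ", text).strip()


sample = "Why don't cats swim?"
stripped_first = strip_then_expand(sample)
expanded_first = expand_then_strip(sample)
print(stripped_first)
print(expanded_first)
```

Which order is preferable depends on the rest of the pipeline: patterns such as `" m "` or `" e g "` in the original function only make sense after the punctuation has been stripped.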

def process_questions(question_list, questions, question_list_name, dataframe):

    '''transform questions and display progress'''

    for question in questions:

        question_list.append(text_to_wordlist(question))

        if len(question_list) % 100000 == 0:

            progress = len(question_list)/len(dataframe) * 100

            print("{} is {}% complete.".format(question_list_name, round(progress, 1)))

train_question1 = []

process_questions(train_question1, train.question1, 'train_question1', train)

train_question2 = []

process_questions(train_question2, train.question2, 'train_question2', train)

test_question1 = []

process_questions(test_question1, test.question1, 'test_question1', test)

test_question2 = []

process_questions(test_question2, test.question2, 'test_question2', test)

# Preview some transformed pairs of questions

for i in range(10):

    print(train_question1[i])

    print(train_question2[i])

    print()

# Find the length (number of tokens) of each question

lengths = []

for question in train_question1:

    lengths.append(len(question.split()))

for question in train_question2:

    lengths.append(len(question.split()))

# Create a dataframe so that the values can be inspected

lengths = pd.DataFrame(lengths, columns=['counts'])

lengths.counts.describe()

print(np.percentile(lengths.counts, 99.0))

print(np.percentile(lengths.counts, 99.4))

print(np.percentile(lengths.counts, 99.5))

print(np.percentile(lengths.counts, 99.9))
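
High percentiles of the token counts are a common way to choose a padding length: a cutoff at the 99.x-th percentile keeps almost every question intact while avoiding very long, mostly empty sequences. A minimal pure-Python sketch of a linear-interpolation percentile (the default method of `np.percentile`), applied to made-up lengths with a hypothetical helper name:

```python
def percentile(values, q):
    """Percentile with linear interpolation between the two nearest ranks."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * q / 100.0
    low = int(rank)
    high = min(low + 1, len(ordered) - 1)
    return ordered[low] + (ordered[high] - ordered[low]) * (rank - low)


token_counts = [3, 5, 5, 6, 7, 8, 9, 10, 12, 40]  # made-up question lengths
cutoff = percentile(token_counts, 90)
covered = sum(1 for n in token_counts if n <= cutoff) / len(token_counts)
print(cutoff, covered)
```

Here a single outlier of 40 tokens would force a much longer padded length if the maximum were used instead of a percentile.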

# tokenize the words for all of the questions

all_questions = train_question1 + train_question2 + test_question1 + test_question2

tokenizer = Tokenizer()

tokenizer.fit_on_texts(all_questions)

print("Fitting is complete.")

train_question1_word_sequences = tokenizer.texts_to_sequences(train_question1)

print("train_question1 is complete.")

train_question2_word_sequences = tokenizer.texts_to_sequences(train_question2)

print("train_question2 is complete")

test_question1_word_sequences = tokenizer.texts_to_sequences(test_question1)

print("test_question1 is complete.")

test_question2_word_sequences = tokenizer.texts_to_sequences(test_question2)

print("test_question2 is complete.")

word_index = tokenizer.word_index

print("Words in index: %d" % len(word_index))

# Pad the questions so that they all have the same length.

max_question_len = 36

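# Use the same 'post' padding and truncation as for the test sequences so that both sets are encoded consistently

train_q1 = pad_sequences(train_question1_word_sequences,

                         maxlen = max_question_len,

                         padding = 'post',

                         truncating = 'post')

print("train_q1 is complete.")

train_q2 = pad_sequences(train_question2_word_sequences,

                         maxlen = max_question_len,

                         padding = 'post',

                         truncating = 'post')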

print("train_q2 is complete.")

test_q1 = pad_sequences(test_question1_word_sequences,

maxlen = max_question_len,

padding = 'post',

truncating = 'post')

print("test_q1 is complete.")

test_q2 = pad_sequences(test_question2_word_sequences,

maxlen = max_question_len,

padding = 'post',

truncating = 'post')

print("test_q2 is complete.")
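
Keras' `pad_sequences` defaults to `'pre'` for both `padding` and `truncating`. The two modes place zeros and cut tokens at opposite ends, so all inputs fed to one model should use the same setting. A minimal pure-Python sketch of the behaviour, with a hypothetical `pad` helper for a single sequence:

```python
def pad(seq, maxlen, padding="pre", truncating="pre", value=0):
    """Pad or truncate one sequence of token ids to exactly `maxlen` items."""
    if len(seq) > maxlen:
        seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
    fill = [value] * (maxlen - len(seq))
    return fill + list(seq) if padding == "pre" else list(seq) + fill


short, long_ = [7, 8], [1, 2, 3, 4, 5]
pre_short = pad(short, 4)
post_short = pad(short, 4, padding="post", truncating="post")
pre_long = pad(long_, 4)
post_long = pad(long_, 4, padding="post", truncating="post")
print(pre_short, post_short, pre_long, post_long)
```

With `'pre'` the zeros come first and the start of a long question is dropped; with `'post'` the zeros come last and the end is dropped.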

y_train = train.is_duplicate

# Load GloVe to use pretrained vectors

# Note: this block is commented out because the GloVe file could not be used on Kaggle; uncomment it when running locally

# From this link: https://nlp.stanford.edu/projects/glove/

#embeddings_index = {}

#with open('glove.840B.300d.txt', encoding='utf-8') as f:

#    for line in f:

#        values = line.split(' ')

#        word = values[0]

#        embedding = np.asarray(values[1:], dtype='float32')

#        embeddings_index[word] = embedding

#

#print('Word embeddings:', len(embeddings_index)) #151,250

# Need to use 300 for embedding dimensions to match GloVe's vectors.

embedding_dim = 300

# Note: uncomment the lines below as well, because they depend on the GloVe code above.

nb_words = len(word_index)

#word_embedding_matrix = np.zeros((nb_words + 1, embedding_dim))

#for word, i in word_index.items():

#    embedding_vector = embeddings_index.get(word)

#    if embedding_vector is not None:

#        # words not found in embedding index will be all-zeros.

#        word_embedding_matrix[i] = embedding_vector

#

#print('Null word embeddings: %d' % np.sum(np.sum(word_embedding_matrix, axis=1) == 0)) #75,334
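
The commented-out code builds one row per tokenizer index and leaves the row at zero when a word has no pretrained vector. A minimal pure-Python sketch of that lookup, using a hypothetical helper name, a toy word index, and made-up two-dimensional vectors instead of GloVe:

```python
def build_embedding_matrix(word_index, vectors, dim):
    """Row i holds the vector of the word with index i; unknown words stay zero."""
    matrix = [[0.0] * dim for _ in range(len(word_index) + 1)]  # row 0 = padding
    missing = 0
    for word, i in word_index.items():
        vec = vectors.get(word)
        if vec is None:
            missing += 1
        else:
            matrix[i] = list(vec)
    return matrix, missing


toy_index = {"quora": 1, "question": 2, "zzzz": 3}
toy_vectors = {"quora": [0.1, 0.2], "question": [0.3, 0.4]}
matrix, missing = build_embedding_matrix(toy_index, toy_vectors, dim=2)
print(len(matrix), missing)
```

The extra row at index 0 is why the `Embedding` layers below use `nb_words + 1` as the vocabulary size: index 0 is reserved for padding.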

units = 128 # Number of nodes in the Dense layers

dropout = 0.25 # Fraction of nodes to drop

nb_filter = 32 # Number of filters to use in Convolution1D

filter_length = 3 # Length of filter for Convolution1D

# Initialize weights and biases for the Dense layers

weights = initializers.TruncatedNormal(mean=0.0, stddev=0.05, seed=2)

bias = 'zeros'

model1 = Sequential()

model1.add(Embedding(nb_words + 1,

embedding_dim,

#weights = [word_embedding_matrix], Commented out for Kaggle

input_length = max_question_len,

trainable = False))

model1.add(Convolution1D(filters = nb_filter,

kernel_size = filter_length,

padding = 'same'))

model1.add(BatchNormalization())

model1.add(Activation('relu'))

model1.add(Dropout(dropout))

model1.add(Convolution1D(filters = nb_filter,

kernel_size = filter_length,

padding = 'same'))

model1.add(BatchNormalization())

model1.add(Activation('relu'))

model1.add(Dropout(dropout))

model1.add(Flatten())

model2 = Sequential()

model2.add(Embedding(nb_words + 1,

embedding_dim,

#weights = [word_embedding_matrix],

input_length = max_question_len,

trainable = False))

model2.add(Convolution1D(filters = nb_filter,

kernel_size = filter_length,

padding = 'same'))

model2.add(BatchNormalization())

model2.add(Activation('relu'))

model2.add(Dropout(dropout))

model2.add(Convolution1D(filters = nb_filter,

kernel_size = filter_length,

padding = 'same'))

model2.add(BatchNormalization())

model2.add(Activation('relu'))

model2.add(Dropout(dropout))

model2.add(Flatten())

model3 = Sequential()

model3.add(Embedding(nb_words + 1,

embedding_dim,

#weights = [word_embedding_matrix],

input_length = max_question_len,

trainable = False))

model3.add(TimeDistributed(Dense(embedding_dim)))

model3.add(BatchNormalization())

model3.add(Activation('relu'))

model3.add(Dropout(dropout))

model3.add(Lambda(lambda x: K.max(x, axis=1), output_shape=(embedding_dim, )))

model4 = Sequential()

model4.add(Embedding(nb_words + 1,

embedding_dim,

#weights = [word_embedding_matrix],

input_length = max_question_len,

trainable = False))

model4.add(TimeDistributed(Dense(embedding_dim)))

model4.add(BatchNormalization())

model4.add(Activation('relu'))

model4.add(Dropout(dropout))

model4.add(Lambda(lambda x: K.max(x, axis=1), output_shape=(embedding_dim, )))

modela = Sequential()

modela.add(Merge([model1, model2], mode='concat'))

modela.add(Dense(units*2, kernel_initializer=weights, bias_initializer=bias))

modela.add(BatchNormalization())

modela.add(Activation('relu'))

modela.add(Dropout(dropout))

modela.add(Dense(units, kernel_initializer=weights, bias_initializer=bias))

modela.add(BatchNormalization())

modela.add(Activation('relu'))

modela.add(Dropout(dropout))

modelb = Sequential()

modelb.add(Merge([model3, model4], mode='concat'))

modelb.add(Dense(units*2, kernel_initializer=weights, bias_initializer=bias))

modelb.add(BatchNormalization())

modelb.add(Activation('relu'))

modelb.add(Dropout(dropout))

modelb.add(Dense(units, kernel_initializer=weights, bias_initializer=bias))

modelb.add(BatchNormalization())

modelb.add(Activation('relu'))

modelb.add(Dropout(dropout))

model = Sequential()

model.add(Merge([modela, modelb], mode='concat'))

model.add(Dense(units*2, kernel_initializer=weights, bias_initializer=bias))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(dropout))

model.add(Dense(units, kernel_initializer=weights, bias_initializer=bias))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(dropout))

model.add(Dense(units, kernel_initializer=weights, bias_initializer=bias))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(dropout))

model.add(Dense(1, kernel_initializer=weights, bias_initializer=bias))

model.add(BatchNormalization())

model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# save the best weights for predicting the test question pairs

save_best_weights = 'question_pairs_weights.h5'

t0 = time.time()

callbacks = [ModelCheckpoint(save_best_weights, monitor='val_loss', save_best_only=True),

EarlyStopping(monitor='val_loss', patience=5, verbose=1, mode='auto')]

history = model.fit([train_q1, train_q2, train_q1, train_q2],

y_train,

batch_size=256,

epochs=2, # Use 100 for a full run; reduced here for Kaggle

validation_split=0.15,

verbose=True,

shuffle=True,

callbacks=callbacks)

t1 = time.time()

print("Minutes elapsed: %f" % ((t1 - t0) / 60.))
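
The two callbacks work together: `EarlyStopping` ends training once the validation loss has failed to improve for `patience` consecutive epochs, while `ModelCheckpoint(save_best_only=True)` keeps the weights from the best epoch seen so far. A simplified pure-Python sketch of the patience rule (a hypothetical helper that ignores options such as `min_delta`), applied to made-up validation losses:

```python
def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), both 1-based, for a patience rule."""
    best, best_epoch, waited = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


stop, best = early_stopping_epoch([0.60, 0.50, 0.52, 0.51, 0.55, 0.49], patience=3)
print(stop, best)
```

Training stops before the last, lower loss is ever reached, which is the trade-off of a small `patience`; the weights reloaded afterwards are those of the best epoch, not of the stopping epoch.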

# Aggregate the summary statistics

summary_stats = pd.DataFrame({'epoch': [ i + 1 for i in history.epoch ],

'train_acc': history.history['acc'],

'valid_acc': history.history['val_acc'],

'train_loss': history.history['loss'],

'valid_loss': history.history['val_loss']})

summary_stats

plt.plot(summary_stats.train_loss) # blue

plt.plot(summary_stats.valid_loss) # green

plt.show()

# Find the minimum validation loss during the training

min_loss, idx = min((loss, idx) for (idx, loss) in enumerate(history.history['val_loss']))

print('Minimum loss at epoch', '{:d}'.format(idx+1), '=', '{:.4f}'.format(min_loss))

min_loss = round(min_loss, 4)

# Make predictions with the best weights

model.load_weights(save_best_weights)

predictions = model.predict([test_q1, test_q2, test_q1, test_q2], verbose = True)

#Create submission

submission = pd.DataFrame(predictions, columns=['is_duplicate'])

submission.insert(0, 'test_id', test.test_id)

file_name = 'submission_{}.csv'.format(min_loss)

submission.to_csv(file_name, index=False)

submission.head(10)

reference

1 https://www.kaggle.com/anokas/data-analysis-xgboost-starter-0-35460-lb