Android Camera Framework Study (4): Recording Flow Analysis


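Contents

- I. Understanding the video (MediaRecorder) state machine

- II. How the Camera app starts recording

- III. Classes and interfaces related to MediaPlayerService

- 1. When MediaRecorder connects to MediaPlayerService

- 2. MediaPlayerService classes and interfaces

- 3. MediaRecorder classes and interfaces

- 1) Code snippet 1

- 2) Code snippet 2

- 3) Code snippet 3: native implementation of setCamera

- 4) Code snippet 4

- 5) Code snippet 5

- 6) Code snippet 6: MediaRecorder registers the frame-available listener

- IV. Interim summary

- V. Creating the BufferQueue for camera video

- VI. When a recording frame becomes available

- 1. onFrameAvailable()

- 2. The StreamingProcessor thread loop

- 3. Sending the frame-available message to the local Camera object

- 4. The local Camera object forwards the message to MediaRecorder

- VII. Summary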


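Note: these are study notes based on Android 5.1. The post follows the order in which the software starts up.

One step at a time. This time the topic is camera video. The title says recording flow analysis, but much of it resembles preview (updating, creating the stream, creating the request). This post therefore concentrates on how the MediaRecorder object is created, how the video frame listener is registered, how the frame-available event is raised, and the chain of callbacks that follows.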

I. Understanding the video (MediaRecorder) state machine

Used to record audio and video. The recording control is based on a

simple state machine (see below). (For the state machine diagram, see the Javadoc in the MediaRecorder source.)

A common case of using MediaRecorder to record audio works as follows:

1.MediaRecorder recorder = new MediaRecorder();

2.recorder.setAudioSource(MediaRecorder.AudioSource.MIC);

3.recorder.setOutputFormat(MediaRecorder.OutputFormat.THREE_GPP);

4.recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB);

5.recorder.setOutputFile(PATH_NAME);

6.recorder.prepare();

7.recorder.start(); // Recording is now started

8…

9.recorder.stop();

10.recorder.reset(); // You can reuse the object by going back to setAudioSource() step

recorder.release(); // Now the object cannot be reused

Applications may want to register for informational and error

events in order to be informed of some internal update and possible

runtime errors during recording. Registration for such events is

done by setting the appropriate listeners (via calls

to setOnInfoListener(OnInfoListener) and/or

setOnErrorListener(OnErrorListener)).

In order to receive the respective callback associated with these listeners,

applications are required to create MediaRecorder objects on threads with a

Looper running (the main UI thread by default already has a Looper running).

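The text above is the comment written by Google's engineers, which makes it the most authoritative reference. The gist is that recording audio and video with MediaRecorder is controlled by a simple state machine, and the numbered calls 1, 2, 3... are the order an application has to follow. My translation skills are limited, so let's turn to the camera side.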
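The call order in the quoted comment can be modeled as a small state machine. The following is a minimal sketch in C++, not the framework's implementation: the `RecorderStateMachine` class, its state names, and the `runStandardSequence` helper are made up for illustration, and only the calls that change state in the audio example are modeled.

```cpp
#include <cassert>

// Hypothetical, simplified recorder states (illustrative names only).
enum class RecorderState {
    Initial, Initialized, DataSourceConfigured, Prepared, Recording, Released
};

class RecorderStateMachine {
public:
    RecorderState state() const { return mState; }

    // Each call succeeds only from the state that precedes it in the sequence.
    bool setAudioSource()  { return transition(RecorderState::Initial, RecorderState::Initialized); }
    bool setOutputFormat() { return transition(RecorderState::Initialized, RecorderState::DataSourceConfigured); }
    bool prepare()         { return transition(RecorderState::DataSourceConfigured, RecorderState::Prepared); }
    bool start()           { return transition(RecorderState::Prepared, RecorderState::Recording); }
    bool stop()            { return transition(RecorderState::Recording, RecorderState::Initial); }

    // reset() returns to the first state unless the object was released.
    bool reset() {
        if (mState == RecorderState::Released) return false;
        mState = RecorderState::Initial;
        return true;
    }

    // After release() no further call is accepted.
    void release() { mState = RecorderState::Released; }

private:
    bool transition(RecorderState from, RecorderState to) {
        assert(from != RecorderState::Released);  // nothing leaves Released
        if (mState != from) return false;         // call made out of order
        mState = to;
        return true;
    }

    RecorderState mState = RecorderState::Initial;
};

// Runs the documented order: configure, prepare, start, stop.
bool runStandardSequence(RecorderStateMachine& r) {
    return r.setAudioSource() && r.setOutputFormat() && r.prepare()
        && r.start() && r.stop();
}
```

Calling `start()` on a fresh object returns false in this model, which is the kind of out-of-order call the state machine is meant to reject.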

II. How the Camera app starts recording

// Source path: pdk/apps/TestingCamera/src/com/android/testingcamera/TestingCamera.java

private void startRecording() {

log("Starting recording");

logIndent(1);

log("Configuring MediaRecoder");

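// A check of whether recording is enabled happens here; it is omitted so we can get straight to the point.

// First a Java MediaRecorder object is created (as with Camera.java, the Java object wraps a native MediaRecorder object created through JNI; read on)

mRecorder = new MediaRecorder();

// Next, set a few callbacks.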

mRecorder.setOnErrorListener(mRecordingErrorListener);

mRecorder.setOnInfoListener(mRecordingInfoListener);

if (!mRecordHandoffCheckBox.isChecked()) {

// Hand the current Java Camera object to the Java MediaRecorder object.

// setCamera is a JNI interface; its code is analyzed later.

mRecorder.setCamera(mCamera);

}

// Hand the preview Surface Java object to the Java MediaRecorder object; the code

// is covered in detail later.

mRecorder.setPreviewDisplay(mPreviewHolder.getSurface());

// The next two calls set the audio and video sources.

mRecorder.setAudioSource(MediaRecorder.AudioSource.CAMCORDER);

mRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);

mRecorder.setProfile(mCamcorderProfiles.get(mCamcorderProfile));

// Take the recording frame size selected in the app UI and pass it to MediaRecorder

Camera.Size videoRecordSize = mVideoRecordSizes.get(mVideoRecordSize);

if (videoRecordSize.width > 0 && videoRecordSize.height > 0) {

mRecorder.setVideoSize(videoRecordSize.width, videoRecordSize.height);

}

// Take the recording frame rate selected in the app UI and pass it to MediaRecorder.

if (mVideoFrameRates.get(mVideoFrameRate) > 0) {

mRecorder.setVideoFrameRate(mVideoFrameRates.get(mVideoFrameRate));

}

File outputFile = getOutputMediaFile(MEDIA_TYPE_VIDEO);

log("File name:" + outputFile.toString());

mRecorder.setOutputFile(outputFile.toString());

boolean ready = false;

log("Preparing MediaRecorder");

try {

// Prepare; see Google's standard MediaRecorder call sequence quoted earlier

mRecorder.prepare();

ready = true;

} catch (Exception e) { // exception handling omitted

}

if (ready) {

try {

log("Starting MediaRecorder");

mRecorder.start(); // start recording

mState = CAMERA_RECORD;

log("Recording active");

mRecordingFile = outputFile;

} catch (Exception e) { // exception handling omitted

}

//------------

}

As you can see, the app starts recording in the order the state machine requires. Application code should follow the same order.

- 1. Create the Java MediaRecorder object: mRecorder = new MediaRecorder();

- 2. Pass the Java Camera object to MediaRecorder: mRecorder.setCamera(mCamera);

- 3. Pass the preview surface to MediaRecorder: mRecorder.setPreviewDisplay(mPreviewHolder.getSurface());

- 4. Set the audio source: mRecorder.setAudioSource(MediaRecorder.AudioSource.CAMCORDER);

- 5. Set the video source: mRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);

- 6. Set the recording frame size and frame rate, then call setOutputFile

- 7. Prepare: mRecorder.prepare();

- 8. Start MediaRecorder: mRecorder.start();

III. Classes and interfaces related to MediaPlayerService

1. When MediaRecorder connects to MediaPlayerService

MediaRecorder::MediaRecorder() : mSurfaceMediaSource(NULL)

{

ALOGV("constructor");

const sp<IMediaPlayerService>& service(getMediaPlayerService());

if (service != NULL) {

mMediaRecorder = service->createMediaRecorder();

}

if (mMediaRecorder != NULL) {

mCurrentState = MEDIA_RECORDER_IDLE;

}

doCleanUp();

}

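When the native MediaRecorder object is created from JNI, its constructor quietly connects to MediaPlayerService, a pattern Android uses often. After obtaining the MediaPlayerService proxy, it obtains the MediaRecorder proxy over anonymous binder.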

frameworks/base/media/java/android/media/MediaRecorder.java

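2. MediaPlayerService classes and interfaces

A quick look at the interface: both the anonymous MediaRecorder and MediaPlayer objects are obtained through the MediaPlayerService proxy.

| Interface | Description |
|---|---|
| `virtual sp<IMediaRecorder> createMediaRecorder() = 0;` | Creates the MediaRecorder service object used for recording video |
| `virtual sp<IMediaPlayer> create(const sp<IMediaPlayerClient>& client, int audioSessionId = 0) = 0;` | Creates the MediaPlayer service object used for playback; all music playback goes through a MediaPlayer object |
| `virtual status_t decode() = 0;` | Audio decoding |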

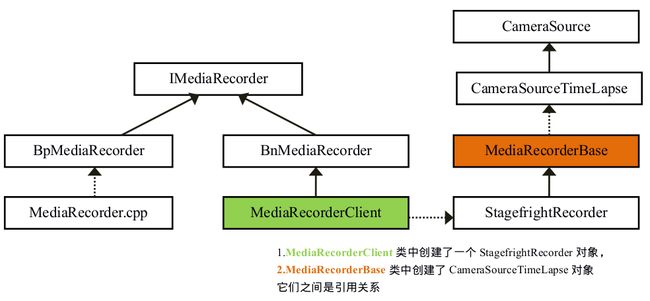

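3. MediaRecorder classes and interfaces

MediaRecorder exists to record. The MediaRecorder class holds a reference to a BpMediaRecorder proxy, while the local MediaRecorderClient object lives inside MediaPlayerService. The interface has many methods; only the ones relevant today are listed here, and the rest can be found in the source.

For details, see the source: frameworks/av/include/media/IMediaRecorder.h

| Interface | Description |
|---|---|
| `virtual status_t setCamera(const sp<ICamera>& camera, const sp<ICameraRecordingProxy>& proxy) = 0;` | Deserves close attention. It carries the local object that starts recording (a BnCameraRecordingProxy) over anonymous binder; the second argument is that local object. Later, in startRecording, the frame listener is registered with the local Camera object |
| `virtual status_t setPreviewSurface(const sp<IGraphicBufferProducer>& surface) = 0;` | Passes the preview surface to MediaRecorder. Because MediaRecorder also holds a local camera client, this surface ultimately reaches CameraService for display. Recording frames instead go through a BufferQueue created locally in CameraService, described in detail below |
| `virtual status_t setListener(const sp<IMediaRecorderClient>& listener) = 0;` | Sets the listener. The listener is the JNIMediaRecorderListener object in the JNI layer, which calls back into postEventFromNative in MediaRecorder.java to deliver events to the Java layer. The native MediaRecorder implements BnMediaRecorderClient, i.e. the notify interface, so this call passes that local object to the MediaRecorderClient on the service side, which receives it as a proxy. See code snippet 1 |
| `virtual status_t start() = 0;` | Starts recording. Following the call further leads away from the camera, so it is not covered here |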

1) Code snippet 1

Source path: frameworks/base/media/jni/android_media_MediaRecorder.cpp

// create new listener and give it to MediaRecorder

sp<JNIMediaRecorderListener> listener = new JNIMediaRecorderListener(env, thiz, weak_this);

mr->setListener(listener);

The MediaRecorder JNI layer calls back into a Java method to notify the upper layer of native events.

2) Code snippet 2

static void android_media_MediaRecorder_setCamera(JNIEnv* env, jobject thiz, jobject camera)

{

// we should not pass a null camera to get_native_camera() call.

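// The camera is checked for null here; in our flow it clearly is not null.

// This part deserves a careful look: camera is the Java-layer Camera object (Camera.java),

// and the app-side native Camera object is obtained from that Java object here.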

sp<Camera> c = get_native_camera(env, camera, NULL);

if (c == NULL) {

// get_native_camera will throw an exception in this case

return;

}

// Get the native MediaRecorder object

sp<MediaRecorder> mr = getMediaRecorder(env, thiz);

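// Note carefully that c->remote() is passed here, not the Camera object itself. At first

// this puzzled me, since Camera.cpp implements no proxy interface. It turns out that

// CameraBase provides remote(), which returns the ICamera proxy. As a result,

// MediaRecorder ends up with a new ICamera proxy, and a local Camera object

// is created inside MediaPlayerService.

// c->getRecordingProxy(): returns the Recording local object implemented by the local Camera object.

// setCamera then hands it to the native MediaRecorder object (see code snippet 3)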

process_media_recorder_call(env, mr->setCamera(c->remote(), c->getRecordingProxy()),

"java/lang/RuntimeException", "setCamera failed.");

}

// Camera side

sp<ICameraRecordingProxy> Camera::getRecordingProxy() {

ALOGV("getProxy");

return new RecordingProxy(this);

}

// RecordingProxy below implements the BnCameraRecordingProxy interface,

// so it is a local (Bn) object. Mystery solved.

class RecordingProxy : public BnCameraRecordingProxy

{

public:

RecordingProxy(const sp<Camera>& camera);

// ICameraRecordingProxy interface

virtual status_t startRecording(const sp<ICameraRecordingProxyListener>& listener);

virtual void stopRecording();

virtual void releaseRecordingFrame(const sp<IMemory>& mem);

private:

// mCamera here is no longer the local Camera object used when preview started;

// it is a local Camera object re-created by MediaRecorder.

sp<Camera> mCamera;

};

3) Code snippet 3: native implementation of setCamera

status_t MediaRecorderClient::setCamera(const sp<ICamera>& camera,

const sp<ICameraRecordingProxy>& proxy)

{

ALOGV("setCamera");

Mutex::Autolock lock(mLock);

if (mRecorder == NULL) {

ALOGE("recorder is not initialized");

return NO_INIT;

}

return mRecorder->setCamera(camera, proxy);

}

// The constructor creates a StagefrightRecorder object; all later operations

// go through the mRecorder object

MediaRecorderClient::MediaRecorderClient(const sp<MediaPlayerService>& service, pid_t pid)

{

ALOGV("Client constructor");

mPid = pid;

mRecorder = new StagefrightRecorder;

mMediaPlayerService = service;

}

// StagefrightRecorder::setCamera implementation

struct StagefrightRecorder : public MediaRecorderBase {}

status_t StagefrightRecorder::setCamera(const sp<ICamera> &camera,

const sp<ICameraRecordingProxy> &proxy) {

// some error checks omitted

mCamera = camera;

mCameraProxy = proxy;

return OK;

}

In the end both the ICamera and ICameraRecordingProxy proxies are stored in StagefrightRecorder member variables, so this class is clearly the main actor.

4) Code snippet 4

status_t CameraSource::isCameraAvailable(

const sp<ICamera>& camera, const sp<ICameraRecordingProxy>& proxy,

int32_t cameraId, const String16& clientName, uid_t clientUid) {

if (camera == 0) {

mCamera = Camera::connect(cameraId, clientName, clientUid);

if (mCamera == 0) return -EBUSY;

mCameraFlags &= ~FLAGS_HOT_CAMERA;

} else {

// We get the proxy from Camera, not ICamera. We need to get the proxy

// to the remote Camera owned by the application. Here mCamera is a

// local Camera object created by us. We cannot use the proxy from

// mCamera here.

// Re-create a local Camera object from the ICamera proxy

mCamera = Camera::create(camera);

if (mCamera == 0) return -EBUSY;

mCameraRecordingProxy = proxy;

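// Not sure yet what this flag is; it is set only when the app hands in a camera, so read it as marking an app-supplied camera rather than hot-plug support

mCameraFlags |= FLAGS_HOT_CAMERA;

// Attach a death notifier to the proxy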

mDeathNotifier = new DeathNotifier();

// isBinderAlive needs linkToDeath to work.

mCameraRecordingProxy->asBinder()->linkToDeath(mDeathNotifier);

}

mCamera->lock();

return OK;

}

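From the relationships in the class diagram above, MediaRecorder indirectly contains a CameraSource object. To keep things simple, only the key code is shown here.

- 1. When the CameraSource object is created, it checks whether the camera is available. If so, it re-creates a local Camera object from the proxy passed in (note that at this point the Camera proxy is held by MediaRecorder).

- 2. It then stores the RecordingProxy proxy in the mCameraRecordingProxy member and attaches a death notifier to that proxy.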
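The two branches of isCameraAvailable() can be reduced to a small decision. The sketch below is only a toy model under stated assumptions: `FakeCamera`, `CameraSourceSketch`, and `kFlagHotCamera` are hypothetical names, binder and death notification are left out, and "wrapping" the app's camera is modeled as creating a new object with the same id.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for a camera handle; not the Android Camera class.
struct FakeCamera {
    explicit FakeCamera(int cameraId) : id(cameraId), locked(false) {}
    int id;
    bool locked;
};

// Illustrative flag bit, named after FLAGS_HOT_CAMERA in the snippet above.
const int kFlagHotCamera = 1 << 1;

struct CameraSourceSketch {
    std::shared_ptr<FakeCamera> camera;
    bool hasRecordingProxy = false;
    int flags = 0;

    // fromApp == nullptr: open camera `cameraId` ourselves.
    // fromApp != nullptr: build a new local object around the app's camera.
    void acquire(const std::shared_ptr<FakeCamera>& fromApp, int cameraId) {
        if (!fromApp) {
            camera = std::make_shared<FakeCamera>(cameraId);
            flags &= ~kFlagHotCamera;
        } else {
            camera = std::make_shared<FakeCamera>(fromApp->id);
            hasRecordingProxy = true;  // kept for startRecording later
            flags |= kFlagHotCamera;
        }
        assert(camera != nullptr);
        camera->locked = true;  // both paths end by locking the camera
    }

    bool isHot() const { return (flags & kFlagHotCamera) != 0; }
};
```

In this model the recording proxy is kept only on the path where the app supplied the camera, which matches the branch that sets FLAGS_HOT_CAMERA in the snippet.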

5) Code snippet 5

status_t CameraSource::startCameraRecording() {

ALOGV("startCameraRecording");

// Reset the identity to the current thread because media server owns the

// camera and recording is started by the applications. The applications

// will connect to the camera in ICameraRecordingProxy::startRecording.

int64_t token = IPCThreadState::self()->clearCallingIdentity();

status_t err;

if (mNumInputBuffers > 0) {

err = mCamera->sendCommand(

CAMERA_CMD_SET_VIDEO_BUFFER_COUNT, mNumInputBuffers, 0);

}

err = OK;

if (mCameraFlags & FLAGS_HOT_CAMERA) { // FLAGS_HOT_CAMERA was set earlier, so this branch is taken

mCamera->unlock();

mCamera.clear();

// Start recording on the camera's local side directly through the recording proxy

if ((err = mCameraRecordingProxy->startRecording(

new ProxyListener(this))) != OK) {

}

} else {

}

IPCThreadState::self()->restoreCallingIdentity(token);

return err;

}

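What matters in the code above is the listener created when startRecording() is called, new ProxyListener(this). It is passed to the local Camera object, and when a frame becomes available it tells MediaRecorder that a frame is ready to be encoded.

6) Code snippet 6: MediaRecorder registers the frame-available listener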

class ProxyListener: public BnCameraRecordingProxyListener {

public:

ProxyListener(const sp<CameraSource>& source);

virtual void dataCallbackTimestamp(int64_t timestampUs, int32_t msgType,

const sp<IMemory> &data);

private:

sp<CameraSource> mSource;

};

//camera.cpp

status_t Camera::RecordingProxy::startRecording(const sp<ICameraRecordingProxyListener>& listener)

{

ALOGV("RecordingProxy::startRecording");

mCamera->setRecordingProxyListener(listener);

mCamera->reconnect();

return mCamera->startRecording();

}

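The frame listener is registered when recording starts, in these steps:

- 1. setRecordingProxyListener stores the listener in the mRecordingProxyListener member.

- 2. Reconnect to CameraService (the connection is dropped when preview stops, which happens at the moment of switching).

- 3. Start recording through the ICamera proxy.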
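The three steps can be replayed with plain C++ objects to make the ordering explicit. This is a sketch, not the Android classes: `FrameListener`, `CountingListener`, `CameraSketch`, and `RecordingProxySketch` are hypothetical stand-ins, and the rule that recording fails without a connection is an assumption of the model.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <vector>

// Hypothetical listener interface, standing in for ICameraRecordingProxyListener.
struct FrameListener {
    virtual ~FrameListener() {}
    virtual void onFrame(std::int64_t timestampUs) = 0;
};

// Trivial listener that only counts the frames it receives.
struct CountingListener : FrameListener {
    int frames = 0;
    void onFrame(std::int64_t) override { ++frames; }
};

// Hypothetical camera; it records the calls it receives so the order is visible.
class CameraSketch {
public:
    void setRecordingProxyListener(const std::shared_ptr<FrameListener>& l) {
        mListener = l;
        calls.push_back("setRecordingProxyListener");
    }
    void reconnect() {
        mConnected = true;
        calls.push_back("reconnect");
    }
    bool startRecording() {
        if (!mConnected) return false;  // model assumption: needs a connection
        calls.push_back("startRecording");
        return true;
    }
    std::vector<std::string> calls;

private:
    std::shared_ptr<FrameListener> mListener;
    bool mConnected = false;
};

// Mirrors the three steps of RecordingProxy::startRecording().
class RecordingProxySketch {
public:
    explicit RecordingProxySketch(const std::shared_ptr<CameraSketch>& camera)
        : mCamera(camera) {
        assert(mCamera != nullptr);
    }

    bool startRecording(const std::shared_ptr<FrameListener>& listener) {
        mCamera->setRecordingProxyListener(listener);  // step 1: register
        mCamera->reconnect();                          // step 2: reconnect
        return mCamera->startRecording();              // step 3: start
    }

private:
    std::shared_ptr<CameraSketch> mCamera;
};
```

Registering the listener before starting means no frame can arrive while the listener slot is still empty.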

IV. Interim summary

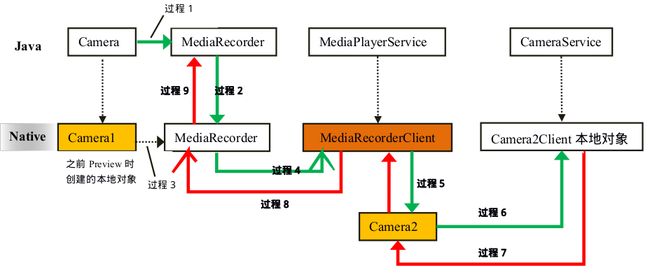

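At this point the basic flow of how Camera records through MediaRecorder is clear. I drew a flow chart of it, which covers roughly the following 9 steps.

- Step 1: The user taps record in the UI, or the app is in recording-preview mode, and a Java-layer MediaRecorder object is created.

- Step 2: The Java MediaRecorder object calls the JNI method native_setup, which creates a native MediaRecorder object. During construction it connects to MediaPlayerService, obtains a MediaRecorderClient proxy over anonymous binder, and stores it in the member variable mMediaRecorder.

- Step 3: When the Java-layer Camera object is passed to the native MediaRecorder layer, native methods can retrieve the local Camera object and the ICamera proxy. What is retrieved here is the ICamera proxy and the RecordingProxy local object.

- Step 4: The ICamera proxy and the RecordingProxy local object are passed to the MediaRecorderClient object on the MediaPlayerService side. There the ICamera is a newly created ICamera proxy, and RecordingProxy arrives as a proxy.

- Step 5: From the new ICamera proxy and the RecordingProxy proxy obtained in step 4, a new local Camera object, Camera2, is created, and the recording frame listener is registered with Camera2.

- Step 6: Recording is started (startRecording).

- Step 7: When a recording frame is available, the Camera2 local object residing in MediaRecorderClient is notified to take the frame. Camera2 in turn informs the MediaRecorderClient object through the registered frame listener, and MediaRecorderClient encodes the frame.

- Steps 8 and 9: Callbacks deliver messages to the application.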

V. Creating the BufferQueue for camera video

status_t StreamingProcessor::updateRecordingStream(const Parameters &params) {

ATRACE_CALL();

status_t res;

Mutex::Autolock m(mMutex);

sp<CameraDeviceBase> device = mDevice.promote();

//----------------

bool newConsumer = false;

if (mRecordingConsumer == 0) {

ALOGV("%s: Camera %d: Creating recording consumer with %zu + 1 "

"consumer-side buffers", __FUNCTION__, mId, mRecordingHeapCount);

// Create CPU buffer queue endpoint. We need one more buffer here so that we can

// always acquire and free a buffer when the heap is full; otherwise the consumer

// will have buffers in flight we'll never clear out.

sp<IGraphicBufferProducer> producer;

sp<IGraphicBufferConsumer> consumer;

// Create the BufferQueue and get both the producer and the consumer objects.

BufferQueue::createBufferQueue(&producer, &consumer);

// Note the buffer usage set below, GRALLOC_USAGE_HW_VIDEO_ENCODER; it is

// used later by MediaRecorder.

mRecordingConsumer = new BufferItemConsumer(consumer,

GRALLOC_USAGE_HW_VIDEO_ENCODER,

mRecordingHeapCount + 1);

mRecordingConsumer->setFrameAvailableListener(this);

mRecordingConsumer->setName(String8("Camera2-RecordingConsumer"));

mRecordingWindow = new Surface(producer);

newConsumer = true;

// Allocate memory later, since we don't know buffer size until receipt

}

// update-related code not shown ----

// Note that the pixel format of the video recording buffers is CAMERA2_HAL_PIXEL_FORMAT_OPAQUE

if (mRecordingStreamId == NO_STREAM) {

mRecordingFrameCount = 0;

res = device->createStream(mRecordingWindow,

params.videoWidth, params.videoHeight,

CAMERA2_HAL_PIXEL_FORMAT_OPAQUE, &mRecordingStreamId);

}

return OK;

}

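It mainly does the following.

- 1. Recording frames do not need to be displayed, so a BufferQueue is created locally inside CameraService. The producer and consumer obtained here are both local; the BufferQueue only keeps an IGraphicBufferAlloc proxy, mAllocator, which is used solely to allocate buffers.

- 2. StreamingProcessor.cpp implements the FrameAvailableListener method onFrameAvailable(), and registers itself with the BufferQueue through setFrameAvailableListener.

- 3. A Surface is created from the producer and handed to Camera3Device, which requests recording buffers through it.

- 4. If the parameters have changed or a video stream already exists, the stream is deleted or updated. If no video stream exists yet, one is created and its stream information is set from the parameters.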
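The producer/consumer bookkeeping and the "+ 1" in `mRecordingHeapCount + 1` can be illustrated with a toy queue. This is a sketch under stated assumptions: `ToyBufferQueue` is a hypothetical class that only tracks frame ids, not graphic buffers, and it is not the BufferQueue API.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

// Toy model of a buffer queue with a consumer-side listener. It mimics only
// the queue/acquire/release counting, nothing else.
class ToyBufferQueue {
public:
    explicit ToyBufferQueue(std::size_t maxAcquired) : mMaxAcquired(maxAcquired) {
        assert(maxAcquired > 0);
    }

    void setFrameAvailableListener(std::function<void()> listener) {
        mListener = std::move(listener);
    }

    // Producer side: queue a filled buffer and notify the consumer.
    void queueBuffer(int frameId) {
        mQueued.push_back(frameId);
        if (mListener) mListener();
    }

    // Consumer side: fails when nothing is queued or the consumer already
    // holds its maximum number of buffers.
    bool acquireBuffer(int* frameId) {
        if (mQueued.empty() || mAcquired >= mMaxAcquired) return false;
        *frameId = mQueued.front();
        mQueued.pop_front();
        ++mAcquired;
        return true;
    }

    // Consumer side: give one held buffer back.
    void releaseBuffer() {
        if (mAcquired > 0) --mAcquired;
    }

private:
    std::size_t mMaxAcquired;
    std::size_t mAcquired = 0;
    std::deque<int> mQueued;
    std::function<void()> mListener;
};
```

With a heap of N frames in flight and a limit of N + 1, the consumer can still acquire one more frame and release it right away, which is the situation the comment in the snippet describes.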

VI. When a recording frame becomes available

1. onFrameAvailable()

void StreamingProcessor::onFrameAvailable(const BufferItem& /*item*/) {

ATRACE_CALL();

Mutex::Autolock l(mMutex);

if (!mRecordingFrameAvailable) {

mRecordingFrameAvailable = true;

mRecordingFrameAvailableSignal.signal();

}

}

This function is called after a video buffer has been queued. As the code shows, it wakes the StreamingProcessor main thread.

2. The StreamingProcessor thread loop

bool StreamingProcessor::threadLoop() {

status_t res;

{

Mutex::Autolock l(mMutex);

while (!mRecordingFrameAvailable) {

// The thread was blocked here; once a frame is available it is woken from this wait.

res = mRecordingFrameAvailableSignal.waitRelative(

mMutex, kWaitDuration);

if (res == TIMED_OUT) return true;

}

mRecordingFrameAvailable = false;

}

do {

res = processRecordingFrame(); // further processing

} while (res == OK);

return true;

}

So it turns out that the StreamingProcessor main thread serves recording only; the preview stream merely uses a few of its methods.
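The handshake between onFrameAvailable() and threadLoop() is a flag guarded by a mutex plus a condition variable with a timed wait. Here is a minimal sketch using the C++ standard library instead of Android's Mutex and Condition. `FrameWaiter` is a hypothetical class, and the caller-supplied timeout plays the role of kWaitDuration.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

class FrameWaiter {
public:
    // Producer side, like onFrameAvailable(): signal only when the flag
    // goes from false to true, so repeated notifications coalesce.
    void onFrameAvailable() {
        std::lock_guard<std::mutex> lock(mMutex);
        if (!mFrameAvailable) {
            mFrameAvailable = true;
            mSignal.notify_one();
        }
    }

    // Consumer side, like one pass of threadLoop(): returns false on
    // timeout, otherwise clears the flag and returns true.
    bool waitForFrame(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mMutex);
        if (!mSignal.wait_for(lock, timeout, [this] { return mFrameAvailable; })) {
            return false;
        }
        assert(mFrameAvailable);
        mFrameAvailable = false;
        return true;
    }

private:
    std::mutex mMutex;
    std::condition_variable mSignal;
    bool mFrameAvailable = false;
};
```

Because the flag is a single boolean, two notifications before a wake-up produce one wake-up. That is why the original loop keeps calling processRecordingFrame() until it stops returning OK.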

3. Sending the frame-available message to the local Camera object

status_t StreamingProcessor::processRecordingFrame() {

ATRACE_CALL();

status_t res;

sp<Camera2Heap> recordingHeap;

size_t heapIdx = 0;

nsecs_t timestamp;

sp<Camera2Client> client = mClient.promote();

BufferItemConsumer::BufferItem imgBuffer;

// Acquire a buffer to consume, i.e. hand it to MediaRecorder for encoding

res = mRecordingConsumer->acquireBuffer(&imgBuffer, 0);

//----------------------------

// Call outside locked parameters to allow re-entrancy from notification

Camera2Client::SharedCameraCallbacks::Lock l(client->mSharedCameraCallbacks);

if (l.mRemoteCallback != 0) {

// Invoke the callback to notify the local Camera object.

l.mRemoteCallback->dataCallbackTimestamp(timestamp,

CAMERA_MSG_VIDEO_FRAME,

recordingHeap->mBuffers[heapIdx]);

} else {

ALOGW("%s: Camera %d: Remote callback gone", __FUNCTION__, mId);

}

return OK;

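As seen earlier, at run time there are two objects of type ICameraClient: a proxy held in CameraService and the local object held by the local Camera object. Here the proxy tells the local object to fetch a frame. Note that the message sent is CAMERA_MSG_VIDEO_FRAME.

4. The local Camera object forwards the message to MediaRecorder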

void Camera::dataCallbackTimestamp(nsecs_t timestamp, int32_t msgType, const sp<IMemory>& dataPtr)

{

// If recording proxy listener is registered, forward the frame and return.

// The other listener (mListener) is ignored because the receiver needs to

// call releaseRecordingFrame.

sp<ICameraRecordingProxyListener> proxylistener;

{

// mRecordingProxyListener is the listener proxy registered by MediaRecorder

Mutex::Autolock _l(mLock);

proxylistener = mRecordingProxyListener;

}

if (proxylistener != NULL) {

// The buffer is delivered to MediaRecorder for encoding here

proxylistener->dataCallbackTimestamp(timestamp, msgType, dataPtr);

return;

}

// --------- code omitted

}

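At this point the local Camera object calls the frame listener registered by MediaRecorder. After all the groundwork above, this should be easy to follow. MediaRecorder now has frames to work on.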
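The forwarding rule in Camera::dataCallbackTimestamp() is: copy the listener pointer while holding the lock, call it after the lock is dropped, and skip the ordinary listener whenever a recording proxy listener is registered. Below is a rough sketch with standard C++ types. `TimestampListener`, `CountingTimestampListener`, and `ForwardingCameraSketch` are hypothetical, and copying the app listener under the same lock is an assumption of this model.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <mutex>

// Hypothetical listener interface for timestamped frames.
struct TimestampListener {
    virtual ~TimestampListener() {}
    virtual void onFrame(std::int64_t timestamp) = 0;
};

// Listener that remembers how many frames it saw and the last timestamp.
struct CountingTimestampListener : TimestampListener {
    int frames = 0;
    std::int64_t lastTimestamp = -1;
    void onFrame(std::int64_t timestamp) override {
        ++frames;
        lastTimestamp = timestamp;
    }
};

class ForwardingCameraSketch {
public:
    void setListener(const std::shared_ptr<TimestampListener>& l) {
        std::lock_guard<std::mutex> guard(mLock);
        mListener = l;
    }
    void setRecordingProxyListener(const std::shared_ptr<TimestampListener>& l) {
        std::lock_guard<std::mutex> guard(mLock);
        mProxyListener = l;
    }

    void dataCallbackTimestamp(std::int64_t timestamp) {
        std::shared_ptr<TimestampListener> proxy;
        std::shared_ptr<TimestampListener> app;
        {
            // Copy under the lock, call outside it, so a listener that
            // calls back into this object cannot deadlock.
            std::lock_guard<std::mutex> guard(mLock);
            proxy = mProxyListener;
            app = mListener;
        }
        if (proxy) {
            proxy->onFrame(timestamp);  // the recorder gets the frame
            return;                     // the ordinary listener is skipped
        }
        if (app) app->onFrame(timestamp);
    }

private:
    std::mutex mLock;
    std::shared_ptr<TimestampListener> mListener;
    std::shared_ptr<TimestampListener> mProxyListener;
};
```

Once a proxy listener is set, the ordinary listener stops seeing video frames in this model, in line with the comment in the original code that the receiver has to call releaseRecordingFrame.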

VII. Summary

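- 1. At first I assumed preview and video used the same local Camera object. The code shows they are different objects.

- 2. The recording BufferQueue is created inside CameraService and has nothing to do with SurfaceFlinger; it only keeps the IGraphicBufferAlloc proxy mAllocator, which is used to allocate buffers.

- 3. I had not fully understood anonymous binder before. I thought only a local object could be written with writeStrongBinder(), with the receiving side calling readStrongBinder() to get a proxy. In fact a proxy can be passed as well. The code then takes a different path, and the kernel creates a new binder_ref entry and returns it to the other side. When MediaRecorder sets the camera, as shown below, it passes the ICamera proxy in exactly this way.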

status_t setCamera(const sp<ICamera>& camera, const sp<ICameraRecordingProxy>& proxy)

{

ALOGV("setCamera(%p,%p)", camera.get(), proxy.get());

Parcel data, reply;

data.writeInterfaceToken(IMediaRecorder::getInterfaceDescriptor());

// camera->asBinder() is the ICamera proxy

data.writeStrongBinder(camera->asBinder());

data.writeStrongBinder(proxy->asBinder());

remote()->transact(SET_CAMERA, data, &reply);

return reply.readInt32();

}
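The idea in point 3, that forwarding a proxy gives the receiver its own reference to the same underlying object, can be pictured with a toy handle table. This is only a model for intuition: `ToyNode`, `ToyProcess`, and `forwardProxy` are hypothetical and do not reflect the binder driver's actual data structures or API.

```cpp
#include <cassert>
#include <map>

// Stands in for the real object living in its home process.
struct ToyNode { int id; };

// Per-process table mapping small integer handles to nodes.
class ToyProcess {
public:
    // Returns the existing handle for `node`, or allocates a new one.
    int refFor(ToyNode* node) {
        assert(node != nullptr);
        for (const auto& entry : mRefs) {
            if (entry.second == node) return entry.first;
        }
        int handle = mNextHandle++;
        mRefs[handle] = node;
        return handle;
    }

    // Resolves a handle in this process, or nullptr if it is unknown.
    ToyNode* lookup(int handle) const {
        auto it = mRefs.find(handle);
        return it == mRefs.end() ? nullptr : it->second;
    }

private:
    std::map<int, ToyNode*> mRefs;
    int mNextHandle = 1;
};

// Passing a proxy: resolve the sender's handle to the node, then give the
// receiver its own reference to that same node. Returns -1 on a bad handle.
int forwardProxy(const ToyProcess& sender, int senderHandle, ToyProcess& receiver) {
    ToyNode* node = sender.lookup(senderHandle);
    return node ? receiver.refFor(node) : -1;
}
```

The handle value is meaningful only inside one process's table, so the sender's and receiver's handles for the same node may differ.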