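Oracle 11.2.0.4 RAC Installation on RHEL 6.8

This post records the complete process of installing Oracle 11.2.0.4 RAC on RHEL 6.8. The key to an Oracle RAC installation is preparing the installation environment beforehand, especially configuring the shared disks.

The engineer performing the installation should prepare a quick installation document in advance. It guides the installation work and should anticipate, as far as possible, the problems that may come up during installation and how to solve them.

My quick installation document: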

---------------------------------------------------------------------------------------------

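I. Checking host information

1. Check the hostname: uname -a

2. Check the OS release: cat /etc/redhat-release

3. Check the host IP addresses: ifconfig -a

4. Check hosts resolution: cat /etc/hosts

5. Check the host time: date

6. Check the host disks: df -h

7. Check the storage (multipath) configuration: multipath -l

8. Check the host firewall: service iptables status

9. Check the SELinux configuration: cat /etc/selinux/config

10. Check the storage device timeout: cat /sys/block/sdb/device/timeout

11. Check the hardware clock: hwclock --show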

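II. Changes that may be needed to the basic host settings

1. Set the host time: date -s 14:20:50

2. Write the system time to the hardware clock: clock --systohc

Set the system time from the hardware clock: clock --hctosys

3. Stop the firewall: service iptables stop

Disable the firewall at boot: chkconfig iptables off

Confirm the firewall status: service iptables status

Confirm the firewall boot setting: chkconfig --list|grep iptables

4. Delete the host NTP configuration file: rm -rf /etc/ntp.conf

5. Change the SELinux setting: sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Confirm the SELinux change: cat /etc/selinux/config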
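
A slightly more defensive variant of the SELinux edit can be sketched as a shell function. This is only a sketch: the name disable_selinux is my own, and it assumes the stock layout of /etc/selinux/config with a single SELINUX= line. It anchors on the start of the line, so the comment lines and SELINUXTYPE= are left untouched.

```shell
#!/bin/bash
# Hypothetical helper (not part of any package): set SELINUX=disabled
# in the given config file, keeping a backup copy next to it.
disable_selinux() {
    local cfg="${1:-/etc/selinux/config}"
    cp -p "$cfg" "$cfg.bak" || return 1
    # "^SELINUX=" cannot match "SELINUXTYPE=" or the "#" comment lines.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Print the resulting setting so the change can be confirmed at once.
    grep '^SELINUX=' "$cfg"
}

# Usage (as root):
#   disable_selinux
```

The change in the file only takes effect after a reboot; setenforce 0 switches a running system to permissive mode in the meantime.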

III. Preparing the basic host environment for RAC

1. Check the RPM packages required for the RAC installation

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \

compat-libstdc++-33 \

elfutils-libelf \

elfutils-libelf-devel \

gcc \

gcc-c++ \

glibc \

glibc-common \

glibc-devel \

glibc-headers \

ksh \

libaio \

libaio-devel \

libgcc \

libstdc++ \

libstdc++-devel \

make \

sysstat \

unixODBC \

unixODBC-devel \

kernel-headers \

glibc-headers \

mpfr \

ppl \

cpp \

cloog-ppl \

gcc-c++ \

pdksh \

cvuqdisk \

compat-libcap1
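
To see only what still has to be installed, the output of the rpm -q command above can be filtered. A minimal sketch, assuming rpm reports a missing package as "package NAME is not installed" (which is what it prints on this system); the function name missing_packages is my own.

```shell
#!/bin/bash
# Read `rpm -q` output on stdin and print only the names of the packages
# that rpm reports as "package NAME is not installed".
missing_packages() {
    awk '$1 == "package" && /is not installed$/ { print $2 }'
}

# Usage:
#   rpm -q binutils ksh libaio-devel | missing_packages
```

Keep a space before every trailing backslash in the long rpm command. Without it, two package names are joined into one and rpm queries a package that does not exist.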

--Mount the ISO image: mount -o loop -t iso9660 /home/software/oracle_linux_6.8.iso /mnt/

--Configure a local yum repository

echo "

[local]

name = local

baseurl=file:///mnt/

enabled = 1

gpgcheck = 0

gpgkey =file:///mnt/RPM-GPG-KEY-oracle

" > /etc/yum.repos.d/local.repo

--Install the missing RPM packages with yum

yum clean all

yum list

yum -y install package_name

2. Edit the hosts file

echo "

192.168.248.38 rac2

172.25.25.2 racpriv2

192.168.248.39 racvip2

192.168.248.35 rac1

172.25.25.1 racpriv1

192.168.248.36 racvip1

192.168.248.37 racscanip

" >>/etc/hosts

--Verify hosts resolution

ping rac2

ping rac1

ping racpriv2

ping racpriv1

ping racvip1

ping racvip2

ping racscanip
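
Before pinging, it can help to confirm that every name really has an entry in the hosts file on each node. The following is a rough sketch, not a standard tool: check_hosts_entries is a name I made up, and it only looks at a hosts-format file, it does not test the network.

```shell
#!/bin/bash
# Hypothetical helper: for each name given, report whether a hosts-format
# file contains it in a non-comment line. Returns non-zero if any name
# is missing.
check_hosts_entries() {
    local file="$1"; shift
    local name rc=0
    for name in "$@"; do
        if awk -v n="$name" '
                /^[ \t]*#/ { next }                      # skip comment lines
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit (found ? 0 : 1) }' "$file"; then
            echo "ok      $name"
        else
            echo "MISSING $name"
            rc=1
        fi
    done
    return $rc
}

# Usage:
#   check_hosts_entries /etc/hosts rac1 rac2 racpriv1 racpriv2 racvip1 racvip2 racscanip
```

The VIP and SCAN addresses are not expected to answer ping before GI is installed, since Clusterware brings them up, so a hosts-file check is the more meaningful test for those names at this stage.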

3. Set the operating system kernel parameters required for the RAC installation

echo "

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 1

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 8192

kernel.shmmax = 4398046511104

kernel.shmall = 1073741824

fs.file-max = 6815744

kernel.msgmni = 2878

kernel.sem = 250 32000 100 142

kernel.shmmni = 4096

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max =1048576

fs.aio-max-nr = 3145728

net.ipv4.ip_local_port_range = 9000 65000

" >> /etc/sysctl.conf

Apply the modified kernel parameters: sysctl -p
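
The two shared memory values above are related: kernel.shmmax is in bytes, while kernel.shmall is in pages. With a 4096-byte page, 4398046511104 bytes (4 TiB) is exactly 1073741824 pages. A small sketch of that arithmetic, with a function name of my own choosing:

```shell
#!/bin/bash
# Convert a shmmax value (bytes) into the matching shmall value (pages).
# The page size defaults to what the running system reports.
shmall_for() {
    local shmmax_bytes="$1"
    local page_size="${2:-$(getconf PAGE_SIZE)}"
    echo $(( shmmax_bytes / page_size ))
}

# Usage:
#   shmall_for 4398046511104 4096
```

This only shows how the two numbers fit together; the right values for a given server depend on its memory and the planned SGA size.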

4. Adjust the operating system resource limits for the RAC administrative users grid and oracle

echo "

oracle soft nofile 131072

oracle hard nofile 131072

oracle soft nproc 131072

oracle hard nproc 131072

oracle soft core unlimited

oracle hard core unlimited

oracle soft memlock 50000000

oracle hard memlock 50000000

grid soft nofile 131072

grid hard nofile 131072

grid soft nproc 131072

grid hard nproc 131072

grid soft core unlimited

grid hard core unlimited

grid soft memlock 50000000

grid hard memlock 50000000

" >> /etc/security/limits.conf

--Verify the user resource limit changes

cat /etc/security/limits.conf
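
Since the oracle and grid entries are identical apart from the user name, they can also be generated instead of typed. A sketch under that assumption, using the same values as above; gen_limits is a hypothetical name.

```shell
#!/bin/bash
# Print soft and hard limits.conf entries for every user given as an
# argument, using the values from the list above.
gen_limits() {
    local user item
    for user in "$@"; do
        for item in "nofile 131072" "nproc 131072" "core unlimited" "memlock 50000000"; do
            printf '%s soft %s\n' "$user" "$item"
            printf '%s hard %s\n' "$user" "$item"
        done
    done
}

# Usage (as root):
#   gen_limits oracle grid >> /etc/security/limits.conf
```

The limits apply to new login sessions, so once the users exist they can be checked with something like su - grid -c 'ulimit -n'.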

5. Edit the multipath configuration file

vi /etc/multipath.conf

--Example

# multipath.conf written by anaconda

#defaults {

# user_friendly_names yes

#}

blacklist {

devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

devnode "^hd[a-z]"

devnode "^dcssblk[0-9]*"

device {

vendor "DGC"

product "LUNZ"

}

device {

vendor "IBM"

product "S/390.*"

}

# don't count normal SATA devices as multipaths

device {

vendor "ATA"

}

# don't count 3ware devices as multipaths

device {

vendor "3ware"

}

device {

vendor "AMCC"

}

# nor highpoint devices

device {

vendor "HPT"

}

wwid "361866da06426f3001f2b425402d3d849"

device {

vendor PLDS

product DVD-ROM_DS-8DBSH

}

wwid "*"

}

blacklist_exceptions {

wwid "3600a098000a1157d000002d25798865a"

wwid "3600a098000a1157d000002d457988679"

wwid "3600a098000a1157d000002cf57988639"

wwid "3600a098000a1157d000002de579886d4"

wwid "3600a098000a1157d000002e0579886e9"

wwid "3600a098000a1157d000002d65798868a"

wwid "3600a098000a1157d000002d85798869a"

wwid "3600a098000a1157d000002da579886ac"

wwid "3600a098000a1157d000002dc579886bf"

}

multipaths {

multipath {

uid 0

gid 0

wwid "3600a098000a1157d000002d25798865a"

mode 0600

}

multipath {

uid 600

gid 600

alias ocr1

wwid "3600a098000a1157d000002d457988679"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias arch

wwid "3600a098000a1157d000002cf57988639"

mode 0600

path_grouping_policy failover

}

multipath {

uid 0

gid 0

wwid "3600a098000a1157d000002de579886d4"

mode 0600

}

multipath {

uid 600

gid 600

alias data3

wwid "3600a098000a1157d000002e0579886e9"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias ocr2

wwid "3600a098000a1157d000002d65798868a"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias ocr3

wwid "3600a098000a1157d000002d85798869a"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias data1

wwid "3600a098000a1157d000002da579886ac"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias data2

wwid "3600a098000a1157d000002dc579886bf"

mode 0600

path_grouping_policy failover

}

}

--Restart the multipathd service so the multipath configuration changes take effect

service multipathd restart

--Edit the udev rules file

vi /etc/udev/rules.d/12-mulitpath-privs.rules

ENV{DM_NAME}=="arch*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

ENV{DM_NAME}=="ocr*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

ENV{DM_NAME}=="data*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

--Restart udev (run this after the users have been created)

start_udev

--Confirm the storage configuration

multipath -ll

ls -l /dev/mapper/*
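
The udev rules match on the multipath alias (arch*, ocr*, data*), so a quick cross-check is to list which aliases multipath.conf actually defines. The following is a rough sketch that assumes the simple one-keyword-per-line layout of the example above; list_multipath_aliases is my own name, not a multipath tool.

```shell
#!/bin/bash
# Print "alias wwid" for every multipath { ... } block that has an alias.
# Assumes one keyword per line, as in the example configuration above.
list_multipath_aliases() {
    awk '
        $1 == "multipath" && $2 == "{" { in_mp = 1; alias = ""; wwid = ""; next }
        in_mp && $1 == "alias" { alias = $2 }
        in_mp && $1 == "wwid"  { gsub(/"/, "", $2); wwid = $2 }
        in_mp && $1 == "}" {
            if (alias != "") print alias, wwid
            in_mp = 0
        }
    ' "${1:-/etc/multipath.conf}"
}

# Usage:
#   list_multipath_aliases /etc/multipath.conf
```

Every alias printed should then show up under /dev/mapper with owner grid and group asmadmin once udev has been restarted.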

IV. Creating the RAC installation environment

1. Create the groups and users

groupadd -g 600 asmadmin

groupadd -g 601 oinstall

groupadd -g 602 asmoper

groupadd -g 603 dba

groupadd -g 604 asmdba

useradd -u 500 -g oinstall -G dba,asmadmin,asmdba oracle

useradd -u 600 -g oinstall -G asmdba,asmadmin,asmoper grid

2. Set the user passwords

passwd grid

passwd oracle

3. Create the installation directories and set their permissions

mkdir -p /u01/oracle/app

mkdir -p /u01/oracle/app/grid/home

mkdir -p /u01/oracle/app/grid/base

mkdir -p /u01/oracle/app/oracle/

mkdir -p /u01/oracle/app/oracle/product/11.2.0.4/db

chown -R grid:oinstall /u01/oracle/app/grid

chown -R oracle:oinstall /u01/oracle/app/oracle

chmod -R 775 /u01/oracle/app

4. Set the user environment variables

--Environment variables for the grid user; note ORACLE_SID

cat >>/home/grid/.bash_profile <<'EOF'

export TEMP=/tmp

export TMPDIR=/tmp

export ORACLE_TERM=xterm

export THREADS_FLAG=native

export NLS_LANG='AMERICAN_AMERICA.ZHS16GBK'

export NLS_DATE_FORMAT='yyyy-mm-dd hh24:mi:ss'

export NLS_TIMESTAMP_TZ_FORMAT='yyyy-mm-dd hh24:mi:ss.ff'

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/oracle/app/grid/base

export ORACLE_HOME=/u01/oracle/app/grid/home

export PATH=$ORACLE_HOME/bin:$PATH

EOF

--Environment variables for the oracle user; note ORACLE_SID

cat >>/home/oracle/.bash_profile <<'EOF'

export TEMP=/tmp

export TMPDIR=/tmp

export ORACLE_TERM=xterm

export THREADS_FLAG=native

export NLS_LANG='AMERICAN_AMERICA.ZHS16GBK'

export NLS_DATE_FORMAT='yyyy-mm-dd hh24:mi:ss'

export NLS_TIMESTAMP_TZ_FORMAT='yyyy-mm-dd hh24:mi:ss.ff'

export ORACLE_BASE=/u01/oracle/app/oracle

export ORACLE_HOME=/u01/oracle/app/oracle/product/11.2.0.4/db

export ORACLE_SID=orcl1

export PATH=$PATH:$ORACLE_HOME/bin

EOF

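V. Installing GI

--Note the grid administrative group choices during the GI installation:

Oracle ASM administrator group: asmadmin

Oracle ASM DBA group: asmdba

Oracle ASM operator group: asmoper

--After GI is installed, check the CRS status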

crsctl check crs

crs_stat -t -v

olsnodes -n

ocrcheck

crsctl query css votedisk

ps -ef | grep lsnr | grep -v 'grep' | grep -v 'ocfs' | awk '{print $9}'
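
For a scripted check, the output of crsctl check crs can be reduced to a single pass or fail. This is a sketch resting on an assumption: that each status line has the form "CRS-nnnn: ... is online" when the daemon is healthy. The function name crs_all_online is my own.

```shell
#!/bin/bash
# Read `crsctl check crs` output on stdin. Succeed only if there is at
# least one "CRS-nnnn:" line and every such line ends with "is online".
crs_all_online() {
    awk '
        /^CRS-[0-9]+:/ { total++; if ($0 ~ /is online$/) online++ }
        END { exit ((total > 0 && online == total) ? 0 : 1) }'
}

# Usage (as grid):
#   crsctl check crs | crs_all_online && echo "CRS stack is online"
```

Run it on every node, since crsctl check crs only reports on the local stack.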

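--After GI is installed, create the database ASM disk groups DATADG and ARCHDG

VI. Installing the Oracle database software

VII. Creating the database

--Note the global database name

--Note the archive space sizing

--Note the database character set

--Note the number of database processes

--Note the redo log groups and their sizes

--After the database is created, check the cluster status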

crsctl status resource -t

crs_stat -t

--Check the listener status

lsnrctl status

--Check the session language and territory and the database character set

select userenv('language') from dual;

--Check the database open mode (read/write)

select name,dbid,open_mode from v$database;

--Check the instance status

select instance_name,status from v$instance;

VIII. Installing the cluster patch set

--Check the OPatch version

Run as both grid and oracle

$ORACLE_HOME/OPatch/opatch lsinventory

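--Unpack the newer OPatch archive (list its contents, back up the existing OPatch directory, then extract into $ORACLE_HOME)

unzip -v text.zip

tar -cvf opatch.tar OPatch/

unzip text.zip -d $ORACLE_HOME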

--Unzip the patch set into the chosen directory

/home/software/18139609

--Create the OCM response file

/u01/oracle/app/grid/home/OPatch/ocm/bin/emocmrsp -no_banner -output /home/software/18139609/ocm.rsp

--Install the patch set fully automatically; cd to the OPatch directory first and run as root

./opatch auto /home/software/18139609 -ocmrf /home/software/18139609/ocm.rsp

--Check that the patch set was installed

opatch lsinventory

IX. Configure the database TNS entries; appending them is enough. Sample:

echo "

RAC1 =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2)(PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = orcl )

)

)

RAC2 =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1)(PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = orcl )

)

)

" >>$ORACLE_HOME/network/admin/tnsnames.ora

--Test after the configuration is done

tnsping rac1

tnsping rac2
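
The two entries differ only in the alias and the order of the hosts, so they can be generated. A minimal sketch, assuming port 1521 on every host as in the sample; gen_tns_entry is a hypothetical name. It indents the continuation lines, which keeps each entry unambiguous when several are appended to the same tnsnames.ora.

```shell
#!/bin/bash
# Print one tnsnames.ora entry.
# Arguments: alias, service name, then one or more hosts in connect order.
gen_tns_entry() {
    local alias="$1" service="$2"; shift 2
    local host
    printf '%s =\n  (DESCRIPTION =\n    (ADDRESS_LIST =\n' "$alias"
    for host in "$@"; do
        printf '      (ADDRESS = (PROTOCOL = TCP)(HOST = %s)(PORT = 1521))\n' "$host"
    done
    printf '    )\n    (CONNECT_DATA =\n      (SERVICE_NAME = %s)\n    )\n  )\n' "$service"
}

# Usage (as oracle):
#   gen_tns_entry RAC1 orcl rac1 rac2 >> $ORACLE_HOME/network/admin/tnsnames.ora
#   gen_tns_entry RAC2 orcl rac2 rac1 >> $ORACLE_HOME/network/admin/tnsnames.ora
```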

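X. Adjust the database parameters according to the parameter change requirements

XI. Deploy the database health-check scripts

XII. Deploy the database RMAN backup scripts

--When configuring the backup directory, remember to edit /etc/fstab: the last two fields of the mount entry (dump and fsck pass) must be 0 0

XIII. Data migration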
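
The fstab requirement can be checked mechanically. A sketch, assuming a standard six-field fstab line; the function name and the /backup mount point in the usage line are made up for illustration.

```shell
#!/bin/bash
# Succeed only if the fstab entry for the given mount point exists and
# has "0 0" in its last two fields (dump flag and fsck pass number).
fstab_check_flags() {
    local mount_point="$1" file="${2:-/etc/fstab}"
    awk -v mp="$mount_point" '
        /^[ \t]*#/ { next }                               # skip comments
        $2 == mp { found = 1; ok = ($5 == "0" && $6 == "0") }
        END { exit ((found && ok) ? 0 : 1) }' "$file"
}

# Usage:
#   fstab_check_flags /backup || echo "check the /backup entry in /etc/fstab"
```

With 0 0 the filesystem is neither dumped nor checked by fsck at boot, so a problem on the backup volume does not hold up the boot of a database node.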

-----------------------------------------------------------------------------------------------

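Below is a complete RAC installation run.

I. Checking host information

Why check the host information? Because the servers were installed by the host engineers, and much of that information needs to be confirmed again by the engineer installing the database. I only trust what I have checked myself.

The installation started at 9:15 on the morning of September 15, 2017, right at the start of the working day. Let's see what time the host thinks it is.

Only one node is shown here, to keep the document from getting too long.

--Check the hostname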

[root@rac1 ~]# hostname

rac1

--Check the operating system version

[root@rac1 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 6.8 (Santiago)

[root@rac1 ~]# ifconfig -a

bond0 Link encap:Ethernet HWaddr 80:18:44:E2:E0:10

inet addr:192.168.248.35 Bcast:192.168.248.255 Mask:255.255.255.0

inet6 addr: fe80::8218:44ff:fee2:e010/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:6105502 errors:0 dropped:20656 overruns:0 frame:0

TX packets:1596568 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8748034785 (8.1 GiB) TX bytes:122397666 (116.7 MiB)

bond1 Link encap:Ethernet HWaddr 80:18:44:E2:E0:11

inet addr:172.25.25.1 Bcast:172.25.25.7 Mask:255.255.255.248

inet6 addr: fe80::8218:44ff:fee2:e011/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:134494 errors:0 dropped:20654 overruns:0 frame:0

TX packets:126816 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:16369705 (15.6 MiB) TX bytes:8128308 (7.7 MiB)

em1 Link encap:Ethernet HWaddr 80:18:44:E2:E0:10

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:6038002 errors:0 dropped:1 overruns:0 frame:0

TX packets:1533057 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8739829271 (8.1 GiB) TX bytes:118316458 (112.8 MiB)

Interrupt:65

em2 Link encap:Ethernet HWaddr 80:18:44:E2:E0:11

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:67122 errors:0 dropped:0 overruns:0 frame:0

TX packets:63547 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8172147 (7.7 MiB) TX bytes:4078386 (3.8 MiB)

Interrupt:66

em3 Link encap:Ethernet HWaddr 80:18:44:E2:E0:12

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:67500 errors:0 dropped:20655 overruns:0 frame:0

TX packets:63511 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8205514 (7.8 MiB) TX bytes:4081208 (3.8 MiB)

Interrupt:67

em4 Link encap:Ethernet HWaddr 80:18:44:E2:E0:13

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:67372 errors:0 dropped:20654 overruns:0 frame:0

TX packets:63269 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8197558 (7.8 MiB) TX bytes:4049922 (3.8 MiB)

Interrupt:68

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:13 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:852 (852.0 b) TX bytes:852 (852.0 b)

virbr0 Link encap:Ethernet HWaddr 52:54:00:53:83:57

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

virbr0-nic Link encap:Ethernet HWaddr 52:54:00:53:83:57

BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:500

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

--Check hosts resolution

[root@rac1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

--Check the operating system time

[root@rac1 ~]# date

Fri Sep 15 17:22:48 CST 2017

[root@rac1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 558G 13G 517G 3% /

tmpfs 63G 72K 63G 1% /dev/shm

/dev/sda1 575M 100M 433M 19% /boot

/dev/sda6 311G 67M 295G 1% /dump

/dev/sda5 105G 60M 100G 1% /u01

[root@rac1 ~]#

Now let's look at the firewall.

[root@rac1 ~]# service iptables status

Table: nat

Chain PREROUTING (policy ACCEPT)

num target prot opt source destination

Chain INPUT (policy ACCEPT)

num target prot opt source destination

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

num target prot opt source destination

1 MASQUERADE tcp -- 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

2 MASQUERADE udp -- 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

3 MASQUERADE all -- 192.168.122.0/24 !192.168.122.0/24

Table: mangle

Chain PREROUTING (policy ACCEPT)

num target prot opt source destination

Chain INPUT (policy ACCEPT)

num target prot opt source destination

Chain FORWARD (policy ACCEPT)

num target prot opt source destination

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

num target prot opt source destination

1 CHECKSUM udp -- 0.0.0.0/0 0.0.0.0/0 udp dpt:68 CHECKSUM fill

Table: filter

Chain INPUT (policy ACCEPT)

num target prot opt source destination

1 ACCEPT udp -- 0.0.0.0/0 0.0.0.0/0 udp dpt:53

2 ACCEPT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:53

3 ACCEPT udp -- 0.0.0.0/0 0.0.0.0/0 udp dpt:67

4 ACCEPT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:67

Chain FORWARD (policy ACCEPT)

num target prot opt source destination

1 ACCEPT all -- 0.0.0.0/0 192.168.122.0/24 state RELATED,ESTABLISHED

2 ACCEPT all -- 192.168.122.0/24 0.0.0.0/0

3 ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

4 REJECT all -- 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

5 REJECT all -- 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

[root@rac1 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=enforcing

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

--Check the storage device timeout

[root@rac1 ~]#

[root@rac1 ~]# cat /sys/block/sdb/device/timeout

30

[root@rac1 ~]#

II. Changes that may be needed to the basic host settings (node rac1 is used as the reference here)

--Set the operating system time

[root@rac1 ~]# date -s 9:27:00

Fri Sep 15 09:27:00 CST 2017

--Turn off the firewall

[root@rac1 ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: nat mangle filte[ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@rac1 ~]# chkconfig iptables off

[root@rac1 ~]# service iptables status

iptables: Firewall is not running.

[root@rac1 ~]# chkconfig --list|grep iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@rac1 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=enforcing

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@rac1 ~]#

[root@rac1 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@rac1 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@rac1 ~]#

--Check which RPM packages are missing from the operating system

[root@rac1 ~]# rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \

> compat-libstdc++-33 \

> elfutils-libelf \

> elfutils-libelf-devel \

> gcc \

> gcc-c++ \

> glibc \

> glibc-common \

> glibc-devel \

> glibc-headers \

> ksh \

> libaio \

> libaio-devel \

> libgcc \

> libstdc++ \

> libstdc++-devel \

> make \

> sysstat \

> unixODBC \

> unixODBC-devel\

> kernel-headers \

> glibc-headers \

> mpfr \

> ppl \

> cpp \

> cloog-ppl \

> gcc-c++ \

> pdksh \

> cvuqdisk \

> compat-libcap1

binutils-2.20.51.0.2-5.44.el6 (x86_64)

package compat-libstdc++-33 is not installed

elfutils-libelf-0.164-2.el6 (x86_64)

elfutils-libelf-devel-0.164-2.el6 (x86_64)

gcc-4.4.7-17.el6 (x86_64)

gcc-c++-4.4.7-17.el6 (x86_64)

glibc-2.12-1.192.el6 (x86_64)

glibc-common-2.12-1.192.el6 (x86_64)

glibc-devel-2.12-1.192.el6 (x86_64)

glibc-headers-2.12-1.192.el6 (x86_64)

package ksh is not installed

libaio-0.3.107-10.el6 (x86_64)

package libaio-devel is not installed

libgcc-4.4.7-17.el6 (x86_64)

libstdc++-4.4.7-17.el6 (x86_64)

libstdc++-devel-4.4.7-17.el6 (x86_64)

make-3.81-23.el6 (x86_64)

sysstat-9.0.4-31.el6 (x86_64)

package unixODBC is not installed

package unixODBC-develkernel-headers is not installed

glibc-headers-2.12-1.192.el6 (x86_64)

mpfr-2.4.1-6.el6 (x86_64)

ppl-0.10.2-11.el6 (x86_64)

cpp-4.4.7-17.el6 (x86_64)

cloog-ppl-0.15.7-1.2.el6 (x86_64)

gcc-c++-4.4.7-17.el6 (x86_64)

package pdksh is not installed

package cvuqdisk is not installed

package compat-libcap1 is not installed

[root@rac1 ~]#

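--A pipe filter can be appended to the command to show only the packages that are not installed; those have to be installed manually. Note that unixODBC-devel\ is typed without a space before the backslash, so rpm queries the non-existent name unixODBC-develkernel-headers; unixODBC-devel and kernel-headers are handled separately below. For example: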

[root@rac2 home]# rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \

> compat-libstdc++-33 \

> elfutils-libelf \

> elfutils-libelf-devel \

> gcc \

> gcc-c++ \

> glibc \

> glibc-common \

> glibc-devel \

> glibc-headers \

> ksh \

> libaio \

> libaio-devel \

> libgcc \

> libstdc++ \

> libstdc++-devel \

> make \

> sysstat \

> unixODBC \

> unixODBC-devel\

> kernel-headers \

> glibc-headers \

> mpfr \

> ppl \

> cpp \

> cloog-ppl \

> gcc-c++ \

> pdksh \

> cvuqdisk \

> compat-libcap1|grep not

package compat-libstdc++-33 is not installed

package ksh is not installed

package libaio-devel is not installed

package unixODBC is not installed

package unixODBC-develkernel-headers is not installed

package pdksh is not installed

package cvuqdisk is not installed

package compat-libcap1 is not installed

[root@rac2 home]#

--Install the RPM packages missing from the operating system

[root@rac1 home]# mkdir software

[root@rac1 home]# ls

oracle linux_6.8.iso p13390677_112040_Linux-x86-64_2of7.zip p18139609_112040_Linux-x86-64.zip p6880880_112000_Linux-x86-64.zip

p13390677_112040_Linux-x86-64_1of7.zip p13390677_112040_Linux-x86-64_3of7.zip p19121551_112040_Linux-x86-64.zip software

[root@rac1 home]# mv p* software/

[root@rac1 home]# ls

oracle linux_6.8.iso software

[root@rac1 home]# mv oracle\ linux_6.8.iso oracle_linux_6.8.iso

[root@rac1 home]# ls

oracle_linux_6.8.iso software

[root@rac1 home]# mv oracle_linux_6.8.iso software/

[root@rac1 home]# ls

software

--Mount the ISO image

[root@rac1 home]# mount -o loop -t iso9660 /home/software/oracle_linux_6.8.iso /mnt/

mount: /home/software/oracle_linux_6.8.iso is write-protected, mounting read-only

[root@rac1 home]# cd /etc/yum.repos.d/

[root@rac1 yum.repos.d]# ls

public-yum-ol6.repo

--Create a local yum repository

[root@rac1 yum.repos.d]# echo "

> [local]

> name = local

> baseurl=file:///mnt/

> enabled = 1

> gpgcheck = 0

> gpgkey =file://mnt/RPM-GPG-KEY-oracle

> " > /etc/yum.repos.d/local.repo

[root@rac1 yum.repos.d]# ls

local.repo public-yum-ol6.repo

[root@rac1 yum.repos.d]# cat local.repo

[local]

name = local

baseurl=file:///mnt/

enabled = 1

gpgcheck = 0

gpgkey =file://mnt/RPM-GPG-KEY-oracle

[root@rac1 yum.repos.d]# yum clean all

Loaded plugins: refresh-packagekit, security, ulninfo

Cleaning repos: local public_ol6_UEKR4 public_ol6_latest

Cleaning up Everything

[root@rac1 yum.repos.d]# yum -y install compat-libstdc++-33

Loaded plugins: refresh-packagekit, security, ulninfo

Setting up Install Process

local | 3.7 kB 00:00 ...

local/primary_db | 3.1 MB 00:00 ...

http://yum.oracle.com/repo/OracleLinux/OL6/UEKR4/x86_64/repodata/repomd.xml: [Errno 14] PYCURL ERROR 6 - "Couldn't resolve host 'yum.oracle.com'"

Trying other mirror.

Error: Cannot retrieve repository metadata (repomd.xml) for repository: public_ol6_UEKR4. Please verify its path and try again

[root@rac1 yum.repos.d]# cd /mnt

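--The internal network has no Internet access, so yum cannot be used here (the public repositories that are still enabled in public-yum-ol6.repo cannot be resolved). To avoid delaying the schedule, the packages are installed with rpm -ivh instead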

[root@rac1 mnt]# cd /mnt/Packages/

[root@rac1 Packages]# rpm -ivh compat-libstdc++-33-3.2.3-69.el6.x86_64.rpm

warning: compat-libstdc++-33-3.2.3-69.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:compat-libstdc++-33 ########################################### [100%]

[root@rac1 Packages]# rpm -ivh ksh-20120801-33.el6.x86_64.rpm

warning: ksh-20120801-33.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:ksh ########################################### [100%]

[root@rac1 Packages]# rpm -ivh libaio-devel-0.3.107-10.el6.x86_64.rpm

warning: libaio-devel-0.3.107-10.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:libaio-devel ########################################### [100%]

[root@rac1 Packages]# rpm -ivh unixODBC-

unixODBC-2.2.14-14.el6.i686.rpm unixODBC-2.2.14-14.el6.x86_64.rpm unixODBC-devel-2.2.14-14.el6.i686.rpm unixODBC-devel-2.2.14-14.el6.x86_64.rpm

[root@rac1 Packages]# rpm -ivh unixODBC-

unixODBC-2.2.14-14.el6.i686.rpm unixODBC-2.2.14-14.el6.x86_64.rpm unixODBC-devel-2.2.14-14.el6.i686.rpm unixODBC-devel-2.2.14-14.el6.x86_64.rpm

[root@rac1 Packages]# rpm -ivh unixODBC-2.2.14-14.el6.x86_64.rpm

warning: unixODBC-2.2.14-14.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:unixODBC ########################################### [100%]

[root@rac1 Packages]# rpm -ivh unixODBC-devel-2.2.14-14.el6.x86_64.rpm

warning: unixODBC-devel-2.2.14-14.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:unixODBC-devel ########################################### [100%]

[root@rac1 Packages]# rpm -ivh kernel-headers-2.6.32-642.el6.x86_64.rpm

warning: kernel-headers-2.6.32-642.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

package kernel-headers-2.6.32-642.el6.x86_64 is already installed

[root@rac1 Packages]# rpm -ivh compat-libcap1-1.10-1.x86_64.rpm

warning: compat-libcap1-1.10-1.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:compat-libcap1 ########################################### [100%]

[root@rac1 Packages]# cd /home/software/

[root@rac1 software]# ls

cvuqdisk-1.0.9-1.rpm p13390677_112040_Linux-x86-64_1of7.zip p13390677_112040_Linux-x86-64_3of7.zip p19121551_112040_Linux-x86-64.zip

oracle_linux_6.8.iso p13390677_112040_Linux-x86-64_2of7.zip p18139609_112040_Linux-x86-64.zip p6880880_112000_Linux-x86-64.zip

[root@rac1 software]#

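The cvuqdisk-1.0.9-1.rpm package can only be installed after the groups and users for oracle and grid have been created; otherwise the installation fails as follows: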

[root@rac1 software]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

Group oinstall not found in /etc/group

Checking for presence of group oinstall in NIS

ypcat: can't get local yp domain: Local domain name not set

Group oinstall not found in NIS

oinstall : Group doesn't exist.

Please define environment variable CVUQDISK_GRP with the correct group to be used

error: %pre(cvuqdisk-1.0.9-1.x86_64) scriptlet failed, exit status 1

error: install: %pre scriptlet failed (2), skipping cvuqdisk-1.0.9-1

[root@rac1 software]#
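
The error message itself names the way out: the install group must exist, and CVUQDISK_GRP can point the package at it. A small guard can be sketched as follows; group_in_file is a hypothetical helper that only looks at a group file, so it will not see groups served from NIS or LDAP.

```shell
#!/bin/bash
# Succeed if the named group has an entry in a group-format file
# (default /etc/group). Local files only; NIS/LDAP groups are not seen.
group_in_file() {
    local group="$1" file="${2:-/etc/group}"
    grep -q "^${group}:" "$file"
}

# Usage (as root, after the groupadd commands in section IV):
#   if group_in_file oinstall; then
#       CVUQDISK_GRP=oinstall rpm -ivh cvuqdisk-1.0.9-1.rpm
#   else
#       echo "create the oinstall group first"
#   fi
```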

--Add the hosts entries

[root@rac1 ~]# cp /etc/hosts /etc/hosts.bak

[root@rac1 ~]# echo "

> 192.168.248.38 rac2

> 172.25.25.2 racpriv2

> 192.168.248.39 racvip2

> 192.168.248.35 rac1

> 172.25.25.1 racpriv1

> 192.168.248.36 racvip1

> 192.168.248.37 racscanip

> " >>/etc/hosts

[root@rac1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.248.38 rac2

172.25.25.2 racpriv2

192.168.248.39 racvip2

192.168.248.35 rac1

172.25.25.1 racpriv1

192.168.248.36 racvip1

192.168.248.37 racscanip

--Test hosts resolution and network connectivity with ping

[root@rac1 ~]# ping rac1

PING rac1 (192.168.248.35) 56(84) bytes of data.

64 bytes from rac1 (192.168.248.35): icmp_seq=1 ttl=64 time=0.019 ms

64 bytes from rac1 (192.168.248.35): icmp_seq=2 ttl=64 time=0.012 ms

^C

--- rac1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1914ms

rtt min/avg/max/mdev = 0.012/0.015/0.019/0.005 ms

[root@rac1 ~]# ping rac2

PING rac2 (192.168.248.38) 56(84) bytes of data.

64 bytes from rac2 (192.168.248.38): icmp_seq=1 ttl=64 time=0.132 ms

64 bytes from rac2 (192.168.248.38): icmp_seq=2 ttl=64 time=0.131 ms

^C

--- rac2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1297ms

rtt min/avg/max/mdev = 0.131/0.131/0.132/0.011 ms

[root@rac1 ~]# ping racpriv1

PING racpriv1 (172.25.25.1) 56(84) bytes of data.

64 bytes from racpriv1 (172.25.25.1): icmp_seq=1 ttl=64 time=0.020 ms

64 bytes from racpriv1 (172.25.25.1): icmp_seq=2 ttl=64 time=0.011 ms

^C

--- racpriv1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1571ms

rtt min/avg/max/mdev = 0.011/0.015/0.020/0.006 ms

[root@rac1 ~]# ping racpriv2

PING racpriv2 (172.25.25.2) 56(84) bytes of data.

64 bytes from racpriv2 (172.25.25.2): icmp_seq=1 ttl=64 time=0.137 ms

64 bytes from racpriv2 (172.25.25.2): icmp_seq=2 ttl=64 time=0.129 ms

^C

--- racpriv2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1763ms

rtt min/avg/max/mdev = 0.129/0.133/0.137/0.004 ms

[root@rac1 ~]#

--Adjust the host kernel parameters

[root@rac1 ~]# cp /etc/sysctl.conf /etc/sysctl.conf.bak

[root@rac1 ~]# echo "

> net.ipv4.ip_forward = 0

> net.ipv4.conf.default.rp_filter = 1

> net.ipv4.conf.default.accept_source_route = 0

> kernel.sysrq = 1

> kernel.core_uses_pid = 1

> net.ipv4.tcp_syncookies = 1

> kernel.msgmnb = 65536

> kernel.msgmax = 8192

> kernel.shmmax = 4398046511104

> kernel.shmall = 1073741824

> fs.file-max = 6815744

> kernel.msgmni = 2878

> kernel.sem = 250 32000 100 142

> kernel.shmmni = 4096

> net.core.rmem_default = 262144

> net.core.rmem_max = 4194304

> net.core.wmem_default = 262144

> net.core.wmem_max =1048576

> fs.aio-max-nr = 3145728

> net.ipv4.ip_local_port_range = 9000 65000

> " >> /etc/sysctl.conf

[root@rac1 ~]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 1

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 8192

kernel.shmmax = 4398046511104

kernel.shmall = 1073741824

fs.file-max = 6815744

kernel.msgmni = 2878

kernel.sem = 250 32000 100 142

kernel.shmmni = 4096

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 3145728

net.ipv4.ip_local_port_range = 9000 65000

[root@rac1 ~]# cat /etc/sysctl.conf

# Kernel sysctl configuration file for Red Hat Linux

#

# For binary values, 0 is disabled, 1 is enabled. See sysctl(8) and

# sysctl.conf(5) for more details.

#

# Use '/sbin/sysctl -a' to list all possible parameters.

# Controls IP packet forwarding

net.ipv4.ip_forward = 0

# Controls source route verification

net.ipv4.conf.default.rp_filter = 1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route = 0

# Controls the System Request debugging functionality of the kernel

kernel.sysrq = 0

# Controls whether core dumps will append the PID to the core filename.

# Useful for debugging multi-threaded applications.

kernel.core_uses_pid = 1

# Controls the use of TCP syncookies

net.ipv4.tcp_syncookies = 1

# Controls the default maxmimum size of a mesage queue

kernel.msgmnb = 65536

# Controls the maximum size of a message, in bytes

kernel.msgmax = 65536

# Controls the maximum shared segment size, in bytes

kernel.shmmax = 68719476736

# Controls the maximum number of shared memory segments, in pages

kernel.shmall = 4294967296

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 1

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 8192

kernel.shmmax = 4398046511104

kernel.shmall = 1073741824

fs.file-max = 6815744

kernel.msgmni = 2878

kernel.sem = 250 32000 100 142

kernel.shmmni = 4096

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max =1048576

fs.aio-max-nr = 3145728

net.ipv4.ip_local_port_range = 9000 65000

[root@rac1 ~]#
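
Because the new settings were appended to the stock file, several keys (kernel.shmmax, for example) now appear twice. sysctl applies the file from top to bottom, so the last assignment is the one that counts. A small sketch that prints the effective value of every key and marks the ones defined more than once; the function name effective_sysctl is made up for this example:

```shell
#!/bin/bash
# Print "key = value" for the last assignment of each key in a
# sysctl.conf-style file; repeated keys get a "(defined N times)" suffix.
effective_sysctl() {
    awk -F= '
        /^[ \t]*[#;]/ { next }                      # skip comment lines
        !/=/          { next }                      # skip blank/other lines
        {
            key = $1; val = $0
            sub(/^[^=]*=/, "", val)                 # everything after the first "="
            gsub(/^[ \t]+|[ \t]+$/, "", key)        # trim surrounding whitespace
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (!(key in count)) order[++n] = key   # remember first-seen order
            count[key]++
            value[key] = val
        }
        END {
            for (i = 1; i <= n; i++) {
                k = order[i]
                printf "%s = %s", k, value[k]
                if (count[k] > 1) printf "  (defined %d times)", count[k]
                printf "\n"
            }
        }' "$1"
}

# Example: effective_sysctl /etc/sysctl.conf
```

Cleaning out the superseded lines by hand afterwards keeps the file easier to read, but it is not required for the settings to work.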

--Adjust the resource limits that the host applies to the oracle and grid users

[root@rac1 ~]# cp /etc/security/limits.conf /etc/security/limits.conf.bak

[root@rac1 ~]# ls -l /etc/security/limits.conf

-rw-r--r--. 1 root root 1835 Mar 29 2016 /etc/security/limits.conf

[root@rac1 ~]# ls -l /etc/security/limits.conf.bak

-rw-r--r--. 1 root root 1835 Sep 15 10:14 /etc/security/limits.conf.bak

[root@rac1 ~]#

[root@rac1 ~]# echo "

> oracle soft nofile 131072

> oracle hard nofile 131072

> oracle soft nproc 131072

> oracle hard nproc 131072

> oracle soft core unlimited

> oracle hard core unlimited

> oracle soft memlock 50000000

> oracle hard memlock 50000000

> grid soft nofile 131072

> grid hard nofile 131072

> grid soft nproc 131072

> grid hard nproc 131072

> grid soft core unlimited

> grid hard core unlimited

> grid soft memlock 50000000

> grid hard memlock 50000000

> " >> /etc/security/limits.conf

[root@rac1 ~]# cat /etc/security/limits.conf

# /etc/security/limits.conf

#

#Each line describes a limit for a user in the form:

#

#

#

#Where:

#

# - a user name

# - a group name, with @group syntax

# - the wildcard *, for default entry

# - the wildcard %, can be also used with %group syntax,

# for maxlogin limit

#

#

# - "soft" for enforcing the soft limits

# - "hard" for enforcing hard limits

#

#

# - core - limits the core file size (KB)

# - data - max data size (KB)

# - fsize - maximum filesize (KB)

# - memlock - max locked-in-memory address space (KB)

# - nofile - max number of open file descriptors

# - rss - max resident set size (KB)

# - stack - max stack size (KB)

# - cpu - max CPU time (MIN)

# - nproc - max number of processes

# - as - address space limit (KB)

# - maxlogins - max number of logins for this user

# - maxsyslogins - max number of logins on the system

# - priority - the priority to run user process with

# - locks - max number of file locks the user can hold

# - sigpending - max number of pending signals

# - msgqueue - max memory used by POSIX message queues (bytes)

# - nice - max nice priority allowed to raise to values: [-20, 19]

# - rtprio - max realtime priority

#

#

#

#* soft core 0

#* hard rss 10000

#@student hard nproc 20

#@faculty soft nproc 20

#@faculty hard nproc 50

#ftp hard nproc 0

#@student - maxlogins 4

# End of file

oracle soft nofile 131072

oracle hard nofile 131072

oracle soft nproc 131072

oracle hard nproc 131072

oracle soft core unlimited

oracle hard core unlimited

oracle soft memlock 50000000

oracle hard memlock 50000000

grid soft nofile 131072

grid hard nofile 131072

grid soft nproc 131072

grid hard nproc 131072

grid soft core unlimited

grid hard core unlimited

grid soft memlock 50000000

grid hard memlock 50000000

[root@rac1 ~]#
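
The appended entries only apply to sessions opened after the change, so it is worth checking them before the installer does. A sketch that looks up a single entry in a limits.conf-style file; the helper name limit_for is hypothetical, and it simply reports the last matching line:

```shell
#!/bin/bash
# Print the value configured for one user/type/item in a limits.conf-style
# file (the last matching line is reported); prints nothing if no entry exists.
limit_for() {
    # $1 = file, $2 = user, $3 = soft|hard, $4 = item (nofile, nproc, ...)
    awk -v u="$2" -v t="$3" -v i="$4" '
        $1 == u && $2 == t && $3 == i { v = $4 }
        END { if (v != "") print v }' "$1"
}

# Example against the live file:
#   limit_for /etc/security/limits.conf grid soft nofile
# The limits a fresh login session really gets can be checked with:
#   su - grid -c "ulimit -n; ulimit -u"
```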

--The critical part, which decides whether the installation succeeds: modify the multipath configuration (back it up first)

[root@rac1 ~]# multipath -l

mpathe (3600a098000b65e0a000001f859b94970) dm-6 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:6 sdh 8:112 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:6 sdo 8:224 active undef running

mpathd (3600a098000b65e0a000001f059b94907) dm-2 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:2 sdd 8:48 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:2 sdk 8:160 active undef running

mpathc (3600a098000b65e0a000001ee59b948f6) dm-1 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:1 sdc 8:32 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:1 sdj 8:144 active undef running

mpathb (3600a098000b65e0a000001ec59b948da) dm-0 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:0 sdb 8:16 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:0 sdi 8:128 active undef running

mpathh (3600a098000b65e0a000001f659b94956) dm-5 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:5 sdg 8:96 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:5 sdn 8:208 active undef running

mpathg (3600a098000b65e0a000001f459b94941) dm-4 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:4 sdf 8:80 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:4 sdm 8:192 active undef running

mpathf (3600a098000b65e0a000001f259b94926) dm-3 DELL,MD38xxf

size=600G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:3 sde 8:64 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:3 sdl 8:176 active undef running

[root@rac1 ~]#
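
The WWIDs in parentheses are what the blacklist_exceptions and multipaths sections of /etc/multipath.conf refer to, and copying them by hand is error prone. A sketch that pulls name, WWID and size out of `multipath -l` output; the function name list_wwids is invented here, and the parsing assumes the output layout shown above:

```shell
#!/bin/bash
# Read `multipath -l` output on stdin and print "name wwid size" per map.
list_wwids() {
    awk '
        # Header line of a map: "mpathb (3600a0...) dm-0 DELL,MD38xxf"
        $2 ~ /^\(.*\)$/ && $3 ~ /^dm-/ {
            name = $1
            wwid = substr($2, 2, length($2) - 2)   # strip the parentheses
            next
        }
        # Line that follows the header: "size=10G features=..."
        /^size=/ && name != "" {
            split($1, kv, "=")
            print name, wwid, kv[2]
            name = ""
        }'
}

# Example: multipath -l | list_wwids | sort -k3,3 -k1,1
```

Sorting by size makes it easy to tell the three 10G OCR/voting LUNs from the 600G archive LUN and the 2.0T data LUNs before assigning aliases.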

[root@rac1 ~]# cp /etc/multipath.conf /etc/multipath.conf.bak

[root@rac1 ~]# vi /etc/multipath.conf

[root@rac1 ~]# cat /etc/multipath.conf

# multipath.conf written by anaconda

#defaults {

# user_friendly_names yes

#}

blacklist {

devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

devnode "^hd[a-z]"

devnode "^dcssblk[0-9]*"

device {

vendor "DGC"

product "LUNZ"

}

device {

vendor "IBM"

product "S/390.*"

}

# don't count normal SATA devices as multipaths

device {

vendor "ATA"

}

# don't count 3ware devices as multipaths

device {

vendor "3ware"

}

device {

vendor "AMCC"

}

# nor highpoint devices

device {

vendor "HPT"

}

wwid "361866da0b4f9a100214c0e5803224c80"

wwid "*"

}

blacklist_exceptions {

wwid "3600a098000b65e0a000001ec59b948da"

wwid "3600a098000b65e0a000001ee59b948f6"

wwid "3600a098000b65e0a000001f059b94907"

wwid "3600a098000b65e0a000001f859b94970"

wwid "3600a098000b65e0a000001f259b94926"

wwid "3600a098000b65e0a000001f459b94941"

wwid "3600a098000b65e0a000001f659b94956"

}

multipaths {

multipath {

uid 600

gid 600

alias ocr1

wwid "3600a098000b65e0a000001ec59b948da"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias ocr2

wwid "3600a098000b65e0a000001ee59b948f6"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias ocr3

wwid "3600a098000b65e0a000001f059b94907"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias data1

wwid "3600a098000b65e0a000001f859b94970"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias arch

wwid "3600a098000b65e0a000001f259b94926"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias data2

wwid "3600a098000b65e0a000001f459b94941"

mode 0600

path_grouping_policy failover

}

multipath {

uid 600

gid 600

alias data3

wwid "3600a098000b65e0a000001f659b94956"

mode 0600

path_grouping_policy failover

}

}

[root@rac1 ~]#

[root@rac1 ~]# groupadd -g 600 asmadmin

[root@rac1 ~]# groupadd -g 601 oinstall

[root@rac1 ~]# groupadd -g 602 asmoper

[root@rac1 ~]# groupadd -g 603 dba

[root@rac1 ~]# groupadd -g 604 asmdba

[root@rac1 ~]# useradd -u 500 -g oinstall -G dba,asmadmin,asmdba oracle

[root@rac1 ~]# useradd -u 600 -g oinstall -G asmdba,asmadmin,asmoper grid

[root@rac1 ~]# service multipathd restart

ok

Stopping multipathd daemon: [ OK ]

Starting multipathd daemon: [ OK ]

[root@rac1 ~]# multipath -l

arch (3600a098000b65e0a000001f259b94926) dm-3 DELL,MD38xxf

size=600G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:3 sde 8:64 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:3 sdl 8:176 active undef running

ocr3 (3600a098000b65e0a000001f059b94907) dm-2 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:2 sdd 8:48 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:2 sdk 8:160 active undef running

ocr2 (3600a098000b65e0a000001ee59b948f6) dm-1 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:1 sdc 8:32 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:1 sdj 8:144 active undef running

ocr1 (3600a098000b65e0a000001ec59b948da) dm-0 DELL,MD38xxf

size=10G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:0 sdb 8:16 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:0 sdi 8:128 active undef running

data3 (3600a098000b65e0a000001f659b94956) dm-5 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:5 sdg 8:96 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:5 sdn 8:208 active undef running

data2 (3600a098000b65e0a000001f459b94941) dm-4 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:4 sdf 8:80 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:4 sdm 8:192 active undef running

data1 (3600a098000b65e0a000001f859b94970) dm-6 DELL,MD38xxf

size=2.0T features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=active

| `- 1:0:0:6 sdh 8:112 active undef running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 2:0:0:6 sdo 8:224 active undef running

[root@rac1 ~]#

--Edit the udev rules file to control the device permissions

[root@rac1 ~]# vi /etc/udev/rules.d/12-mulitpath-privs.rules

ENV{DM_NAME}=="arch*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

ENV{DM_NAME}=="ocr*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

ENV{DM_NAME}=="data*", OWNER:="grid", GROUP:="asmadmin", MODE:="660"

[root@rac1 ~]# start_udev

Starting udev: [ OK ]

[root@rac1 ~]#

[root@rac1 ~]# ls -l /dev/mapper/*

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/arch -> ../dm-3

crw-rw----. 1 root root 10, 236 Sep 15 10:29 /dev/mapper/control

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/data1 -> ../dm-6

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/data2 -> ../dm-4

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/data3 -> ../dm-5

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/ocr1 -> ../dm-0

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/ocr2 -> ../dm-1

lrwxrwxrwx. 1 root root 7 Sep 15 10:29 /dev/mapper/ocr3 -> ../dm-2

[root@rac1 ~]#
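
The listing above only shows the symlinks in /dev/mapper, which appear as root:root; the ownership set by the udev rule is on the dm-N device nodes they point to. A sketch for checking the link targets, assuming GNU stat (the helper name check_dev is illustrative):

```shell
#!/bin/bash
# Compare owner, group and mode of a device (following symlinks) with the
# expected values; returns 0 on a match and 1 otherwise.
check_dev() {
    # $1 = path, $2 = owner, $3 = group, $4 = octal mode (e.g. 660)
    local actual
    actual=$(stat -L -c '%U %G %a' "$1") || return 1
    if [ "$actual" = "$2 $3 $4" ]; then
        echo "OK   $1 ($actual)"
    else
        echo "FAIL $1 (got: $actual, want: $2 $3 $4)"
        return 1
    fi
}

# Example on the cluster nodes:
#   for d in /dev/mapper/ocr* /dev/mapper/data* /dev/mapper/arch; do
#       check_dev "$d" grid asmadmin 660
#   done
```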

--Storage layout and permission setup is now complete

--Reset the passwords for oracle and grid

[root@rac1 ~]# passwd oracle

Changing password for user oracle.

New password:

BAD PASSWORD: it is based on a dictionary word

Retype new password:

passwd: all authentication tokens updated successfully.

[root@rac1 ~]# passwd grid

Changing password for user grid.

New password:

BAD PASSWORD: it does not contain enough DIFFERENT characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@rac1 ~]#

--Install the cvuqdisk-1.0.9-1.rpm package

[root@rac1 software]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

[root@rac1 software]#

--All of the steps above must be repeated on node rac2

IV. Create the RAC installation environment

[root@rac1 u01]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 558G 13G 517G 3% /

tmpfs 63G 72K 63G 1% /dev/shm

/dev/sda1 575M 100M 433M 19% /boot

/dev/sda6 311G 67M 295G 1% /dump

/dev/sda5 105G 60M 100G 1% /u01

/home/software/oracle_linux_6.8.iso

3.8G 3.8G 0 100% /mnt

--Create the software installation directories

[root@rac1 u01]# mkdir -p /u01/oracle/app

[root@rac1 u01]# mkdir -p /u01/oracle/app/grid/home

[root@rac1 u01]# mkdir -p /u01/oracle/app/grid/base

[root@rac1 u01]# mkdir -p /u01/oracle/app/oracle/

[root@rac1 u01]# mkdir -p /u01/oracle/app/oracle/product/11.2.0.4/db

[root@rac1 u01]# chown -R grid:oinstall /u01/oracle/app/grid

[root@rac1 u01]# chown -R oracle:oinstall /u01/oracle/app/oracle

[root@rac1 u01]# chmod -R 775 /u01/oracle/app

--Install the tree package to view the directory structure

[root@rac1 u01]#

[root@rac1 Packages]# rpm -ivh tree-1.5.3-3.el6.x86_64.rpm

warning: tree-1.5.3-3.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:tree ########################################### [100%]

[root@rac1 Packages]# cd /

[root@rac1 /]# tree /u01

/u01

├── lost+found

└── oracle

    └── app

        ├── grid

        │   ├── base

        │   └── home

        └── oracle

            └── product

                └── 11.2.0.4

                    └── db

10 directories, 0 files

[root@rac1 /]#

--Edit the environment variables for oracle and grid

[root@rac1 /]# cat >>/home/grid/.bash_profile<<'EOF'

> export TEMP=/tmp

> export TMPDIR=/tmp

> export ORACLE_TERM=xterm

> export THREADS_FLAG=native

> export NLS_LANG='AMERICAN_AMERICA.ZHS16GBK'

> export NLS_DATE_FORMAT='yyyy-mm-dd hh24:mi:ss'

> export NLS_TIMESTAMP_TZ_FORMAT='yyyy-mm-dd hh24:mi:ss.ff'

> export ORACLE_SID=+ASM1

> export ORACLE_BASE=/u01/oracle/app/grid/base

> export ORACLE_HOME=/u01/oracle/app/grid/home

> export PATH=$ORACLE_HOME/bin:$PATH

> EOF

[root@rac1 /]# cat /home/grid/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

umask 022

export TEMP=/tmp

export TMPDIR=/tmp

export ORACLE_TERM=xterm

export THREADS_FLAG=native

export NLS_LANG='AMERICAN_AMERICA.ZHS16GBK'

export NLS_DATE_FORMAT='yyyy-mm-dd hh24:mi:ss'

export NLS_TIMESTAMP_TZ_FORMAT='yyyy-mm-dd hh24:mi:ss.ff'

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/oracle/app/grid/base

export ORACLE_HOME=/u01/oracle/app/grid/home

export PATH=$ORACLE_HOME/bin:$PATH

[root@rac1 /]# cat >>/home/oracle/.bash_profile<<'EOF'

> export TEMP=/tmp

> export TMPDIR=/tmp

> export ORACLE_TERM=xterm

> export THREADS_FLAG=native

> export NLS_LANG='AMERICAN_AMERICA.ZHS16GBK'

> export NLS_DATE_FORMAT='yyyy-mm-dd hh24:mi:ss'

> export NLS_TIMESTAMP_TZ_FORMAT='yyyy-mm-dd hh24:mi:ss.ff'

> export ORACLE_BASE=/u01/oracle/app/oracle

> export ORACLE_HOME=/u01/oracle/app/oracle/product/11.2.0.4/db

> export ORACLE_SID=orcl1

> export PATH=$PATH:$ORACLE_HOME/bin

> EOF

[root@rac1 /]#
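
When a profile is written through a here-document, the quoting of the delimiter matters. With an unquoted delimiter the shell running cat expands variables such as $ORACLE_HOME and $PATH before the text reaches the file, so root's values at that moment get baked into the profile. With a quoted delimiter the text is written literally and is resolved when grid or oracle logs in. A self-contained sketch of the difference, writing to a temporary file rather than a real profile:

```shell
#!/bin/bash
demo=$(mktemp)

# Unquoted delimiter: $HOME is expanded now, by the shell running cat.
cat >>"$demo" <<EOF
export EXPANDED_NOW=$HOME/bin
EOF

# Quoted delimiter: the text is written literally and expanded at login time.
cat >>"$demo" <<'EOF'
export EXPANDED_LATER=$HOME/bin
EOF

cat "$demo"
```

The first line of the output contains the caller's home directory, the second still contains the literal text $HOME.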

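--All of the steps above must be repeated on node rac2, using the second node's instance names for ORACLE_SID (+ASM2 and orcl2)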

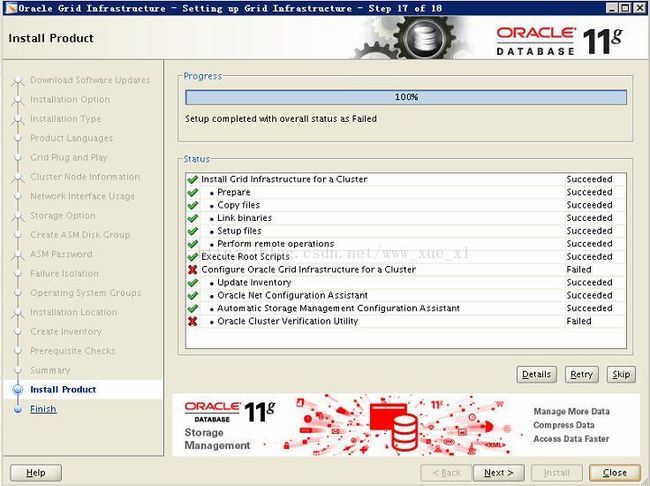

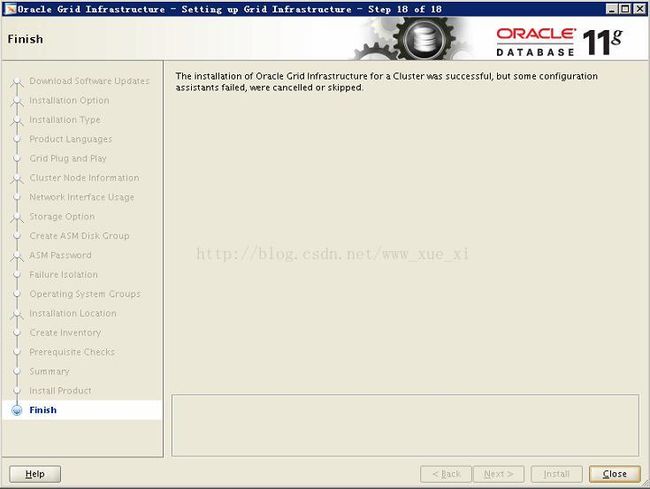

V. Install GI (Grid Infrastructure)

--This step only needs to be performed on node rac1

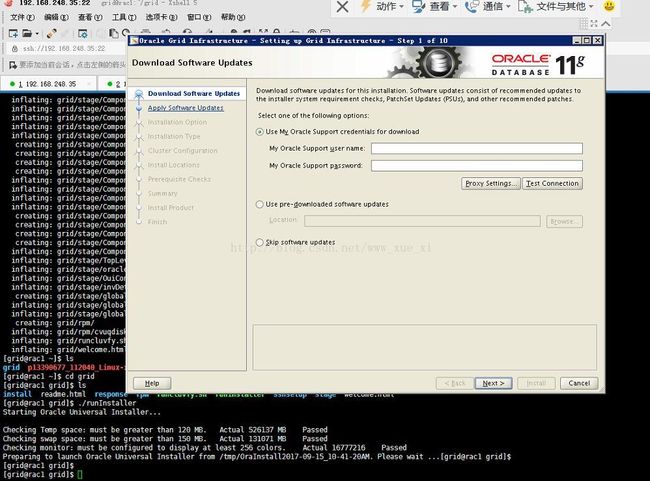

Log in to rac1 as grid, unzip p13390677_112040_Linux-x86-64_3of7.zip into the local /home/grid/grid directory, then change into /home/grid/grid and run ./runInstaller to start the graphical installer

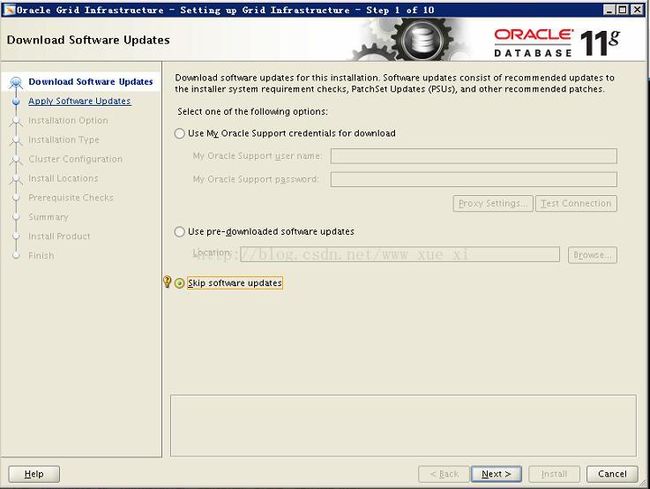

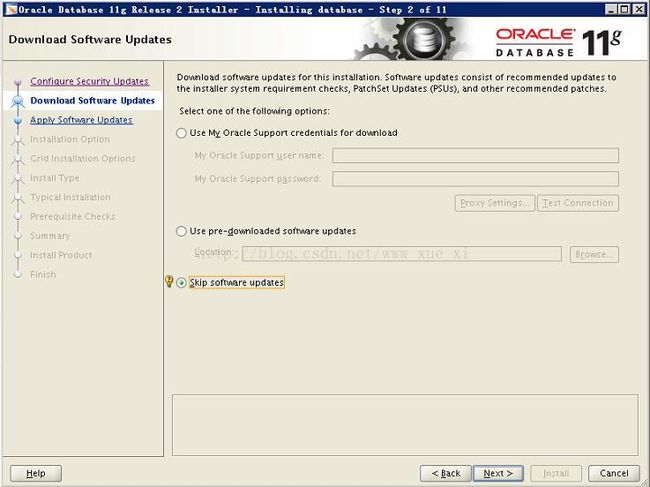

--Skip software updates and click Next

--Choose to install and configure Grid Infrastructure for a cluster

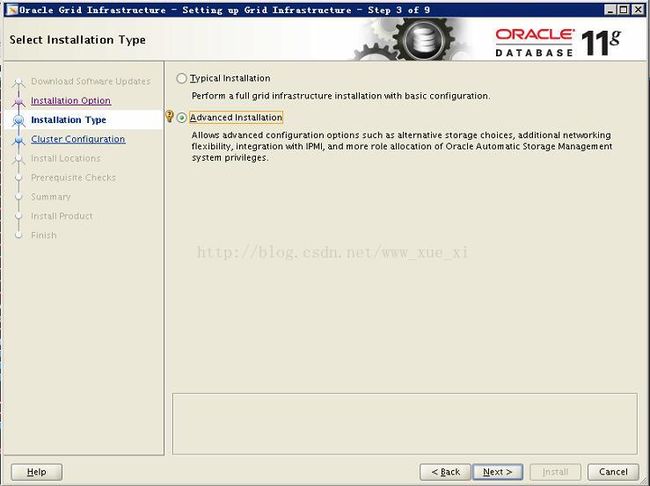

--Choose Advanced Installation

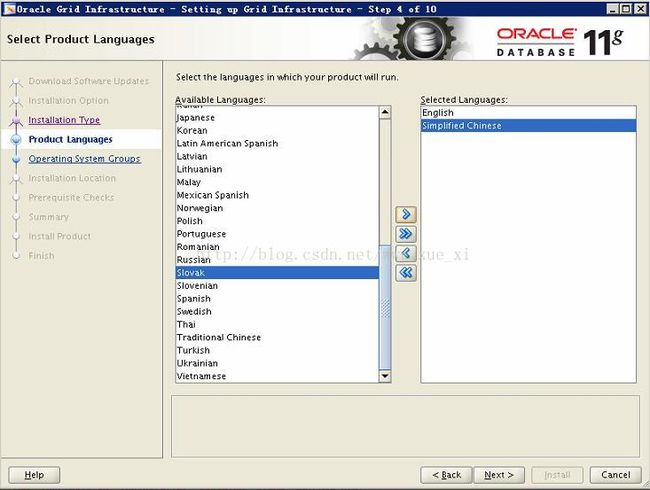

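--Add Simplified Chinese as a product language; note that this is not the database character set

--Enter the cluster name and the SCAN name; the SCAN name must match the alias that /etc/hosts resolves to the SCAN IP, which in my configuration is racscanip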

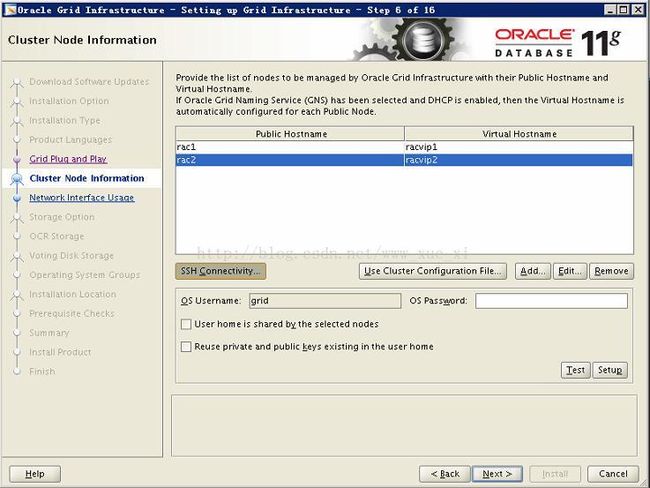

--Edit the VIPs and add node rac2; the virtual hostnames must likewise match the VIP aliases configured in /etc/hosts, which here are racvip1 and racvip2

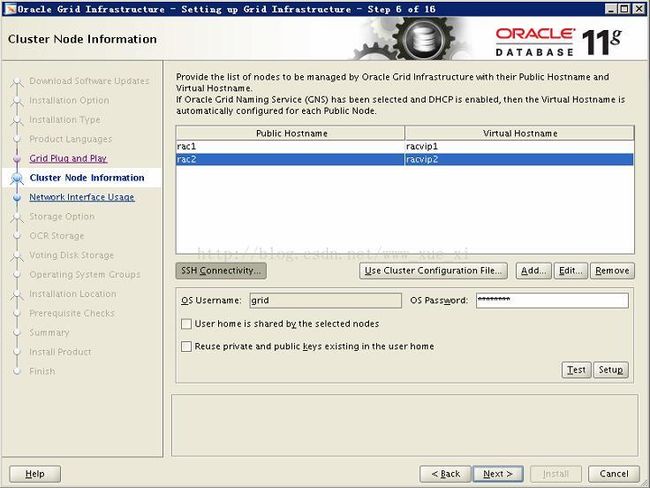

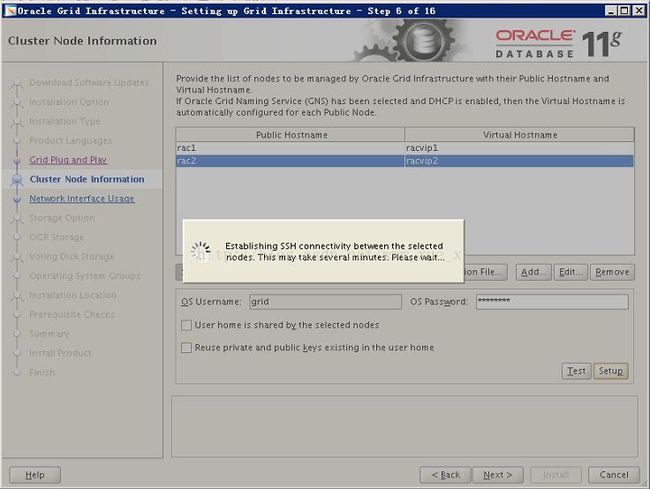
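
Since the SCAN and VIP names entered in these screens have to match /etc/hosts exactly, it may help to confirm beforehand that every name is present on both nodes. A sketch of such a check; the function name hosts_ip is hypothetical, and the names in the example are the ones used in this installation:

```shell
#!/bin/bash
# Print the IP address that a hosts-style file assigns to a name;
# returns 1 if the name is not listed.
hosts_ip() {
    # $1 = hosts file, $2 = host name
    awk -v name="$2" '
        /^[ \t]*#/ { next }                    # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break           # trailing comment: stop scanning
                if ($i == name) { print $1; found = 1; exit }
            }
        }
        END { exit(found ? 0 : 1) }' "$1"
}

# Example on each node:
#   for n in rac1 rac2 racvip1 racvip2 racpriv1 racpriv2 racscanip; do
#       hosts_ip /etc/hosts "$n" >/dev/null || echo "missing: $n"
#   done
```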

--Establish SSH equivalence between the two nodes: click SSH Connectivity, enter the grid password, click Setup and wait a moment

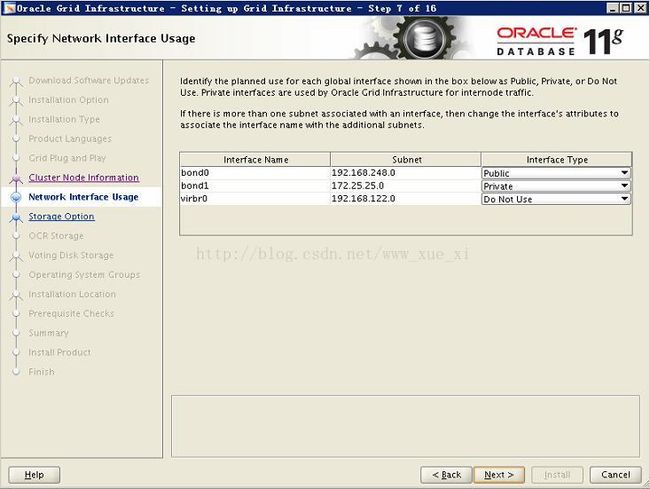

--Specify the public network and the private interconnect (heartbeat) network

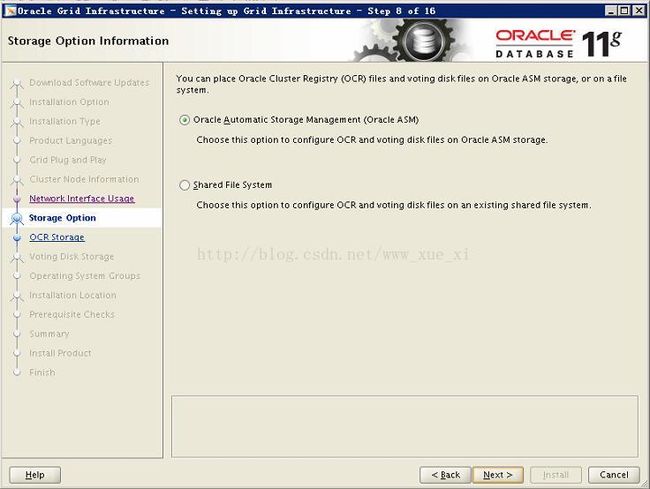

--Choose the storage type; here I use ASM

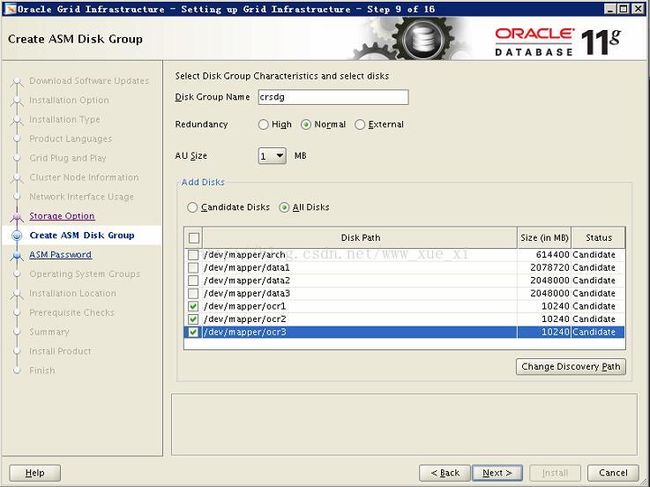

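--Specify the CRS disk group; the disk discovery path has to be changed here with the Change Discovery Path button (in this setup the disks are under /dev/mapper), otherwise the candidate disks may not be listed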

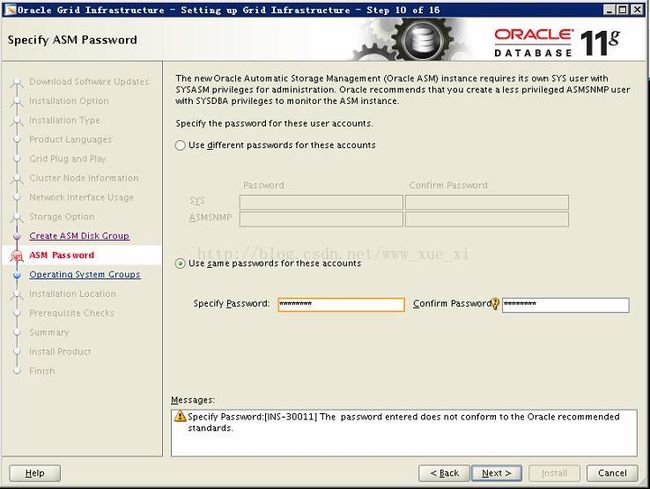

--Specify the passwords for the ASM administrators

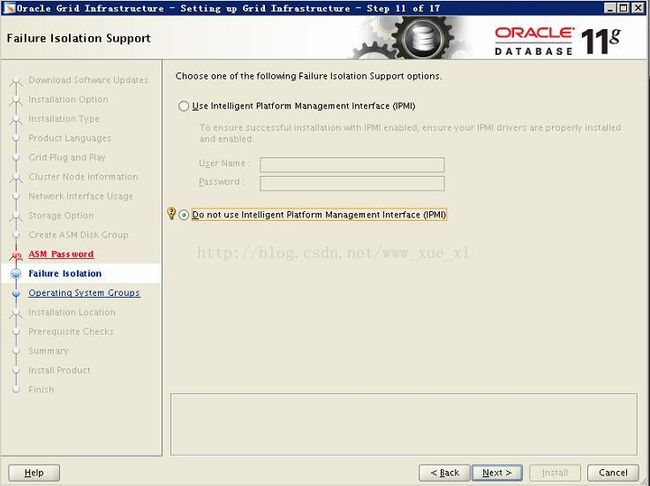

--Do not use IPMI

--Specify the OS groups for ASM administration; from top to bottom they are asmadmin, asmdba and asmoper

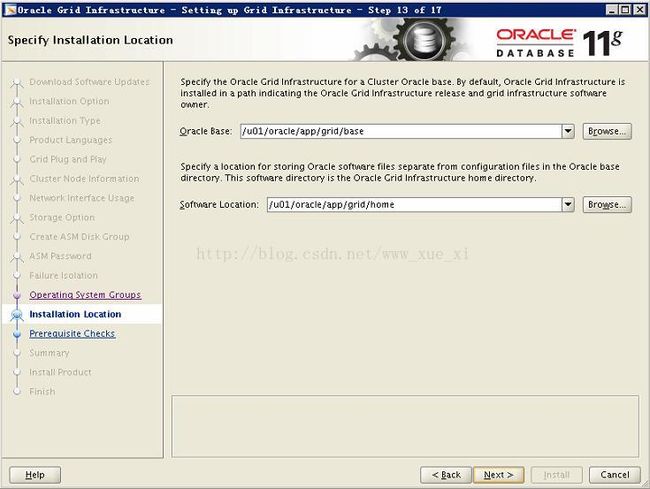

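--Installation path for the GI software

--oraInventory directory

--Prerequisite checks: only warnings related to NTP and reverse name resolution remain, and they can be ignored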

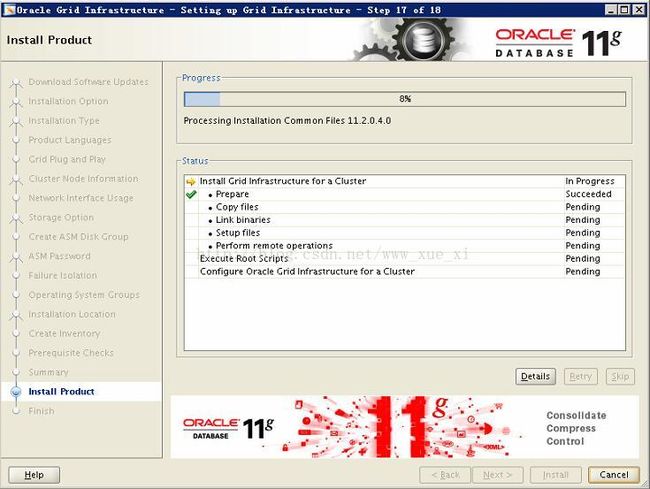

--Installation summary; click Install to start

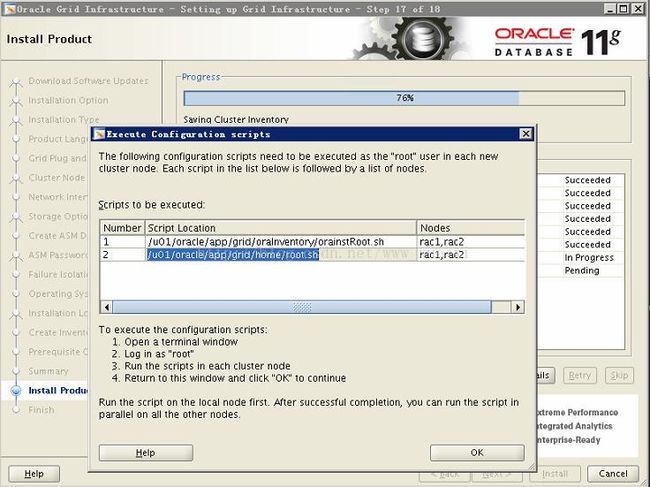

--Run the scripts as root on the two nodes in turn

--Run orainstRoot.sh on rac1

[root@rac1 /]# /u01/oracle/app/grid/oraInventory/orainstRoot.sh

Changing permissions of /u01/oracle/app/grid/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/oracle/app/grid/oraInventory to oinstall.

The execution of the script is complete.

--Run orainstRoot.sh on rac2

[root@rac2 Packages]# /u01/oracle/app/grid/oraInventory/orainstRoot.sh

Changing permissions of /u01/oracle/app/grid/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/oracle/app/grid/oraInventory to oinstall.

The execution of the script is complete.

[root@rac2 Packages]#

--Run root.sh on rac1

[root@rac1 /]# /u01/oracle/app/grid/home/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/oracle/app/grid/home

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/oracle/app/grid/home/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to upstart

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

ASM created and started successfully.

Disk Group crsdg created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 4ea2ab3255484fa2bfc81aa433213b7d.

Successful addition of voting disk 04990217f0ec4f6cbfdeabc8d3480050.

Successful addition of voting disk 103cfb04c3244f6abf9df60eadd7caf3.

Successfully replaced voting disk group with +crsdg.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 4ea2ab3255484fa2bfc81aa433213b7d (/dev/mapper/ocr1) [CRSDG]

2. ONLINE 04990217f0ec4f6cbfdeabc8d3480050 (/dev/mapper/ocr2) [CRSDG]

3. ONLINE 103cfb04c3244f6abf9df60eadd7caf3 (/dev/mapper/ocr3) [CRSDG]

Located 3 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.CRSDG.dg' on 'rac1'

CRS-2676: Start of 'ora.CRSDG.dg' on 'rac1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac1 /]#

--Run root.sh on rac2

[root@rac2 Packages]# /u01/oracle/app/grid/home/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/oracle/app/grid/home

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/oracle/app/grid/home/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to upstart

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac2 Packages]#

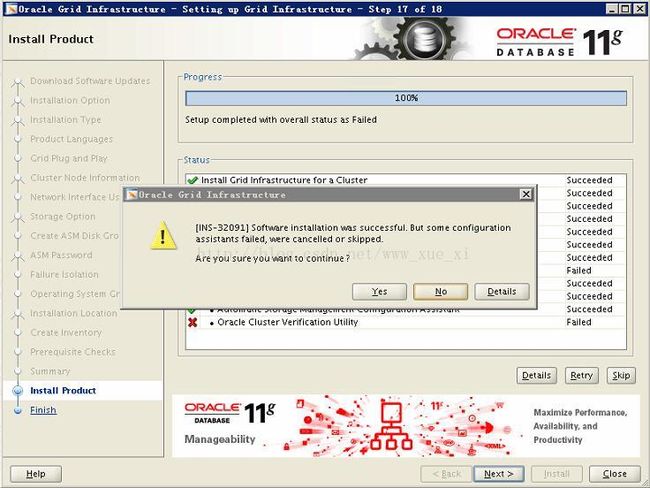

--Return to the installer and click OK

--Check the cluster status on node rac1

[root@rac1 /]# su - grid

[grid@rac1 ~]$ source .bash_profile

[grid@rac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.CRSDG.dg ora....up.type ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type ONLINE ONLINE rac1

ora.asm ora.asm.type ONLINE ONLINE rac1

ora.cvu ora.cvu.type ONLINE ONLINE rac1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type ONLINE ONLINE rac1

ora.ons ora.ons.type ONLINE ONLINE rac1

ora....SM1.asm application ONLINE ONLINE rac1

ora.rac1.gsd application OFFLINE OFFLINE

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora.rac2.gsd application OFFLINE OFFLINE

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type ONLINE ONLINE rac2

ora.scan1.vip ora....ip.type ONLINE ONLINE rac1

[grid@rac1 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@rac1 ~]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora.CRSDG.dg ora....up.type 0/5 0/ ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE rac1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE rac1

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE rac1

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE rac1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE rac1

ora.rac1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac1.ons application 0/3 0/0 ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE rac2

ora.rac2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac2.ons application 0/3 0/0 ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac2

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1

[grid@rac1 ~]$ cd $ORACLE_HOME/bin

[grid@rac1 bin]$ olsnodes -n

rac1 1

rac2 2

[grid@rac1 bin]$ ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2304

Available space (kbytes) : 259816

ID : 952406618

Device/File Name : +crsdg

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check bypassed due to non-privileged user

[grid@rac1 bin]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 4ea2ab3255484fa2bfc81aa433213b7d (/dev/mapper/ocr1) [CRSDG]

2. ONLINE 04990217f0ec4f6cbfdeabc8d3480050 (/dev/mapper/ocr2) [CRSDG]

3. ONLINE 103cfb04c3244f6abf9df60eadd7caf3 (/dev/mapper/ocr3) [CRSDG]

Located 3 voting disk(s).

[grid@rac1 bin]$ ps -ef | grep lsnr | grep -v 'grep' | grep -v 'ocfs' | awk '{print $9}'

LISTENER_SCAN1

[grid@rac1 bin]$
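
The checks above are worth repeating after every reboot, so they can be bundled into a small script. A sketch that reads `crsctl check crs` output and succeeds only if every reported service is online; the function name crs_all_online is an assumption, and the parsing relies on the message wording shown above:

```shell
#!/bin/bash
# Read `crsctl check crs` output on stdin; return 0 only if at least one
# CRS- line was seen and every one of them ends in "is online".
crs_all_online() {
    awk '
        /^CRS-[0-9]+:/ {
            seen++
            if ($0 !~ /is online[ \t]*$/) { bad++; print "not online: " $0 }
        }
        END { exit((seen > 0 && bad == 0) ? 0 : 1) }'
}

# Example (as the grid user):
#   crsctl check crs | crs_all_online && echo "clusterware stack is up"
```

Note that crs_stat is deprecated in 11.2; `crsctl status resource -t` gives the same information in the newer format.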

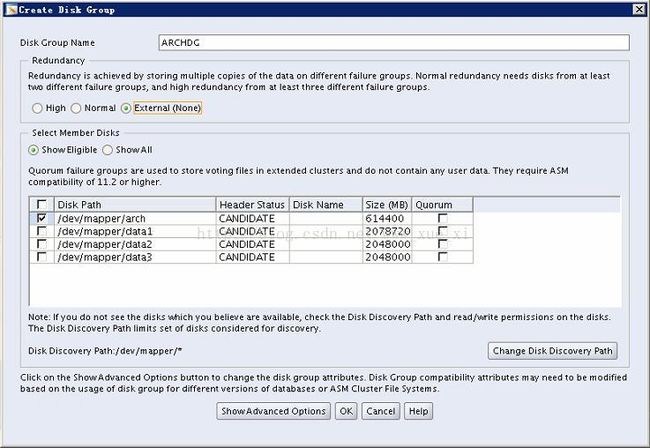

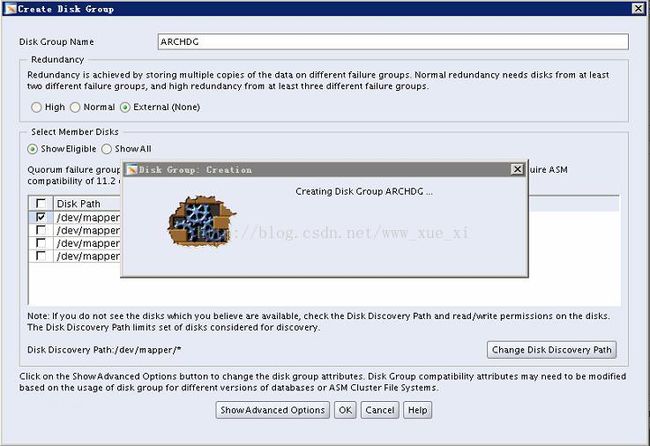

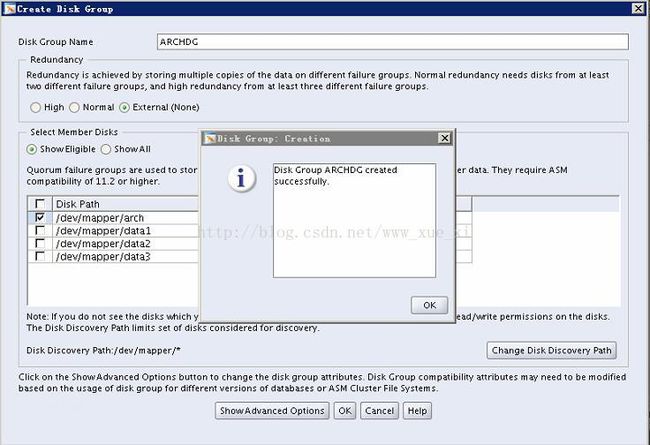

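--GI installation is complete; now create the ASM disk groups DATADG and ARCHDG for the database

Log in to node rac1 as grid, run asmca and click the Create button

--Create the datadg disk group in the same way

--Click Exit to quit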

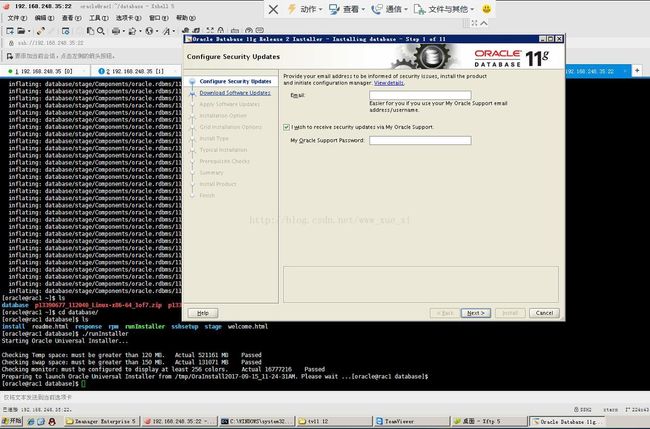

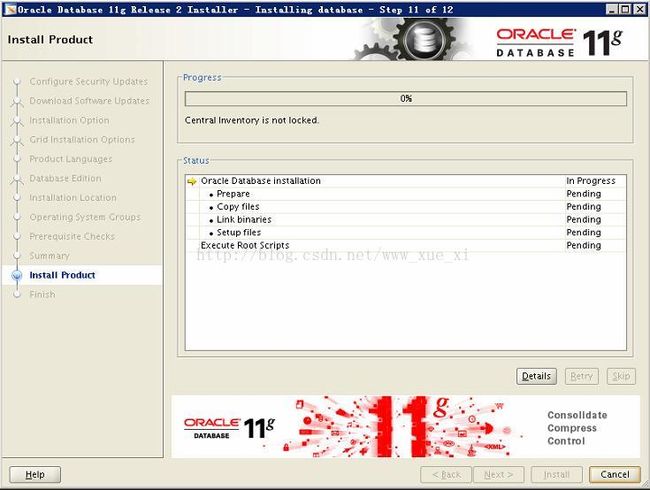
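
asmca is a front end for ASM SQL, so the same disk groups could presumably be created from the command line as well. The sketch below only generates the CREATE DISKGROUP statement so that it can be reviewed before it is run. The helper name, the EXTERNAL redundancy level and the assignment of data1 to data3 to DATADG are assumptions, because the redundancy and disk selection appear only in the screenshots:

```shell
#!/bin/bash
# Print a CREATE DISKGROUP statement for the given group name, redundancy
# (EXTERNAL, NORMAL or HIGH) and list of disk paths.
gen_create_diskgroup() {
    local name=$1 redundancy=$2
    shift 2
    local disks="" d
    for d in "$@"; do
        disks="${disks:+$disks, }'$d'"     # comma-separated, single-quoted paths
    done
    echo "CREATE DISKGROUP $name $redundancy REDUNDANCY DISK $disks;"
}

gen_create_diskgroup DATADG EXTERNAL /dev/mapper/data1 /dev/mapper/data2 /dev/mapper/data3
gen_create_diskgroup ARCHDG EXTERNAL /dev/mapper/arch

# To execute a statement instead of just printing it (as grid, with
# ORACLE_SID=+ASM1 set):
#   gen_create_diskgroup ARCHDG EXTERNAL /dev/mapper/arch | sqlplus -S / as sysasm
```

As far as I know, a disk group created through SQL is mounted only on the local ASM instance, so the other node would still need an ALTER DISKGROUP ... MOUNT; asmca takes care of that itself.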

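VI. Install the Oracle database software

Log in to node rac1 as oracle and unzip p13390677_112040_Linux-x86-64_1of7.zip and p13390677_112040_Linux-x86-64_2of7.zip into /home/oracle/db

Then run cd /home/oracle/db, followed by ./runInstaller, to start the database software installer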

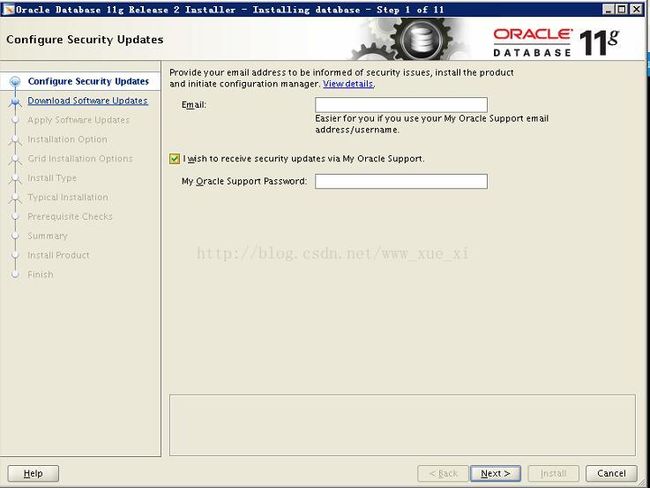

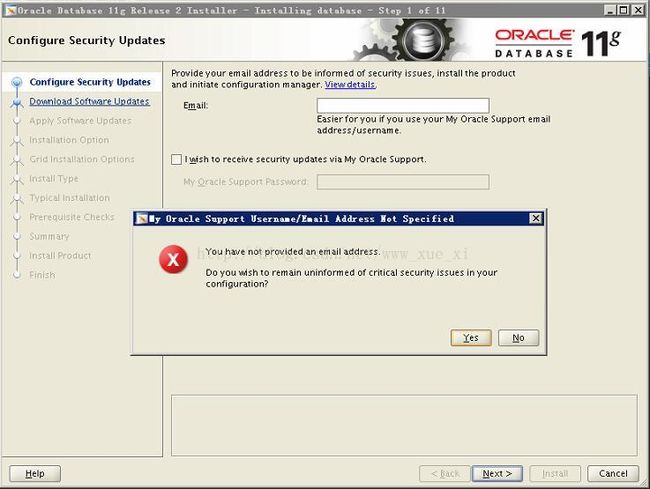

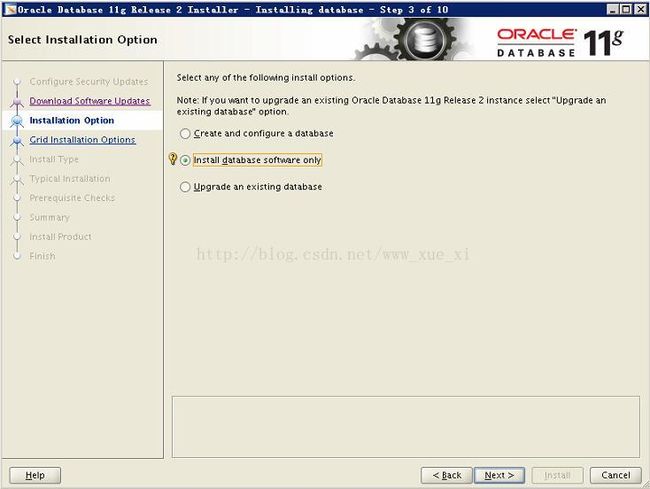

--Skip software updates

--Choose to install the database software only

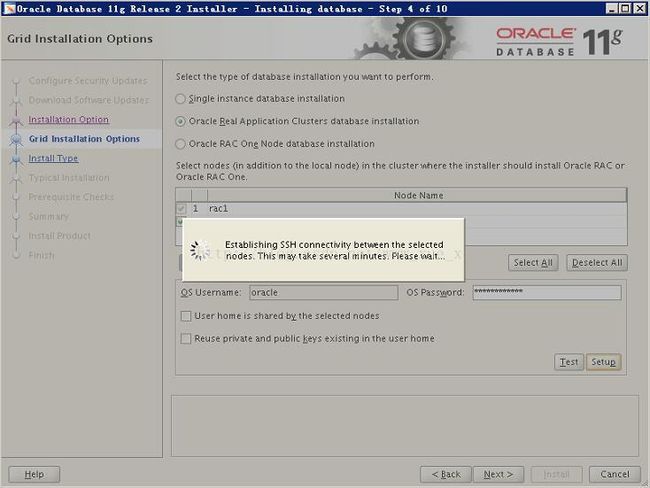

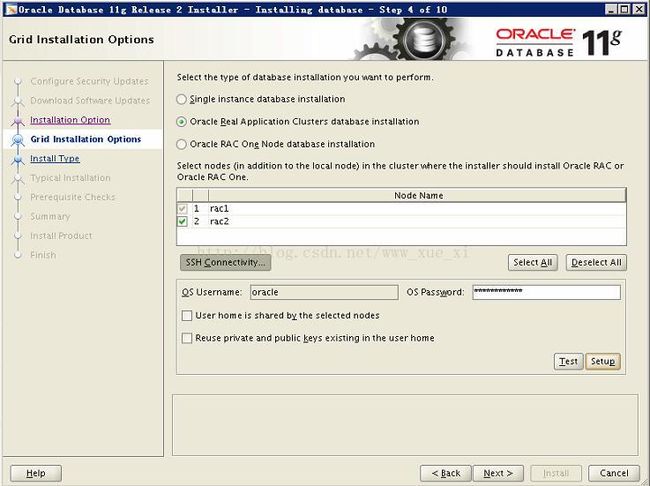

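--Select nodes rac1 and rac2

--Set up SSH equivalence for the oracle user between rac1 and rac2

--Add Simplified Chinese as a product language for the database software; note that this is not the database character set

--Choose Enterprise Edition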

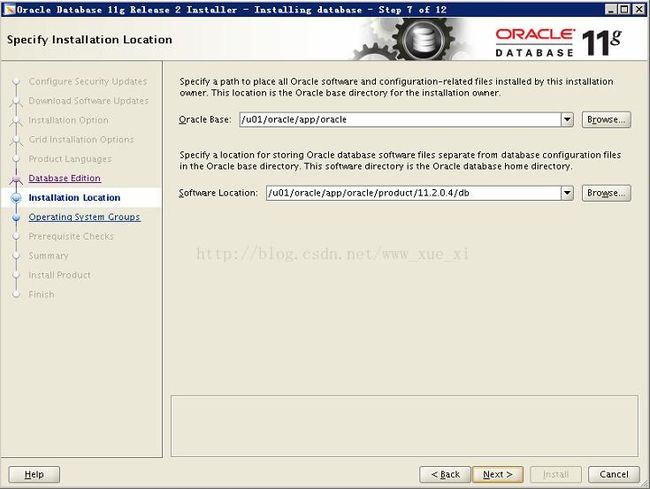

--Installation path for the database software

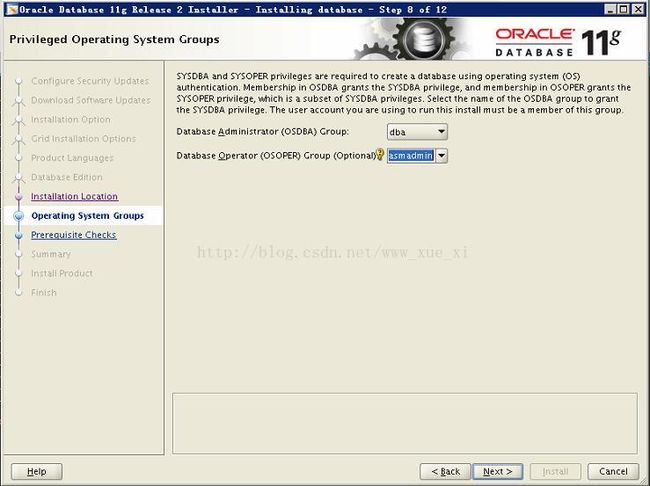

--Select the OS groups for the database administrators

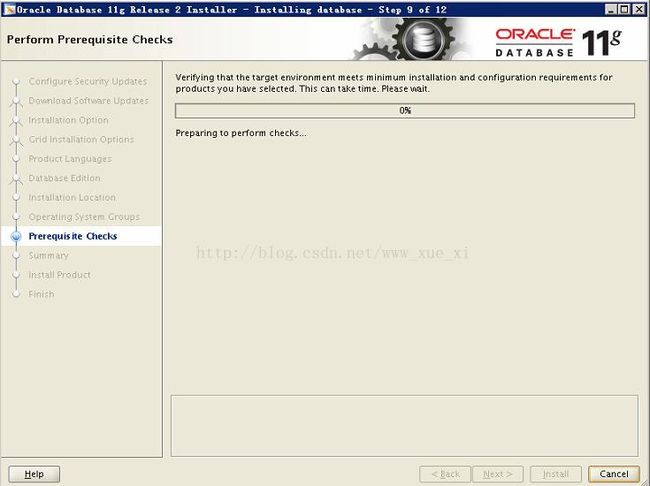

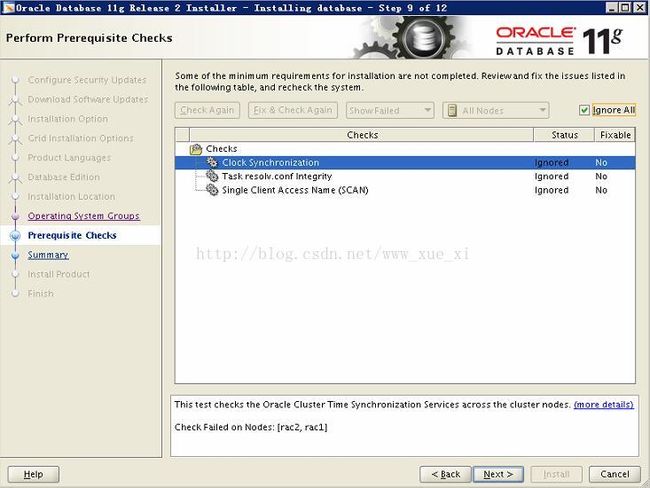

--Prerequisite checks

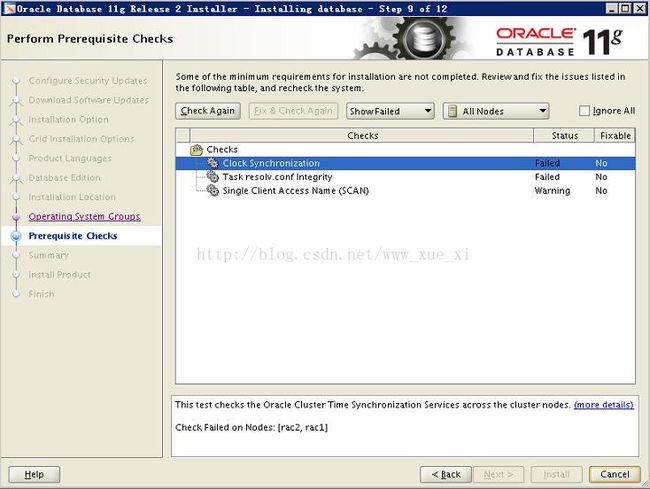

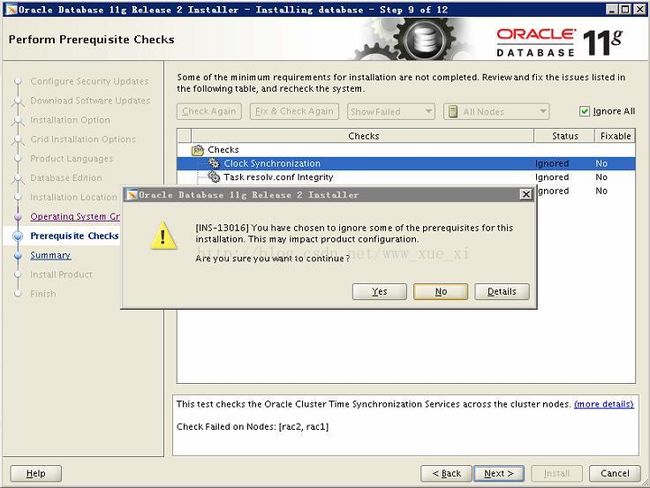

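--Check results: I use Oracle's own time synchronization (CTSS), so the NTP warning can be ignored; name resolution goes through /etc/hosts, so the failed resolv.conf check can be ignored; the SCAN warnings complain that the SCAN name does not resolve consistently, and can be ignored as well

Text of the SCAN warning: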

Single Client Access Name (SCAN) - This test verifies the Single Client Access Name configuration.

Error:

PRVG-1101 : SCAN name "racscanip" failed to resolve

- Cause: An attempt to resolve specified SCAN name to a list of IP addresses failed because SCAN could not be resolved in DNS or GNS using 'nslookup'.

- Action: Check whether the specified SCAN name is correct. If SCAN name should be resolved in DNS, check the configuration of SCAN name in DNS. If it should be resolved in GNS make sure that GNS resource is online.

PRVF-4657 : Name resolution setup check for "racscanip" (IP address: 192.168.248.37) failed

- Cause: Inconsistent IP address definitions found for the SCAN name identified using DNS and configured name resolution mechanism(s).

- Action: Look up the SCAN name with nslookup, and make sure the returned IP addresses are consistent with those defined in NIS and /etc/hosts as configured in /etc/nsswitch.conf by reconfiguring the latter. Check the Name Service Cache Daemon (/usr/sbin/nscd) by clearing its cache and restarting it.

Check Failed on Nodes: [rac2, rac1]

Verification result of failed node: rac2

Details:

PRVF-4664 : Found inconsistent name resolution entries for SCAN name "racscanip"

- Cause: The nslookup utility and the configured name resolution mechanism(s), as defined in /etc/nsswitch.conf, returned inconsistent IP address information for the SCAN name identified.

- Action: Check the Name Service Cache Daemon (/usr/sbin/nscd), the Domain Name Server (nslookup) and the /etc/hosts file to make sure the IP address for the SCAN names are registered correctly.

Verification result of failed node: rac1

Details:

PRVF-4664 : Found inconsistent name resolution entries for SCAN name "racscanip"

- Cause: The nslookup utility and the configured name resolution mechanism(s), as defined in /etc/nsswitch.conf, returned inconsistent IP address information for the SCAN name identified.

- Action: Check the Name Service Cache Daemon (/usr/sbin/nscd), the Domain Name Server (nslookup) and the /etc/hosts file to make sure the IP address for the SCAN names are registered correctly.

--Pre-installation summary

--Install the database software

--Run root.sh as root on rac1 and rac2 in turn

[root@rac1 /]# /u01/oracle/app/oracle/product/11.2.0.4/db/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/oracle/app/oracle/product/11.2.0.4/db

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

[root@rac1 /]#

[root@rac2 Packages]# /u01/oracle/app/oracle/product/11.2.0.4/db/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/oracle/app/oracle/product/11.2.0.4/db

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

[root@rac2 Packages]#

Then click the OK button in the installer dialog

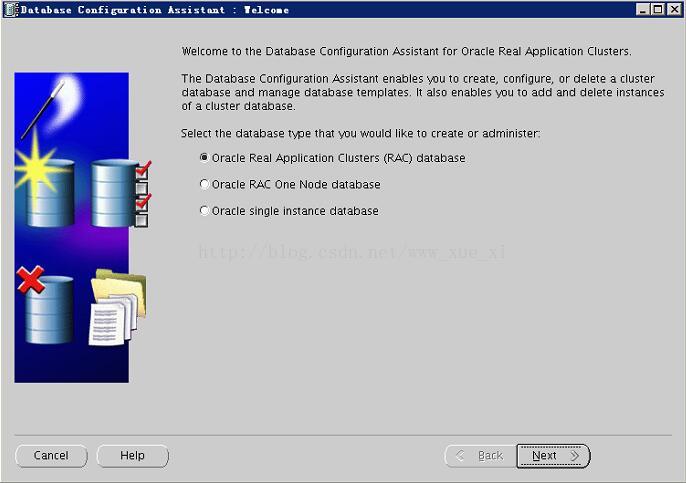

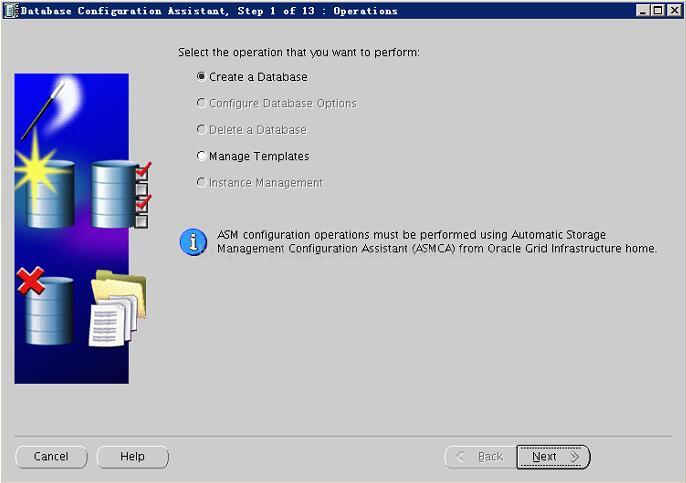

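VII. Create the database

On node rac1, log in as oracle and run dbca to start the database creation wizard

--Choose Create a Database

--Choose the database type

--Specify the global database name and the cluster nodes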

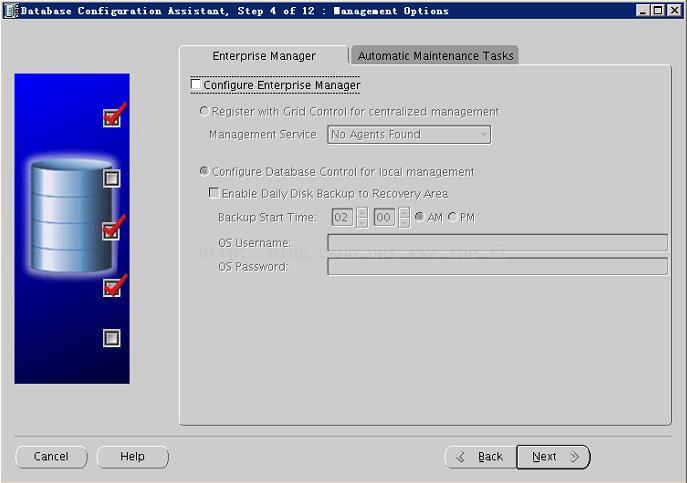

--Do not configure EM

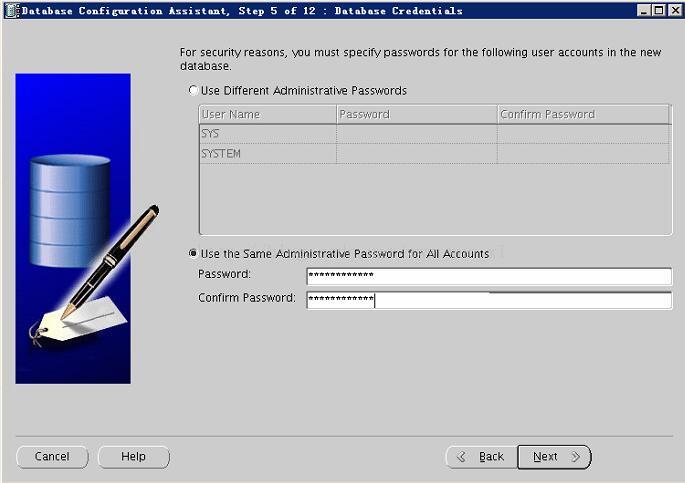

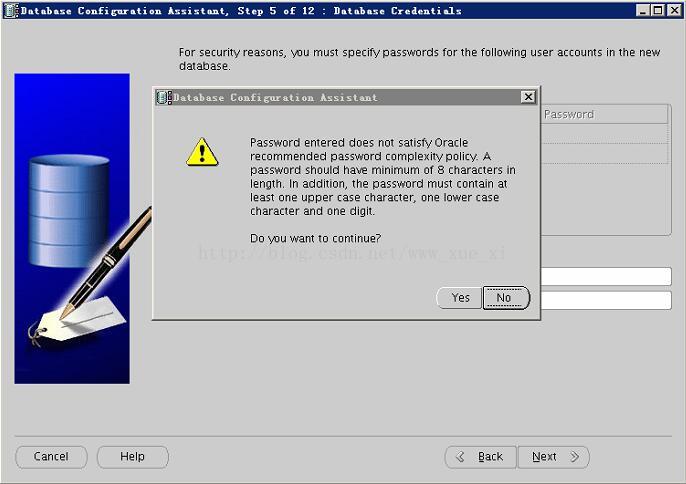

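--Specify the passwords for the database administrators

--A prompt warns that the password is too simple; ignore it

--Specify the storage location for the database files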

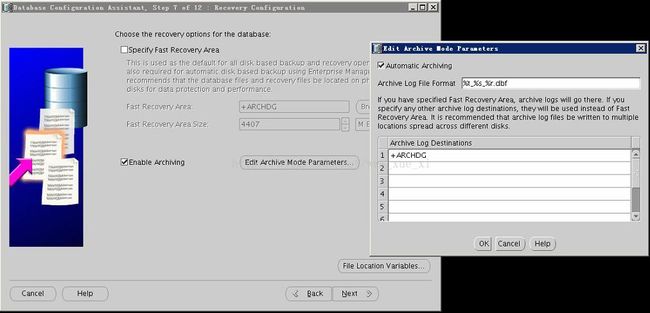

--Disable the flash recovery area; specify the archive log destination and the archive log format

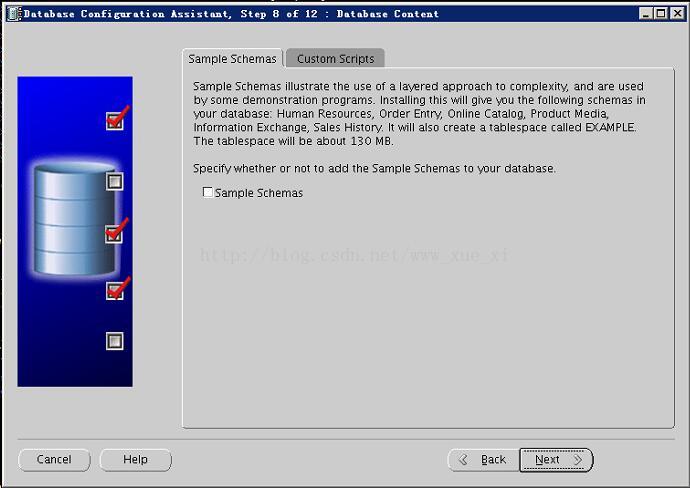

--Do not create the sample schemas

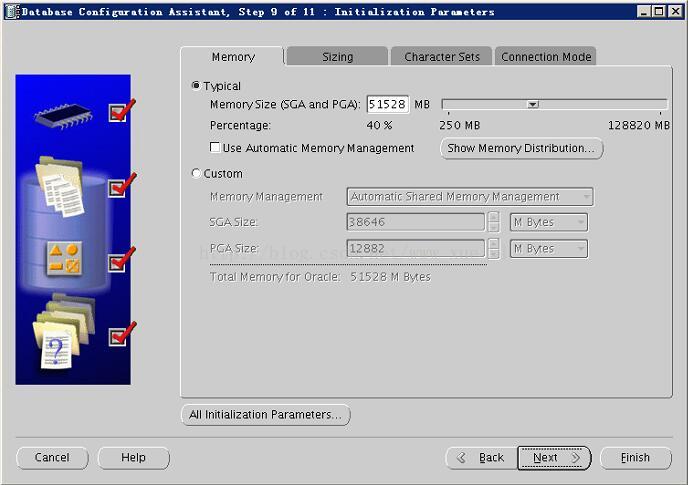

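--SGA settings for the database; the defaults are fine

--Raise the database processes parameter to 500

--Choose ZHS16GBK as the database character set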

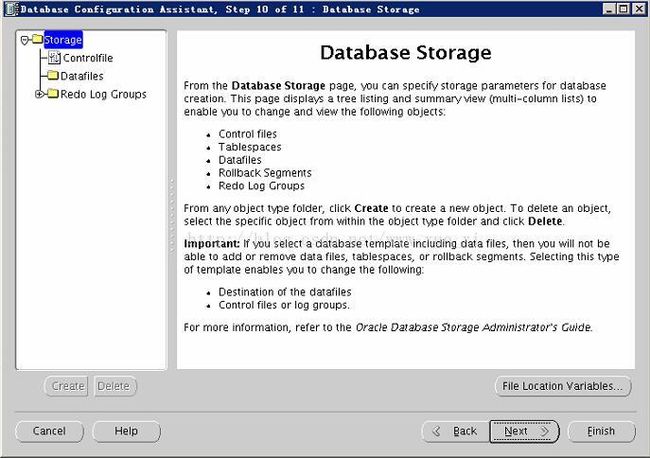

--Database storage overview

--Choose Create Database

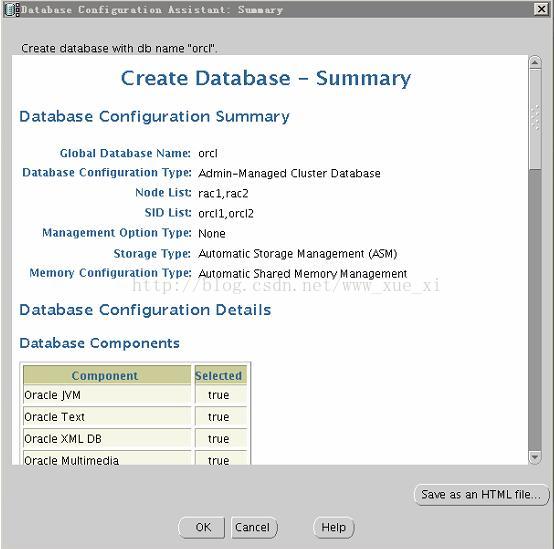

--Summary before database creation

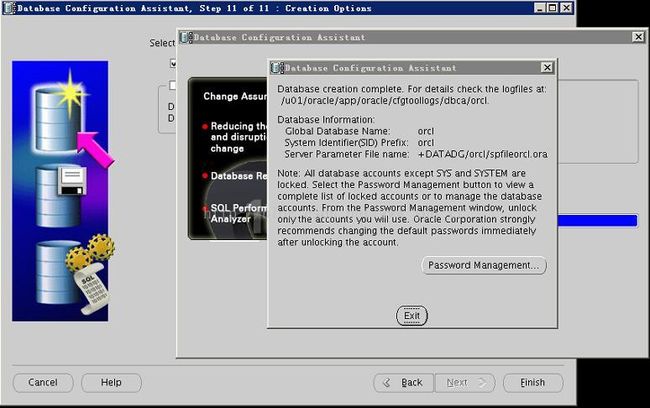

--Run the database creation

--Exit Password Management to finish creating the database

--Check the cluster status

[grid@rac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.ARCHDG.dg ora....up.type ONLINE ONLINE rac1

ora.CRSDG.dg ora....up.type ONLINE ONLINE rac1

ora.DATADG.dg ora....up.type ONLINE ONLINE rac1

ora....ER.lsnr ora....er.type ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type ONLINE ONLINE rac1

ora.asm ora.asm.type ONLINE ONLINE rac1

ora.cvu ora.cvu.type ONLINE ONLINE rac1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type ONLINE ONLINE rac1

ora.ons ora.ons.type ONLINE ONLINE rac1

ora.orcl.db ora....se.type ONLINE ONLINE rac1

ora....SM1.asm application ONLINE ONLINE rac1

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application OFFLINE OFFLINE

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application OFFLINE OFFLINE

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type ONLINE ONLINE rac2

ora.scan1.vip ora....ip.type ONLINE ONLINE rac1

[grid@rac1 ~]$ crsctl status resource -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCHDG.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.CRSDG.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.DATADG.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

[grid@rac1 ~]$ lsnrctl status

LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 15-SEP-2017 12:17:01

Copyright (c) 1991, 2013, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 11.2.0.4.0 - Production

Start Date 15-SEP-2017 11:17:35

Uptime 0 days 0 hr. 59 min. 26 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/oracle/app/grid/home/network/admin/listener.ora

Listener Log File /u01/oracle/app/grid/base/diag/tnslsnr/rac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.248.35)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.248.36)(PORT=1521)))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "orcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orclXDB" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

The command completed successfully

[grid@rac1 ~]$
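
Reading the crs_stat table by eye gets tedious on larger clusters. As a minimal sketch, the function below filters `crs_stat -t` output and prints only the resources whose Target or State is not ONLINE. The function name list_not_online is hypothetical, and skipping the GSD resources is an assumption based on GSD being disabled by default in 11.2 (it is only needed for Oracle9i databases), which matches the OFFLINE rows in the output above.

```shell
# Reads `crs_stat -t` output on stdin and prints every resource whose
# Target or State column is not ONLINE.
list_not_online() {
    awk '
        # Data rows have at least 4 fields; the dashed separator has only 1.
        NF >= 4 && $1 != "Name" {
            if ($1 ~ /gsd$/) next    # assumption: GSD being OFFLINE is expected in 11.2
            if ($3 != "ONLINE" || $4 != "ONLINE") print $1, $3, $4
        }'
}
```

Usage as the grid user: `crs_stat -t | list_not_online`. Empty output means every resource other than GSD is ONLINE.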

--Check the database information

[root@rac1 /]# su - oracle

[oracle@rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Fri Sep 15 12:17:45 2017

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

SQL> select userenv('language') from dual;

USERENV('LANGUAGE')

----------------------------------------------------

AMERICAN_AMERICA.ZHS16GBK

SQL> select name,dbid,open_mode from v$database;

NAME DBID OPEN_MODE

--------- ---------- --------------------

ORCL 1482543510 READ WRITE

SQL> select instance_name,status from v$instance;

INSTANCE_NAME STATUS

---------------- ------------

orcl1 OPEN

SQL> quit

Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

[oracle@rac1 ~]$
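
The SQL*Plus session above only confirms the local instance orcl1. One way to confirm both instances from a single node is `srvctl status database -d orcl`. The sketch below counts the running instances in that output. The function name expect_running_instances is hypothetical, and the line format it matches ("Instance orcl1 is running on node rac1") is what I would expect from srvctl in 11.2 rather than something shown in this walkthrough.

```shell
# Reads `srvctl status database -d <db>` output on stdin and succeeds only
# if at least $1 instances are reported as running.
# Assumption: lines look like "Instance orcl1 is running on node rac1".
expect_running_instances() {
    local expected="$1" running
    # grep -c exits non-zero when the count is 0, so mask that exit status.
    running=$(grep -c ' is running on node ' || true)
    echo "running instances: $running"
    [ "$running" -ge "$expected" ]
}
```

Usage as the oracle user: `srvctl status database -d orcl | expect_running_instances 2 && echo OK`.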

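--This completes the RAC database installation

VIII. Install the cluster patch set

On both rac1 and rac2, as the grid user and as the oracle user, unzip p6880880_112000_Linux-x86-64.zip into that user's $ORACLE_HOME directory, overwriting the existing OPatch directory.

--Check the OPatch version as the oracle user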

[oracle@rac1 OPatch]$ ./opatch lsinventory

Oracle Interim Patch Installer version 11.2.0.3.6

Copyright (c) 2013, Oracle Corporation. All rights reserved.

Oracle Home : /u01/oracle/app/oracle/product/11.2.0.4/db

Central Inventory : /u01/oracle/app/grid/oraInventory

from : /u01/oracle/app/oracle/product/11.2.0.4/db/oraInst.loc

OPatch version : 11.2.0.3.6

OUI version : 11.2.0.4.0

Log file location : /u01/oracle/app/oracle/product/11.2.0.4/db/cfgtoollogs/opatch/opatch2017-09-15_12-21-10PM_1.log

Lsinventory Output file location : /u01/oracle/app/oracle/product/11.2.0.4/db/cfgtoollogs/opatch/lsinv/lsinventory2017-09-15_12-21-10PM.txt

--------------------------------------------------------------------------------

Installed Top-level Products (1):

Oracle Database 11g 11.2.0.4.0

There are 1 product(s) installed in this Oracle Home.

There are no Interim patches installed in this Oracle Home.

Rac system comprising of multiple nodes

Local node = rac1

Remote node = rac2

--------------------------------------------------------------------------------

OPatch succeeded.

[oracle@rac1 OPatch]$
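
Patch READMEs usually state a minimum OPatch version, so it helps to compare the reported version against it before patching. The helpers below are a minimal sketch: the function names are hypothetical, the minimum version must come from the README of the patch actually being applied, and the comparison relies on GNU `sort -V`.

```shell
# Extracts the version from the "OPatch version : x.y.z" line of
# `opatch lsinventory` output read on stdin.
opatch_version_from() {
    awk -F': *' '/^OPatch version/ { gsub(/[ \t]+$/, "", $2); print $2; exit }'
}

# Succeeds if version $1 is greater than or equal to version $2.
# Uses GNU sort -V, so the lower of the two versions sorts first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

Usage from the OPatch directory, with the minimum taken from the patch README: `version_ge "$(./opatch lsinventory | opatch_version_from)" "<minimum from README>" || echo "OPatch is too old"`.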

--Check the OPatch version as the grid user

[grid@rac1 OPatch]$ ./opatch lsinventory

Oracle Interim Patch Installer version 11.2.0.3.6

Copyright (c) 2013, Oracle Corporation. All rights reserved.

Oracle Home : /u01/oracle/app/grid/home

Central Inventory : /u01/oracle/app/grid/oraInventory

from : /u01/oracle/app/grid/home/oraInst.loc

OPatch version : 11.2.0.3.6

OUI version : 11.2.0.4.0

Log file location : /u01/oracle/app/grid/home/cfgtoollogs/opatch/opatch2017-09-15_12-26-59PM_1.log

Lsinventory Output file location : /u01/oracle/app/grid/home/cfgtoollogs/opatch/lsinv/lsinventory2017-09-15_12-26-59PM.txt

--------------------------------------------------------------------------------

Installed Top-level Products (1):

Oracle Grid Infrastructure 11g 11.2.0.4.0

There are 1 product(s) installed in this Oracle Home.

There are no Interim patches installed in this Oracle Home.