北邮机器人队2020预备队培训(六) —— 传感器数据读取与处理

前言

这一节介绍如何读取两台机器人的传感器数据.

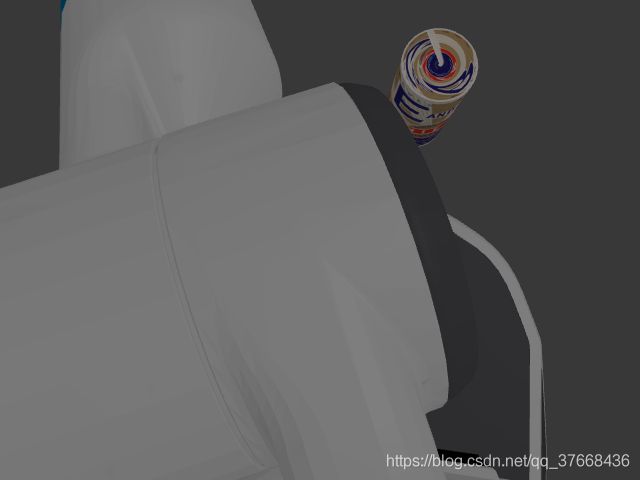

fetch机器人的摄像头数据

读取代码:

#!/usr/bin/env python

# BEGIN ALL

# coding=utf-8

import rospy, cv2, numpy

from sensor_msgs.msg import Image

import sys

import cv_bridge

class Catch:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber('head_camera/rgb/image_raw',

Image, self.image_callback)

def image_callback(self, msg):

# BEGIN BRIDGE

image = self.bridge.imgmsg_to_cv2(msg,desired_encoding='bgr8')

cv2.imshow("window", image)

cv2.waitKey(3)

rospy.init_node('Catch')

catchbin = Catch()

rospy.spin()

# END ALL

灰度处理

gray = cv2.cvtColor(image,cv2.COLOR_RGB2GRAY)

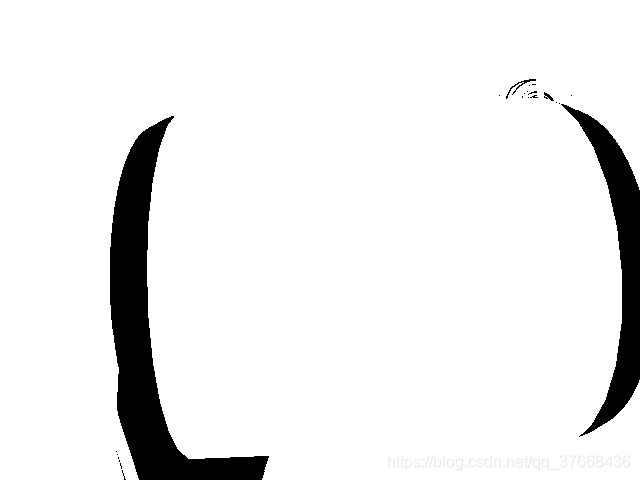

二值化处理

ret, thresh1 = cv2.threshold(gray, 50, 255, cv2.THRESH_BINARY)

边缘提取

img1 = cv2.GaussianBlur(image, (3, 3), 0)

canny = cv2.Canny(img1, 50, 150)

HSV图

img_hsv = cv2.cvtColor(img,cv2.COLOR_BGR2HSV)

HSV图二值化

lower_blue = numpy.array([ 100, 43, 46])

upper_blue = numpy.array([124, 255, 255])

mask = cv2.inRange(img_hsv, lower_blue, upper_blue)

完整代码与效果

#!/usr/bin/env python

# BEGIN ALL

# coding=utf-8

import rospy, cv2, numpy

from sensor_msgs.msg import Image

import sys

import cv_bridge

class Catch:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber('head_camera/rgb/image_raw',

Image, self.image_callback)

def image_callback(self, msg):

# BEGIN BRIDGE

image = self.bridge.imgmsg_to_cv2(msg,desired_encoding='bgr8')

gray = cv2.cvtColor(image,cv2.COLOR_RGB2GRAY)

ret, thresh1 = cv2.threshold(gray, 50, 255, cv2.THRESH_BINARY)

img1 = cv2.GaussianBlur(image, (3, 3), 0)

canny = cv2.Canny(img1, 50, 150)

img_hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

lower_blue = numpy.array([ 100, 43, 46])

upper_blue = numpy.array([124, 255, 255])

mask = cv2.inRange(img_hsv, lower_blue, upper_blue)

cv2.imshow("gray",gray)

cv2.imshow("2",thresh1)

cv2.imshow("window", image)

cv2.imshow("canny",canny)

cv2.imshow("hsv", img_hsv)

cv2.imshow("mask", mask)

cv2.waitKey(3)

rospy.init_node('Catch')

catchbin = Catch()

rospy.spin()

# END ALL

racecar的激光雷达数据读取

#!/usr/bin/env python

# coding = utf-8

import rospy

from sensor_msgs.msg import LaserScan

def scan_callback(msg):

range_ahead = msg.ranges[len(msg.ranges)/2]

print "range ahead: %0.3f" % range_ahead

rospy.init_node('range_ahead')

scan_sub = rospy.Subscriber('/scan', LaserScan, scan_callback)

rospy.spin()

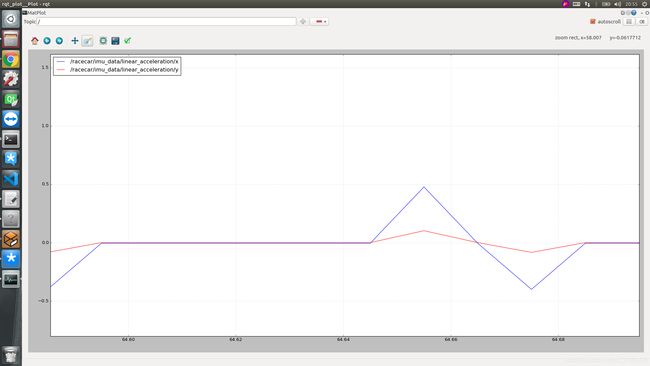

racecar陀螺仪数据读取

#!/usr/bin/env python

# license removed for brevity

import rospy

from sensor_msgs.msg import Imu

import math

def imu_cb(imu_data):

# Read the quaternion of the robot IMU

x = imu_data.orientation.x

y = imu_data.orientation.y

z = imu_data.orientation.z

w = imu_data.orientation.w

# Read the angular velocity of the robot IMU

w_x = imu_data.angular_velocity.x

w_y = imu_data.angular_velocity.y

w_z = imu_data.angular_velocity.z

# Read the linear acceleration of the robot IMU

a_x = imu_data.linear_acceleration.x

a_y = imu_data.linear_acceleration.y

a_z = imu_data.linear_acceleration.z

# Convert Quaternions to Euler-Angles

rpy_angle = [0, 0, 0]

rpy_angle[0] = math.atan2(2 * (w * x + y * z), 1 - 2 * (x**2 + y**2))

rpy_angle[1] = math.asin(2 * (w * y - z * x))

rpy_angle[2] = math.atan2(2 * (w * z + x * y), 1 - 2 * (y**2 + z**2))

print(rpy_angle)

return

if __name__ == '__main__':

rospy.init_node('imu_node', anonymous=True)

rospy.Subscriber("/racecar/imu_data", Imu, imu_cb)

rospy.spin()

使用rqt_plot工具绘制

rqt_plot /racecar/imu_data/linear_acceleration

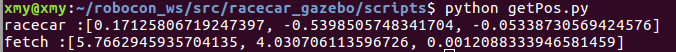

读取机器人位置

#!/usr/bin/env python

#coding=utf-8

import rospy

from gazebo_msgs.srv import *

import time

rospy.init_node('speed_control')

rospy.wait_for_service('/gazebo/get_model_state')

get_state_service = rospy.ServiceProxy('/gazebo/get_model_state', GetModelState)

model = GetModelStateRequest()

model.model_name = 'racecar'

objstate = get_state_service(model)

state = [objstate.pose.position.x, objstate.pose.position.y,objstate.pose.position.z]

print(state)

fetch机器人机械臂运动

#!/usr/bin/env python

import sys, rospy, tf, actionlib, moveit_commander

from control_msgs.msg import (GripperCommandAction, GripperCommandGoal)

from geometry_msgs.msg import *

from tf.transformations import quaternion_from_euler

from look_at_bin import look_at_bin

from std_srvs.srv import Empty

from moveit_msgs.msg import CollisionObject

from moveit_commander import PlanningSceneInterface

if __name__ == '__main__':

moveit_commander.roscpp_initialize(sys.argv)

rospy.init_node('pick_up_item')

rospy.wait_for_service("/clear_octomap")

clear_octomap = rospy.ServiceProxy("/clear_octomap",Empty)

gripper = actionlib.SimpleActionClient("gripper_controller/gripper_action",\

GripperCommandAction)

gripper.wait_for_server() # <1>

arm = moveit_commander.MoveGroupCommander("arm") # <2>

arm.allow_replanning(True)

tf_listener = tf.TransformListener() # <3>

rate = rospy.Rate(10)

gripper_goal = GripperCommandGoal() # <4>

gripper_goal.command.max_effort = 10.0

scene = PlanningSceneInterface("base_link")

p = Pose()

p.position.x = 0.4

p.position.y = -0.4

p.position.z = 0.7

p.orientation = Quaternion(*quaternion_from_euler(0, 1, 1))

arm.set_pose_target(p) # <5>

while True:

if arm.go(True):

break

clear_octomap()

look_at_bin()

while not rospy.is_shutdown():

rate.sleep()

print("back")

p.position.x = 0.4

p.position.y = -0.4

p.position.z = 0.7

p.orientation = Quaternion(*quaternion_from_euler(0, 1.2, 0))

arm.set_pose_target(p)

while True:

if arm.go(True):

break

clear_octomap()

rospy.sleep(1)

p.orientation = Quaternion(*quaternion_from_euler(0, -1.5, 0))

arm.set_pose_target(p)

while True:

if arm.go(True):

break

clear_octomap()

print("in")

p.position.x = 0.38

p.position.y = -0.25

p.position.z = 0.6

p.orientation = Quaternion(*quaternion_from_euler(0, -1.5, 0))

arm.set_pose_target(p)

while True:

if arm.go(True):

break

clear_octomap()

rospy.sleep(1)

break # <13>

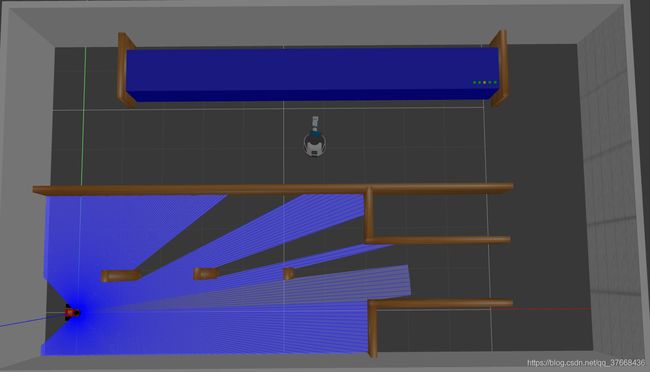

打开racecar激光可视化

修改这个文件:~/robocon_ws/src/racecar_description/urdf/sensors/lidar.xacro

如图改成true

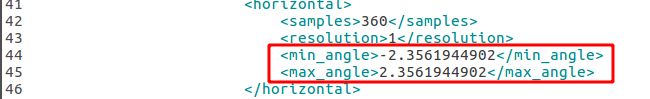

下图两个数可以修改激光的最大最小扫描角度,可以自行修改.

作业

基础版实现提示:

- 首先批处理用于马赛克的图片,使用opencv的resize函数将他们都归一化同样的马赛克大小

- 用这些批处理的马赛克图去遍历目标图,并替换目标图上相同大小的像素块

- 替换完成后使用addWeighted将原始图和替换后的图融合

进阶版实现提示:

- 修改那些提前准备的一堆图片的大小到马赛克般大小。

- 计算每一张图片的颜色均值。

- 修改目标图片的长宽,使之长宽都是马赛克大小的整数倍。

- 以马赛克大小为单位遍历目标图片同时计算这个区域的颜色均值 ,然后和提前准备的用于替换的马赛克图片的均值进行比较,用均值差最接近的替换目标图片。

- 用addWeighted函数对全替换好的图片和最开始准备的目标图片做一个融合,效果更加逼真。

可能用到的opencv函数:

imread:从磁盘读取图片

imwrite:向磁盘写入图片

imshow:显示图片

addWeighted:图片融合

waitKey:延时等待,用于显示图片

rect:创建矩形

copyTo:图片拷贝

cvtColor:图片格式转换函数,例如彩色图转灰度,转hsv等

meanStdDev:计算图片均值

resize:修改图片大小

相信这一个例子就能帮大家掌握opencv的使用了~

- 准备仿真比赛,体验今天的内容

作业提交

2020年8月6日23时59分,提交作业源码,截图效果,必要的文字说明等