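Installing Harbor offline with Helm on a Kubernetes cluster

Background

This article installs Harbor offline with Helm on a Kubernetes cluster that runs on a company intranet with no Internet access.

Procedure

I. Install Helm on the Kubernetes cluster (skip this section if Helm is already installed)

1. About Helm

Publishing a containerized application on a Kubernetes cluster means creating several kinds of resources, each described by its own YAML file, and the larger and more complex the application architecture, the harder these files are to manage. Helm was created to solve this problem. It is a project hosted by the CNCF and is used to define, install, and upgrade complex applications deployed on K8S.

Helm can be thought of as the package manager for Kubernetes: it makes it easy to find, share, and use applications built for Kubernetes. Put simply, Helm looks up the required Chart in a Repository and installs it into the K8S cluster as a Release.

2. Install Helm and Tiller

Helm 2, the version used in this article, consists of the following two components (Helm 3 removed Tiller):

- Helm Client: the command-line client, which manages Repository, Chart, and Release objects

- Tiller Server: handles the interaction between client commands and the K8S cluster, and generates and manages K8S resource objects according to the Chart definition

(1) Install the Helm client

Download the binary release (v2.11.0 in this article; the steps below need a Helm 2.x release because they rely on Tiller): https://github.com/helm/helm/releases

Extract it and copy the binaries to /usr/local/bin/: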

[root@k8s-master01 ~]# tar xf helm-v2.11.0-linux-amd64.tar.gz

[root@k8s-master01 ~]# cp linux-amd64/helm linux-amd64/tiller /usr/local/bin/

# If the machine has Internet access, you can also download and install it directly

# wget -qO- https://kubernetes-helm.storage.googleapis.com/helm-v2.9.1-linux-amd64.tar.gz | tar -zx

# mv linux-amd64/helm /usr/local/bin

(2) On the K8S master, create a ServiceAccount (sa) for Tiller and set up RBAC

$ kubectl -n kube-system create sa tiller

$ kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller

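(3) Install Tiller

Pull the tiller:v[helm-version] image on every K8S node, where helm-version is the Helm version installed above, for example:

docker pull dotbalo/tiller:v2.11.0

Because this is an offline intranet environment, download the image in advance, push it to the internal image registry, and then pull it from there on every node:

# First, pull the image on a machine with Internet access

docker pull dotbalo/tiller:v2.11.0

# Save it to a tar archive

docker save -o tiller-v2.11.0.tar dotbalo/tiller:v2.11.0

# Copy the tar archive to an intranet machine and load it

docker load -i tiller-v2.11.0.tar

docker images |grep tiller

docker tag dotbalo/tiller:v2.11.0 harbor.xxx.com.cn/baseimg/tiller:v2.11.0

docker push harbor.xxx.com.cn/baseimg/tiller:v2.11.0

# Pull the image on every K8S node

docker pull harbor.xxx.com.cn/baseimg/tiller:v2.11.0

Then install Tiller with helm init:

# Install with the ServiceAccount and the Tiller image specified explicitly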

[root@k8s-master01 ~]# helm init --service-account tiller --tiller-image harbor.xxx.com.cn/baseimg/tiller:v2.11.0

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

PS: If you did not specify the ServiceAccount at first, you can patch the Deployment after Tiller is installed:

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec": {"template":{"spec":{"serviceAccount":"tiller"}}}}'

(4) Verify

[root@k8s-master01 ~]# helm version

Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

[root@k8s-master01 ~]# kubectl get pod,svc -n kube-system | grep tiller

pod/tiller-deploy-5d7c8fcd59-d4djx 1/1 Running 0 3m

service/tiller-deploy ClusterIP 10.106.28.190 <none> 44134/TCP 5m
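Tiller should run the same version as the client. As a small sketch (the helper name helm_versions_match is made up for this illustration), the check can be scripted by comparing the SemVer fields in the helm version output shown above:

```shell
# Reads `helm version` output on stdin and succeeds only if every
# SemVer:"..." field carries the same value (client == Tiller).
helm_versions_match() {
  count="$(grep -o 'SemVer:"[^"]*"' | sort -u | wc -l | tr -d ' ')"
  [ "$count" = "1" ]
}

# Example (requires a working helm client and a reachable Tiller):
#   helm version | helm_versions_match && echo "client and Tiller versions match"
```

If the versions differ, reinstalling Tiller with an image tag that matches the client is usually the simplest fix.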

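3. Common commands

Only the most common commands and the ones used in this article are listed here; see the official documentation for more.

# List the Helm repositories

helm repo list

# Search for a Chart

helm search xxx

# Show the details of a Chart

helm inspect xxxx/xxxx

# Download a Chart package

helm fetch ChartName

# Check a Chart for problems

helm lint ${chart_name}

# Package a Chart

helm package ${chart_name} --debug

# List the deployed Releases

helm ls

# List all Releases, including deleted and failed ones

helm ls -a

# Install a Chart

helm install --debug ${chart_name}

# Uninstall a Release

helm delete xxxx # Helm 2: keeps the release record, so the name stays reserved; helm ls -a shows it with status DELETED

helm delete --purge xxxx # Helm 2: --purge removes the release completely and frees its name

helm uninstall xxxx # Helm 3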

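That is all for the introduction to Helm. Many articles on the topic are available online; the following two are clearly organized and recommended for reference:

(1) Installing Helm and using it in projects

(2) Deploying a release to Kubernetes with Helm

II. Download the harbor-helm chart and the Harbor images

Because the cluster is on an offline intranet, download the Chart package and the Harbor images in advance. Choose a version on the harbor-helm releases page: https://github.com/goharbor/harbor-helm/releases

Start on a machine with Internet access:

# Method 1

# Download the source archive of the chosen version directly from the releases page above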

wget https://github.com/goharbor/harbor-helm/archive/v1.1.4.tar.gz

tar zxvf v1.1.4.tar.gz

cd harbor-helm-1.1.4

# Method 2

helm repo add harbor https://helm.goharbor.io

helm fetch harbor/harbor --version 1.1.4

tar xf harbor-1.1.4.tgz

# Method 3

git clone https://github.com/goharbor/harbor-helm

cd harbor-helm

git checkout v1.1.4

Inside the Chart directory you can see the Chart file structure:

.

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── ingress.yaml

│ ├── NOTES.txt

│ └── service.yaml

└── values.yaml

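Where:

Chart.yaml describes the chart: its name, version, description, and so on.

values.yaml stores the variables used by the resources defined in the templates directory.

The templates directory defines the Kubernetes resources in Go template syntax; combined with the values from values.yaml, they are rendered into the actual resource manifests.

NOTES.txt: custom text that is printed to the screen after helm install has installed the Chart.

Optional files:

LICENSE: the license of the chart

README.md: a human-readable introduction to the chart

requirements.yaml: the chart's dependencies

The steps below mainly change the configuration in values.yaml.

The Harbor images must also be downloaded in advance. Here they are fetched in one go through the offline installer package: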

[root@localhost harbor]# wget https://github.com/goharbor/harbor/releases/download/v1.8.6/harbor-offline-installer-v1.8.6.tgz

[root@localhost harbor]# tar zxvf harbor-offline-installer-v1.8.6.tgz

[root@localhost harbor]# cd harbor-offline-installer-v1.8.6

[root@localhost harbor-offline-installer-v1.8.6]# ls

common docker-compose.yml harbor.v1.8.6.tar.gz harbor.yml install.sh LICENSE prepare

# harbor.v1.8.6.tar.gz contains the Harbor 1.8.6 images; load them with docker load

[root@localhost harbor-offline-installer-v1.8.6]# docker load -i harbor.v1.8.6.tar.gz
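The loaded images carry the public goharbor/... names, while the nodes of an offline cluster pull from the internal registry. A minimal sketch of retagging and pushing them follows; it assumes the internal registry harbor.xxx.com.cn used earlier and a project named goharbor, and the function names are illustrative only:

```shell
# Assumptions: REGISTRY and PROJECT name the internal registry and project;
# adjust both to your environment.
REGISTRY="${REGISTRY:-harbor.xxx.com.cn}"
PROJECT="${PROJECT:-goharbor}"

# goharbor/harbor-core:v1.8.6 -> harbor.xxx.com.cn/goharbor/harbor-core:v1.8.6
internal_ref() {
  printf '%s/%s/%s\n' "$REGISTRY" "$PROJECT" "${1##*/}"
}

# Retag and push every locally loaded goharbor/* image whose tag ends
# with the given version string.
push_harbor_images() {
  version="$1"
  docker images --format '{{.Repository}}:{{.Tag}}' \
    | grep '^goharbor/' \
    | grep -- "${version}\$" \
    | while read -r src; do
        dst="$(internal_ref "$src")"
        docker tag "$src" "$dst" && docker push "$dst"
      done
}

# Example (after docker load and docker login to the internal registry):
#   push_harbor_images v1.8.6
```

The image repositories in values.yaml then need to point at the same internal names.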

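III. Architecture design and installation

(1) Design

Before installing, design the Harbor architecture to fit your own k8s cluster. The main points to consider are:

- Images

Harbor uses as many as 10 container images (the registry component alone uses several), and the cluster schedules them onto multiple nodes. Every node therefore needs all of the required images, which generates a lot of download traffic and makes the first full start-up slow. It is best to download the images on one node and then distribute them to all nodes.

- Data persistence

Pods on K8S are short-lived, so decide how your data will be persisted. Harbor can use local storage, external storage, or network storage.

Our cluster already provides Ceph RBD, so the data of pods such as db and redis is persisted through internal PVCs, that is, mounted from Ceph RBD volumes. The images themselves are stored in Ceph S3 object storage.

- Service access

Use Ingress to expose the service.

- Whether to enable TLS

This is an internal company environment and we do not plan to enable https for now; plain http is enough.

- Domain name resolution and trust

Domain name resolution was already set up, so it needs no further attention; just use the newly requested domain names. Because the registry is served over http, however, /etc/docker/daemon.json must be configured on every node:

# harbor.xxx.com.cn is the Harbor address; note that this overwrites any existing daemon.json

cat <<EOF > /etc/docker/daemon.json

{

"insecure-registries": [

"harbor.xxx.com.cn:80",

"harbor.xxx.com.cn"

]

}

EOF

# Then restart docker

systemctl daemon-reload

systemctl restart docker

(2) Custom configuration

Now configure the values file. Below, changed values and sensitive information are marked with, or replaced by, the placeholder [高圆圆]. The main changes are listed here; everything else is left at its default, for example db and redis both use the internal (bundled) instances:

- Expose the service through Ingress

- Disable TLS

- Configure the Harbor and Notary domain names

- Use the "ceph-rbd" StorageClass for the data PVCs

- Store image data in Ceph S3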

expose:

# Set the way how to expose the service. Set the type as "ingress",

# "clusterIP", "nodePort" or "loadBalancer" and fill the information

# in the corresponding section

type: ingress

tls:

# Enable the tls or not. Note: if the type is "ingress" and the tls

# is disabled, the port must be included in the command when pull/push

# images. Refer to https://github.com/goharbor/harbor/issues/5291

# for the detail.

enabled: false #[高圆圆]

# Fill the name of secret if you want to use your own TLS certificate.

# The secret must contain keys named:

# "tls.crt" - the certificate

# "tls.key" - the private key

# "ca.crt" - the certificate of CA

# These files will be generated automatically if the "secretName" is not set

secretName: ""

# By default, the Notary service will use the same cert and key as

# described above. Fill the name of secret if you want to use a

# separated one. Only needed when the type is "ingress".

notarySecretName: ""

# The common name used to generate the certificate, it's necessary

# when the type isn't "ingress" and "secretName" is null

commonName: ""

ingress:

hosts:

core: harbor2.xxxx.com.cn #[高圆圆]

notary: notary2.xxxx.com.cn #[高圆圆]

# set to the type of ingress controller if it has specific requirements.

# leave as `default` for most ingress controllers.

# set to `gce` if using the GCE ingress controller

# set to `ncp` if using the NCP (NSX-T Container Plugin) ingress controller

controller: default

annotations:

ingress.kubernetes.io/ssl-redirect: "false"

ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/ssl-redirect: "false"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/whitelist-source-range: 0.0.0.0/0

# The external URL for Harbor core service. It is used to

# 1) populate the docker/helm commands showed on portal

# 2) populate the token service URL returned to docker/notary client

#

# Format: protocol://domain[:port]. Usually:

# 1) if "expose.type" is "ingress", the "domain" should be

# the value of "expose.ingress.hosts.core"

# 2) if "expose.type" is "clusterIP", the "domain" should be

# the value of "expose.clusterIP.name"

# 3) if "expose.type" is "nodePort", the "domain" should be

# the IP address of k8s node

#

# If Harbor is deployed behind the proxy, set it as the URL of proxy

externalURL: http://harbor2.xxxx.com.cn #[高圆圆]

# The persistence is enabled by default and a default StorageClass

# is needed in the k8s cluster to provision volumes dynamicly.

# Specify another StorageClass in the "storageClass" or set "existingClaim"

# if you have already existing persistent volumes to use

#

# For storing images and charts, you can also use "azure", "gcs", "s3",

# "swift" or "oss". Set it in the "imageChartStorage" section

persistence:

enabled: true

# Setting it to "keep" to avoid removing PVCs during a helm delete

# operation. Leaving it empty will delete PVCs after the chart deleted

resourcePolicy: "keep"

persistentVolumeClaim:

registry:

# Use the existing PVC which must be created manually before bound,

# and specify the "subPath" if the PVC is shared with other components

existingClaim: ""

# Specify the "storageClass" used to provision the volume. Or the default

# StorageClass will be used(the default).

# Set it to "-" to disable dynamic provisioning

storageClass: "ceph-rbd" #[高圆圆]

subPath: ""

accessMode: ReadWriteOnce

size: 5Gi

chartmuseum:

existingClaim: ""

storageClass: "ceph-rbd" #[高圆圆]

subPath: ""

accessMode: ReadWriteOnce

size: 5Gi

jobservice:

existingClaim: ""

storageClass: "ceph-rbd" #[高圆圆]

subPath: ""

accessMode: ReadWriteOnce

size: 1Gi

# If external database is used, the following settings for database will

# be ignored

database:

existingClaim: ""

storageClass: "ceph-rbd" #[高圆圆]

subPath: ""

accessMode: ReadWriteOnce

size: 1Gi

# If external Redis is used, the following settings for Redis will

# be ignored

redis:

existingClaim: ""

storageClass: "ceph-rbd" #[高圆圆]

subPath: ""

accessMode: ReadWriteOnce

size: 1Gi

# Define which storage backend is used for registry and chartmuseum to store

# images and charts. Refer to

# https://github.com/docker/distribution/blob/master/docs/configuration.md#storage

# for the detail.

imageChartStorage:

# Specify whether to disable `redirect` for images and chart storage, for

# backends which not supported it (such as using minio for `s3` storage type), please disable

# it. To disable redirects, simply set `disableredirect` to `true` instead.

# Refer to

# https://github.com/docker/distribution/blob/master/docs/configuration.md#redirect

# for the detail.

disableredirect: false

# Specify the type of storage: "filesystem", "azure", "gcs", "s3", "swift",

# "oss" and fill the information needed in the corresponding section. The type

# must be "filesystem" if you want to use persistent volumes for registry

# and chartmuseum

type: s3

filesystem:

rootdirectory: /storage

#maxthreads: 100

azure:

accountname: accountname

accountkey: base64encodedaccountkey

container: containername

#realm: core.windows.net

gcs:

bucket: bucketname

# The base64 encoded json file which contains the key

encodedkey: base64-encoded-json-key-file

#rootdirectory: /gcs/object/name/prefix

#chunksize: "5242880"

s3:

region: default

bucket: [高圆圆]

accesskey: [高圆圆]

secretkey: [高圆圆]

regionendpoint: [高圆圆]

#encrypt: false

#keyid: mykeyid

secure: false

#v4auth: true

#chunksize: "5242880"

rootdirectory: /registry

#storageclass: STANDARD

imagePullPolicy: IfNotPresent

logLevel: debug

# The initial password of Harbor admin. Change it from portal after launching Harbor

harborAdminPassword: "Harbor12345"

# The secret key used for encryption. Must be a string of 16 chars.

secretKey: "not-a-secure-key"

database:

# if external database is used, set "type" to "external"

# and fill the connection informations in "external" section

type: internal

internal:

image:

repository: [高圆圆]

tag: v1.8.6

# The initial superuser password for internal database

password: [高圆圆]

# resources:

# requests:

# memory: 256Mi

# cpu: 100m

nodeSelector: {}

tolerations: []

affinity: {}
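Rather than editing the chart's values.yaml in place, the same changes can be kept in a small override file and passed to helm with -f. The sketch below shows only the keys changed above, with their nesting; the file name harbor-override.yaml is arbitrary, the values in angle brackets are placeholders to fill in, and the image repository overrides are omitted:

```shell
# Write an override file containing only the settings changed in this article.
# Everything in <angle brackets> is a placeholder, not a real value.
cat > harbor-override.yaml <<'EOF'
expose:
  type: ingress
  tls:
    enabled: false
  ingress:
    hosts:
      core: harbor2.xxxx.com.cn
      notary: notary2.xxxx.com.cn
externalURL: http://harbor2.xxxx.com.cn
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    jobservice:
      storageClass: "ceph-rbd"
    database:
      storageClass: "ceph-rbd"
    redis:
      storageClass: "ceph-rbd"
  imageChartStorage:
    type: s3
    s3:
      region: default
      bucket: "<bucket>"
      accesskey: "<access-key>"
      secretkey: "<secret-key>"
      regionendpoint: "<ceph-s3-endpoint>"
      secure: false
      rootdirectory: /registry
EOF

# Example (run from the chart directory):
#   helm install -f harbor-override.yaml --namespace goharbor --name harbor-1-8-6 .
```

Keeping the overrides separate makes it easier to see what differs from the chart defaults when the chart is upgraded later.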

(3) Install the Chart

With the configuration done, the installation can begin.

cd harbor-helm

# Optionally do a dry run with debug output first

helm install --debug --dry-run --namespace goharbor --name harbor-1-8-6 .

# Install for real and save the command output to deploy.yaml for later inspection

helm install --namespace goharbor --name harbor-1-8-6 . | tee ../deploy.yaml
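In an offline cluster it helps to know exactly which images the rendered manifests reference before installing. One way, sketched here under the assumption that Helm 2's client-side helm template command is available, is to render the chart locally and extract the image fields; extract_images is a hypothetical helper:

```shell
# Reads Kubernetes manifests on stdin and prints the unique image references.
# Matches both "image: x" and "- image: x"; imagePullPolicy lines are ignored.
extract_images() {
  sed -n 's/^[[:space:]-]*image:[[:space:]]*//p' \
    | tr -d "\"'" \
    | sort -u
}

# Example (run from the chart directory; no cluster access is needed):
#   helm template --name harbor-1-8-6 --namespace goharbor . | extract_images
```

Every image in the resulting list must be available in the internal registry or preloaded on the nodes.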

IV. Verification

[root@SYSOPS00065318 bankdplyop]# kubectl -n goharbor get po

NAME READY STATUS RESTARTS AGE

harbor-1-8-harbor-chartmuseum-59687f9974-rxk4q 1/1 Running 0 3d8h

harbor-1-8-harbor-clair-6c65bd97b-lbnb5 1/1 Running 0 3d8h

harbor-1-8-harbor-core-f9d44d6b9-pl5fl 1/1 Running 0 3d8h

harbor-1-8-harbor-database-0 1/1 Running 0 3d8h

harbor-1-8-harbor-jobservice-d98454d6c-tt77v 1/1 Running 0 3d8h

harbor-1-8-harbor-notary-server-86895f5744-x6v49 1/1 Running 0 3d8h

harbor-1-8-harbor-notary-signer-59d4bf5b58-kl778 1/1 Running 0 3d8h

harbor-1-8-harbor-portal-77d57c4764-qqgw9 1/1 Running 0 3d8h

harbor-1-8-harbor-redis-0 1/1 Running 0 3d8h

harbor-1-8-harbor-registry-848bc49fb7-prwkw 2/2 Running 0 3d8h

[root@SYSOPS00065318 bankdplyop]# kubectl -n goharbor get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-harbor-1-8-harbor-redis-0 Bound pvc-ce7f3e96-7440-11ea-b523-06687c008cdb 1Gi RWO ceph-rbd 3d9h

database-data-harbor-1-8-harbor-database-0 Bound pvc-ce7a7fb9-7440-11ea-b523-06687c008cdb 1Gi RWO ceph-rbd 3d9h

harbor-1-8-harbor-jobservice Bound pvc-23459419-741e-11ea-aaee-005056918741 1Gi RWO ceph-rbd 3d9h

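PS: After deployment, wait a little while for all pods to come up. If you use a Ceph StorageClass, configure the Ceph secret in advance; likewise, if you use an imagePullSecret, create it in advance. Meanwhile, check how each pod is starting with describe and logs: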

kubectl logs harbor-1-8-harbor-database-0 -n goharbor

kubectl describe po harbor-1-8-harbor-database-0 -n goharbor

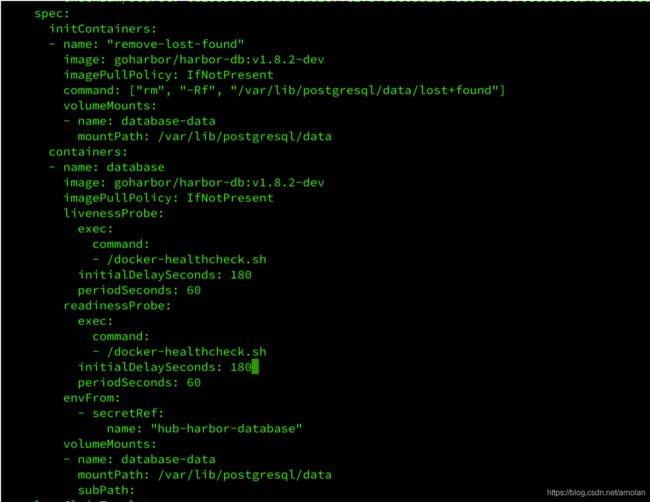

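If the database pod never comes up and reports that the databases shown above cannot be found, the cause is that the pod was forcibly restarted when its probe failed before the database had finished starting, so the three database initialization scripts never ran to completion. The fix is to lengthen the probe's initial delay and its timeout.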
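The chart version used here may not expose probe settings in values.yaml, so one possible way to lengthen them is a JSON patch against the database StatefulSet. This is only a sketch: the StatefulSet name is inferred from the pod name above, the container index 0 and the numbers 300 and 10 are assumptions, the container is assumed to already define a livenessProbe, and a later helm upgrade would reset the change:

```shell
# Build a JSON patch that sets the liveness probe's initial delay and timeout.
# $1 = initialDelaySeconds, $2 = timeoutSeconds
probe_patch() {
  base='/spec/template/spec/containers/0/livenessProbe'
  printf '[{"op":"add","path":"%s/initialDelaySeconds","value":%d},' "$base" "$1"
  printf '{"op":"add","path":"%s/timeoutSeconds","value":%d}]' "$base" "$2"
}

# Apply the patch to a StatefulSet in the goharbor namespace.
# $1 = StatefulSet name, $2 = initialDelaySeconds, $3 = timeoutSeconds
apply_probe_patch() {
  kubectl -n goharbor patch statefulset "$1" --type=json -p "$(probe_patch "$2" "$3")"
}

# Example:
#   apply_probe_patch harbor-1-8-harbor-database 300 10
```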

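Further reading

Apply the following topics as needed to suit your own environment.

1. Taking a release offline, upgrading it, and rolling it back with Helm

# Take offline

helm delete xxxxxx

# Upgrade; pay attention to which existing resources and values are reused

helm upgrade --set "key=value" ${release_name} ${chart_repo}/${chart_name}

# Roll back to revision 1 (list the revisions with helm history ${release_name})

helm rollback ${release_name} 1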
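The rollback command takes a revision number, so rolling back to the previous revision means looking it up first. A small sketch that reads helm history output and prints the second-to-last revision; previous_revision is a hypothetical helper and it assumes the revision number is the first column, as in Helm 2's table output:

```shell
# Reads `helm history RELEASE` output on stdin (header line first) and prints
# the revision just before the latest one; prints nothing if there is only one.
previous_revision() {
  awk 'NR > 1 { prev = cur; cur = $1 } END { if (prev != "") print prev }'
}

# Example:
#   rev="$(helm history harbor-1-8-6 | previous_revision)"
#   [ -n "$rev" ] && helm rollback harbor-1-8-6 "$rev"
```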

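2. About certificates

If the service is exposed through Ingress with https enabled, the browser will ask you to trust the certificate on first access, and accessing Harbor with the docker CLI fails with an error: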

[root@k8s-master harbor-helm]# docker login harbor.xxxx.com.cn

Username: admin

Password:

Error response from daemon: Get https://harbor.xxxx.com.cn/v2/: x509: certificate signed by unknown authority

[root@k8s-master harbor-helm]#

This happens because no CA certificate was provided to docker. Copy the ca.crt used by Harbor to every k8s node. Where can Harbor's certificate be found? In the Secret:

$ kubectl get secret harbor-harbor-ingress -n goharbor -o yaml

apiVersion: v1

data:

ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM5VENDQWQyZ0F3SUJBZ0lSQUtNbWp6QUlHcFZKUmZxNnJDdDMySGN3RFFZSktvWklodmNOQVFFTEJRQXcKRkRFU01CQUdBMVVFQXhNSmFHRnlZbTl5TFdOaE1CNFhEVEU1TVRBeU1UQTJOVE14T0ZvWERUSXdNVEF5TURBMgpOVE14T0Zvd0ZERVNNQkFHQTFVRUF4TUphR0Z5WW05eUxXTmhNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DCkFROEFNSUlCQ2dLQ0FRRUF1enp5anRXaStzbm55b0hWc0R1cHRERDF6ZnFvWWpsaFNGTkxBMUdtNmorWkRuR0gKVWJKeGpCMlJ5aDRvN2N5cUF6VFE3YnBhM2ZUeXJmQWo4K0RTZzdCVGNQcy8ycng1aHhrTHowWjJ5ODJ4ZmpaZgplMndPVFRvZ0NtakljZGMwOFNCVVBvSWVQRzBka2NOUnR2U2tENGFVZkx3UE42MmNWNUNidEFSazh4TGwrN3N3ClBLQUx4ZlFVTkt1RGlobXY3blRTUnVIWkoxQXFsS09CdWE4NnhpSVF6T2hlOVhaYUZTRjBGZEZUNVc0eUVkaXkKOGExelNIV0l3eklUaWs2WU82ZFE2T3N1QVV4SUFBREtMVlJYTWszMWlvZ2s0K24zYmxzTnlYR3R0OStwa0FyVwphWXJvcXRXK2FmUGxlUndDQ25DZGUydk9WMndqSTh3a291YW5Xd0lEQVFBQm8wSXdRREFPQmdOVkhROEJBZjhFCkJBTUNBcVF3SFFZRFZSMGxCQll3RkFZSUt3WUJCUVVIQXdFR0NDc0dBUVVGQndNQ01BOEdBMVVkRXdFQi93UUYKTUFNQkFmOHdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBQlBoMnVIRm0xcnp6ekdydkFLQnRjZnMxK3I0MzFrNQpWMWtjampraUl4U0FFQ1d2dW9TSGdXVkVHNVpGRjdScVRnVFl4bEduQ0hUUXpOOXlxRnUxSXU3MmdTcXBNSkZiCmkrRG9kMllDNThhL2h6WC9mWFNhREtqRnVRaWl4QlhGM0E4SlFxL2hucWVXU2pFYnRFaWhQMVlKMGNmQXZaTDAKSmJLVXgyRUluVkhReVh0bXMvcWVHZkpLbHVCTmRVWDFoNklvM0ZzLzVjeVUxeFB3ZEpJaERmMHlYMDlKb3pRMgpydzVaeEhyNGpxT2xpRTBTZCtjRVRFVCsvR2xMUGJWRmZDVDlKZms4S1VXeHZLMnN6aDdYTmRvaGErSjNDZTgrClVPZTIvZkpmdWRWRVdjRlY4a2pOOHVudHd6SWJ2cHpJa3RmY2hWT2NHMEFUZnNXNCs5ZmFRK2c9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLakNDQWhLZ0F3SUJBZ0lRTFNicmtJYlEzVFQrT3hrSlBEMldMREFOQmdrcWhraUc5dzBCQVFzRkFEQVUKTVJJd0VBWURWUVFERXdsb1lYSmliM0l0WTJFd0hoY05NVGt4TURJeE1EWTFNekU0V2hjTk1qQXhNREl3TURZMQpNekU0V2pBY01Sb3dHQVlEVlFRREV4Rm9ZWEppYjNJdWQyRnVaM2gxTG1OdmJUQ0NBU0l3RFFZSktvWklodmNOCkFRRUJCUUFEZ2dFUEFEQ0NBUW9DZ2dFQkFQUXhVTXc3ZEUzMDJLL1NyRTFkL3pmVWZLcmF5MHB3MlFOcFZNL0kKNFR4TVNjR2dYc0Y0RXZGcHM0NUYvajhIRXVrNWJIanlyR0RzaUxocjRpU294UkNLQk1pOEUvMnppVTVvSWRqUgpSWWU2aysvQU8rNUI4VXdmUHQ1VC9JM29ReUdyVmtDWXRxK2ROTHczYldVRHBJNFBoTmdETHRURTV2Y1BhRGFGCk1xRUszVlJKQmJqeHJIUndHTWNXNmhraGIvb3dNZmVGOXo3WjRUWHpPM2ZIcHpnMWhnU3dDcU9JeEhQNE5LYW4KOVNOdW0xMkpKeGgyVlZyOXFBNWg5K29tNXpySjQ0bFB5UFlFRStWRjc0MXF1QUl5eGtpcC9JMkxZYmdZZkNpUwpyRWVXM0Y2TlMrbnRDWkd6OUpySjJRc2lhVHUwR1JmZEIvNDZIN2c3SGxjQmJVMENBd0VBQWFOd01HNHdEZ1lEClZSMFBBUUgvQkFRREFnV2dNQjBHQTFVZEpRUVdNQlFHQ0NzR0FRVUZCd01CQmdnckJnRUZCUWNEQWpBTUJnTlYKSFJNQkFmOEVBakFBTUM4R0ExVWRFUVFvTUNhQ0VXaGhjbUp2Y2k1M1lXNW5lSFV1WTI5dGdoRnViM1JoY25rdQpkMkZ1WjNoMUxtTnZiVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBYmJaakw5cG1Gd3RRL2tNdVhNZmNtNnpzCkVRVVBQcmhwZXREVk9sYWtOYjhlNERTaTNDcWhicTFLTmxFdEQwd0kwOVhESG1IUEw1dXpXK2dkbzRXWnRhNUYKTjhJVnJJMStwc2R1bkdYZWxNODdJNlh6YzFzMnpIVGY3VHUreHZ4V3VsYnVZRVU5OEpheXVpa0N6L2VDMm5FdApQaVg3anZvNVBwQ1RYb2tFUG5DTlVZODZYQ3ptbWVhbTNpTFIza3pSOEdmNjRydWh4MXl2VkxEdjZiQ3NkdXJ0Ck1ORE5wbTc0aXp4aWJjRmtwWGhWZWY1ZGNvNVExakVTRmxXOEd5MnRBbXYyZFdTdW5tSWRaaXZweHpGaXdyWU4KWjkxLzR2RHdJZE5OeHo5SUIrN0ZYVVpTazQveW9PZ25pTWZTTnJhMHdwclZMZjZTdysrQUdtRnB5VTYyNUE9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBOURGUXpEdDBUZlRZcjlLc1RWMy9OOVI4cXRyTFNuRFpBMmxVejhqaFBFeEp3YUJlCndYZ1M4V216amtYK1B3Y1M2VGxzZVBLc1lPeUl1R3ZpSktqRkVJb0V5THdUL2JPSlRtZ2gyTkZGaDdxVDc4QTcKN2tIeFRCOCszbFA4amVoRElhdFdRSmkycjUwMHZEZHRaUU9ramcrRTJBTXUxTVRtOXc5b05vVXlvUXJkVkVrRgp1UEdzZEhBWXh4YnFHU0Z2K2pBeDk0WDNQdG5oTmZNN2Q4ZW5PRFdHQkxBS280akVjL2cwcHFmMUkyNmJYWWtuCkdIWlZXdjJvRG1IMzZpYm5Pc25qaVUvSTlnUVQ1VVh2aldxNEFqTEdTS244all0aHVCaDhLSktzUjViY1hvMUwKNmUwSmtiUDBtc25aQ3lKcE83UVpGOTBIL2pvZnVEc2VWd0Z0VFFJREFRQUJBb0lCQVFDOHgzZElQRnBnZmY0YQpod3JQVVBDaVQ3SUZQOXBUZFVRLzMrbENMWEQ2OVpzN2htaGF0eUlsNGVwKy9kdGRESEh4UFlSL1NGUTlKZjlZClc0YmJnbUcrdElTWVR0WkJsczk2ZndSVG93MVdyY1g2WGltMnV1SDVVRnFBOUhyVmxnNTM5QVpkTC9KamQyd3kKYWNNM2lZWm9rTlRKVGtTaEZvdmJ5ZHh0OGJFL1R5MDFoY2dtSCtTZmxPT0g1bTJyYVNBTFF3SVY5bG9QSWlsWgpzTGFtSzZFNU1rS0pITmVuQmFjVDJwSHl0R2VEbzlXQW1uSGQ2THp0SkxTNzk2dHFZZ1FSMDdDS1VSa09xZ2tUClJWRW9WbzgyWDRHeWI5VmJxQVlIWDNYY3l0VW9QaTZvNE5qRlFUWW9yWGttRHBub25DWXRYaWp0bXljdEZmbEcKc3ZHYXpzT2RBb0dCQVBSQmFMZzhqWGJ3S0p2TzRhSUVDemhLUzlhRDlDOHd6Kzd6SmsrbmZwTDhvbzFYdmlHQwpOMStuRjNaR2NZVk9nNzViOStXdGFHQyswRnY3MjA5em00TittVWFIejAxaExoSjhwZnppc1hoN1JZTDJjQkZPCitTVW9tNlBvcDNFTmtjMEl3ZDZZU1dpUm5MOCtYT0R2UXR4VWtXNUtEVEVIZThndHRVS2V4ay9MQW9HQkFQL3YKSWZzSTZpSjlrNStHMXJYbFNJYWo0RTdsT0VhdU9MWnI0U0FsWWg1anhFU1JFNjRBeENya2h0cjZjOXVJS0p1WApJLzNRcEp4TmpCSzI4NzJ3aWMrU25kK0dvb1E1RG5Ub3QxN2FwVko2L2xkUXZqdUduZjJ2d0p0S25MVEJreE1IClJ0SzdyQmNhNGVoSWNXK2ZJN3ZpMzh3Rys5N3lNTENqaEprOXdtUkhBb0dBWXlZbUJ4dDFaVUZwaW8yNUk1WTIKbzd2cyt3QUhZQnlWVzI3U0wyVlRTUUZLVHN1K1AwWG5pbWwrYWFHQXRWZEF2VVlCNC9hM053WmQ5K2pOaG52cwpOYjF2SktVK2JpK3pqd2VRTFk0cjhqYy82VUIyRDJDYVhBNFcxN3M2TlBjSUowMlZ2UERlWTVjd0pLV0ErRUhIClJ6OEE1ZDhqYWJLYStaQXNVd1cyaEc4Q2dZQkJTWEV6cG95RGkrRXltcVQrOWFSUXBGRStEdjhTR0xOaTVaWWkKS3ljaWRYVEZ3UFJ5T01QUjVVWDVhbFpQdENZWHVyQjF1Tm1rL2Fzend2UGVlY0JOOFNyUXNIbVluUzF3NlVTTgpyOXpvYzNPYU5vQ3drcUNPN0Z5SHdMckU2WFJwTUR3QzJka0djOWNZK0JIbjFZSzZGUi9kM2hJMlJ6WGdlWFlECjJWdFRWUUtCZ1FEYXd3dStQbXNOT2JTcUJQMWtUUXp
4cHpIM2svZjF1U0REczJWSmd2dEt2UlZ5YmF0WXd5SFcKVTVabmVjUi91RWZPK1VkeHQrYW9sWEtXMG5mZFo2Q0RSOW5wTmFTcDF5SVJJeStlYUpvK21ySVVrdnhKNFl1cwpETnJTbGU2QWhGNUE1QkphbjlsbUFGZ0VleWRpR2tHKzg1bEVVYTJlNElSbk1FSjRYOHJITHc9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

kind: Secret

metadata:

creationTimestamp: "2019-10-21T06:53:21Z"

labels:

app: harbor

chart: harbor

heritage: Tiller

release: harbor

name: harbor-harbor-ingress

namespace: kube-ops

resourceVersion: "7297296"

selfLink: /api/v1/namespaces/kube-ops/secrets/harbor-harbor-ingress

uid: c35d0829-3a35-425c-ac45-fdd5db7d7694

type: kubernetes.io/tls

# The value of ca.crt in the data section is the certificate we need; note that it must be base64-decoded first

$ kubectl get secrets/harbor-v1-harbor-ingress -n goharbor -o jsonpath="{.data.ca\.crt}" | base64 --decode

-----BEGIN CERTIFICATE-----

MIIC9DCCAdygAwIBAgIQffFj8E2+DLnbT3a3XRXlBjANBgkqhkiG9w0BAQsFADAU

MRIwEAYDVQQDEwloYXJib3ItY2EwHhcNMTgxMTE2MTYwODA5WhcNMjgxMTEzMTYw

ODA5WjAUMRIwEAYDVQQDEwloYXJib3ItY2EwggEiMA0GCSqGSIb3DQEBAQUAA4IB

DwAwggEKAoIBAQDw1WP6S3O+7zrhVAAZGcrAEdeQxr0c53eyDGcPL6my/h+FhZ1Y

KBvY5CLDVES957u/GtEXFfZr9aQT/PZECcccPcyZvt8NscEAuQONfrQFH/VLCvwm

XOcbFDR5BXDJR8nqGT6DVq8a1HUEOxiY39bp/Jz2HrDIfD9IMwEuyh/2IVXYHwD0

deaBpOY1slSylpOYWPFfy9UMfCsd+Jc7UCzRaiP3XWP9HMFKc4JTU8CDRR80s9UM

siU8QheVXn/Y9SxKaDfrYjaVUkEfJ6cAZkkDLmM1OzSU73N7I4nmm1SUS99vdSiZ

yu/R4oDFMezOkvYGBeDhLmmkK3sqWRh+dNoNAgMBAAGjQjBAMA4GA1UdDwEB/wQE

AwICpDAdBgNVHSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDwYDVR0TAQH/BAUw

AwEB/zANBgkqhkiG9w0BAQsFAAOCAQEAJjANauFSPZ+Da6VJSV2lGirpQN+EnrTl

u5VJxhQQGr1of4Je7aej6216KI9W5/Q4lDQfVOa/5JO1LFaiWp1AMBOlEm7FNiqx

LcLZzEZ4i6sLZ965FdrPGvy5cOeLa6D8Vx4faDCWaVYOkXoi/7oH91IuH6eEh+1H

u/Kelp8WEng4vfEcXRKkq4XTO51B1Mg1g7gflxMIoeSpXYSO5qwIL5ZqvoAD9H7J

CnQFO2xO3wrLq6TXH5Z7+0GWNghGk0GIOvF/ULHLWpsyhU5asKLK//MvORwQNHzL

b5LHG9uYeI+Jf12X4TI9qDaTCstiqM8vk1JPvgtSPJ9M62nRKY4ang==

-----END CERTIFICATE-----

Save the certificate locally as ca.crt and copy it to /etc/docker/certs.d/harbor.xxxx.com.cn/ca.crt on every k8s node; once the certificate is in place, Harbor can be accessed normally.

# 1. Create ca.crt locally from the decoded certificate data

kubectl get secrets/harbor-v1-harbor-ingress -n goharbor -o jsonpath="{.data.ca\.crt}" | base64 --decode > ca.crt

# 2. Create the certificate directory on every node of the k8s cluster

for n in `seq -w 01 06`;do ssh node-$n "mkdir -p /etc/docker/certs.d/harbor.xxxx.com.cn";done

# 3. Copy the downloaded Harbor CA certificate to /etc/docker/certs.d/harbor.xxxx.com.cn/ca.crt on every node

for n in `seq -w 01 06`;do scp ca.crt node-$n:/etc/docker/certs.d/harbor.xxxx.com.cn/ca.crt;done

Restart docker and run docker login again.

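If docker still reports the untrusted-certificate error x509: certificate signed by unknown authority, add the CA to the system trust store:

chmod 644 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem

Append the ca.crt above to /etc/pki/tls/certs/ca-bundle.crt (append rather than overwrite, otherwise the system's existing CA certificates are lost):

cat /etc/docker/certs.d/harbor.xxxx.com.cn/ca.crt >> /etc/pki/tls/certs/ca-bundle.crt

chmod 444 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem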
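On RHEL/CentOS hosts, the usual alternative to editing the bundle files directly is to drop the CA into the trust anchors directory and regenerate the bundle. A sketch, assuming the ca-certificates tooling (update-ca-trust) is installed and the commands run as root; trust_harbor_ca and the target file name harbor-ca.crt are hypothetical:

```shell
# Install a CA certificate into the system trust store and restart docker
# so the daemon picks up the new bundle.
# $1 = path to the CA certificate in PEM format
trust_harbor_ca() {
  cp "$1" /etc/pki/ca-trust/source/anchors/harbor-ca.crt \
    && update-ca-trust extract \
    && systemctl restart docker
}

# Example (as root, on each node):
#   trust_harbor_ca /etc/docker/certs.d/harbor.xxxx.com.cn/ca.crt
```

Because the bundle is regenerated from the anchors directory, the change survives later updates of the system CA package.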

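Because the method above is rather tedious, you can instead treat the registry the same way as in the non-https case and tell the docker daemon to skip certificate verification, either with the --insecure-registry startup option or, equivalently, by setting insecure-registries in the docker configuration file /etc/docker/daemon.json: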

$ cat /etc/docker/daemon.json

{

"insecure-registries": ["harbor.xxxx.com.cn"]

}

# Then restart docker

$ systemctl restart docker

# Log in again

$ docker login harbor.xxxx.com.cn

After a successful login, the credentials are stored in /root/.docker/config.json:

$ cat /root/.docker/config.json

{

"auths": {

"h.cnlinux.club": {

"auth": "YWRtaW46SGFyYm9yMTIzNDU="

}

}

}

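3. About pull secrets

If the k8s cluster has many nodes, does every node have to log in before it can pull images from the Harbor registry? That would be very tedious.

It does not. K8S provides a Secret type, kubernetes.io/dockerconfigjson, for exactly this problem. It is used as follows.

Method 1: create the Secret manually

(1) First base64-encode the docker credentials saved by the successful login (-w 0 keeps the output on a single line)

[root@node-06 ~]# base64 -w 0 .docker/config.json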

ewoJImF1dGhzIjogewoJCSJoLmNubGludXguY2x1YiI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOC4wNi4xLWNlIChsaW51eCkiCgl9Cn0=

(2) Create the Secret

The Secret is declared as follows:

apiVersion: v1

kind: Secret

metadata:

name: harbor-registry-secret

namespace: default

data:

.dockerconfigjson: ewoJImF1dGhzIjogewoJCSJoLmNubGludXguY2x1YiI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOC4wNi4xLWNlIChsaW51eCkiCgl9Cn0=

type: kubernetes.io/dockerconfigjson

Create the Secret:

[root@node-01 ~]# kubectl create -f harbor-registry-secret.yaml

secret/harbor-registry-secret created
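To make clear what the encoded value contains, the sketch below builds it from scratch: the auth field is base64 of user:password, and the .dockerconfigjson field is base64 of the whole JSON document. The function names are illustrative; the credentials in the example are the default ones shown earlier in this article:

```shell
# base64("user:password"), the value of the "auth" field in config.json
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Minimal docker config JSON for one registry.
# $1 = registry host, $2 = user, $3 = password
render_dockerconfigjson() {
  printf '{"auths":{"%s":{"auth":"%s"}}}' "$1" "$(docker_auth "$2" "$3")"
}

# Single-line base64 of that JSON, ready for the Secret's .dockerconfigjson field
encode_dockerconfigjson() {
  render_dockerconfigjson "$@" | base64 | tr -d '\n'
}

# Example:
#   encode_dockerconfigjson harbor.xxxx.com.cn admin Harbor12345
```

The tr -d '\n' step does the same job as base64 -w 0 but also works where that option is unavailable.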

Method 2: create the pull secret with a command

$ kubectl create secret docker-registry harbor-registry-secret --docker-server=${server} --docker-username=${username} --docker-password=${pwd}

secret/harbor-registry-secret created

Using the Secret

Reference it through imagePullSecrets when deploying a Deployment, as shown below:

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-nginx

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: harbor.xxxx.com.cn/test/nginx:latest

ports:

- containerPort: 80

imagePullSecrets:

- name: harbor-registry-secret
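Adding imagePullSecrets to every workload can be avoided by attaching the Secret to the namespace's ServiceAccount, so that pods using that ServiceAccount inherit it. A sketch, assuming the default ServiceAccount in the default namespace; sa_patch and attach_pull_secret are hypothetical helper names:

```shell
# Build the patch body that attaches a pull secret to a ServiceAccount.
# $1 = Secret name
sa_patch() {
  printf '{"imagePullSecrets":[{"name":"%s"}]}' "$1"
}

# Attach the Secret to a ServiceAccount.
# $1 = namespace, $2 = ServiceAccount name, $3 = Secret name
attach_pull_secret() {
  kubectl -n "$1" patch serviceaccount "$2" -p "$(sa_patch "$3")"
}

# Example:
#   attach_pull_secret default default harbor-registry-secret
```

Only pods created after the patch pick up the Secret; existing pods have to be recreated.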
