Deep Learning and PyTorch Notes 18

Cross-entropy loss

Entropy

Entropy measures uncertainty (a measure of surprise): higher entropy means less information. Definition:

$$\text{Entropy} = -\sum_{i} p(i)\log p(i)$$

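Higher entropy means a flatter, more uncertain distribution ("stable" in the sense that no single outcome is much of a surprise); lower entropy means a more peaked distribution, where a rare outcome is very surprising ("unstable").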
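As a quick check of the definition, the sketch below computes the entropy of a uniform and a sharply peaked distribution. The helper name `entropy_bits`, the two example distributions, and the choice of log base 2 (so the result is in bits) are assumptions made for illustration; the note does not fix a log base.

```python
import torch

def entropy_bits(p):
    # H(p) = -sum_i p(i) * log2 p(i), measured in bits (log base 2 is an assumption)
    return -(p * torch.log2(p)).sum()

uniform = torch.full([4], 0.25)                        # every outcome equally likely
peaked = torch.tensor([0.001, 0.001, 0.001, 0.997])    # one outcome dominates

print("uniform:", entropy_bits(uniform).item())  # 2 bits for 4 equally likely outcomes
print("peaked: ", entropy_bits(peaked).item())   # much smaller, close to 0
```

The uniform distribution reaches the maximum entropy for four outcomes, while the peaked one carries almost none.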

Cross Entropy

$$H(p,q) = -\sum_{x} p(x)\log q(x)$$

$$H(p,q) = H(p) + D_{KL}(p\|q)$$

($D_{KL}$ is the KL divergence, which measures how far apart two distributions are; the more similar they are, the closer it is to 0.)

If $P = Q$, the KL divergence term is 0, so the cross entropy equals the entropy:

$$H(P,Q) = H(P)$$

For a one-hot encoded label, where $P$ puts probability 1 on a single class and 0 on the rest, the entropy is

$$H(P) = -1\log 1 = 0$$

so $H(P,Q) = D_{KL}(P\|Q)$: minimizing the cross entropy is then the same as minimizing the KL divergence.
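The relations above can be verified numerically. This is a minimal sketch: the helper functions and the example distributions `p`, `q`, and `one_hot` are hypothetical and chosen only for illustration, and natural logarithms are assumed.

```python
import torch

def entropy(p):
    # H(p) = -sum p log p
    return -(p * torch.log(p)).sum()

def cross_entropy(p, q):
    # H(p, q) = -sum p log q
    return -(p * torch.log(q)).sum()

def kl_divergence(p, q):
    # D_KL(p || q) = sum p log(p / q)
    return (p * torch.log(p / q)).sum()

p = torch.tensor([0.7, 0.2, 0.1])
q = torch.tensor([0.5, 0.3, 0.2])

# H(p, q) = H(p) + D_KL(p || q)
print(cross_entropy(p, q).item(), (entropy(p) + kl_divergence(p, q)).item())

# If the two distributions are identical, cross entropy equals entropy.
print(cross_entropy(p, p).item(), entropy(p).item())

# With a one-hot label only the true class contributes: H(P, Q) = -log Q(true class).
one_hot = torch.tensor([1.0, 0.0, 0.0])
print(cross_entropy(one_hot, q).item(), -torch.log(q[0]).item())
```

The one-hot case is the one used in classification: the loss reduces to the negative log-probability assigned to the correct class.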

Derivation

$$H(P,Q) = -P(\text{cat})\log Q(\text{cat}) - (1 - P(\text{cat}))\log(1 - Q(\text{cat}))$$

$$P(\text{dog}) = 1 - P(\text{cat})$$

$$H(P,Q) = -\sum_{i \in \{\text{cat},\,\text{dog}\}} P(i)\log Q(i) = -P(\text{cat})\log Q(\text{cat}) - P(\text{dog})\log Q(\text{dog}) = -\big(y\log(p) + (1-y)\log(1-p)\big)$$

Here $y = P(\text{cat})$ is the label and $p = Q(\text{cat})$ is the predicted probability. If $y = 1$, we need to maximize $\log(p)$. If $y = 0$, we need to maximize $\log(1-p)$, that is, minimize $p$.
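The binary formula can be checked against PyTorch's built-in `F.binary_cross_entropy`. This is an illustrative sketch; the helper `binary_cross_entropy` and the sample values of `y` and `p` are assumptions, not taken from the note.

```python
import torch
import torch.nn.functional as F

def binary_cross_entropy(y, p):
    # -(y log p + (1 - y) log(1 - p)), computed per sample
    return -(y * torch.log(p) + (1 - y) * torch.log(1 - p))

y = torch.tensor([1.0, 1.0, 0.0, 0.0])   # labels
p = torch.tensor([0.9, 0.1, 0.1, 0.9])   # predicted probability of the positive class

manual = binary_cross_entropy(y, p)
builtin = F.binary_cross_entropy(p, y, reduction="none")

print(manual)   # small loss when p agrees with y, large loss when it disagrees
print(builtin)
```

For `y = 1` the loss falls as `p` grows, and for `y = 0` it falls as `p` shrinks, matching the two cases described above.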

Summary

Why not use MSE?

- Sigmoid + MSE

- Vanishing gradients: when the sigmoid saturates, its gradient shrinks toward zero.

- Slower convergence

- But MSE sometimes works well

- e.g. meta-learning
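The vanishing-gradient point can be illustrated with a single saturated sigmoid output. In this sketch the logit value `-10.0` and the label `1.0` are arbitrary choices for illustration: the prediction is confidently wrong, so a useful loss should produce a large gradient.

```python
import torch
import torch.nn.functional as F

y = torch.tensor([1.0])  # true label

# Sigmoid + MSE: the gradient is scaled by sigmoid'(z), which is tiny when saturated.
z_mse = torch.tensor([-10.0], requires_grad=True)
F.mse_loss(torch.sigmoid(z_mse), y).backward()

# Sigmoid + cross entropy (computed from the logit): the gradient is sigmoid(z) - y.
z_ce = torch.tensor([-10.0], requires_grad=True)
F.binary_cross_entropy_with_logits(z_ce, y).backward()

print("MSE gradient:          ", z_mse.grad.item())
print("cross-entropy gradient:", z_ce.grad.item())
```

With MSE the gradient is nearly zero even though the prediction is badly wrong, whereas cross entropy yields a gradient of magnitude close to 1.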

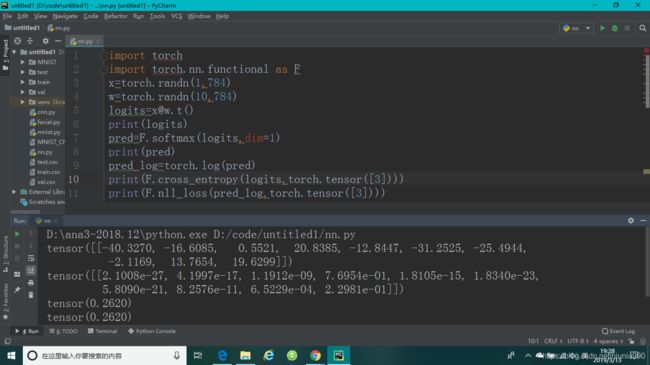

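F.cross_entropy() must be given logits, because it already includes the softmax step. To compute the loss by hand, apply F.softmax(), then torch.log(), and finally F.nll_loss(); in that case the argument passed to F.nll_loss() is the log-probabilities pred_log. Computing it by hand is not recommended; use F.cross_entropy() directly.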

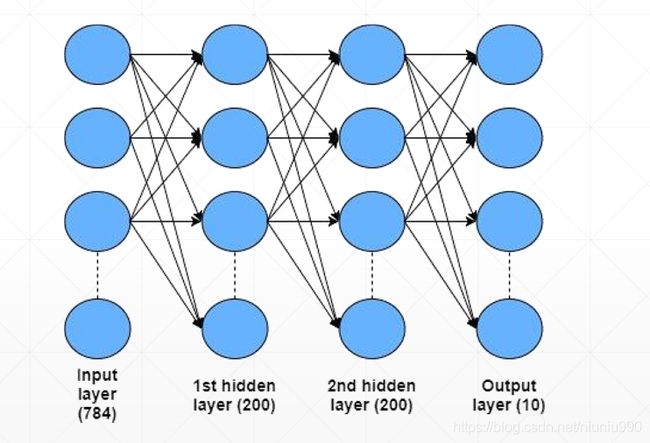
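The equivalence described above can be checked on a small example. This is a sketch with made-up logits and a made-up target; the variable names other than `pred_log` are assumptions.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 0.5, -1.0]])  # raw scores for one sample, 3 classes
target = torch.tensor([0])                 # index of the true class

# Recommended: pass the logits directly.
loss_builtin = F.cross_entropy(logits, target)

# Manual route: softmax -> log -> nll_loss.
pred = F.softmax(logits, dim=1)
pred_log = torch.log(pred)
loss_manual = F.nll_loss(pred_log, target)

print(loss_builtin.item(), loss_manual.item())
```

Both routes give the same value here. With very large logits, however, the separate softmax and log steps can underflow to `log(0)`, which is one reason to prefer `F.cross_entropy()` (or `F.log_softmax()` if the log-probabilities are needed).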

Optimizing a multi-class classification problem with cross entropy

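    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    # Create three linear layers by hand.
    # First layer: input dimension 784, output dimension 200; weight shape is (ch_out, ch_in).
    w1, b1 = torch.randn(200, 784, requires_grad=True), \
             torch.zeros(200, requires_grad=True)
    # Second hidden layer: 200 -> 200.
    w2, b2 = torch.randn(200, 200, requires_grad=True), \
             torch.zeros(200, requires_grad=True)
    # Output layer: 200 -> 10 classes.
    w3, b3 = torch.randn(10, 200, requires_grad=True), \
             torch.zeros(10, requires_grad=True)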

    # Put the forward pass in a single function.
    def forward(x):
        x = x @ w1.t() + b1
        x = F.relu(x)
        x = x @ w2.t() + b2
        x = F.relu(x)
        x = x @ w3.t() + b3
        x = F.relu(x)  # logits (ReLU is applied here as well; no softmax)
        return x

Define an optimizer whose optimization targets are $[w_1, b_1, w_2, b_2, w_3, b_3]$:

    # learning_rate, epochs and train_loader are assumed to be defined elsewhere.
    optimizer = optim.SGD([w1, b1, w2, b2, w3, b3], lr=learning_rate)  # define the optimizer
    criteon = nn.CrossEntropyLoss()  # already includes the softmax step

    for epoch in range(epochs):
        for batch_idx, (data, target) in enumerate(train_loader):
            data = data.view(-1, 28*28)  # flatten each 28x28 image into 784 values
            logits = forward(data)  # do not add another softmax here
            loss = criteon(logits, target)

            optimizer.zero_grad()
            loss.backward()
            # print(w1.grad.norm(), w2.grad.norm())
            optimizer.step()

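The weights must be properly initialized before training, otherwise the gradients vanish. The biases are already initialized to 0.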

    # Kaiming (He) initialization, applied in place to each weight matrix.
    torch.nn.init.kaiming_normal_(w1)
    torch.nn.init.kaiming_normal_(w2)
    torch.nn.init.kaiming_normal_(w3)

(Kaiming initialization is named after Kaiming He.)
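To see why initialization matters, the sketch below compares the scale of the logits produced by plain `torch.randn` weights with those produced after `kaiming_normal_`. The helper `make_weights`, the random stand-in batch (not real MNIST data), and the omission of the zero biases are assumptions made to keep the example self-contained.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

def make_weights(use_kaiming):
    # Hypothetical helper: builds the three weight matrices with the shapes used in the note.
    weights = []
    for shape in [(200, 784), (200, 200), (10, 200)]:
        w = torch.randn(*shape, requires_grad=True)
        if use_kaiming:
            torch.nn.init.kaiming_normal_(w)  # in-place re-initialization
        weights.append(w)
    return weights

def forward(x, weights):
    # Same structure as the note's forward pass; the biases are zero, so they are omitted.
    for w in weights:
        x = F.relu(x @ w.t())
    return x

x = torch.randn(64, 784)               # stand-in batch of flattened 28x28 inputs
target = torch.randint(0, 10, (64,))   # random stand-in labels

logits_randn = forward(x, make_weights(use_kaiming=False))
logits_kaiming = forward(x, make_weights(use_kaiming=True))

loss_randn = F.cross_entropy(logits_randn, target)
loss_kaiming = F.cross_entropy(logits_kaiming, target)

print("logit std, randn init:  ", logits_randn.std().item())
print("logit std, kaiming init:", logits_kaiming.std().item())
print("loss, randn init:  ", loss_randn.item())
print("loss, kaiming init:", loss_kaiming.item())
```

With unit-variance weights the activations grow at every layer, so the logits become enormous and the softmax inside the loss saturates; Kaiming initialization keeps the logits at a moderate scale from the first step.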