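RocketMQ Disk Flush Source Code Analysis

I recently read through RocketMQ's disk-flush source code; this post is a summary.

As is well known, there are two flush modes: synchronous flush and asynchronous flush.

When a message is sent to the broker, the broker's underlying communication framework, Netty, receives the request and hands it to a worker thread, which processes it and returns a response.

The handling of this request lives in the SendMessageProcessor class in the broker package. Let's look at its core method: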

    private RemotingCommand sendMessage(final ChannelHandlerContext ctx,
                                        final RemotingCommand request,
                                        final SendMessageContext sendMessageContext,
                                        final SendMessageRequestHeader requestHeader) throws RemotingCommandException {

        final RemotingCommand response = RemotingCommand.createResponseCommand(SendMessageResponseHeader.class);
        final SendMessageResponseHeader responseHeader = (SendMessageResponseHeader)response.readCustomHeader();

        response.setOpaque(request.getOpaque());

        response.addExtField(MessageConst.PROPERTY_MSG_REGION, this.brokerController.getBrokerConfig().getRegionId());
        response.addExtField(MessageConst.PROPERTY_TRACE_SWITCH, String.valueOf(this.brokerController.getBrokerConfig().isTraceOn()));

        log.debug("receive SendMessage request command, {}", request);

        final long startTimstamp = this.brokerController.getBrokerConfig().getStartAcceptSendRequestTimeStamp();
        if (this.brokerController.getMessageStore().now() < startTimstamp) {
            response.setCode(ResponseCode.SYSTEM_ERROR);
            response.setRemark(String.format("broker unable to service, until %s", UtilAll.timeMillisToHumanString2(startTimstamp)));
            return response;
        }

        response.setCode(-1);
        super.msgCheck(ctx, requestHeader, response);
        if (response.getCode() != -1) {
            return response;
        }

        final byte[] body = request.getBody();

        int queueIdInt = requestHeader.getQueueId();
        TopicConfig topicConfig = this.brokerController.getTopicConfigManager().selectTopicConfig(requestHeader.getTopic());

        if (queueIdInt < 0) {
            queueIdInt = Math.abs(this.random.nextInt() % 99999999) % topicConfig.getWriteQueueNums();
        }

        MessageExtBrokerInner msgInner = new MessageExtBrokerInner();
        msgInner.setTopic(requestHeader.getTopic());
        msgInner.setQueueId(queueIdInt);

        if (!handleRetryAndDLQ(requestHeader, response, request, msgInner, topicConfig)) {
            return response;
        }

        msgInner.setBody(body);
        msgInner.setFlag(requestHeader.getFlag());
        MessageAccessor.setProperties(msgInner, MessageDecoder.string2messageProperties(requestHeader.getProperties()));
        msgInner.setPropertiesString(requestHeader.getProperties());
        msgInner.setBornTimestamp(requestHeader.getBornTimestamp());
        msgInner.setBornHost(ctx.channel().remoteAddress());
        msgInner.setStoreHost(this.getStoreHost());
        msgInner.setReconsumeTimes(requestHeader.getReconsumeTimes() == null ? 0 : requestHeader.getReconsumeTimes());

        PutMessageResult putMessageResult = null;
        Map<String, String> oriProps = MessageDecoder.string2messageProperties(requestHeader.getProperties());
        String traFlag = oriProps.get(MessageConst.PROPERTY_TRANSACTION_PREPARED);
        if (traFlag != null && Boolean.parseBoolean(traFlag)) {
            if (this.brokerController.getBrokerConfig().isRejectTransactionMessage()) {
                response.setCode(ResponseCode.NO_PERMISSION);
                response.setRemark(
                    "the broker[" + this.brokerController.getBrokerConfig().getBrokerIP1()
                        + "] sending transaction message is forbidden");
                return response;
            }
            putMessageResult = this.brokerController.getTransactionalMessageService().prepareMessage(msgInner);
        } else {
            putMessageResult = this.brokerController.getMessageStore().putMessage(msgInner);
        }

        return handlePutMessageResult(putMessageResult, response, request, msgInner, responseHeader, sendMessageContext, ctx, queueIdInt);
    }

The first part of this code is straightforward: it creates a response, then calls super.msgCheck(ctx, requestHeader, response); to run the necessary checks on the message. After that it parses the request and copies its fields into msgInner, which is the carrier of the message. We are not concerned with transactional messages here, so execution ends up at

putMessageResult = this.brokerController.getMessageStore().putMessage(msgInner);

This is the key code for persisting the message.

    public PutMessageResult putMessage(MessageExtBrokerInner msg) {
        if (this.shutdown) {
            log.warn("message store has shutdown, so putMessage is forbidden");
            return new PutMessageResult(PutMessageStatus.SERVICE_NOT_AVAILABLE, null);
        }

        if (BrokerRole.SLAVE == this.messageStoreConfig.getBrokerRole()) {
            long value = this.printTimes.getAndIncrement();
            if ((value % 50000) == 0) {
                log.warn("message store is slave mode, so putMessage is forbidden ");
            }
            return new PutMessageResult(PutMessageStatus.SERVICE_NOT_AVAILABLE, null);
        }

        if (!this.runningFlags.isWriteable()) {
            long value = this.printTimes.getAndIncrement();
            if ((value % 50000) == 0) {
                log.warn("message store is not writeable, so putMessage is forbidden " + this.runningFlags.getFlagBits());
            }
            return new PutMessageResult(PutMessageStatus.SERVICE_NOT_AVAILABLE, null);
        } else {
            this.printTimes.set(0);
        }

        if (msg.getTopic().length() > Byte.MAX_VALUE) {
            log.warn("putMessage message topic length too long " + msg.getTopic().length());
            return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, null);
        }

        if (msg.getPropertiesString() != null && msg.getPropertiesString().length() > Short.MAX_VALUE) {
            log.warn("putMessage message properties length too long " + msg.getPropertiesString().length());
            return new PutMessageResult(PutMessageStatus.PROPERTIES_SIZE_EXCEEDED, null);
        }

        if (this.isOSPageCacheBusy()) {
            return new PutMessageResult(PutMessageStatus.OS_PAGECACHE_BUSY, null);
        }

        long beginTime = this.getSystemClock().now();
        PutMessageResult result = this.commitLog.putMessage(msg);

        long eclipseTime = this.getSystemClock().now() - beginTime;
        if (eclipseTime > 500) {
            log.warn("putMessage not in lock eclipse time(ms)={}, bodyLength={}", eclipseTime, msg.getBody().length);
        }
        this.storeStatsService.setPutMessageEntireTimeMax(eclipseTime);

        if (null == result || !result.isOk()) {
            this.storeStatsService.getPutMessageFailedTimes().incrementAndGet();
        }

        return result;
    }

The first part of this method is a series of checks: whether the message store has been shut down, whether the broker is a slave, whether the store is not writable, whether the topic is too long, whether the OS page cache is busy, and so on. If none of them fails, execution reaches

PutMessageResult result = this.commitLog.putMessage(msg);

    public PutMessageResult putMessage(final MessageExtBrokerInner msg) {
        // Set the storage time
        msg.setStoreTimestamp(System.currentTimeMillis());
        // Set the message body BODY CRC (consider the most appropriate setting
        // on the client)
        msg.setBodyCRC(UtilAll.crc32(msg.getBody()));
        // Back to Results
        AppendMessageResult result = null;

        StoreStatsService storeStatsService = this.defaultMessageStore.getStoreStatsService();

        String topic = msg.getTopic();
        int queueId = msg.getQueueId();

        final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());
        if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE
            || tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {
            // Delay Delivery
            if (msg.getDelayTimeLevel() > 0) {
                if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {
                    msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());
                }

                topic = ScheduleMessageService.SCHEDULE_TOPIC;
                queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());

                // Backup real topic, queueId
                MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());
                MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));
                msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));

                msg.setTopic(topic);
                msg.setQueueId(queueId);
            }
        }

        long eclipseTimeInLock = 0;
        MappedFile unlockMappedFile = null;
        MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();

        putMessageLock.lock(); //spin or ReentrantLock ,depending on store config
        try {
            long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();
            this.beginTimeInLock = beginLockTimestamp;

            // Here settings are stored timestamp, in order to ensure an orderly
            // global
            msg.setStoreTimestamp(beginLockTimestamp);

            if (null == mappedFile || mappedFile.isFull()) {
                mappedFile = this.mappedFileQueue.getLastMappedFile(0); // Mark: NewFile may be cause noise
            }
            if (null == mappedFile) {
                log.error("create mapped file1 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, null);
            }

            result = mappedFile.appendMessage(msg, this.appendMessageCallback);
            switch (result.getStatus()) {
                case PUT_OK:
                    break;
                case END_OF_FILE:
                    unlockMappedFile = mappedFile;
                    // Create a new file, re-write the message
                    mappedFile = this.mappedFileQueue.getLastMappedFile(0);
                    if (null == mappedFile) {
                        // XXX: warn and notify me
                        log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                        beginTimeInLock = 0;
                        return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result);
                    }
                    result = mappedFile.appendMessage(msg, this.appendMessageCallback);
                    break;
                case MESSAGE_SIZE_EXCEEDED:
                case PROPERTIES_SIZE_EXCEEDED:
                    beginTimeInLock = 0;
                    return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result);
                case UNKNOWN_ERROR:
                    beginTimeInLock = 0;
                    return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
                default:
                    beginTimeInLock = 0;
                    return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
            }

            eclipseTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;
            beginTimeInLock = 0;
        } finally {
            putMessageLock.unlock();
        }

        if (eclipseTimeInLock > 500) {
            log.warn("[NOTIFYME]putMessage in lock cost time(ms)={}, bodyLength={} AppendMessageResult={}", eclipseTimeInLock, msg.getBody().length, result);
        }

        if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
            this.defaultMessageStore.unlockMappedFile(unlockMappedFile);
        }

        PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);

        // Statistics
        storeStatsService.getSinglePutMessageTopicTimesTotal(msg.getTopic()).incrementAndGet();
        storeStatsService.getSinglePutMessageTopicSizeTotal(topic).addAndGet(result.getWroteBytes());

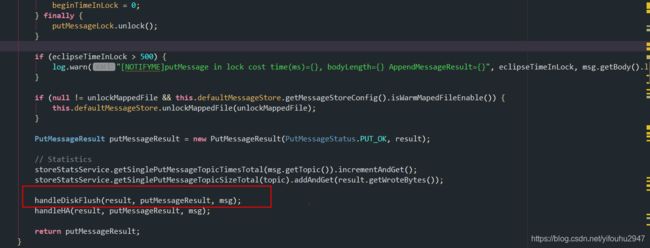

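        handleDiskFlush(result, putMessageResult, msg);
        handleHA(result, putMessageResult, msg);

        return putMessageResult;
    }

As usual the method opens with a batch of preliminary work; the real core runs from putMessageLock.lock() to the end. The lock is taken so that mappedFile.appendMessage(msg, this.appendMessageCallback), which appends the message in memory, cannot run into concurrency problems. Let's open mappedFile.appendMessage, which ends up in appendMessagesInner: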

    public AppendMessageResult appendMessagesInner(final MessageExt messageExt, final AppendMessageCallback cb) {
        assert messageExt != null;
        assert cb != null;

        int currentPos = this.wrotePosition.get();

        if (currentPos < this.fileSize) {
            ByteBuffer byteBuffer = writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();
            byteBuffer.position(currentPos);
            AppendMessageResult result = null;
            if (messageExt instanceof MessageExtBrokerInner) {
                result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBrokerInner) messageExt);
            } else if (messageExt instanceof MessageExtBatch) {
                result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBatch) messageExt);
            } else {
                return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
            }
            this.wrotePosition.addAndGet(result.getWroteBytes());
            this.storeTimestamp = result.getStoreTimestamp();
            return result;
        }

        log.error("MappedFile.appendMessage return null, wrotePosition: {} fileSize: {}", currentPos, this.fileSize);
        return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
    }

int currentPos = this.wrotePosition.get(); returns the position up to which this MappedFile has been appended in memory. (This position does not represent how far the file has been flushed to disk; flushing happens later.) The code then checks that currentPos is still smaller than fileSize (fileSize defaults to 1024*1024*1024, i.e. 1 GB).

ByteBuffer byteBuffer = writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();

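Whether writeBuffer is null depends on whether transientStorePoolEnable is turned on (this option only takes effect with asynchronous flush). With asynchronous flush and transientStorePoolEnable on, a new message arriving at the broker is first appended to writeBuffer. This writeBuffer is off-heap memory: when the broker initializes it creates a TransientStorePool, and the buffers are produced by TransientStorePool.init(). If transientStorePoolEnable is off, the new message is instead appended to the memory-mapped mappedByteBuffer.

After that, wrotePosition and storeTimestamp are updated. These are, respectively, the position up to which the commit log has been appended in memory and the time at which the message was added to memory.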

this.wrotePosition.addAndGet(result.getWroteBytes());

this.storeTimestamp = result.getStoreTimestamp();
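To make the difference between the in-memory write position and the flushed position concrete, here is a minimal sketch. The class AppendSketch and its methods are hypothetical names, and a heap ByteBuffer stands in for writeBuffer or mappedByteBuffer; it only mirrors the slice-then-advance pattern described above and is not RocketMQ's implementation.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a mapped file: a fixed-size buffer plus two positions.
final class AppendSketch {
    private final int fileSize;
    private final ByteBuffer buffer;
    private final AtomicInteger wrotePosition = new AtomicInteger(0);   // appended in memory
    private final AtomicInteger flushedPosition = new AtomicInteger(0); // assumed durable

    AppendSketch(int fileSize) {
        this.fileSize = fileSize;
        this.buffer = ByteBuffer.allocate(fileSize);
    }

    /** Appends data at the current write position; returns bytes written, or -1 if it does not fit. */
    int append(byte[] data) {
        int currentPos = wrotePosition.get();
        if (currentPos + data.length > fileSize) {
            return -1;
        }
        // slice() shares the bytes but has its own position, so the shared buffer's
        // own position is never moved by writers.
        ByteBuffer slice = buffer.slice();
        slice.position(currentPos);
        slice.put(data);
        wrotePosition.addAndGet(data.length);
        return data.length;
    }

    /** Pretends to flush: only now does the flushed position catch up. */
    void flush() {
        flushedPosition.set(wrotePosition.get());
    }

    int getWrotePosition() {
        return wrotePosition.get();
    }

    int getFlushedPosition() {
        return flushedPosition.get();
    }

    public static void main(String[] args) {
        AppendSketch file = new AppendSketch(16);
        file.append("hello".getBytes(StandardCharsets.UTF_8));
        System.out.println(file.getWrotePosition() + " written, " + file.getFlushedPosition() + " flushed");
    }
}
```

Until flush() is called, the two positions differ, which is exactly the window the flush services discussed later have to close.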

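Now back to the putMessage(final MessageExtBrokerInner msg) method.

The appendMessagesInner call returns a result, and the code checks whether its status is PUT_OK. If it is, execution continues and the lock is released with putMessageLock.unlock();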
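The lock, append, and roll-over-on-END_OF_FILE flow in putMessage can be sketched in a few lines. This is a simplified model under stated assumptions: RollingAppendSketch is a hypothetical class, each file is reduced to its write position, and the assumption that the unused tail of a full file is skipped is mine, not something shown in the listing above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical model: a queue of fixed-size files, each represented by its write position.
final class RollingAppendSketch {
    private final int fileSize;
    private final List<Integer> wrotePositions = new ArrayList<>();
    private final ReentrantLock putMessageLock = new ReentrantLock();

    RollingAppendSketch(int fileSize) {
        this.fileSize = fileSize;
    }

    /** Appends a record of the given length and returns its global start offset. */
    long append(int length) {
        if (length <= 0 || length > fileSize) {
            throw new IllegalArgumentException("record must fit into one file");
        }
        putMessageLock.lock(); // only one writer may advance the write position at a time
        try {
            // No file yet, or the last one is full: open a new one.
            if (wrotePositions.isEmpty() || lastPosition() == fileSize) {
                wrotePositions.add(0);
            }
            int last = wrotePositions.size() - 1;
            int pos = wrotePositions.get(last);
            if (pos + length > fileSize) {
                // END_OF_FILE case: close the current file (assumption: its tail is skipped)
                // and retry the append on a fresh file.
                wrotePositions.set(last, fileSize);
                wrotePositions.add(0);
                last++;
                pos = 0;
            }
            wrotePositions.set(last, pos + length);
            return (long) last * fileSize + pos;
        } finally {
            putMessageLock.unlock();
        }
    }

    int fileCount() {
        return wrotePositions.size();
    }

    private int lastPosition() {
        return wrotePositions.get(wrotePositions.size() - 1);
    }

    public static void main(String[] args) {
        RollingAppendSketch log = new RollingAppendSketch(10);
        System.out.println(log.append(6)); // first record starts at offset 0
        System.out.println(log.append(6)); // does not fit in the remaining 4 bytes, rolls over
    }
}
```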

After that we arrive at the most critical part of all: the core flush code!

So let's analyze the handleDiskFlush method in detail.

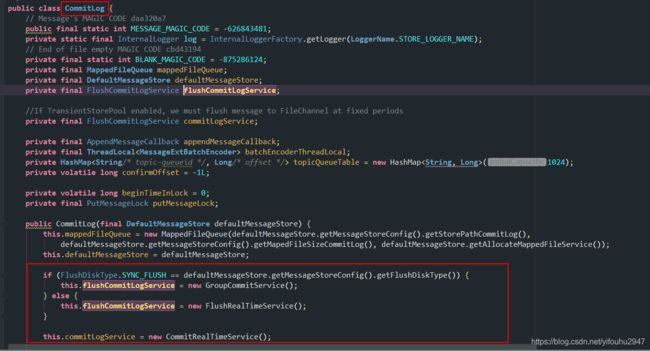

1. Synchronous flush:

In this case (FlushDiskType.SYNC_FLUSH == this.defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) is true,

so flushCommitLogService is a GroupCommitService.

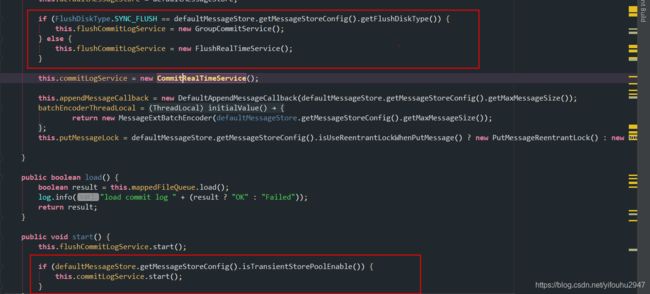

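Let's first step back: when CommitLog is initialized, the flush strategy from the configuration file and the value of transientStorePoolEnable decide how the two member variables flushCommitLogService and commitLogService are set up.

Specifically, with asynchronous flush flushCommitLogService is initialized as FlushRealTimeService; otherwise it is GroupCommitService. commitLogService, on the other hand, is only started (its start() method is called) when the flush is asynchronous and transientStorePoolEnable is also true.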
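The selection rule just described fits in two small functions. The sketch below is only a restatement of the paragraph above in code; FlushServiceSelection and its method names are hypothetical, and the service names are returned as strings purely for illustration.

```java
// Hypothetical restatement of the selection rule; not RocketMQ's CommitLog constructor.
final class FlushServiceSelection {
    enum FlushDiskType { SYNC_FLUSH, ASYNC_FLUSH }

    /** Which flush service handles the commit log for the given strategy. */
    static String flushServiceFor(FlushDiskType type) {
        return type == FlushDiskType.SYNC_FLUSH ? "GroupCommitService" : "FlushRealTimeService";
    }

    /** The commit service only runs with asynchronous flush plus the transient store pool. */
    static boolean commitServiceStarted(FlushDiskType type, boolean transientStorePoolEnable) {
        return type == FlushDiskType.ASYNC_FLUSH && transientStorePoolEnable;
    }

    public static void main(String[] args) {
        for (FlushDiskType type : FlushDiskType.values()) {
            System.out.println(type + " -> " + flushServiceFor(type)
                + ", commit service with pool enabled: " + commitServiceStarted(type, true));
        }
    }
}
```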

Now back to the handleDiskFlush method. It wraps the result in a GroupCommitRequest and puts it into the GroupCommitService.

service.putRequest(request);

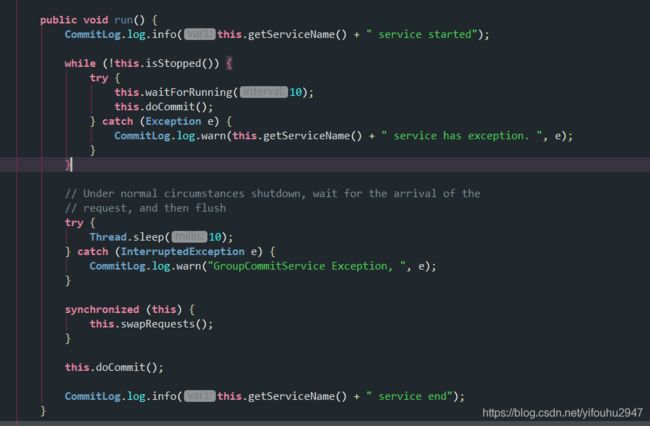

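Opening the GroupCommitService class, we find two member variables.

This is the cleverest part of the synchronous-flush design in GroupCommitService. There are two containers. The write container is for the threads that handle messages sent by clients: they wrap the result in a request and drop it into this container. The run() method of GroupCommitService, meanwhile, processes the read container. In run(), doCommit() is only called after each waitForRunning(10), that is, after waiting up to 10 ms, and waitForRunning(10) calls this.swapRequests(); to swap the write and read containers. This way receiving messages from clients and persisting them do not get in each other's way, which greatly increases throughput.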
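The two-container idea can be illustrated with a small sketch. SwapQueueSketch and its member names are hypothetical, and requests are plain strings; the point is only that producers hold the lock for a single add, while the single flush thread works on its own list without blocking them.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical double-buffer sketch: producers fill one list while the flusher drains the other.
final class SwapQueueSketch {
    private final Object lock = new Object();
    private List<String> requestsWrite = new ArrayList<>();
    private List<String> requestsRead = new ArrayList<>();

    /** Called by many producer threads; the critical section is a single add. */
    void putRequest(String request) {
        synchronized (lock) {
            requestsWrite.add(request);
        }
    }

    /** Exchanges the two lists; afterwards the flusher owns everything queued so far. */
    private void swapRequests() {
        synchronized (lock) {
            List<String> tmp = requestsWrite;
            requestsWrite = requestsRead;
            requestsRead = tmp;
        }
    }

    /** Called only by the single flusher thread: swap, process, clear. */
    List<String> drainOnce() {
        swapRequests();
        List<String> handled = new ArrayList<>(requestsRead);
        requestsRead.clear(); // the emptied list becomes the write list at the next swap
        return handled;
    }

    public static void main(String[] args) {
        SwapQueueSketch service = new SwapQueueSketch();
        service.putRequest("req-1");
        service.putRequest("req-2");
        System.out.println(service.drainOnce());
    }
}
```

The design choice worth noting is that the expensive work (the disk flush) happens outside the lock, so a slow flush never delays putRequest.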

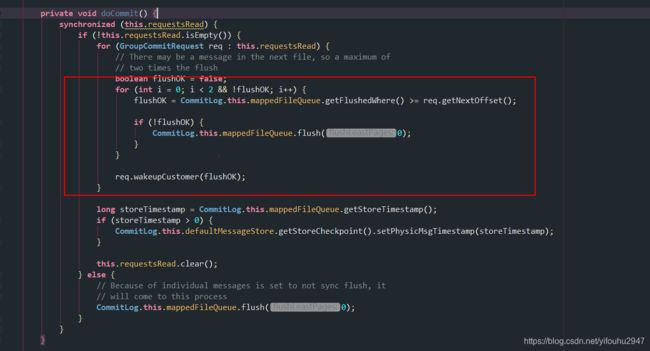

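Now let's look at the doCommit method.

The reason the for loop may flush twice is that the req.getNextOffset() being processed may not be in the same mappedFile as flushedWhere.

Many readers will probably be puzzled by this at first; it took me quite a while to understand it too. flush(0) first looks up the position where the previous flush ended, flushedWhere (a member variable of mappedFileQueue). It uses this position to locate the corresponding mappedFile and then calls mappedFile.flush(0) on that file. Because flushedWhere may lie in the second-to-last mappedFile, the flushed position returned after mappedFile.flush(flushLeastPages) finishes is still smaller than req.getNextOffset(), which lies in the last mappedFile. In that case flushOK is still false and CommitLog.this.mappedFileQueue.flush(0); has to run once more. The second call locates the last mappedFile, and flushing that one with mappedFile.flush(0) finally completes the flush.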
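A toy model makes the two-pass behaviour easy to trace. In the sketch below, TwoPassFlushSketch is a hypothetical class, files are 100 bytes long, and each flush() call only flushes the single file that contains flushedWhere, as described above. It is an illustration of the reasoning, not the real mappedFileQueue.

```java
// Hypothetical model of a file queue where one flush() call touches only one file.
final class TwoPassFlushSketch {
    static final int FILE_SIZE = 100;

    private final int[] wrotePositions; // write position inside each file
    private long flushedWhere;          // global offset flushed so far

    TwoPassFlushSketch(int[] wrotePositions, long flushedWhere) {
        this.wrotePositions = wrotePositions.clone();
        this.flushedWhere = flushedWhere;
    }

    /** Flushes only the file that contains flushedWhere, up to that file's write position. */
    void flush() {
        int index = (int) (flushedWhere / FILE_SIZE);
        if (index >= wrotePositions.length) {
            return; // nothing written beyond this point
        }
        flushedWhere = (long) index * FILE_SIZE + wrotePositions[index];
    }

    /** Returns how many flush passes were needed to reach nextOffset, or -1 if two were not enough. */
    int flushUntil(long nextOffset) {
        int passes = 0;
        boolean flushOK = flushedWhere >= nextOffset;
        for (int i = 0; i < 2 && !flushOK; i++) {
            flush();
            passes++;
            flushOK = flushedWhere >= nextOffset;
        }
        return flushOK ? passes : -1;
    }

    public static void main(String[] args) {
        // File 0 is full (100 bytes), file 1 holds 20 bytes; the last flush ended at offset 90.
        TwoPassFlushSketch queue = new TwoPassFlushSketch(new int[] {100, 20}, 90);
        // The request ends at global offset 120, inside file 1.
        System.out.println("passes needed: " + queue.flushUntil(120));
    }
}
```

In this setup the first pass only advances flushedWhere from 90 to 100 (the end of file 0); the second pass locates file 1 and reaches 120.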

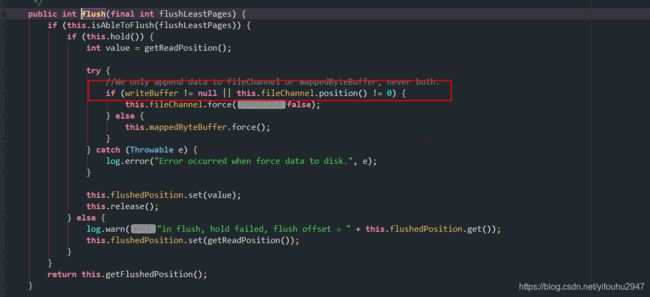

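Let's look at mappedFile's flush method.

Consider the condition if (writeBuffer != null || this.fileChannel.position() != 0). Synchronous flush can never take this branch, because whether writeBuffer exists depends on the flush strategy being asynchronous and transientStorePoolEnable being true; only when both conditions hold is writeBuffer created at broker initialization. Otherwise the code directly calls mappedByteBuffer.force() to force the memory-mapped contents to disk.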
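For readers who have not used memory-mapped files in Java, here is a small standalone sketch of the mappedByteBuffer.force() path using only standard java.nio calls. MappedForceSketch and the temp-file name are hypothetical; the file is a throwaway temp file, not a commit log.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical demo: write through a memory mapping, force it, then read the file back.
final class MappedForceSketch {
    static String writeAndForce(String text) {
        byte[] data = text.getBytes(StandardCharsets.UTF_8);
        try {
            Path file = Files.createTempFile("mapped-force-sketch", ".dat");
            file.toFile().deleteOnExit();
            try (FileChannel channel = FileChannel.open(file,
                    StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                // Mapping a region beyond the current file size grows the file to fit.
                MappedByteBuffer mapped = channel.map(FileChannel.MapMode.READ_WRITE, 0, data.length);
                mapped.put(data); // lands in the page cache via the mapping
                mapped.force();   // asks the OS to write the mapped region to the storage device
            }
            return new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(writeAndForce("hello commit log"));
    }
}
```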

If this flush succeeds, control returns to doCommit, which calls req.wakeupCustomer(flushOK). Let's look inside that method:

    public void wakeupCustomer(final boolean flushOK) {
        this.flushOK = flushOK;
        this.countDownLatch.countDown();
    }

This is the key point: the countDownLatch has been counted down!

That means the call request.waitForFlush(this.defaultMessageStore.getMessageStoreConfig().getSyncFlushTimeout()); that was blocking back in handleDiskFlush() can now proceed, which in turn means the synchronous flush has succeeded!
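The wait/wake handshake between the sending thread and the flush thread is a standard CountDownLatch pattern. The sketch below reuses the method names from the text, but FlushRequestSketch itself is a hypothetical class written for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical request object: the sender blocks on it, the flusher releases it.
final class FlushRequestSketch {
    private final CountDownLatch countDownLatch = new CountDownLatch(1);
    private volatile boolean flushOK = false;

    /** Called by the flush thread once the data is (or is not) on disk. */
    void wakeupCustomer(boolean flushOK) {
        this.flushOK = flushOK;
        this.countDownLatch.countDown();
    }

    /** Called by the sending thread; returns false on timeout or failed flush. */
    boolean waitForFlush(long timeoutMillis) {
        try {
            this.countDownLatch.await(timeoutMillis, TimeUnit.MILLISECONDS);
            return this.flushOK;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        FlushRequestSketch request = new FlushRequestSketch();
        // Simulated flush thread: reports success a little later.
        new Thread(() -> request.wakeupCustomer(true)).start();
        System.out.println("flushed: " + request.waitForFlush(1000));
    }
}
```

If the flush thread never calls wakeupCustomer within the timeout, waitForFlush returns false, which is how a flush timeout would surface to the sender in this model.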

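That covers the core logic of synchronous flush.

2. Asynchronous flush:

With asynchronous flush, handleDiskFlush() does not enter the synchronous branch; it only performs a wakeup (when transientStorePoolEnable is on, it is commitLogService that gets woken instead):

flushCommitLogService.wakeup();

With asynchronous flush, flushCommitLogService is initialized as FlushRealTimeService when the broker starts up. In addition, if transientStorePoolEnable is true, commitLogService (CommitRealTimeService) is started and begins working.

Let's first look at the case where transientStorePoolEnable is on.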

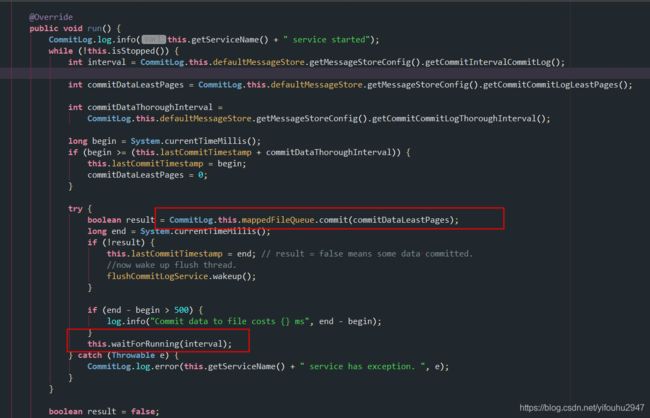

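This is the run method of the commitLogService thread.

The core is the commit call. commitDataLeastPages defaults to 4 pages, and a page is 4 KB. Once that threshold is reached, the bytes between this.committedPosition.get() and writePos are written from writeBuffer into the file channel. The thread then rests for one interval (200 ms by default). On the other side, look at the run method of FlushRealTimeService, which performs a flush once per interval (500 ms by default).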

    public void run() {
        CommitLog.log.info(this.getServiceName() + " service started");

        while (!this.isStopped()) {
            boolean flushCommitLogTimed = CommitLog.this.defaultMessageStore.getMessageStoreConfig().isFlushCommitLogTimed();

            int interval = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushIntervalCommitLog();
            int flushPhysicQueueLeastPages = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushCommitLogLeastPages();

            int flushPhysicQueueThoroughInterval =
                CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushCommitLogThoroughInterval();

            boolean printFlushProgress = false;

            // Print flush progress
            long currentTimeMillis = System.currentTimeMillis();
            if (currentTimeMillis >= (this.lastFlushTimestamp + flushPhysicQueueThoroughInterval)) {
                this.lastFlushTimestamp = currentTimeMillis;
                flushPhysicQueueLeastPages = 0;
                printFlushProgress = (printTimes++ % 10) == 0;
            }

            try {
                if (flushCommitLogTimed) {
                    Thread.sleep(interval);
                } else {
                    this.waitForRunning(interval);
                }

                if (printFlushProgress) {
                    this.printFlushProgress();
                }

                long begin = System.currentTimeMillis();
                CommitLog.this.mappedFileQueue.flush(flushPhysicQueueLeastPages);
                long storeTimestamp = CommitLog.this.mappedFileQueue.getStoreTimestamp();
                if (storeTimestamp > 0) {
                    CommitLog.this.defaultMessageStore.getStoreCheckpoint().setPhysicMsgTimestamp(storeTimestamp);
                }
                long past = System.currentTimeMillis() - begin;
                if (past > 500) {
                    log.info("Flush data to disk costs {} ms", past);
                }
            } catch (Throwable e) {
                CommitLog.log.warn(this.getServiceName() + " service has exception. ", e);
                this.printFlushProgress();
            }
        }
        // ... (the remainder of run() is not shown in this excerpt)
    }

This flush ultimately still calls mappedFile's flush method.

Because the flush is asynchronous and transientStorePoolEnable is on, the off-heap writeBuffer is not null, so fileChannel.force() is used to force the content that has already been committed from off-heap memory onto disk.
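The commit-then-flush path can be tried out with plain java.nio. In the sketch below a direct ByteBuffer plays the role of writeBuffer, the pending bytes are written to a FileChannel (the "commit"), and force(false) then plays the role of the flush. CommitThenFlushSketch and its variable names are hypothetical, and the file is a throwaway temp file.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical demo of the two-step path: off-heap buffer -> file channel -> disk.
final class CommitThenFlushSketch {
    static String commitAndFlush(String text) {
        byte[] data = text.getBytes(StandardCharsets.UTF_8);

        // Step 0: messages are appended to off-heap memory first.
        ByteBuffer writeBuffer = ByteBuffer.allocateDirect(Math.max(data.length, 1));
        writeBuffer.put(data);
        int committedPosition = 0;
        int writePos = writeBuffer.position();

        try {
            Path file = Files.createTempFile("commit-flush-sketch", ".dat");
            file.toFile().deleteOnExit();
            try (FileChannel fileChannel = FileChannel.open(file, StandardOpenOption.WRITE)) {
                // Step 1 (commit): write the bytes in [committedPosition, writePos) to the channel.
                ByteBuffer pending = writeBuffer.duplicate(); // same bytes, independent position/limit
                pending.position(committedPosition);
                pending.limit(writePos);
                fileChannel.position(committedPosition);
                while (pending.hasRemaining()) {
                    fileChannel.write(pending);
                }
                committedPosition = writePos;

                // Step 2 (flush): force the channel's content to the storage device.
                fileChannel.force(false);
            }
            return new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(commitAndFlush("committed then flushed"));
    }
}
```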

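The other case, asynchronous flush without transientStorePoolEnable, is not analyzed in detail here: no off-heap memory is involved, messages are written to memory directly through mappedByteBuffer, and the flush to disk happens asynchronously.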
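Both background threads above decide whether to do any work based on a "least pages" threshold, and the flush thread periodically sets that threshold to 0 to force a thorough flush. The following sketch models that arithmetic. LeastPagesSketch and its method names are hypothetical, and the 4 KB page size is an assumption stated in the code; the real checks live inside MappedFile and may differ in detail.

```java
// Hypothetical model of the page-threshold arithmetic; positions are byte offsets within one file.
final class LeastPagesSketch {
    static final int OS_PAGE_SIZE = 4 * 1024; // assumed page size: 4 KB

    /** True when enough dirty pages have accumulated, or any data is pending and leastPages is 0. */
    static boolean isAbleToFlush(int flushedPos, int writePos, int leastPages, int fileSize) {
        if (writePos == fileSize) {
            return true; // a full file is always flushed
        }
        if (leastPages > 0) {
            return (writePos / OS_PAGE_SIZE) - (flushedPos / OS_PAGE_SIZE) >= leastPages;
        }
        return writePos > flushedPos;
    }

    /** Mirrors the run() loop: once the thorough interval has elapsed, the threshold drops to 0. */
    static int effectiveLeastPages(long now, long lastFlushTimestamp, long thoroughInterval, int configured) {
        return now >= lastFlushTimestamp + thoroughInterval ? 0 : configured;
    }

    public static void main(String[] args) {
        int fileSize = 1024 * 1024 * 1024;
        System.out.println(isAbleToFlush(0, 2 * OS_PAGE_SIZE, 4, fileSize)); // only 2 dirty pages
        System.out.println(isAbleToFlush(0, 4 * OS_PAGE_SIZE, 4, fileSize)); // 4 dirty pages
        System.out.println(isAbleToFlush(0, 1, 0, fileSize));                // thorough flush, 1 byte pending
    }
}
```

The threshold batches small writes into fewer disk operations, while the thorough interval bounds how long a trickle of data can stay unflushed.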