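A Flink Beginner's First Try

Following the official documentation [1], I tried out Flink and recorded the whole process.

Environment setup

# Check out the git repository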

git clone --branch release-1.9 https://github.com/apache/flink-playgrounds.git

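The directory structure is shown below. Currently there is only one playground, operations-playground, which lets us try out a Flink streaming job and covers the following features:

- Failure recovery. Stop the taskmanager and start it again, simulating the failure of a node running the Flink job.

- Job upgrades and rescaling. How to redeploy a job when its configuration, topology, or user functions change, and how to rescale it by adjusting its parallelism. Both are done by resubmitting the job from a savepoint.

- Querying job metrics.

flink-playgrounds/docker/ops-playground-image/java/flink-playground-clickcountjob is the Flink job used by operations-playground.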

flink-playgrounds
├── docker
│   └── ops-playground-image
│       ├── Dockerfile
│       ├── java
│       │   └── flink-playground-clickcountjob
│       │       ├── LICENSE
│       │       ├── pom.xml
│       │       └── src
│       └── README.md
├── howto-update-playgrounds.md
├── LICENSE
├── operations-playground
│   ├── conf
│   │   ├── flink-conf.yaml
│   │   ├── log4j-cli.properties
│   │   └── log4j-console.properties
│   ├── docker-compose.yaml
│   └── README.md
└── README.md

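The playground environment is deployed with docker-compose.

# Enter the playground directory

cd flink-playgrounds/operations-playground/

# Build the Flink job image and pull the flink, zookeeper and kafka images.

# If your network connection is slow, be prepared: the whole process takes a long time.

docker-compose build

# Start the containers

docker-compose up -d

# Run the following command to list the containers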

docker-compose ps

Name                                           Command                          State    Ports
--------------------------------------------------------------------------------------------------------------------------
operations-playground_clickevent-generator_1   /docker-entrypoint.sh java ...   Up       6123/tcp, 8081/tcp
operations-playground_client_1                 /docker-entrypoint.sh flin ...   Exit 0
operations-playground_jobmanager_1             /docker-entrypoint.sh jobm ...   Up       6123/tcp, 0.0.0.0:8081->8081/tcp
operations-playground_kafka_1                  start-kafka.sh                   Up       0.0.0.0:9094->9094/tcp
operations-playground_taskmanager_1            /docker-entrypoint.sh task ...   Up       6123/tcp, 8081/tcp
operations-playground_zookeeper_1              /bin/sh -c /usr/sbin/sshd ...    Up       2181/tcp, 22/tcp, 2888/tcp, 3888/tcp
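Because the client container exits once it has submitted the job, it can be handy to wait until the job is actually RUNNING before starting the experiments. Below is a minimal sketch, assuming the JobManager REST endpoint is reachable at http://localhost:8081 (the port mapping shown above) and that `GET /jobs` returns the `{"jobs":[{"id":...,"status":...}]}` shape shown in the metrics section of this post. `running_job_ids` and `wait_for_running_job` are hypothetical helper names.

```python
import json
import time
import urllib.request


def running_job_ids(payload: dict) -> list[str]:
    """Return the ids of all jobs whose status is RUNNING in a GET /jobs response."""
    return [job["id"] for job in payload.get("jobs", []) if job.get("status") == "RUNNING"]


def wait_for_running_job(base_url: str = "http://localhost:8081", timeout_s: float = 120.0) -> str:
    """Poll GET {base_url}/jobs until some job is RUNNING and return its id."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/jobs", timeout=5) as resp:
                ids = running_job_ids(json.load(resp))
            if ids:
                return ids[0]
        except OSError:
            pass  # JobManager not reachable yet (URLError is an OSError); retry
        time.sleep(2)
    raise TimeoutError(f"no RUNNING job at {base_url} within {timeout_s}s")
```

Calling `wait_for_running_job()` right after `docker-compose up -d` blocks until the job is up, or raises `TimeoutError` after two minutes.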

Hands-on operations

Failure recovery

# Watch the Flink job's output

docker-compose exec kafka kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic output

# In a new terminal, kill the taskmanager

docker-compose kill taskmanager

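In the Flink web UI (http://localhost:8081), all 8 tasks of the job (4 operators, each with parallelism 2) are in SCHEDULED state, and the job output window shows no output.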
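Instead of counting tasks in the UI, the per-operator `tasks` counters in the `/jobs/<jobid>` REST response (the full response is shown at the end of this post) can be summed. A minimal sketch; `task_state_totals` and `total_tasks` are hypothetical helpers that only read the `vertices`, `parallelism` and `tasks` fields visible in that response.

```python
from collections import Counter


def task_state_totals(job_detail: dict) -> Counter:
    """Sum the per-vertex subtask state counters of a GET /jobs/<jobid> response."""
    totals: Counter = Counter()
    for vertex in job_detail.get("vertices", []):
        totals.update(vertex.get("tasks", {}))
    return totals


def total_tasks(job_detail: dict) -> int:
    """Total number of subtasks, i.e. the sum of every vertex's parallelism."""
    return sum(vertex["parallelism"] for vertex in job_detail.get("vertices", []))
```

With the taskmanager killed, `task_state_totals(detail)["SCHEDULED"]` would be expected to equal `total_tasks(detail)`, which is 8 for this job.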

# Start the taskmanager

docker-compose up -d taskmanager

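All 8 tasks switch to RUNNING, and the output window quickly prints the results of several time windows at once (the backlog that built up while the taskmanager was down), with no missing windows or lost data.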

{"windowStart":"01-01-1970 01:00:45:000","windowEnd":"01-01-1970 01:01:00:000","page":"/help","count":1000}

{"windowStart":"01-01-1970 01:00:45:000","windowEnd":"01-01-1970 01:01:00:000","page":"/news","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/jobs","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/index","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/news","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/about","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/shop","count":1000}

{"windowStart":"01-01-1970 01:01:00:000","windowEnd":"01-01-1970 01:01:15:000","page":"/help","count":1000}

{"windowStart":"01-01-1970 01:01:15:000","windowEnd":"01-01-1970 01:01:30:000","page":"/index","count":1000}
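"No missing windows" can also be checked mechanically on the consumer output. The sketch below assumes the timestamps are day-month-year with a 24-hour clock (as in `01-01-1970 01:00:45:000`) and that windows are 15 seconds long, as the output above suggests; `missing_windows` is a hypothetical helper. It only checks that some record exists for every window start between the first and last one seen, not that every page is present in every window.

```python
import json
from datetime import datetime, timedelta

# Assumption: "01-01-1970 01:00:45:000" means day-month-year hour:minute:second:millis.
TS_FORMAT = "%d-%m-%Y %H:%M:%S:%f"
WINDOW = timedelta(seconds=15)  # window length observed in the output above


def parse_ts(s: str) -> datetime:
    return datetime.strptime(s, TS_FORMAT)


def missing_windows(lines: list[str]) -> list[datetime]:
    """Return window starts absent between the earliest and latest window seen."""
    starts = set()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        rec = json.loads(line)
        start, end = parse_ts(rec["windowStart"]), parse_ts(rec["windowEnd"])
        if end - start != WINDOW:
            raise ValueError(f"unexpected window length in {rec}")
        starts.add(start)
    if not starts:
        return []
    gaps, t, last = [], min(starts), max(starts)
    while t <= last:
        if t not in starts:
            gaps.append(t)
        t += WINDOW
    return gaps
```

Feeding it the lines printed by `kafka-console-consumer.sh` should return an empty list after the restart.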

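Job upgrades and rescaling

Both operations are done in two steps:

- Stop the job. The job is stopped safely by taking a savepoint. A savepoint is a globally consistent snapshot of the job's complete state, in a format defined by Flink.

- Start the job. Start the job again from the savepoint.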
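The two steps can be scripted. This is a minimal sketch, assuming it runs from the operations-playground directory and that `flink stop` prints a `Savepoint completed. Path: file:...` line like the one later in this post; the `flink run` arguments are copied from the commands used in this post. `savepoint_path`, `restore_cmd` and `stop_and_restore` are hypothetical helper names.

```python
import re
import subprocess

# Matches e.g. "Savepoint completed. Path: file:/tmp/flink-savepoints-directory/savepoint-f99f35-0007baff59ea"
SAVEPOINT_RE = re.compile(r"Savepoint completed\. Path: (?:file:)?(\S+)")


def savepoint_path(stop_output: str) -> str:
    """Extract the savepoint directory (without the file: scheme) from `flink stop` output."""
    match = SAVEPOINT_RE.search(stop_output)
    if match is None:
        raise ValueError("no savepoint path found in flink stop output")
    return match.group(1)


def restore_cmd(path: str, parallelism: int) -> list[str]:
    """Build the docker-compose command that resubmits ClickCountJob from a savepoint."""
    return ["docker-compose", "run", "--no-deps", "client", "flink", "run",
            "-s", path, "-d", "-p", str(parallelism), "/opt/ClickCountJob.jar",
            "--bootstrap.servers", "kafka:9092", "--checkpointing", "--event-time"]


def stop_and_restore(job_id: str, parallelism: int) -> str:
    """Stop job_id with a savepoint, resubmit from it, and return the `flink run` output."""
    stop = subprocess.run(
        ["docker-compose", "run", "--no-deps", "client", "flink", "stop", job_id],
        check=True, capture_output=True, text=True)
    # Search both streams, since it is not certain which one the message goes to.
    path = savepoint_path(stop.stdout + stop.stderr)
    run = subprocess.run(restore_cmd(path, parallelism), check=True, capture_output=True, text=True)
    return run.stdout
```

For example, `stop_and_restore("f99f358a1ea3dac77c874c9588751f0b", 2)` corresponds to the upgrade steps below, and passing 3 corresponds to the rescaling step.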

As in the previous test, first watch the job output in a separate terminal.

Job upgrade

# List the jobs with: docker-compose run --no-deps client flink list

Waiting for response...

------------------ Running/Restarting Jobs -------------------

11.02.2020 06:58:43 : f99f358a1ea3dac77c874c9588751f0b : Click Event Count (RUNNING)

--------------------------------------------------------------

No scheduled jobs.

# Stop the job: docker-compose run --no-deps client flink stop f99f358a1ea3dac77c874c9588751f0b

Suspending job "f99f358a1ea3dac77c874c9588751f0b" with a savepoint.

Savepoint completed. Path: file:/tmp/flink-savepoints-directory/savepoint-f99f35-0007baff59ea

# Inspect the savepoint: ls -l /tmp/flink-savepoints-directory/savepoint-f99f35-0007baff59ea

total 20

-rw-r--r--. 1 root root 1231 Feb 11 15:35 5c2ed46d-0cea-4ecb-af6c-3989c6432168

-rw-r--r--. 1 root root 2763 Feb 11 15:35 766c74ba-bd87-48f6-a1d0-cf3a89c54e22

-rw-r--r--. 1 root root 2639 Feb 11 15:35 9ac4fc30-9564-4035-b407-837a210c3067

-rw-r--r--. 1 root root 1209 Feb 11 15:35 f60b009b-ad85-4223-b0d8-72231ab8d03a

-rw-r--r--. 1 root root 3772 Feb 11 15:35 _metadata
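The savepoint is a directory holding a `_metadata` file plus several state files. A quick sanity check before restoring is to confirm that `_metadata` is present. This is a minimal sketch, assuming the savepoint directory is visible on the machine running it, as it was for the `ls -l` above; `describe_savepoint` is a hypothetical helper.

```python
from pathlib import Path


def describe_savepoint(directory: str) -> dict[str, int]:
    """Return {file name: size in bytes} for a savepoint directory; require _metadata."""
    root = Path(directory)
    files = {p.name: p.stat().st_size for p in root.iterdir() if p.is_file()}
    if "_metadata" not in files:
        raise FileNotFoundError(f"{root} has no _metadata file; incomplete savepoint?")
    return files
```

For the listing above, `describe_savepoint("/tmp/flink-savepoints-directory/savepoint-f99f35-0007baff59ea")` would report five files, including `_metadata` at 3772 bytes.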

# Start the job from the savepoint: docker-compose run --no-deps client flink run -s /tmp/flink-savepoints-directory/savepoint-f99f35-0007baff59ea -d -p 2 /opt/ClickCountJob.jar --bootstrap.servers kafka:9092 --checkpointing --event-time

Starting execution of program

Job has been submitted with JobID b9503b3746ab1d79c63d05cb4b188842

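The job output terminal quickly prints the results, and again no windows are interrupted and no data is lost. The timestamps in the output are somewhat out of order. This is because the Kafka topic has two partitions: records are spread across the two partitions (how exactly depends on the producer's partitioning), Kafka only guarantees ordering within a single partition, and the consumer interleaves records from both partitions.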

{"windowStart":"01-01-1970 12:43:15:000","windowEnd":"01-01-1970 12:43:30:000","page":"/shop","count":1000}

{"windowStart":"01-01-1970 12:43:15:000","windowEnd":"01-01-1970 12:43:30:000","page":"/help","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/index","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/jobs","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/news","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/index","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/about","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/shop","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/shop","count":1000}

{"windowStart":"01-01-1970 12:44:00:000","windowEnd":"01-01-1970 12:44:15:000","page":"/help","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/about","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/news","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/jobs","count":1000}

{"windowStart":"01-01-1970 12:44:00:000","windowEnd":"01-01-1970 12:44:15:000","page":"/index","count":1000}

{"windowStart":"01-01-1970 12:43:30:000","windowEnd":"01-01-1970 12:43:45:000","page":"/help","count":1000}

{"windowStart":"01-01-1970 12:43:45:000","windowEnd":"01-01-1970 12:44:00:000","page":"/help","count":1000}
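The interleaving can be illustrated with a small simulation: each partition stays internally ordered, but a consumer reading both may mix them arbitrarily, and sorting on the consumer side restores a readable order. The partition contents in the test are made up for illustration, and `merge_partitions` and `restore_order` are hypothetical helpers; the random choice only stands in for whatever order the real consumer happens to poll partitions in.

```python
import random


def merge_partitions(partitions: list[list[dict]], seed: int = 0) -> list[dict]:
    """Interleave records from several partitions, preserving each partition's own order
    (the only ordering Kafka guarantees)."""
    rng = random.Random(seed)
    cursors = [0] * len(partitions)
    merged = []
    while any(c < len(p) for c, p in zip(cursors, partitions)):
        i = rng.choice([k for k, p in enumerate(partitions) if cursors[k] < len(p)])
        merged.append(partitions[i][cursors[i]])
        cursors[i] += 1
    return merged


def restore_order(records: list[dict]) -> list[dict]:
    # Assumption: all windowStart values share the same date, so string order is time order.
    return sorted(records, key=lambda r: (r["windowStart"], r["page"]))
```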

Rescaling

# Stop the job: docker-compose run --no-deps client flink stop b9503b3746ab1d79c63d05cb4b188842

Suspending job "b9503b3746ab1d79c63d05cb4b188842" with a savepoint.

Savepoint completed. Path: file:/tmp/flink-savepoints-directory/savepoint-b9503b-0b0a12d1afd0

# Start the job with parallelism 3: docker-compose run --no-deps client flink run -s /tmp/flink-savepoints-directory/savepoint-b9503b-0b0a12d1afd0 -d -p 3 /opt/ClickCountJob.jar --bootstrap.servers kafka:9092 --checkpointing --event-time

Starting execution of program

Job has been submitted with JobID 90f30f0243915c638d5a6391982a8091

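The job's tasks stay in SCHEDULED state because there are not enough task slots: parallelism 2 fit on the single taskmanager, but parallelism 3 does not.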
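With Flink's default slot sharing, a job needs as many task slots as its highest operator parallelism. Since parallelism 2 fit on one taskmanager but 3 did not, each taskmanager here evidently offers 2 slots (inferred from the behavior above, not read from flink-conf.yaml), which is why scaling to 2 taskmanagers helps. The arithmetic, as a hypothetical helper:

```python
import math


def taskmanagers_needed(parallelism: int, slots_per_tm: int) -> int:
    """Taskmanagers required when all operators share slots (default slot sharing group)."""
    if parallelism < 1 or slots_per_tm < 1:
        raise ValueError("parallelism and slots_per_tm must be positive")
    return math.ceil(parallelism / slots_per_tm)
```

`taskmanagers_needed(3, 2)` gives 2, matching the `--scale taskmanager=2` step below.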

# Scale the number of taskmanagers to 2: docker-compose up -d --scale taskmanager=2 taskmanager

operations-playground_jobmanager_1 is up-to-date

Starting operations-playground_taskmanager_1 ... done

Creating operations-playground_taskmanager_2 ... done

The job output terminal again quickly prints the results, with no interrupted windows and no lost data.

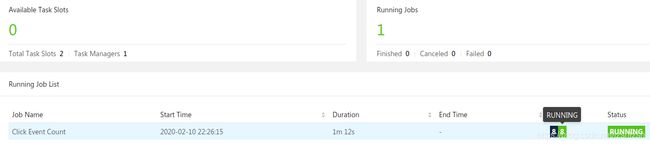

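Querying job metrics

Note that the job ID and the parallelism (2) in the responses below differ from the rescaled job above, so this output appears to come from a separate run of the job.

# List all jobs: curl http://localhost:8081/jobs

{"jobs":[{"id":"9bc8941e8b607c3065cf84b606551990","status":"RUNNING"}]}

# Show the job details, including per-operator metrics: curl http://localhost:8081/jobs/9bc8941e8b607c3065cf84b606551990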

{

"jid": "9bc8941e8b607c3065cf84b606551990",

"name": "Click Event Count",

"isStoppable": false,

"state": "RUNNING",

"start-time": 1581410581291,

"end-time": -1,

"duration": 543708,

"now": 1581411124999,

"timestamps": {

"FINISHED": 0,

"CANCELED": 0,

"RECONCILING": 0,

"FAILING": 0,

"RUNNING": 1581410581621,

"SUSPENDED": 0,

"CREATED": 1581410581291,

"CANCELLING": 0,

"FAILED": 0,

"RESTARTING": 0

},

"vertices": [

{

"id": "bc764cd8ddf7a0cff126f51c16239658",

"name": "Source: ClickEvent Source",

"parallelism": 2,

"status": "RUNNING",

"start-time": 1581410582781,

"end-time": -1,

"duration": 542218,

"tasks": {

"DEPLOYING": 0,

"RECONCILING": 0,

"RUNNING": 2,

"CANCELED": 0,

"FAILED": 0,

"SCHEDULED": 0,

"FINISHED": 0,

"CREATED": 0,

"CANCELING": 0

},

"metrics": {

"read-bytes": 0,

"read-bytes-complete": true,

"write-bytes": 7458400,

"write-bytes-complete": true,

"read-records": 0,

"read-records-complete": true,

"write-records": 246001,

"write-records-complete": true

}

},

{

"id": "0a448493b4782967b150582570326227",

"name": "Timestamps/Watermarks",

"parallelism": 2,

"status": "RUNNING",

"start-time": 1581410582808,

"end-time": -1,

"duration": 542191,

"tasks": {

"DEPLOYING": 0,

"RECONCILING": 0,

"RUNNING": 2,

"CANCELED": 0,

"FAILED": 0,

"SCHEDULED": 0,

"FINISHED": 0,

"CREATED": 0,

"CANCELING": 0

},

"metrics": {

"read-bytes": 7491057,

"read-bytes-complete": true,

"write-bytes": 7526243,

"write-bytes-complete": true,

"read-records": 245981,

"read-records-complete": true,

"write-records": 245981,

"write-records-complete": true

}

},

{

"id": "ea632d67b7d595e5b851708ae9ad79d6",

"name": "ClickEvent Counter",

"parallelism": 2,

"status": "RUNNING",

"start-time": 1581410582843,

"end-time": -1,

"duration": 542156,

"tasks": {

"DEPLOYING": 0,

"RECONCILING": 0,

"RUNNING": 2,

"CANCELED": 0,

"FAILED": 0,

"SCHEDULED": 0,

"FINISHED": 0,

"CREATED": 0,

"CANCELING": 0

},

"metrics": {

"read-bytes": 7587094,

"read-bytes-complete": true,

"write-bytes": 79724,

"write-bytes-complete": true,

"read-records": 245933,

"read-records-complete": true,

"write-records": 246,

"write-records-complete": true

}

},

{

"id": "6d2677a0ecc3fd8df0b72ec675edf8f4",

"name": "Sink: ClickEventStatistics Sink",

"parallelism": 2,

"status": "RUNNING",

"start-time": 1581410582858,

"end-time": -1,

"duration": 542141,

"tasks": {

"DEPLOYING": 0,

"RECONCILING": 0,

"RUNNING": 2,

"CANCELED": 0,

"FAILED": 0,

"SCHEDULED": 0,

"FINISHED": 0,

"CREATED": 0,

"CANCELING": 0

},

"metrics": {

"read-bytes": 109374,

"read-bytes-complete": true,

"write-bytes": 0,

"write-bytes-complete": true,

"read-records": 246,

"read-records-complete": true,

"write-records": 0,

"write-records-complete": true

}

}

],

"status-counts": {

"DEPLOYING": 0,

"RECONCILING": 0,

"RUNNING": 4,

"CANCELED": 0,

"FAILED": 0,

"SCHEDULED": 0,

"FINISHED": 0,

"CREATED": 0,

"CANCELING": 0

},

"plan": {

"jid": "9bc8941e8b607c3065cf84b606551990",

"name": "Click Event Count",

"nodes": [

{

"id": "6d2677a0ecc3fd8df0b72ec675edf8f4",

"parallelism": 2,

"operator": "",

"operator_strategy": "",

"description": "Sink: ClickEventStatistics Sink",

"inputs": [

{

"num": 0,

"id": "ea632d67b7d595e5b851708ae9ad79d6",

"ship_strategy": "FORWARD",

"exchange": "pipelined_bounded"

}

],

"optimizer_properties": {}

},

{

"id": "ea632d67b7d595e5b851708ae9ad79d6",

"parallelism": 2,

"operator": "",

"operator_strategy": "",

"description": "ClickEvent Counter",

"inputs": [

{

"num": 0,

"id": "0a448493b4782967b150582570326227",

"ship_strategy": "HASH",

"exchange": "pipelined_bounded"

}

],

"optimizer_properties": {}

},

{

"id": "0a448493b4782967b150582570326227",

"parallelism": 2,

"operator": "",

"operator_strategy": "",

"description": "Timestamps/Watermarks",

"inputs": [

{

"num": 0,

"id": "bc764cd8ddf7a0cff126f51c16239658",

"ship_strategy": "FORWARD",

"exchange": "pipelined_bounded"

}

],

"optimizer_properties": {}

},

{

"id": "bc764cd8ddf7a0cff126f51c16239658",

"parallelism": 2,

"operator": "",

"operator_strategy": "",

"description": "Source: ClickEvent Source",

"optimizer_properties": {}

}

]

}

}
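The response is long, so a short per-operator summary is often enough. A minimal sketch that reads only the `vertices[].name`, `parallelism` and `metrics` fields visible above; `fetch_job` and `vertex_summary` are hypothetical helpers. In the response above, the ClickEvent Counter reads 245933 records and writes 246, roughly 1000 input events per output record, which matches the `"count":1000` values in the output topic. The source reports 0 read records, presumably because these counters only cover records exchanged between Flink tasks, while the source reads from Kafka.

```python
import json
import urllib.request


def fetch_job(base_url: str, job_id: str) -> dict:
    """GET {base_url}/jobs/{job_id} from the Flink REST API and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/jobs/{job_id}", timeout=5) as resp:
        return json.load(resp)


def vertex_summary(job_detail: dict) -> list[tuple[str, int, int, int]]:
    """(name, parallelism, read-records, write-records) for each vertex, in response order."""
    return [(v["name"], v["parallelism"], v["metrics"]["read-records"], v["metrics"]["write-records"])
            for v in job_detail.get("vertices", [])]
```

Usage: `for row in vertex_summary(fetch_job("http://localhost:8081", "9bc8941e8b607c3065cf84b606551990")): print(row)`.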

End of the playground session

References

- [1] Flink Operations Playground