Deploying a K8S Cluster with ansible-playbook

Use ansible-playbook to deploy a highly available K8S cluster with kubeadm.

Kubernetes install directory: /etc/kubernetes/

KubeConfig: ~/.kube/config

Hosts:

| OS | IP | Role | CPU | Memory | Hostname |
|---|---|---|---|---|---|
| CentOS 7.7 | 192.168.30.128 | master | >=2 | >=2G | master1 |
| CentOS 7.7 | 192.168.30.129 | master | >=2 | >=2G | master2 |
| CentOS 7.7 | 192.168.30.130 | node | >=2 | >=2G | node1 |
| CentOS 7.7 | 192.168.30.131 | node | >=2 | >=2G | node2 |
| CentOS 7.7 | 192.168.30.132 | node | >=2 | >=2G | node3 |

Preparation

- Group all hosts that will join the k8s cluster:

# vim /etc/ansible/hosts

[master]

192.168.30.128 hostname=master1

[add_master]

192.168.30.129 hostname=master2

[add_node]

192.168.30.130 hostname=node1

192.168.30.131 hostname=node2

192.168.30.132 hostname=node3

- Create the working directory:

# mkdir -p kubeadm_k8s/roles/{docker_install,init_install,master_install,node_install,addons_install}/{files,handlers,meta,tasks,templates,vars}

# cd kubeadm_k8s/

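Notes:

files: source and configuration files to be synced to the remote servers;

handlers: actions to run when a resource changes; the directory may be omitted or left empty if unused;

meta: descriptive information such as role dependencies; may be left empty;

tasks: the tasks to run during the K8S installation;

templates: template files used by the K8S installation, typically scripts and configuration files;

vars: variables defined for this installation.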
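As a rough sketch of how the handlers directory is typically used, the fragment below restarts docker only when daemon.json actually changes. The handler name `restart docker` and the handlers/main.yml file are illustrative assumptions; the roles in this article leave handlers empty and restart docker unconditionally.

```yaml
# roles/docker_install/tasks/install.yml (excerpt, illustrative)
- name: Configure docker
  template:
    src: daemon.json
    dest: /etc/docker/daemon.json
  # "restart docker" is a hypothetical handler name defined below
  notify: restart docker

# roles/docker_install/handlers/main.yml (hypothetical file)
- name: restart docker
  service:
    name: docker
    state: restarted
```

A handler runs once at the end of the play, and only if a notifying task reported a change, which avoids restarting docker on every run.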

# tree .

.
├── k8s.yml
└── roles
    ├── addons_install
    │   ├── files
    │   ├── handlers
    │   ├── meta
    │   ├── tasks
    │   │   ├── calico.yml
    │   │   ├── dashboard.yml
    │   │   └── main.yml
    │   ├── templates
    │   │   ├── calico-rbac-kdd.yaml
    │   │   ├── calico.yaml
    │   │   └── dashboard-all.yaml
    │   └── vars
    │       └── main.yml
    ├── docker_install
    │   ├── files
    │   ├── handlers
    │   ├── meta
    │   ├── tasks
    │   │   ├── install.yml
    │   │   ├── main.yml
    │   │   └── prepare.yml
    │   ├── templates
    │   │   ├── daemon.json
    │   │   ├── kubernetes.conf
    │   │   └── kubernetes.repo
    │   └── vars
    │       └── main.yml
    ├── init_install
    │   ├── files
    │   ├── handlers
    │   ├── meta
    │   ├── tasks
    │   │   ├── install.yml
    │   │   └── main.yml
    │   ├── templates
    │   │   ├── check-apiserver.sh
    │   │   ├── keepalived-master.conf
    │   │   └── kubeadm-config.yaml
    │   └── vars
    │       └── main.yml
    ├── master_install
    │   ├── files
    │   ├── handlers
    │   ├── meta
    │   ├── tasks
    │   │   ├── install.yml
    │   │   └── main.yml
    │   ├── templates
    │   │   ├── check-apiserver.sh
    │   │   └── keepalived-backup.conf
    │   └── vars
    │       └── main.yml
    └── node_install
        ├── files
        ├── handlers
        ├── meta
        ├── tasks
        │   ├── install.yml
        │   └── main.yml
        ├── templates
        └── vars
            └── main.yml

36 directories, 29 files

- Create the entry playbook that calls the roles:

# vim k8s.yml

---
- hosts: all
  remote_user: root
  gather_facts: True
  roles:
    - docker_install

- hosts: master
  remote_user: root
  gather_facts: True
  roles:
    - init_install

- hosts: add_master
  remote_user: root
  gather_facts: True
  roles:
    - master_install

- hosts: add_node
  remote_user: root
  gather_facts: True
  roles:
    - node_install

- hosts: master
  remote_user: root
  gather_facts: True
  roles:
    - addons_install

Docker section

- Create the docker entry playbook that calls docker_install:

# vim docker.yml

# Batch-install Docker
- hosts: all
  remote_user: root
  gather_facts: True
  roles:
    - docker_install

- Create the variables:

# vim roles/docker_install/vars/main.yml

# Variables used by the docker installation
SOURCE_DIR: /software
VERSION: 1.14.0-0

- Create the template files:

Docker configuration, daemon.json

# vim roles/docker_install/templates/daemon.json

{
  "registry-mirrors": ["http://f1361db2.m.daocloud.io"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Kernel parameters, kubernetes.conf

# vim roles/docker_install/templates/kubernetes.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

Repo file, kubernetes.repo

# vim roles/docker_install/templates/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
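The same repository can also be declared with Ansible's `yum_repository` module instead of a template file. The following is a minimal sketch under the assumption that the Ansible version in use ships this module; the values are copied from kubernetes.repo above.

```yaml
# Sketch: equivalent of templates/kubernetes.repo using yum_repository.
# By default the module writes /etc/yum.repos.d/<name>.repo.
- name: Configure the kubernetes yum repository
  yum_repository:
    name: kubernetes
    description: Kubernetes
    baseurl: http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled: yes
    gpgcheck: no
    repo_gpgcheck: no
    gpgkey:
      - http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
      - http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```

With this approach the repo definition lives in the task file, so the kubernetes.repo template would no longer be needed.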

- Environment preparation, prepare.yml:

# vim roles/docker_install/tasks/prepare.yml

- name: Stop and disable firewalld
  service: name=firewalld state=stopped enabled=no

- name: Disable SELinux for the running system
  shell: "setenforce 0"
  failed_when: false

- name: Disable SELinux permanently
  lineinfile:
    dest: /etc/selinux/config
    regexp: "^SELINUX="
    line: "SELINUX=disabled"

- name: Add the EPEL repository
  yum: name=epel-release state=latest

- name: Install common packages
  yum:
    name:
      - vim
      - lrzsz
      - net-tools
      - wget
      - curl
      - bash-completion
      - rsync
      - gcc
      - unzip
      - git
      - iptables
      - conntrack
      - ipvsadm
      - ipset
      - jq
      - sysstat
      - libseccomp
    state: latest

- name: Update the system
  shell: "yum update -y --exclude kubeadm,kubelet,kubectl"
  ignore_errors: yes
  args:
    warn: False

- name: Flush iptables rules
  shell: "iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT"

- name: Disable swap
  shell: "swapoff -a && sed -i '/swap/s/^\\(.*\\)$/#\\1/g' /etc/fstab"

- name: Install the kernel parameter file
  template: src=kubernetes.conf dest=/etc/sysctl.d/kubernetes.conf

- name: Load br_netfilter
  shell: "modprobe br_netfilter"

- name: Apply the kernel parameters
  shell: "sysctl -p /etc/sysctl.d/kubernetes.conf"
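The last three tasks above shell out to `modprobe` and `sysctl`, which report "changed" on every run. A possible alternative, sketched below, uses the `modprobe` and `sysctl` modules. This assumes those modules are available in the installed Ansible (in recent releases they live in the community.general and ansible.posix collections), and only a subset of the parameters from kubernetes.conf is shown.

```yaml
# Sketch: idempotent module-based variant of the kernel setup tasks.
- name: Load br_netfilter
  modprobe:
    name: br_netfilter
    state: present

- name: Set and persist kernel parameters
  sysctl:
    name: "{{ item.name }}"
    value: "{{ item.value }}"
    sysctl_file: /etc/sysctl.d/kubernetes.conf
    state: present
    reload: yes
  loop:
    - { name: net.bridge.bridge-nf-call-iptables, value: "1" }
    - { name: net.bridge.bridge-nf-call-ip6tables, value: "1" }
    - { name: net.ipv4.ip_forward, value: "1" }
    - { name: vm.swappiness, value: "0" }
```

The module must load `br_netfilter` before the `net.bridge.*` keys are set, which is why the task order matches the original.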

- Docker installation, install.yml:

# vim roles/docker_install/tasks/install.yml

- name: Create the software directory
  file: name={{ SOURCE_DIR }} state=directory

- name: Set the hostname
  raw: "echo {{ hostname }} > /etc/hostname"

- name: Apply the hostname
  shell: "hostname {{ hostname }}"

- name: Add a local hosts entry
  shell: "if [ `grep '{{ ansible_ssh_host }} {{ hostname }}' /etc/hosts |wc -l` -eq 0 ]; then echo {{ ansible_ssh_host }} {{ hostname }} >> /etc/hosts; fi"

- name: Download the docker repo file
  shell: "if [ ! -f /etc/yum.repos.d/docker.repo ]; then curl http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker.repo; fi"

- name: Build the yum cache
  shell: "yum makecache fast"
  args:
    warn: False

- name: Install docker-ce
  yum:
    name: docker-ce
    state: latest

- name: Start docker and enable it at boot
  service:
    name: docker
    state: started
    enabled: yes

- name: Configure docker
  template: src=daemon.json dest=/etc/docker/daemon.json

- name: Restart docker
  service:
    name: docker
    state: restarted

- name: Configure the kubernetes repository
  template: src=kubernetes.repo dest=/etc/yum.repos.d/kubernetes.repo

- name: Install kubernetes-cni
  yum:
    name: kubernetes-cni
    state: latest

- name: Install kubeadm, kubelet and kubectl
  shell: "yum install -y kubeadm-{{ VERSION }} kubelet-{{ VERSION }} kubectl-{{ VERSION }} --disableexcludes=kubernetes"
  args:
    warn: False

- name: Start kubelet and enable it at boot
  service:
    name: kubelet
    state: started
    enabled: yes
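Several of the tasks above use `raw` or `shell` where a dedicated module exists. The sketch below shows one possible module-based variant of the hostname, hosts-entry, and package-pinning steps. It assumes the inventory name of each host is its IP address (as in /etc/ansible/hosts earlier) and that the `yum` module in use supports `disable_excludes` (Ansible 2.7 or later).

```yaml
# Sketch: module-based variant of three install.yml steps.
- name: Set the hostname from the inventory variable
  hostname:
    name: "{{ hostname }}"

- name: Ensure the host resolves its own name
  lineinfile:
    path: /etc/hosts
    # inventory_hostname is the IP address in this article's inventory
    line: "{{ inventory_hostname }} {{ hostname }}"
    state: present

- name: Install pinned kubeadm, kubelet and kubectl
  yum:
    name:
      - "kubeadm-{{ VERSION }}"
      - "kubelet-{{ VERSION }}"
      - "kubectl-{{ VERSION }}"
    state: present
    disable_excludes: kubernetes
```

These tasks report "changed" only when something was actually modified, which makes repeated playbook runs easier to read.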

- Include file, main.yml:

# vim roles/docker_install/tasks/main.yml

# Include the prepare and install task files
- include: prepare.yml
- include: install.yml
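The bare `include` keyword works on the Ansible version used here but is deprecated in later releases. Assuming Ansible 2.4 or newer, a static import along the following lines should behave the same for this role; the same substitution would apply to the main.yml of the other roles.

```yaml
# Sketch: roles/docker_install/tasks/main.yml using import_tasks
# (static include, resolved when the playbook is parsed).
- import_tasks: prepare.yml
- import_tasks: install.yml
```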

Init section

- Create the init entry playbook that calls init_install:

# vim init.yml

# Initialize the k8s cluster
- hosts: master
  remote_user: root
  gather_facts: True
  roles:
    - init_install

- Create the variables:

# vim roles/init_install/vars/main.yml

# Variables used when initializing the k8s cluster
# Kubernetes version
VERSION: v1.14.0
# Pod CIDR
POD_CIDR: 172.10.0.0/16
# Master virtual IP (preferably in the same subnet as the masters)
MASTER_VIP: 192.168.30.188
# Network interface used by keepalived
VIP_IF: ens33
SOURCE_DIR: /software
Cluster_Num: "{{ groups['all'] | length }}"
Virtual_Router_ID: 68

- Create the template files:

keepalived master configuration file, keepalived-master.conf

# vim roles/init_install/templates/keepalived-master.conf

! Configuration File for keepalived
global_defs {
    router_id keepalive-master
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check-apiserver.sh"
    interval 3
    weight -{{ Cluster_Num }}
}

vrrp_instance VI-kube-master {
    state MASTER
    interface {{ VIP_IF }}
    virtual_router_id {{ Virtual_Router_ID }}
    priority 100
    dont_track_primary
    advert_int 3
    virtual_ipaddress {
        {{ MASTER_VIP }}
    }
    track_script {
        check_apiserver
    }
}

keepalived check script, check-apiserver.sh

# vim roles/init_install/templates/check-apiserver.sh

#!/bin/sh
netstat -lntp |grep 6443 || exit 1

kubeadm configuration file, kubeadm-config.yaml

# vim roles/init_install/templates/kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: {{ VERSION }}
controlPlaneEndpoint: "{{ MASTER_VIP }}:6443"
networking:
  # Pod network CIDR, rendered from POD_CIDR; it should match the
  # CALICO_IPV4POOL_CIDR value in calico.yaml.
  podSubnet: "{{ POD_CIDR }}"
imageRepository: registry.cn-hangzhou.aliyuncs.com/imooc

- Cluster initialization, install.yml:

# vim roles/init_install/tasks/install.yml

- name: Install keepalived
  yum: name=keepalived state=present

- name: Copy the keepalived configuration file
  template: src=keepalived-master.conf dest=/etc/keepalived/keepalived.conf

- name: Copy the keepalived check script
  template: src=check-apiserver.sh dest=/etc/keepalived/check-apiserver.sh mode=0755

- name: Start keepalived and enable it at boot
  service:
    name: keepalived
    state: started
    enabled: yes

- name: Copy the kubeadm configuration file
  template: src=kubeadm-config.yaml dest={{ SOURCE_DIR }}

- name: Cluster init preparation 1
  shell: "swapoff -a && kubeadm reset -f"

- name: Cluster init preparation 2
  shell: "systemctl daemon-reload && systemctl restart kubelet"

- name: Cluster init preparation 3
  shell: "iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X"

- name: Pull the kube-scheduler image
  shell: "docker pull registry.cn-hangzhou.aliyuncs.com/imooc/kube-scheduler:{{ VERSION }}"

- name: Initialize the cluster
  shell: "kubeadm init --config={{ SOURCE_DIR }}/kubeadm-config.yaml --experimental-upload-certs &>{{ SOURCE_DIR }}/token.txt"

- name: Extract the master join command
  shell: "grep -B2 'experimental-control-plane' {{ SOURCE_DIR }}/token.txt > {{ SOURCE_DIR }}/master.sh"

- name: Extract the node join command
  shell: "grep -A1 'kubeadm join' {{ SOURCE_DIR }}/token.txt |tail -2 > {{ SOURCE_DIR }}/node.sh"

# Note: the next two tasks invoke the ansible CLI on the target host, so they
# only work when master1 is also the Ansible control node with the same inventory.
- name: Distribute master.sh
  shell: "ansible all -m copy -a 'src={{ SOURCE_DIR }}/master.sh dest={{ SOURCE_DIR }} mode=0755'"
  args:
    warn: False

- name: Distribute node.sh
  shell: "ansible all -m copy -a 'src={{ SOURCE_DIR }}/node.sh dest={{ SOURCE_DIR }} mode=0755'"
  args:
    warn: False

- name: Create the $HOME/.kube directory
  file: name=$HOME/.kube state=directory

- name: Copy the KubeConfig
  copy: src=/etc/kubernetes/admin.conf dest=$HOME/.kube/config owner=root group=root

- name: kubectl bash completion 1
  shell: "kubectl completion bash > $HOME/.kube/completion.bash.inc"

- name: kubectl bash completion 2
  shell: "if [ `grep 'source $HOME/.kube/completion.bash.inc' $HOME/.bash_profile |wc -l` -eq 0 ]; then echo 'source $HOME/.kube/completion.bash.inc' >> $HOME/.bash_profile; fi"

- name: Apply the configuration
  shell: "source $HOME/.bash_profile"
  ignore_errors: yes
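The tasks above recover the join commands by grepping the captured `kubeadm init` output. For worker nodes, a possible alternative is to ask kubeadm for a fresh join command and pass it between plays through `hostvars`. The sketch below assumes both plays run in the same ansible-playbook invocation and that the first host in the `master` group is the initialized master; the variable name `join_cmd` is hypothetical.

```yaml
# Sketch, in the init_install role (runs on the first master):
- name: Generate a worker join command
  command: kubeadm token create --print-join-command
  register: join_cmd   # hypothetical variable name

# Sketch, in the node_install role (runs on each worker):
- name: Join the cluster with the command captured on the first master
  command: "{{ hostvars[groups['master'][0]].join_cmd.stdout }}"
  args:
    # Skip the join if the node already has a kubelet config.
    # Only meaningful if the preceding "kubeadm reset -f" step is dropped.
    creates: /etc/kubernetes/kubelet.conf
```

This avoids writing token files to disk and does not depend on the exact layout of the `kubeadm init` output. Joining an additional master still needs the certificate key, which this sketch does not cover.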

- Include file, main.yml:

# vim roles/init_install/tasks/main.yml

# Include the install task file
- include: install.yml

Master section

- Create the master entry playbook that calls master_install:

# vim master.yml

# Add masters to the cluster
- hosts: add_master
  remote_user: root
  gather_facts: True
  roles:
    - master_install

- Create the variables:

# vim roles/master_install/vars/main.yml

# Variables used when adding a master to the cluster
# Keep these consistent with the keepalived master settings
# Master virtual IP (preferably in the same subnet as the masters)
MASTER_VIP: 192.168.30.188
# Network interface used by keepalived
VIP_IF: ens33
SOURCE_DIR: /software
Cluster_Num: "{{ groups['all'] | length }}"
Virtual_Router_ID: 68
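Because these values must match the ones in the init_install role, one way to avoid drift is to define them once in a group variables file. The sketch below is an assumption about how that could look, not part of the original layout: the file name group_vars/all.yml (next to the inventory or the playbook) is hypothetical here.

```yaml
# Sketch: group_vars/all.yml (hypothetical file) holding the shared values.
MASTER_VIP: 192.168.30.188
VIP_IF: ens33
SOURCE_DIR: /software
POD_CIDR: 172.10.0.0/16
Virtual_Router_ID: 68
Cluster_Num: "{{ groups['all'] | length }}"
```

Role vars/main.yml entries take precedence over group_vars, so the duplicated keys would have to be removed from each role for this file to take effect. VERSION is left out on purpose, since the docker_install and init_install roles use different formats for it (1.14.0-0 and v1.14.0).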

- Create the template files:

keepalived backup configuration file, keepalived-backup.conf

# vim roles/master_install/templates/keepalived-backup.conf

! Configuration File for keepalived
global_defs {
    router_id keepalive-backup
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check-apiserver.sh"
    interval 3
    weight -{{ Cluster_Num }}
}

vrrp_instance VI-kube-master {
    state BACKUP
    interface {{ VIP_IF }}
    virtual_router_id {{ Virtual_Router_ID }}
    priority 99
    dont_track_primary
    advert_int 3
    virtual_ipaddress {
        {{ MASTER_VIP }}
    }
    track_script {
        check_apiserver
    }
}

keepalived check script, check-apiserver.sh

# vim roles/master_install/templates/check-apiserver.sh

#!/bin/sh
netstat -lntp |grep 6443 || exit 1

- Add a master to the cluster, install.yml:

# vim roles/master_install/tasks/install.yml

- name: Install keepalived
  yum: name=keepalived state=present

- name: Copy the keepalived configuration file
  template: src=keepalived-backup.conf dest=/etc/keepalived/keepalived.conf

- name: Copy the keepalived check script
  template: src=check-apiserver.sh dest=/etc/keepalived/check-apiserver.sh mode=0755

- name: Start keepalived and enable it at boot
  service:
    name: keepalived
    state: started
    enabled: yes

- name: Cluster init preparation 1
  shell: "swapoff -a && kubeadm reset -f"

- name: Cluster init preparation 2
  shell: "systemctl daemon-reload && systemctl restart kubelet"

- name: Cluster init preparation 3
  shell: "iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X"

- name: Join the cluster as a master
  script: "{{ SOURCE_DIR }}/master.sh"

- name: Create the $HOME/.kube directory
  file: name=$HOME/.kube state=directory

- name: Copy the KubeConfig
  copy: src=/etc/kubernetes/admin.conf dest=$HOME/.kube/config owner=root group=root

- name: kubectl bash completion 1
  shell: "kubectl completion bash > $HOME/.kube/completion.bash.inc"

- name: kubectl bash completion 2
  shell: "if [ `grep 'source $HOME/.kube/completion.bash.inc' $HOME/.bash_profile |wc -l` -eq 0 ]; then echo 'source $HOME/.kube/completion.bash.inc' >> $HOME/.bash_profile; fi"

- name: Apply the configuration
  shell: "source $HOME/.bash_profile"
  ignore_errors: yes

- name: Remove the master join script
  file: name={{ SOURCE_DIR }}/master.sh state=absent

- name: Remove the node join script
  file: name={{ SOURCE_DIR }}/node.sh state=absent
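In the tasks above, `copy` without `remote_src` reads /etc/kubernetes/admin.conf from the machine running ansible-playbook, which works when that machine is master1. A hedged alternative is to copy the file that kubeadm writes on the joined master itself and to wait for its API server before continuing. This sketch assumes facts are gathered (as in master.yml) so that `ansible_env.HOME` is available; the 300-second timeout is an arbitrary choice.

```yaml
# Sketch: run after the "Join the cluster as a master" task.
- name: Wait for the local kube-apiserver to listen on 6443
  wait_for:
    port: 6443
    timeout: 300   # arbitrary value chosen for this sketch

- name: Copy admin.conf from the target host itself
  copy:
    src: /etc/kubernetes/admin.conf
    dest: "{{ ansible_env.HOME }}/.kube/config"
    remote_src: yes
    owner: root
    group: root
    mode: "0600"
```

The `wait_for` check mirrors what check-apiserver.sh tests for keepalived, namely that something is listening on port 6443.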

- Include file, main.yml:

# vim roles/master_install/tasks/main.yml

# Include the install task file
- include: install.yml

Node section

- Create the node entry playbook that calls node_install:

# vim node.yml

# Add nodes to the cluster
- hosts: add_node
  remote_user: root
  gather_facts: True
  roles:
    - node_install

- Create the variables:

# vim roles/node_install/vars/main.yml

# Variables used when adding a node to the cluster
SOURCE_DIR: /software

- Add a node to the cluster, install.yml:

# vim roles/node_install/tasks/install.yml

- name: Cluster init preparation 1
  shell: "swapoff -a && kubeadm reset -f"

- name: Cluster init preparation 2
  shell: "systemctl daemon-reload && systemctl restart kubelet"

- name: Cluster init preparation 3
  shell: "iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X"

- name: Join the cluster as a node
  script: "{{ SOURCE_DIR }}/node.sh"

- name: Remove the master join script
  file: name={{ SOURCE_DIR }}/master.sh state=absent

- name: Remove the node join script
  file: name={{ SOURCE_DIR }}/node.sh state=absent

- Include file, main.yml:

# vim roles/node_install/tasks/main.yml

# Include the install task file
- include: install.yml

Addons section

- Create the addons entry playbook that calls addons_install:

# vim addons.yml

# Install the k8s cluster add-ons
- hosts: master
  remote_user: root
  gather_facts: True
  roles:
    - addons_install

- Create the variables:

# vim roles/addons_install/vars/main.yml

# Variables used when installing the k8s cluster add-ons
# Pod CIDR
POD_CIDR: 172.10.0.0/16
SOURCE_DIR: /software

- Create the template files:

Calico RBAC configuration file, calico-rbac-kdd.yaml

# vim roles/addons_install/templates/calico-rbac-kdd.yaml

# Calico Version v3.1.3

# https://docs.projectcalico.org/v3.1/releases#v3.1.3

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: calico-node

rules:

- apiGroups: [""]

resources:

- namespaces

verbs:

- get

- list

- watch

- apiGroups: [""]

resources:

- pods/status

verbs:

- update

- apiGroups: [""]

resources:

- pods

verbs:

- get

- list

- watch

- patch

- apiGroups: [""]

resources:

- services

verbs:

- get

- apiGroups: [""]

resources:

- endpoints

verbs:

- get

- apiGroups: [""]

resources:

- nodes

verbs:

- get

- list

- update

- watch

- apiGroups: ["extensions"]

resources:

- networkpolicies

verbs:

- get

- list

- watch

- apiGroups: ["networking.k8s.io"]

resources:

- networkpolicies

verbs:

- watch

- list

- apiGroups: ["crd.projectcalico.org"]

resources:

- globalfelixconfigs

- felixconfigurations

- bgppeers

- globalbgpconfigs

- bgpconfigurations

- ippools

- globalnetworkpolicies

- globalnetworksets

- networkpolicies

- clusterinformations

- hostendpoints

verbs:

- create

- get

- list

- update

- watch

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: calico-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-node

subjects:

- kind: ServiceAccount

name: calico-node

namespace: kube-system

Calico configuration file, calico.yaml

# vim roles/addons_install/templates/calico.yaml

# Calico Version v3.1.3

# https://docs.projectcalico.org/v3.1/releases#v3.1.3

# This manifest includes the following component versions:

# calico/node:v3.1.3

# calico/cni:v3.1.3

# This ConfigMap is used to configure a self-hosted Calico installation.

kind: ConfigMap

apiVersion: v1

metadata:

name: calico-config

namespace: kube-system

data:

# To enable Typha, set this to "calico-typha" *and* set a non-zero value for Typha replicas

# below. We recommend using Typha if you have more than 50 nodes. Above 100 nodes it is

# essential.

typha_service_name: "calico-typha"

# The CNI network configuration to install on each node.

cni_network_config: |-

{

"name": "k8s-pod-network",

"cniVersion": "0.3.0",

"plugins": [

{

"type": "calico",

"log_level": "info",

"datastore_type": "kubernetes",

"nodename": "__KUBERNETES_NODE_NAME__",

"mtu": 1500,

"ipam": {

"type": "host-local",

"subnet": "usePodCidr"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "__KUBECONFIG_FILEPATH__"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

}

]

}

---

# This manifest creates a Service, which will be backed by Calico's Typha daemon.

# Typha sits in between Felix and the API server, reducing Calico's load on the API server.

apiVersion: v1

kind: Service

metadata:

name: calico-typha

namespace: kube-system

labels:

k8s-app: calico-typha

spec:

ports:

- port: 5473

protocol: TCP

targetPort: calico-typha

name: calico-typha

selector:

k8s-app: calico-typha

---

# This manifest creates a Deployment of Typha to back the above service.

apiVersion: apps/v1beta1

kind: Deployment

metadata:

name: calico-typha

namespace: kube-system

labels:

k8s-app: calico-typha

spec:

# Number of Typha replicas. To enable Typha, set this to a non-zero value *and* set the

# typha_service_name variable in the calico-config ConfigMap above.

#

# We recommend using Typha if you have more than 50 nodes. Above 100 nodes it is essential

# (when using the Kubernetes datastore). Use one replica for every 100-200 nodes. In

# production, we recommend running at least 3 replicas to reduce the impact of rolling upgrade.

replicas: 1

revisionHistoryLimit: 2

template:

metadata:

labels:

k8s-app: calico-typha

annotations:

# This, along with the CriticalAddonsOnly toleration below, marks the pod as a critical

# add-on, ensuring it gets priority scheduling and that its resources are reserved

# if it ever gets evicted.

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

hostNetwork: true

tolerations:

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

# Since Calico can't network a pod until Typha is up, we need to run Typha itself

# as a host-networked pod.

serviceAccountName: calico-node

containers:

- image: quay.io/calico/typha:v0.7.4

name: calico-typha

ports:

- containerPort: 5473

name: calico-typha

protocol: TCP

env:

# Enable "info" logging by default. Can be set to "debug" to increase verbosity.

- name: TYPHA_LOGSEVERITYSCREEN

value: "info"

# Disable logging to file and syslog since those don't make sense in Kubernetes.

- name: TYPHA_LOGFILEPATH

value: "none"

- name: TYPHA_LOGSEVERITYSYS

value: "none"

# Monitor the Kubernetes API to find the number of running instances and rebalance

# connections.

- name: TYPHA_CONNECTIONREBALANCINGMODE

value: "kubernetes"

- name: TYPHA_DATASTORETYPE

value: "kubernetes"

- name: TYPHA_HEALTHENABLED

value: "true"

# Uncomment these lines to enable prometheus metrics. Since Typha is host-networked,

# this opens a port on the host, which may need to be secured.

#- name: TYPHA_PROMETHEUSMETRICSENABLED

# value: "true"

#- name: TYPHA_PROMETHEUSMETRICSPORT

# value: "9093"

livenessProbe:

httpGet:

path: /liveness

port: 9098

periodSeconds: 30

initialDelaySeconds: 30

readinessProbe:

httpGet:

path: /readiness

port: 9098

periodSeconds: 10

---

# This manifest installs the calico/node container, as well

# as the Calico CNI plugins and network config on

# each master and worker node in a Kubernetes cluster.

kind: DaemonSet

apiVersion: extensions/v1beta1

metadata:

name: calico-node

namespace: kube-system

labels:

k8s-app: calico-node

spec:

selector:

matchLabels:

k8s-app: calico-node

updateStrategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

template:

metadata:

labels:

k8s-app: calico-node

annotations:

# This, along with the CriticalAddonsOnly toleration below,

# marks the pod as a critical add-on, ensuring it gets

# priority scheduling and that its resources are reserved

# if it ever gets evicted.

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

hostNetwork: true

tolerations:

# Make sure calico/node gets scheduled on all nodes.

- effect: NoSchedule

operator: Exists

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- effect: NoExecute

operator: Exists

serviceAccountName: calico-node

# Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force

# deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.

terminationGracePeriodSeconds: 0

containers:

# Runs calico/node container on each Kubernetes node. This

# container programs network policy and routes on each

# host.

- name: calico-node

image: quay.io/calico/node:v3.1.3

env:

# Use Kubernetes API as the backing datastore.

- name: DATASTORE_TYPE

value: "kubernetes"

# Enable felix info logging.

- name: FELIX_LOGSEVERITYSCREEN

value: "info"

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

# Set Felix endpoint to host default action to ACCEPT.

- name: FELIX_DEFAULTENDPOINTTOHOSTACTION

value: "ACCEPT"

# Disable IPV6 on Kubernetes.

- name: FELIX_IPV6SUPPORT

value: "false"

# Set MTU for tunnel device used if ipip is enabled

- name: FELIX_IPINIPMTU

value: "1440"

# Wait for the datastore.

- name: WAIT_FOR_DATASTORE

value: "true"

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "{{ POD_CIDR }}"

# Enable IPIP

- name: CALICO_IPV4POOL_IPIP

value: "Always"

# Enable IP-in-IP within Felix.

- name: FELIX_IPINIPENABLED

value: "true"

# Typha support: controlled by the ConfigMap.

- name: FELIX_TYPHAK8SSERVICENAME

valueFrom:

configMapKeyRef:

name: calico-config

key: typha_service_name

# Set based on the k8s node name.

- name: NODENAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# Auto-detect the BGP IP address.

- name: IP

value: "autodetect"

- name: FELIX_HEALTHENABLED

value: "true"

securityContext:

privileged: true

resources:

requests:

cpu: 250m

livenessProbe:

httpGet:

path: /liveness

port: 9099

periodSeconds: 10

initialDelaySeconds: 10

failureThreshold: 6

readinessProbe:

httpGet:

path: /readiness

port: 9099

periodSeconds: 10

volumeMounts:

- mountPath: /lib/modules

name: lib-modules

readOnly: true

- mountPath: /var/run/calico

name: var-run-calico

readOnly: false

- mountPath: /var/lib/calico

name: var-lib-calico

readOnly: false

# This container installs the Calico CNI binaries

# and CNI network config file on each node.

- name: install-cni

image: quay.io/calico/cni:v3.1.3

command: ["/install-cni.sh"]

env:

# Name of the CNI config file to create.

- name: CNI_CONF_NAME

value: "10-calico.conflist"

# The CNI network config to install on each node.

- name: CNI_NETWORK_CONFIG

valueFrom:

configMapKeyRef:

name: calico-config

key: cni_network_config

# Set the hostname based on the k8s node name.

- name: KUBERNETES_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

volumeMounts:

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

- mountPath: /host/etc/cni/net.d

name: cni-net-dir

volumes:

# Used by calico/node.

- name: lib-modules

hostPath:

path: /lib/modules

- name: var-run-calico

hostPath:

path: /var/run/calico

- name: var-lib-calico

hostPath:

path: /var/lib/calico

# Used to install CNI.

- name: cni-bin-dir

hostPath:

path: /opt/cni/bin

- name: cni-net-dir

hostPath:

path: /etc/cni/net.d

# Create all the CustomResourceDefinitions needed for

# Calico policy and networking mode.

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: felixconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: FelixConfiguration

plural: felixconfigurations

singular: felixconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgppeers.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPPeer

plural: bgppeers

singular: bgppeer

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgpconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPConfiguration

plural: bgpconfigurations

singular: bgpconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ippools.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPPool

plural: ippools

singular: ippool

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: hostendpoints.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: HostEndpoint

plural: hostendpoints

singular: hostendpoint

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: clusterinformations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: ClusterInformation

plural: clusterinformations

singular: clusterinformation

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworkpolicies.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkPolicy

plural: globalnetworkpolicies

singular: globalnetworkpolicy

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworksets.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkSet

plural: globalnetworksets

singular: globalnetworkset

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: networkpolicies.crd.projectcalico.org

spec:

scope: Namespaced

group: crd.projectcalico.org

version: v1

names:

kind: NetworkPolicy

plural: networkpolicies

singular: networkpolicy

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: calico-node

namespace: kube-system

Dashboard configuration file, dashboard-all.yaml

# vim roles/addons_install/templates/dashboard-all.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

k8s-app: kubernetes-dashboard

# Allows editing resource and makes sure it is created first.

addonmanager.kubernetes.io/mode: EnsureExists

name: kubernetes-dashboard-settings

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

addonmanager.kubernetes.io/mode: Reconcile

name: kubernetes-dashboard

namespace: kube-system

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

priorityClassName: system-cluster-critical

containers:

- name: kubernetes-dashboard

image: registry.cn-hangzhou.aliyuncs.com/imooc/kubernetes-dashboard-amd64:v1.8.3

resources:

limits:

cpu: 100m

memory: 300Mi

requests:

cpu: 50m

memory: 100Mi

ports:

- containerPort: 8443

protocol: TCP

args:

# PLATFORM-SPECIFIC ARGS HERE

- --auto-generate-certificates

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

- name: tmp-volume

mountPath: /tmp

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

k8s-app: kubernetes-dashboard

addonmanager.kubernetes.io/mode: Reconcile

name: kubernetes-dashboard-minimal

namespace: kube-system

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics from heapster.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:"]

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard-minimal

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

# Allows editing resource and makes sure it is created first.

addonmanager.kubernetes.io/mode: EnsureExists

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

# Allows editing resource and makes sure it is created first.

addonmanager.kubernetes.io/mode: EnsureExists

name: kubernetes-dashboard-key-holder

namespace: kube-system

type: Opaque

---

apiVersion: v1

kind: Service

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

k8s-app: kubernetes-dashboard

ports:

- port: 443

targetPort: 8443

nodePort: 30005

type: NodePort

- Calico installation, calico.yml:

# vim roles/addons_install/tasks/calico.yml

- name: Create the addons directory
  file: name=/etc/kubernetes/addons state=directory

- name: Copy calico-rbac-kdd.yaml
  template: src=calico-rbac-kdd.yaml dest=/etc/kubernetes/addons

- name: Copy calico.yaml
  template: src=calico.yaml dest=/etc/kubernetes/addons

# Note: the image tasks below invoke the ansible CLI on the target host, so they
# only work when master1 is also the Ansible control node with the same inventory.
- name: Pull the calico typha image
  shell: "ansible all -m shell -a 'docker pull registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-typha:v0.7.4'"

- name: Tag the calico typha image
  shell: "ansible all -m shell -a 'docker tag registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-typha:v0.7.4 quay.io/calico/typha:v0.7.4'"

- name: Pull the calico node image
  shell: "ansible all -m shell -a 'docker pull registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-node:v3.1.3'"

- name: Tag the calico node image
  shell: "ansible all -m shell -a 'docker tag registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-node:v3.1.3 quay.io/calico/node:v3.1.3'"

- name: Pull the calico cni image
  shell: "ansible all -m shell -a 'docker pull registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-cni:v3.1.3'"

- name: Tag the calico cni image
  shell: "ansible all -m shell -a 'docker tag registry.cn-hangzhou.aliyuncs.com/liuyi01/calico-cni:v3.1.3 quay.io/calico/cni:v3.1.3'"

- name: Create the calico RBAC resources
  shell: "kubectl apply -f /etc/kubernetes/addons/calico-rbac-kdd.yaml"

- name: Deploy calico
  shell: "kubectl apply -f /etc/kubernetes/addons/calico.yaml"
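The six pull and tag tasks above call the `ansible` command from inside a task. One way to express the same work natively, sketched below, is a separate play that targets all hosts and loops over the images. The variable name `CALICO_MIRROR` and the idea of a separate play are assumptions for illustration; the image names and tags are the ones used above.

```yaml
# Sketch: a play that pulls and retags the Calico images on every host.
- hosts: all
  remote_user: root
  vars:
    # Hypothetical variable holding the mirror prefix used in this article
    CALICO_MIRROR: registry.cn-hangzhou.aliyuncs.com/liuyi01
  tasks:
    - name: Pull Calico images from the mirror and retag them
      shell: >
        docker pull {{ CALICO_MIRROR }}/{{ item.src }} &&
        docker tag {{ CALICO_MIRROR }}/{{ item.src }} quay.io/calico/{{ item.dst }}
      loop:
        - { src: "calico-typha:v0.7.4", dst: "typha:v0.7.4" }
        - { src: "calico-node:v3.1.3", dst: "node:v3.1.3" }
        - { src: "calico-cni:v3.1.3", dst: "cni:v3.1.3" }
```

Placed before the addons play in k8s.yml, this removes the requirement that the master host has Ansible and the inventory installed.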

- Dashboard installation, dashboard.yml:

# vim roles/addons_install/tasks/dashboard.yml

- name: Copy dashboard-all.yaml
  template: src=dashboard-all.yaml dest=/etc/kubernetes/addons

- name: Pull the dashboard image
  shell: "ansible all -m shell -a 'docker pull registry.cn-hangzhou.aliyuncs.com/imooc/kubernetes-dashboard-amd64:v1.8.3'"

- name: Deploy the dashboard
  shell: "kubectl apply -f /etc/kubernetes/addons/dashboard-all.yaml"

- name: Create the ServiceAccount
  shell: "kubectl create sa dashboard-admin -n kube-system"

- name: Bind the cluster-admin role
  shell: "kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin"

- name: Get the login token
  shell: "ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}'); kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}' > {{ SOURCE_DIR }}/token.txt"
  register: token

- name: Show where the token was written
  debug: var=token.cmd verbosity=0
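The two `kubectl create` tasks above fail if the playbook is run a second time, because the objects already exist. A minimal sketch of a rerun-tolerant variant follows; it assumes kubectl reports the conflict with the text `AlreadyExists` on stderr, and the registered variable names are hypothetical.

```yaml
# Sketch: tolerate "AlreadyExists" so the role can be rerun.
- name: Create the dashboard-admin ServiceAccount
  command: kubectl create sa dashboard-admin -n kube-system
  register: sa_result   # hypothetical variable name
  changed_when: sa_result.rc == 0
  failed_when: sa_result.rc != 0 and 'AlreadyExists' not in sa_result.stderr

- name: Bind cluster-admin to the ServiceAccount
  command: >
    kubectl create clusterrolebinding dashboard-admin
    --clusterrole=cluster-admin
    --serviceaccount=kube-system:dashboard-admin
  register: crb_result   # hypothetical variable name
  changed_when: crb_result.rc == 0
  failed_when: crb_result.rc != 0 and 'AlreadyExists' not in crb_result.stderr

- name: Show where the login token was written
  debug:
    msg: "Dashboard token saved to {{ SOURCE_DIR }}/token.txt"
```

Any other kubectl error still fails the task, so real problems are not hidden.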

- Include file, main.yml:

# vim roles/addons_install/tasks/main.yml

# Include the calico and dashboard task files
- include: calico.yml
- include: dashboard.yml

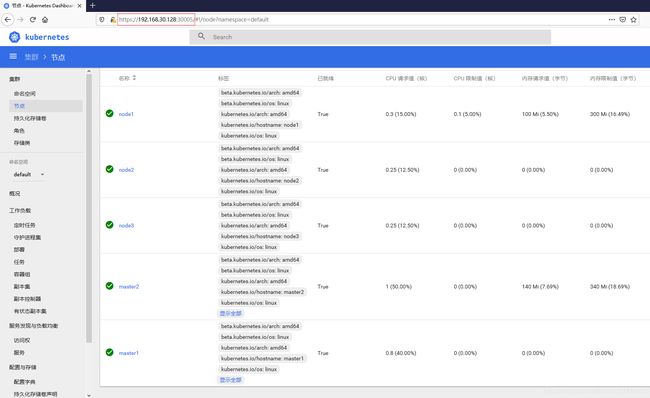

Installation test

- Run the installation:

# ansible-playbook k8s.yml

# kubectl get nodes

NAME      STATUS   ROLES    AGE     VERSION
master1   Ready    master   8m30s   v1.14.0
master2   Ready    master   6m38s   v1.14.0
node1     Ready    <none>   5m50s   v1.14.0
node2     Ready    <none>   5m49s   v1.14.0
node3     Ready    <none>   5m49s   v1.14.0

# kubectl get pods -n kube-system

NAME                                   READY   STATUS    RESTARTS   AGE
calico-node-9jqrh                      2/2     Running   0          19h
calico-node-brfb8                      2/2     Running   0          19h
calico-node-ncjxp                      2/2     Running   0          19h
calico-node-qsffm                      2/2     Running   0          19h
calico-node-smwq5                      2/2     Running   0          19h
calico-typha-666749994b-h99rr          1/1     Running   0          19h
coredns-8567978547-6fvmp               1/1     Running   1          19h
coredns-8567978547-q2rz5               1/1     Running   1          19h
etcd-master1                           1/1     Running   0          19h
etcd-master2                           1/1     Running   0          19h
kube-apiserver-master1                 1/1     Running   0          19h
kube-apiserver-master2                 1/1     Running   0          19h
kube-controller-manager-master1        1/1     Running   0          19h
kube-controller-manager-master2        1/1     Running   0          19h
kube-proxy-2wfnz                       1/1     Running   0          19h
kube-proxy-46gtf                       1/1     Running   0          19h
kube-proxy-mg87t                       1/1     Running   0          19h
kube-proxy-s5f5j                       1/1     Running   0          19h
kube-proxy-vqdhx                       1/1     Running   0          19h
kube-scheduler-master1                 1/1     Running   0          19h
kube-scheduler-master2                 1/1     Running   0          19h
kubernetes-dashboard-5bd4bfc87-rkvfh   1/1     Running   0          19h

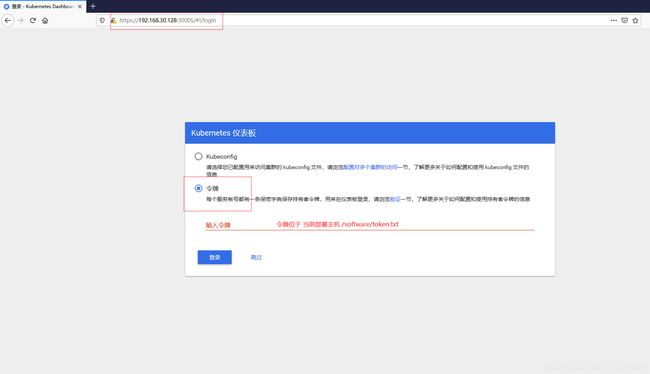

Open https://192.168.30.128:30005 in Firefox and log in with the token:

# cat /software/token.txt

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZHBudnoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYWU1N2I5MWItMTg0MC0xMWVhLTgzNzYtMDAwYzI5YzkwMDgxIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.xOSS0owznhpdN0DmALG04ehP7Xw-qYEjX8YQAHy8vA017UFvRxd8oH4EsfkGEkzLWC3kwWUWCxRs7yEVUgGzx7Rp2lJ5xceyga448Kffj9GOQapAF8J_2SP9j2tCll465GSKtjODJgpwnknJ8YqMt6mn-jH9Cn49ljf6rSHuyk2_2qf_PX2ioIKHTKyFLMD-6ci-tUpWHOEQdKlxi3K7LJek4qlJ04hKckcphHZf35YEnBDcB2uvWsW7P9k_2GIwXwjoPLAlidmk56WGtrCy9erZFg1W1aeVh7z9aqECKSs0Jnt-bafPaBwbqpjbkjhGKC8vznBslxO2STaiR0-j1A

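The installation tested without problems. Keep the versions of the Kubernetes components as consistent as possible. The playbook is available on my personal GitHub: ansible-playbook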