An FFmpeg-based audio/video player

Versions

Android Studio 3.5.2

FFmpeg 4.0.2

Background

Setting up the FFmpeg environment in Android Studio 3.5.1

Playing video on Android with the FFmpeg shared libraries

Audio playback on Android with OpenSL ES

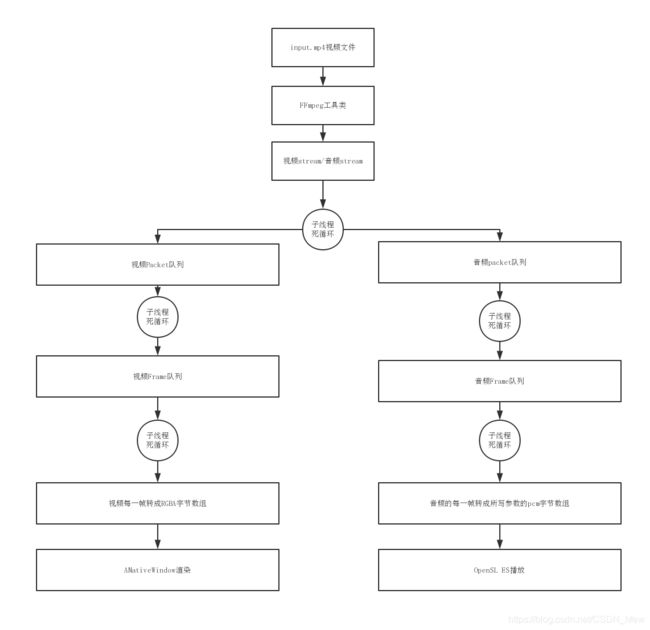

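Player architecture

Walkthrough of the key code

Demuxing: read the audio and video streams packet by packet and push each Packet into the matching queue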
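The queue class behind `packetQueue` and `frameQueue` is not shown here. The calls in this article imply a particular interface:

- `push`
- a blocking `pop` that returns 0 once playback stops, which is why every consumer loop checks `if (!ret) continue;`
- `size` and `empty`

Below is a minimal sketch of such a queue, assuming that interface. `SafeQueue` and `setWork` are hypothetical names, not the author's code. In this sketch, `push` reports failure while stopped so the caller can free the pointer it still owns.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>

// Hypothetical thread-safe queue modelled on the packetQueue/frameQueue calls in this article.
template <typename T>
class SafeQueue {
public:
    // Enable or disable the queue; disabling wakes every blocked pop().
    void setWork(bool work) {
        std::lock_guard<std::mutex> lock(mutex_);
        work_ = work;
        cond_.notify_all();
    }

    // Returns false (and stores nothing) while the queue is stopped.
    bool push(const T &value) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!work_) return false;
        queue_.push(value);
        cond_.notify_one();
        return true;
    }

    // Blocks until an element is available or the queue is stopped.
    // Returns 1 and writes the element to `out` on success, 0 if stopped.
    int pop(T &out) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !work_ || !queue_.empty(); });
        if (!work_ || queue_.empty()) return 0;
        out = queue_.front();
        queue_.pop();
        return 1;
    }

    std::size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

    bool empty() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.empty();
    }

private:
    std::queue<T> queue_;
    std::mutex mutex_;
    std::condition_variable cond_;
    bool work_ = false;
};
```

With a bounded producer such as the demux loop, `size()` provides the back-pressure check against `QUEUE_MAX_SIZE`. The condition variable lets consumers sleep instead of polling.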

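```cpp
// Demuxing: read packets from the container and route them to the audio/video packet queues
// (the producer side of both packetQueues)
void NativePlayer::codec_internal() {
    int ret = 0;
    while (isPlaying) {
        // Back-pressure: if either packet queue is full, sleep THREAD_SLEEP_TIME (10 ms) and retry
        if (audioChannel && audioChannel->packetQueue.size() > QUEUE_MAX_SIZE) {
            av_usleep(THREAD_SLEEP_TIME);
            continue;
        }
        if (videoChannel && videoChannel->packetQueue.size() > QUEUE_MAX_SIZE) {
            av_usleep(THREAD_SLEEP_TIME);
            continue;
        }
        // Read the next packet
        AVPacket *avPacket = av_packet_alloc();
        ret = av_read_frame(avFormatContext, avPacket);
        if (ret == 0) {
            // Route the packet to the queue of the stream it belongs to
            if (audioChannel && avPacket->stream_index == audioChannel->channelId) {
                audioChannel->packetQueue.push(avPacket);
            } else if (videoChannel && avPacket->stream_index == videoChannel->channelId) {
                videoChannel->packetQueue.push(avPacket);
            } else {
                // Packet from a stream we do not play (e.g. subtitles): free it to avoid a leak
                av_packet_free(&avPacket);
            }
        } else if (ret == AVERROR_EOF) {
            av_packet_free(&avPacket);
            // Everything has been read, but playback is not necessarily over:
            // the queues may still hold packets and frames
            bool audioDone = !audioChannel || (audioChannel->packetQueue.empty()
                                               && audioChannel->frameQueue.empty());
            bool videoDone = !videoChannel || (videoChannel->packetQueue.empty()
                                               && videoChannel->frameQueue.empty());
            if (audioDone && videoDone) {
                LOGE("Playback finished...");
                break;
            }
            // Keep looping even after EOF: a seek can reposition the stream, and it only takes
            // effect if av_read_frame keeps being called. Sleep to avoid busy-waiting.
            av_usleep(THREAD_SLEEP_TIME);
        } else {
            // Read error
            av_packet_free(&avPacket);
            break;
        }
    }
    isPlaying = false;
    if (audioChannel) {
        audioChannel->stop();
    }
    if (videoChannel) {
        videoChannel->stop();
    }
}
```

Video Packet -> video Frame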
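FFmpeg's decoding API is asynchronous. A single `avcodec_send_packet` may yield zero, one or several frames from `avcodec_receive_frame`. A decoder that reorders frames also holds some back until it is flushed. In FFmpeg, sending a NULL packet enters draining mode, after which `avcodec_receive_frame` returns `AVERROR_EOF` once every frame is out.

The sketch below models this with a hypothetical `MockDecoder`. It is not FFmpeg: it simply holds back a fixed number of packets. It shows the "send, then drain until EAGAIN" loop plus the final flush.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical status codes standing in for 0 / AVERROR(EAGAIN) / AVERROR_EOF.
enum class Status { Ok, Again, Eof };

// Hypothetical decoder that holds back `delay` packets (like B-frame reordering),
// so output lags input until it is flushed.
class MockDecoder {
public:
    explicit MockDecoder(std::size_t delay) : delay_(delay) {}

    // A negative value plays the role of a NULL packet: enter draining mode.
    Status send(int packet) {
        if (packet < 0) {
            draining_ = true;
            return Status::Ok;
        }
        pending_.push_back(packet);
        return Status::Ok;
    }

    Status receive(int &frame) {
        if (pending_.empty()) return draining_ ? Status::Eof : Status::Again;
        if (!draining_ && pending_.size() <= delay_) return Status::Again;
        frame = pending_.front();
        pending_.pop_front();
        return Status::Ok;
    }

private:
    std::deque<int> pending_;
    std::size_t delay_;
    bool draining_ = false;
};

// Decode every packet, draining all available frames after each send, then flush.
std::vector<int> decodeAll(MockDecoder &dec, const std::vector<int> &packets) {
    std::vector<int> frames;
    int frame = 0;
    for (int pkt : packets) {
        dec.send(pkt);
        while (dec.receive(frame) == Status::Ok) frames.push_back(frame);
    }
    dec.send(-1);  // flush, analogous to avcodec_send_packet(ctx, NULL)
    while (dec.receive(frame) == Status::Ok) frames.push_back(frame);
    return frames;
}
```

Without the flush, the last frames held by the decoder are never returned. With a delay of 2 and five packets, only three frames come out.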

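```cpp
// Converts packets into frames: consumer of packetQueue, producer of frameQueue.
// Runs on a worker thread.
void VideoChannel::decode_packet_internal() {
    AVPacket *avPacket = 0;
    while (isPlaying) {
        int ret = packetQueue.pop(avPacket);
        if (!isPlaying) {
            break;
        }
        if (!ret) {
            continue;
        }
        // Hand the packet to the decoder. Because all pending frames are drained after every
        // send, avcodec_send_packet should not return EAGAIN here; treat any error as fatal.
        ret = avcodec_send_packet(codecContext, avPacket);
        releaseAvPacket(avPacket);
        if (ret < 0) {
            break;
        }
        // One packet can yield zero, one or several frames: receive until EAGAIN
        while (isPlaying) {
            AVFrame *avFrame = av_frame_alloc();
            ret = avcodec_receive_frame(codecContext, avFrame);
            if (ret < 0) {
                // EAGAIN: the decoder needs more input; anything else: EOF or error
                av_frame_free(&avFrame);
                break;
            }
            frameQueue.push(avFrame);
            // Back-pressure: wait while the frame queue is full
            while (frameQueue.size() > QUEUE_MAX_SIZE && isPlaying) {
                av_usleep(THREAD_SLEEP_TIME);
            }
        }
        if (ret < 0 && ret != AVERROR(EAGAIN)) {
            break;
        }
    }
    releaseAvPacket(avPacket);
}
```

Converting video Frames to RGBA arrays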
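The sync step of `play_internal()` decides, per frame, whether to sleep or to drop queued frames. It compares the video clock with the audio clock. The decision is pulled out below as a pure function so the thresholds can be checked in isolation.

`decideSync` and `SyncAction` are hypothetical names. The function follows the thresholds used in `play_internal()` (1 s and 0.05 s). In the audio-ahead branch, it compares how far video trails audio (`-diff`), since `diff` is negative there. As in the article's code, the "in sync" case does not sleep. A common alternative is to wait the nominal `delay` there.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical result of the sync decision for one video frame.
struct SyncAction {
    double sleepSeconds;  // how long to wait before the next frame
    bool dropFrames;      // whether to drop queued frames to catch up
};

// videoClock / audioClock in seconds; repeatPict as in AVFrame::repeat_pict.
SyncAction decideSync(double videoClock, double audioClock, double fps, int repeatPict) {
    // Nominal frame duration plus the extra delay signalled by repeat_pict
    double delay = 1.0 / fps + repeatPict / (2.0 * fps);
    double diff = videoClock - audioClock;  // > 0: video ahead of audio
    if (diff > 0) {
        // Video ahead: wait; if very far ahead, catch up gradually
        return {diff > 1 ? delay * 2 : delay + diff, false};
    }
    double lag = -diff;  // how far video trails audio
    if (lag > 1) return {0.0, false};     // too far behind: give up syncing this frame
    if (lag >= 0.05) return {0.0, true};  // noticeably behind: drop frames
    return {0.0, false};                  // close enough: treat as in sync
}
```

For example, at 25 fps a frame 20 ms ahead of the audio waits 40 ms + 20 ms = 60 ms. A frame 100 ms behind triggers frame dropping.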

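```cpp
// Loops on a worker thread, taking frames from frameQueue (the frameQueue consumer)
void VideoChannel::play_internal() {
    // Conversion context: decoder pixel format (typically I420/YUV420P) -> RGBA, same size
    SwsContext *sws_cxt = sws_getContext(
            codecContext->width, codecContext->height, codecContext->pix_fmt,
            codecContext->width, codecContext->height, AV_PIX_FMT_RGBA,
            SWS_BILINEAR, 0, 0, 0);
    // av_image_alloc fills up to 4 plane pointers and linesizes, so both arrays need 4 entries.
    // Declaring them as [1] causes out-of-bounds writes and baffling errors.
    // RGBA is a packed format, so only dst_data[0] / dst_linesize[0] are actually used.
    uint8_t *dst_data[4] = {0};
    int dst_linesize[4];
    // Allocate the RGBA "canvas"
    av_image_alloc(dst_data, dst_linesize,
                   codecContext->width, codecContext->height, AV_PIX_FMT_RGBA, 1);
    AVFrame *avFrame = 0;
    while (isPlaying) {
        int ret = frameQueue.pop(avFrame);
        if (!isPlaying) {
            break;
        }
        if (!ret) {
            continue;
        }
        // Wait while paused (but do not hang if playback is stopped meanwhile)
        while (isPause && isPlaying) {
            av_usleep(THREAD_SLEEP_TIME);
        }
        // Convert the frame to RGBA
        sws_scale(sws_cxt,
                  avFrame->data, avFrame->linesize, 0, avFrame->height,
                  dst_data, dst_linesize);
        // Call back into native-lib to draw into the ANativeWindow
        renderFrame(dst_data[0], dst_linesize[0], codecContext->width, codecContext->height);
        // A/V sync
        // Presentation time of this frame in seconds (pts is expressed in time_base units)
        clock = avFrame->pts * av_q2d(time_base);
        // Extra display time signalled by the stream: repeat_pict / (2 * fps)
        double extra_delay = avFrame->repeat_pict / (2.0 * fps);
        // Nominal display time of one frame
        double frame_delay = 1.0 / fps;
        double delay = extra_delay + frame_delay;
        if (!audioChannel) {
            // No audio track to follow: pace by frame duration alone
            av_usleep(delay * 1000 * 1000);
        } else {
            // diff > 0: video ahead of audio; diff < 0: video behind
            double diff = clock - audioChannel->clock;
            if (diff > 0) {
                // Video ahead: wait
                if (diff > 1) {
                    // Far ahead: catch up gradually instead of one long sleep
                    av_usleep((delay * 2) * 1000 * 1000);
                } else {
                    av_usleep((delay + diff) * 1000 * 1000);
                }
            } else {
                // Audio ahead: compare how far video trails (diff is negative here)
                double lag = -diff;
                if (lag > 1) {
                    // Too far behind: give up syncing this frame
                } else if (lag >= 0.05) {
                    // Video must catch up: drop non-key frames from the frame queue.
                    // Shortening the sleep instead makes the clocks oscillate.
                    frameQueue.sync();
                } else {
                    // Close enough: treat as in sync
                }
            }
        }
        releaseAvFrame(avFrame);
    }
    if (dst_data[0]) {
        av_freep(&dst_data[0]);
    }
    isPlaying = false;
    releaseAvFrame(avFrame);
    sws_freeContext(sws_cxt);
}
```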

Rendering the RGBA data into the ANativeWindow
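`ANativeWindow_Buffer::stride` is measured in pixels, so for `WINDOW_FORMAT_RGBA_8888` the byte stride is `stride * 4`. That stride can be larger than the image width. The copy therefore has to go row by row. Each row should copy no more bytes than both the source and destination rows hold, and only for the rows both images have.

Here is a sketch of that copy using plain buffers instead of a window. `copyRows` is a hypothetical helper, not an NDK function.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Copy an image row by row between buffers whose strides (bytes per row) differ.
// Copies only the bytes both rows can hold, for the rows both images have.
void copyRows(uint8_t *dst, int dstStride, int dstRows,
              const uint8_t *src, int srcStride, int srcRows) {
    int rowBytes = dstStride < srcStride ? dstStride : srcStride;
    int rows = dstRows < srcRows ? dstRows : srcRows;
    for (int i = 0; i < rows; ++i) {
        std::memcpy(dst + i * dstStride, src + i * srcStride, rowBytes);
    }
}
```

The example case is a 2×3 RGBA image (8 bytes per row) drawn into a buffer whose stride is 4 pixels (16 bytes per row). The first 8 bytes of each destination row receive the image. The remaining 8 bytes of padding are left untouched.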

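```cpp
void renderFrame(uint8_t *data, int linesize, int width, int height) {
    pthread_mutex_lock(&mutex);
    // Render the RGBA data into the window
    if (!window) {
        // No window (e.g. the Surface has been destroyed): nothing to draw or release
        pthread_mutex_unlock(&mutex);
        return;
    }
    // Window buffer geometry: size and pixel format
    ANativeWindow_setBuffersGeometry(window, width, height, WINDOW_FORMAT_RGBA_8888);
    // Lock the buffer for writing
    if (ANativeWindow_lock(window, &buffer, 0)) {
        ANativeWindow_release(window);
        window = 0;
        pthread_mutex_unlock(&mutex);
        return;
    }
    // Source: the RGBA image
    uint8_t *srcFirstLine = data;
    // Bytes per source row (the source stride, not a row count)
    int srcStride = linesize;
    // buffer.stride is in pixels; RGBA_8888 uses 4 bytes per pixel
    int windowStride = buffer.stride * 4;
    // First row of the window buffer
    uint8_t *windowFirstLine = (uint8_t *) buffer.bits;
    // The strides can differ, so copy row by row, never more bytes or rows than both sides hold
    int rowBytes = srcStride < windowStride ? srcStride : windowStride;
    int rows = buffer.height < height ? buffer.height : height;
    for (int i = 0; i < rows; ++i) {
        memcpy(windowFirstLine + i * windowStride, srcFirstLine + i * srcStride, rowBytes);
    }
    // Unlock and post the buffer to the display
    ANativeWindow_unlockAndPost(window);
    pthread_mutex_unlock(&mutex);
}
```

Audio: initializing OpenSL ES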
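The PCM format configured in the initialization code determines how many bytes make up one second of audio. That figure is useful when sizing buffers or relating a buffer's length to playback time. Note that OpenSL ES expresses `SL_SAMPLINGRATE_44_1` in milliHertz (44100000).

A small sketch of that arithmetic follows. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// OpenSL ES sample-rate constants are in milliHertz (SL_SAMPLINGRATE_44_1 == 44100000).
uint32_t hzFromMilliHz(uint32_t milliHz) { return milliHz / 1000; }

// Bytes of interleaved PCM per second of audio.
uint32_t bytesPerSecond(uint32_t sampleRateHz, uint32_t channels, uint32_t bitsPerSample) {
    return sampleRateHz * channels * (bitsPerSample / 8);
}

// Playback duration, in seconds, of a PCM buffer of `bytes` bytes.
double bufferDurationSeconds(uint32_t bytes, uint32_t sampleRateHz,
                             uint32_t channels, uint32_t bitsPerSample) {
    return static_cast<double>(bytes) / bytesPerSecond(sampleRateHz, channels, bitsPerSample);
}
```

For the 44.1 kHz, stereo, 16-bit format used here, one second is 176400 bytes. A 4096-byte buffer therefore lasts about 23 ms.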

```cpp
// Initializes OpenSL ES on a worker thread
void AudioChannel::initOpenSLES_internal() {
    /**
     * Steps:
     * 1. Create the audio engine
     * 2. Create the output mix
     * 3. Create the audio player
     * 4. Set up the buffer queue and register its callback
     * 5. Set the play state
     * 6. Kick off the callback
     */
    // Engine interface
    SLEngineItf engineInterface = NULL;
    // Engine object
    SLObjectItf engineObject = NULL;
    // Output mix object
    SLObjectItf outputMixObject = NULL;
    // Player object
    SLObjectItf bqPlayerObject = NULL;
    // Play interface (controls the play state)
    SLPlayItf bqPlayerInterface = NULL;
    // Buffer queue interface
    SLAndroidSimpleBufferQueueItf bqPlayerBufferQueue = NULL;

    SLresult result;
    // 1. Create and realize the engine
    result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    // Get the engine interface
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineInterface);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    // 2. Create and realize the output mix
    result = (*engineInterface)->CreateOutputMix(engineInterface, &outputMixObject, 0, 0, 0);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    // 3. Data source: an Android simple buffer queue with 2 buffers, holding PCM in this format.
    // The format must match the resampler's output (out_sample_rate, out_channel_nb, out_samplesize).
    SLDataLocator_AndroidSimpleBufferQueue slDataLocatorAndroidSimpleBufferQueue
            = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
    SLDataFormat_PCM pcm = {
            SL_DATAFORMAT_PCM,                              // PCM data
            2,                                              // 2 channels (stereo)
            SL_SAMPLINGRATE_44_1,                           // 44100 Hz (constant is in milliHertz)
            SL_PCMSAMPLEFORMAT_FIXED_16,                    // 16 bits per sample
            SL_PCMSAMPLEFORMAT_FIXED_16,                    // container size: 16 bits
            SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT, // stereo (front left + front right)
            SL_BYTEORDER_LITTLEENDIAN                       // little-endian
    };
    SLDataSource slDataSource = {&slDataLocatorAndroidSimpleBufferQueue, &pcm};
    // Data sink: the output mix
    SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
    SLDataSink audioSnk = {&loc_outmix, NULL};
    // Interfaces the player must expose
    const SLInterfaceID ids[1] = {SL_IID_BUFFERQUEUE};
    const SLboolean req[1] = {SL_BOOLEAN_TRUE};
    // Create the player
    result = (*engineInterface)->CreateAudioPlayer(
            engineInterface, // engine interface
            &bqPlayerObject, // receives the player object
            &slDataSource,   // data source: buffer queue + PCM format
            &audioSnk,       // data sink: the output mix
            1,               // number of interfaces requested
            ids,             // IDs of the requested interfaces
            req              // whether each interface is required
    );
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    // Realize the player
    result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return;
    }
    // Get the play interface
    (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerInterface);
    // 4. Get the buffer queue interface and register the callback
    (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE, &bqPlayerBufferQueue);
    (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue, bqPlayerCallback, this);
    // 5. Start playing
    (*bqPlayerInterface)->SetPlayState(bqPlayerInterface, SL_PLAYSTATE_PLAYING);
    // 6. Call the callback once by hand to enqueue the first buffer; from then on
    //    OpenSL ES calls it each time a buffer finishes playing
    bqPlayerCallback(bqPlayerBufferQueue, this);
}
```

Audio Packet -> Frame

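```cpp
// Runs on a worker thread: decodes Packets into Frames
// (consumer of packetQueue, producer of frameQueue)
void AudioChannel::codec_internal() {
    AVPacket *avPacket = 0;
    while (isPlaying) {
        int ret = packetQueue.pop(avPacket);
        if (!isPlaying) {
            break;
        }
        if (!ret) {
            continue;
        }
        // Hand the packet to the decoder. Because all pending frames are drained after every
        // send, avcodec_send_packet should not return EAGAIN here; treat any error as fatal.
        ret = avcodec_send_packet(codecContext, avPacket);
        releaseAvPacket(avPacket);
        if (ret < 0) {
            break;
        }
        // One audio packet can yield several frames: receive until EAGAIN
        while (isPlaying) {
            AVFrame *avFrame = av_frame_alloc();
            ret = avcodec_receive_frame(codecContext, avFrame);
            if (ret < 0) {
                // EAGAIN: the decoder needs more input; anything else: EOF or error
                av_frame_free(&avFrame);
                break;
            }
            frameQueue.push(avFrame);
            // Back-pressure: wait while the frame queue is full
            while (frameQueue.size() > QUEUE_MAX_SIZE && isPlaying) {
                av_usleep(THREAD_SLEEP_TIME);
            }
        }
        if (ret < 0 && ret != AVERROR(EAGAIN)) {
            break;
        }
    }
    releaseAvPacket(avPacket);
}
```

Audio Frame -> raw PCM data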
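`getBufferSize()` first bounds how many output samples `swr_convert` may produce. The resampler may still hold samples from earlier calls; `swr_get_delay` with the input sample rate as base reports them in input samples. That count plus this frame's samples is rescaled from the input rate to the output rate and rounded up, which is what `av_rescale_rnd(..., AV_ROUND_UP)` does. The byte count is then samples × channels × bytes per sample.

Here is a sketch of the same arithmetic with hypothetical helper names. Overflow handling is ignored.

```cpp
#include <cassert>
#include <cstdint>

// Round-up rescale of a * b / c for non-negative values, the same rounding
// as av_rescale_rnd(a, b, c, AV_ROUND_UP) (this sketch ignores overflow).
int64_t rescaleUp(int64_t a, int64_t b, int64_t c) {
    return (a * b + c - 1) / c;
}

// Upper bound on output samples per channel for one conversion call:
// samples still buffered in the resampler plus this frame's samples,
// converted from the input rate to the output rate.
int64_t maxOutSamples(int64_t bufferedInSamples, int64_t frameSamples,
                      int64_t inRate, int64_t outRate) {
    return rescaleUp(bufferedInSamples + frameSamples, outRate, inRate);
}

// Bytes of interleaved PCM for `samples` samples per channel.
int64_t pcmBytes(int64_t samples, int channels, int bytesPerSample) {
    return samples * channels * bytesPerSample;
}
```

For example, a 1024-sample frame at 48 kHz resampled to 44.1 kHz yields at most 941 samples per channel. As 16-bit stereo, that is 3764 bytes, so `out_buffer` must be at least that large.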

```cpp
// Takes one frame from frameQueue, resamples it into out_buffer and returns the byte count
int AudioChannel::getBufferSize() {
    AVFrame *avFrame = 0;
    int out_buffer_size = 0;
    while (isPlaying) {
        int ret = frameQueue.pop(avFrame);
        if (!isPlaying) {
            break;
        }
        if (!ret) {
            continue;
        }
        // Wait while paused (but do not hang if playback is stopped meanwhile)
        while (isPause && isPlaying) {
            av_usleep(THREAD_SLEEP_TIME);
        }
        // Upper bound on output samples: samples still buffered in the resampler plus this
        // frame's samples, converted to the output rate and rounded up
        int64_t dst_nb_samples = av_rescale_rnd(
                swr_get_delay(swrContext, avFrame->sample_rate) + avFrame->nb_samples,
                out_sample_rate,
                avFrame->sample_rate,
                AV_ROUND_UP);
        // Convert the frame to the unified output format
        // (out_buffer must hold dst_nb_samples samples per channel)
        int nb = swr_convert(swrContext, &out_buffer, dst_nb_samples,
                             (const uint8_t **) avFrame->data,
                             avFrame->nb_samples);
        // Actual size of the converted data, in bytes
        out_buffer_size = nb * out_channel_nb * out_samplesize;
        // Audio clock: presentation time of this frame, in seconds
        clock = avFrame->pts * av_q2d(time_base);
        break;
    }
    if (javaCallHelper) {
        javaCallHelper->onProgress(THREAD_CHILD, clock);
    }
    releaseAvFrame(avFrame);
    return out_buffer_size;
}
```

Rendering audio with OpenSL ES
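The OpenSL ES buffer queue works on a pull model. Each time an enqueued buffer finishes playing, the registered callback runs and must enqueue the next chunk. If it enqueues nothing, no further callbacks arrive and playback stops. Nothing is playing at first, so the first callback has to be triggered by hand, as `initOpenSLES_internal()` does.

The sketch below simulates this with a hypothetical `FakeBufferQueue` and `FakeAudioSource` in place of OpenSL ES and the decoder. These are not OpenSL ES APIs.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for an Android simple buffer queue: each enqueued buffer is
// "played", and when it finishes the registered callback runs.
class FakeBufferQueue {
public:
    using Callback = std::function<void(FakeBufferQueue &)>;
    void registerCallback(Callback cb) { callback_ = std::move(cb); }
    void enqueue(std::vector<char> buffer) { pending_.push(std::move(buffer)); }
    // Play queued buffers until none are left, invoking the callback after each one.
    int run() {
        int played = 0;
        while (!pending_.empty()) {
            pending_.pop();
            ++played;
            if (callback_) callback_(*this);
        }
        return played;
    }

private:
    std::queue<std::vector<char>> pending_;
    Callback callback_;
};

// Hypothetical PCM source: size of the next chunk, or 0 when exhausted (like getBufferSize()).
struct FakeAudioSource {
    int remaining;
    int nextChunk() { return remaining-- > 0 ? 4096 : 0; }
};

// Plays everything from `source`; `prime` mimics calling the callback once by hand.
int playAll(FakeAudioSource &source, bool prime) {
    FakeBufferQueue bq;
    auto callback = [&source](FakeBufferQueue &q) {
        int length = source.nextChunk();
        if (length > 0) q.enqueue(std::vector<char>(length));
    };
    bq.registerCallback(callback);
    if (prime) callback(bq);
    return bq.run();
}
```

With priming, all five chunks are played. Without it, the callback never fires and nothing plays.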

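```cpp
// Callback registered on bqPlayerBufferQueue; OpenSL ES calls it each time a buffer finishes playing
void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    AudioChannel *audioChannel = static_cast<AudioChannel *>(context);
    int length = audioChannel->getBufferSize();
    if (length > 0) {
        // Enqueue the next chunk of PCM; if nothing is enqueued, the callback chain stops
        (*bq)->Enqueue(bq, audioChannel->out_buffer, length);
    }
}
```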