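Training YOLO on your own data and testing it (C++ version)

1. Download the YOLO project:

https://github.com/AlexeyAB/darknet (C++ version)

2. Configure the C++ version of YOLO for multiple GPUs

Edit the Makefile as follows: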

GPU=1

CUDNN=1

CUDNN_HALF=1

OPENCV=1

AVX=0

OPENMP=0

LIBSO=1

DEBUG=1

3. Compile the C++ version

Bug 1:

/bin/sh: 1: nvcc: not found

Makefile:88: recipe for target 'obj/convolutional_kernels.o' failed

make: *** [obj/convolutional_kernels.o] Error 127

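Cause: nvcc is not installed, or the CUDA environment variables have not taken effect.

Solution: add the CUDA environment variables to /etc/profile, and remember to run source /etc/profile afterwards.

Reference: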

https://blog.csdn.net/chengyq116/article/details/80552163

Bug 2:

Error: Assertion failed ((flags & FIXED_TYPE) != 0)

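Cause: OpenCV version mismatch.

Solution: building the C++ version of YOLO requires OpenCV 3.4.0 or earlier. If another OpenCV version is already installed, you only need to build the required version separately. Then, before running training or any other command, run export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/data/opencv-3.4.0/build/lib. Do not run these commands with sudo, because sudo does not pass LD_LIBRARY_PATH on to the command.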

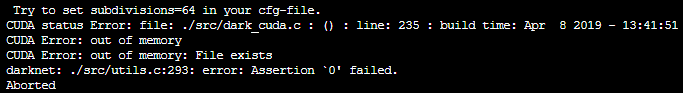

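Bug 3:

error while loading shared libraries: libopencv_highgui.so.3.4

Cause: after OpenCV was installed, its library path was not added to the environment variables.

Solution: add the OpenCV environment variables.

Reference:

https://blog.csdn.net/u013066730/article/details/79411767 (start from step 9)

Bug 4:

Cause: insufficient GPU memory.

Solution: set subdivisions=64 in the .cfg file.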
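
Why subdivisions helps: darknet divides the batch value in the [net] section of the .cfg by subdivisions (integer division) and pushes only that many images through the network per forward/backward pass, accumulating gradients over subdivisions passes before updating the weights. With batch=64, for example, subdivisions=16 means 4 images per pass and subdivisions=64 means 1, so the GPU memory needed for activations shrinks roughly in proportion. A small sketch of that arithmetic; the function name images_per_pass is hypothetical:

```cpp
#include <stdexcept>

// Images darknet processes in one forward/backward pass: the cfg's batch divided
// (integer division) by subdivisions. The weights are updated once every
// `subdivisions` passes, i.e. once per `batch` images.
int images_per_pass(int batch, int subdivisions) {
    if (batch <= 0 || subdivisions <= 0)
        throw std::invalid_argument("batch and subdivisions must be positive");
    return batch / subdivisions;
}
```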

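4. Label your own data and train

Train: ./darknet detector train data/obj.data data/yolov3-tiny_test.cfg yolov3-tiny.conv.15 -gpus 0,1,2 (-gpus 0,1,2 trains on GPUs 0, 1 and 2; yolov3-tiny.conv.15 holds the pre-trained weights of the first 15 layers)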
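
The training command expects every image to have a .txt label file with the same name, containing one line per object of the form `<object-class> <x_center> <y_center> <width> <height>`, where the four coordinates are relative to the image width and height. If your annotations are pixel corner coordinates (for example exported from another labeling tool), they need converting. A minimal sketch; the function to_yolo_label is hypothetical:

```cpp
#include <cstdio>
#include <stdexcept>
#include <string>

// Hypothetical helper: turn a pixel box (x_min, y_min)-(x_max, y_max) on an
// img_w x img_h image into one line of a darknet label file:
//   <class_id> <x_center> <y_center> <width> <height>
// with all four coordinates relative to the image size (0..1).
std::string to_yolo_label(int class_id, double x_min, double y_min, double x_max, double y_max,
                          int img_w, int img_h) {
    if (img_w <= 0 || img_h <= 0) throw std::invalid_argument("image size must be positive");
    const double xc = (x_min + x_max) / 2.0 / img_w;
    const double yc = (y_min + y_max) / 2.0 / img_h;
    const double w = (x_max - x_min) / img_w;
    const double h = (y_max - y_min) / img_h;
    char buf[128];
    std::snprintf(buf, sizeof buf, "%d %.6f %.6f %.6f %.6f", class_id, xc, yc, w, h);
    return std::string(buf);
}
```

For example, a class-0 box from (100, 50) to (300, 150) on a 400×200 image becomes `0 0.500000 0.500000 0.500000 0.500000`.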

Compute anchors: ./darknet detector calc_anchors data/obj.data -num_of_clusters 6 -width 416 -height 416 (6 clusters because yolov3-tiny uses 6 anchors)

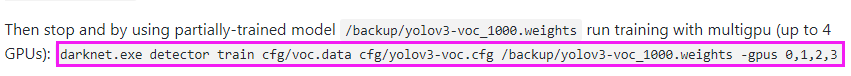
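
calc_anchors clusters the widths and heights of all labeled boxes (scaled to -width × -height, here 416×416) into -num_of_clusters groups and prints the cluster centres as anchors. The sketch below shows the idea with plain k-means using 1 - IoU as the distance, the metric proposed in the YOLOv2 paper; it is an illustration under that assumption, not a copy of calc_anchors' internals, and it seeds the centroids with the first k boxes to stay deterministic. The names WH, iou_wh and kmeans_anchors are hypothetical:

```cpp
#include <algorithm>
#include <cstddef>
#include <stdexcept>
#include <vector>

struct WH { double w, h; };  // box width and height in network-input pixels

// IoU of two boxes placed at the same centre: only their sizes matter.
double iou_wh(const WH& a, const WH& b) {
    double inter = std::min(a.w, b.w) * std::min(a.h, b.h);
    return inter / (a.w * a.h + b.w * b.h - inter);
}

// k-means on (w, h) with distance 1 - IoU; seeds are simply the first k boxes.
std::vector<WH> kmeans_anchors(const std::vector<WH>& boxes, std::size_t k, int max_iter = 100) {
    if (k == 0 || boxes.size() < k) throw std::invalid_argument("need at least k boxes");
    std::vector<WH> centroids(boxes.begin(), boxes.begin() + static_cast<std::ptrdiff_t>(k));
    std::vector<std::size_t> assign(boxes.size(), k);  // k marks "not assigned yet"
    for (int it = 0; it < max_iter; ++it) {
        bool changed = false;
        // Assignment: each box joins the centroid it overlaps most (smallest 1 - IoU).
        for (std::size_t i = 0; i < boxes.size(); ++i) {
            std::size_t best = 0;
            for (std::size_t j = 1; j < k; ++j)
                if (iou_wh(boxes[i], centroids[j]) > iou_wh(boxes[i], centroids[best])) best = j;
            if (best != assign[i]) { assign[i] = best; changed = true; }
        }
        if (!changed) break;
        // Update: each centroid moves to the mean width/height of its members.
        std::vector<WH> sum(k, WH{0.0, 0.0});
        std::vector<std::size_t> count(k, 0);
        for (std::size_t i = 0; i < boxes.size(); ++i) {
            sum[assign[i]].w += boxes[i].w;
            sum[assign[i]].h += boxes[i].h;
            ++count[assign[i]];
        }
        for (std::size_t j = 0; j < k; ++j)
            if (count[j] > 0) centroids[j] = WH{sum[j].w / count[j], sum[j].h / count[j]};
    }
    // The stock yolov3 cfg files list anchors from small to large, so sort by area.
    std::sort(centroids.begin(), centroids.end(),
              [](const WH& a, const WH& b) { return a.w * a.h < b.w * b.h; });
    return centroids;
}
```

With boxes 10×10, 12×12, 100×100 and 110×90 and k = 2, the sketch converges to anchors 11×11 and 105×95.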

Resume training from a checkpoint: ./darknet detector train data/obj.data data/yolov3-tiny_test.cfg backup/yolov3-tiny_train_1000.weights -gpus 0,1,2

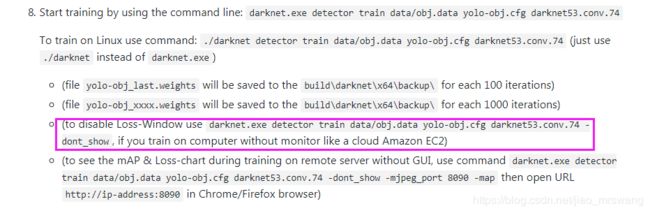

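To train without displaying the loss chart, use the command in the red box (in AlexeyAB's darknet this is done by adding the -dont_show flag).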
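
During training, darknet writes numbered checkpoints such as backup/yolov3-tiny_train_1000.weights, and resuming means passing the newest one in place of the pre-trained weights. When many have piled up, the newest numbered file can be picked programmatically. A minimal sketch, assuming the `<name>_<iterations>.weights` naming seen in the resume command; latest_checkpoint is a hypothetical helper, and a _last.weights file, if your build writes one, may be newer still but is deliberately ignored here:

```cpp
#include <cctype>
#include <string>
#include <vector>

// Hypothetical helper: among names like "backup/yolov3-tiny_train_1000.weights", return
// the one with the largest iteration number (the digits between the last '_' and
// ".weights"). Names without such a number, e.g. "..._final.weights", are skipped.
// Returns "" if nothing matches.
std::string latest_checkpoint(const std::vector<std::string>& names) {
    const std::string ext = ".weights";
    std::string best;
    long long best_iter = -1;
    for (const std::string& name : names) {
        if (name.size() <= ext.size() ||
            name.compare(name.size() - ext.size(), ext.size(), ext) != 0)
            continue;  // not a .weights file
        const std::string stem = name.substr(0, name.size() - ext.size());
        const std::size_t us = stem.rfind('_');
        if (us == std::string::npos || us + 1 == stem.size()) continue;
        const std::string digits = stem.substr(us + 1);
        bool numeric = digits.size() <= 18;  // keeps std::stoll in range
        for (char ch : digits)
            if (!std::isdigit(static_cast<unsigned char>(ch))) numeric = false;
        if (!numeric) continue;
        const long long iter = std::stoll(digits);
        if (iter > best_iter) { best_iter = iter; best = name; }
    }
    return best;
}
```

Given the _1000, _2000 and _3000 checkpoints plus yolov3-tiny_train_final.weights, it returns the _3000 file.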

5. Test on your own data

Test a single image: ./darknet detector test data/obj.data data/yolov3-tiny_test.cfg backup/yolov3-tiny_final.weights -i 0 -thresh 0.25 /data/0.jpg (-i 0 selects GPU 0; -thresh sets the confidence threshold, default 0.25)

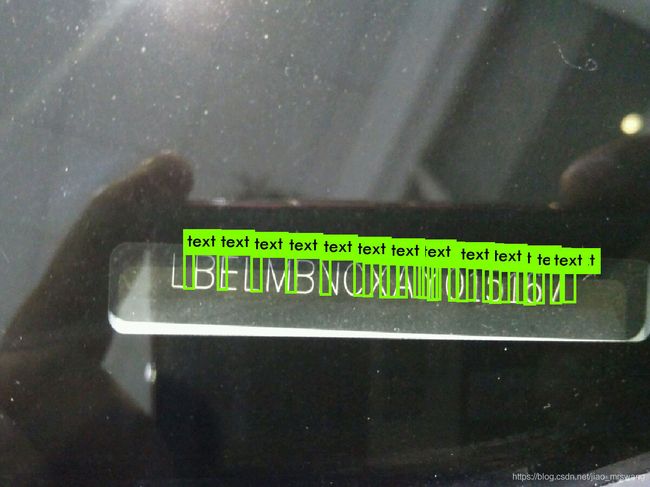

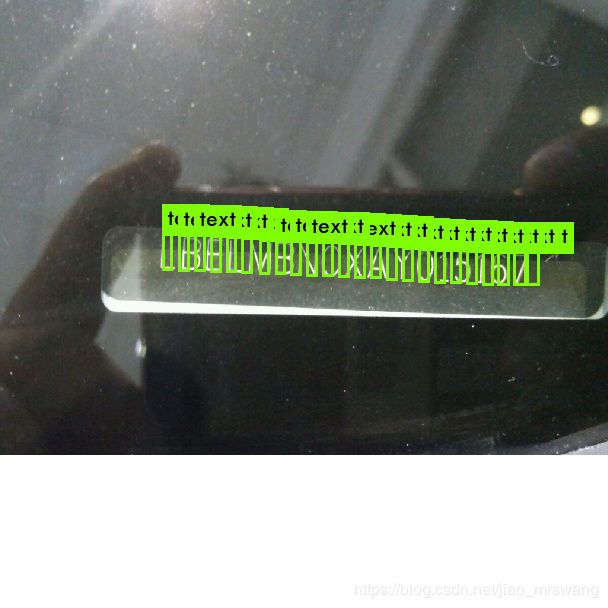
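
What -thresh does, in outline: after non-maximum suppression, each detected box carries one probability per class, and a class label is drawn only when its probability exceeds the threshold, so lowering -thresh (for example to 0.01 as in section 12) shows many more, less certain boxes. A sketch of that filter, assuming a strict greater-than comparison; Detection, Label and apply_threshold are hypothetical names, not darknet's own structures:

```cpp
#include <cstddef>
#include <vector>

struct Detection {
    float x, y, w, h;         // box centre and size, relative to the image
    std::vector<float> prob;  // one probability per class
};

struct Label {
    std::size_t det_index;  // which detection the label belongs to
    int class_id;
    float prob;
};

// Report a (detection, class) pair only when its probability is above thresh,
// so a single box can carry several labels. NMS is assumed to have run already.
std::vector<Label> apply_threshold(const std::vector<Detection>& dets, float thresh = 0.25f) {
    std::vector<Label> out;
    for (std::size_t i = 0; i < dets.size(); ++i)
        for (std::size_t c = 0; c < dets[i].prob.size(); ++c)
            if (dets[i].prob[c] > thresh)
                out.push_back(Label{i, static_cast<int>(c), dets[i].prob[c]});
    return out;
}
```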

Batch-test all images in a folder: ./darknet detector test data/obj.data data/yolov3-tiny_test.cfg backup/yolov3-tiny_final.weights -i 0 -thresh 0.25

Compute mAP: ./darknet detector map data/obj.data data/yolov3-tiny_test.cfg backup/yolov3-tiny_final.weights

6. Training on grayscale images

https://blog.csdn.net/kbdavid/article/details/81533096

7. cuDNN download:

https://developer.nvidia.com/rdp/cudnn-download

8. Installing OpenCV:

https://blog.csdn.net/weixin_42652125/article/details/81238508 (install all the dependencies when installing OpenCV; otherwise cmake reports configuration errors)

Notes on installing OpenCV:

1. Ubuntu 18.04 requires libjasper-dev. References:

https://blog.csdn.net/weixin_41053564/article/details/81254410

https://blog.csdn.net/qq_24878901/article/details/82382895

2. Building OpenCV with CUDA 10 may fail with the following error:

Solution: change the cmake command to: cmake -D CMAKE_BUILD_TYPE=Release -D BUILD_opencv_cudacodec=OFF -D CMAKE_INSTALL_PREFIX=/usr/local ..

References:

https://www.cnblogs.com/rabbull/p/11154997.html

https://blog.csdn.net/u012370185/article/details/86364091

9. Why the yolov3-tiny loss never converged during training: the training data contained errors.

10. When deploying the YOLO model with OpenCV under VS2015 on the CPU, set net.setPreferableTarget to the CPU:

    Net net = readNetFromDarknet(modelConfiguration, modelWeights);
    net.setPreferableBackend(DNN_BACKEND_OPENCV);
    net.setPreferableTarget(DNN_TARGET_CPU);

11. Building and running the CPU version of darknet on Windows

My OpenCV project properties are configured as shown in the figure below.

Note: do not remove pthreadVC2.lib from the linker inputs, otherwise the build fails!

https://blog.csdn.net/tigerda/article/details/73226441

12. Modifying test_detector() in detector.c to batch-test and save the result images

    void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh,
        float hier_thresh, int dont_show, int ext_output, int save_labels, char *outfile)

Modify the parts of this function underlined in red. The test command used is: darknet_no_gpu.exe detector test fpdm/CTPN.data fpdm/yolov3-tiny_jiao_test.cfg fpdm/yolov3-tiny_jiao_30000.weights -i 0 -thresh 0.01

Note: the key to saving the batch-test results is changing the path that save_image() writes each image to!

13. Understanding the hyperparameters

https://blog.csdn.net/julialove102123/article/details/78436644

14. How YOLO resizes images during training:

Testing directly on arbitrary original images does not give good results.

Improvement: if the width and height in the test cfg file are set to 608×608, first scale the original image so that its longer side becomes 608 and the shorter side is scaled by the same factor (keeping the original aspect ratio), then run detection.

Example:

Original image

Detection result without the improvement

Image rescaled with the scheme above:

Detection result on the rescaled image:

Comparing the two, the second is clearly better. I do not know why; suggestions are welcome!
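
The arithmetic behind the improvement in section 14 can be checked without OpenCV. A minimal sketch, assuming a square network input and padding only on the right or at the bottom, as the author's pad_img() does; the names Letterbox, compute_letterbox and to_original are hypothetical, and sizes are rounded rather than truncated:

```cpp
#include <algorithm>
#include <cmath>
#include <stdexcept>

// Geometry of the section-14 preprocessing for a square network input.
struct Letterbox {
    double scale;      // factor applied to the original image
    int new_w, new_h;  // size after resizing, before padding
    int pad_w, pad_h;  // padding appended on the right and at the bottom
};

// Scale so the longer side equals `target`, keep the aspect ratio, pad the shorter side.
Letterbox compute_letterbox(int img_w, int img_h, int target = 608) {
    if (img_w <= 0 || img_h <= 0) throw std::invalid_argument("image size must be positive");
    const double scale = static_cast<double>(target) / std::max(img_w, img_h);
    const int new_w = static_cast<int>(std::lround(img_w * scale));
    const int new_h = static_cast<int>(std::lround(img_h * scale));
    return Letterbox{scale, new_w, new_h, target - new_w, target - new_h};
}

// Map a point on the padded image back to original-image pixels. The padding only
// extends right and down, so no offset has to be subtracted before dividing.
void to_original(const Letterbox& lb, double x, double y, double& ox, double& oy) {
    ox = x / lb.scale;
    oy = y / lb.scale;
}
```

For a 1216×608 image and a 608 target, the scale is 0.5, the image becomes 608×304 and 304 rows of padding are added at the bottom; a point at (118, 229) on the padded image maps back to (236, 458).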

#include

#include

#include

#include

#include

#include

#define FLODER "E:\\样本库\\卷票\\3月\\"

;

using namespace cv;

using namespace std;

void pad_img(Mat& img, int width = 40, int height = 32, bool horizontal = true);

Mat pad_img_to_square(Mat img);

float resize_img(Mat src, Mat& dst,int target_size = 608);

int main()

{

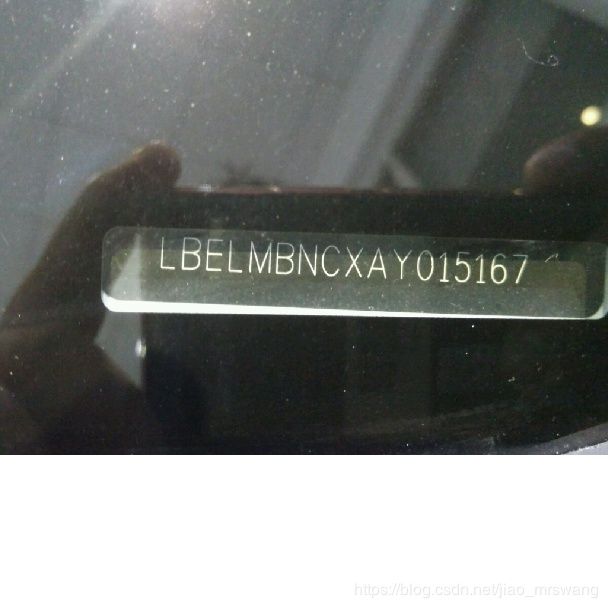

Mat src=imread("D:\\OpenCV\\VIN_all\\result_comper\\LBELMBNCXAY015167.jpg");

Mat dst;

float scale= resize_img(src, dst,608);

cout << "main_scale:" << scale << endl;

line(dst, Point(118,229), Point(555, 203), Scalar(190, 90, 90), 2);

line(dst, Point(555, 203), Point(555, 238), Scalar(190, 90, 90), 2);

line(dst, Point(555, 238), Point(118, 263), Scalar(190, 90, 90), 2);

line(dst, Point(118, 263), Point(118, 229), Scalar(190, 90, 90), 2);

int x1 = 118 / scale;

int y1 = 229 / scale;

int x2 = 555 / scale;

int y2 = 203 / scale;

int x3 = 555 / scale;

int y3 = 238 / scale;

int x4 = 118 / scale;

int y4 = 263 / scale;

line(src, Point(x1, y1), Point(x2, y2), Scalar(190, 90, 90), 2);

line(src, Point(x2, y2), Point(x3, y3), Scalar(190, 90, 90), 2);

line(src, Point(x3, y3), Point(x4, y4), Scalar(190, 90, 90), 2);

line(src, Point(x4, y4), Point(x1, y1), Scalar(190, 90, 90), 2);

//line(src, Point(300, 300), Point(758, 300), Scalar(89, 90, 90), 3);

imshow("src", src);

imshow("i", dst);

imwrite("D:\\OpenCV\\VIN_all\\result_comper\\resize_padding\\LBELMBNCXAY015167.jpg",dst);

waitKey(0);

}

return 0;

}

float resize_img(Mat src,Mat& dst,int target_size)

{

Mat ResImg;

int width = src.cols;

int height = src.rows;

int mx = max(height, width);

float scale = target_size / (mx*1.0);

cout << "scale:" << scale << endl;

Size new_size = Size(int(scale*width), int(scale*height));

resize(src, ResImg, new_size, INTER_CUBIC);

dst=pad_img_to_square(ResImg);

return scale;

}

{

bool horizontal = true;

int len_shortage = 0;

int width = img.cols;

int height = img.rows;

if (width < height) //如果宽小于高则高不变进行横向扩充,否则宽不变进行纵向扩充

{

horizontal = true;

len_shortage = height-width;

pad_img(img,len_shortage,height,horizontal);

}

else

{

horizontal = false;

len_shortage = width-height;

pad_img(img, width, len_shortage, horizontal);

}

return img;

}

void pad_img(Mat& img, int width, int height, bool horizontal) {

/*cout << img.dims;*/

assert(img.dims == 2);

if (horizontal) {

height = img.size[0];

cv::hconcat(img, padding, img);

}

else {

width = img.size[1];

Mat padding(height, width, CV_8UC3, cv::Scalar(255, 255, 255));

cv::vconcat(img, padding, img);

}

}