
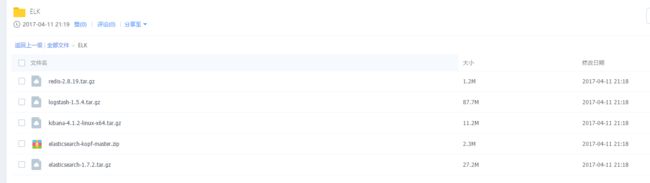

Software packages: http://pan.baidu.com/s/1eSGGIGE

Outline:

I. Introduction

II. Logstash

III. Redis

IV. Elasticsearch

V. Kibana

I. Introduction

1. Core components

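ELK consists of three components: Elasticsearch, Logstash, and Kibana.

Elasticsearch is an open-source, distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and parses your logs and stores them for later use.

Kibana is a free, open-source tool that provides a friendly web interface for the logs handled by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.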

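2. The four components

Logstash: the Logstash server side, which collects logs;

Elasticsearch: stores all kinds of logs;

Kibana: a web interface for querying and visualizing logs;

Logstash Forwarder: the Logstash client side, which sends logs to the Logstash server over the lumberjack network protocol.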

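3. ELK workflow

Deploy Logstash on every server whose logs you want to collect. Running as a Logstash agent (the Logstash shipper), it watches, filters, and collects the logs and sends the filtered events to Redis. A Logstash indexer then gathers the logs from Redis and hands them to Elasticsearch, the full-text search service, where you can run custom searches; Kibana presents the results of those searches as web pages.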
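The workflow above can be modeled as a toy, in-memory Python sketch. It is an illustration under stated assumptions, not Logstash code: a `deque` stands in for the Redis list, a dict of lists stands in for Elasticsearch indices, and `ship` and `index_all` are hypothetical names for the shipper and indexer roles.

```python
import json
from collections import deque
from datetime import datetime, timezone

# Hypothetical stand-ins: a deque plays the Redis list, a dict plays Elasticsearch.
broker = deque()
store = {}


def ship(message, host, now):
    """Shipper: wrap a log line as an event and append it to the broker (like RPUSH).
    `now` is assumed to be a UTC datetime."""
    stamp = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    event = {"message": message, "@version": "1", "@timestamp": stamp, "host": host}
    broker.append(json.dumps(event))


def index_all():
    """Indexer: take events from the head of the broker (like LPOP) and store each
    one in a daily index named after the UTC date in its @timestamp."""
    while broker:
        event = json.loads(broker.popleft())
        day = event["@timestamp"][:10].replace("-", ".")
        store.setdefault("logstash-" + day, []).append(event)


ship("hello", "localhost.localdomain",
     datetime(2017, 4, 11, 2, 14, 57, 200000, tzinfo=timezone.utc))
index_all()
print(sorted(store))  # ['logstash-2017.04.11']
```

Decoupling the two sides through a list is the point of the broker: shippers can keep pushing while the indexer or Elasticsearch is slow or down, and events wait in Redis until they are consumed.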

4. ELK documentation

Official ELK site: https://www.elastic.co/

Official ELK documentation: https://www.elastic.co/guide/index.html

ELK handbook (Chinese): http://kibana.logstash.es/content/elasticsearch/monitor/logging.html

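Notes

There are two ways to install ELK:

(1) Integrated environment: Logstash offers an all-in-one package that bundles all three components, so you install just that one package.

(2) Standalone environment: the three components are installed and run separately, each doing its own job. (This is the more common approach.)

This walkthrough uses the second, standalone approach on a single host: 172.16.20.222 / CentOS Linux release 7.2.1511 (Core).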

II. Logstash

1. Install the JDK

cd /etc

vi profile    # append the export lines below to /etc/profile

export JAVA_HOME=/opt/jdk1.8.0_77

export JRE_HOME=$JAVA_HOME/jre

export LANG=zh_CN

export NLS_LANG="AMERICAN_AMERICA.ZHS16GBK"    # Oracle client setting from the author's environment; not needed by ELK

export PATH=${JAVA_HOME}/bin:${PATH}

export CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

source profile

2. Install Logstash

# wget https://download.elastic.co/logstash/logstash/logstash-1.5.4.tar.gz

# tar zxf logstash-1.5.4.tar.gz -C /usr/local/

Add Logstash to the PATH:

# echo "export PATH=\$PATH:/usr/local/logstash-1.5.4/bin" > /etc/profile.d/logstash.sh

# . /etc/profile

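3. Common Logstash options

-e: specifies the Logstash configuration directly on the command line; handy for quick tests;

-f: specifies a Logstash configuration file; suitable for production.

4. Start Logstash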

4.1 Use -e to pass the configuration inline for a quick test, printing events straight to the screen.

[root@localhost ELK]# logstash -e "input {stdin{}} output {stdout{}}"

Logstash startup completed

my name is yizhichao

2017-04-11T01:37:36.549Z localhost.localdomain my name is yizhichao

2017-04-11T01:37:38.259Z localhost.localdomain

^CSIGINT received. Shutting down the pipeline. {:level=>:warn}

Logstash shutdown completed

4.2 Use -e again for a quick test, this time printing each event to the screen in a structured, JSON-like format.

[root@localhost ELK]# logstash -e 'input{stdin{}}output{stdout{codec=>rubydebug}}'

Logstash startup completed

my name is yizhichao

{

"message" => "my name is yizhichao",

"@version" => "1",

"@timestamp" => "2017-04-11T01:38:04.860Z",

"host" => "localhost.localdomain"

}

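Each event is printed as a structured, JSON-like record (the rubydebug codec uses Ruby hash syntax, hence the => separators).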
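The four fields above (message, @version, @timestamp, host) make up a basic Logstash event. The Redis output used in section III sends each event as one compact JSON line, as the monitor output in step 6.4 shows. A minimal Python sketch of that serialization; `to_json_line` is a hypothetical helper, not part of Logstash, and the field order simply mirrors the order printed here:

```python
import json


def to_json_line(message, timestamp, host):
    """Build a Logstash-style event dict and serialize it compactly (no spaces),
    matching the shape of the payload seen in the Redis monitor output."""
    event = {
        "message": message,
        "@version": "1",
        "@timestamp": timestamp,
        "host": host,
    }
    # separators=(",", ":") removes the spaces json.dumps adds by default.
    return json.dumps(event, separators=(",", ":"))


line = to_json_line("hello", "2017-04-11T02:14:57.200Z", "localhost.localdomain")
print(line)
# {"message":"hello","@version":"1","@timestamp":"2017-04-11T02:14:57.200Z","host":"localhost.localdomain"}
```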

5. Start Logstash with a configuration file

5.1 Print events to the screen

[root@localhost logstash-1.5.4]# vi logstash-simple.conf

input { stdin {} }

output {

stdout { codec=> rubydebug }

}


[root@localhost logstash-1.5.4]# logstash -f logstash-simple.conf

Logstash startup completed

{

"message" => "",

"@version" => "1",

"@timestamp" => "2017-04-11T01:43:20.473Z",

"host" => "localhost.localdomain"

}

^CSIGINT received. Shutting down the pipeline. {:level=>:warn}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

[root@localhost logstash-1.5.4]#


[root@localhost logstash-1.5.4]# logstash agent -f logstash-simple.conf --verbose    # --verbose turns on verbose logging

Pipeline started {:level=>:info}

Logstash startup completed

yizhichao

{

"message" => "yizhichao",

"@version" => "1",

"@timestamp" => "2017-04-11T01:44:08.805Z",

"host" => "localhost.localdomain"

}

^CSIGINT received. Shutting down the pipeline. {:level=>:warn}

Sending shutdown signal to input thread {:thread=>#, :level=>:info}

Plugin is finished {:plugin=>false, codec=>"UTF-8">>, :level=>:info}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

^C^C

The result is the same as passing the configuration on the command line with -e.

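5.2 Store Logstash output in a Redis database

So far the events were printed to the screen; now we send Logstash's output to a Redis database instead.

This requires a Redis server on the local host (172.16.20.222), which is installed in section III (the contents of logstash_to_redis.conf are shown there in step 6.3). Because Redis is not running yet, the attempt below ends with "Failed to send event to Redis".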

[root@localhost logstash-1.5.4]# logstash agent -f logstash_to_redis.conf --verbose    # --verbose turns on verbose logging

Pipeline started {:level=>:info}

Logstash startup completed

{

"message" => "",

"@version" => "1",

"@timestamp" => "2017-04-11T01:55:36.734Z",

"host" => "localhost.localdomain"

}

["INFLIGHT_EVENTS_REPORT", "2017-04-11T09:55:42+08:00", {"input_to_filter"=>1, "filter_to_output"=>1, "outputs"=>[]}] {:level=>:warn}

Failed to send event to Redis

6. Check Logstash's listening ports

[root@localhost logstash-1.5.4]# netstat -tnlp |grep java

tcp6 0 0 :::28080 :::* LISTEN 4852/java

tcp6 0 0 :::8080 :::* LISTEN 4802/java

tcp6 0 0 :::8086 :::* LISTEN 6672/java

tcp6 0 0 :::34105 :::* LISTEN 4802/java

tcp6 0 0 127.0.0.1:8605 :::* LISTEN 6672/java

tcp6 0 0 :::8609 :::* LISTEN 6672/java

tcp6 0 0 127.0.0.1:28005 :::* LISTEN 4852/java

tcp6 0 0 :::2181 :::* LISTEN 4802/java

tcp6 0 0 :::28009 :::* LISTEN 4852/java

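None of these listeners belongs to the Logstash process started above (PID 7457 in the ps output below): a pipeline with only a stdin input and a redis output opens no listening port, so the java listeners shown here belong to other Java applications on this host (port 2181, for example, is ZooKeeper's default). The ps command below still finds Logstash, because its command line contains logstash_to_redis.conf.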

[root@localhost logstash-1.5.4]# ps -ef|grep redis

root 7457 7096 30 09:53 pts/0 00:00:14 /usr/java/jdk1.8.0_112/bin/java -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -Djava.awt.headless=true -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Xmx500m -Xss2048k -Djffi.boot.library.path=/usr/local/logstash-1.5.4/vendor/jruby/lib/jni -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -Djava.awt.headless=true -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Xbootclasspath/a:/usr/local/logstash-1.5.4/vendor/jruby/lib/jruby.jar -classpath :.:/usr/java/jdk1.8.0_112/lib/dt.jar:/usr/java/jdk1.8.0_112/lib/tools.jar:/usr/java/jdk1.8.0_112/jre/lib:.:/usr/java/jdk1.8.0_112/lib/dt.jar:/usr/java/jdk1.8.0_112/lib/tools.jar:/usr/java/jdk1.8.0_112/jre/lib: -Djruby.home=/usr/local/logstash-1.5.4/vendor/jruby -Djruby.lib=/usr/local/logstash-1.5.4/vendor/jruby/lib -Djruby.script=jruby -Djruby.shell=/bin/sh org.jruby.Main --1.9 /usr/local/logstash-1.5.4/lib/bootstrap/environment.rb logstash/runner.rb agent -f logstash_to_redis.conf --verbose //开启debug模式

root 7496 7192 0 09:54 pts/2 00:00:00 grep --color=auto redis

III. Redis

1. Install Redis

wget http://download.redis.io/releases/redis-2.8.19.tar.gz

yum install tcl -y

tar zxf redis-2.8.19.tar.gz

cd redis-2.8.19

make MALLOC=libc

make test    # this step takes a while

[root@localhost redis-2.8.19]# make install

cd src && make install

make[1]: Entering directory '/opt/ELK/redis-2.8.19/src'

Hint: It's a good idea to run 'make test' ;)

INSTALL install

INSTALL install

INSTALL install

INSTALL install

INSTALL install

make[1]: Leaving directory '/opt/ELK/redis-2.8.19/src'

[root@localhost redis-2.8.19]#


[root@localhost redis-2.8.19]# cd utils/

[root@localhost utils]# ./install_server.sh

Welcome to the redis service installer

This script will help you easily set up a running redis server

Please select the redis port for this instance: [6379]

Selecting default: 6379

Please select the redis config file name [/etc/redis/6379.conf]

Selected default - /etc/redis/6379.conf

Please select the redis log file name [/var/log/redis_6379.log]

Selected default - /var/log/redis_6379.log

Please select the data directory for this instance [/var/lib/redis/6379]

Selected default - /var/lib/redis/6379

Please select the redis executable path [/usr/local/bin/redis-server]

Selected config:

Port : 6379

Config file : /etc/redis/6379.conf

Log file : /var/log/redis_6379.log

Data dir : /var/lib/redis/6379

Executable : /usr/local/bin/redis-server

Cli Executable : /usr/local/bin/redis-cli

Is this ok? Then press ENTER to go on or Ctrl-C to abort.

Copied /tmp/6379.conf => /etc/init.d/redis_6379

Installing service...

Successfully added to chkconfig!

Successfully added to runlevels 345!

Starting Redis server...

Installation successful!

2. Check the Redis listening port

[root@localhost utils]# netstat -tnlp |grep redis

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 8183/redis-server *

tcp6 0 0 :::6379 :::* LISTEN 8183/redis-server *

3. Test whether Redis works

[root@localhost redis-2.8.19]# ll

total 140

-rw-rw-r--. 1 root root 30972 Dec 16 2014 00-RELEASENOTES

-rw-rw-r--. 1 root root 53 Dec 16 2014 BUGS

-rw-rw-r--. 1 root root 1439 Dec 16 2014 CONTRIBUTING

-rw-rw-r--. 1 root root 1487 Dec 16 2014 COPYING

drwxrwxr-x. 6 root root 4096 Apr 11 10:04 deps

-rw-rw-r--. 1 root root 11 Dec 16 2014 INSTALL

-rw-rw-r--. 1 root root 151 Dec 16 2014 Makefile

-rw-rw-r--. 1 root root 4223 Dec 16 2014 MANIFESTO

-rw-rw-r--. 1 root root 4404 Dec 16 2014 README

-rw-rw-r--. 1 root root 36141 Dec 16 2014 redis.conf

-rwxrwxr-x. 1 root root 271 Dec 16 2014 runtest

-rwxrwxr-x. 1 root root 281 Dec 16 2014 runtest-sentinel

-rw-rw-r--. 1 root root 7109 Dec 16 2014 sentinel.conf

drwxrwxr-x. 2 root root 4096 Apr 11 10:04 src

drwxrwxr-x. 9 root root 4096 Dec 16 2014 tests

drwxrwxr-x. 3 root root 4096 Dec 16 2014 utils

[root@localhost redis-2.8.19]# cd ..

[root@localhost ELK]# cd redis-2.8.19/src/

[root@localhost src]# ./redis-cli -h 172.16.20.222 -p 6379

172.16.20.222:6379> ping

PONG

172.16.20.222:6379> set name yizhichao

OK

172.16.20.222:6379> get name

"yizhichao"

172.16.20.222:6379> quit

4. The Redis server's startup command (as shown by ps)

[root@localhost src]# ps -ef |grep redis

root 8183 1 0 10:05 ? 00:00:00 /usr/local/bin/redis-server *:6379

root 8194 7192 0 10:10 pts/2 00:00:00 grep --color=auto redis

5. Live monitoring of Redis

# cd redis-2.8.19/src/

# ./redis-cli monitor    # print every command the Redis server receives, as it happens

6. Using Logstash together with Redis

6.1 首先确认redis服务是启动的

[root@localhost src]# netstat -tnlp |grep redis

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 8183/redis-server *

tcp6 0 0 :::6379 :::* LISTEN 8183/redis-server *

6.2 Start live Redis monitoring

[root@localhost src]# pwd

/opt/ELK/redis-2.8.19/src

[root@localhost src]# ./redis-cli monitor

OK

6.3 Start Logstash with a Redis output (in a second terminal, since monitor keeps running in the first)

[root@localhost src]# cd /usr/local/logstash-1.5.4/

[root@localhost logstash-1.5.4]# cat logstash_to_redis.conf

input { stdin { } }

output {

stdout { codec => rubydebug }

redis {

host => '172.16.20.222'

data_type => 'list'

key => 'logstash:redis'

}

}

[root@localhost logstash-1.5.4]#

[root@localhost logstash-1.5.4]# logstash agent -f logstash_to_redis.conf --verbose

Pipeline started {:level=>:info}

Logstash startup completed

hello

{

"message" => "hello",

"@version" => "1",

"@timestamp" => "2017-04-11T02:14:57.200Z",

"host" => "localhost.localdomain"

}

6.4 Check the output in the Redis monitor window

[root@localhost src]# ./redis-cli monitor

OK

1491876897.378987 [0 172.16.20.222:35312] "rpush" "logstash:redis" "{\"message\":\"hello\",\"@version\":\"1\",\"@timestamp\":\"2017-04-11T02:14:57.200Z\",\"host\":\"localhost.localdomain\"}"
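The monitor line shows the Redis output issuing an RPUSH of the JSON event onto the list logstash:redis. On the wire, Redis commands use RESP (the REdis Serialization Protocol): an array header `*<count>`, then each argument as a bulk string `$<byte length>` followed by its bytes, every part ending in CRLF. The sketch below builds that byte sequence with a hypothetical `encode_command` helper; it illustrates the protocol only and is not how Logstash's Redis output is implemented.

```python
def encode_command(*args):
    """Encode a Redis command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8") if isinstance(arg, str) else arg
        # Bulk string: byte length, CRLF, payload, CRLF.
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


payload = ('{"message":"hello","@version":"1",'
           '"@timestamp":"2017-04-11T02:14:57.200Z","host":"localhost.localdomain"}')
wire = encode_command("RPUSH", "logstash:redis", payload)
print(encode_command("PING"))  # b'*1\r\n$4\r\nPING\r\n'
```

For a live check, sending `encode_command("PING")` over a TCP socket to 172.16.20.222:6379 should get the simple-string reply `+PONG\r\n`, the wire form of the PONG seen in step 3.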

IV. Elasticsearch

1. Install Elasticsearch

# wget https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.2.tar.gz

# tar zxf elasticsearch-1.7.2.tar.gz -C /usr/local/

2. Edit the Elasticsearch configuration file elasticsearch.yml and make the following changes:

[root@localhost config]# pwd

/usr/local/elasticsearch-1.7.2/config

[root@localhost config]# vi elasticsearch.yml

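discovery.zen.ping.multicast.enabled: false   # disable multicast discovery; if another machine on the LAN has port 9300 open, the service can fail to start

network.host: 172.16.20.222   # bind address; optional, but set it anyway: without it, the later Kibana integration fails with an HTTP connection error (the visible symptom is apparently Elasticsearch listening on :::9200 rather than 0.0.0.0:9200)

http.cors.allow-origin: "/.*/"

http.cors.enabled: true   # these two CORS settings fix the Kibana integration problem, whose error message claims your Elasticsearch version is too old even though it is not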

3. Start the Elasticsearch service (use any one of the following)

# /usr/local/elasticsearch-1.7.2/bin/elasticsearch   # logs go to stdout

# /usr/local/elasticsearch-1.7.2/bin/elasticsearch -d   # run as a daemon

# nohup /usr/local/elasticsearch-1.7.2/bin/elasticsearch > /var/log/elasticsearch.log 2>&1 &   # run in the background, logging to a file

4. Check Elasticsearch's listening ports

[root@localhost ~]# netstat -tnlp |grep java

tcp6 0 0 172.16.20.222:9200 :::* LISTEN 2122/java

tcp6 0 0 172.16.20.222:9300 :::* LISTEN 2122/java

[root@localhost ~]#

5. Combine Elasticsearch with Logstash

Send Logstash's output to Elasticsearch:

# cat logstash-elasticsearch.conf

input { stdin {} }

output {

elasticsearch { host => "172.16.20.222" }

stdout { codec=> rubydebug }

}

6. Start Logstash with this configuration file

[root@localhost logstash-1.5.4]# /usr/local/logstash-1.5.4/bin/logstash agent -f logstash-elasticsearch.conf

Apr 11, 2017 3:53:04 PM org.elasticsearch.node.internal.InternalNode

INFO: [logstash-localhost.localdomain-2204-11622] version[1.7.0], pid[2204], build[929b973/2015-07-16T14:31:07Z]

Apr 11, 2017 3:53:04 PM org.elasticsearch.node.internal.InternalNode

INFO: [logstash-localhost.localdomain-2204-11622] initializing ...

Apr 11, 2017 3:53:04 PM org.elasticsearch.plugins.PluginsService

INFO: [logstash-localhost.localdomain-2204-11622] loaded [], sites []

Apr 11, 2017 3:53:05 PM org.elasticsearch.bootstrap.Natives

WARNING: JNA not found. native methods will be disabled.

Apr 11, 2017 3:53:06 PM org.elasticsearch.node.internal.InternalNode

INFO: [logstash-localhost.localdomain-2204-11622] initialized

Apr 11, 2017 3:53:06 PM org.elasticsearch.node.internal.InternalNode start

INFO: [logstash-localhost.localdomain-2204-11622] starting ...

Apr 11, 2017 3:53:06 PM org.elasticsearch.transport.TransportService doStart

INFO: [logstash-localhost.localdomain-2204-11622] bound_address {inet[/0:0:0:0:0:0:0:0:9301]}, publish_address {inet[/172.16.20.222:9301]}

Apr 11, 2017 3:53:06 PM org.elasticsearch.discovery.DiscoveryService doStart

INFO: [logstash-localhost.localdomain-2204-11622] elasticsearch/EjWOM6aMTUutz4yZ8HFagQ

Apr 11, 2017 3:53:09 PM org.elasticsearch.cluster.service.InternalClusterService$UpdateTask run

INFO: [logstash-localhost.localdomain-2204-11622] detected_master [Crown][CIl66h7ERGuxI2BQTg5uDw][localhost.localdomain][inet[/172.16.20.222:9300]], added {[Crown][CIl66h7ERGuxI2BQTg5uDw][localhost.localdomain][inet[/172.16.20.222:9300]],}, reason: zen-disco-receive(from master [[Crown][CIl66h7ERGuxI2BQTg5uDw][localhost.localdomain][inet[/172.16.20.222:9300]]])

Apr 11, 2017 3:53:09 PM org.elasticsearch.node.internal.InternalNode start

INFO: [logstash-localhost.localdomain-2204-11622] started

Logstash startup completed

python linux java c++    (typed by hand)

{

"message" => "python linux java c++ ",

"@version" => "1",

"@timestamp" => "2017-04-11T07:53:29.968Z",

"host" => "localhost.localdomain"

}

7. Use curl to check whether Elasticsearch has received the data

[root@localhost ~]# curl http://172.16.20.222:9200/_search?pretty

{

"took" : 2,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 12,

"max_score" : 1.0,

"hits" : [ {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcAcisxKaGLoND1MTk",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T07:54:31.772Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYGsxKaGLoND1MTl",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:58.599Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYXLxKaGLoND1MTo",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:59.159Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcANZQxKaGLoND1MTh",

"_score" : 1.0,

"_source":{"message":"python linux java c++ ","@version":"1","@timestamp":"2017-04-11T07:53:29.968Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcAcisxKaGLoND1MTj",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T07:54:31.492Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYXLxKaGLoND1MTn",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:59.126Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYXLxKaGLoND1MTs",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:59.291Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYXLxKaGLoND1MTm",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:59.093Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcCYXLxKaGLoND1MTr",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T08:02:59.258Z","host":"localhost.localdomain"}

}, {

"_index" : "logstash-2017.04.11",

"_type" : "logs",

"_id" : "AVtcAcJrxKaGLoND1MTi",

"_score" : 1.0,

"_source":{"message":"","@version":"1","@timestamp":"2017-04-11T07:54:30.884Z","host":"localhost.localdomain"}

} ]

}

}
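The response is plain JSON, so it is easy to post-process. Note that hits.total is 12 while only ten documents are listed: `_search` returns 10 hits by default, and the `size` URI parameter raises that limit. Below is a minimal sketch using only the Python 3 standard library; `search_messages` and `fetch` are hypothetical helpers, and the sample string is trimmed from the output above.

```python
import json
from urllib.request import urlopen


def search_messages(raw):
    """Return (index, message) pairs from an Elasticsearch _search response body."""
    body = json.loads(raw)
    return [(hit["_index"], hit["_source"]["message"]) for hit in body["hits"]["hits"]]


def fetch(url="http://172.16.20.222:9200/_search?size=20"):
    """Fetch a live response (needs the Elasticsearch node from this guide)."""
    with urlopen(url) as resp:
        return resp.read().decode("utf-8")


sample = """{"took": 2, "timed_out": false,
 "hits": {"total": 12, "max_score": 1.0, "hits": [
   {"_index": "logstash-2017.04.11", "_type": "logs", "_id": "AVtcANZQxKaGLoND1MTh", "_score": 1.0,
    "_source": {"message": "python linux java c++ ", "@version": "1",
                "@timestamp": "2017-04-11T07:53:29.968Z", "host": "localhost.localdomain"}}]}}"""

print(search_messages(sample))
# [('logstash-2017.04.11', 'python linux java c++ ')]
```

Against the live node, `search_messages(fetch())` would list all twelve documents.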

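8. Install Elasticsearch plugins

Elasticsearch installation directory: /usr/local/elasticsearch-1.7.2

Plugin directory: /usr/local/elasticsearch-1.7.2/plugins

The elasticsearch-kopf plugin lets you query the data in Elasticsearch. To install it, just run the following commands in your Elasticsearch installation directory: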

# cd /usr/local/elasticsearch-1.7.2/bin/

# ./plugin install lmenezes/elasticsearch-kopf

-> Installing lmenezes/elasticsearch-kopf...

Trying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip...

Downloading .............................................................................................

Installed lmenezes/elasticsearch-kopf into /usr/local/elasticsearch-1.7.2/plugins/kopf

If the plugin install reports a failure, the cause is most likely the network or something similar:

-> Installing lmenezes/elasticsearch-kopf...

Trying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip...

Failed to install lmenezes/elasticsearch-kopf, reason: failed to download out of all possible locations..., use --verbose to get detailed information

The fix is to download the plugin manually instead of using the plugin install command:

cd /usr/local/elasticsearch-1.7.2/plugins

wget https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip

unzip master.zip

mv elasticsearch-kopf-master kopf

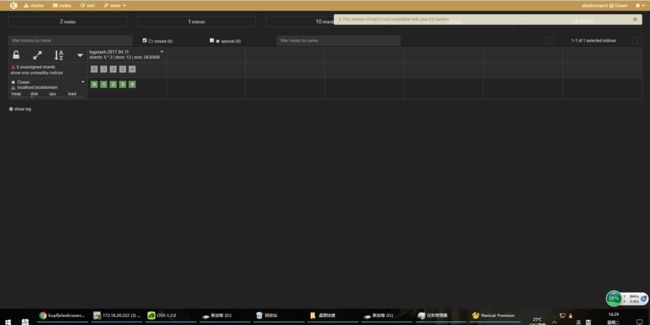

These steps are fully equivalent to the plugin install command.

9. Browse the data stored in Elasticsearch through the kopf page

[root@localhost plugins]# netstat -tnlp |grep java

tcp6 0 0 172.16.20.222:9200 :::* LISTEN 2122/java

tcp6 0 0 172.16.20.222:9300 :::* LISTEN 2122/java

tcp6 0 0 :::9301 :::* LISTEN 2204/java

Then open the following URLs in a browser:

http://172.16.20.222:9200/_search?pretty

http://172.16.20.222:9200/_plugin/kopf

http://172.16.20.222:9200/_plugin/kopf/#!/cluster

10. Read from the Redis database and write to Elasticsearch

cd /usr/local/logstash-1.5.4

# cat logstash-redis.conf

input {

redis {

host => '172.16.20.222' # for easy testing I set no password; setting one is recommended

data_type => 'list'

port => "6379"

key => 'logstash:redis' # custom; must match the key the shipper pushes to

type => 'redis-input' # custom

}

}

output {

elasticsearch {

host => "172.16.20.222"

codec => "json"

protocol => "http" # talk to Elasticsearch over HTTP; without it, the run in step 6 joined the cluster as an embedded node on port 9301

}

}

V. Kibana

1. Install Kibana

# wget https://download.elastic.co/kibana/kibana/kibana-4.1.2-linux-x64.tar.gz

# tar zxf kibana-4.1.2-linux-x64.tar.gz -C /usr/local

[root@localhost ELK]# ll

total 132680

-rw-r--r--. 1 root root 28478292 Apr 11 14:22 elasticsearch-1.7.2.tar.gz

-rw-r--r--. 1 root root 2372669 Apr 11 16:24 elasticsearch-kopf-master.zip

-rw-r--r--. 1 root root 11787239 Apr 11 09:26 kibana-4.1.2-linux-x64.tar.gz

-rw-r--r--. 1 root root 91956015 Apr 11 09:30 logstash-1.5.4.tar.gz

drwxrwxr-x. 6 root root 4096 Dec 16 2014 redis-2.8.19

-rw-r--r--. 1 root root 1254857 Apr 11 10:06 redis-2.8.19.tar.gz

[root@localhost ELK]# tar zxf kibana-4.1.2-linux-x64.tar.gz -C /usr/local

[root@localhost ELK]# cd /usr/local/

[root@localhost local]# ll

total 12

drwxr-xr-x. 2 root root 4096 Apr 11 10:04 bin

drwxr-xr-x. 8 root root 4096 Apr 11 16:20 elasticsearch-1.7.2

drwxr-xr-x. 2 root root 6 Aug 12 2015 etc

drwxr-xr-x. 2 root root 6 Aug 12 2015 games

drwxr-xr-x. 2 root root 6 Aug 12 2015 include

drwxr-xr-x. 7 501 games 99 Sep 9 2015 kibana-4.1.2-linux-x64

drwxr-xr-x. 2 root root 6 Aug 12 2015 lib

drwxr-xr-x. 2 root root 6 Aug 12 2015 lib64

drwxr-xr-x. 2 root root 6 Aug 12 2015 libexec

drwxr-xr-x. 5 root root 4096 Apr 11 16:39 logstash-1.5.4

drwxr-xr-x. 2 root root 6 Aug 12 2015 sbin

drwxr-xr-x. 5 root root 46 Aug 12 2015 share

drwxr-xr-x. 2 root root 6 Aug 12 2015 src

[root@localhost local]# cd kibana-4.1.2-linux-x64/config/

[root@localhost config]# pwd

/usr/local/kibana-4.1.2-linux-x64/config

2. Edit the Kibana configuration file kibana.yml

# vi /usr/local/kibana-4.1.2-linux-x64/config/kibana.yml

elasticsearch_url: "http://172.16.20.222:9200"

3. Start Kibana

/usr/local/kibana-4.1.2-linux-x64/bin/kibana

Output like the following means Kibana started successfully:

[root@localhost config]# /usr/local/kibana-4.1.2-linux-x64/bin/kibana

{"name":"Kibana","hostname":"localhost.localdomain","pid":2581,"level":30,"msg":"No existing kibana index found","time":"2017-04-11T08:45:31.988Z","v":0}

{"name":"Kibana","hostname":"localhost.localdomain","pid":2581,"level":30,"msg":"Listening on 0.0.0.0:5601","time":"2017-04-11T08:45:32.007Z","v":0}

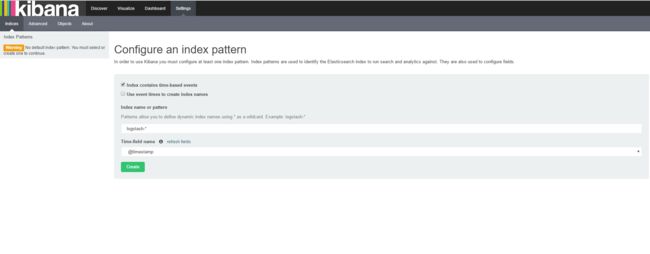

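Kibana listens on port 5601 by default (the log above shows it bound to 0.0.0.0:5601).

4. Open Kibana in a browser

4.1 Keep the default index pattern logstash-*, leave it time-based, and click "Create".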

http://172.16.20.222:5601/

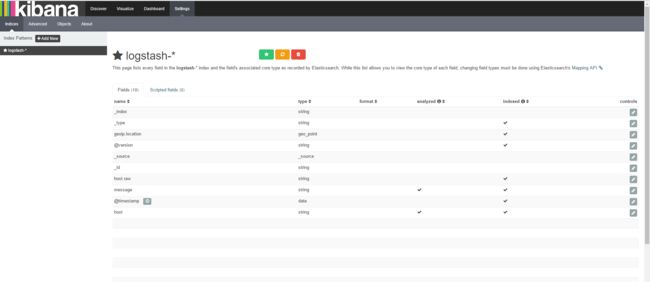
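The logstash-* pattern matches the daily indices that the elasticsearch output creates by default, named `logstash-%{+YYYY.MM.dd}` from each event's @timestamp, which is stored in UTC (for example logstash-2017.04.11 in the search results in section IV). A small sketch of that naming rule, with `index_name` and `matches_pattern` as hypothetical helpers; the second usage case shows why an event logged shortly after midnight Beijing time (UTC+8) lands in the previous day's index.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def index_name(timestamp, prefix="logstash-"):
    """Derive the daily index name from an ISO-8601 @timestamp, using its UTC date."""
    ts = datetime.strptime(timestamp.replace("Z", "+0000"), "%Y-%m-%dT%H:%M:%S.%f%z")
    return prefix + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


def matches_pattern(index, pattern="logstash-*"):
    """Check whether an index falls under a Kibana-style wildcard index pattern."""
    return fnmatch(index, pattern)


print(index_name("2017-04-11T07:53:29.968Z"))      # logstash-2017.04.11
print(index_name("2017-04-11T01:30:00.000+0800"))  # logstash-2017.04.10
```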

4.2 When you see the following screen, the index pattern has been created.

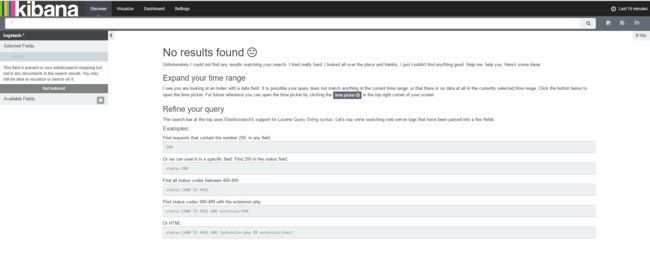

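4.3 Click "Discover" to search and browse the data in Elasticsearch.

>>> End <<<

1. Default ELK ports

elasticsearch: 9200 (HTTP), 9300 (transport)

logstash: 9301 (the embedded Elasticsearch node started by the elasticsearch output in section IV, step 6)

kibana: 5601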
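To confirm these ports from any machine that can reach the host, without netstat, a plain TCP connect is enough. A minimal sketch, assuming the single-host address 172.16.20.222 used throughout; `port_open` and `report` are hypothetical helpers. A successful connect only proves that something is listening, not that it is the expected service.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Ports from the list above, plus Redis from section III.
ELK_PORTS = {
    "elasticsearch (HTTP)": 9200,
    "elasticsearch (transport)": 9300,
    "logstash (embedded node)": 9301,
    "redis": 6379,
    "kibana": 5601,
}


def report(host):
    """Print open/closed for each known port on `host`."""
    for name, port in ELK_PORTS.items():
        state = "open" if port_open(host, port) else "closed"
        print(f"{name:<28}{port:>6}  {state}")
```

Usage: `report("172.16.20.222")`.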

2. Error summary

(1) Java version too old

[2017-04-11 18:39:18.071] WARN -- Concurrent: [DEPRECATED] Java 7 is deprecated, please use Java 8.

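(2) Kibana reports that the Elasticsearch version is too old. The "2.0.0 or higher" requirement in this message comes from Kibana 4.2 and later; Kibana 4.1.2, used in this guide, works with Elasticsearch 1.x.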

This version of Kibana requires Elasticsearch 2.0.0 or higher on all nodes. I found the following incompatible nodes in your cluster:

Elasticsearch v1.7.2 @ inet[/172.16.20.222:9200] (127.0.0.1)
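Both errors come down to comparing a version against a minimum. Comparing version strings as text goes wrong (for example "10" sorts before "9"), so a check should compare numbers. A minimal sketch with hypothetical helpers; `java_major` handles the pre-Java 9 "1.x" scheme used by jdk1.8.0_77 in section II.

```python
def version_tuple(version):
    """'1.7.2' -> (1, 7, 2), so versions compare numerically rather than as text."""
    return tuple(int(part) for part in version.split("."))


def java_major(version):
    """Major Java release from a version string: '1.8.0_77' -> 8 (old 1.x scheme),
    '11.0.2' -> 11 (scheme used from Java 9 on)."""
    first, second = (version.split(".") + ["0"])[:2]
    return int(second) if first == "1" else int(first)


print(version_tuple("1.7.2") >= version_tuple("2.0.0"))  # False
print(java_major("1.8.0_77"))  # 8
```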

3. Start-up commands used in this guide

/usr/local/elasticsearch-1.7.2/bin/elasticsearch

/usr/local/logstash-1.5.4/bin/logstash agent -f logstash-elasticsearch.conf   # run from /usr/local/logstash-1.5.4, where the .conf file lives

/usr/local/kibana-4.1.2-linux-x64/bin/kibana