SparkCore: Spark on YARN run modes and workflow, new processes in client mode, and parameter configuration

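Contents

- 1. How to configure Spark on YARN

- 2. Cluster and client modes

- 2.1 Cluster vs. client

- 2.2 Cluster mode

- 2.3 Client mode

- 3. Testing and viewing the results

- 3.1 Submitting a program in cluster mode

- 3.2 Submitting a program in client mode, or entering client mode through spark-shell

- 4. Parameter configuration and tuning

- 4.1 Launch methods

- 4.2 The spark.yarn.jars parameter

- 4.3 spark.port.maxRetries

- 4.4 spark.yarn.maxAppAttempts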

Official documentation: Running Spark on YARN

http://spark.apache.org/docs/2.4.2/running-on-yarn.html

1. How to configure Spark on YARN

- Ensure that HADOOP_CONF_DIR or YARN_CONF_DIR points to the directory which contains the (client side) configuration files for the Hadoop cluster. These configs are used to write to HDFS and connect to the YARN ResourceManager. The configuration contained in this directory will be distributed to the YARN cluster so that all containers used by the application use the same configuration. If the configuration references Java system properties or environment variables not managed by YARN, they should also be set in the Spark application’s configuration (driver, executors, and the AM when running in client mode).


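In spark-env.sh, point HADOOP_CONF_DIR or YARN_CONF_DIR at that directory. There is no need to configure the slaves file, which is an important difference from standalone mode. After you submit a program, Spark uses this directory to locate YARN, and YARN then allocates the resources that run your application.

Spark is only a client here: the client just needs permission to access YARN.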

[hadoop@vm01 hadoop]$ pwd

/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop

#Make sure the following files are in this directory; on-YARN mode reads its settings from these files

[hadoop@vm01 hadoop]$ ll *.xml

-rw-rw-r--. 1 hadoop hadoop 883 Jul 14 12:58 core-site.xml

-rw-rw-r--. 1 hadoop hadoop 1619 Jul 31 06:49 hdfs-site.xml

-rw-rw-r--. 1 hadoop hadoop 863 Jul 14 13:09 mapred-site.xml

-rw-rw-r--. 1 hadoop hadoop 815 Jul 14 13:09 yarn-site.xml

[hadoop@vm01 conf]$ pwd

/home/hadoop/app/spark-2.4.2-bin-2.6.0-cdh5.7.0/conf

[hadoop@vm01 conf]$ vi spark-env.sh

...

export HADOOP_CONF_DIR=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop
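Before submitting anything, it can help to confirm that the directory really contains the client-side files. The following is a minimal sketch, not part of Spark or Hadoop: the function name check_hadoop_conf_dir is hypothetical, and the file list simply mirrors the listing above (which files are strictly required depends on the cluster).

```shell
# Sketch: verify that a Hadoop client configuration directory contains
# the four XML files shown in the listing above.
# The function name and the file list are assumptions for illustration.
check_hadoop_conf_dir() {
  local dir="$1"
  local missing=0
  local f
  # Return 2 when the argument is empty or not a directory
  if [ -z "$dir" ] || [ ! -d "$dir" ]; then
    echo "not a directory: ${dir:-<unset>}" >&2
    return 2
  fi
  # Report every missing file, then return 1 if any was missing
  for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call would be check_hadoop_conf_dir "$HADOOP_CONF_DIR", which returns 0 only when all four files are present.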

2. Cluster and client modes

2.1 Cluster vs. client

- There are two deploy modes that can be used to launch Spark applications on YARN. In cluster mode, the Spark driver runs inside an application master process which is managed by YARN on the cluster, and the client can go away after initiating the application. In client mode, the driver runs in the client process, and the application master is only used for requesting resources from YARN.


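In cluster mode: the driver runs inside the AM (ApplicationMaster) on a NodeManager, that is, inside the cluster. The AM requests resources from YARN and monitors the job. Once the job has been submitted, the client can be shut down and the job keeps running on YARN.

In client mode: the driver runs on the client, outside the cluster. Whichever machine submits the job is the driver side, and it must stay up while the job runs. Resources are obtained from the RM through the ApplicationMaster. The local driver communicates with all executor containers and aggregates the final results.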

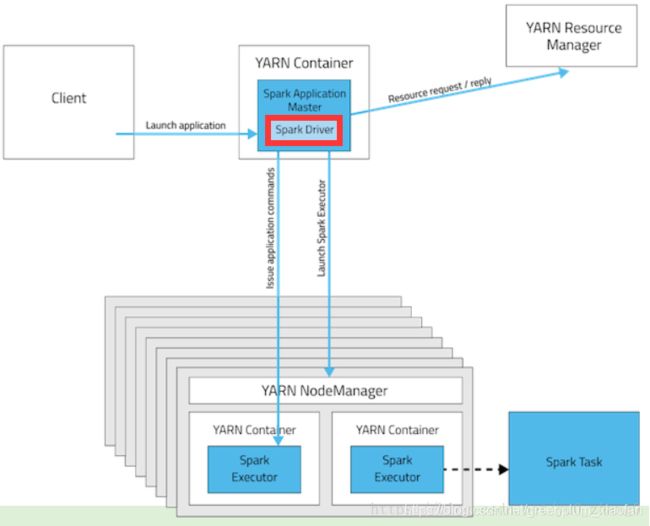

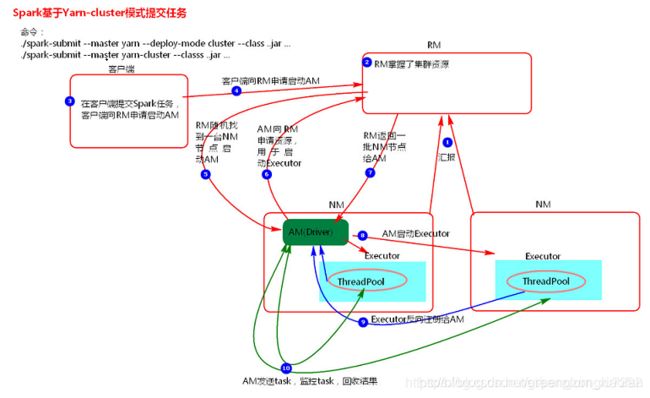

2.2 Cluster mode

#Syntax

$ ./bin/spark-submit --class path.to.your.Class --master yarn --deploy-mode cluster [options] <app jar> [app options]

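#Example; note that cluster mode does not support spark-shell
#--class: fully qualified name of the class to run
#--master yarn: run on YARN
#--deploy-mode cluster: cluster mode
#--driver-memory 4g: 4 GB of memory for the driver
#--executor-memory 2g: 2 GB of memory per executor
#--executor-cores 1: 1 core per executor
#--queue thequeue: the YARN queue to submit to
#Last two arguments: the path to the application jar, then the argument passed to your application
$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi \
--master yarn \
--deploy-mode cluster \
--driver-memory 4g \
--executor-memory 2g \
--executor-cores 1 \
--queue thequeue \
examples/jars/spark-examples*.jar \
10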

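- When the application is submitted, the client asks the ResourceManager to launch the application's ApplicationMaster.

- The ResourceManager receives the request, finds a NodeManager that satisfies the resource requirements, starts the first container there, and asks that NodeManager to launch the ApplicationMaster inside it. In cluster mode the driver runs inside this ApplicationMaster.

- Once the ApplicationMaster has started, it requests resources from the ResourceManager, which responds with a batch of NodeManagers that satisfy the resource requirements.

- The ApplicationMaster takes the NodeManager list, starts containers on those nodes, and starts an executor inside each container. Each executor registers itself with the driver once it is up.

- After the executors have registered, the driver can send tasks to them. One executor can run one or more tasks.

- Before tasks are sent, the DAGScheduler on the driver splits each job into stages according to the wide and narrow dependencies between RDDs, then submits each stage to the TaskScheduler as a TaskSet.

- The TaskScheduler iterates over the TaskSet and sends the tasks one by one to the executors' thread pools for execution.

- The driver monitors the whole execution of every task and collects the results as tasks finish.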

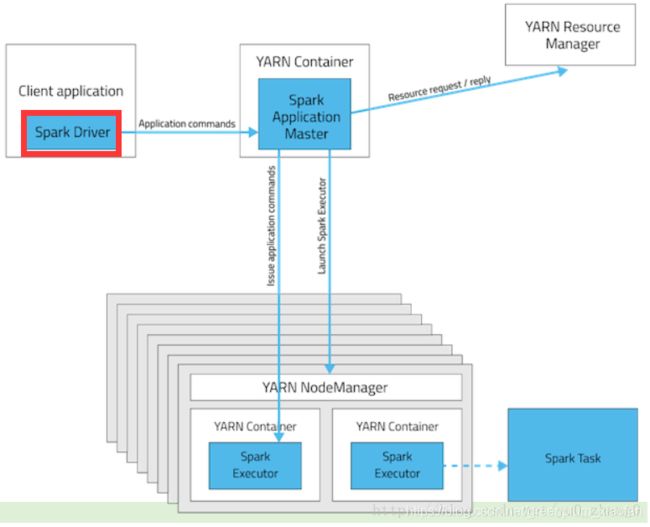

2.3 Client mode

$ ./bin/spark-shell --master yarn --deploy-mode client

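#--class: class name of the job
#--master yarn-client: client mode on YARN; this older spelling still works but is deprecated since Spark 2.0
#--driver-memory 4g: memory for the driver
#--executor-memory 2g: memory for each executor
#--executor-cores 1: number of cores for each executor
#--queue thequeue: the queue the job runs in
#Last two arguments: the application jar, then the argument the class expects
$ ./bin/spark-submit \
--class org.apache.spark.examples.SparkPi \
--master yarn-client \
--driver-memory 4g \
--executor-memory 2g \
--executor-cores 1 \
--queue thequeue \
examples/jars/spark-examples*.jar \
100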

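- When the SparkContext is created, the client first starts the driver locally and then asks the ResourceManager to launch the application's ApplicationMaster.

- The ResourceManager receives the request, finds a NodeManager that satisfies the resource requirements, starts the first container there, and asks that NodeManager to launch the ApplicationMaster inside it. In client mode this ApplicationMaster only requests resources and does not host the driver.

- Once the ApplicationMaster has started, it requests resources from the ResourceManager, which responds with a batch of NodeManagers that satisfy the resource requirements.

- The ApplicationMaster takes the NodeManager list, starts containers on those nodes, and starts an executor inside each container. Each executor registers itself with the driver once it is up.

- After the executors have registered, the driver can send tasks to them. One executor can run one or more tasks.

- Before tasks are sent, the DAGScheduler on the driver splits each job into stages according to the wide and narrow dependencies between RDDs, then submits each stage to the TaskScheduler as a TaskSet.

- The TaskScheduler iterates over the TaskSet and sends the tasks one by one to the executors' thread pools for execution.

- The driver monitors the whole execution of every task and collects the results as tasks finish.

Because the driver runs on the client in client mode, network I/O between the client machine and the executors rises sharply when there are many applications and the drivers exchange a lot of data with the executors. Running many drivers on the same client machine also puts heavy pressure on its resources.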

3. Testing and viewing the results

3.1 Submitting a program in cluster mode

For the application code, see:

https://blog.csdn.net/greenplum_xiaofan/article/details/98124735

[hadoop@vm01 spark-2.4.2-bin-2.6.0-cdh5.7.0]$ ./bin/spark-submit --class com.ruozedata.spark.WordCountApp \

> --master yarn \

> --deploy-mode cluster \

> --driver-memory 2g \

> --executor-cores 1 \

> --queue thequeue \

> /home/hadoop/lib/spark-train-1.0.jar \

> hdfs://192.168.137.130:9000/test.txt

19/08/02 16:12:52 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

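Open the YARN web UI at vm01:8088.

When a program runs on YARN, the ID shown in the YARN UI starts with application.

When it runs in local mode, the ID starts with local.

Click the application ID, then click logs at the bottom right, then stdout,

to see the program's output.

Alternatively, view the logs from the command line,

for example:

#yarn logs -applicationId <app ID>

3.2 Submitting a program in client mode, or entering client mode through spark-shell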

Submit the job in client mode, then run jps on the client to see the processes it adds:

[hadoop@vm01 spark-2.4.2-bin-2.6.0-cdh5.7.0]$ ./bin/spark-submit --class com.ruozedata.spark.WordCountApp \

> --master yarn-client \

> --driver-memory 2g \

> --executor-cores 1 \

> --queue thequeue \

> /home/hadoop/lib/spark-train-1.0.jar \

> hdfs://192.168.137.130:9000/test.txt

#A warning is reported here: the yarn-client spelling is deprecated, so it is better to use the --master yarn --deploy-mode form shown for cluster mode

Warning: Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.

19/08/02 16:23:37 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

(hello,5)

(spark,2)

(mr,1)

(hive,1)

(yarn,1)

[hadoop@vm01 bin]$ jps

7410 NodeManager

15986 Jps

6775 NameNode

6888 DataNode

7241 ResourceManager

7050 SecondaryNameNode

15130 SparkSubmit

15978 CoarseGrainedExecutorBackend

15836 ExecutorLauncher

15919 CoarseGrainedExecutorBackend

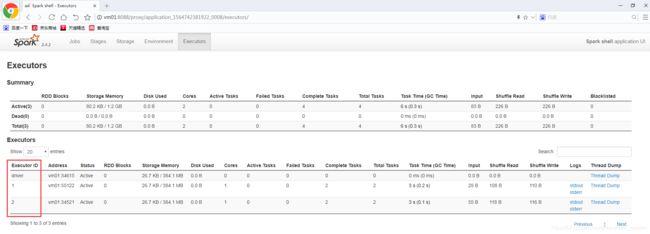

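Now start spark-shell on YARN in client mode and look at what the new processes actually are.

Running ps -ef | grep with the PID of a CoarseGrainedExecutorBackend process (for example 15978 in the jps output above) shows the details of that process.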

[hadoop@vm01 bin]$ spark-shell --master yarn --deploy-mode client

Spark context Web UI available at http://vm01:4040

##In YARN client mode, spark-shell already obtains an application ID at startup, and this ID stays alive until

##you exit the spark-shell client

Spark context available as 'sc' (master = yarn, app id = application_1564742381922_0007).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.2
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_45)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

scala> val a = sc.textFile("hdfs://192.168.137.130:9000/test.txt")

a: org.apache.spark.rdd.RDD[String] = hdfs://192.168.137.130:9000/test.txt MapPartitionsRDD[1] at textFile at <console>:24

scala> val wc=a.flatMap(line=>line.split(" ")).map((_,1)).reduceByKey(_+_).collect

Then open a new terminal on the client to see the newly added processes.

[hadoop@vm01 bin]$ jps

28784 CoarseGrainedExecutorBackend

28880 Jps

7410 NodeManager

28291 CoarseGrainedExecutorBackend

6775 NameNode

6888 DataNode

27128 SparkSubmit

7241 ResourceManager

27785 ExecutorLauncher

7050 SecondaryNameNode

[hadoop@vm01 bin]$

[hadoop@vm01 bin]$ ps -ef|grep 28784

hadoop 28784 28343 2 17:36 ? 00:00:04 /usr/java/jdk1.8.0_45/bin/java -server -Xmx1024m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1564742381922_0008/container_1564742381922_0008_01_000003/tmp -Dspark.driver.port=57468 -Dspark.yarn.app.container.log.dir=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/logs/userlogs/application_1564742381922_0008/container_1564742381922_0008_01_000003 -XX:OnOutOfMemoryError=kill %p org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@vm01:57468

--executor-id 2 --hostname vm01 --cores 1 --app-id application_1564742381922_0008 --user-class-path file:/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1564742381922_0008/container_1564742381922_0008_01_000003/app.jar

[hadoop@vm01 bin]$ ps -ef|grep 28291

hadoop 28291 27859 1 17:36 ? 00:00:05 /usr/java/jdk1.8.0_45/bin/java -server -Xmx1024m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1564742381922_0008/container_1564742381922_0008_01_000002/tmp -Dspark.driver.port=57468 -Dspark.yarn.app.container.log.dir=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/logs/userlogs/application_1564742381922_0008/container_1564742381922_0008_01_000002 -XX:OnOutOfMemoryError=kill %p org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@vm01:57468

--executor-id 1 --hostname vm01 --cores 1 --app-id application_1564742381922_0008 --user-class-path file:/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1564742381922_0008/container_1564742381922_0008_01_000002/app.jar

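From the process details and the web UI, each CoarseGrainedExecutorBackend is the process behind one executor ID. Of the other new processes, SparkSubmit hosts the driver on the client, and ExecutorLauncher is the ApplicationMaster that client mode uses to request resources.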
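To read the executor ID out of such a command line without scanning it by eye, a small helper can pick out the token that follows a given flag. This is only a sketch: extract_arg is a hypothetical name, and it assumes the flag and its value are separated by whitespace, as in the ps output above.

```shell
# Sketch: print the value that follows a "--flag value" pair in a command line.
# extract_arg is a hypothetical helper, not a Spark or YARN tool.
extract_arg() {
  local flag="$1"
  local cmdline="$2"
  # Walk the whitespace-separated fields and print the one after the flag
  printf '%s\n' "$cmdline" | awk -v flag="$flag" '
    { for (i = 1; i < NF; i++) if ($i == flag) { print $(i + 1); exit } }'
}
```

For example, passing --executor-id together with one of the ps lines above prints that executor's ID, and passing --app-id prints the application it belongs to.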

4. Parameter configuration and tuning

Official documentation: Spark Properties

It describes what each parameter does in detail.

http://spark.apache.org/docs/2.4.2/running-on-yarn.html#spark-properties

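4.1 Launch methods

- Running ./spark-shell --master yarn uses client mode by default.

- Running ./spark-shell --master yarn-client or ./spark-shell --master yarn --deploy-mode client selects client mode explicitly; the second form is recommended.

- Cluster mode is selected with --master yarn-cluster or --master yarn --deploy-mode cluster; the second form is recommended. Submit with spark-submit in this case, because spark-shell does not support cluster mode (see section 2.2).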
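The mapping between the deprecated spellings and the recommended flags can be written down as a small helper, for example when cleaning up old launch scripts. This is a sketch only: normalize_master is a hypothetical function, and treating a bare yarn as client mode reflects the default described above.

```shell
# Sketch: translate a legacy --master value into the recommended flags.
# normalize_master is a hypothetical helper for illustration.
normalize_master() {
  case "$1" in
    yarn-client)  printf '%s\n' "--master yarn --deploy-mode client" ;;
    yarn-cluster) printf '%s\n' "--master yarn --deploy-mode cluster" ;;
    # A bare "yarn" runs in client mode by default
    yarn)         printf '%s\n' "--master yarn --deploy-mode client" ;;
    # Anything else (for example local[2]) is passed through unchanged
    *)            printf '%s\n' "--master $1" ;;
  esac
}
```

Calling normalize_master yarn-cluster prints the flags to pass to spark-submit in place of the deprecated value.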

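4.2 The spark.yarn.jars parameter

In Spark on YARN mode, Spark by default reads its local jars (in the jars directory) and distributes them to the YARN containers. You can see this in the log printed when spark-shell starts: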

19/08/02 16:12:52 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

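This makes every launch slow, because many files are read and uploaded each time. To avoid it, put the jars on HDFS and point the spark.yarn.jars parameter at them.

#Upload the jars from the jars directory to HDFS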

[hadoop@hadoop spark-2.2.0-bin-2.6.0-cdh5.7.0]$ hdfs dfs -mkdir -p /spark/jars

[hadoop@hadoop spark-2.2.0-bin-2.6.0-cdh5.7.0]$ hdfs dfs -put jars/ /spark/jars

In the conf directory:

[hadoop@vm01 conf]$ cp spark-defaults.conf.template spark-defaults.conf

[hadoop@vm01 conf]$ vi spark-defaults.conf

spark.yarn.jars hdfs:///spark/jars/jars/*
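To check whether a given spark-defaults.conf already avoids the upload warning, one can look for either property named in that warning. The helper below is a rough sketch with a hypothetical name, has_yarn_jars_setting; it only inspects the file and does not verify that the HDFS path exists.

```shell
# Sketch: succeed when a spark-defaults.conf sets spark.yarn.jars or
# spark.yarn.archive on a non-comment line.
# has_yarn_jars_setting is a hypothetical helper for illustration.
has_yarn_jars_setting() {
  local conf="$1"
  # Return 2 when the file does not exist
  [ -f "$conf" ] || return 2
  # The key may be followed by whitespace or '=' before the value
  grep -Eq '^[[:space:]]*spark\.yarn\.(jars|archive)([[:space:]]|=)' "$conf"
}
```

It returns 0 when one of the two properties is set, 1 when neither is, and 2 when the file is missing.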

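4.3 spark.port.maxRetries

Spark limits how many applications can run at the same time from one machine. The limit comes from the spark.port.maxRetries parameter, which defaults to 16: each driver tries to bind a port such as the web UI port 4040, and when the port is taken it retries on the next port, up to that many times, before giving up.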

[hadoop@vm01 conf]$ vi spark-defaults.conf

spark.port.maxRetries ${value required by your workload}
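To get a feel for the number to choose, it helps to list the ports one driver may try. The sketch below assumes the behavior described in the Spark configuration documentation: a busy port is retried by adding 1 each time, so the range runs from the start port to the start port plus spark.port.maxRetries. The function name port_candidates is hypothetical.

```shell
# Sketch: list the ports a driver may try for one service, assuming each
# retry adds 1 to the previous port (start port .. start port + maxRetries).
# port_candidates is a hypothetical helper for illustration.
port_candidates() {
  local base="$1"
  local retries="${2:-16}"   # 16 is the default of spark.port.maxRetries
  local offset=0
  while [ "$offset" -le "$retries" ]; do
    echo $((base + offset))
    offset=$((offset + 1))
  done
}
```

With the default value, port_candidates 4040 lists 4040 through 4056, which is why a busy client machine may need a larger setting.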

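4.4 spark.yarn.maxAppAttempts

This property sets the maximum number of attempts made to submit the application. The default is probably on the small side, so raise it as needed, but keep it no larger than the global maximum number of attempts configured in YARN.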
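As a rough illustration of how this setting interacts with YARN's own limit: YARN has a global cap, yarn.resourcemanager.am.max-attempts, and a per-application value that is not positive or that exceeds the cap falls back to the cap. The helper below is a sketch under that assumption; effective_max_attempts is a hypothetical name, not a Spark or YARN command.

```shell
# Sketch: compute the number of attempts YARN would honor, assuming a
# per-application value is capped by the global YARN limit.
# effective_max_attempts is a hypothetical helper for illustration.
effective_max_attempts() {
  local spark_attempts="$1"   # value of spark.yarn.maxAppAttempts
  local yarn_global="$2"      # value of yarn.resourcemanager.am.max-attempts
  if [ "$spark_attempts" -le 0 ] || [ "$spark_attempts" -gt "$yarn_global" ]; then
    echo "$yarn_global"
  else
    echo "$spark_attempts"
  fi
}
```

So raising spark.yarn.maxAppAttempts alone may have no effect unless the YARN-side limit is at least as large.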