CVPR2018_Seeing Small Faces from Robust Anchor’s Perspective

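A CVPR 2018 face detection paper built on the anchor mechanism of Faster R-CNN and SSD. The authors argue that small faces are missed mainly because the IoU between anchor boxes and small faces is too low, and that existing anchor-based detectors handle scale invariance poorly. They propose the Expected Max Overlapping (EMO) score to explain why the IoU is low. They then propose several strategies to improve face detection: anchor stride reduction with new network architectures, extra shifted anchors, and stochastic face shifting.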

Anchor-based detectors quantize the continuous space of all possible face bounding boxes on the image plane into the discrete space of a set of pre-defined anchor boxes that serve as references. In other words, face sizes and positions on the image are continuous, but the predefined anchor sizes and positions are discrete.

During training, each face is matched with one or several close anchors. These faces are trained to output high confidence scores and then regress to ground-truth boxes. So during training, each gt face bbox is matched to one or more anchors.

During inference, faces in a testing image are detected by classifying and regressing anchors. So at test time, faces are detected by classifying the anchors and regressing them.

The problems the authors see in existing anchor schemes:

After classifying and adjusting anchor boxes, the new boxes with high confidence scores are still not highly overlapped with enough small faces. At detection time, the boxes adjusted from anchors do not overlap small faces well enough.

Analysis:

For each face we compute its highest IoU with overlapped anchors. Then faces are divided into several scale groups. Within each scale group we compute the averaged highest IoU score. The faces are grouped by scale, and the average IoU is then computed per group.

We find that average IoUs across face scales are positively correlated with the recall rates. We think anchor boxes with low IoU overlaps with small faces are harder to be adjusted to the ground-truth, resulting in low recall of small faces.

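In Fig. 1(b), the smaller the face, the lower the IoU score. Two effects combine here. For small faces, the anchors already have low IoU with the gt bbox. Regressing the predicted bbox onto the gt is also harder. Together these lead to low recall on small faces.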

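Fig. 1(b) is built in three steps. First, compute the max IoU between each gt face bbox and the anchors. Second, group the faces by bbox scale. Third, average the max IoU within each group. The result: the smaller the face, the lower the IoU score.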
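The Fig. 1(b) statistic can be sketched as follows. This is a minimal sketch, not the authors' evaluation code. Its assumptions are:

- boxes are (x1, y1, x2, y2) tuples;
- face scale is sqrt(area);
- the scale bins are chosen for illustration;
- the helper names `iou` and `avg_max_iou_by_scale` are hypothetical.

```python
from collections import defaultdict


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def avg_max_iou_by_scale(faces, anchors, bins):
    """Average of each face's highest anchor IoU, grouped by face scale.

    bins: list of (lo, hi) ranges on sqrt(face area); the binning is
    hypothetical and not the paper's exact grouping.
    """
    groups = defaultdict(list)
    for f in faces:
        scale = ((f[2] - f[0]) * (f[3] - f[1])) ** 0.5
        best = max(iou(f, a) for a in anchors)  # highest IoU over all anchors
        for lo, hi in bins:
            if lo <= scale < hi:
                groups[(lo, hi)].append(best)
                break
    return {b: sum(v) / len(v) for b, v in groups.items()}


# Toy check: 16 x 16 anchors on a 16-pixel grid, two faces at the same offset.
anchors = [(cx - 8, cy - 8, cx + 8, cy + 8) for cx in (8, 24, 40, 56) for cy in (8, 24, 40, 56)]
faces = [(8, 8, 16, 16), (0, 0, 24, 24)]  # an 8-pixel face and a 24-pixel face
print(avg_max_iou_by_scale(faces, anchors, [(0, 16), (16, 64)]))
```

In this toy setup both faces sit 4 pixels from the nearest anchor center. The 8-pixel face only reaches IoU 0.25, while the 24-pixel face reaches about 0.44. That is the same trend as Fig. 1(b).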

Fig. 1(c) shows the authors' EMO-based scheme, which gives anchors a higher IoU with face bboxes.

A brief introduction to EMO:

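Given a face of known size and a set of anchors, we compute the expected max IoU of the face with the anchors, assuming the face's location is drawn from a 2D distribution on the image plane. The face location is assumed to follow a 2D distribution over the image. Since a face can appear anywhere in the image, this can be understood as a uniform distribution over the image plane.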

The EMO score theoretically explains why larger faces are easier to be highly overlapped by anchors and that densely distributed anchors are more likely to cover faces. The EMO score explains two things:

1. The larger the face bbox, the higher the IoU with its matched anchor.
2. The denser the anchors, the easier it is to match a face.

Based on the EMO score, the authors propose several strategies:

1 reduce the anchor stride with various network architecture designs. This reduces the anchor stride and is somewhat similar to S3FD;

2 add anchors shifted away from the canonical center so that the anchor distribution becomes denser. Shifted copies are added around the originally generated anchor positions, making the anchor distribution denser;

3 stochastically shift the faces in order to increase the chance of getting higher IoU overlaps. The gt face bboxes are randomly shifted during training to raise their chance of a high IoU;

4 match low-overlapped faces with multiple anchors. Faces whose best IoU is low are compensated by matching them to multiple anchors;

Contributions as summarized by the authors:

1 Provide an in-depth analysis of the anchor matching mechanism under different conditions with the newly proposed Expected Max Overlap (EMO) score to theoretically characterize anchors' ability of achieving high face IoU scores. The EMO score analysis is then used to guide raising the IoU between gt bboxes and anchors;

2 Propose several effective techniques of new anchor design for higher IoU scores, especially for tiny faces, including anchor stride reduction with new network architectures, extra shifted anchors, and stochastic face shifting. Building on 1, three strategies are proposed to raise the IoU between anchors and face gt boxes;

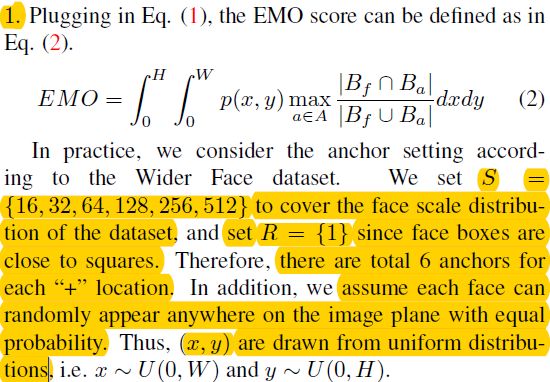

3 Expected Max Overlapping Scores

EMO score: characterizes anchors' ability of achieving high face IoU scores. The EMO is derived by computing the expected max IoU between a face and anchors w.r.t. the distribution of the face's location. Put simply, it measures how well the best-matching anchor overlaps a face bbox on average, given how face locations are distributed.

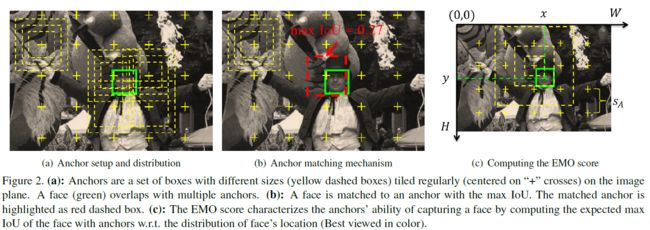

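(a) The distribution of anchors, the same setup as in Faster R-CNN.

(b) The anchor matching strategy, which is somewhat counter-intuitive. One would expect the smaller anchor at this location to be matched to the gt face bbox, but the larger anchor is matched instead.

(c) Matching according to the EMO score. It can pick a closer-fitting anchor and works better than matching on IoU alone.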

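Section 3 analyzes how IoU is matched and computed in face detection and introduces how EMO is computed. This gives theoretical guidance for the improvements proposed in Section 4.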

3.1 Overview of Anchor-Based Detector

This subsection reviews the anchor matching rules of two-stage detectors.

Anchors are associated with certain feature maps which determine the location and stride of anchors. In Faster R-CNN, anchors are tied to a single feature map; SSD ties them to several layers.

A feature map of size c x h x w can be interpreted as c-dimensional representations corresponding to h x w sliding-window locations distributed regularly on the image. The distance between adjacent locations is the feature stride $s_F$, which is decided by $H/h = W/w = s_F$.

Anchors take those locations as their centers and use the corresponding representations to compute confidence scores and bounding box regression. So, the anchor stride is equivalent to the feature stride, i.e. $s_A = s_F$. This explains how anchors relate to the stride. In the original Faster R-CNN, the anchor stride equals the input image size divided by the feature map size.
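Here is a minimal sketch of the relation $s_A = s_F$. Every cell of an h x w feature map on an H x W image yields one anchor center, so adjacent centers are $s_F$ pixels apart. Two details are assumptions for illustration:

- the center sits in the middle of each cell (real implementations differ in the exact offset);
- the function names `anchor_centers` and `make_anchors` are hypothetical.

```python
def anchor_centers(H, W, stride):
    """Anchor centers for an (H // stride) x (W // stride) feature map.

    Feature-map cell (i, j) maps back to image point
    ((j + 0.5) * stride, (i + 0.5) * stride), so adjacent centers are
    exactly `stride` pixels apart, i.e. s_A = s_F.
    """
    h, w = H // stride, W // stride
    return [((j + 0.5) * stride, (i + 0.5) * stride) for i in range(h) for j in range(w)]


def make_anchors(H, W, stride, sizes):
    """Square anchors (x1, y1, x2, y2) of every size in `sizes` at every center."""
    return [
        (cx - s / 2, cy - s / 2, cx + s / 2, cy + s / 2)
        for cx, cy in anchor_centers(H, W, stride)
        for s in sizes
    ]


# A 64 x 64 image with a stride-16 feature map gives a 4 x 4 grid of centers.
centers = anchor_centers(64, 64, 16)
print(centers[:4])
```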

3.2 Anchor Setup and Matching

This subsection covers how anchors are matched, with reference to Fig. 2a and 2b. It is fairly standard and only needs a quick read.
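Since the notes skip the matching details, here is a generic sketch of threshold-based anchor labeling as used in two-stage detectors. The following are assumptions, not values taken from the paper:

- the 0.5 / 0.3 thresholds;
- the rule that each face also claims its single best anchor;
- the function names.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def match_anchors(anchors, faces, pos_th=0.5, neg_th=0.3):
    """Label anchors 1 (positive), 0 (negative) or -1 (ignored).

    - positive if its best IoU with any face >= pos_th
    - negative if its best IoU < neg_th, ignored in between
    - the highest-IoU anchor of every face is forced positive,
      so each face gets at least one anchor (assumed rule)
    """
    labels = []
    for a in anchors:
        best = max((iou(a, f) for f in faces), default=0.0)
        labels.append(1 if best >= pos_th else (0 if best < neg_th else -1))
    for f in faces:
        scores = [iou(a, f) for a in anchors]
        k = max(range(len(anchors)), key=scores.__getitem__)
        if scores[k] > 0:
            labels[k] = 1
    return labels


anchors = [(0, 0, 16, 16), (16, 0, 32, 16), (0, 0, 32, 32)]
print(match_anchors(anchors, [(0, 0, 24, 24)]))
print(match_anchors(anchors, [(8, 8, 16, 16)]))  # tiny face: only the forced match
```

In the second call every anchor is below the negative threshold. The tiny face ends up with just one forced positive anchor, and its IoU is only 0.25. This is the situation the hard face compensation in 4.4 targets.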

3.3 Computing the EMO Score

The definition of the EMO score:

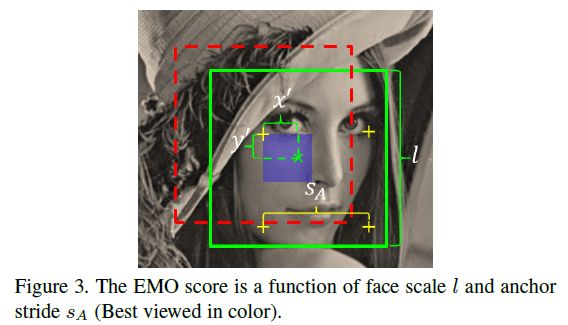

Fig. 3 illustrates how the EMO score is computed:

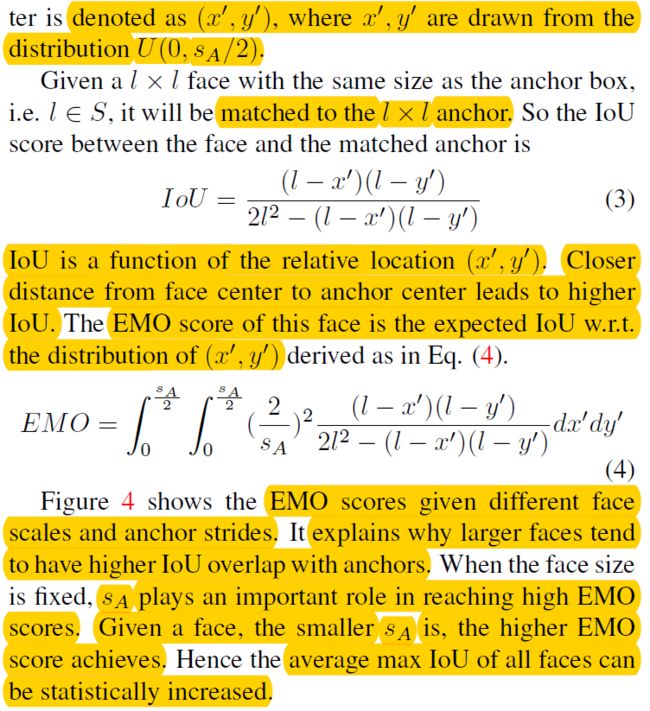
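The exact formula only appears in the figure above. Under the assumptions stated earlier, the definition can be written as below. This is a hedged reconstruction, not a transcription of the paper's equation. The assumptions are:

- the face center is uniformly distributed;
- anchors lie on a regular grid with stride $s_A$, so averaging over one grid cell suffices.

Notation: $F(x,y)$ is the face box centered at $(x,y)$, and $\mathcal{A}$ is the anchor set.

$$
\mathrm{EMO} = \mathbb{E}_{(x,y)}\Big[\max_{a \in \mathcal{A}} \mathrm{IoU}\big(F(x,y), a\big)\Big]
= \frac{1}{s_A^2}\int_0^{s_A}\!\!\int_0^{s_A} \max_{a \in \mathcal{A}} \mathrm{IoU}\big(F(x,y), a\big)\,dx\,dy
$$

Consider the simplest case: a square face of side $l$ and anchors of the same size, with the face center offset by $(x,y)$ from an anchor center. Then:

$$
\mathrm{IoU}(x,y) = \frac{I}{2l^2 - I}, \qquad I = \max(0,\, l-|x|)\,\max(0,\, l-|y|)
$$

This IoU decreases in $|x|$ and $|y|$, so the nearest anchor is the best one. By symmetry:

$$
\mathrm{EMO} = \frac{4}{s_A^2}\int_0^{s_A/2}\!\!\int_0^{s_A/2} \mathrm{IoU}(x,y)\,dx\,dy
$$

Both conclusions below follow from this form. At every offset, a larger $l$ raises the integrand. A smaller $s_A$ shrinks the integration region toward zero offset, where the IoU is highest.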

The conclusions are:

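1 Under the conventional anchor setup, the larger the face, the larger the max-IoU term inside the integral, so the EMO is higher;

2 For a given face, the smaller the anchor stride (i.e. the denser the anchor sampling), the higher the EMO;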
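To check both conclusions numerically, the sketch below evaluates the EMO with a midpoint-rule grid over one anchor cell. It assumes square faces, a single anchor size equal to the face size, and a uniformly distributed face center. The paper's setting (several anchor scales, real data) is richer. The names `iou_offset` and `emo` are hypothetical.

```python
def iou_offset(l, dx, dy):
    """IoU of two l x l squares whose centers differ by (dx, dy)."""
    inter = max(0.0, l - abs(dx)) * max(0.0, l - abs(dy))
    return inter / (2 * l * l - inter)


def emo(face_size, stride, n=200):
    """Expected max IoU of a face with same-size anchors on a grid of `stride`.

    The face center is uniform over one stride x stride cell. The nearest
    anchor center is then within stride / 2 in each axis. IoU falls with
    |dx| and |dy|, so that anchor gives the max. Midpoint rule on n x n points.
    """
    h = stride / n
    total = 0.0
    for i in range(n):
        dx = -stride / 2 + (i + 0.5) * h
        for j in range(n):
            dy = -stride / 2 + (j + 0.5) * h
            total += iou_offset(face_size, dx, dy)
    return total / (n * n)


# Conclusion 2: same 16-pixel face, shrinking anchor stride.
print([round(emo(16, s), 3) for s in (32, 16, 8)])
# Conclusion 1: same stride 16, growing face.
print([round(emo(l, 16), 3) for l in (8, 16, 32)])
```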

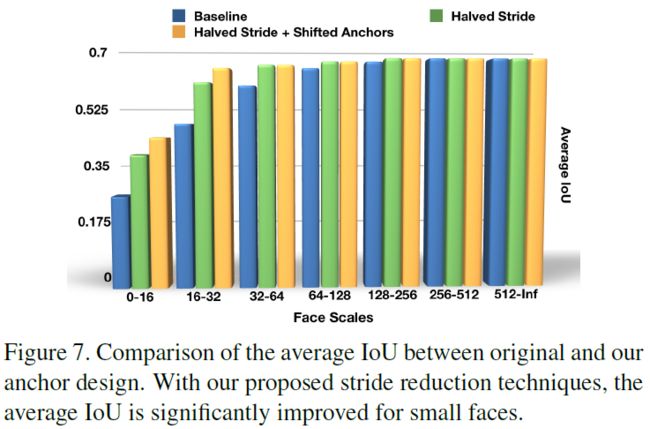

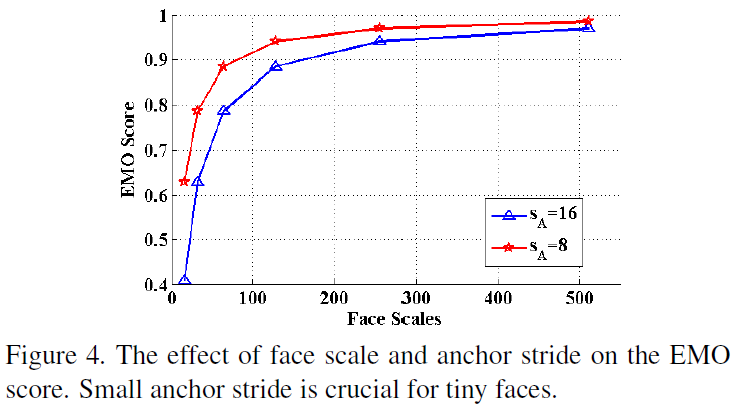

Fig. 4 shows that the smaller the stride, the higher the EMO score.

4. Strategies of New Anchor Design

We aim at improving the average IoU especially for tiny faces from the view of theoretically improving EMO score, since average IoU scores are correlated with face recall rate. The authors treat the EMO score, the IoU score and the face recall rate as positively correlated.

Hence the idea is to increase the average IoU by reducing anchor stride as well as reducing the distance between the face center and the anchor center.

Four strategies improve the matching between small faces and anchors:

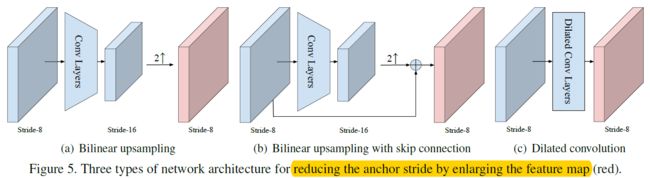

1 Three new network architectures: new network architectures to change the stride of the feature map associated with anchors.

2 Redefined anchor locations: we redefined the anchor locations such that the anchor stride can be further reduced.

3 Face shifting: a face shift jittering method.

4 Stronger matching of small faces with anchors during training: a compensation strategy for training which matches very tiny faces to multiple anchors.
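Items 3 and 4 are training-time tricks, detailed in 4.3 and 4.4 of these notes:

- shift the image by a random offset in $[0, s_A/2]$;
- let a face whose best anchor IoU stays below the threshold Th claim its top-N anchors as positives.

The sketch below assumes a precomputed face x anchor IoU matrix. The following are assumptions, not details confirmed by the paper:

- the function names;
- the non-negative shift direction;
- the absence of a minimum-IoU filter on the top-N anchors.

```python
import random


def jitter_shift(stride_a, rng=random):
    """Random offset (dx, dy), each uniform in [0, stride_a / 2].

    Only non-negative shifts are drawn; the direction is an assumption.
    """
    return rng.uniform(0, stride_a / 2), rng.uniform(0, stride_a / 2)


def shift_boxes(boxes, dx, dy):
    """Translate (x1, y1, x2, y2) face boxes together with the image."""
    return [(x1 + dx, y1 + dy, x2 + dx, y2 + dy) for x1, y1, x2, y2 in boxes]


def compensate_hard_faces(iou_matrix, th, top_n):
    """Return the indices of positive anchors.

    iou_matrix[f][a] is the IoU between face f and anchor a.
    Step 1: anchors with IoU >= th for some face are positive.
    Step 2: a face whose best IoU is still < th is a hard face; its anchors
    are sorted by IoU (descending) and the top_n are also made positive.
    """
    positives = set()
    for row in iou_matrix:
        matched = [a for a, v in enumerate(row) if v >= th]
        if matched:
            positives.update(matched)
        else:  # hard face
            ranked = sorted(range(len(row)), key=lambda a: row[a], reverse=True)
            positives.update(ranked[:top_n])
    return positives


# Face 0 is matched normally; face 1 never reaches th and takes its top-2 anchors.
ious = [[0.6, 0.2, 0.1, 0.0], [0.0, 0.1, 0.3, 0.25]]
print(compensate_hard_faces(ious, th=0.5, top_n=2))
```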

4.1. Stride Reduction with Enlarged Feature Maps

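One way to increase the EMO scores is to reduce the anchor stride by enlarging the feature map.

All three options reduce the stride of the feature map tied to the anchors by enlarging that map. They are in fact all quite common techniques.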

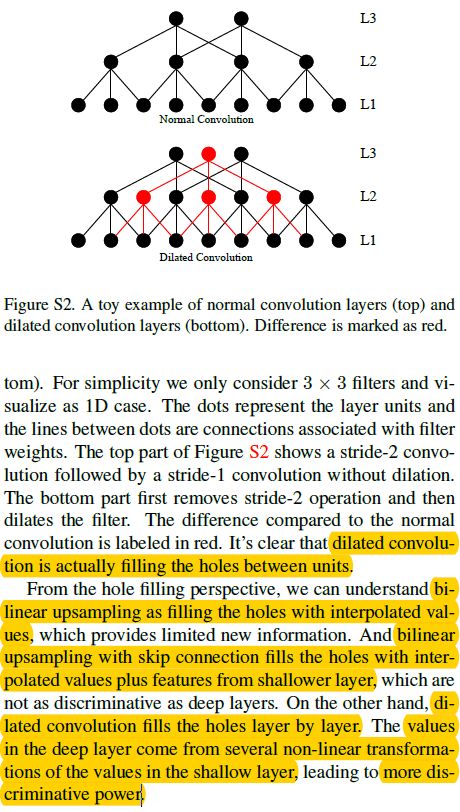

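a Uses deconvolution (transposed convolution) to upsample the feature map. The deconvolution filter is initialized with bilinear upsampling weights and then learned during training;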
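The bilinear initialization for a deconvolution layer is commonly computed as below, following the FCN-style recipe. Whether the paper uses exactly this kernel is an assumption. The 1D helper `transposed_conv1d` is a naive reference implementation. It is added only to show that the kernel reproduces linear interpolation.

```python
def bilinear_1d(factor):
    """1D bilinear weights for a stride-`factor` transposed convolution."""
    size = 2 * factor - factor % 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    return [1 - abs(i - center) / factor for i in range(size)]


def bilinear_kernel(factor):
    """2D kernel = outer product of the 1D weights (used to initialize the filter)."""
    w = bilinear_1d(factor)
    return [[wy * wx for wx in w] for wy in w]


def transposed_conv1d(x, kernel, stride):
    """Naive transposed conv: input i scatters value * kernel starting at i * stride."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, v in enumerate(x):
        for k, w in enumerate(kernel):
            out[i * stride + k] += v * w
    return out


w = bilinear_1d(2)
print(w)
print(transposed_conv1d([1.0, 2.0, 3.0], w, 2))
```

The interior outputs lie halfway between neighboring samples, so they are linearly interpolated. The first and last outputs are affected by the implicit zero padding and are usually cropped. Training can then move the weights away from pure bilinear upsampling.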

b Adds a skip connection, similar to FPN. A 1 x 1 convolution makes the channel counts match for fusion, and a 3 x 3 convolution follows;
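An FPN-style sketch of the fusion step, with feature maps as plain nested lists [C][H][W]. The following are assumptions:

- the 1 x 1 convolution sits on the shallower map;
- the deeper map is upsampled 2x with nearest-neighbour;
- the two are summed;
- the function names.

The trailing 3 x 3 convolution is omitted for brevity.

```python
def upsample2x_nearest(fmap):
    """Nearest-neighbour 2x upsampling of a [C][H][W] nested list."""
    return [
        [[row[x // 2] for x in range(2 * len(row))] for row in ch for _ in range(2)]
        for ch in fmap
    ]


def conv1x1(fmap, weight):
    """1 x 1 convolution: weight[o][c] mixes input channel c into output channel o."""
    H, W = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(w_oc * fmap[c][y][x] for c, w_oc in enumerate(w_o)) for x in range(W)] for y in range(H)]
        for w_o in weight
    ]


def add(a, b):
    """Element-wise sum of two [C][H][W] maps of equal shape."""
    return [[[p + q for p, q in zip(ra, rb)] for ra, rb in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse(shallow, deep, w_lateral):
    """Lateral 1 x 1 conv on the shallow map + 2x upsampled deep map."""
    return add(conv1x1(shallow, w_lateral), upsample2x_nearest(deep))


shallow = [[[1, 2], [3, 4]]]   # 1 channel, 2 x 2
deep = [[[10]], [[20]]]        # 2 channels, 1 x 1 (stride twice as large)
print(fuse(shallow, deep, [[1], [2]]))
```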

c Dilated (atrous) convolution, also a common technique. The authors consider option c the best because it introduces no new parameters;

We take out the stride-2 operation (either pooling or convolution) right after the shallower large map and dilate the filters in all the following convolution layers. Note that 1 x 1 filters are not required to be dilated. In addition to not having any additional parameters, dilated convolution also preserves the size of receptive fields of the filters.

4.2. Extra Shifted Anchors

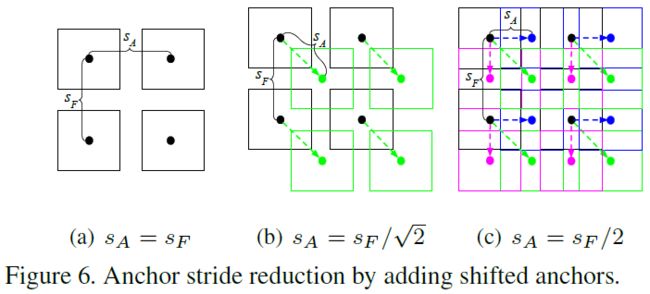

This one is fairly simple: increase the anchor sampling density so that $s_A$ becomes smaller.
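Here is a minimal sketch of extra shifted anchors. Each feature-map cell keeps its canonical center and adds copies shifted by multiples of $s_F/k$. This lowers the anchor stride to $s_A = s_F/k$. Every shifted anchor still points back to its cell, and hence to the same feature vector. The choice k = 2 and the function name are assumptions for illustration.

```python
def shifted_anchor_centers(H, W, stride_f, num_shifts=2):
    """Anchor centers with extra shifted copies inside every feature-map cell.

    Cell (i, j) keeps its canonical center ((j + 0.5) * stride_f, (i + 0.5) * stride_f)
    and adds copies shifted by multiples of stride_f / num_shifts, so the anchor
    stride becomes s_A = stride_f / num_shifts. Each center is returned with the
    cell whose feature it reuses.
    """
    s_a = stride_f / num_shifts
    out = []
    for i in range(H // stride_f):
        for j in range(W // stride_f):
            for u in range(num_shifts):
                for v in range(num_shifts):
                    cx = (j + 0.5) * stride_f + v * s_a
                    cy = (i + 0.5) * stride_f + u * s_a
                    out.append(((i, j), (cx, cy)))
    return out


centers = shifted_anchor_centers(32, 32, 16, num_shifts=2)
print(sorted({cx for _, (cx, _) in centers}))  # x-coordinates are now s_F / 2 apart
```

Following the notes, the shifted copies would only be added for small anchors, e.g. on a low-level map such as conv3_3. Large anchors already reach high average IoU.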

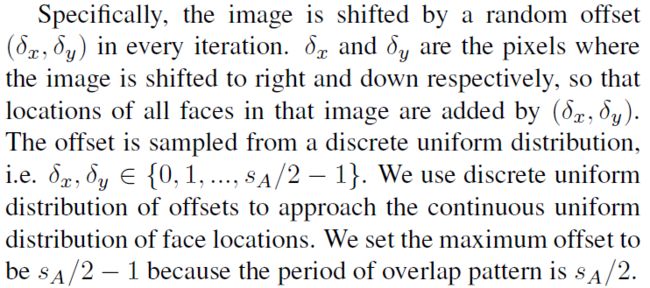

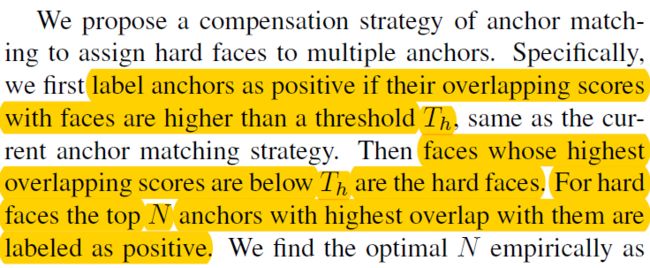

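In 4.1, $s_A$ still equals $s_F$; 4.2 makes $s_A < s_F$.

These shifted anchors share the same feature representation with the anchors in the centers. The newly added, densely sampled anchors use the same features as the center anchor; in short, they reuse it.

Only need to add small shifted anchors since large anchors already guarantee high average IoUs. The strategy is applied only to small faces, i.e. only on low-level feature maps such as conv3_3.

Fig. 7 shows that the methods of 4.1 + 4.2 are indeed effective.

4.3. Face Shift Jittering

In order to increase the probability for those faces to get high IoU overlap with anchors, they are randomly shifted in each iteration during training. During training the whole image is randomly jittered, which raises each face's chance of a high IoU with some anchor. A face's position in an image is fixed. Without jittering, the face meets the anchors at the same offset in every epoch. With a random shift, the relative position of face and anchors changes from iteration to iteration.

Procedure: quite simple. Each shift step is randomly sampled from [0, $s_A/2$].

4.4. Hard Face Compensation

It is because face scales and locations are continuous whereas anchor scales and locations are discrete. Therefore, there are still some faces whose scales or locations are far away from the anchor. Because of this mismatch, some small faces remain hard to match.

The authors' anchor compensation strategy is:

1 The familiar recipe: use the threshold Th to select positive anchors;

2 Consider a face bbox whose best-matching anchor (the one with the max IoU) still scores below Th. That face is a hard face. Its anchors are sorted by IoU in descending order, and the top N anchors are taken as positive anchors;

5 Experiments

Summary: the authors identify low IoU between anchors and face gt bboxes as the cause of poor small-face detection accuracy. Based on the EMO score, they propose a series of methods to raise the IoU of small faces.

This work identified low face-anchor overlap as the major reason hindering anchor-based detectors to detect tiny faces. We proposed the new EMO score to characterize anchors' capability of getting high overlaps with faces, providing an in-depth analysis of the anchor matching mechanism. This inspired us to come up with several simple but effective strategies of a new anchor design for higher face IoU scores.

The supplementary material explains the idea of dilated convolution quite well. It also analyzes why dilated convolution works better than plain upsampling or upsampling + skip connections.

Paper reference

1 CVPR2018_Seeing Small Faces from Robust Anchor’s Perspective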