【九】hadoop编程之基于内容的推荐算法

基于内容的协同过滤推荐算法:给用户推荐和他们之前喜欢的物品在内容上相似的其他物品

物品特征建模(item profile)

以电影为例

1表示电影具有某特征,0表示电影不具有某特征

科幻 言情 喜剧 动作 纪实 国产 欧美 日韩 斯嘉丽的约翰 成龙 范冰冰

复仇者联盟: 1 0 0 1 0 0 1 0 1 0 0

绿巨人: 1 0 0 1 0 0 1 0 0 0 0

宝贝计划: 0 0 1 1 0 1 0 0 0 1 1

十二生肖: 0 0 0 1 0 1 0 0 0 1 0

算法步骤

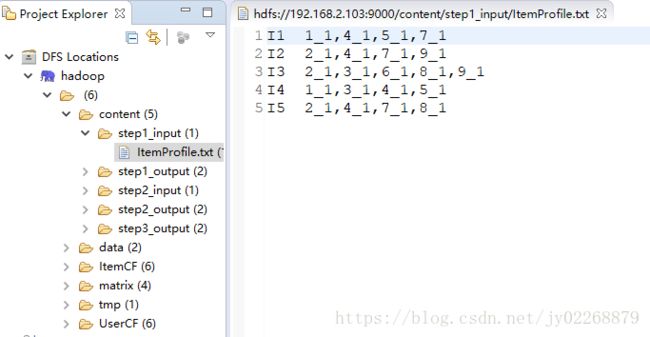

1.构建item profile矩阵

物品ID——标签

tag: (1) (2) (3) (4) (5) (6) (7) (8) (9)

I1: 1 0 0 1 1 0 1 0 0

I2: 0 1 0 1 0 0 1 0 1

I3: 0 1 1 0 0 1 0 1 1

I4: 1 0 1 1 1 0 0 0 0

I5: 0 1 0 1 0 0 1 1 0

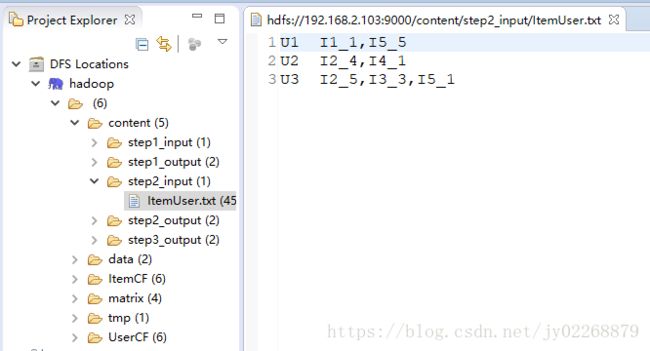

2.构建item user评分矩阵

用户ID——物品ID

I1 I2 I3 I4 I5

U1 1 0 0 0 5

U2 0 4 0 1 0

U3 0 5 3 0 1

3.item user X item profile = user profile

用户ID——标签

tag: (1) (2) (3) (4) (5) (6) (7) (8) (9)

U1 1 5 0 6 1 0 6 5 0

U2 1 4 1 5 1 0 4 0 4

U3 0 9 3 6 0 3 6 4 8

值的含义:用户对所有标签感兴趣的程度

比如: U1-(1)表示用户U1对特征(1)的偏好权重为1,可以看出用户U1对特征(4)(7)最感兴趣,其权重为6

4.对user profile 和 item profile求余弦相似度

左侧矩阵的每一行与右侧矩阵的每一行计算余弦相似度

cos

项目目录:

输入文件如下

MapReduce步骤

1.将item profile转置

输入:物品ID(行)——标签ID(列)——0或1 物品特征建模

输出:标签ID(行)——物品ID(列)——0或1

代码:

mapper1

package step1;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

/**

* @author liyijie

* @date 2018年5月13日下午10:36:18

* @email 37024760@qq.com

* @remark

* @version

*

* 将item profile转置

*/

public class Mapper1 extends Mapper {

private Text outKey = new Text();

private Text outValue = new Text();

/**

* key:1

* value:1 1_0,2_3,3_-1,4_2,5_-3

* */

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] rowAndLine = value.toString().split("\t");

//矩阵行号 物品ID

String itemID = rowAndLine[0];

//列值 用户ID_分值

String[] lines = rowAndLine[1].split(",");

for(int i = 0 ; i

reducer1

package step1;

import java.io.IOException;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

/**

* @author liyijie

* @date 2018年5月13日下午10:56:28

* @email 37024760@qq.com

* @remark

* @version

*

*

*

* 将item profile转置

*/

public class Reducer1 extends Reducer {

private Text outKey = new Text();

private Text outValue = new Text();

//key:列号 用户ID value:行号_值,行号_值,行号_值,行号_值... 物品ID_分值

@Override

protected void reduce(Text key, Iterable values, Context context)

throws IOException, InterruptedException {

StringBuilder sb = new StringBuilder();

//text:行号_值 物品ID_分值

for(Text text:values){

sb.append(text).append(",");

}

String line = null;

if(sb.toString().endsWith(",")){

line = sb.substring(0, sb.length()-1);

}

outKey.set(key);

outValue.set(line);

context.write(outKey,outValue);

}

}

mr1

package step1;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* @author liyijie

* @date 2018年5月13日下午11:07:13

* @email 37024760@qq.com

* @remark

* @version

*

* 将item profile转置

*/

public class MR1 {

private static String inputPath = "/content/step1_input";

private static String outputPath = "/content/step1_output";

private static String hdfs = "hdfs://node1:9000";

public int run(){

try {

Configuration conf=new Configuration();

conf.set("fs.defaultFS", hdfs);

Job job = Job.getInstance(conf,"step1");

//配置任务map和reduce类

job.setJarByClass(MR1.class);

job.setJar("F:\\eclipseworkspace\\content\\content.jar");

job.setMapperClass(Mapper1.class);

job.setReducerClass(Reducer1.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileSystem fs = FileSystem.get(conf);

Path inpath = new Path(inputPath);

if(fs.exists(inpath)){

FileInputFormat.addInputPath(job,inpath);

}else{

System.out.println(inpath);

System.out.println("不存在");

}

Path outpath = new Path(outputPath);

fs.delete(outpath,true);

FileOutputFormat.setOutputPath(job, outpath);

return job.waitForCompletion(true)?1:-1;

} catch (ClassNotFoundException | InterruptedException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

return -1;

}

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

int result = -1;

result = new MR1().run();

if(result==1){

System.out.println("step1运行成功");

}else if(result==-1){

System.out.println("step1运行失败");

}

}

}

结果

2.item user (评分矩阵) X item profile(已转置)

输入:根据用户的行为列表计算的评分矩阵

缓存:步骤1输出

输出:用户ID(行)——标签ID(列)——分值(用户对所有标签感兴趣的程度)

代码:

mapper2

package step2;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.text.DecimalFormat;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

/**

* @author liyijie

* @date 2018年5月13日下午11:43:51

* @email 37024760@qq.com

* @remark

* @version

*

*

* item user (评分矩阵) X item profile(已转置)

*/

public class Mapper2 extends Mapper {

private Text outKey = new Text();

private Text outValue = new Text();

private List cacheList = new ArrayList();

private DecimalFormat df = new DecimalFormat("0.00");

/**在map执行之前会执行这个方法,只会执行一次

*

* 通过输入流将全局缓存中的矩阵读入一个java容器中

*/

@Override

protected void setup(Context context)throws IOException, InterruptedException {

super.setup(context);

FileReader fr = new FileReader("itemUserScore1");

BufferedReader br = new BufferedReader(fr);

//右矩阵

//key:行号 物品ID value:列号_值,列号_值,列号_值,列号_值,列号_值... 用户ID_分值

String line = null;

while((line=br.readLine())!=null){

cacheList.add(line);

}

fr.close();

br.close();

}

/**

* key: 行号 物品ID

* value:行 列_值,列_值,列_值,列_值 用户ID_分值

* */

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] rowAndLine_matrix1 = value.toString().split("\t");

//矩阵行号

String row_matrix1 = rowAndLine_matrix1[0];

//列_值

String[] cloumn_value_array_matrix1 = rowAndLine_matrix1[1].split(",");

for(String line:cacheList){

String[] rowAndLine_matrix2 = line.toString().split("\t");

//右侧矩阵line

//格式: 列 tab 行_值,行_值,行_值,行_值

String cloumn_matrix2 = rowAndLine_matrix2[0];

String[] row_value_array_matrix2 = rowAndLine_matrix2[1].split(",");

//矩阵两位相乘得到的结果

double result = 0;

//遍历左侧矩阵一行的每一列

for(String cloumn_value_matrix1:cloumn_value_array_matrix1){

String cloumn_matrix1 = cloumn_value_matrix1.split("_")[0];

String value_matrix1 = cloumn_value_matrix1.split("_")[1];

//遍历右侧矩阵一行的每一列

for(String cloumn_value_matrix2:row_value_array_matrix2){

if(cloumn_value_matrix2.startsWith(cloumn_matrix1+"_")){

String value_matrix2 = cloumn_value_matrix2.split("_")[1];

//将两列的值相乘并累加

result+= Double.valueOf(value_matrix1)*Double.valueOf(value_matrix2);

}

}

}

if(result==0){

continue;

}

//result就是结果矩阵中的某个元素,坐标 行:row_matrix1 列:row_matrix2(右侧矩阵已经被转置)

outKey.set(row_matrix1);

outValue.set(cloumn_matrix2+"_"+df.format(result));

//输出格式为 key:行 value:列_值

context.write(outKey, outValue);

}

}

}

reducer2

package step2;

import java.io.IOException;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

/**

* @author liyijie

* @date 2018年5月13日下午11:43:59

* @email 37024760@qq.com

* @remark

* @version

*

* item user (评分矩阵) X item profile(已转置)

*/

public class Reducer2 extends Reducer{

private Text outKey = new Text();

private Text outValue = new Text();

// key:行 物品ID value:列_值 用户ID_分值

@Override

protected void reduce(Text key, Iterable values, Context context)

throws IOException, InterruptedException {

StringBuilder sb = new StringBuilder();

for(Text text:values){

sb.append(text+",");

}

String line = null;

if(sb.toString().endsWith(",")){

line = sb.substring(0, sb.length()-1);

}

outKey.set(key);

outValue.set(line);

context.write(outKey,outValue);

}

}

mr2

package step2;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* @author liyijie

* @date 2018年5月13日下午11:44:07

* @email 37024760@qq.com

* @remark

* @version

*

* item user (评分矩阵) X item profile(已转置)

*/

public class MR2 {

private static String inputPath = "/content/step2_input";

private static String outputPath = "/content/step2_output";

//将step1中输出的转置矩阵作为全局缓存

private static String cache="/content/step1_output/part-r-00000";

private static String hdfs = "hdfs://node1:9000";

public int run(){

try {

Configuration conf=new Configuration();

conf.set("fs.defaultFS", hdfs);

Job job = Job.getInstance(conf,"step2");

//如果未开启,使用 FileSystem.enableSymlinks()方法来开启符号连接。

FileSystem.enableSymlinks();

//要使用符号连接,需要检查是否启用了符号连接

boolean areSymlinksEnabled = FileSystem.areSymlinksEnabled();

System.out.println(areSymlinksEnabled);

//添加分布式缓存文件

job.addCacheArchive(new URI(cache+"#itemUserScore1"));

//配置任务map和reduce类

job.setJarByClass(MR2.class);

job.setJar("F:\\eclipseworkspace\\content\\content.jar");

job.setMapperClass(Mapper2.class);

job.setReducerClass(Reducer2.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileSystem fs = FileSystem.get(conf);

Path inpath = new Path(inputPath);

if(fs.exists(inpath)){

FileInputFormat.addInputPath(job,inpath);

}else{

System.out.println(inpath);

System.out.println("不存在");

}

Path outpath = new Path(outputPath);

fs.delete(outpath,true);

FileOutputFormat.setOutputPath(job, outpath);

return job.waitForCompletion(true)?1:-1;

} catch (ClassNotFoundException | InterruptedException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} catch (URISyntaxException e) {

e.printStackTrace();

}

return -1;

}

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

int result = -1;

result = new MR2().run();

if(result==1){

System.out.println("step2运行成功");

}else if(result==-1){

System.out.println("step2运行失败");

}

}

}

结果

3.cos<步骤1输入,步骤2输出>

输入:步骤1输入 物品特征建模

缓存:步骤2输出

输出:用户ID(行)——物品ID(列)——相似度

代码:

mapper3

package step3;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.text.DecimalFormat;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

/**

* @author liyijie

* @date 2018年5月13日下午11:43:51

* @email 37024760@qq.com

* @remark

* @version

*

*

* cos<步骤1输入,步骤2输出>

*/

public class Mapper3 extends Mapper {

private Text outKey = new Text();

private Text outValue = new Text();

private List cacheList = new ArrayList();

// 右矩阵列值 下标右行 右值

//private Map cacheMap = new HashMap<>();

private DecimalFormat df = new DecimalFormat("0.00");

/**在map执行之前会执行这个方法,只会执行一次

*

* 通过输入流将全局缓存中的矩阵读入一个java容器中

*/

@Override

protected void setup(Context context)throws IOException, InterruptedException {

super.setup(context);

FileReader fr = new FileReader("itemUserScore2");

BufferedReader br = new BufferedReader(fr);

//右矩阵

//key:行号 物品ID value:列号_值,列号_值,列号_值,列号_值,列号_值... 用户ID_分值

String line = null;

while((line=br.readLine())!=null){

cacheList.add(line);

}

fr.close();

br.close();

}

/**

* key: 行号 物品ID

* value:行 列_值,列_值,列_值,列_值 用户ID_分值

* */

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] rowAndLine_matrix1 = value.toString().split("\t");

//矩阵行号

String row_matrix1 = rowAndLine_matrix1[0];

//列_值

String[] cloumn_value_array_matrix1 = rowAndLine_matrix1[1].split(",");

//计算左侧矩阵行的空间距离

double denominator1 = 0;

for(String column_value:cloumn_value_array_matrix1){

String score = column_value.split("_")[1];

denominator1 += Double.valueOf(score)*Double.valueOf(score);

}

denominator1 = Math.sqrt(denominator1);

for(String line:cacheList){

String[] rowAndLine_matrix2 = line.toString().split("\t");

//右侧矩阵line

//格式: 列 tab 行_值,行_值,行_值,行_值

String cloumn_matrix2 = rowAndLine_matrix2[0];

String[] row_value_array_matrix2 = rowAndLine_matrix2[1].split(",");

//计算右侧矩阵行的空间距离

double denominator2 = 0;

for(String column_value:row_value_array_matrix2){

String score = column_value.split("_")[1];

denominator2 += Double.valueOf(score)*Double.valueOf(score);

}

denominator2 = Math.sqrt(denominator2);

//矩阵两位相乘得到的结果 分子

double numerator = 0;

//遍历左侧矩阵一行的每一列

for(String cloumn_value_matrix1:cloumn_value_array_matrix1){

String cloumn_matrix1 = cloumn_value_matrix1.split("_")[0];

String value_matrix1 = cloumn_value_matrix1.split("_")[1];

//遍历右侧矩阵一行的每一列

for(String cloumn_value_matrix2:row_value_array_matrix2){

if(cloumn_value_matrix2.startsWith(cloumn_matrix1+"_")){

String value_matrix2 = cloumn_value_matrix2.split("_")[1];

//将两列的值相乘并累加

numerator+= Double.valueOf(value_matrix1)*Double.valueOf(value_matrix2);

}

}

}

double cos = numerator/(denominator1*denominator2);

if(cos == 0){

continue;

}

//cos就是结果矩阵中的某个元素,坐标 行:row_matrix1 列:row_matrix2(右侧矩阵已经被转置)

outKey.set(cloumn_matrix2);

outValue.set(row_matrix1+"_"+df.format(cos));

//输出格式为 key:行 value:列_值

context.write(outKey, outValue);

}

}

}

reducer3

package step3;

import java.io.IOException;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

/**

* @author liyijie

* @date 2018年5月13日下午11:43:59

* @email 37024760@qq.com

* @remark

* @version

*

* cos<步骤1输入,步骤2输出>

*/

public class Reducer3 extends Reducer{

private Text outKey = new Text();

private Text outValue = new Text();

// key:行 物品ID value:列_值 用户ID_分值

@Override

protected void reduce(Text key, Iterable values, Context context)

throws IOException, InterruptedException {

StringBuilder sb = new StringBuilder();

for(Text text:values){

sb.append(text+",");

}

String line = null;

if(sb.toString().endsWith(",")){

line = sb.substring(0, sb.length()-1);

}

outKey.set(key);

outValue.set(line);

context.write(outKey,outValue);

}

}

mr3

package step3;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* @author liyijie

* @date 2018年5月13日下午11:44:07

* @email 37024760@qq.com

* @remark

* @version

*

* cos<步骤1输入,步骤2输出>

*/

public class MR3 {

private static String inputPath = "/content/step1_input";

private static String outputPath = "/content/step3_output";

//将step1中输出的转置矩阵作为全局缓存

private static String cache="/content/step2_output/part-r-00000";

private static String hdfs = "hdfs://node1:9000";

public int run(){

try {

Configuration conf=new Configuration();

conf.set("fs.defaultFS", hdfs);

Job job = Job.getInstance(conf,"step3");

//如果未开启,使用 FileSystem.enableSymlinks()方法来开启符号连接。

FileSystem.enableSymlinks();

//要使用符号连接,需要检查是否启用了符号连接

boolean areSymlinksEnabled = FileSystem.areSymlinksEnabled();

System.out.println(areSymlinksEnabled);

//添加分布式缓存文件

job.addCacheArchive(new URI(cache+"#itemUserScore2"));

//配置任务map和reduce类

job.setJarByClass(MR3.class);

job.setJar("F:\\eclipseworkspace\\content\\content.jar");

job.setMapperClass(Mapper3.class);

job.setReducerClass(Reducer3.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileSystem fs = FileSystem.get(conf);

Path inpath = new Path(inputPath);

if(fs.exists(inpath)){

FileInputFormat.addInputPath(job,inpath);

}else{

System.out.println(inpath);

System.out.println("不存在");

}

Path outpath = new Path(outputPath);

fs.delete(outpath,true);

FileOutputFormat.setOutputPath(job, outpath);

return job.waitForCompletion(true)?1:-1;

} catch (ClassNotFoundException | InterruptedException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} catch (URISyntaxException e) {

e.printStackTrace();

}

return -1;

}

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

int result = -1;

result = new MR3().run();

if(result==1){

System.out.println("step3运行成功");

}else if(result==-1){

System.out.println("step3运行失败");

}

}

}

结果