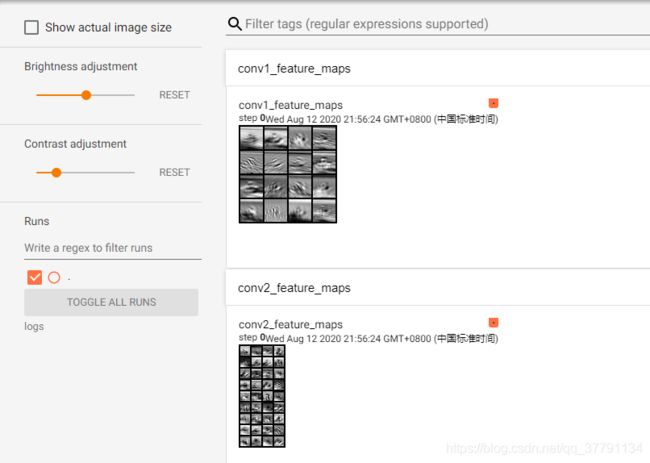

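[PyTorch] Visualizing feature maps with tensorboardX

This post uses tensorboardX to visualize feature maps. The feature maps produced by different convolutional layers capture features at different levels of abstraction.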

    # Visualize feature maps with tensorboardX
    # (assumes `trainloader`, `device`, and `net` are defined earlier)
    import torchvision.utils as vutils
    from tensorboardX import SummaryWriter

    writer = SummaryWriter(log_dir='logs', comment='feature map')

    for i, data in enumerate(trainloader, 0):
        # Fetch one batch of training data
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        x = inputs[0].unsqueeze(0)  # this is where x comes from: the first sample, kept as a batch of one
        break

    img_grid = vutils.make_grid(x, normalize=True, scale_each=True, nrow=2)

    net.eval()
    for name, layer in net._modules.items():
        # Flatten x before feeding it to a fully connected layer
        x = x.view(x.size(0), -1) if 'fc' in name else x
        print(x.size())
        x = layer(x)
        print(f'{name}')

        # Inspect the feature maps of the convolutional layers
        if 'layer' in name or 'conv' in name:
            x1 = x.transpose(0, 1)  # C,B, H, W ---> B,C, H, W
            img_grid = vutils.make_grid(x1, normalize=True, scale_each=True, nrow=4)
            writer.add_image(f'{name}_feature_maps', img_grid, global_step=0)

    writer.close()

What I don't quite understand here is:

    x1 = x.transpose(0, 1)  # C,B, H, W ---> B,C, H, W

Why is this transpose needed?

I ask because the printed output is:

    torch.Size([1, 3, 32, 32])
    conv1
    torch.Size([1, 16, 28, 28])
    pool1
    torch.Size([1, 16, 14, 14])
    conv2
    torch.Size([1, 36, 12, 12])
    pool2
    torch.Size([1, 1296])
    fc1
    torch.Size([1, 128])
    fc2

So this is odd: the tensor is already in B, C, H, W order, so why transpose it at all?
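
One possible explanation, sketched here under stated assumptions: `x` really is in B, C, H, W order, and the transpose is there for `make_grid`, not for the network. As far as I can tell, `make_grid` tiles images along the first dimension, so swapping B and C turns one 16-channel sample into 16 single-channel images, laid out 4 per row. Read this way, the arrow in the inline comment points the wrong way: the swap goes from B, C, H, W to C, B, H, W. The helpers `make_grid_shape` and `swap_batch_and_channel` below are hypothetical. They only mimic the shape bookkeeping of `torchvision.utils.make_grid` with its default `padding=2` and are not part of any library.

```python
import math


def make_grid_shape(shape, nrow=8, padding=2):
    """Hypothetical helper: output shape of make_grid for a 4D input shape.

    Mirrors only the shape logic of torchvision.utils.make_grid
    (assumption: default padding of 2 pixels); no pixel data is involved.
    """
    b, c, h, w = shape
    if c == 1:
        # Single-channel images are replicated to 3 channels.
        c = 3
    if b == 1:
        # A "batch" of one image is returned as-is, without tiling.
        return (c, h, w)
    xmaps = min(nrow, b)          # images per row
    ymaps = math.ceil(b / xmaps)  # number of rows
    return (c, (h + padding) * ymaps + padding, (w + padding) * xmaps + padding)


def swap_batch_and_channel(shape):
    """Hypothetical helper: shape after x.transpose(0, 1)."""
    b, c, h, w = shape
    return (c, b, h, w)


conv1_out = (1, 16, 28, 28)  # shape printed after conv1 in the log above

# Without the transpose: one image with 16 channels, no grid at all.
no_transpose = make_grid_shape(conv1_out, nrow=4)

# With the transpose: 16 one-channel images tiled into a 4 x 4 grid.
with_transpose = make_grid_shape(swap_batch_and_channel(conv1_out), nrow=4)

print(no_transpose)    # (16, 28, 28)
print(with_transpose)  # (3, 122, 122)
```

Without the transpose, the result is a single 16-channel tensor, which, as far as I know, `add_image` cannot render as a picture. With it, the result is an ordinary 3-channel grid in which each tile is one feature map.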