Setting Up ELK Log Analysis on CentOS 7

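ELK consists of three components: Elasticsearch, Logstash, and Kibana.

Elasticsearch is an open-source distributed search engine. Its features include distribution, zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and parses your logs and stores them for later use.

Kibana is a free, open-source tool that provides a friendly web interface for analyzing the logs supplied by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.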

Setting Up Elasticsearch

Installing Elasticsearch

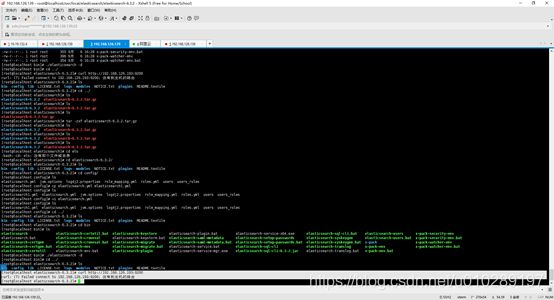

1. Download Elasticsearch

[root@localhost 20190903]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.3.2.tar.gz

2. Create the extraction directory (under /usr/local)

[root@localhost local]# mkdir elasticsearch

3. Extract elasticsearch-6.3.2.tar.gz

[root@localhost elasticsearch]# tar -xvf elasticsearch-6.3.2.tar.gz -C /usr/local/elasticsearch

4. Edit the configuration file config/elasticsearch.yml

[root@localhost config]# vi elasticsearch.yml

network.host: 192.168.126.139 # your own server IP

http.port: 9200 # port number

5. Start Elasticsearch (-d runs it in the background as a daemon)

[root@localhost bin]# ./elasticsearch -d

6. Test

[root@localhost elasticsearch-6.3.2]# curl http://192.168.126.139:9200

The following problem occurs:

It is caused by the machine not having enough memory for the default JVM heap size.

Find jvm.options in /usr/local/elasticsearch/elasticsearch-6.3.2/config:

[root@localhost config]# vi jvm.options

Change the heap settings to:

-Xms512m

-Xmx512m

The defaults are:

-Xms1g

-Xmx1g
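If you prefer to script this edit, the following is a minimal bash sketch, assuming jvm.options contains uncommented -Xms/-Xmx lines like the 6.3.2 defaults above. The function name set_es_heap is hypothetical. It keeps a backup and sets both values to the same size, since Elasticsearch expects the initial and maximum heap to be equal.

```bash
#!/usr/bin/env bash
# Sketch: set the initial (-Xms) and maximum (-Xmx) heap in an
# Elasticsearch jvm.options file to the same size.
# set_es_heap is a hypothetical helper name, not part of Elasticsearch.
set_es_heap() {
  local file="$1" size="$2"
  # Keep a copy of the original file before editing
  cp "$file" "$file.bak" || return 1
  # Rewrite only uncommented lines consisting solely of -Xms<size> / -Xmx<size>
  sed -i -E \
    -e "s/^-Xms[0-9]+[kKmMgG]?$/-Xms${size}/" \
    -e "s/^-Xmx[0-9]+[kKmMgG]?$/-Xmx${size}/" \
    "$file"
}

# Example (as root):
# set_es_heap /usr/local/elasticsearch/elasticsearch-6.3.2/config/jvm.options 512m
```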

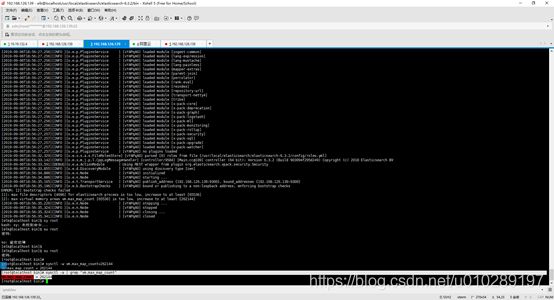

[root@localhost bin]# ./elasticsearch

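This produces the error shown above.

The cause is that Elasticsearch refuses to run as root, so you need to create a new user and give it ownership of the installation.

(1) As root, create a user group with groupadd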

[root@localhost /]# groupadd elk

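(2) Add the user elk to the elk group (-g) and set its password with passwd. (useradd -p expects an already-encrypted password, so passing a plain-text password such as elk there does not set the password to elk.)

[root@localhost /]# useradd -g elk elk

[root@localhost /]# passwd elk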

(3) Go to the Elasticsearch installation directory

[root@localhost elasticsearch]# cd /usr/local/elasticsearch

(4) Give the elk user ownership of the installation

[root@localhost elasticsearch]# chown -R elk:elk elasticsearch-6.3.2/

(5) Switch to the elk user, then start Elasticsearch from the bin directory

[root@localhost elasticsearch]# su elk

[elk@localhost bin]$ ./elasticsearch

[2019-09-06T18:56:32,329][INFO ][o.e.x.s.a.s.FileRolesStore] [vYAPqA0] parsed [0] roles from file [/usr/local/elasticsearch/elasticsearch-6.3.2/config/roles.yml]

[2019-09-06T18:56:33,142][INFO ][o.e.x.m.j.p.l.CppLogMessageHandler] [controller/9584] [Main.cc@109] controller (64 bit): Version 6.3.2 (Build 903094f295d249) Copyright (c) 2018 Elasticsearch BV

[2019-09-06T18:56:33,591][DEBUG][o.e.a.ActionModule ] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security

[2019-09-06T18:56:33,855][INFO ][o.e.d.DiscoveryModule ] [vYAPqA0] using discovery type [zen]

[2019-09-06T18:56:34,886][INFO ][o.e.n.Node ] [vYAPqA0] initialized

[2019-09-06T18:56:34,886][INFO ][o.e.n.Node ] [vYAPqA0] starting ...

[2019-09-06T18:56:35,165][INFO ][o.e.t.TransportService ] [vYAPqA0] publish_address {192.168.126.139:9300}, bound_addresses {192.168.126.139:9300}

[2019-09-06T18:56:35,196][INFO ][o.e.b.BootstrapChecks ] [vYAPqA0] bound or publishing to a non-loopback address, enforcing bootstrap checks

ERROR: [2] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

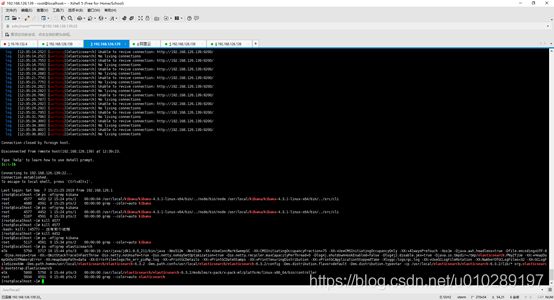

Switch to the root user

[elk@localhost bin]$ su root

Set vm.max_map_count

[root@localhost bin]# sysctl -w vm.max_map_count=262144

Check that the setting has taken effect

[root@localhost bin]# sysctl -a | grep "vm.max_map_count"
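sysctl -w only changes the value until the next reboot. A common way to make it permanent is to write it to /etc/sysctl.conf and reload with sysctl -p. Below is a minimal bash sketch of an idempotent helper (persist_sysctl is a hypothetical name): it rewrites the key if it is already present and appends it otherwise.

```bash
#!/usr/bin/env bash
# Sketch: persist a kernel parameter in a sysctl config file so it survives reboots.
# persist_sysctl is a hypothetical helper; the file defaults to /etc/sysctl.conf.
persist_sysctl() {
  local key="$1" value="$2" conf="${3:-/etc/sysctl.conf}"
  if grep -qsE "^[[:space:]]*${key}[[:space:]]*=" "$conf"; then
    # Key already present: rewrite its line in place
    sed -i -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$conf"
  else
    # Key missing (or file absent): append it
    echo "${key} = ${value}" >> "$conf"
  fi
}

# Example (as root):
# persist_sysctl vm.max_map_count 262144 && sysctl -p
```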

Starting again reports the following error:

[2019-09-06T19:05:18,204][INFO ][o.e.b.BootstrapChecks ] [vYAPqA0] bound or publishing to a non-loopback address, enforcing bootstrap checks

ERROR: [1] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

The error "max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]" comes from the operating system's per-user resource limits. As root, configure them as follows:

[root@localhost bin]# cd /etc/security/

First back up the configuration file

[root@localhost security]# cp limits.conf limits.conf.bak

Then edit limits.conf and add the following lines:

# elasticsearch config start

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

# elasticsearch config end
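To confirm the new limits before starting again, open a fresh session as elk and compare the ulimit values with the minimums from the error messages. This is a minimal bash sketch; limit_ok and check_es_limits are hypothetical names. The 4096 minimum for processes reflects the Elasticsearch 6.x bootstrap check on the number of threads, which is not among the errors shown above.

```bash
#!/usr/bin/env bash
# Sketch: check the per-user limits that Elasticsearch's bootstrap checks look at.
# limit_ok and check_es_limits are hypothetical helper names.

# Succeeds if a ulimit value (a number or "unlimited") is at least the minimum.
limit_ok() {
  local value="$1" min="$2"
  [ "$value" = "unlimited" ] && return 0
  [ "$value" -ge "$min" ]
}

# Run in a fresh session as the elk user.
check_es_limits() {
  local rc=0
  limit_ok "$(ulimit -Sn)" 65536 || { echo "soft nofile is $(ulimit -Sn), need >= 65536"; rc=1; }
  limit_ok "$(ulimit -Hn)" 65536 || { echo "hard nofile is $(ulimit -Hn), need >= 65536"; rc=1; }
  limit_ok "$(ulimit -u)" 4096 || { echo "max user processes is $(ulimit -u), need >= 4096"; rc=1; }
  return "$rc"
}

# Quick manual check from root:
# su - elk -c 'ulimit -Sn; ulimit -Hn; ulimit -u'
```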

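Start again as the elk user (limits.conf is applied when a session starts, so open a new session with su elk after saving the file):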

[elk@localhost bin]$ ./elasticsearch

[2019-09-06T19:13:14,612][INFO ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [vYAPqA0] publish_address {192.168.126.139:9200}, bound_addresses {192.168.126.139:9200}

[2019-09-06T19:13:14,612][INFO ][o.e.n.Node ] [vYAPqA0] started

6. Test and verify

[root@localhost ~]# ps -ef|grep elasticsearch

Open http://192.168.126.139:9200/ in a browser.
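When Elasticsearch is started with -d, the command returns immediately and port 9200 may take a while to answer. A minimal bash sketch that polls the URL with curl until it responds; wait_for_http is a hypothetical name, and the default of 30 attempts at 2-second intervals is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: poll an HTTP endpoint until it answers with a success status.
# wait_for_http is a hypothetical helper; retry count and interval are arbitrary.
wait_for_http() {
  local url="$1" tries="${2:-30}" i
  for ((i = 1; i <= tries; i++)); do
    # -f: treat HTTP errors as failures; -sS: quiet, but still print errors
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    # Do not sleep after the last attempt
    if [ "$i" -lt "$tries" ]; then
      sleep 2
    fi
  done
  return 1
}

# Example:
# wait_for_http http://192.168.126.139:9200 && curl http://192.168.126.139:9200
```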

Installing the elasticsearch-head Plugin

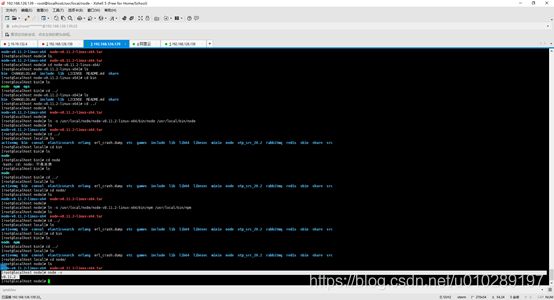

Installing the elasticsearch-head plugin first requires Node.js.

1. Download node-v8.11.2-linux-x64.tar.xz

[root@localhost node]# wget https://nodejs.org/dist/v8.11.2/node-v8.11.2-linux-x64.tar.xz

2. Extract node-v8.11.2-linux-x64.tar.xz

[root@localhost node]# xz -d node-v8.11.2-linux-x64.tar.xz

The first command leaves a .tar file, which is then unpacked:

[root@localhost node]# tar -xvf node-v8.11.2-linux-x64.tar

3. Create symbolic links

[root@localhost node]# ln -s /usr/local/node/node-v8.11.2-linux-x64/bin/node /usr/local/bin/node

[root@localhost node]# ln -s /usr/local/node/node-v8.11.2-linux-x64/bin/npm /usr/local/bin/npm

4. Verify

[root@localhost node]# node -v

If a version number is printed, Node.js has been installed successfully.

5. Install elasticsearch-head with git

[root@localhost 20190903]# yum install -y epel-release git

[root@localhost 20190903]# yum install -y npm

[root@localhost 20190903]# git clone https://github.com/mobz/elasticsearch-head.git

[root@localhost 20190903]# cd elasticsearch-head

Point npm at the Taobao registry mirror for downloading packages:

[root@localhost elasticsearch-head]# npm config set registry https://registry.npm.taobao.org

[root@localhost elasticsearch-head]# npm install

[root@localhost elasticsearch-head]# npm run start

> elasticsearch-head@0.0.0 start /usr/20190903/elasticsearch-head

> grunt server

>> Local Npm module "grunt-contrib-jasmine" not found. Is it installed?

(node:35271) ExperimentalWarning: The http2 module is an experimental API.

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

6. Verify by opening the following address in a browser:

http://192.168.126.139:9100/
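elasticsearch-head is served on port 9100 but calls Elasticsearch on port 9200 from the browser, which is a cross-origin request. The elasticsearch-head README notes that CORS has to be enabled in Elasticsearch for this to work. A minimal elasticsearch.yml addition is sketched below; allowing every origin with "*" is convenient for a test setup but too permissive for production. Restart Elasticsearch after adding it.

```yaml
# config/elasticsearch.yml: allow the elasticsearch-head UI (port 9100) to call the REST API
http.cors.enabled: true
http.cors.allow-origin: "*"
```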

Setting Up NGINX

(1) Install nginx by following https://blog.csdn.net/bbwangj/article/details/80469230

(2) Edit the configuration file nginx.conf

#user nobody;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

upstream kibana4 { # proxy requests to Kibana

server 192.168.126.139:5601 fail_timeout=0;

}

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

log_format json '{"@timestamp":"$time_iso8601",' # NGINX log format: JSON

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"agent":"$http_user_agent",'

'"status":"$status"}';

access_log /usr/local/var/log/nginx/access.log_json json; # log path, written in the JSON format

error_log /usr/local/var/log/nginx/error.log;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

server {

listen *:80;

server_name kibana_server;

access_log /usr/local/var/log/nginx/kibana.srv-log-dev.log;

error_log /usr/local/var/log/nginx/kibana.srv-log-dev.error.log;

location / {

root /var/www/kibana;

index index.html index.htm;

}

location ~ ^/kibana4/.* {

proxy_pass http://kibana4;

rewrite ^/kibana4/(.*) /$1 break;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host;

auth_basic "Restricted";

auth_basic_user_file /etc/nginx/conf.d/kibana.myhost.org.htpasswd;

}

}

}
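The /kibana4/ location turns on basic authentication through auth_basic_user_file, but the steps above never create that file. One way, assuming the openssl command is installed, is to generate an APR1 (Apache MD5) hash, a format nginx accepts in such files; htpasswd from the httpd-tools package does the same job. The helper name and the user name admin are hypothetical. The helper appends, so run it once per user.

```bash
#!/usr/bin/env bash
# Sketch: add a user to an nginx basic-auth file using an APR1 (Apache MD5) hash.
# add_basic_auth_user is a hypothetical helper; it requires the openssl command.
add_basic_auth_user() {
  local file="$1" user="$2" password="$3" hash
  hash=$(openssl passwd -apr1 "$password") || return 1
  # Each line of the file has the form user:hash
  printf '%s:%s\n' "$user" "$hash" >> "$file"
}

# Example (as root; the file path comes from the nginx config above):
# add_basic_auth_user /etc/nginx/conf.d/kibana.myhost.org.htpasswd admin 'choose-a-password'
# nginx -t && nginx -s reload
```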

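Setting Up Logstash

Installing Logstash

1. Download the installation package logstash-6.3.2.tar.gz

[root@localhost 20190903]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.3.2.tar.gz

2. Extract logstash-6.3.2.tar.gz (tar -C needs an existing directory, so create /usr/local/logstash first if necessary)

[root@localhost 20190903]# tar -zxvf logstash-6.3.2.tar.gz -C /usr/local/logstash/

3. Go to the bin directory of the extracted package

[root@localhost 20190903]# cd /usr/local/logstash/logstash-6.3.2/bin

4. Write the configuration file

This file does not exist yet, so create it.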

[root@localhost bin]# vim stdin.conf

input{

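file {

path => "/usr/local/var/log/nginx/access.log_json" # NGINX log path (JSON format)

codec => "json" # decode each line as JSON

type => "nginx_access" # hypothetical example value; without it, %{type} in the output is not substituted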

}

}

filter {

mutate {

split => ["upstreamtime", ","]

}

mutate {

convert => ["upstreamtime", "float"]

}

}

output{

elasticsearch {

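hosts => ["192.168.0.209:9200"] # Elasticsearch address, replace with your own

index => "logstash-%{type}-%{+YYYY.MM.dd}" # index name

document_type => "%{type}"

# workers, flush_size and idle_flush_time are obsolete in the Logstash 6.x elasticsearch output and may stop the pipeline from loading, so they are commented out here

# workers => 1

# flush_size => 20000 # events per bulk request (older default: 500)

# idle_flush_time => 10 # seconds before a partial batch is sent (older default: 1)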

template_overwrite => true

}

}
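Because the file input decodes every line with the json codec, a line that is not valid JSON (for example, a User-Agent containing a double quote, which the log_format above does not escape) is not split into fields, and Logstash tags the event with _jsonparsefailure. A quick way to spot such lines is to validate the tail of the log. This is a minimal bash sketch, assuming a Python interpreter is available for its json.tool module (python3, or python on stock CentOS 7); check_json_log is a hypothetical name. On nginx 1.11.8 and later, adding escape=json to log_format makes nginx escape such characters itself.

```bash
#!/usr/bin/env bash
# Sketch: check that the last N lines of a one-JSON-object-per-line log parse as JSON.
# check_json_log is a hypothetical helper; it relies on Python's json.tool module.
check_json_log() {
  local log="$1" n="${2:-20}" py line
  py=$(command -v python3 || command -v python) || { echo "no python found"; return 2; }
  while IFS= read -r line; do
    # Skip blank lines
    if [ -z "$line" ]; then
      continue
    fi
    if ! printf '%s\n' "$line" | "$py" -m json.tool > /dev/null 2>&1; then
      echo "invalid JSON: $line"
      return 1
    fi
  done < <(tail -n "$n" "$log")
  return 0
}

# Example:
# check_json_log /usr/local/var/log/nginx/access.log_json 50
```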

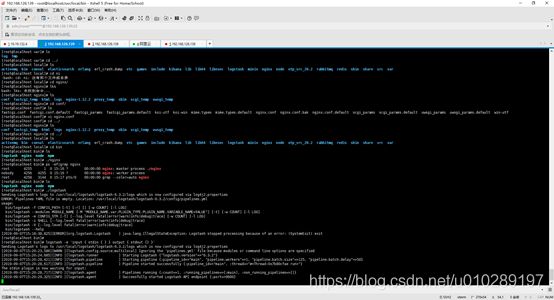

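5. Start Logstash

[root@localhost bin]# ./logstash -f stdin.conf

When the startup output looks like the screenshot, Logstash has started successfully.

6. Create a symbolic link so you do not have to type the installation path every time (Logstash is installed under /usr/local here)

[root@localhost bin]# ln -s /usr/local/logstash/logstash-6.3.2/bin/logstash /usr/local/bin/

Run Logstash with an inline configuration that reads from standard input and prints to standard output:

[root@localhost bin]# logstash -e 'input { stdin { } } output { stdout {} }'

7. Check whether Logstash is running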

[root@localhost ~]# ps -ef|grep logstash

Setting Up Kibana

Installing Kibana

1. Download the installation package kibana-6.3.2-linux-x86_64.tar.gz

[root@localhost 20190903]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.3.2-linux-x86_64.tar.gz

2. Extract kibana-6.3.2-linux-x86_64.tar.gz (create /usr/local/kibana first if it does not exist)

[root@localhost 20190903]# tar -zxvf kibana-6.3.2-linux-x86_64.tar.gz -C /usr/local/kibana/

3. Go to the extracted directory

[root@localhost 20190903]# cd /usr/local/kibana/kibana-6.3.2-linux-x86_64/

4. Edit the configuration file kibana.yml

[root@localhost kibana-6.3.2-linux-x86_64]# cd config/

[root@localhost config]# vi kibana.yml

Set the Elasticsearch address to your own Elasticsearch IP.

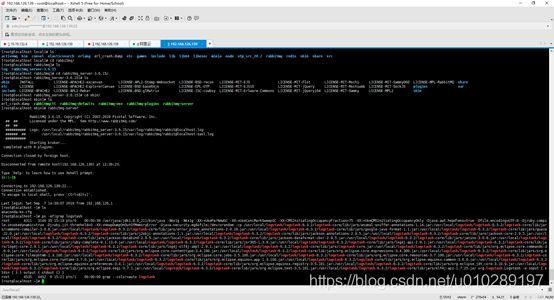
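For Kibana 6.3, the relevant kibana.yml settings look roughly like this. The IP addresses are the ones used elsewhere in this walkthrough, so substitute your own. server.host defaults to localhost, so it has to be changed before a browser on another machine can reach port 5601. Later Kibana versions renamed elasticsearch.url to elasticsearch.hosts.

```yaml
# config/kibana.yml (Kibana 6.3.x)
server.port: 5601
server.host: "192.168.126.139"
elasticsearch.url: "http://192.168.126.139:9200"
```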

5. Start Kibana

[root@localhost kibana-6.3.2-linux-x86_64]# cd bin

Start it in the background

[root@localhost bin]# ./kibana &

6. Verify

[root@localhost bin]# ps -ef|grep kibana

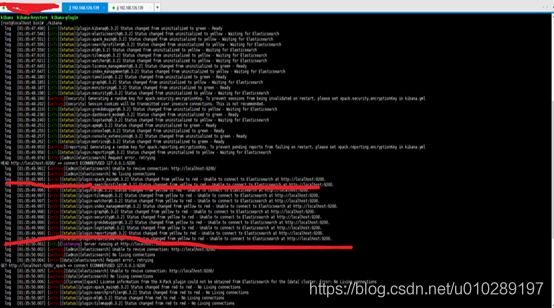

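The problem shown in the screenshot above is mainly caused by mismatched Elasticsearch and Kibana versions.

After Kibana starts, the problem shown in the following figure may also appear:

To fix it, edit Kibana's configuration file (/usr/local/kibana/kibana-6.3.2-linux-x86_64/config/kibana.yml) and make sure Elasticsearch is started first.

Change the part marked in red in the figure above to your own Elasticsearch host address.

Open in a browser:

http://192.168.126.139:5601/

Verifying ELK Log Analysis

In Kibana, define an index pattern (for example logstash-*) and click Next step.

Create the index pattern, then click Discover.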