Writing Log4j logs to Kafka in real time with Java in IDEA (real-time log writing to Kafka)

This article shows how to create a web project in IDEA on Windows and write Log4j logs to Kafka in real time.

Overview of the steps:

① Create a web project

② Start ZooKeeper

③ Start Kafka

④ Create the topic

⑤ Start a Kafka consumer, run the project, and watch the consumer's console

Detailed steps:

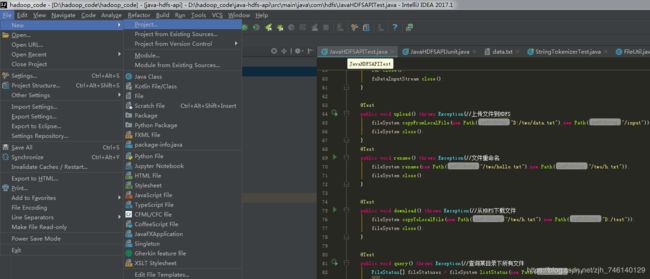

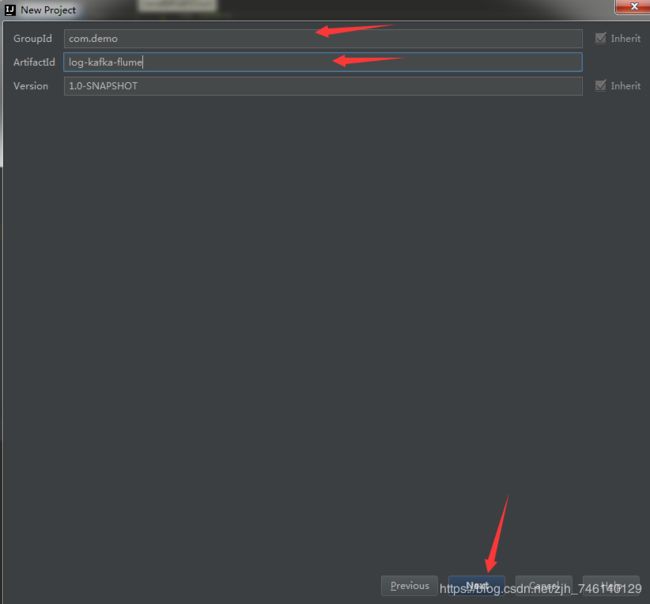

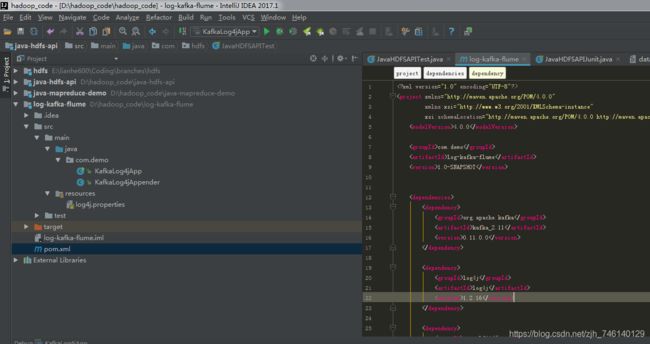

I. Create a web project

The code is as follows:

```java
package com.demo;

import org.apache.kafka.clients.CommonClientConfigs;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.apache.kafka.common.config.ConfigException;
import org.apache.kafka.common.config.SslConfigs;
import org.apache.log4j.AppenderSkeleton;
import org.apache.log4j.helpers.LogLog;
import org.apache.log4j.spi.LoggingEvent;

import java.nio.charset.StandardCharsets;
import java.util.Date;
import java.util.Properties;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

/**
 * A log4j appender that produces log messages to Kafka.
 */
public class KafkaLog4jAppender extends AppenderSkeleton {

    // Kafka client configuration keys
    private static final String BOOTSTRAP_SERVERS_CONFIG = ProducerConfig.BOOTSTRAP_SERVERS_CONFIG;
    private static final String COMPRESSION_TYPE_CONFIG = ProducerConfig.COMPRESSION_TYPE_CONFIG;
    private static final String ACKS_CONFIG = ProducerConfig.ACKS_CONFIG;
    private static final String RETRIES_CONFIG = ProducerConfig.RETRIES_CONFIG;
    private static final String KEY_SERIALIZER_CLASS_CONFIG = ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG;
    private static final String VALUE_SERIALIZER_CLASS_CONFIG = ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG;
    private static final String SECURITY_PROTOCOL = CommonClientConfigs.SECURITY_PROTOCOL_CONFIG;
    private static final String SSL_TRUSTSTORE_LOCATION = SslConfigs.SSL_TRUSTSTORE_LOCATION_CONFIG;
    private static final String SSL_TRUSTSTORE_PASSWORD = SslConfigs.SSL_TRUSTSTORE_PASSWORD_CONFIG;
    private static final String SSL_KEYSTORE_TYPE = SslConfigs.SSL_KEYSTORE_TYPE_CONFIG;
    private static final String SSL_KEYSTORE_LOCATION = SslConfigs.SSL_KEYSTORE_LOCATION_CONFIG;
    private static final String SSL_KEYSTORE_PASSWORD = SslConfigs.SSL_KEYSTORE_PASSWORD_CONFIG;

    // Appender options, filled in by log4j from log4j.appender.<name>.<option> entries
    private String brokerList = null;
    private String topic = null;
    private String compressionType = null;
    private String securityProtocol = null;
    private String sslTruststoreLocation = null;
    private String sslTruststorePassword = null;
    private String sslKeystoreType = null;
    private String sslKeystoreLocation = null;
    private String sslKeystorePassword = null;
    private int retries = 0;
    private int requiredNumAcks = Integer.MAX_VALUE;
    private boolean syncSend = false;

    private Producer<byte[], byte[]> producer = null;

    public Producer<byte[], byte[]> getProducer() { return producer; }

    public String getBrokerList() { return brokerList; }
    public void setBrokerList(String brokerList) { this.brokerList = brokerList; }

    public int getRequiredNumAcks() { return requiredNumAcks; }
    public void setRequiredNumAcks(int requiredNumAcks) { this.requiredNumAcks = requiredNumAcks; }

    public int getRetries() { return retries; }
    public void setRetries(int retries) { this.retries = retries; }

    public String getCompressionType() { return compressionType; }
    public void setCompressionType(String compressionType) { this.compressionType = compressionType; }

    public String getTopic() { return topic; }
    public void setTopic(String topic) { this.topic = topic; }

    public boolean getSyncSend() { return syncSend; }
    public void setSyncSend(boolean syncSend) { this.syncSend = syncSend; }

    public String getSecurityProtocol() { return securityProtocol; }
    public void setSecurityProtocol(String securityProtocol) { this.securityProtocol = securityProtocol; }

    public String getSslTruststoreLocation() { return sslTruststoreLocation; }
    public void setSslTruststoreLocation(String sslTruststoreLocation) { this.sslTruststoreLocation = sslTruststoreLocation; }

    public String getSslTruststorePassword() { return sslTruststorePassword; }
    public void setSslTruststorePassword(String sslTruststorePassword) { this.sslTruststorePassword = sslTruststorePassword; }

    public String getSslKeystoreType() { return sslKeystoreType; }
    public void setSslKeystoreType(String sslKeystoreType) { this.sslKeystoreType = sslKeystoreType; }

    public String getSslKeystoreLocation() { return sslKeystoreLocation; }
    public void setSslKeystoreLocation(String sslKeystoreLocation) { this.sslKeystoreLocation = sslKeystoreLocation; }

    public String getSslKeystorePassword() { return sslKeystorePassword; }
    public void setSslKeystorePassword(String sslKeystorePassword) { this.sslKeystorePassword = sslKeystorePassword; }

    @Override
    public void activateOptions() {
        // Check the config parameters and translate them into producer properties
        Properties props = new Properties();
        if (brokerList != null)
            props.put(BOOTSTRAP_SERVERS_CONFIG, brokerList);
        if (props.isEmpty())
            throw new ConfigException("The bootstrap servers property should be specified");
        if (topic == null)
            throw new ConfigException("Topic must be specified by the Kafka log4j appender");
        if (compressionType != null)
            props.put(COMPRESSION_TYPE_CONFIG, compressionType);
        if (requiredNumAcks != Integer.MAX_VALUE)
            props.put(ACKS_CONFIG, Integer.toString(requiredNumAcks));
        if (retries > 0)
            props.put(RETRIES_CONFIG, retries);
        // SSL is configured only when protocol, truststore location and truststore password are all set
        if (securityProtocol != null && sslTruststoreLocation != null && sslTruststorePassword != null) {
            props.put(SECURITY_PROTOCOL, securityProtocol);
            props.put(SSL_TRUSTSTORE_LOCATION, sslTruststoreLocation);
            props.put(SSL_TRUSTSTORE_PASSWORD, sslTruststorePassword);
            // A client keystore is optional and added only when fully specified
            if (sslKeystoreType != null && sslKeystoreLocation != null && sslKeystorePassword != null) {
                props.put(SSL_KEYSTORE_TYPE, sslKeystoreType);
                props.put(SSL_KEYSTORE_LOCATION, sslKeystoreLocation);
                props.put(SSL_KEYSTORE_PASSWORD, sslKeystorePassword);
            }
        }
        // Keys and values are sent as raw bytes
        props.put(KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.ByteArraySerializer");
        props.put(VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.ByteArraySerializer");
        this.producer = getKafkaProducer(props);
        LogLog.debug("Kafka producer connected to " + brokerList);
        LogLog.debug("Logging for topic: " + topic);
    }

    protected Producer<byte[], byte[]> getKafkaProducer(Properties props) {
        return new KafkaProducer<byte[], byte[]>(props);
    }

    @Override
    protected void append(LoggingEvent event) {
        String message = subAppend(event);
        LogLog.debug("[" + new Date(event.getTimeStamp()) + "]" + message);
        // Encode explicitly as UTF-8 instead of relying on the platform default charset
        Future<RecordMetadata> response = producer.send(
                new ProducerRecord<byte[], byte[]>(topic, message.getBytes(StandardCharsets.UTF_8)));
        if (syncSend) {
            // Block until the broker acknowledges the record
            try {
                response.get();
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
                throw new RuntimeException(ex);
            } catch (ExecutionException ex) {
                throw new RuntimeException(ex);
            }
        }
    }

    // Format the event with the configured layout, or fall back to the bare message
    private String subAppend(LoggingEvent event) {
        return (this.layout == null) ? event.getRenderedMessage() : this.layout.format(event);
    }

    @Override
    public synchronized void close() {
        if (!this.closed) {
            this.closed = true;
            // The producer is null if activateOptions() never completed
            if (producer != null) {
                producer.close();
            }
        }
    }

    @Override
    public boolean requiresLayout() {
        return true;
    }
}
```

The following class simulates log output:

```java
package com.demo;

import org.apache.log4j.Logger;

/**
 * Simulates log output.
 * Created by zhoujh on 2018/8/9.
 */
public class KafkaLog4jApp {

    private static final Logger logger = Logger.getLogger(KafkaLog4jApp.class.getName());

    public static void main(String[] args) throws Exception {
        int index = 0;
        // Emit one INFO line per second to every appender attached to the root logger
        while (true) {
            Thread.sleep(1000);
            logger.info("value is: " + index++);
        }
    }
}
```
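The appender exposes getters and setters (`setBrokerList`, `setTopic`, `setSyncSend`, ...) because log4j 1.x configures an appender by treating every `log4j.appender.kafka.<option>` entry as a JavaBean property and calling the matching setter. The block below is a simplified, stdlib-only imitation of that mechanism, not log4j's actual code. `DemoAppender` and `applyProperties` are hypothetical names, and only `String`, `int` and `boolean` properties are handled.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.Properties;

public class BeanPropertySketch {

    /** Hypothetical stand-in for KafkaLog4jAppender: same property names, no Kafka/log4j dependency. */
    public static class DemoAppender {
        private String brokerList;
        private String topic;
        private int retries;
        private boolean syncSend;

        public String getBrokerList() { return brokerList; }
        public void setBrokerList(String brokerList) { this.brokerList = brokerList; }
        public String getTopic() { return topic; }
        public void setTopic(String topic) { this.topic = topic; }
        public int getRetries() { return retries; }
        public void setRetries(int retries) { this.retries = retries; }
        public boolean getSyncSend() { return syncSend; }
        public void setSyncSend(boolean syncSend) { this.syncSend = syncSend; }
    }

    /**
     * For every bean property "name" that has a setter, looks up prefix + name in props,
     * converts the string to the setter's parameter type and invokes the setter.
     */
    public static void applyProperties(Object bean, Properties props, String prefix) {
        try {
            BeanInfo info = Introspector.getBeanInfo(bean.getClass());
            for (PropertyDescriptor pd : info.getPropertyDescriptors()) {
                Method setter = pd.getWriteMethod();
                String raw = props.getProperty(prefix + pd.getName());
                if (setter == null || raw == null) {
                    continue; // read-only property (e.g. "class") or key not configured
                }
                String value = raw.trim(); // Properties.load keeps trailing spaces in values
                Class<?> type = pd.getPropertyType();
                if (type == String.class) {
                    setter.invoke(bean, value);
                } else if (type == int.class) {
                    setter.invoke(bean, Integer.parseInt(value));
                } else if (type == boolean.class) {
                    setter.invoke(bean, Boolean.parseBoolean(value));
                }
                // Other property types are ignored in this sketch
            }
        } catch (IntrospectionException | ReflectiveOperationException e) {
            throw new IllegalArgumentException("Cannot configure " + bean.getClass().getName(), e);
        }
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("log4j.appender.kafka.topic", "log4jtest");
        props.setProperty("log4j.appender.kafka.brokerList", "node1:9092");

        DemoAppender appender = new DemoAppender();
        applyProperties(appender, props, "log4j.appender.kafka.");
        System.out.println(appender.getTopic() + " -> " + appender.getBrokerList());
    }
}
```

Any key without a matching setter is ignored here. This is why a misspelled option name in the configuration leaves the corresponding field at its default value.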

`log4j.properties`:

```properties
log4j.rootLogger=INFO,stdout,kafka

log4j.appender.stdout = org.apache.log4j.ConsoleAppender
log4j.appender.stdout.target = System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n

log4j.appender.kafka = com.demo.KafkaLog4jAppender
log4j.appender.kafka.topic = log4jtest
log4j.appender.kafka.brokerList=node1:9092
log4j.appender.kafka.layout=org.apache.log4j.PatternLayout
log4j.appender.kafka.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n
```
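The kafka appender's `ConversionPattern` determines the exact text of each Kafka message. The pattern uses `%d{...}` for the timestamp, `%t` for the thread, `%c` for the logger name, `%p` for the level, `%m` for the message and `%n` for the line separator. Below is a hand-written, stdlib-only sketch of what that pattern produces for a single event. It is not log4j's `PatternLayout` implementation. `PatternSketch.format` is a hypothetical helper, and the time zone is passed in explicitly so the result is deterministic.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

public class PatternSketch {

    // Hand-written equivalent of
    //   %d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n
    // for one event; PatternLayout parses the pattern generically instead.
    public static String format(long timestampMillis, TimeZone zone, String thread,
                                String loggerName, String level, String message) {
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss,SSS"); // %d{...}
        df.setTimeZone(zone);
        return df.format(new Date(timestampMillis))
                + " [" + thread + "]"      // %t
                + " [" + loggerName + "]"  // %c
                + " [" + level + "]"       // %p
                + " - " + message          // %m
                + System.lineSeparator();  // %n
    }

    public static void main(String[] args) {
        // Current time in the JVM's default time zone
        System.out.print(format(System.currentTimeMillis(), TimeZone.getDefault(),
                Thread.currentThread().getName(), "com.demo.KafkaLog4jApp", "INFO", "value is: 0"));
    }
}
```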

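Complete `pom.xml`:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.demo</groupId>
    <artifactId>log-kafka-flume</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka_2.11</artifactId>
            <version>0.11.0.0</version>
        </dependency>
        <dependency>
            <groupId>log4j</groupId>
            <artifactId>log4j</artifactId>
            <version>1.2.16</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.21</version>
            <scope>test</scope>
        </dependency>
    </dependencies>
</project>
```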

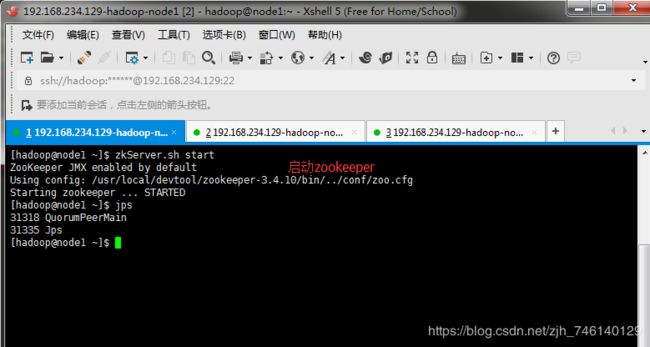

II. Start ZooKeeper

```shell
zkServer.sh start
```

III. Start Kafka

From the Kafka installation directory (the parent of `bin`), run:

```shell
bin/kafka-server-start.sh config/server.properties
```
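The appender sends to `node1:9092`, so the machine that runs the project must be able to resolve `node1` and reach port 9092 on it. The `kafka-topics.sh` command in the next step expects ZooKeeper on `localhost:2181`. If no messages arrive, one possible first check is a plain TCP connection test. The block below is a minimal stdlib sketch of that check. `PortCheck.isReachable` is a hypothetical helper, and the 2-second timeout is an arbitrary choice.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    public static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host name could not be resolved
        }
    }

    public static void main(String[] args) {
        // Hosts and ports used in this article: ZooKeeper and the broker configured in log4j.properties
        String[][] targets = { {"localhost", "2181"}, {"node1", "9092"} };
        for (String[] t : targets) {
            boolean ok = isReachable(t[0], Integer.parseInt(t[1]), 2000);
            System.out.println(t[0] + ":" + t[1] + (ok ? " reachable" : " NOT reachable"));
        }
    }
}
```

A successful connection only shows that something is listening on the port. It does not confirm that the listener is a working ZooKeeper or Kafka broker.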

IV. Create the topic

From the Kafka `bin` directory, run:

```shell
./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic log4jtest
```

V. Start a Kafka consumer, run the project, and watch the consumer's console

```shell
./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic log4jtest
```

Console output:

```
[hadoop@node1 bin]$ ./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic log4jtest
2018-08-09 17:35:13,271 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 0
2018-08-09 17:35:14,406 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 1
2018-08-09 17:35:15,406 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 2
2018-08-09 17:35:16,406 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 3
2018-08-09 17:35:17,406 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 4
2018-08-09 17:35:18,407 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 5
2018-08-09 17:35:19,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 6
2018-08-09 17:35:20,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 7
2018-08-09 17:35:21,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 8
2018-08-09 17:35:22,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 9
2018-08-09 17:35:23,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 10
2018-08-09 17:35:24,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 11
2018-08-09 17:35:25,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 12
2018-08-09 17:35:26,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 13
2018-08-09 17:35:27,497 [main] [com.demo.KafkaLog4jApp] [INFO] - value is: 14
```

Program screenshot: