Python crawler: scrape as many English articles as possible from a website, remove stop words, and list the high-frequency words ranked 300 to 600

Seed link to crawl:

http://www.chinadaily.com.cn/a/201804/14/WS5ad15641a3105cdcf6518417.html

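Starting from this page, the crawler saves the English article it contains, then follows the URLs in the page's `<a>` tags to further English articles and repeats the process on each of them. Iterating like this retrieves nearly all English articles on the site (more precisely, all articles reachable by following article links from the seed page).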
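The "follow the links, then follow their links" idea is a graph traversal. The sketch below shows it as a breadth-first search over a toy link graph instead of real pages. `crawl_bfs`, `get_links` and `max_pages` are hypothetical names; in a real crawler `get_links` would download and parse a page, and the `max_pages` cap is an added assumption that keeps a run bounded.

```python
from collections import deque


def crawl_bfs(seed, get_links, max_pages=100):
    """Breadth-first traversal: visit seed, then the pages it links to, then theirs, ...

    get_links(url) is a hypothetical hook returning the URLs found on that page.
    """
    visited = []           # pages in the order they were visited
    seen = {seed}          # every URL ever queued, so nothing is queued twice
    queue = deque([seed])
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Toy link graph standing in for the website
site = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["d"],
    "d": [],
}
print(crawl_bfs("a", lambda u: site.get(u, [])))  # ['a', 'b', 'c', 'd']
```

The `seen` set is what stops the crawl from looping forever on pages that link back to each other (here `b` links back to `a`).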

```python
import os
import re
import time

import requests
from bs4 import BeautifulSoup

OUTPUT_DIR = "demo-dir"
visited_urls = set()


def get_raw_html(url):
    """Download url once and return the parsed page, or None if visited, failed, or not 200."""
    if url in visited_urls:
        return None
    visited_urls.add(url)
    time.sleep(2)  # wait 2 seconds between requests to avoid hammering the server
    try:
        response = requests.get(url, timeout=10)
    except requests.RequestException as exc:
        print("url:", url, "failed:", exc)
        return None
    if response.status_code == 200:
        print("url:", url, "ok")
        return BeautifulSoup(response.text, "html.parser")
    return None


def extract_urls(raw_html):
    """Collect the hrefs of <a> tags that point to China Daily article pages."""
    result = set()
    for a in raw_html.find_all('a', href=True):
        # prefix match on the article URL pattern
        if a['href'].startswith('http://www.chinadaily.com.cn/a/'):
            result.add(a['href'])
    return result


def extract_content(raw_html):
    """Return the text of the element with id="Content", one string per line, or None."""
    content_element = raw_html.find(id='Content')
    # Without this check: AttributeError: 'NoneType' object has no attribute 'stripped_strings'
    if content_element is None:
        return None
    return '\n'.join(content_element.stripped_strings)


def content_handler(content, raw_html):
    """Save the article text to demo-dir/<headline>.txt."""
    h1 = raw_html.find('h1')
    # Without this check: AttributeError: 'NoneType' object has no attribute 'get_text'
    if h1 is None or not content:
        return
    # Replace whitespace runs and characters Windows forbids in file names, otherwise e.g.
    # OSError: [Errno 22] Invalid argument: './demo-dir/\n Video: Trump orders strikes against Syria\n .txt'
    title = re.sub(r'[\\/:*?"<>|\s]+', ' ', h1.get_text()).strip()
    if not title:
        return
    print(title)
    # An explicit UTF-8 encoding avoids, on a GBK-default Windows system:
    # UnicodeEncodeError: 'gbk' codec can't encode character '\xa0' in position 353: illegal multibyte sequence
    with open(os.path.join(OUTPUT_DIR, title + '.txt'), 'w', encoding='utf-8') as file:
        file.write(content)


def crawl(seed_url):
    """Follow article links from seed_url, using an explicit stack instead of recursion
    so that crawling thousands of pages cannot hit Python's recursion limit."""
    os.makedirs(OUTPUT_DIR, exist_ok=True)
    stack = [seed_url]
    while stack:
        url = stack.pop()
        print("url:", url, "start")
        raw_html = get_raw_html(url)
        if raw_html is None:
            continue
        content_handler(extract_content(raw_html), raw_html)
        stack.extend(u for u in extract_urls(raw_html) if u not in visited_urls)


seed_url = "http://www.chinadaily.com.cn/a/201804/14/WS5ad15641a3105cdcf6518417.html"
crawl(seed_url)
```
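`extract_urls` keeps only hrefs that literally start with `http://www.chinadaily.com.cn/a/`, so relative links, protocol-relative links (`//www...`) or `https://` links would be skipped, and the same article with a different `#fragment` would be fetched twice. Whether the site actually uses such links has not been checked here; the sketch below assumes it might and normalizes each href with the standard library's `urljoin` and `urldefrag`. `normalize_article_url`, `SITE_HOST` and `ARTICLE_PREFIX` are hypothetical names, and `example.html` paths are made up for illustration.

```python
from urllib.parse import urldefrag, urljoin, urlparse

SITE_HOST = "www.chinadaily.com.cn"  # assumption: articles live on this host
ARTICLE_PREFIX = "/a/"               # assumption: article paths start with /a/


def normalize_article_url(page_url, href):
    """Resolve href against the page it was found on; return it if it is an article URL, else None."""
    absolute, _fragment = urldefrag(urljoin(page_url, href))  # make absolute, drop '#...'
    parts = urlparse(absolute)
    if (parts.scheme in ("http", "https")
            and parts.netloc == SITE_HOST
            and parts.path.startswith(ARTICLE_PREFIX)):
        return absolute
    return None


page = "http://www.chinadaily.com.cn/a/201804/14/WS5ad15641a3105cdcf6518417.html"
print(normalize_article_url(page, "//www.chinadaily.com.cn/a/201804/15/example.html#top"))
# http://www.chinadaily.com.cn/a/201804/15/example.html
```

Feeding the normalized URLs into the visited set means two spellings of the same article count as one page.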

The scraped articles are saved as .txt files (one per headline) in the demo-dir directory.

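Merge all .txt files in the demo-dir directory into a single total.txt (the main script below imports this code as `plus_text`, so save it as plus_text.py):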

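```python
import os


def plus_text(dirname):
    """Concatenate every .txt file in dirname into total.txt in the current directory."""
    # Path of the target folder; os.path.join inserts the separator that
    # os.getcwd() + './demo-dir' would be missing
    filedir = os.path.join(os.getcwd(), dirname)
    # File names in that folder
    filenames = os.listdir(filedir)
    # Open total.txt in the current directory, creating it if needed
    with open('total.txt', 'w', encoding='utf-8') as f:
        for filename in filenames:
            if not filename.endswith('.txt'):
                continue
            filepath = os.path.join(filedir, filename)
            # The crawler saved the files as UTF-8, so read them as UTF-8; reading them as
            # GBK raised: UnicodeDecodeError: 'gbk' codec can't decode byte 0x9d in position 54
            # errors='ignore' still skips any undecodable bytes
            with open(filepath, encoding='utf-8', errors='ignore') as src:
                f.write(src.read())
            f.write('\n')  # keep one article's last line apart from the next article's first line
```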
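A variant of the merge step using `pathlib` avoids building paths by string concatenation and processes the files in sorted name order, so total.txt comes out the same on every run. It reads each file as UTF-8, the encoding the crawler writes them in. `merge_txt_files` is a hypothetical name, not part of the original code.

```python
from pathlib import Path


def merge_txt_files(src_dir, dest_file):
    """Concatenate all .txt files in src_dir (sorted by name) into dest_file; return how many were merged."""
    paths = sorted(Path(src_dir).glob("*.txt"))  # list is built before dest_file is created
    with open(dest_file, "w", encoding="utf-8") as out:
        for path in paths:
            # errors="ignore" drops undecodable bytes instead of raising UnicodeDecodeError
            out.write(path.read_text(encoding="utf-8", errors="ignore"))
            out.write("\n")  # separator so articles do not run together
    return len(paths)
```

For this post the call would be `merge_txt_files("demo-dir", "total.txt")`. Writing total.txt outside demo-dir matters: on a second run a total.txt inside demo-dir would itself match `*.txt` and be merged again.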

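Strip punctuation such as `,` and `(` from the merged total.txt and count the words (the main script below imports this code as `article_split`, so save it as article_split.py):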

```python
def article_words_split(content):
    """Count how often each lower-cased word occurs in an iterable of lines."""
    result = {}
    for line in content:
        line = article_handle(line)
        # split() with no argument splits on any run of whitespace and drops empty
        # strings, so blank lines and double spaces do not produce a '' "word"
        for word in line.split():
            word = word.lower()  # str.lower() returns a lower-case copy of the string
            result[word] = result.get(word, 0) + 1
    return result


# Each of these characters is replaced by a space: ( ) , " . 。 $ / [ ] -
_PUNCTUATION_TABLE = str.maketrans({ch: ' ' for ch in '(),".。$/[]-'})


def article_handle(content):
    """Replace the punctuation listed above with spaces."""
    return content.translate(_PUNCTUATION_TABLE)
```

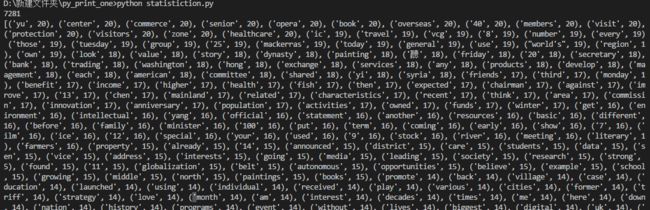
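The title calls for removing stop words before ranking, but the steps above only strip punctuation. A minimal sketch of that missing step follows. The stop-word set is a short illustrative assumption (a real run would use a fuller English list, such as the ones shipped with NLTK or scikit-learn), the regular-expression tokenizer is a swapped-in alternative to the chain of replacements in `article_handle`, and `count_content_words` and `ranked_slice` are hypothetical helper names.

```python
import re
from collections import Counter

# Small illustrative stop-word set (assumption, not a complete list)
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "has",
    "he", "in", "is", "it", "its", "of", "on", "that", "the", "to",
    "was", "were", "will", "with",
}

# Runs of letters, optionally with one apostrophe part: "don't", "beijing's"
WORD_RE = re.compile(r"[a-z]+(?:'[a-z]+)?")


def count_content_words(text, stop_words=STOP_WORDS):
    """Lower-case text, extract words with a regex, drop stop words, and count the rest."""
    words = WORD_RE.findall(text.lower())
    return Counter(w for w in words if w not in stop_words)


def ranked_slice(counts, start, stop):
    """Return (rank, word, count) tuples for 1-based ranks start..stop inclusive."""
    ranked = counts.most_common()  # sorted by count, descending; ties keep first-seen order
    return [(i + 1, w, n) for i, (w, n) in enumerate(ranked) if start <= i + 1 <= stop]


counts = count_content_words("The city of Beijing and the city of Shanghai: Beijing's parks.")
print(ranked_slice(counts, 1, 2))  # [(1, 'city', 2), (2, 'beijing', 1)]
```

On the crawled corpus, `ranked_slice(count_content_words(text), 301, 600)` selects the same ranks as `most_common()[300:600]`, with the rank number attached to each word.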

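Count the words in the punctuation-stripped text, sort them by frequency, and print the high-frequency words ranked 300 to 600 (the slice `c[300:600]`, i.e. ranks 301 to 600):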

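```python
# coding=utf-8
from collections import Counter

import article_split
import plus_text

# Run once first to build total.txt from the crawled articles:
# plus_text.plus_text('./demo-dir')

with open('total.txt', 'r', encoding='utf-8') as content:
    result = article_split.article_words_split(content)

# Counter is a subclass of dict; most_common() returns a list of (word, count) pairs
# sorted by count in descending order, e.g. [('b', 99), ('g', 89), ('d', 74), ('e', 69), ...]
c = Counter(result).most_common()
print(len(c))  # number of distinct words
# Slice indices are 0-based, so c[300:600] holds the words ranked 301st to 600th
print(c[300:600])
# Note: an entry such as ('', 4405) means empty strings were being counted as words
```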

Output: