A Summary of Single-Image Dehazing Based on the Dark Channel Prior

Before starting, here is a set of dehazing results.

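1. Why dehazing matters, and the main algorithms

Dehazing is useful for any vision system that has to run outdoors in complex or bad weather, such as video surveillance, aerial photography, remote sensing, and autonomous or assisted driving.

There are currently two main approaches to dehazing: methods based on image enhancement, and methods based on a physical imaging model. Typical enhancement-based methods are (global/local) histogram equalization and Retinex. Typical model-based methods are the dark-channel-prior method proposed by Kaiming He et al. and the method proposed by Jin-Hwan Kim et al. Both give good results and are efficient enough to run in real time. Because it takes a different approach, Jin-Hwan Kim's method handles sky regions more naturally.

This post summarizes the dark-channel-prior method. If you are interested in Jin-Hwan Kim's method, read the original paper and code, or the blog post that walks through it, "优化的对比度增强算法用于有雾图像的清晰化处理" (an optimized contrast enhancement algorithm for restoring hazy images).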

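2. The dark channel prior

The dark channel prior rests on one assumption: in most local regions that do not contain sky, some pixels have a very low value in at least one color channel. The authors arrived at this assumption by observing a large number of images. For the theoretical derivation, see the original paper, "Single Image Haze Removal Using Dark Channel Prior", or explanatory blog posts such as the two below, both of which are clear.

1. "暗通道优先的图像去雾算法(上)" (Image dehazing with the dark channel prior, part 1)

2. "《Single Image Haze Removal Using Dark Channel Prior》一文中图像去雾算法的原理、实现、效果(速度可实时)" (Principle, implementation, and results of the dehazing algorithm in that paper; fast enough for real time)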
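For reference, the relations from the paper that the code in this post relies on can be written compactly. This is a summary sketch, not a derivation. Here $I$ is the observed hazy image, $J$ the haze-free scene, $A$ the global atmospheric light, $t$ the transmission, $\Omega(x)$ the local patch around pixel $x$, $\omega$ the constant called `w` in the code (0.95), and $t_0$ the lower bound called `tmin` in the code (0.1).

```latex
\begin{align}
I(x) &= J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \\
J^{\mathrm{dark}}(x) &= \min_{y \in \Omega(x)} \, \min_{c \in \{r,g,b\}} J^{c}(y) \approx 0 \\
\tilde{t}(x) &= 1 - \omega \min_{y \in \Omega(x)} \, \min_{c} \frac{I^{c}(y)}{A^{c}} \\
J(x) &= \frac{I(x) - A}{\max\bigl(t(x),\, t_0\bigr)} + A
\end{align}
```

The first line is the haze imaging model, the second is the prior itself, the third is the coarse transmission estimate obtained by applying the prior to the model, and the last recovers the scene once $A$ and $t$ are known.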

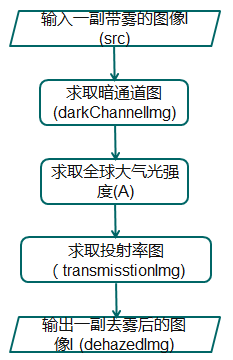

The flowchart above shows the computation flow.
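The dark channel computation at the start of this flow can be sketched without OpenCV. The block below is a minimal illustration, assuming an interleaved 8-bit BGR buffer; the `BgrImage` type and the `darkChannel` function are hypothetical names introduced here, and the brute-force minimum filter is chosen for readability, not speed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical minimal image type: interleaved 8-bit BGR, row-major.
struct BgrImage {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;  // size = rows * cols * 3
};

// Dark channel: per-pixel minimum over the three channels, followed by a
// minimum filter over a (2r+1) x (2r+1) window clipped at the image border.
std::vector<std::uint8_t> darkChannel(const BgrImage& img, int r) {
    assert(r >= 0);
    const std::size_t pixels = static_cast<std::size_t>(img.rows) * img.cols;
    assert(img.data.size() == pixels * 3);

    // Step 1: minimum over B, G, R at each pixel.
    std::vector<std::uint8_t> minBgr(pixels);
    for (std::size_t i = 0; i < pixels; ++i) {
        minBgr[i] = std::min({img.data[3 * i], img.data[3 * i + 1], img.data[3 * i + 2]});
    }

    // Step 2: brute-force minimum filter over the clipped window.
    std::vector<std::uint8_t> dark(pixels);
    for (int y = 0; y < img.rows; ++y) {
        for (int x = 0; x < img.cols; ++x) {
            std::uint8_t m = 255;
            for (int yy = std::max(0, y - r); yy <= std::min(img.rows - 1, y + r); ++yy) {
                for (int xx = std::max(0, x - r); xx <= std::min(img.cols - 1, x + r); ++xx) {
                    m = std::min(m, minBgr[static_cast<std::size_t>(yy) * img.cols + xx]);
                }
            }
            dark[static_cast<std::size_t>(y) * img.cols + x] = m;
        }
    }
    return dark;
}
```

With `r = 0` the result is just the per-pixel channel minimum; a larger `r` spreads each low value over its neighbourhood, which is what makes the resulting transmission estimate blocky.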

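3. Dehazing with the coarse transmission estimate

The computation follows the flowchart above. Below is the result of dehazing a hazy image with this method: src is the original image, dehazedImg is the dehazed result, darkChannelImg is the dark channel image, and transmissionImage is the estimated transmission map. The result is not ideal: many edges show an obvious white halo. The main cause is that the estimated transmission map is too coarse, so the recovered image is not fine enough. In his paper, Kaiming He proposes soft matting to refine the map, but that method is cumbersome. He later proposed the guided filter, which can also improve the dehazing result.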
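The two per-pixel formulas behind this step can be sketched in isolation. The function names `estimateTransmission` and `recoverChannel` are hypothetical, and the defaults mirror the constants used in this post (`w = 0.95`, `tmin = 0.1`). One assumption differs from the OpenCV listing: negative results are clamped to 0 here, whereas the listing takes the absolute value before saturating.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Coarse transmission from one dark-channel value: t = 1 - omega * dark / A.
double estimateTransmission(std::uint8_t dark, double A, double omega = 0.95) {
    assert(A > 0.0);
    return 1.0 - omega * static_cast<double>(dark) / A;
}

// Scene radiance for one channel: J = (I - A) / max(t, t0) + A,
// rounded and clamped to the 8-bit range [0, 255].
std::uint8_t recoverChannel(std::uint8_t I, double A, double t, double t0 = 0.1) {
    const double J = (static_cast<double>(I) - A) / std::max(t, t0) + A;
    const double clamped = std::min(255.0, std::max(0.0, J));
    return static_cast<std::uint8_t>(std::lround(clamped));
}
```

For example, with `A = 220` a dark-channel value of 110 gives `t = 0.525`. The lower bound `t0` matters in dense haze: without it, a transmission near zero would amplify `I - A` without limit.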

The full OpenCV code for this coarse-transmission pipeline is as follows:

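#include <algorithm>
#include <cmath>
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;

const double w = 0.95; //omega in the transmission estimate t = 1 - w * dark / A
const int r = 7;       //radius of the minimum-filter window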

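//Compute the dark channel image
//src is the input hazy image (8-bit BGR), r is the radius of the minimum-filter window
Mat getDarkChannelImg(const Mat src, const int r)
{
    int height = src.rows;
    int width = src.cols;
    Mat darkChannelImg(src.size(), CV_8UC1);
    Mat darkTemp(darkChannelImg.size(), darkChannelImg.type());

    //Take the minimum of the three channels at each pixel of src
    //and store it at the matching pixel of darkTemp
    for (int i = 0; i < height; i++)
    {
        const uchar* srcPtr = src.ptr<uchar>(i);
        uchar* dstPtr = darkTemp.ptr<uchar>(i);
        for (int j = 0; j < width; j++)
        {
            int b = srcPtr[3 * j];
            int g = srcPtr[3 * j + 1];
            int red = srcPtr[3 * j + 2]; //named red so it does not shadow the radius r
            dstPtr[j] = (uchar)std::min(std::min(b, g), red);
        }
    }

    //Take the minimum inside the patch around each pixel to get the dark channel image
    //r is the patch radius, patchSize=2*r+1
    //This step is a minimum filter
    //Assumption: the loop header and patch extraction were lost in the source;
    //they are reconstructed here from the comments above and the surviving assignment
    cv::Mat rectImg;
    for (int j = 0; j < height; j++)
    {
        for (int i = 0; i < width; i++)
        {
            //cv::Range is half-open, so the end is one past the last row/column
            cv::Range rowRange(std::max(0, j - r), std::min(height, j + r + 1));
            cv::Range colRange(std::max(0, i - r), std::min(width, i + r + 1));
            rectImg = darkTemp(rowRange, colRange);
            double minValue = 0;
            cv::minMaxLoc(rectImg, &minValue, nullptr, nullptr, nullptr);
            darkChannelImg.at<uchar>(j, i) = cv::saturate_cast<uchar>(minValue);//using saturate_cast to set pixel value to [0,255]
        }
    }
    return darkChannelImg;
}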

//Estimate the global atmospheric light A
double getGlobelAtmosphericLight(const Mat darkChannelImg)
{
    //Simplified approach: take the maximum of the dark channel, capped at 220
    double maxAtmosLight = 220;//empirical value
    double maxValue = 0;
    cv::Point maxLoc;
    minMaxLoc(darkChannelImg, NULL, &maxValue, NULL, &maxLoc);
    double A = std::min(maxAtmosLight, maxValue);
    return A;
}

//Coarse transmission map t = 1 - w * dark / A, stored as 8-bit (t * 255)
Mat getTransimissionImg(const Mat darkChannelImg, const double A)
{
    cv::Mat transmissionImg(darkChannelImg.size(), CV_8UC1);
    //Precompute the result for every possible dark-channel value
    cv::Mat look_up(1, 256, CV_8UC1);
    uchar* look_up_ptr = look_up.data;
    for (int k = 0; k < 256; k++)
    {
        look_up_ptr[k] = cv::saturate_cast<uchar>(255 * (1 - w * k / A));
    }
    cv::LUT(darkChannelImg, look_up, transmissionImg);
    return transmissionImg;
}

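//Recover the scene from src, the 8-bit transmission map and A
Mat getDehazedImg(const Mat src, const Mat transmissionImage, const double A)
{
    double tmin = 0.1; //lower bound on the transmission
    double tmax = 0;   //transmission after applying the lower bound
    Vec3b srcData;
    Mat dehazedImg = Mat::zeros(src.size(), CV_8UC3);
    //Assumption: the loop headers were lost in the source and are reconstructed here
    for (int i = 0; i < src.rows; i++)
    {
        for (int j = 0; j < src.cols; j++)
        {
            double transmission = transmissionImage.at<uchar>(i, j);
            srcData = src.at<Vec3b>(i, j);
            tmax = std::max(transmission / 255, tmin);
            //(I-A)/t +A
            for (int c = 0; c < 3; c++)
            {
                dehazedImg.at<Vec3b>(i, j)[c] = cv::saturate_cast<uchar>(std::abs((srcData.val[c] - A) / tmax + A));
            }
        }
    }
    return dehazedImg;
}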

int main(void)
{
    cv::Mat src, darkChanelImg;
    src = cv::imread("d:/Opencv Picture/Haze Removal/6.png", 1);
    if (!src.data)
    {
        std::cout << "Load Image Error!" << std::endl;
        return -1;
    }
    src.convertTo(src, CV_8U, 1, 0);

    darkChanelImg = getDarkChannelImg(src, r);
    cv::imshow("darkChanelImg", darkChanelImg);

    double A = getGlobelAtmosphericLight(darkChanelImg);
    Mat transmissionImage = getTransimissionImg(darkChanelImg, A);
    imshow("transmissionImage", transmissionImage);

    //Time only the recovery step
    double t = (double)getTickCount();
    Mat dehazedImg = getDehazedImg(src, transmissionImage, A);
    t = (double)getTickCount() - t;
    std::cout << 1000 * t / (getTickFrequency()) << "ms" << std::endl;

    imshow("src", src);
    imshow("dehazedImg", dehazedImg);
    cv::waitKey(0);
    return 0;
}

4. Dehazing improved with the guided filter

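For the guided filter itself, see the post "导向滤波小结" (a summary of the guided filter). Here I only cover how to apply it to dehazing.

As noted above, the dehazing result is poor because the estimated transmission map is too coarse, so the natural improvement is to refine the transmission map. Here the guided filter is applied to the estimated transmission map, as in the flowchart below, with src as the guidance image.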
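To make the refinement step concrete, here is a minimal 1-D sketch of the guided filter equations (window means, then `a = cov(I, p) / (var(I) + eps)` and `b = mean(p) - a * mean(I)`, then `q = mean(a) * I + mean(b)`). It assumes plain `std::vector<double>` signals and windows clipped at the ends; `boxMean` and `guidedFilter1D` are hypothetical names, and the subsampling used by the fast variant in this post is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Mean over the window [i - r, i + r], clipped at the ends of the signal.
static std::vector<double> boxMean(const std::vector<double>& v, int r) {
    const int n = static_cast<int>(v.size());
    std::vector<double> out(v.size());
    for (int i = 0; i < n; ++i) {
        const int lo = std::max(0, i - r);
        const int hi = std::min(n - 1, i + r);
        double sum = 0.0;
        for (int k = lo; k <= hi; ++k) sum += v[static_cast<std::size_t>(k)];
        out[static_cast<std::size_t>(i)] = sum / (hi - lo + 1);
    }
    return out;
}

// 1-D guided filter: inside each window the output is modelled as q = a * I + b,
// where I is the guide and p is the signal being refined.
std::vector<double> guidedFilter1D(const std::vector<double>& I,
                                   const std::vector<double>& p,
                                   int r, double eps) {
    assert(I.size() == p.size());
    assert(r >= 0 && eps > 0.0);
    const std::size_t n = I.size();

    std::vector<double> Ip(n), II(n);
    for (std::size_t i = 0; i < n; ++i) {
        Ip[i] = I[i] * p[i];
        II[i] = I[i] * I[i];
    }
    const std::vector<double> meanI = boxMean(I, r), meanP = boxMean(p, r);
    const std::vector<double> meanIp = boxMean(Ip, r), meanII = boxMean(II, r);

    std::vector<double> a(n), b(n);
    for (std::size_t i = 0; i < n; ++i) {
        const double covIp = meanIp[i] - meanI[i] * meanP[i];  // covariance of (I, p)
        const double varI = meanII[i] - meanI[i] * meanI[i];   // variance of I
        a[i] = covIp / (varI + eps);
        b[i] = meanP[i] - a[i] * meanI[i];
    }

    const std::vector<double> meanA = boxMean(a, r), meanB = boxMean(b, r);
    std::vector<double> q(n);
    for (std::size_t i = 0; i < n; ++i) q[i] = meanA[i] * I[i] + meanB[i];
    return q;
}
```

Because the output is locally a linear function of the guide, edges in the guide carry over to the refined signal. In the dehazing setting the guide is the hazy image and `p` is the coarse transmission map, so the refined map follows the image's edges instead of the block boundaries left by the minimum filter.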

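Below is a comparison of the results before and after adding the guided filter. With the guided filter, the white halos disappear.

Comparing the transmission maps, shown below, the fineTransmissionChannel obtained with the guided filter is clearly finer than the earlier transmission map.

The implementation is as follows: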

//Reuse the #include lines, the constants w and r, and the functions getDarkChannelImg,
//getGlobelAtmosphericLight and getTransimissionImg unchanged from the listing in section 3.

//Fast guided filter on single-channel images; s is the subsampling ratio
cv::Mat fastGuidedFilter(cv::Mat I_org, cv::Mat p_org, int r, double eps, int s)
{
    /*
    % GUIDEDFILTER O(N) time implementation of guided filter.
    %
    % - guidance image: I (should be a gray-scale/single channel image)
    % - filtering input image: p (should be a gray-scale/single channel image)
    % - local window radius: r
    % - regularization parameter: eps
    */

    //Scale both images to [0,1] and downsample them by a factor of s
    cv::Mat I, _I;
    I_org.convertTo(_I, CV_64FC1, 1.0 / 255);
    resize(_I, I, Size(), 1.0 / s, 1.0 / s, cv::INTER_LINEAR);
    cv::Mat p, _p;
    p_org.convertTo(_p, CV_64FC1, 1.0 / 255);
    resize(_p, p, Size(), 1.0 / s, 1.0 / s, cv::INTER_LINEAR);

    //OpenCV's boxFilter() takes the full window size (9x9 for a radius of 4),
    //so convert the radius to a kernel size at the reduced resolution
    r = (2 * r + 1) / s + 1;
    cv::Size ksize(r, r);

    //mean_I = boxfilter(I, r) ./ N;
    cv::Mat mean_I;
    cv::boxFilter(I, mean_I, CV_64FC1, ksize);
    //mean_p = boxfilter(p, r) ./ N;
    cv::Mat mean_p;
    cv::boxFilter(p, mean_p, CV_64FC1, ksize);
    //mean_Ip = boxfilter(I.*p, r) ./ N;
    cv::Mat mean_Ip;
    cv::boxFilter(I.mul(p), mean_Ip, CV_64FC1, ksize);
    //cov_Ip = mean_Ip - mean_I .* mean_p; % this is the covariance of (I, p) in each local patch.
    cv::Mat cov_Ip = mean_Ip - mean_I.mul(mean_p);
    //mean_II = boxfilter(I.*I, r) ./ N;
    cv::Mat mean_II;
    cv::boxFilter(I.mul(I), mean_II, CV_64FC1, ksize);
    //var_I = mean_II - mean_I .* mean_I;
    cv::Mat var_I = mean_II - mean_I.mul(mean_I);

    //a = cov_Ip ./ (var_I + eps); % Eqn. (5) in the paper;
    cv::Mat a = cov_Ip / (var_I + eps);
    //b = mean_p - a .* mean_I; % Eqn. (6) in the paper;
    cv::Mat b = mean_p - a.mul(mean_I);

    //mean_a = boxfilter(a, r) ./ N; then upsample back to the original size
    cv::Mat mean_a, rmean_a;
    cv::boxFilter(a, mean_a, CV_64FC1, ksize);
    resize(mean_a, rmean_a, I_org.size(), 0, 0, cv::INTER_LINEAR);
    //mean_b = boxfilter(b, r) ./ N; then upsample back to the original size
    cv::Mat mean_b, rmean_b;
    cv::boxFilter(b, mean_b, CV_64FC1, ksize);
    resize(mean_b, rmean_b, I_org.size(), 0, 0, cv::INTER_LINEAR);

    //q = mean_a .* I + mean_b; % Eqn. (8) in the paper;
    cv::Mat q = rmean_a.mul(_I) + rmean_b;

    //Convert back to 8-bit
    Mat q1;
    q.convertTo(q1, CV_8UC1, 255, 0);
    return q1;
}

//Apply fastGuidedFilter channel by channel to a 3-channel guide I and input p
//dst is taken by reference so that the result reaches the caller
void FastGuidedFilter(Mat I, Mat p, Mat& dst, int r, double eps, int s)
{
    std::vector<Mat> bgr_I, bgr_p, bgr_dst;
    split(I, bgr_I);//split into channels
    split(p, bgr_p);
    for (int i = 0; i < 3; i++)
    {
        Mat q = fastGuidedFilter(bgr_I[i], bgr_p[i], r, eps, s);
        bgr_dst.push_back(q);
    }
    merge(bgr_dst, dst);
    //cv::imshow("dst", dst);
}

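//Recover one 8-bit channel from its transmission map and A
cv::Mat getDehazedChannel(cv::Mat srcChannel, cv::Mat transmissionChannel, double A)
{
    double tmin = 0.1;
    double tmax;
    cv::Mat dehazedChannel(srcChannel.size(), CV_8UC1);
    //Assumption: the loop headers were lost in the source and are reconstructed here
    for (int i = 0; i < srcChannel.rows; i++)
    {
        for (int j = 0; j < srcChannel.cols; j++)
        {
            double transmission = transmissionChannel.at<uchar>(i, j);
            tmax = (transmission / 255) < tmin ? tmin : (transmission / 255);
            //(I-A)/t +A
            dehazedChannel.at<uchar>(i, j) = cv::saturate_cast<uchar>(std::abs((srcChannel.at<uchar>(i, j) - A) / tmax + A));
        }
    }
    return dehazedChannel;
}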

cv::Mat getDehazedImg_guidedFilter(Mat src, Mat darkChannelImg)
{
    std::vector<cv::Mat> srcChannel, dehazedChannel(3), fineTransmissionChannel(3);
    cv::split(src, srcChannel);

    //A and the coarse transmission map are the same for all three channels
    double A = getGlobelAtmosphericLight(darkChannelImg);
    cv::Mat transmissionImg = getTransimissionImg(darkChannelImg, A);

    for (int c = 0; c < 3; c++)
    {
        //Refine the coarse map with the matching channel of src as the guide,
        //then recover that channel with its refined map
        fineTransmissionChannel[c] = fastGuidedFilter(srcChannel[c], transmissionImg, 64, 0.01, 8);
        dehazedChannel[c] = getDehazedChannel(srcChannel[c], fineTransmissionChannel[c], A);
    }

    cv::Mat fineTransmissionImg, dehazedImg;
    cv::merge(fineTransmissionChannel, fineTransmissionImg);
    imshow("fineTransmissionChannel", fineTransmissionImg);
    cv::merge(dehazedChannel, dehazedImg);
    return dehazedImg;
}

//getDehazedImg is unchanged from the listing in section 3.

int main(void)
{
    cv::Mat src, darkChanelImg;
    src = cv::imread("d:/Opencv Picture/Haze Removal/6.png", 1);
    if (!src.data)
    {
        std::cout << "Load Image Error!" << std::endl;
        return -1;
    }
    src.convertTo(src, CV_8U, 1, 0);

    darkChanelImg = getDarkChannelImg(src, r);
    cv::imshow("darkChanelImg", darkChanelImg);

    double A = getGlobelAtmosphericLight(darkChanelImg);
    Mat transmissionImage = getTransimissionImg(darkChanelImg, A);
    imshow("transmissionImage", transmissionImage);

    Mat dehazedImg, dehazedImg_guideFilter;

    //Time the version that uses the coarse transmission map
    double t = (double)getTickCount();
    dehazedImg = getDehazedImg(src, transmissionImage, A);
    t = (double)getTickCount() - t;
    std::cout << 1000 * t / (getTickFrequency()) << "ms" << std::endl;

    //Time the version that refines the map with the guided filter
    double t1 = (double)getTickCount();
    dehazedImg_guideFilter = getDehazedImg_guidedFilter(src, darkChanelImg);
    t1 = (double)getTickCount() - t1;
    std::cout << 1000 * t1 / (getTickFrequency()) << "ms" << std::endl;

    imshow("src", src);
    imshow("dehazedImg", dehazedImg);
    imshow("dehazedImg_guideFilter", dehazedImg_guideFilter);
    cv::waitKey(0);
    return 0;
}

5. Enhancing the dehazed image

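The dehazed images above tend to come out somewhat dark. They can be enhanced with auto levels or histogram equalization. Plenty of material exists on both, so I will not repeat it here.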
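As a rough illustration of the auto-levels idea, the sketch below stretches one 8-bit channel so that its darkest and brightest remaining values map to 0 and 255 after clipping a small fraction of pixels at each end. The function name `autoLevels` and the default clip fraction of 1% are assumptions made for this example, not values taken from any particular tool.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Auto levels for one 8-bit channel: skip up to `clip` of the pixels at each
// end of the histogram, then stretch the remaining range [lo, hi] to [0, 255].
std::vector<std::uint8_t> autoLevels(const std::vector<std::uint8_t>& channel,
                                     double clip = 0.01) {
    assert(clip >= 0.0 && clip < 0.5);
    if (channel.empty()) return {};

    std::array<std::size_t, 256> hist{};
    for (std::uint8_t v : channel) ++hist[v];

    // Walk inwards from both ends while the skipped pixel count stays within the budget.
    const double cut = clip * static_cast<double>(channel.size());
    int lo = 0, hi = 255;
    std::size_t acc = 0;
    while (lo < 255 && static_cast<double>(acc + hist[lo]) <= cut) acc += hist[lo++];
    acc = 0;
    while (hi > lo && static_cast<double>(acc + hist[hi]) <= cut) acc += hist[hi--];

    if (hi == lo) return channel;  // flat channel: nothing to stretch

    std::vector<std::uint8_t> out(channel.size());
    for (std::size_t i = 0; i < channel.size(); ++i) {
        const int v = std::min(std::max(static_cast<int>(channel[i]), lo), hi);
        out[i] = static_cast<std::uint8_t>((v - lo) * 255 / (hi - lo));
    }
    return out;
}
```

For a colour image the function would be applied to each channel. Stretching the channels independently can shift the colour balance, so one alternative is to compute `lo` and `hi` once from a luminance channel and reuse them for all three.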

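6. Video dehazing

In terms of speed, the dark-channel method should be fast enough to dehaze video. The main problem is that the dehazed video flickers in brightness from frame to frame. Smoothing the atmospheric light value over time reduces this, but does not eliminate it completely. See image-video-dehazing-OpenCV for details.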
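One simple way to smooth the atmospheric light is an exponential moving average over frames. The sketch below is an assumption about how such smoothing could look, not the method used by image-video-dehazing-OpenCV; the class name `AtmosphericLightSmoother` and the parameter `alpha` are hypothetical.

```cpp
#include <cassert>

// Exponential moving average of the per-frame atmospheric light estimate.
// alpha close to 0 reacts slowly (less flicker), alpha = 1 disables smoothing.
class AtmosphericLightSmoother {
public:
    explicit AtmosphericLightSmoother(double alpha) : alpha_(alpha) {
        assert(alpha > 0.0 && alpha <= 1.0);
    }

    // Feed the raw estimate for the current frame; returns the value to dehaze with.
    double update(double rawA) {
        if (!initialized_) {
            smoothed_ = rawA;  // the first frame has nothing to average with
            initialized_ = true;
        } else {
            smoothed_ = alpha_ * rawA + (1.0 - alpha_) * smoothed_;
        }
        return smoothed_;
    }

private:
    double alpha_;
    double smoothed_ = 0.0;
    bool initialized_ = false;
};
```

In a video loop, the per-frame result of getGlobelAtmosphericLight would be passed through `update` before computing the transmission map. A smaller `alpha` suppresses flicker more strongly but adapts more slowly when the scene really changes.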

For video dehazing, the Jin-Hwan Kim method mentioned earlier is also worth a look.