YOLO Training Preparation

Preparing the data:

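1. Before reading this document, make sure you have the images you want to train on, and that these images contain the targets you want to detect.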

2. Prepare an annotation tool. labelImg is recommended here (save annotations in its default PascalVOC XML format). Download: https://github.com/tzutalin/labelImg

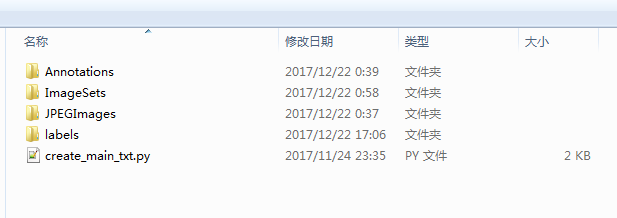

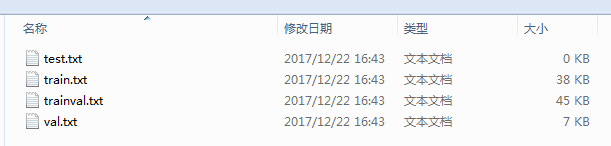

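3. At this point you should have the Annotations and JPEGImages folders ready. Annotations holds the XML files generated by labeling; each XML file records the annotations we drew. JPEGImages holds the labeled images. ImageSets contains a Main folder with four txt files; of these, training mainly uses train.txt and val.txt. The labels folder stores the txt files produced when the VOC data is converted into the format YOLO needs; how they are generated is covered below. Next comes the create_main_txt.py script.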
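The folder layout described above can be created and sanity-checked with a short script. This is a minimal sketch and not part of the original tutorial. The dataset root `VOCdevkit/VOC2007` is an assumption, chosen to match the paths voc_label.py uses later. The helper names `make_voc_layout` and `unmatched_annotations` are hypothetical.

```python
import os


def make_voc_layout(root):
    """Create the VOC-style folders under root (hypothetical helper)."""
    for sub in ("Annotations", "JPEGImages", os.path.join("ImageSets", "Main"), "labels"):
        os.makedirs(os.path.join(root, sub), exist_ok=True)


def unmatched_annotations(root):
    """Return IDs of XML files in Annotations that have no matching .jpg in JPEGImages."""
    # Only lowercase .jpg is considered, because voc_label.py writes ".jpg" paths
    images = {os.path.splitext(f)[0]
              for f in os.listdir(os.path.join(root, "JPEGImages"))
              if f.endswith(".jpg")}
    missing = []
    for f in sorted(os.listdir(os.path.join(root, "Annotations"))):
        stem, ext = os.path.splitext(f)
        if ext == ".xml" and stem not in images:
            missing.append(stem)
    return missing


if __name__ == "__main__":
    root = os.path.join("VOCdevkit", "VOC2007")  # assumed dataset root
    make_voc_layout(root)
    for image_id in unmatched_annotations(root):
        print("no image for annotation:", image_id)
```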

4. The create_main_txt.py script (run it from the directory that contains Annotations and ImageSets):

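```python
# coding:utf-8
# Python 3: generates ImageSets/Main/{train,val,trainval,test}.txt
import os

if __name__ == '__main__':
    path = os.getcwd()
    # Only .xml files are annotations; other files in the folder are ignored
    dataAnnotated = [f for f in os.listdir(os.path.join(path, 'Annotations'))
                     if f.endswith('.xml')]
    dataNum = len(dataAnnotated)  # number of samples in the dataset

    mainDir = os.path.join(path, 'ImageSets', 'Main')
    os.makedirs(mainDir, exist_ok=True)

    testScale = 0.0    # fraction of the whole dataset used as the test set
    trainScale = 0.85  # fraction of train+val used as the training set

    testNum = int(dataNum * testScale)                # number of test samples
    trainNum = int((dataNum - testNum) * trainScale)  # number of training samples

    # "with" guarantees all four files are flushed and closed
    with open(os.path.join(mainDir, 'test.txt'), 'w') as ftest, \
            open(os.path.join(mainDir, 'train.txt'), 'w') as ftrain, \
            open(os.path.join(mainDir, 'trainval.txt'), 'w') as ftrainval, \
            open(os.path.join(mainDir, 'val.txt'), 'w') as fval:
        # Split in os.listdir order (arbitrary, not deliberately shuffled):
        # first the test set, then the training set, the rest is validation
        for i, name in enumerate(dataAnnotated, start=1):
            filename = os.path.splitext(name)[0]  # image ID = file name without .xml
            if i <= testNum:
                ftest.write(filename + "\n")
            elif i <= testNum + trainNum:
                ftrain.write(filename + "\n")
                ftrainval.write(filename + "\n")
            else:
                fval.write(filename + "\n")
                ftrainval.write(filename + "\n")
```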

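This script generates the four txt files under Main. Each file lists the image IDs (file names without extension) belonging to one split: training, test, train+val, and validation. Note that this script is rather naive. It assumes every XML file in Annotations is valid. labelImg writes an XML file as soon as an image is labeled. If you later delete the labeled objects, the XML file remains even though the image no longer contains any target, and that image will still be used for training. So before using this script, make sure every XML file is valid.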
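Because the script trusts every XML file, it can help to list the annotation files that no longer contain any object before running it. This is a minimal sketch that assumes labelImg's Pascal VOC format, in which each labeled box is an `<object>` child of the root element. `find_empty_annotations` is a hypothetical helper name. Whether to delete the reported files or re-label them is up to you.

```python
import os
import xml.etree.ElementTree as ET


def find_empty_annotations(ann_dir):
    """Return the XML file names in ann_dir that contain no <object> element."""
    empty = []
    for name in sorted(os.listdir(ann_dir)):
        if not name.endswith(".xml"):
            continue
        root = ET.parse(os.path.join(ann_dir, name)).getroot()
        if root.find("object") is None:  # no labeled boxes left in this file
            empty.append(name)
    return empty


if __name__ == "__main__":
    ann_dir = "Annotations"  # same working directory as create_main_txt.py
    if os.path.isdir(ann_dir):
        for name in find_empty_annotations(ann_dir):
            print("no objects:", name)
```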

5. At this point the data is in VOC format. Next, it must be converted to the format YOLO supports. This is also done with a script, voc_label.py.

6. The voc_label.py script:

```python
import os
import xml.etree.ElementTree as ET

# (year, split) pairs to process; edit to match your dataset
sets = [('2012', 'train'), ('2012', 'val'), ('2007', 'train'), ('2007', 'val'), ('2007', 'test')]

# Class names; the class ID written to the label files is the index in this list
classes = ["aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"]


def convert(size, box):
    """Convert a VOC box (xmin, xmax, ymin, ymax) in pixels to the YOLO format
    (x_center, y_center, width, height), normalized by the image size (w, h)."""
    dw = 1. / size[0]
    dh = 1. / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def convert_annotation(year, image_id):
    """Write VOCdevkit/VOC<year>/labels/<image_id>.txt from the matching XML file."""
    tree = ET.parse('VOCdevkit/VOC%s/Annotations/%s.xml' % (year, image_id))
    root = tree.getroot()
    size = root.find('size')
    w = int(size.find('width').text)
    h = int(size.find('height').text)
    with open('VOCdevkit/VOC%s/labels/%s.txt' % (year, image_id), 'w') as out_file:
        for obj in root.iter('object'):
            difficult = obj.find('difficult')
            cls = obj.find('name').text
            # Skip unknown classes and objects marked "difficult"
            if cls not in classes or (difficult is not None and int(difficult.text) == 1):
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                 float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            bb = convert((w, h), b)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


if __name__ == '__main__':
    wd = os.getcwd()
    for year, image_set in sets:
        labels_dir = 'VOCdevkit/VOC%s/labels/' % year
        if not os.path.exists(labels_dir):
            os.makedirs(labels_dir)
        with open('VOCdevkit/VOC%s/ImageSets/Main/%s.txt' % (year, image_set)) as f:
            image_ids = f.read().strip().split()
        # List file (e.g. 2007_train.txt): one absolute image path per line
        with open('%s_%s.txt' % (year, image_set), 'w') as list_file:
            for image_id in image_ids:
                list_file.write('%s/VOCdevkit/VOC%s/JPEGImages/%s.jpg\n' % (wd, year, image_id))
                convert_annotation(year, image_id)
```

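As before, adjust the paths in the script to match your own layout. The `sets` and `classes` lists must also match your dataset.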
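Here is a worked example of the normalization `convert()` performs, using made-up numbers. Take a 640×480 image and a VOC box with xmin=100, xmax=300, ymin=50, ymax=250. The box center is (200, 150) and its size is 200×200 pixels, which normalizes to (0.3125, 0.3125, 0.3125, 0.4167). The sketch below reproduces that arithmetic with the same formula. It also adds an inverse, `yolo_to_voc`, which is a hypothetical helper and not part of voc_label.py. You can use it to spot-check generated label files by converting them back to pixel coordinates.

```python
def voc_to_yolo(size, box):
    """Same formula as convert() in voc_label.py.
    size = (width, height); box = (xmin, xmax, ymin, ymax) in pixels."""
    w_img, h_img = size
    xmin, xmax, ymin, ymax = box
    x = (xmin + xmax) / 2.0 / w_img   # normalized box center x
    y = (ymin + ymax) / 2.0 / h_img   # normalized box center y
    w = (xmax - xmin) / float(w_img)  # normalized box width
    h = (ymax - ymin) / float(h_img)  # normalized box height
    return (x, y, w, h)


def yolo_to_voc(size, yolo_box):
    """Inverse: normalized (x, y, w, h) back to pixel (xmin, xmax, ymin, ymax)."""
    w_img, h_img = size
    x, y, w, h = yolo_box
    return ((x - w / 2) * w_img, (x + w / 2) * w_img,
            (y - h / 2) * h_img, (y + h / 2) * h_img)


bb = voc_to_yolo((640, 480), (100, 300, 50, 250))
print([round(v, 4) for v in bb])  # → [0.3125, 0.3125, 0.3125, 0.4167]
```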

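7. voc_label.py produces:

- `'%s_%s.txt'%(year, image_set)`: one list file per (year, split) in the working directory, containing the absolute path of every image in that split;

- `'VOCdevkit/VOC%s/labels/%s.txt'%(year, image_id)`: one label file per image, with one line per object in the form `class_id x_center y_center width height` (coordinates normalized to [0, 1]).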
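darknet does not read a labels directory from the configuration. Instead, for each image path in a list file, it derives the label file path by string substitution. In pjreddie's darknet source (`fill_truth_detection` in src/data.c), this substitution replaces `images` and `JPEGImages` with `labels` and `.jpg` with `.txt`, which is why the labels folder sits next to JPEGImages. The sketch below reproduces only those three replacements, as an approximation, and lists the images whose label file would not be found. `label_path_for` and `check_list_file` are hypothetical helper names, and 2007_train.txt is just an example list file.

```python
import os


def label_path_for(image_path):
    """Approximate darknet's image-path -> label-path substitution
    (first occurrence of each pattern; only a subset of darknet's rules)."""
    p = image_path.replace("images", "labels", 1)
    p = p.replace("JPEGImages", "labels", 1)
    return p.replace(".jpg", ".txt", 1)


def check_list_file(list_path):
    """Return (image, expected_label) pairs whose label file does not exist."""
    missing = []
    with open(list_path) as f:
        for line in f:
            image = line.strip()
            if image and not os.path.isfile(label_path_for(image)):
                missing.append((image, label_path_for(image)))
    return missing


if __name__ == "__main__":
    list_path = "2007_train.txt"  # example list file written by voc_label.py
    if os.path.isfile(list_path):
        for image, label in check_list_file(list_path):
            print("missing label for", image, "->", label)
```

The replacement is plain string substitution, so a directory named `images` elsewhere in the absolute path is also rewritten. The check above reports such cases as missing labels.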

Preparing the training code and configuration files

1. Download the darknet training code from https://github.com/pjreddie/darknet and compile it.

2. Prepare the configuration files. The files used are:

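- The lists of absolute image paths for the training and validation sets. These are the list files generated earlier by voc_label.py (named like 2007_train.txt and 2007_val.txt; referred to here as train.txt and val.txt).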

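- A `.data` file, which must contain at least:

```
classes= 20
train = /home/pjreddie/data/voc/train.txt
valid = /home/pjreddie/data/voc/val.txt
names = data/voc.names
backup = backup
```

- A `.names` file listing the annotated class names, one per line, in the same order as the `classes` list in voc_label.py:

```
aeroplane
bicycle
bird
boat
bottle
bus
car
cat
chair
cow
diningtable
dog
horse
motorbike
person
pottedplant
sheep
sofa
train
tvmonitor
```

- The `backup` parameter in the `.data` file specifies the directory where the weight files produced during training are stored; here it is the `backup` directory in the same location.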

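- A `.cfg` file describing the network, such as the yolo.cfg below. As shown, it is configured for 80 classes (`classes=80`, `filters=425`) with `batch=1` and `subdivisions=1`, which are testing settings. For training on your own data:
  - raise `batch` and `subdivisions` (e.g. `batch=64`, `subdivisions=8`) as GPU memory allows;
  - set `classes` in `[region]` to your number of classes;
  - set `filters` in the last `[convolutional]` layer to `num * (classes + coords + 1)`, e.g. 5 × (20 + 5) = 125 for the 20 VOC classes.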

```
[net]
batch=1
subdivisions=1
width=416
height=416
channels=3
momentum=0.9
decay=0.0005
angle=0
saturation = 1.5
exposure = 1.5
hue=.1
learning_rate=0.001
max_batches = 120000
policy=steps
steps=-1,100,80000,100000
scales=.1,10,.1,.1

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky

[maxpool]
size=2
stride=2

[convolutional]
batch_normalize=1
filters=64
size=3
stride=1
pad=1
activation=leaky

[maxpool]
size=2
stride=2

[convolutional]
batch_normalize=1
filters=128
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=64
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=3
stride=1
pad=1
activation=leaky

[maxpool]
size=2
stride=2

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[maxpool]
size=2
stride=2

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[maxpool]
size=2
stride=2

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

#######

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[route]
layers=-9

[reorg]
stride=2

[route]
layers=-1,-3

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[convolutional]
size=1
stride=1
pad=1
filters=425
activation=linear

[region]
anchors = 0.738768,0.874946, 2.42204,2.65704, 4.30971,7.04493, 10.246,4.59428, 12.6868,11.8741
bias_match=1
classes=80
coords=4
num=5
softmax=1
jitter=.2
rescore=1
object_scale=5
noobject_scale=1
class_scale=1
coord_scale=1
absolute=1
thresh = .6
random=0
```
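When adapting these files to your own classes, three places must agree: the `.names` file, `classes` in the `.data` file, and `classes`/`filters` in the `.cfg` file. The sketch below writes the first two from a single Python list. It also computes the `filters` value for the last `[convolutional]` layer as `num * (classes + coords + 1)`, using the `num=5` and `coords=4` from the `[region]` section above as defaults. The helper names `region_filters` and `write_darknet_config` and the output file names `obj.names`/`obj.data` are hypothetical.

```python
import os


def region_filters(num_classes, num=5, coords=4):
    """filters for the conv layer right before [region]: num * (classes + coords + 1)."""
    return num * (num_classes + coords + 1)


def write_darknet_config(classes, out_dir, train_list, valid_list, backup="backup"):
    """Write <out_dir>/obj.names and <out_dir>/obj.data; return the cfg filters value."""
    names_path = os.path.join(out_dir, "obj.names")
    with open(names_path, "w") as f:
        # One class per line, in the same order as the classes list in voc_label.py
        f.write("\n".join(classes) + "\n")
    with open(os.path.join(out_dir, "obj.data"), "w") as f:
        f.write("classes = %d\n" % len(classes))
        f.write("train = %s\n" % train_list)
        f.write("valid = %s\n" % valid_list)
        f.write("names = %s\n" % names_path)
        f.write("backup = %s\n" % backup)
    return region_filters(len(classes))


print(region_filters(20))  # → 125 (20 VOC classes)
print(region_filters(80))  # → 425 (the 80-class yolo.cfg above)
```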

3. Training command

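A successful build produces the darknet executable. Run `darknet detector train` followed by the paths to the prepared .data and .cfg files. You can also add `-gpus` with a list of GPU IDs, e.g. `-gpus 0,1,2,3`, to choose the GPUs used for training (using multiple GPUs may drive the Obj value toward zero), along with other options. In the command below, `2>netlog.txt` redirects standard error to netlog.txt, and `| tee train_log.txt` shows standard output on screen while also saving it to train_log.txt: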

```
./darknet detector train cfg/voc.data cfg/yolo-voc.2.0.cfg -gpus 0,1,2,3 2>netlog.txt | tee train_log.txt
```

4. Start training.
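While training runs, `tee` saves darknet's progress output to train_log.txt. A common way to follow convergence is to extract the average loss per iteration. The sketch below assumes the per-iteration summary lines have the form `<iteration>: <loss>, <avg_loss> avg, <rate> rate, ...`, as printed by pjreddie's darknet detector training loop. Check this against your own log before relying on it, since the format may differ between versions. `parse_avg_loss` is a hypothetical helper name.

```python
import os
import re

# Assumed summary-line format: "<iteration>: <loss>, <avg_loss> avg, <rate> rate, ..."
SUMMARY_RE = re.compile(r"^\s*(\d+):\s*(\S+),\s*(\S+) avg,")


def parse_avg_loss(lines):
    """Return a list of (iteration, avg_loss) pairs from darknet training output."""
    points = []
    for line in lines:
        m = SUMMARY_RE.match(line)
        if m:
            try:
                points.append((int(m.group(1)), float(m.group(3))))
            except ValueError:
                continue  # skip lines that only look like summary lines
    return points


if __name__ == "__main__":
    if os.path.isfile("train_log.txt"):
        with open("train_log.txt") as f:
            for iteration, avg in parse_avg_loss(f)[-5:]:  # last five iterations
                print(iteration, avg)
```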