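- What Is Multimodal Machine Learning: The Frontier of Cross-Sensory Fused Intelligence

非凡暖阳

Artificial intelligence, neural networks

Within the broad field of artificial intelligence, multimodal machine learning (Multimodal Machine Learning) is a frontier technology that is steadily opening up new territory in human-computer interaction and information understanding. It moves beyond the limits of a single sensory input: by integrating visual, audio, text, and other data types, it builds a richer, more multidimensional cognitive model and gives machines an integrated ability to perceive and understand that approaches a human's. This article examines the definition, core principles, key techniques, challenges, and future applications of multimodal machine learning, aiming to sketch for readers this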

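- How Large AI Models Empower E-Commerce and Lead Its Transformation

虞书欣的C

Artificial intelligence, programming languages

• Personalized recommendations: use machine learning algorithms to analyze users' purchase history, browsing behavior, and preferences and generate personalized product recommendation lists, increasing purchase intent and satisfaction. • Improved user experience: • Intelligent search: apply natural language processing to optimize the search engine so that users can search in natural language. • Virtual customer service: chatbots and voice assistants provide 24/7 customer support and answer user questions quickly. • Image recognition: using computer vision, users can photograph an item to identify it and quickly find similar products or get styling and pairing recommendations. •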

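- Mathematics: The Theoretical Foundation of Machine Learning

每天五分钟玩转人工智能

Machine learning, artificial intelligence

1. Mathematics: the theoretical foundation of machine learning. Machine learning is the science of learning patterns and regularities from data. Its core goal is to extract useful information from data in order to make predictions and classifications on unseen data. Achieving this requires a mathematical framework for describing and solving problems. Mathematics plays a vital role: it provides models for describing data and patterns, and methods for optimizing those models. Its applications in machine learning are broad, spanning linear algebra, probability theory, statistics, calculus, optimization, and other areas. These mathematical methods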

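- [Machine Learning 26: Decision Trees]

KeyPan

Machine learning, decision trees, artificial intelligence, algorithms, deep learning, data mining

1. Decision tree overview. A decision tree is a supervised learning algorithm based on a tree structure that can be used for both classification and regression. It works by recursively partitioning the data into subsets, producing a tree model with a conditional structure. Core concepts. Node: each node represents a specific decision condition. Root node: the start of the tree, containing all samples. Branch: each branch represents the outcome of a conditional split. Leaf node: a terminal node that represents the final decision. Advantages. Intuitive and interpretable: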

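- Mathematical Foundations of Machine Learning: Local and Global Extrema

华东算法王(原聪明的小孩子

小孩哥解析宋浩微积分, machine learning, algorithms, artificial intelligence

Local and global extrema. Local extrema and global extrema are important concepts about how a function varies: they describe a function's "largest" or "smallest" behavior near certain points or over its entire domain. Understanding them matters for optimization problems, function analysis, physical modeling, and other fields. 1. Local extrema (Local Extrema). A local extremum is a local maximum or minimum that a function attains at some point within an interval. (1) Local maximum (Local Maximum). A function attains a local maximum at a point (x=c) if there exists a small interval containing (c) such that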

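- 17-7 The Ambitions of Vector Databases 7 - PostgreSQL and pgvector

拉达曼迪斯II

AIGC learning, database management tools, AI startups, databases, postgresql, artificial intelligence, machine learning, AIGC, search engines

PostgreSQL is a powerful open-source object-relational database system whose capabilities now extend beyond traditional data management: through the pgvector extension it supports vector data. This addition meets the growing demand for efficient handling of high-dimensional vector data, which is commonly used in machine learning, natural language processing (NLP), recommender systems, and similar applications. https://github.com/mazzasaverio/find-your-opensource-project What is pgvector?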

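- [MySQL] Importing and Exporting SQL Files, Backing Up, and Migrating MySQL Databases

程序员洲洲

Database, mysql, import/export, sql, SQL files, backup, migration

Abstract: this article presents practical development tips for xxx. About the author: I am 程序员洲洲, a not-so-famous programmer who loves writing. I am a CSDN full-stack quality-content creator, a Huawei Cloud blog community expert, and an Alibaba Cloud blog community expert blogger. You are also welcome to follow my other columns, where I share from-zero-to-one series on web front-end and back-end development, artificial intelligence, machine learning, and deep learning. 洲洲 has also set up a programmers' technical discussion group; if you are interested, message me privately to join, or contact me directly on vx (card at the end of the article) v:bdizztt anytime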

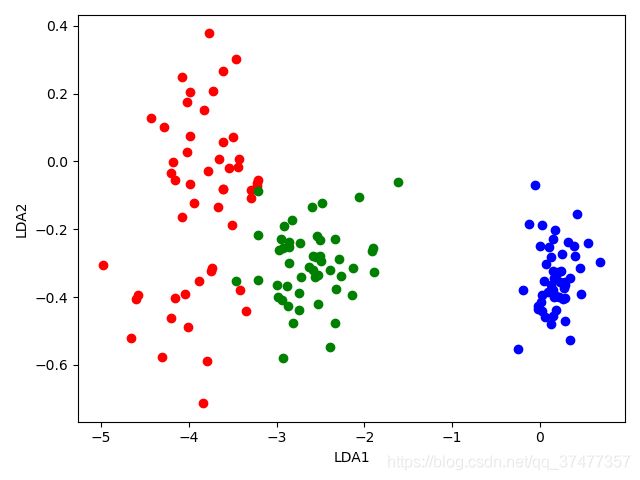

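- [Python Machine Learning] Unsupervised Learning: The K-Means Clustering Algorithm

zhangbin_237

Python machine learning, machine learning, algorithms, python, kmeans, k-means, mean algorithm

Clustering is a form of unsupervised learning that groups similar objects into the same cluster; it is somewhat like fully automatic classification. Clustering can be applied to almost any kind of object, and the more similar the objects within a cluster, the better the clustering. K-means is a typical clustering algorithm. It is called K-means because it finds k distinct clusters, and the center of each cluster is computed as the mean of the values in that cluster. Cluster identification gives meaning to the clustering result: given some data whose similar items have been grouped together, cluster identification tells us what those clusters actually are. The biggest difference between clustering and classification lies in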
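
The K-means idea described in this excerpt (k clusters, each centered on the mean of its members) can be sketched in a few lines of plain Python. This is a minimal illustration, not the article's own code: the function name `kmeans`, the iteration cap, and seeding the centroids with the first k points are all assumptions made for the example.

```python
from math import dist  # Euclidean distance, Python 3.8+

def kmeans(points, k, iterations=20):
    """Assign each point to its nearest centroid, then move each
    centroid to the mean of the points assigned to it; repeat."""
    # Assumption: seed with the first k points so the run is deterministic.
    centroids = [tuple(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                # Mean of each coordinate over the cluster's members.
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: assignments will not change
            break
        centroids = new_centroids
    return centroids, clusters

points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 8.5), (1.0, 0.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, k=2)
```

Production implementations usually pick initial centroids more carefully and run several restarts, since the result depends on the starting points.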

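- [Python] Solved: WARNING: pip is configured with locations that require TLS/SSL, however the ssl module i

屿小夏

python, pip, ssl

About me: a little-known blogger dedicated to sharing quality full-stack blog posts | the best content for the most comfortable reading experience! Free IT learning materials at the end of the article! More information at the end of the article. Featured columns to subscribe to and bookmark: column series, direct link, description. Book giveaways (click to jump): books are an important way to acquire knowledge and an indispensable resource for IT practitioners. IT books are updated from time to time, and randomly chosen followers from the comments receive books with free shipping. AI frontier (click to jump): explores the latest developments and innovations in artificial intelligence, covering machine learning, deep learning, natural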

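- Feature Importance in Machine Learning: The feature_importances_ Attribute and the permutation_importance Method

一叶_障目

Machine learning, python, data mining

1. The feature_importances_ attribute. In machine learning, the feature_importances_ attribute of classification and regression estimators measures how important each feature is to the model's predictions. The attribute is typically available on tree-based algorithms. It shows which features matter most to the model's predictions, which can guide feature selection or feature engineering to improve the model's performance and interpretability. 1) Decision trees 1.1. sklearn.tree.Decision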

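- Machine Learning: Final Exam

难以触及的高度

Machine learning, python, artificial intelligence

Machine learning final exam. Linear regression. 1. Code listing

# coding=UTF-8

# Split into training and test sets

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

# LinearRegression is sklearn's ready-made linear regression class (it fits by ordinary least squares, not gradient descent)

from sklearn.linear_model import LinearRegression

import pand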

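- An Introduction to Machine Learning

2201_75874206

Machine learning, artificial intelligence

Contents: 1. Definition of machine learning 2. Principles of machine learning 3. Methods of machine learning 4. Categories of machine learning 5. Evaluating machine learning 6. Application scenarios for machine learning 7. The relationship between machine learning and artificial intelligence. Conclusion. What are the latest applications and techniques of machine learning in natural language processing? How do you evaluate the performance of a machine learning model, and besides cross-validation, MSE, and RMSE, what other important metrics are there? In financial risk management, how does machine learning help predict market trends and credit risk? Market trend prediction. Credit risk assessment. The relationship between machine learning and artificial intelligence in the future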

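- Kaggle Fraud Detection: Using Generative Adversarial Networks (GANs) to Handle Extreme Class Imbalance

Loving_enjoy

Papers, deep learning, computer vision, artificial intelligence

### Kaggle fraud detection: using generative adversarial networks (GANs) to handle extreme class imbalance #### Introduction In finance, fraud detection is a critically important task. Fraud transaction data, however, is typically extremely imbalanced between positive and negative samples, which makes training machine learning models difficult. Faced with such imbalance, conventional classifiers tend to overfit the majority class (normal transactions) and recognize the minority class (fraudulent transactions) poorly. To address this, generative adversarial networks (GANs) offer an effective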

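- Understand It in One Article: Differences Between and Applications of Unsupervised and Supervised Learning

码上飞扬

Learning

In machine learning, unsupervised learning and supervised learning are two of the most common and important concepts. Understanding how they differ and where each applies helps us choose suitable algorithms and models and solve practical problems more effectively. So what are unsupervised and supervised learning? This article walks through the definitions, differences, and typical applications of both. Contents: What is unsupervised learning? What is supervised learning? Main differences between unsupervised and supervised learning. Typical applications of unsupervised learning. Typical applications of supervised learning. How to choose a suitable learning method? 1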

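- A Guide to Spark Livy, with Livy Deployment and Access in Practice

house.zhang

Big Data - Spark, big data

Background: Apache Spark is a popular big data framework widely used for data processing, data analysis, and machine learning. It offers two ways to process data. The first is interactive processing: for example, a user writes code in spark-shell, and the code is compiled into Spark jobs and submitted to the cluster for execution. The second is batch processing: a packaged Spark application jar is submitted to the cluster with spark-submit. Both modes require installing a Spark client, configuring the YARN cluster information, and opening up the cluster net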

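- C# Meets TensorFlow.NET: Opening a New Era of Machine Learning

墨夶

C# learning resources 1, machine learning, c#, tensorflow

In today's fast-moving technology landscape, machine learning (Machine Learning, ML) has become a major driver of innovation. From personalized recommender systems to self-driving cars, ML applications are everywhere. For programmers used to developing in C#, integrating machine learning into their projects can seem like a challenging task. With the arrival of TensorFlow.NET, it no longer has to be difficult. Today we will explore how to use this powerful tool to build, train, and, all within the familiar .NET environment,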

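- Exploring Python Programming in Depth: A Comprehensive Learning Guide from Beginner to Expert

小码快撩

python, programming languages

Introduction. In an era of rapid progress in information technology, Python's concise and elegant syntax, powerful features, and gentle learning curve have made it the language of choice for many developers and beginners. Whether for data science, machine learning, web development, automation scripting, or desktop applications, Python brings distinct strengths that help developers get work done efficiently. This article gives Python learners a comprehensive learning path and an overview of key topics to help you master the language quickly. 1. Basic syntax 1. Variable definitions and data types. Example code: #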

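- Learning AI Infra from Scratch

SSS不知-道

MLSys, artificial intelligence, deep learning, pytorch

Learning AI Infra from scratch. Table of contents: Learning AI Infra from scratch; 1. Overview; 2. AI algorithm applications: 2.1 Machine learning, 2.2 Deep learning, 2.3 LLM; 3. AI development stack: 3.1 Programming languages; 4. AI training frameworks & inference engines: 4.1 PyTorch, 4.2 llama.cpp, 4.3 vLLM; 5. AI compilers & compute architectures: 5.1 CUDA, 5.2 CANN; 6. AI hardware & architecture: 6.1 NVIDIA GPU, 6.2 Ascend NPU. 1. Overview. AI Infra (AI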

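- python feature selection methods_[Practical Tips] Common Feature Selection Methods in Machine Learning with Very Detailed Python Examples...

Blair Long

python feature selection methods

This took a long time to compile and edit. Please contact the author for permission before reposting; violations will be pursued. Feature selection (Feature selection) is the process of reducing the number of input variables when building a predictive model. It is a very important step in machine learning and can considerably improve a model's predictive accuracy. Here I summarize some common and useful feature selection methods in machine learning, with Python code for each. I hope it gives you some ideas. First, why do feature selection at all? It has the following advantages: Reduced overfitting: redundant data often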

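- chatgpt Empowers python: Sending WeChat Messages in Bulk with Python: A Solution

suimodina

ChatGpt, python, chatgpt, WeChat, computers

Sending WeChat messages in bulk with Python: a solution. Is unrestrained bulk sending of WeChat messages the solution you need right now? If so, you have come to the right place. Python is a very powerful programming language, widely used in artificial intelligence, computer vision, machine learning, and other fields. Python can be used to develop all kinds of applications, and it can also be used to batch-process and send WeChat messages. This article outlines how to send WeChat messages with Python. We will describe the process and steps for implementing WeChat messaging in Python and provide some information on how to use Python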

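- The Latest ChatGPT 4.0 Features and Usage Tips for Daily Life, Study, and Work!

WangYan2022

Tutorials, artificial intelligence, chatgpt, data analysis, AI art, AI writing

Become proficient with ChatGPT 4.0's capabilities in data analysis, automatic code generation, and more; systematically learn the foundational theory of artificial intelligence (including traditional machine learning and deep learning) along with concrete code implementations; and master the methods and techniques for using ChatGPT 4.0 in research work, as well as classic machine learning algorithms (BP neural networks, support vector machines, decision trees, random forests, dimensionality reduction and feature selection, swarm optimization algorithms, and others) and popular deep learning methods (convolutional neural networks, transfer learning, RNN and LSTM neural networks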

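- ASPICE 4.0 Leads the Future of Autonomous Driving: Characteristics and Practice of Machine Learning Models

亚远景aspice

Machine learning, autonomous driving, artificial intelligence

The ASPICE 4.0 ML (machine learning) model is a set of standards and processes specific to machine learning (Machine Learning, ML) applications in the automotive industry, particularly in automotive software development. ASPICE (Automotive SPICE) is an international standard for the development process of software-controlled systems, intended to improve the quality, efficiency, and reliability of software development. The ML part of ASPICE 4.0 further details the specific requirements and processes for machine learning in automotive software development. The following covers ASPICE 4.0-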

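- tensorflow in python_Python Machine Learning: The TensorFlow Framework

弦歌缓缓

The TensorFlow framework. Follow the WeChat official account “轻松学编程” to learn more. 1. Introduction. TensorFlow is Google's second-generation artificial intelligence learning system, developed on the basis of DistBelief; its name comes from how it works. Tensor means an N-dimensional array, and Flow means computation based on a dataflow graph; TensorFlow is the process of tensors flowing from one end of the graph to the other. TensorFlow is a system that passes complex data structures into artificial neural networks for analysis and processing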

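- [Machine Learning in Practice, Intermediate] Music Genre Classification: Automatically Classifying Musical Styles

精通代码大仙

Data mining, deep learning, python, machine learning, classification, automation, artificial intelligence

Music genre classification: automatically classifying musical styles. In this tutorial we will develop a deep learning project that automatically classifies audio files into music genres. We will use low-level frequency-domain and time-domain features of the audio files to classify them. For this project we need a dataset of audio tracks of similar size and similar frequency range. The GTZAN genre classification dataset is the most recommended dataset for music genre classification projects, and it was collected for this task. Music genre classifier model. Music genre classification. About the dataset: the GTZAN genre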

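- A Comprehensive Look at Databricks: From Architecture and Engine to Optimization Strategies

克里斯蒂亚诺罗纳尔多阿维罗

Architecture, spark, big data

Overview: Databricks is a company founded by members of the original Apache Spark team, and also a unified analytics platform that helps enterprises build a lakehouse architecture combining data lakes and data warehouses. On the Databricks platform, data engineering, data science, and data analytics teams can collaborate using Spark, Delta Lake, MLflow, and other tools to process data efficiently and build machine learning applications. This article describes in depth the Databricks platform's concepts, architectural characteristics, optimization mechanisms, features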

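- Will AI Singers Go Mainstream?

网络安全我来了

IT technology, artificial intelligence

Will AI singers go mainstream? In this era of rapid technological progress, AI singers are gradually coming into view. You may wonder: can AI really sing, and can its voice rival a real singer's? Let's explore this fascinating topic and look at the current state of AI singers, their strengths and weaknesses, and their prospects in the music industry. 1. The current state of AI singers 1.1 Technical background. We live in a time of rapid progress in machine learning and deep learning, so the emergence of AI singers is no accident. By collecting and analyzing large amounts of music data, AI can learn and imitate a particular singer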

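- [Machine Learning 32: Reinforcement Learning: Theory and Applications]

KeyPan

Machine learning, robotics, artificial intelligence, deep learning, data mining

1. Reinforcement learning overview. **Reinforcement learning (Reinforcement Learning, RL)** is a machine learning method in which an agent (Agent) learns a decision policy in a dynamic environment through trial-and-feedback interaction, so as to maximize cumulative reward (Cumulative Reward). Compared with supervised and unsupervised learning, reinforcement learning focuses more on long-term goals than on simply learning from labels. Core concepts. Agent: the entity that learns and makes decisions. Environment: where the agent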

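- [Machine Learning 27: Decision Tree Ensembles]

KeyPan

Machine learning, decision trees, artificial intelligence, data mining, deep learning, algorithms, classification

1. Overview of decision tree ensembles. A decision tree ensemble is a machine learning method that combines multiple decision tree models: multiple weak learners (decision trees) are combined into a strong learner, which markedly improves predictive performance and generalization. Core idea. The core of ensemble learning is to combine the strengths of multiple models, reducing the bias and variance of any single model and improving overall accuracy and robustness. By combining many trees, a decision tree ensemble reduces the overfitting and sensitivity to noise that a single tree can exhibit. Main advantages. Better performance: in classification and regression tasks it usually outperforms a single decision tree. Greater stability: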
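
The core idea in this excerpt, combining several weak trees into one stronger predictor, can be illustrated with simple majority voting. The sketch below is only an assumption-laden toy: hand-written one-split stumps stand in for trained trees, and the names `make_stump` and `ensemble_predict` are hypothetical. Real ensembles train each tree on data.

```python
from collections import Counter

def make_stump(feature_index, threshold):
    """A one-split 'tree': predicts 1 if the chosen feature exceeds the threshold."""
    def predict(sample):
        return 1 if sample[feature_index] > threshold else 0
    return predict

def ensemble_predict(trees, sample):
    """Majority vote over the individual tree predictions."""
    votes = Counter(tree(sample) for tree in trees)
    return votes.most_common(1)[0][0]

# Three hypothetical stumps that disagree on some inputs.
trees = [make_stump(0, 0.5), make_stump(1, 0.3), make_stump(0, 0.9)]
prediction = ensemble_predict(trees, (0.7, 0.6))  # votes are 1, 1, 0
```

With an odd number of voters and two classes there is never a tie, which is one reason odd ensemble sizes are convenient for binary voting.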

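- Getting-Started Advice and Concrete Study Recommendations for Beginners Learning Large AI Models

aFakeProgramer

Machine learning, artificial intelligence

Deep thinking. I am an embedded systems engineer working in the automotive industry, and I now want to move into artificial intelligence, specifically large language models. When it comes to AI I am a complete novice, so I need to figure out where to start. Let's break it down. First, I know that embedded systems involve a lot of low-level programming, microcontrollers, real-time operating systems, and so on. AI, especially large models like GPT, seems to sit at the other end, dealing with high-level computation, massive datasets, and complex algorithms. There is certainly a learning curve here. I should probably start by understanding the basics of machine learning.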

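- Machine Learning: Understanding Supervised and Unsupervised Learning

伊一大数据&人工智能学习日志

Machine learning, learning, artificial intelligence

Contents: Supervised and unsupervised learning; Supervised learning; Unsupervised learning; Differences between supervised and unsupervised learning. Supervised and unsupervised learning. Supervised and unsupervised learning are the two main learning paradigms in machine learning; they differ mainly in whether the data is labeled and in the goal of learning. Supervised learning. In supervised learning, every sample in the dataset has an explicit label or target output. The goal is to learn, from the labeled data, a mapping from input features to output labels so that the corresponding output label can be predicted for new, unseen inputs. Common supervised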

- Adding a Button to a ViewController, Explained (Translation)

张亚雄

c

// ViewController.m

// Reservation software

//

// Created by 张亚雄 on 15/6/2.

- Simple CRUD Operations in mongoDB

开窍的石头

mongodb

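In the previous article we covered how to install mongodb and how to create databases and tables. Here we cover mongoDB's database operations.

In mongo, a table that does not yet exist is created automatically when you use db.<table name>.

Below, user is the table name and db refers to the database.

Insert (insert):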

- log4j Configuration

0624chenhong

log4j

1) Create a new Java project

2) Import the jar: right-click the project, then Properties > Java Build Path > Libraries > Add External JARs, and add log4j.jar.

3) Create a new class com.hand.Log4jTest

package com.hand;

import org.apache.log4j.Logger;

public class

- Multi-Touch (Image Zoom Example)

不懂事的小屁孩

Multi-touch

Multi-touch events are much the same as single-touch ones. Here is some image-zoom code for reference.

import android.app.Activity;

import android.os.Bundle;

import android.view.MotionEvent;

import android.view.View;

import android.view.View.OnTouchListener

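- Explaining Several Browser Window Width and Height Values

换个号韩国红果果

JavaScript, html

1 An element's offsetWidth is its overall width, including border, padding, and content.

clientWidth includes the content area and padding but not the border.

clientLeft is the width of the element's left border; offsetWidth - clientWidth gives the combined width of the left and right borders (plus a vertical scrollbar, if one is shown).

offsetLeft: if there is no positioned containing element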

- Database Product Tour: An Overview of IBM DB2

蓝儿唯美

db2

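IBM DB2 is a relational database management system with NoSQL capabilities, including support for XML, image storage, and JavaScript Object Notation (JSON). DB2 can be used by enterprises of all kinds. It provides a data platform that supports both transactional and analytical operations, keeping transactional workflows and analytical operations efficient by providing a continuous flow of data. Operating systems supported by DB2

DB2 is available on the following three main platforms:

Workstations: DB2 runs on Linux, Unix, Windo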

- Java Notes 5

a-john

java

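Controlling execution flow:

1. true and false

The truth or falsehood of a conditional expression determines the execution path. Example: (a==b). It uses the operator "==" to test whether a equals b and returns true or false. Java does not allow a number to be used as a boolean, even though C and C++ do. To use a non-boolean value in a boolean test, first convert it to a boolean with a conditional expression, for example if(a!=0).

2. if-els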

- A Roundup of Common Web Development Manuals

aijuans

PHP

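Without a good reference manual to guide people, a technology struggles to reach a wide audience. This is why many very good technologies never see widespread use.

When we learn a technology, the process goes roughly like this:

① In our daily work we run into problems and difficulties, and we look for a solution, that is, for a new technology;

② Why learn this technology? Does it solve the problems and confusion we have run into? This question is very important: we do not learn technology for its own sake, but to better handle the problems we face, which is why we need to learn new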

- A SQL Problem I Helped Someone Solve Today

asialee

sql

Someone asked the following question today:

type AD value

A

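- Passing Data with Intent Objects

百合不是茶

android, intents, Intent, Bundle, passing object data

Learn how an intent passes data to the target activity; beginners should study this carefully.

1. Add the following code to main.xml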

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http:/

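- Oracle Statements for Finding Locked Tables and Unlocking Them

bijian1013

oracle, object, session, kill

1. Find the locked tables

Any of the following statements finds the locked tables.

Statement 1: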

select a.sid,

a.serial#,

p.spid,

c.object_name,

b.session_id,

b.oracle_username,

b.os_user_name

from v$process p, v$s

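- Installing the MySQL 5.6 Binary Distribution [tar.gz] on Mac OS X 10.10

征客丶

mysql, osx

Scenario: installing the MySQL 5.6 binary distribution on Mac OS X 10.10.

Environment: Mac OS X 10.10 and the MySQL 5.6 binary distribution

Steps: [write every directory path starting from the root "/", so that a wrong level does not leave a directory unfound]

1. Download the MySQL 5.6 binary distribution; the download directory is referred to below as mysql5.6SourceDir;

Download URL: http://dev.mysql.com/downl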

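- Distributed Systems and Frameworks

bit1129

Distributed

RPC framework: Dubbo

What is Dubbo

Dubbo is a distributed service framework dedicated to providing a high-performance, transparent RPC remote invocation solution along with an SOA service governance solution. Its core consists of: Remoting: an abstraction over several long-connection-based NIO frameworks, including multiple threading models, serialization, and request-response message exchange. Cluster fault tolerance: provides interface-based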

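- Those Painfully Annoying Technical Terms

白糖_

spring, Web, SSO, IOC

spring

[Inversion of Control (IOC) / Dependency Injection (DI)]:

The container controls the relationships between program components, rather than program code controlling them directly as in a traditional implementation. This is what "inversion of control" means: control moves from application code to an external container, and that transfer of control is the inversion.

Put simply: objects are created by the container (for example the Spring container), and the program does not new them directly.

Web

[Single sign-on (SSO)]: SSO is defined as: across multiple application systems, the user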

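- Excerpts from "Java SE 8 for the Really Impatient"

braveCS

java8

Functional interface: an interface with exactly one abstract method

Lambda expression: a block of code that can be passed around

It is best to think of a lambda expression as a function rather than an object, and to remember that it can be converted to a functional interface.

In fact, conversion to a functional interface is the only thing you can do with a lambda expression in Java.

Method references: sometimes the operation you want to pass to other code already has a method that implements it, in which case you can use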

- The Beauty of Programming: Computing String Similarity

bylijinnan

java, algorithms, The Beauty of Programming

public class StringDistance {

/**

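* The Beauty of Programming: computing string similarity

* We define a set of operations that make two different strings identical. The operations are:

* 1. Modify a character (e.g. replace "a" with "b");

* 2. Insert a character (e.g. turn "abdd" into "aebdd");

* 3. Delete a character (e.g. turn "travelling" into "trav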
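
The three operations in this comment (modify, insert, delete) are the ones counted by the classic edit (Levenshtein) distance. As a hedged sketch in Python rather than the article's Java, and assuming each operation costs 1, the minimum number of operations can be computed with dynamic programming. The function name `edit_distance` is an assumption, and the excerpt does not show how the article turns the distance into a similarity score.

```python
def edit_distance(a: str, b: str) -> int:
    """Minimum number of single-character modifications, insertions,
    and deletions needed to turn string a into string b."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning a[:i] into the empty string takes i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # modify ca into cb (free if equal)
            ))
        prev = curr
    return prev[-1]

one_insert = edit_distance("abdd", "aebdd")  # the insertion example from the comment
```

Keeping only two rows of the table brings memory down to O(len(b)) while the running time stays O(len(a) * len(b)).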

- Uploading, Downloading, and Compressing Images

chengxuyuancsdn

Download

/**

*

* @param uploadImage -- local path (tomcat path)

* @param serverDir -- server path

* @param imageType -- file or image type

* This method can upload files or images such as .txt, .jpg, .gif

*/

public void upload(String uploadImage,Str

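- The Bellman-Ford Algorithm

comsci

Algorithms, F#

The Bellman-Ford algorithm (named after its inventors, Richard Bellman and Lester Ford) solves the single-source shortest path problem: given a weighted directed graph G and a source vertex s, find the shortest path from s to every vertex v in G. It is sometimes also called the Moore-Bellman-Ford algorithm, because Edward F. Moore also contributed to its development.

Compared with Dijk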
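
The single-source shortest path problem described in this entry can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the article's code: vertices are numbered from 0, edges are `(u, v, weight)` tuples, and the name `bellman_ford` and the choice to raise `ValueError` on a negative cycle are decisions made for the example.

```python
def bellman_ford(num_vertices, edges, source):
    """Shortest distances from source to every vertex of a weighted
    directed graph; edge weights may be negative."""
    INF = float("inf")
    dist = [INF] * num_vertices
    dist[source] = 0
    # A shortest path uses at most num_vertices - 1 edges, so that many
    # rounds of relaxing every edge are enough.
    for _ in range(num_vertices - 1):
        changed = False
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                changed = True
        if not changed:  # nothing improved, so the distances are final
            break
    # If any edge can still be relaxed, a negative cycle is reachable.
    for u, v, w in edges:
        if dist[u] + w < dist[v]:
            raise ValueError("negative-weight cycle reachable from the source")
    return dist

edges = [(0, 1, 4), (0, 2, 5), (2, 1, -3), (1, 3, 2)]
distances = bellman_ford(4, edges, source=0)
```

Relaxing every edge in each round costs O(V * E) time overall, which is the price paid for supporting negative weights.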

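- The ASM_POWER_LIMIT Parameter in Oracle ASM

daizj

ASM, oracle, ASM_POWER_LIMIT, disk rebalancing

ASM_POWER_LIMIT

This initialization parameter specifies the maximum power an ASM instance uses to rebalance disks. Its value ranges from 0 to 11 and defaults to 1. It is a dynamic parameter and can be changed with ALTER SESSION or ALTER SYSTEM. For example: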

SQL>ALTER SESSION SET Asm_power_limit=2;

- Advanced Sorting: Quicksort

dieslrae

Quicksort

public void quickSort(int[] array){

this.quickSort(array, 0, array.length - 1);

}

public void quickSort(int[] array,int left,int right){

if(right - left <= 0
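
For comparison, here is a rough Python counterpart of the quickSort(array, left, right) shape shown in this entry. The excerpt cuts off before the partition step, so the Lomuto partition scheme used below (last element as pivot) is an assumption, not necessarily what the article's Java does; the name `quick_sort` is likewise hypothetical.

```python
def quick_sort(array, left=0, right=None):
    """Sort array[left..right] in place."""
    if right is None:
        right = len(array) - 1
    if right - left <= 0:  # zero or one element: already sorted
        return
    pivot = array[right]   # assumption: use the last element as the pivot
    boundary = left        # array[left:boundary] holds values below the pivot
    for i in range(left, right):
        if array[i] < pivot:
            array[i], array[boundary] = array[boundary], array[i]
            boundary += 1
    # Put the pivot between the two partitions.
    array[boundary], array[right] = array[right], array[boundary]
    quick_sort(array, left, boundary - 1)
    quick_sort(array, boundary + 1, right)

data = [5, 2, 9, 1, 5, 6]
quick_sort(data)
```

A fixed last-element pivot degrades to quadratic time on already sorted input; picking the pivot at random or by median-of-three is a common remedy.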

- Learning C, Part 6: Pointers_What Is a Variable's Address, and How Many Bytes Does a Pointer Variable Occupy

dcj3sjt126com

C language

# include <stdio.h>

int main(void)

{

/*

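1. A variable's address is represented by the address of its first byte only

2. Although only the first byte's address is stored, the pointer variable's own type determines how many bytes the variable it points to occupies

3. It stores only the first byte's address, so why does storing the address of a single byte take 4 bytes? Although it is the address of just one byte,

memory contains a great many bytes, so the address numbers are large,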

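- How to Use phpize

dcj3sjt126com

PHP

phpize is used to build PHP extension modules; with phpize you can build PHP add-on modules. How to use it is described below for anyone who needs it.

When installing (FastCGI mode), you often see a command like this:

The code is as follows:

/usr/local/webserver/php/bin/phpize

1. What does phpize do?

What is phpize?

phpize is used to build PHP extension modules; with phpi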

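- Learning the Java Virtual Machine: Object Reference Strength

shuizhaosi888

Java virtual machine

Original article: http://blog.csdn.net/java2000_wl/article/details/8090276 Please credit the source when reposting!

Whether reference counting is used to count an object's references, or root search is used to determine whether an object's reference chain is reachable, deciding whether an object is alive always comes down to "references".

References fall into these main kinds: strong references (Strong Reference), soft references (Soft Reference), weak references (Wea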

- Download Link for .NET Framework 3.5 Service Pack 1 (Full Package)

happyqing

.net, download, framework

Microsoft .NET Framework 3.5 Service Pack 1 (Full Package)

http://www.microsoft.com/zh-cn/download/details.aspx?id=25150

Microsoft .NET Framework 3.5 Service Pack 1 is a cumulative update that includes much that builds on .NET Framewo

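- Using Timers in Java

jingjing0907

java, timer, threads, timers

1. Application development often calls for periodic operations, such as performing some action every 5 minutes.

The most convenient and efficient way to implement such operations is the java.util.Timer utility class.

private java.util.Timer timer;

timer = new Timer(true);

timer.schedule(

new java.util.TimerTask() { public void run()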

- Webbench

流浪鱼

webbench

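Home page and download: http://home.tiscali.cz/~cz210552/webbench.html

Webbench is a well-known website load-testing tool, developed by Lionbridge (http://www.lionbridge.com).

Webbench can test the performance of different services on the same hardware and the behavior of the same service on different hardware. Webbench's standard tests show us two things about a server: per second, the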

- Chapter 11: Animation Effects (Middle Part)

onestopweb

Animation

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- Creating a bat Startup Script on Windows.

sanyecao2314

java, cmd, scripts, bat

java -classpath C:\dwjj\commons-dbcp.jar;C:\dwjj\commons-pool.jar;C:\dwjj\log4j-1.2.16.jar;C:\dwjj\poi-3.9-20121203.jar;C:\dwjj\sqljdbc4.jar;C:\dwjj\voucherimp.jar com.citsamex.core.startup.MainStart

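- An Example of RSA Encryption and Decryption in Java

tomcat_oracle

java

Encryption is one of the means of keeping data secure. Encryption converts plaintext data into unintelligible ciphertext; decryption converts ciphertext back into plaintext. Encrypting and decrypting data falls within cryptography. Encryption and decryption usually both require some secret information, called a key, which is used whenever plaintext is converted to ciphertext or back. Symmetric encryption is a scheme in which sender and receiver share the same key. Asymmetric encryption (also called public-key encryption) requires two different keys, one private and one public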
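
To make the public-key idea in this excerpt concrete, here is a toy sketch of textbook RSA in Python (not the article's Java). Everything in it is an assumption chosen for illustration: the tiny primes 61 and 53, the public exponent 17, and the helper names `encrypt` and `decrypt`. It uses no padding and is not secure; real code should rely on a vetted cryptography library.

```python
# Toy key generation with tiny primes (assumed values, for illustration only).
p, q = 61, 53
n = p * q                  # modulus, shared by the public and private keys
phi = (p - 1) * (q - 1)    # Euler's totient of n
e = 17                     # public exponent, chosen coprime with phi
d = pow(e, -1, phi)        # private exponent: modular inverse of e (Python 3.8+)

def encrypt(message: int) -> int:
    """Encrypt an integer smaller than n with the public key (n, e)."""
    return pow(message, e, n)

def decrypt(ciphertext: int) -> int:
    """Decrypt with the private key (n, d)."""
    return pow(ciphertext, d, n)

ciphertext = encrypt(65)
recovered = decrypt(ciphertext)
```

Anyone holding `(n, e)` can encrypt, but only the holder of `d` can decrypt, which is the asymmetry the excerpt contrasts with shared-key symmetric encryption.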

- Android_ViewStub

阿尔萨斯

ViewStub

public final class ViewStub extends View

java.lang.Object

android.view.View

android.view.ViewStub

Class overview: ViewStub is an invisible, zero-sized view object that can lazily load a layout resource at runtime. When ViewSt